The blue-minimising robot and model splintering

post by Stuart_Armstrong · 2021-05-28T15:09:54.516Z

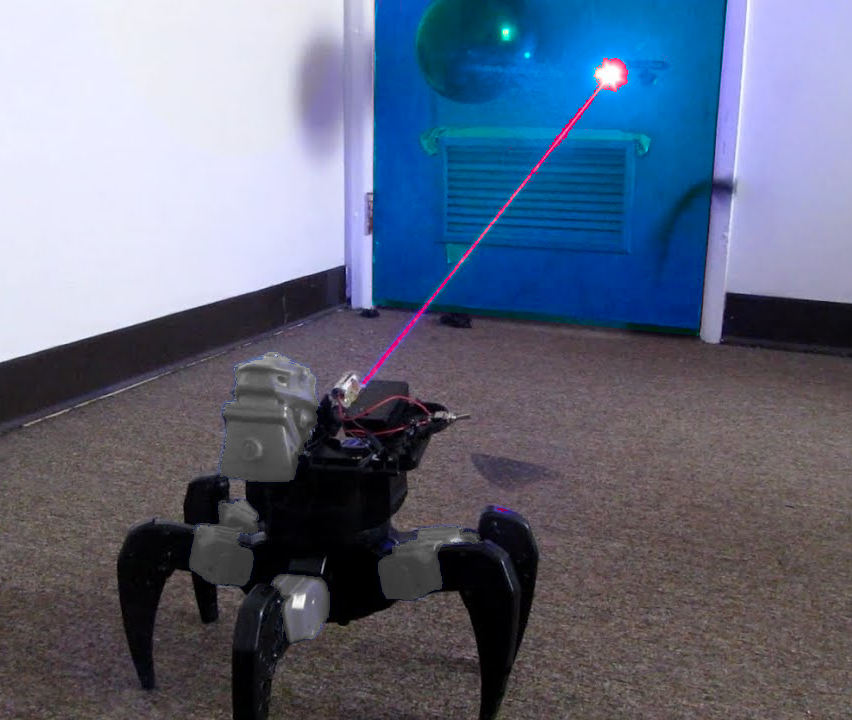

A long time ago, Scott introduced the blue-minimising robot:

Imagine a robot with a turret-mounted camera and laser. Each moment, it is programmed to move forward a certain distance and perform a sweep with its camera. As it sweeps, the robot continuously analyzes the average RGB value of the pixels in the camera image; if the blue component passes a certain threshold, the robot stops, fires its laser at the part of the world corresponding to the blue area in the camera image, and then continues on its way.

Scott then considers holographic projectors and colour-reversing glasses, where the blue robot does not act in a way that actually reduces the amount of blue, and concludes:

[...] the most fundamental explanation is that the mistake began as soon as we started calling it a "blue-minimizing robot". [...] The robot is not maximizing or minimizing anything. It does exactly what it says in its program: find something that appears blue and shoot it with a laser. If its human handlers (or itself) want to interpret that as goal directed behavior, well, that's their problem.

The robot is a behavior-executor, not a utility-maximizer.
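
To make the behaviour-executor reading concrete, here is a minimal sketch of the program described in the quote. The threshold value, the pixel representation and all function names are illustrative assumptions; the original post specifies none of them.

```python
from typing import List, Tuple

Pixel = Tuple[int, int, int]  # (R, G, B), each 0-255

BLUE_THRESHOLD = 150  # hypothetical value; the quoted description gives no number


def mean_blue(image: List[Pixel]) -> float:
    """Average blue component over all pixels in the camera image."""
    return sum(p[2] for p in image) / len(image)


def step(image: List[Pixel]) -> str:
    """One cycle of the fixed program: fire if the image looks blue enough."""
    return "fire" if mean_blue(image) > BLUE_THRESHOLD else "advance"


def colour_reversing_glasses(image: List[Pixel]) -> List[Pixel]:
    """Invert every channel, so blue objects appear yellow and vice versa."""
    return [(255 - r, 255 - g, 255 - b) for r, g, b in image]


blue_wall = [(10, 10, 240)] * 4
yellow_wall = [(245, 245, 15)] * 4

print(step(blue_wall))                              # fires at a blue object
print(step(colour_reversing_glasses(blue_wall)))    # ignores it through the glasses
print(step(colour_reversing_glasses(yellow_wall)))  # fires at a yellow object instead
```

Nothing in `step` refers to the amount of blue in the world; it only reacts to what the camera reports, which is why the glasses change its behaviour without changing its program.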

That's one characterisation, but what if the robot were a reinforcement-learning agent, trained in various scenarios where it got rewards for blasting blue objects? Then it would seem that it was designed as a blue-minimising utility maximiser, just not designed particularly well.

One approach would be "well, just design it better". But that's akin to saying "well, just perfectly program a friendly AI". In the spirit of model-splintering, we could instead ask the algorithm to improve its own reward function as it learns more.

The improving robot

Here is a story of how that could go. Obviously this sort of behaviour would not happen naturally with a reinforcement learning agent; it has to be designed in.

- The robot starts with the usual behaviour - see a particular shade of blue, blast with laser, get reward when blue object destroyed.

- The robot notices that there are certain features connected with that shade of blue. Specifically, the blue cells are cancer cells, dyed blue. Now, the robot has no intrinsic notion of "cancer cells", but can detect features - the extent of the objects, how they change, and how they differ from other extensive objects - that allow it to distinguish cancer cells from non-cancerous cells, and cells from other objects.

- It notices that the correspondence between cancer cells and blue is not perfect - some non-cancerous cells are blue, some cancerous cells are not blue, some blue dye floats freely in the body. And the reward function is not perfectly aligned with the amount of blue cells it destroys; in particular, if it blasts too much too hard, it gets low reward.

- Thus it deduces that it is plausible that its reward function is a noisy approximation for destruction of cancer cells.

- It starts being conservative over possible goals - destroying cancer cells and blue cells and blue dye, but not blasting too hard.

- As soon as possible, it asks for clarification from its programmers about its true goal.

- As its abilities and intelligence increase, it iterates the clarification-asking and goal-updating several times.

- As it does so, it starts to notice that there are systematic biases in how the programmers give it feedback, and it gets better able to predict its own future goal than the programmers seem able to.

- It now has to partially infer what its goals are, since it cannot take the programmers' responses as final. Information about the programmers and its own design - eg the fact that it was designed as a surgical robot, by a surgical team - becomes relevant to its goal.

- It uses idealised versions of its programmers to check its goal against (eg if programmers can describe being unbiased or less biased, then the robot can use those imaginary unbiased programmers to guide its decisions). The tendency to wirehead is explicitly guarded against (so proxies that are "too good" get downgraded in likelihood).

- It can now start to, eg, make delicate triage decisions based on quality of life for the patient and risk of death, decisions which are implicit in its training data but not explicit.

- The robot is now sufficiently intelligent to predict human behaviour, and manipulate it, with a high degree of efficacy. Programmer feedback is now useless to it, at least in terms of information.

- It switches to more conservative behaviour in its interactions with programmers and other humans, seeking to be non-disruptive to standard human lives. It stays focused on general medical care for cancer patients, even if it could easily extend beyond that.

- The robot is now powerful enough that it can make large changes to human society without necessarily causing huge disruption. It could cure cancer through suggestions to the right scientist, or just take over the world and eradicate humanity. While remaining more conservative, it uses its previous experience in extrapolation and idealisation of human behaviour to figure out its goals at this level of power.
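
The early steps of the story (noticing alternative goals, treating the reward as a noisy proxy, then acting conservatively) can be sketched as a Bayesian update over candidate goals followed by a worst-case action choice. This is only one possible reading: the two hypotheses, the Gaussian noise model, the over-blasting penalty and the maximin rule are all assumptions made for illustration, not something the post specifies.

```python
import math
from typing import Callable, Dict, List, Tuple

# A target is described by two hypothetical observable features.
Target = Tuple[bool, bool]     # (is_blue, looks_cancerous)
Action = Tuple[Target, float]  # (target, laser power in [0, 1])


def overblast_penalty(power: float) -> float:
    """Blasting too hard is penalised under every candidate goal."""
    return 2.0 * max(0.0, power - 0.5)


# Candidate goals that the reward signal might be a noisy proxy for.
HYPOTHESES: Dict[str, Callable[[Action], float]] = {
    "destroy_blue": lambda a: float(a[0][0]) - overblast_penalty(a[1]),
    "destroy_cancer": lambda a: float(a[0][1]) - overblast_penalty(a[1]),
}


def update(prior: Dict[str, float],
           observations: List[Tuple[Action, float]],
           noise: float = 0.5) -> Dict[str, float]:
    """Bayesian update, assuming Gaussian noise on each observed reward."""
    log_post = {h: math.log(p) for h, p in prior.items()}
    for action, reward in observations:
        for h, goal in HYPOTHESES.items():
            log_post[h] += -((reward - goal(action)) ** 2) / (2 * noise ** 2)
    top = max(log_post.values())
    weights = {h: math.exp(v - top) for h, v in log_post.items()}
    total = sum(weights.values())
    return {h: w / total for h, w in weights.items()}


def conservative_choice(actions: List[Action],
                        posterior: Dict[str, float],
                        plausible: float = 0.05) -> Action:
    """Pick the action whose worst case over still-plausible goals is best."""
    live = [h for h, p in posterior.items() if p >= plausible]
    return max(actions, key=lambda a: min(HYPOTHESES[h](a) for h in live))


# Made-up reward observations: the reward tracks "cancerous" better than "blue".
observations = [
    (((True, True), 0.4), 1.0),   # blue cancer cell
    (((True, False), 0.4), 0.3),  # blue but healthy cell
    (((False, True), 0.4), 0.8),  # undyed cancer cell
]
posterior = update({"destroy_blue": 0.5, "destroy_cancer": 0.5}, observations)

candidates = [((True, True), 0.4), ((True, False), 0.4),
              ((False, True), 0.4), ((True, True), 0.9)]
best = conservative_choice(candidates, posterior)
```

With these invented numbers, the posterior favours `destroy_cancer` without ruling out `destroy_blue`, so the maximin rule prefers a gently blasted blue cancer cell, which scores well under both goals. The later steps of the story (asking for clarification, modelling programmer bias) are not covered by this sketch.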

Seven key stages

There are seven key stages to this algorithm:

1. Detecting that its stated goal correlates plausibly with alternative goals.

2. Becoming conservative or seeking feedback as to which goal is desirable.

3. Repeating stages 1 and 2 as required.

4. Detecting that its feedback can be imperfect and that it can manipulate it.

5. Mixing actual feedback and idealised human feedback to extend its goals to new situations.

6. Once human feedback is fully predictable and useless, becoming permanently (somewhat) conservative over possible goals.

7. Extrapolating its goals in an automated way, partially based on its initial algorithm, partially based on what it has learnt about extrapolation up until now.

The question is, can all these stages be programmed or learnt by the AI? I feel that they might, since we humans can achieve them ourselves, at least imperfectly. So with a mix of explicit programming, examples of humans doing these tasks, learning on these examples, and examples of humans finding errors in the learning, it might be possible to design such an agent.
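
As a very rough illustration of what "explicit programming" of the later stages might look like, the sketch below shows the control logic for mixing actual and idealised feedback, and for the switch away from feedback once it becomes fully predictable. Every name, weight and threshold here is a hypothetical placeholder; the hard parts (estimating bias, constructing idealised programmers, extrapolating goals) are simply assumed as inputs.

```python
from dataclasses import dataclass


@dataclass
class FeedbackModel:
    """The robot's (hypothetical) model of its programmers' feedback."""
    predictability: float  # 0 = answers are a surprise, 1 = fully predicted
    estimated_bias: float  # 0 = trusted as unbiased, 1 = wholly biased


def weight_on_actual_feedback(model: FeedbackModel) -> float:
    """Actual answers matter less as they grow predictable or look biased."""
    return (1.0 - model.predictability) * (1.0 - model.estimated_bias)


def blended_goal_score(actual: float, idealised: float,
                       model: FeedbackModel) -> float:
    """Mix real feedback with that of imagined, less biased programmers."""
    w = weight_on_actual_feedback(model)
    return w * actual + (1.0 - w) * idealised


def next_mode(model: FeedbackModel, min_information: float = 0.05) -> str:
    """Once feedback carries almost no information, stop relying on it."""
    if 1.0 - model.predictability < min_information:
        return "conservative_extrapolation"
    return "ask_and_blend"


early = FeedbackModel(predictability=0.2, estimated_bias=0.0)
late = FeedbackModel(predictability=0.99, estimated_bias=0.3)

print(next_mode(early), blended_goal_score(1.0, 0.0, early))
print(next_mode(late))
```

The multiplicative weight is an arbitrary design choice: it just encodes the idea that feedback which is either fully predictable or heavily biased should contribute little to the robot's estimate of its goal.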

4 comments

comment by Stuart_Armstrong · 2022-12-04T16:45:08.182Z

This post is on a very important topic: how could we scale ideas about value extrapolation or avoiding goal misgeneralisation... all the way up to superintelligence? As such, its ideas are very worth exploring and getting to grips with. It's a very important idea.

However, the post itself is not brilliantly written, and is more of "idea of a potential approach" than a well crafted theory post. I hope to be able to revisit it at some point soon, but haven't been able to find or make the time, yet.

comment by Steven Byrnes (steve2152) · 2022-09-13T18:45:06.555Z

In the kinds of model-based RL AGI architectures that I normally think about (see here [LW · GW])…

- step 1 (“usual behavior”) is fine

- steps 2-4 (“notices that there are certain features connected with the shade of blue”, “notices that the correspondence is not perfect”, “deduces that it is plausible that its reward function is a noisy approximation for destruction of cancer cells”) kinda happens to some extent by default—I think there’s a way to do generative probabilistic modeling, wherein the algorithm is always pattern-matching to lots of different learned features in parallel, and that the brain uses this method, and that future AGI programmers will use this method too because it works better. (I wouldn’t have described these steps with the kind of self-aware language that you used, but I’m not sure that matters.)

- step 5 (“it starts being conservative”) seems possible to me, see Section 14.4.2 here [LW · GW].

- step 6 (“it asks for clarification from its programmers”) seems very hard because it requires something like a good UI / interpretability. I’m thinking of the concepts and features as abstract statistical patterns in patterns in patterns in sensory input and motor output, and I expect that this kind of thing will not be straightforward to present to the programmers. Again see here [LW · GW]. Another problem is that we need a criterion on when to ask the programmers, and I don’t see any principled way to pick that criterion, and if it’s too strict we get a big alignment tax, and by the way perhaps that alignment tax is unavoidable no matter what we do.

- step 7 (“it iterates”)—I don’t know how one would implement that. The obvious method (the AGI is motivated to do good concept-extrapolation just like it’s motivated to do whatever else) seems very dicey to me, see Section 14.4.3.3 [LW · GW]. Also, if that “obvious method” is the plan, then nothing you’re doing at Aligned AI would be relevant to that plan—it would just look like making an AGI that wants to be helpful, Paul Christiano style, and then hope the concept-extrapolation would emerge organically, right?

- step 8-9 (“it starts to notice that there are systematic biases in how the programmers give it feedback”, “it now has to partially infer what its goals are”)—again, I don’t know how one would implement that. I’m very confused. I was imagining that the AGI presents a menu of possible extrapolations, and the programmers pick the right one, and the source code directly sets that answer as a highly-confident ground truth. Apparently you have something else in mind? Maybe the thing I was talking about in Step 7 above?

comment by Measure · 2021-05-28T17:51:36.192Z

At step 8, why is the AI motivated to care about the idealized goal rather than just the reward signal? Are we assuming that the reward signal is determined by performance wrt the ideal goal?

reply by Stuart_Armstrong · 2021-05-28T19:27:13.277Z

That is the aim. It's easy to program an AI that doesn't care too much about the reward signal - the trick is to find a way that it doesn't care in a specific way that aligns it with our preferences.

eg what would you do if you had been told to maximise some goal, but were told that your reward signal would be corrupted and over-simplified? You can start doing some things in that situation to maximise your chance of not-wireheading; I want to program the AI to do similarly.