Book Review: How Minds Change

post by bc4026bd4aaa5b7fe (bc4026bd4aaa5b7fe0bdcd47da7a22b453953f990d35286b9d315a619b23667a) · 2023-05-25T17:55:32.218Z · LW · GW · 51 comments

In 2009, Eliezer Yudkowsky published Raising the Sanity Waterline [LW · GW]. It was the first article in his Craft and the Community [? · GW] sequence about the rationality movement itself, and it served as something of a mission statement. The rough thesis behind this article (really, the thesis behind the entire rationalist movement) can be paraphrased as something like this:

We currently live in a world where even the smartest people believe plainly untrue things. Religion is a prime example: its supernatural claims are patently untrue, and yet a huge number of people at the top of our institutions—scholars, scientists, leaders—believe otherwise.

But religion is just a symptom. The real problem is humanity's lack of rationalist skills. We have bad epistemology, bad meta-ethics, and we don't update our beliefs based on evidence. If we don't master these skills, we're doomed to just replace religion with something equally ridiculous.

We have to learn these skills, hone them, and teach them to others, so that people can make accurate decisions and predictions about the world without getting caught up in the fallacies so typical of human reasoning.

The callout of religion dates it: it comes from the era when the early English-speaking internet was a battlefield between atheism and religion. Religion has slowly receded from public life since then, but the rationality community stuck around, in places like this site and SSC/ACX and the Effective Altruism community.

I hope you'll excuse me, then, if I say that the rationalist community has been a failure.

Sorry! Put down your pitchforks. That's not entirely true. There's a very real sense in which it's been a success. The community has spread and expanded to immense levels. Billions of dollars flow through Effective Altruist organizations to worthy causes. Rationalist and rationalist-adjacent people have written several important and influential books. And pockets of the Bay Area and other major cities have self-sustaining rationalist social circles, filled with amazing people doing ambitious and interesting things.

But that wasn't the point of the community. At least not the entire point. From Less Wrong's account of its own history [? · GW]:

After failed attempts at teaching people to use Bayes' Theorem, [Yudkowsky] went largely quiet from [his transhumanist mailing list] to work on AI safety research directly. After discovering he was not able to make as much progress as he wanted to, he changed tacts to focus on teaching the rationality skills necessary to do AI safety research until such time as there was a sustainable culture that would allow him to focus on AI safety research while also continuing to find and train new AI safety researchers.

In short: the rationalist community was intended as a way of preventing the rise of unfriendly AI.

The results on this goal have been mixed, to say the least.

The ideas of AI Safety have made their way out there. Many people who are into AI have heard of ideas like a paperclip maximizer. Several AI Safety organizations have been founded, and a nontrivial chunk of Effective Altruists are actively trying to tackle this problem.

But the increased promulgation of the idea of transformational AI also caught the eye of some powerful and rich people, some of whom proceeded to found OpenAI. Most people of a Yudkowskian bent consider this to be a major "own goal": although it's good to have one of the world's leading AI labs be a sort-of-non-profit that openly says it cares about AI Safety, its founding also created a race to AGI, accelerating AI timelines like never before.

And it's not just AI. Outside of the rationalist community, the sanity waterline hasn't gotten much better. Sure, religion has retreated, but just as predicted, it's been replaced by things that are at least as ridiculous if not worse. Politics, in both the US and abroad, has gone insane and become more polarized than ever. Arguments are soldiers [? · GW] and debate is war. Making it worse, unlike religions, which are explicitly beliefs about the supernatural, these are beliefs about reality and the natural world that have about as much rigor in them as religious dogma. I'll leave it up to you to decide which outgroup belief you think this is a dogwhistle for.

This isn't where the community is supposed to have ended up. If rationality is systematized winning [LW · GW], then the community has failed to be rational.

How did it end up here? How could the community go so well in some ways, but so poorly in others?

I think the answer to this is that the community was successful by means of selection. The kind of people that flocked to the community had high intellectual curiosity, were willing to tolerate weird ideas, and, if I had to guess, maybe had trouble finding like-minded people who'd understand them outside the community.

Surveys on Less Wrong put them at an average IQ of 138 [LW · GW], and even the 2022 ACX survey had an average IQ of 137 (among people who filled that question out). That's higher than 99.3% of the world population. Even if you, like Scott Alexander, assume that's inflated by about 10–15 points, that's still higher than around 95% of the population. Having read Yudkowsky's writing, I'm not surprised: I consider myself smart (who doesn't?), but his writing is dense in a way that makes it sometimes hard even for me to grasp what he's saying until I read it over multiple times.
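The percentile figures above can be sanity-checked with a few lines of Python. This is a rough sketch that assumes IQ is normally distributed with mean 100 and standard deviation 15 (the usual norming convention, but an assumption here; the surveys themselves rely on self-reported scores). The helper name `iq_percentile` is made up for illustration.

```python
from statistics import NormalDist

# Assumption: IQ scores are normed to mean 100 and standard deviation 15.
IQ_SCALE = NormalDist(mu=100, sigma=15)


def iq_percentile(iq: float) -> float:
    """Percentage of the population expected to score below `iq`."""
    return 100 * IQ_SCALE.cdf(iq)


if __name__ == "__main__":
    cases = [
        ("Less Wrong survey average", 138),
        ("ACX 2022 survey average", 137),
        ("ACX average deflated by 10 points", 127),
        ("ACX average deflated by 15 points", 122),
    ]
    for label, iq in cases:
        print(f"{label}: IQ {iq} is above {iq_percentile(iq):.1f}% of the population")
```

Under these assumptions, the two deflated scores land on either side of the "around 95%" figure, so the claim holds up as a rough estimate.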

All this led to a community which is influential but insular. Its communication is laser-focused to a particular kind of person, and has been extraordinarily successful at getting that kind of person on board, while leaving the rest of the world behind.

Ideas have filtered out, sure, but they're distorted when they do, and it's happening slower than needed in order to make progress in AI Safety. Some people are better than others at getting ideas out of the bubble, like Scott Alexander, but it's worth remembering he's kept himself grounded among more average people via his day job in psychiatry and spent his teenage years learning how to manipulate consensus reality.

Not only that, but anecdotally it feels like the insularity has exacerbated bad tendencies in some people. They've found their tribe, and they feel that talking to people outside it is pointless. Even in my minimal IRL interactions with the community, I've heard people deriding "normies", or recklessly spending their weirdness points [LW · GW] in a way that throws up red flags for anyone who's worried that they may have stumbled into a cult.

On the AI front, I've seen some people, including one person who works as a career advisor at 80,000 Hours, assume that most important people involved in AI capabilities research either already understand the arguments made by Yudkowsky et al, or wouldn't be receptive to them anyway, and thus there's minimal point in trying to reach or convince them.

Are they right? Are most people beyond hope? Is all this pointless?

David McRaney started out as a blogger in 2009, the same year LessWrong was founded.

Although he doesn't (to my knowledge) consider himself a part of the rationalist community, his blog, You Are Not So Smart, focused on a sort of pop-culture form of some of the same ideas that were floating around Less Wrong and related communities. The thrust of it was to highlight a bunch of ways in which your brain could fool itself and act irrationally, everything from confirmation bias, to deindividuation in crowds and riots, to procrastination, to inattention blindness and the invisible gorilla.

In 2011, he published much of this into a book of the same name. In 2012, his blog became a podcast that's been running continuously ever since. In 2014, he published a follow-up book titled You Are Now Less Dumb. But in those early years, the thrust of it remained more or less the same: people are dumb in some very specific and consistent ways, including you. Let's have some fun pointing all this out, and maybe learn something in the process.

He didn't think people could be convinced. He'd grown up in Mississippi prior to the rise of the internet, where, in his words:

The people in movies and television shows seemed to routinely disagree with the adults who told us the South would rise again, homosexuality was a sin, and evolution was just a theory. Our families seemed stuck in another era. Whether the issue was a scientific fact, a social norm, or a political stance, the things that seemed obviously true to my friends, the ideas reaching us from far away, created a friction in our home lives and on the holidays that most of us learned to avoid. There was no point in trying to change some people’s minds.

And then he learned about Charlie Veitch.

Charlie was one of several 9/11 truthers—people who thought that the US government had planned and carried out the September 11th attacks—starring on a BBC documentary called Conspiracy Road Trip. He wasn't just a conspiracy theorist: he was a professional conspiracy theorist, famous online among the conspiracy community.

The premise of the BBC show was to take a handful of conspiracy theorists and bring them to see various experts around the world who would patiently answer their questions, giving them information and responding to every accusation that the conspiracy theorists threw at them.

Charlie and the other 9/11 truthers were put in front of experts in demolition and officials from the Pentagon, and were even put in a commercial flight simulator. Still, at the end of the show, every single one of the conspiracy theorists had dismissed everything the experts had told them, and refused to admit they were wrong.

Except Charlie.

Charlie was convinced, and admitted to the BBC that he had changed his mind.

This wasn't without cost. After the episode aired, Charlie became a pariah among the conspiracy community. People attacked him and his family, accusing him of being paid off by the BBC and FBI. Someone made a YouTube channel called "Kill Charlie Veitch". Someone else found pictures of his two young nieces and photoshopped nudity onto them. Alex Jones claimed Charlie was a double agent all along, and urged his fans to stay vigilant.

Charlie kept to his newfound truth, and left the community for good.

David did not understand why Charlie had changed his mind. As he writes in the book:

For one thing, from writing my previous books, I knew the idea that facts alone could make everyone see things the same way was a venerable misconception. […]

In science communication, this used to be called the information deficit model, long debated among frustrated academics. When controversial research findings on everything from the theory of evolution to the dangers of leaded gasoline failed to convince the public, they’d contemplate how to best tweak the model so the facts could speak for themselves. But once independent websites, then social media, then podcasts, then YouTube began to speak for the facts and undermine the authority of fact-based professionals like journalists, doctors, and documentary filmmakers, the information deficit model was finally put to rest. In recent years, that has led to a sort of moral panic.

As described in the book, this set David on a new path of research. He infiltrated the hateful Westboro Baptist Church to attend a sermon, and later talked to an ex-member. He interviewed a Flat Earther live on stage. He even met with Charlie Veitch himself.

At the same time, he started interviewing various people who were trying to figure out what caused people to change their minds, as well as experts who were investigating why disagreements happen in the first place, and why attempts to change people's minds sometimes backfire.

He documented a lot of this in his podcast. Ultimately, he wrapped this all up in a book published just last year, How Minds Change.

I hesitate to summarize the book too much. I'm concerned about oversimplifying the points it's trying to make, and leaving you with the false sense that you've gotten a magic bullet for convincing anyone of anything you believe in.

If you're interested in this at all, you should just read the whole thing. (Or listen to the audiobook, narrated by David himself, where he puts the skills developed over years of hosting his podcast to good use.) It's a reasonably enjoyable read, and David does a good job of basing each chapter around a story, interview, or personal experience of his to help frame the science.

But I'll give you a taste of some of what's inside.

He talks to neuroscientists from NYU about how people end up with different beliefs in the first place. The neuroscientists tie it back to "The Dress", pictured above. Is it blue and black, or is it white and gold? There's a boring factual answer to that question which is "it's blue and black in real life", but the more interesting question is why did people disagree on the colors in the picture in the first place?

The answer is that people have different priors. If you spend a lot of time indoors, where lighting tends to be yellow, you'd likely see the dress as black and blue. If you spend more time outdoors, where lighting is bluer, you'd be more likely to see the dress as white and gold. The image left it ambiguous as to what the lighting actually was, so people interpreted the same factual evidence in different ways.

The researchers figured this out so well that they were able to replicate the effects in a new image. Are the crocs below gray, and the socks green? Or are the crocs pink, and the socks white, but illuminated by green light? Which one you perceive depends on how used you are to white tube socks!

This generalizes beyond just images: every single piece of factual information you consume is interpreted in light of your life experience and the priors encoded from it. If your experience differs from someone else's, it can seem like they're making a huge mistake. Maybe even a mistake so obvious that it can only be a malicious lie!
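The "different priors" explanation can be illustrated with a toy Bayes calculation. This is only a sketch: the likelihoods and priors below are invented for illustration and are not taken from the NYU research or from the book. Two viewers see the same ambiguous pixels but hold different prior beliefs about the lighting, and they end up with different conclusions about the dress.

```python
def posterior_blue_black(prior_warm_light: float) -> float:
    """Posterior probability that the dress is blue/black, given the ambiguous photo.

    Toy model: the viewer does not know whether the scene is lit by warm
    (yellowish) or cool (bluish) light, and starts out with no opinion
    about the dress colour itself.
    """
    prior_light = {"warm": prior_warm_light, "cool": 1.0 - prior_warm_light}

    # Hypothetical likelihoods of seeing these exact pixels.
    # Under warm light the pixels are best explained by a blue/black dress;
    # under cool light they are best explained by a white/gold dress.
    likelihood = {
        "blue_black": {"warm": 0.8, "cool": 0.2},
        "white_gold": {"warm": 0.2, "cool": 0.8},
    }
    prior_dress = {"blue_black": 0.5, "white_gold": 0.5}

    # Marginalise over the unknown lighting, then apply Bayes' rule.
    evidence = {
        dress: prior_dress[dress]
        * sum(prior_light[light] * likelihood[dress][light] for light in prior_light)
        for dress in prior_dress
    }
    return evidence["blue_black"] / sum(evidence.values())


if __name__ == "__main__":
    # Hypothetical viewers: one mostly indoors, one mostly outdoors.
    for viewer, prior in [("mostly indoors", 0.8), ("mostly outdoors", 0.2)]:
        print(f"{viewer}: P(blue/black | photo) = {posterior_blue_black(prior):.2f}")
```

Neither viewer is reasoning badly here. Both apply the same rule to the same evidence, and the disagreement comes entirely from what they assumed before looking.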

Then, he explores what causes people to defend incorrect beliefs. This probably won't surprise you, but it has to do with how much of their lives, social life, and identity are riding on those beliefs. The more those are connected, and the more those are perceived as being under threat, the more strongly people will be motivated to avoid changing their minds.

As one expert quoted in the book said, when they put people in an MRI and then challenged them on a political wedge issue, “The response in the brain that we see is very similar to what would happen if, say, you were walking through the forest and came across a bear.”

For a slight spoiler, this is what happened to Charlie Veitch. He'd started getting involved in an unrelated new-age-spiritualist sort of social scene, which meant dropping 9/11 trutherism was much less costly to him.

This is also what happened to the ex-Westboro members he talked to: they left the church for a variety of reasons first, established social connections and communication with those outside, and only then changed their minds about key church doctrine like attitudes towards LGBT folks.

To put it in Yudkowskian terms, these were people who, for one reason or another, had a Line of Retreat [LW · GW].

Then he talks about the mechanics of debate and persuasion.

He dives into research that shows that people are way better at picking apart other people's reasoning than their own. This suggests that reasoning can be approached as a social endeavor. Other people Babble arguments, and you Prune them [? · GW], or vice-versa.

He then interviews some experts about the "Elaboration-Likelihood Model". It describes two modes of persuasion:

- The "Peripheral Route", in which people don't think too hard about an argument and instead base their attitudes on quick, gut feelings like whether the person is attractive, famous, or confident-sounding.

- The "Central Route", which involves a much slower and more effortful evaluation of the content of the argument.

To me, this sounds a lot like System 1 and System 2 thinking. But the catch is it takes motivation to get people to expend the effort to move to the Central Route. Nobody's ever motivated to think too hard about ads, for example, so they almost always use the Peripheral Route. But if you're actually trying to change people's minds, especially on something they hold dear, you need to get them to the Central Route.

Then there are the most valuable parts of the book: techniques you can employ to do this for people in your own life.

David interviews three different groups of people that independently pioneered three surprisingly similar techniques:

- Deep Canvassing is a technique pioneered by the Los Angeles LGBT Center. Volunteers developed it as a way to do door-to-door canvassing in support of LGBT rights and same-sex marriage in the wake of California's 2008 Prop 8 amendment, which made same-sex marriage illegal.

- Street Epistemology is an outgrowth of the New Atheist movement, whose practitioners went from trying to convince people that religion is false to a much more generalized approach of helping people explore the underpinnings of any of their beliefs and whether those underpinnings are solid.

- Smart Politics is a technique developed by Dr. Karin Tamerius of the progressive-advocacy organization of the same name, and based on a therapy technique called Motivational Interviewing.

(Sorry, it doesn't look like the conservatives have caught on to this kind of approach yet.)

The key ingredients in all these techniques are kindness, patience, empathy, and humility. They're all meant to be done in a one-on-one, real-time conversation. Each of them has some differences in focus:

Among the persuasive techniques that depend on technique rebuttal, street epistemology seems best suited for beliefs in empirical matters like whether ghosts are real or airplanes are spreading mind control agents in their chemtrails. Deep canvassing is best suited for attitudes, emotional evaluations that guide our pursuit of confirmatory evidence, like a CEO is a bad person or a particular policy will ruin the country. Smart Politics is best suited for values, the hierarchy of goals we consider most important, like gun control or immigration reform. And motivational interviewing is best suited for motivating people to change behaviors, like getting vaccinated to help end a pandemic or recycling your garbage to help stave off climate change.

But all of them follow a similar structure. Roughly speaking, it's something like this:

- Establish rapport. Make the other person feel comfortable, assure them you're not out to shame or attack them, and ask for consent to work through their reasoning.

- Ask them for their belief. For best results, ask them for a confidence level from one to ten. Repeat it back to them in your own words to confirm you understand what they're saying. Clarify any terms they're using.

- Ask them why they believe what they do. Why isn't their confidence lower or higher?

- Repeat their reasons back to them in your own words. Check that you've done a good job summarizing.

- Continue like this for as long as you like. Listen, summarize, repeat.

- Wrap up, thank them for their time, and wish them well. Or, suggest that you can continue the conversation later.

Each technique has slightly different steps, which you can find in full in Chapter 9.

David also suggests adding a "Step 0" above all those, which is to ask yourself why you want to change this person's mind. What are your goals? Is it for a good reason?

The most important step is step 1, establishing rapport. If they say their confidence level is a one or a ten, that's a red flag that they're feeling threatened or uncomfortable and you should probably take a step back and help them relax. If done right, this should feel more like therapy than debate.

Why is this so important? Partly to leave them a line of retreat. If you can make them your friend, if you can show them that yes, even if you abandon this belief, there is still room for you to be accepted and respected for who you are, then they'll have much less reason to hold on to their previous beliefs.

Several things about these techniques are exciting to me.

First off is that they've been shown to work. Deep Canvassing in particular was studied academically in Miami. The results, even with inexperienced canvassers and ten-minute conversations:

When it was all said and done, the overall shift Broockman and Kalla measured in Miami was greater than “the opinion change that occurred from 1998 to 2012 towards gay men and lesbians in the United States.” In one conversation, one in ten people opposed to transgender rights changed their views, and on average, they changed that view by 10 points on a 101-point “feelings thermometer,” as they called it, catching up to and surpassing the shift that had taken place in the general public over the last fourteen years.

If one in ten doesn’t sound like much, you’re neither a politician nor a political scientist. It is huge. And before this research, after a single conversation, it was inconceivable. Kalla said a mind change of much less than that could easily rewrite laws, win a swing state, or turn the tide of an election.

The second is that in these techniques, people have to persuade themselves. You don't just dump a bunch of information on people and expect them to take it in: instead, they're all focused on Socratic questioning.

This makes the whole conversation much more of a give-and-take: because you're asking people to explain their reasoning, you can't perform these techniques without letting people make their arguments at you. That means they have a chance to persuade you back!

This makes these techniques an asymmetric weapon [? · GW] in the pursuit of truth. You have to accept that you might be wrong, and that you'll be the one whose opinion is shifted instead. You should embrace this, because that which can be destroyed by the truth, should be [? · GW]!

The third is that the people using these techniques have skin in the game.

These weren't developed by armchair academics in a lab. They were developed by LGBT activists going out there in the hot California sun trying to desperately advocate for their rights, and New Atheists doing interviews with theists that were livestreamed to the whole internet. If their techniques didn't work, they'd notice, same as a bodybuilder might notice their workouts aren't helping them gain muscle.

And the last is that there's the potential for driving social change.

The last chapter in the book talks about how individual mind changes turn into broader social change. It's not as gradual as you'd think: beliefs seem to hit a tipping point after which the belief cascades and becomes the norm.

As you probably expect, I've been a long-time fan of David's from back in the early days. As a result, it's a bit hard to do an unbiased review. I don't want to oversell this book: he's a journalist, not a psychology expert, and relies on second-hand knowledge from those he interviews. Similarly, I'm not a psychology expert myself, so it's hard for me to do a spot-check on the specific neurological and psychological details he brings up.

But I still think this would be valuable for more people in the rationalist community to read. David isn't as smart as Yudkowsky—few people are—but I do think he caught on to some things that Yudkowsky missed.

There's an undercurrent in Yudkowsky's writing where I feel like he underestimates more average people, like he has trouble differentiating the middle-to-left of the IQ bell-curve. Take this excerpt from his famous (fan)fiction, Harry Potter and the Methods of Rationality (Ch. 90):

"That's what I'd tell you if I thought you could be responsible for anything. But normal people don't choose on the basis of consequences, they just play roles. […] People like you aren't responsible for anything, people like me are, and when we fail there's no one else to blame."

I know Yudkowsky doesn't necessarily endorse everything his fictional characters say, but I can't help but wonder whether that's an attitude he still keeps beneath the surface.

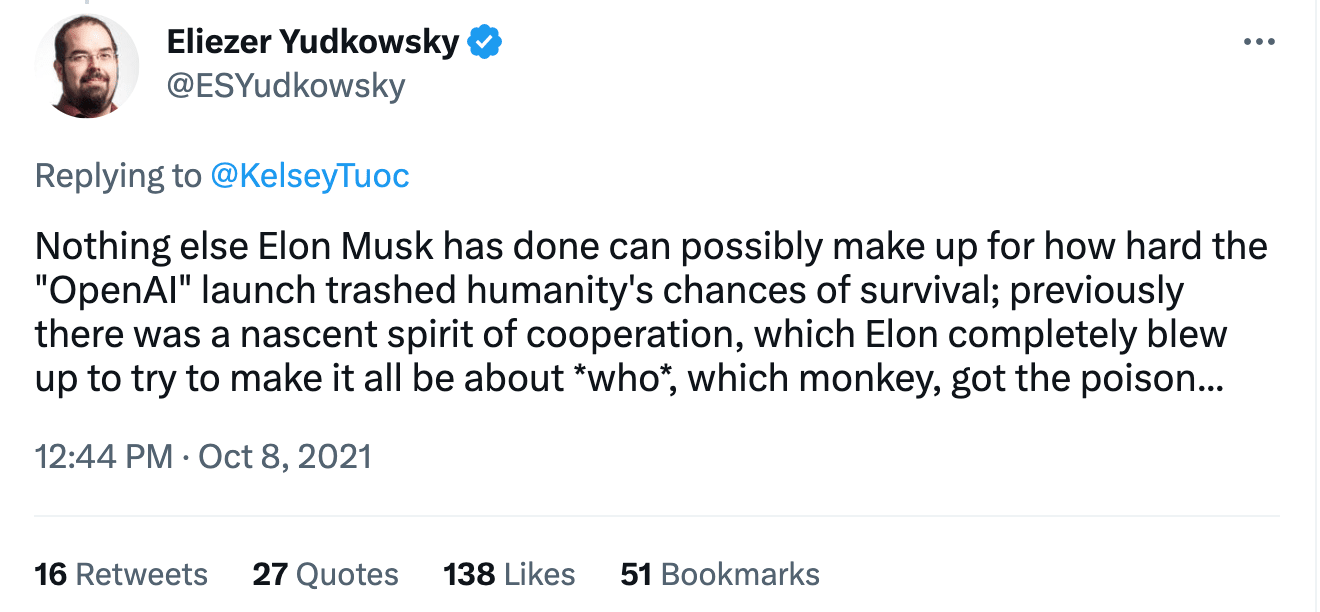

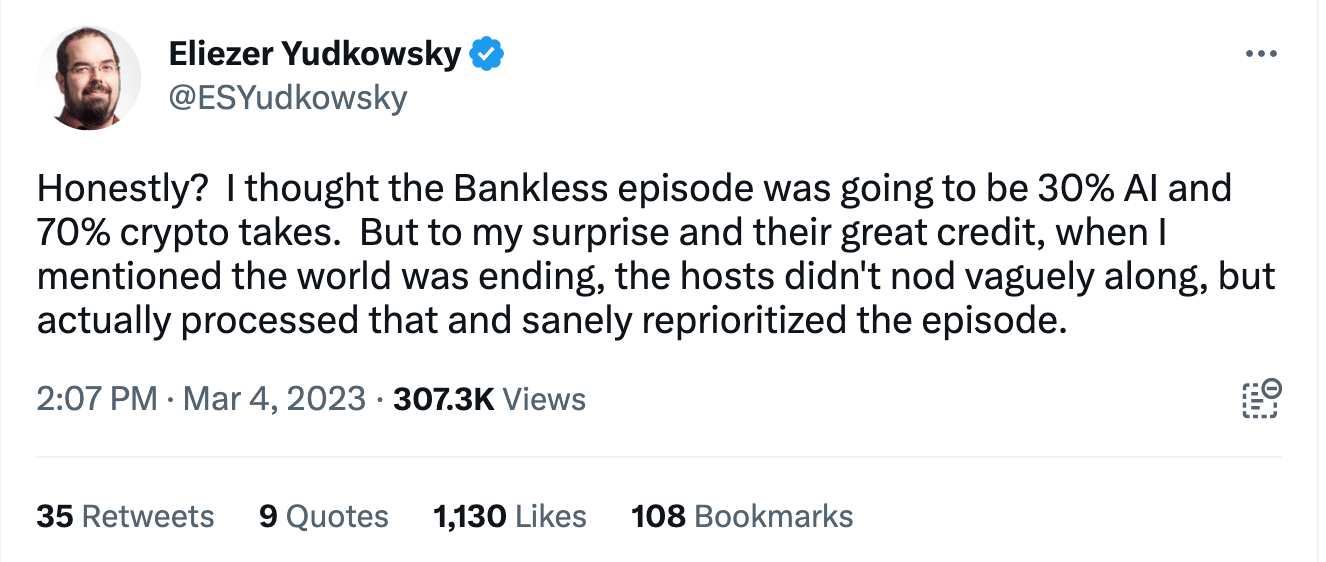

It's not just his fiction. Recently he went on what he thought was a low-stakes crypto podcast and was surprised that the hosts wanted to actually hear him out when he said we were all going to die soon:

The other thing that seemed to surprise him was the popularity of the episode. This led to him doing more podcasts and more press, including a scathing article in Time magazine that's been getting a lot of attention lately.

I'm sorry to do this to you Eliezer, but in your own words, surprise is a measure of a poor hypothesis [LW · GW]. I think you have some flaws in your model of the public.

In short: I don't believe that the average AI researcher, let alone the public at large, fully grasps the arguments for near-to-mid-term AI extinction risk (e.g. within the next 10–50 years). I also don't think that trying to reach them is hopeless. You just have to do it right.

This isn't just based on hearsay either. I've personally tried these techniques on a small number of AI capabilities researchers I've gotten to know. Results have been somewhat mixed, but not as universally negative as you'd expect. I haven't gotten to do much of it yet (there's only so much emotional energy I can spend meditating on the non-trivial chance that neither I nor anyone I know will see our children live to adulthood), but I've had at least one person who was grateful for the time and space I gave them to think through this kind of stuff, time that they normally wouldn't get otherwise, and who came out mostly agreeing with me.

The book, and my own experiences, have started to make me think that rationality isn't a solo sport. Maybe Eliezer and people like him can sit down alone and end up with new conclusions. But most of the rest of the world benefits from having someone sit with them and probe their thoughts and their reasons for believing what they believe.

If you're the kind of person who's been told you're a good listener, if you're the kind of person that ends up as your friends' informal therapist, or if you're the kind of person that is more socially astute, even if it means you're more sensitive to the weirder aspects of the rationalist movement, those are all likely signs that you might be particularly well-suited to playing this kind of role for people. Even if you aren't, you could try anyway.

And you might have some benefit in doing it soon. That last part, about social change? With AI chatbots behaving badly around the world, and the recent open letter calling for a pause in AI training making the news, I think we're approaching a tipping point on AI existential risk.

The more people we have that understand the risks, be they AI researchers or otherwise, the better that cascade will go. The Overton window is shifting, and we should be ready for it.

51 comments


comment by jaspax · 2023-05-30T19:00:50.748Z · LW(p) · GW(p)

(Sorry, it doesn't look like the conservatives have caught on to this kind of approach yet.)

Actually, if you look at religious proselytization, you'll find that these techniques are all pretty well-known, albeit under different names and with different purposes. And while this isn't actually synonymous with political canvassing, it often has political spillover effects.

If you wanted, you could argue this the other way: left-oriented activism is more like proselytization than it is factual persuasion. And LessWrong, in particular, has a ton of quasi-religious elements, which means that its recruitment strategy necessarily looks a lot like evangelism.

Replies from: jim-pivarski, charles-m, mruwnik, bc4026bd4aaa5b7fe0bdcd47da7a22b453953f990d35286b9d315a619b23667a

↑ comment by Jim Pivarski (jim-pivarski) · 2023-06-01T19:51:54.422Z · LW(p) · GW(p)

And even more deeply than door-to-door conversations, political and religious beliefs spread through long-term friend and romantic relationships, even unintentionally.

I can attest to this first-hand because I converted from atheism to Catholicism (25 years ago) by the unintended example of my girlfriend-then-wife, and then I saw the pattern repeat as a volunteer in RCIA, an education program for people who have decided to become Catholic (during the months before confirmation), and pre-Cana, another program for couples who plan to be married in the church (also months-long). The pattern in which a romantic relationship among different-religion (including no-religion) couples eventually ends up with one or the other converting is extremely common. I'd say that maybe 90% of the people in RCIA had a Catholic significant other, and maybe 40% of the couples in pre-Cana were mixed couples that became both-Catholic. What this vantage point didn't show me was the fraction in which the Catholic member of the couple converted away or maybe just got less involved and decided against being married Catholic (and therefore no pre-Cana). I assume that happens approximately as often. But it still shows that being friends or more than friends is an extremely strong motivator for changing one's views, whichever direction it goes.

Since it happened to me personally, the key thing in my case was that I didn't start with a clear idea of what Catholics (or some Catholics, anyway) actually believe. In reading this article and the ones linked from it, I came to Talking Snakes: A Cautionary Tale [LW · GW], which illustrates the point very well: scottalexander quoted Bill Maher as saying that Christians believe that sin was caused by a talking snake, and scottalexander himself got into a conversation with a Muslim in Cairo who thought he believed that monkeys turned into humans. Both are wild caricatures of what someone else believes, or at least a way of phrasing it that leads to the wrong mental image. In other words, miscommunication. What I found when I spent a lot of time with a Catholic—who wasn't trying to convert me—was that what some Catholics (can't attest for all of them) meant by the statements in their creed isn't at all the ridiculous things that written creed could be made to sound like.

In general, that point of view is the one Yudkowsky dismissed in Outside the Laboratory [? · GW], which is to say that physical and religious statements are in different reality-boxes [LW · GW], but he dismissed it out of hand. Maybe there are large groups of people who interpret religious statements the same way they interpret the front page of the newspaper, but it would take a long-term relationship, with continuous communication, to even find out if that is true, for a specific individual. They might say that they're biblical literalists on the web or fill out surveys that way, but what someone means by their words can be very surprising. (Which is to say, philosophy is hard.) Incidentally, another group I was involved in, a Faith and Reason study group in which all of the members were grad students in the physical sciences, couldn't even find anyone who believed in religious claims that countered physical facts. Our social networks didn't include any.

Long-term, empathic communication trades the birds-eye view of surveys for narrow depth. Surely, the people I've come in contact with are not representative of the whole, but they're not crazy, either.

Replies from: tim-freeman

↑ comment by Tim Freeman (tim-freeman) · 2023-06-04T20:40:55.544Z · LW(p) · GW(p)

If you are Catholic, or remember being Catholic, and you're here, maybe you can explain something for me.

How do you reconcile God's benevolence and omnipotence with His communication patterns? Specifically: I assume you believe that the Good News was delivered at one specific place and time in the world, and then allowed to spread by natural means. God could have given everyone decent evidence that Jesus existed and was important, and God could have spread that information by some reliable means. I could imagine a trickster God playing games with an important message like that, but the Christian God is assumed to be good, not a trickster. How do you deal with this?

Replies from: jim-pivarski↑ comment by Jim Pivarski (jim-pivarski) · 2023-06-05T21:40:54.982Z · LW(p) · GW(p)

I'm still Catholic. I was answering your question and it got long, so I moved it to a post: Answer to a question: what do I think about God's communication patterns? [LW · GW]

↑ comment by Charles M (charles-m) · 2023-06-02T15:44:17.284Z · LW(p) · GW(p)

The whole technique of asking people's opinions and repeating them back to them is extraordinarily effective with respect to currently in-fashion gender ideology. "What is a Woman" did just that; it let people explain themselves in their own words and calmly and politely repeated it back. They hung themselves with no counter argument whatsoever. Now, whether they ever actually changed their mind is another thing.

I think you could do the same in the climate change context, though it's not quite as easy.

↑ comment by mruwnik · 2023-06-01T14:15:26.753Z · LW(p) · GW(p)

Jehovah's Witnesses are what first came to mind when reading the OP. They're sort of synonymous with going door to door in order to have conversations with people, often saying that they're willing for their minds to be changed through respectful discussions. They also are one of the few Christian-adjacent sects (for lack of a more precise description) to actually show large growth (at least in the West).

↑ comment by bc4026bd4aaa5b7fe (bc4026bd4aaa5b7fe0bdcd47da7a22b453953f990d35286b9d315a619b23667a) · 2023-06-03T01:13:44.689Z · LW(p) · GW(p)

This is absolutely a fair point that I did not think about. All of David's examples in the book are left-ish-leaning and I was mostly basing it on those. My goal with that sentence was to just lampshade that fact.

comment by Tim Freeman (tim-freeman) · 2023-06-02T16:08:54.425Z · LW(p) · GW(p)

In response to "The real problem is humanity's lack of rationalist skills. We have bad epistemology, bad meta-ethics, and we don't update our beliefs based on evidence.":

Another missing rationalist skill is having some sensible way to decide who to trust. This is necessary because there isn't time to be rational about all topics. At best you can dig at the truth of a few important issues and trust friends to give you accurate beliefs about the rest. This failure has many ramifications:

- The SBF/FTX fiasco.

- I quit LessWrong for some years in part because there were people there who were arguing in bad faith and the existing mechanisms to control my exposure to such people were ineffective.

- Automata and professional trolls lie freely on social media with no effective means to stop them.

- On a larger scale, bad decisions about who to trust lead to perpetuation of religion, bad decisionmaking around Covid, and many other beliefs held mostly by people who haven't taken the time to attempt to be rational about them.

comment by Ruby · 2023-06-01T00:48:26.395Z · LW(p) · GW(p)

Curated. I like this post taking LessWrong back to its roots of trying to get us humans to reason better and believe true things. I think we need that now as much as we did in 2009, and I fear that my own beliefs have become ossified through identity and social commitment, etc. LessWrong now talks a lot about AI, and AI is increasingly a political topic (this post is a little political in a way I don't want to put front and center but I'll curate anyway), which means it's worth recalling the ways our minds get stuck and exploring ways to ask ourselves questions in ways where the answer could come back different.

Replies from: toothpaste, bc4026bd4aaa5b7fe0bdcd47da7a22b453953f990d35286b9d315a619b23667a↑ comment by toothpaste · 2023-06-01T06:22:35.514Z · LW(p) · GW(p)

Won't the goal of getting humans to reason better necessarily turn political at a certain point? After all, if there is one side of an issue that is decidedly better from some ethical perspective we have accepted, won't the rationalist have to advocate that side? Won't refraining from taking political action then be unethical? This line of reasoning might need a little bit of reinforcement to be properly convincing, but it's just to make the point that it seems to me that since political action is action, having a space cover rationality and ethics and not politics would be stifling a (very consequential) part of the discussion.

I'm not here very frequently, I just really like political theory and have seen around the site that you guys try to not discuss it too much. Not very common to find a good place to discuss it, as one would expect. But I'd love to find one!

Replies from: ryan_b, mruwnik↑ comment by ryan_b · 2023-06-01T21:52:46.685Z · LW(p) · GW(p)

Won't the goal of getting humans to reason better necessarily turn political at a certain point?

Trivially, yes. Among other things, we would like politicians to reason better, and for everyone to profit thereby.

I'm not here very frequently, I just really like political theory and have seen around the site that you guys try to not discuss it too much.

As it happens, this significantly predates the current political environment. Minimizing talking about politics, in the American political party horse-race sense, is one of our foundational taboos. It is not so strong anymore - once even a relevant keyword without appropriate caveats would pile on downvotes and excoriation in the comments - but for your historical interest the relevant essay is Politics Is The Mind-Killer. [LW · GW] You can search that phrase, or similar ones like "mind-killed" or "arguments are soldiers" to get a sense of how it went. The basic idea was that while we are all new at this rationality business, we should try to avoid talking about things that are especially irrational.

Of course at the same time the website was big on atheism, which is an irony we eventually recognized and corrected. The anti-politics taboo softened enough to allow talking about theory, and mechanisms, and even non-flashpoint policy (see the AI regulation posts). We also added things like arguing about whether or not god exists to the taboo list. There were a bunch of other developments too, but that's the directional gist.

Happily for you and me both, political theory tackled well as theory finds a good reception here. As an example I submit A voting theory primer for rationalists [LW · GW] and the follow-up posts by Jameson Quinn [LW · GW]. All of these are on the subject of theories of voting, including discussing some real life examples of orgs and campaigns on the subject, and the whole thing is one of my favorite chunks of writing on the site.

↑ comment by mruwnik · 2023-06-01T14:22:06.507Z · LW(p) · GW(p)

It depends what you mean by political. If you mean something like "people should act on their convictions" then sure. But you don't have to actually go into politics to do that, the assumption being that if everyone is sane, they will implement sane policies (with the obvious caveats of Moloch, Goodhart etc.).

If you mean something like "we should get together and actively work on methods to force (or at least strongly encourage) people to be better", then very much no. Or rather it gets complicated fast.

↑ comment by bc4026bd4aaa5b7fe (bc4026bd4aaa5b7fe0bdcd47da7a22b453953f990d35286b9d315a619b23667a) · 2023-06-03T01:43:29.067Z · LW(p) · GW(p)

Thank you. I don't think it's possible to review this book without talking a bit about politics, given that so many of the techniques were forged and refined via political canvassing, but I also don't think that's the main takeaway, and I hope this introduced some good ideas to the community.

comment by simon · 2023-05-26T07:30:12.357Z · LW(p) · GW(p)

This post has a lot of great points.

But one thing that mars it for me to some extent is the discussion of OpenAI.

To me, the criticism of OpenAI feels like it's intended as a tribal signifier, like "hey look, I am of the tribe that is against OpenAI".

Now maybe that's unfair and you had no intention of anything like that and my vibe detection is off, but if I get that impression, I think it's reasonably likely that OpenAI decisionmakers would get the same impression, and I presume that's exactly what you don't want based on the rest of the post.

And even leaving aside practical considerations, I don't think OpenAI warrants being treated as the leading example of rationality failure.

First, I am not convinced that the alternative to OpenAI existing is the absence of a capabilities race. I think, in contrast, that a capabilities race was inevitable and that the fact that the leading AI lab has as decent a plan as it does is potentially a major win by the rationality community.

Also, while OpenAI's plans so far look inadequate, to me they look considerably more sane than MIRI's proposal to attempt a pivotal act with non-human-values-aligned AI. There's also potential for OpenAI's plans to be improved as more knowledge on mitigating AI risk is obtained, which is helped by their relatively serious attitude as compared to, for example, Google after their recent reorganization. Meta.

And while OpenAI is creating a race dynamic by getting ahead, IMO MIRI's pivotal act plan would be creating a far worse race dynamic if they were showing signs of being able to pull it off anytime soon.

I know many others don't disagree, but I think that there is enough of a case for OpenAI being less bad than potential alternatives that using it as if it were an uncontroversial bad thing detracts from the post.

Replies from: bc4026bd4aaa5b7fe0bdcd47da7a22b453953f990d35286b9d315a619b23667a↑ comment by bc4026bd4aaa5b7fe (bc4026bd4aaa5b7fe0bdcd47da7a22b453953f990d35286b9d315a619b23667a) · 2023-06-03T01:20:47.615Z · LW(p) · GW(p)

I single it out because Yudkowsky singled it out and seems to see it as a major negative consequence to the goals he was trying to achieve with the community.

comment by zrezzed (kyle-ibrahim) · 2023-05-28T18:19:38.680Z · LW(p) · GW(p)

This isn't where the community is supposed to have ended up. If rationality is systematized winning [LW · GW], then the community has failed to be rational.

Great post, and timely, for me personally. I found myself having similar thoughts recently, and this was a large part of why I recently decided to start engaging with the community more (so apologies for coming on strong in my first comment, while likely lacking good norms).

Some questions I'm trying to answer, and this post certainly helps a bit:

- Is there general consensus on the "goals" of the rationalist community? I feel like there implicitly is something like "learn and practice rationality as a human" and "debate and engage well to co-develop valuable ideas".

- Would a goal more like "helping raise the overall sanity waterline" ultimately be a more useful, and successful "purpose" for this community? I potentially think so. Among other reasons, as bc4026bd4aaa5b7fe points out, there are a number of forces that trend this community towards being insular, and an explicit goal against that tendency would be useful.

comment by DirectedEvolution (AllAmericanBreakfast) · 2023-05-26T15:46:34.233Z · LW(p) · GW(p)

Just noting a point of confusion - if changing minds is a social endeavor having to do with personal connection, why is it necessary to get people to engage System 2/Central Route thinking? Isn’t the main thing to get them involved in a social group where the desired beliefs are normal and let System 1/Peripheral Route thinking continue to do its work?

Replies from: AnthonyC, Seth Herd↑ comment by AnthonyC · 2023-05-27T03:07:54.946Z · LW(p) · GW(p)

If I understand correctly I think it's more that system 1/peripheral route thinking can get someone to affectively endorse an idea without forming a deeper understanding of it, whereas system 2/central route thinking can produce deeper understanding, but many (most?) people need to feel sufficiently psychologically and socially safe/among friends to engage in that kind of thinking.

↑ comment by Seth Herd · 2023-05-27T20:11:10.189Z · LW(p) · GW(p)

I think you are absolutely correct that getting someone involved in a social group where everyone already has those ideas would be better at changing minds. But that's way harder than getting someone to have a ten-minute conversation. In fact, it's so hard that I don't think it's ever been studied experimentally. Hopefully I'm wrong and there are limited studies; but I've looked for them and not found them (~5 years ago).

I'd frame it this way: what you're doing in that interview is supplying the motivation to do System 2 thinking. The Socratic method is about asking people the same questions they'd ask themselves if they cared enough about that topic, and had the reasoning skills to reach the truth.

comment by Daniel Kokotajlo (daniel-kokotajlo) · 2023-05-26T07:52:42.377Z · LW(p) · GW(p)

Great post, will buy the book and take a look!

I feel like I vaguely recall reading somewhere that some sort of California canvassing experiment to promote gay rights either didn't replicate or turned out to be outright fraud. Wish I could remember the details. It wasn't the experiment you are talking about though, hopefully?

↑ comment by Steven Byrnes (steve2152) · 2023-05-26T14:08:41.002Z · LW(p) · GW(p)

I just started the audiobook myself, and in the part I’m up to the author mentioned that there was a study of deep canvassing that was very bad and got retracted, but then later, there was a different group of scientists who studied deep canvassing, more on which later in the book. (I haven’t gotten to the “later in the book” yet.)

Wikipedia seems to support that story, saying that the first guy was just making up data (see more specifically "When contact changes minds" on Wikipedia).

“If a fraudulent paper says the sky is blue, that doesn’t mean it’s green” :)

UPDATE: Yeah, my current impression is that the first study was just fabricated data. It wasn't that the data showed bad results so he massaged it, more like he never bothered to get data in the first place. The second study found impressive results (supposedly - I didn't scrutinize the methodology or anything) and I don't think the first study should cast doubt on the second study.

Replies from: bc4026bd4aaa5b7fe0bdcd47da7a22b453953f990d35286b9d315a619b23667a↑ comment by bc4026bd4aaa5b7fe (bc4026bd4aaa5b7fe0bdcd47da7a22b453953f990d35286b9d315a619b23667a) · 2023-06-03T01:23:38.429Z · LW(p) · GW(p)

Thanks for the summary. Yes, David addresses this in the book. There was an unfortunately fraudulent paper published due to (IIRC) the actions of a grad student, but the professors involved retracted the original paper and later research reaffirmed the approach did work.

↑ comment by Alan E Dunne · 2023-05-26T14:25:07.161Z · LW(p) · GW(p)

https://statmodeling.stat.columbia.edu/2015/12/16/lacour-and-green-1-this-american-life-0/

and generally "beware the one of just one study"

Replies from: Seth Herd, bc4026bd4aaa5b7fe0bdcd47da7a22b453953f990d35286b9d315a619b23667a↑ comment by Seth Herd · 2023-05-27T20:55:14.264Z · LW(p) · GW(p)

I read this. This is about the first study, which was retracted. However, a second, carefully monitored and reviewed study found most of the same results, including the remarkably high effect size of one in ten people appearing to completely drop their prejudice toward homosexuals after the ten-minute intervention.

Yes beware the one study. But in the absence of data, small amounts are worth a good deal, and careful reasoning from other evidence is worth even more.

My reasoning from indirect data and personal experience is in line with this one study. The 900 studies on how minds don't change are almost all about impersonal, data-and-argument based approaches.

Emotions affect how we make and change beliefs. You can't force someone to change their mind, but they can and do change their mind when they happen to think through an issue without being emotionally motivated to keep their current belief.

↑ comment by bc4026bd4aaa5b7fe (bc4026bd4aaa5b7fe0bdcd47da7a22b453953f990d35286b9d315a619b23667a) · 2023-06-03T01:24:11.551Z · LW(p) · GW(p)

As mentioned above, David addresses this in the book. There was an unfortunately fraudulent paper published due to (IIRC) the actions of a grad student, but the professors involved retracted the original paper and later research reaffirmed the approach did work.

comment by PeterMcCluskey · 2023-05-27T02:19:37.172Z · LW(p) · GW(p)

Many rationalists do follow something resembling the book's advice.

CFAR started out with too much emphasis on lecturing people, but quickly noticed that wasn't working, and pivoted to more emphasis on listening to people and making them feel comfortable. This is somewhat hard to see if you only know the rationalist movement via its online presence.

Eliezer is far from being the world's best listener, and that likely contributed to some failures in promoting rationality. But he did attract and encourage people who overcame his shortcomings for CFAR's in-person promotion of rationality.

I consider it pretty likely that CFAR's influence has caused OpenAI to act more reasonably than it otherwise would act, due to several OpenAI employees having attended CFAR workshops.

It seems premature to conclude that rationalists have failed, or that OpenAI's existence is bad.

Sorry, it doesn’t look like the conservatives have caught on to this kind of approach yet.

That's not consistent with my experiences interacting with conservatives. (If you're evaluating conservatives via broadcast online messages, I wouldn't expect you to see anything more than tribal signaling).

It may be uncommon for conservatives to use effective approaches at explicitly changing political beliefs. That's partly because politics are less central to conservative lives. You'd likely reach a more nuanced conclusion if you compare how Mormons persuade people to join their religion, which incidentally persuades people to become more conservative.

Replies from: adrian-arellano-davin, bc4026bd4aaa5b7fe0bdcd47da7a22b453953f990d35286b9d315a619b23667a↑ comment by mukashi (adrian-arellano-davin) · 2023-05-27T02:41:54.378Z · LW(p) · GW(p)

Any source you would recommend to know more about the specific practices of Mormons you are referring to?

Replies from: PeterMcCluskey↑ comment by PeterMcCluskey · 2023-05-27T16:51:06.398Z · LW(p) · GW(p)

No. I found a claim of good results here. Beyond that I'm relying on vague impressions from very indirect sources, plus fictional evidence such as the movie Latter Days.

↑ comment by bc4026bd4aaa5b7fe (bc4026bd4aaa5b7fe0bdcd47da7a22b453953f990d35286b9d315a619b23667a) · 2023-06-03T01:51:57.041Z · LW(p) · GW(p)

Fair enough, I haven't interacted with CFAR at all. And the "rationalists have failed" framing is admittedly partly bait to keep you reading, partly parroting/interpreting how Yudkowsky appears to see his efforts towards AI Safety, and partly me projecting my own AI anxieties out there.

The Overton window around AI has also been shifting so quickly that this article may already be kind of outdated. (Although I think the core message is still strong.)

Someone else in the comments pointed out the religious proselytization angle, and yeah, I hadn't thought about that, and apparently neither did David. That line was basically a throwaway joke lampshading how all the organizations discussed in the book are left-leaning, I don't endorse it very strongly.

comment by Thomas Kwa (thomas-kwa) · 2023-05-28T21:17:16.388Z · LW(p) · GW(p)

It's not just his fiction. Recently he went on what he thought was a low-stakes crypto podcast and was surprised that the hosts wanted to actually hear him out when he said we were all going to die soon:

I don't think we can take this as evidence that Yudkowsky or the average rationalist "underestimates more average people". In the Bankless podcast, Eliezer was not trying to explore the beliefs of the podcast hosts, just explaining his views. And there have been attempts at outreach before. If Bankless was evidence towards "the world at large is interested in Eliezer's ideas and takes them seriously", then The Alignment Problem, Human Compatible, and the rejection of FDT by academic decision theory journals are stronger evidence against. It seems to me that the lesson we should gather is that alignment's time in the public consciousness has come sometime in the last ~6 months.

I'm also not sure the techniques are asymmetric.

- Have people with false beliefs tried e.g. Street Epistemology and found it to fail?

- I think few of us in the alignment community are actually in a position to change our minds about whether alignment is worth working on. With a p(doom) of ~35% I think it's unlikely that arguments alone push me below the ~5% threshold where working on AI misuse, biosecurity, etc. become competitive with alignment. And there are people with p(doom) of >85%.

That said it seems likely that rationalists should be incredibly embarrassed for not realizing the potential asymmetric weapons in things like Street Epistemology. I'd make a Manifold market for it, but I can't think of a good operationalization.

Replies from: thomas-kwa, cata↑ comment by Thomas Kwa (thomas-kwa) · 2023-10-28T23:47:49.600Z · LW(p) · GW(p)

I think few of us in the alignment community are actually in a position to change our minds about whether alignment is worth working on. With a p(doom) of ~35% I think it's unlikely that arguments alone push me below the ~5% threshold where working on AI misuse, biosecurity, etc. become competitive with alignment. And there are people with p(doom) of >85%.

I have changed my mind and now think some of the core arguments for x-risk don't go through, so it's plausible that I go below 5% if there is continued success in alignment-related ML fields and could substantially change my mind from a single conversation.

↑ comment by cata · 2023-05-29T00:10:28.114Z · LW(p) · GW(p)

I think few of us in the alignment community are actually in a position to change our minds about whether alignment is worth working on. With a p(doom) of ~35% I think it's unlikely that arguments alone push me below the ~5% threshold where working on AI misuse, biosecurity, etc. become competitive with alignment. And there are people with p(doom) of >85%.

This makes little sense to me, since "what should I do" isn't a function of p(doom). It's a function of both p(doom) and your inclinations, opportunities, and comparative advantages. There should be many people for whom, rationally speaking, a difference between 35% and 34% should change their ideal behavior.

Replies from: thomas-kwa↑ comment by Thomas Kwa (thomas-kwa) · 2023-06-02T14:44:14.216Z · LW(p) · GW(p)

Thanks, I agree. I would still make the weaker claim that more than half the people in alignment are very unlikely to change their career prioritization from Street Epistemology-style conversations, and that in general the person with more information / prior exposure to the arguments will be less likely to change their mind.

comment by romeostevensit · 2023-05-27T19:46:43.496Z · LW(p) · GW(p)

How identification works, afaict: one of the ways alienation works is by directly invalidating people's experiences or encouraging preference falsification about how much they prefer being in the shape of a good, interchangeable worker, which is a form of indirectly invalidating your own experience, especially experiences of suffering. People feel that their own experience is not a valid source of sovereignty and so they are encouraged to invest their experience into larger, more socially legible and accepted constructs. This construct needs to be immutable and therefore more difficult to attack. But this creates a problem, the socially constructed categories are now under disputed ownership from many people each trying to define or control it in a way advantageous to themselves, and to avoid running into the same problem they had originally. Namely that if others control the category definition, then they will again feel like their experience is invalidated. So now you have activation of fighting/tribal circuitry in the people involved.

The next layer up is particularly sticky and difficult, something like "I am being attacked because I am X." Attempting to defuse this construct is perceived as an attempt to invalidate/erase their experience, rather than an attempt to show them how constructing things in this way both causes them suffering, and encourages them to spread this shape of suffering to others and fight hard for it, along with not providing them a good predictive model of the world that would allow them to update towards more useful actions and mental representations.

Attempting to defuse this structure directly is extraordinarily difficult, and I have mostly found success only with techniques that first encourage the person to exit the tight network of concepts involved and return to direct experience (the classic, you are racist against this group, but here is such a person in front of you, notice how your direct experience is that you can talk to them and get back reasonable human sounding things rather than the caricature you expect).

Replies from: romeostevensit↑ comment by romeostevensit · 2023-05-27T19:49:54.729Z · LW(p) · GW(p)

A nice compression I hadn't thought of before is that moral categories and group categories are not type safe within the brain.

comment by Fleece Minutia · 2024-01-06T13:57:33.582Z · LW(p) · GW(p)

Thanks for the review! It got me to buy and then devour the book. It was a great read; entertaining and providing a range of useful mental models. Applying McRaney's amalgam Street Epistemology is hard work, but makes for exceedingly interesting and very friendly conversations about touchy subjects - I am happy to have this tool in my life now.

comment by bc4026bd4aaa5b7fe (bc4026bd4aaa5b7fe0bdcd47da7a22b453953f990d35286b9d315a619b23667a) · 2023-06-03T01:07:34.667Z · LW(p) · GW(p)

The mods seem to have shortened my account name. For the record, it was previously bc4026bd4aaa5b7fe0bdcd47da7a22b453953f990d35286b9d315a619b23667a

comment by Collapse Kitty · 2023-06-02T10:28:26.092Z · LW(p) · GW(p)

I am just the kind of person you describe. I've committed nearly all spare time over the last few years to understanding the core issues of alignment, and the deeper flaws of Moloch/collective action leading to the polycrisis.

What does the next step look like? Social media participation? I don't think that's a viable battleground.

There are dozens of AI experts now giving voice to existential dangers. What angle or strategy is not yet being employed by them that we might back?

What are the best causes to throw one's efforts behind right now? Are there trustworthy companies/non-profits that need volunteers? What is our call to action for those we do inform about the catastrophic risks humanity is facing?

Sorry about the rough formatting and rambling train of thought. I'm eager to commit more of my life to working toward whatever strategy seems most viable, but feel quite lost at the moment.

Replies from: bc4026bd4aaa5b7fe0bdcd47da7a22b453953f990d35286b9d315a619b23667a↑ comment by bc4026bd4aaa5b7fe (bc4026bd4aaa5b7fe0bdcd47da7a22b453953f990d35286b9d315a619b23667a) · 2023-06-03T02:01:50.993Z · LW(p) · GW(p)

I don't have an answer for you, you'll have to chart your own path. I will say that I agree with your take on social media, it seems very peripheral-route-focused.

If you're looking to do something practical on AI consider looking into a career counseling organization like 80000 Hours. From what I've seen, they fall into some of the traps I mentioned here (seems like they mostly think that trying to change people's minds isn't very valuable unless you actively want to become a political lobbyist) but they're not bad overall for answering these kinds of questions.

Ultimately though, it's your own life and you alone have to decide your path through it.

comment by Raphael Royo-Reece (raphael-royo-reece) · 2023-06-01T12:33:29.070Z · LW(p) · GW(p)

I cannot agree with this post enough. One of the things that stops me despairing and keeps me trying to convince people of AI is that I think Eliezer is overly pessimistic when it comes to people taking threats seriously.

He has a lot of reasons to be - I mean he's been harping on this for 20 years and only now does there seem to be traction - but that is where I think it's likeliest there is a way for us to avoid doom.

comment by Jeffs · 2023-06-01T05:16:45.242Z · LW(p) · GW(p)

I would love to see a video or transcript of this technique in action in a 1:1 conversation about ai x-risk.

Answer to my own question: https://www.youtube.com/watch?v=0VBowPUluPc

comment by Rheagan Zambelli (rheagan-zambelli) · 2023-06-01T07:50:34.174Z · LW(p) · GW(p)

If there were no demons, perhaps there would be no need for the rituals found in religion to dispel them….

Replies from: CronoDAS

comment by toothpaste · 2023-06-01T06:00:30.405Z · LW(p) · GW(p)

You claim that the point of the rationalist community was to stop an unfriendly AGI. One thing that confuses me is exactly how it intends to do so, because that certainly wasn't my impression of it. I can see the current strategy making sense if the goal is to develop some sort of Canon for AI Ethics that researchers and professionals in the field get exposed to, thus influencing their views and decreasing the probability of catastrophe. But is it really so?

If the goal is to do it by shifting public opinion in this particular issue, by making a majority of people rationalists, or by making political change and regulations, it isn't immediately obvious to me. And I would bet against it because institutions that for a long time have been following those strategies with success, from marketing firms to political parties to lobbying firms to scientology, seem to operate very differently, as this post also implies.

If the goal is to do so by converting most people to rationalism (in a strict sense), I'd say I very much disagree with that being likely or maybe even a desirable effort. I'd love to discuss this subject here in more detail and have my ideas go through the grinder, but I've found this place to be very hard to penetrate, so I'm rarely here.

Replies from: mruwnik↑ comment by mruwnik · 2023-06-01T14:34:46.789Z · LW(p) · GW(p)

The answer is to read the sequences (I'm not being facetious). They were written with the explicit goal of producing people with EY's rationality skills in order for them to go into producing Friendly AI (as it was called then). It provides a basis for people to realize why most approaches will by default lead to doom.

At the same time, it seems like a generally good thing for people to be as rational as possible, in order to avoid the myriad cognitive biases and problems that plague humanity's thinking, and therefore actions. My impression is that the hope was to make the world more similar to Dath Ilan.

Replies from: toothpaste↑ comment by toothpaste · 2023-06-01T18:14:30.225Z · LW(p) · GW(p)

So the idea is that if you get as many people in AI business/research as possible to read the sequences, then that will change their ideas in a way that will make them work in AI in a safer way, and that will avoid doom?

I'm just trying to understand how exactly the mechanism that will lead to the desired change is supposed to work.

If that is the case, I would say the critique made by OP is really on point. I don't believe the current approach is convincing many people to read the sequences, and I also think reading the sequences won't necessarily make people change their actions when business/economic/social incentives work otherwise. The latter being unavoidably a regulatory problem, and the former a communications strategy problem.

Or are you telling me to read the sequences? I intend to sometime, I just have a bunch of stuff to read already and I'm not exactly good at reading a lot consistently. I don't deny that having good material on the subject is essential, either.

↑ comment by mruwnik · 2023-06-02T09:50:47.268Z · LW(p) · GW(p)

More that you get as many people in general to read the sequences, which will change their thinking so they make fewer mistakes, which in turn will make more people aware both of the real risks underlying superintelligence and of the plausibility and utility of AI. I wasn't around then, so this is just my interpretation of what I read post-facto, but I get the impression that people were a lot less doomish then. There was a hope that alignment was totally solvable.

The focus didn't seem to be on getting people into alignment, as much as it generally being better for people to think better. AI isn't pushed as something everyone should do, but rather as what EY knows and as something worth investigating. There are various places where it's said that everyone could use more rationality, that it's an instrumental goal like earning more money. There's an idea of creating Rationality Dojos [? · GW], as places to learn rationality like people learn martial arts. I believe that's the source of CFAR.

It's not that the one and only goal of the rationalist community was to stop an unfriendly AGI. It's just that that is the obvious result of it. It's a matter of taking the idea seriously [? · GW], then shutting up and multiplying [? · GW] - assuming that AI risk is a real issue, it's pretty obvious that it's the most pressing problem facing humanity, which means that if you can actually help, you should step up [? · GW].

Business/economic/social incentives can work, no doubt about that. The issue is that they only work as long as they're applied. Getting people to actually care about an issue (as in really care, like oppressed Christian level, not performative cultural Christian level) is a lot more lasting, in that if the incentives disappear, they'll keep on doing what you want. Convincing is a lot harder, though, which I'm guessing is your point? I agree that convincing is less effective numerically speaking, but it seems a lot more good (in a moral sense), which also seems important [? · GW]. Though this is admittedly a lot more of an aesthetics thing...

I most certainly recommend reading the sequences, but by no means meant to imply that you must. Just that stopping an unfriendly AGI (or rather the desirability of creating a friendly AI) permeates the sequences. I don't recall if it's stated explicitly, but it's obvious that they're pushing you in that direction. I believe Scott Alexander described the sequences as being totally mind blowing the first time he read them, but totally obvious on rereading them - I don't know which would be your reaction. You can try the highlights [? · GW] rather than the whole thing, which should be a lot quicker.

comment by Don Salmon (don-salmon) · 2023-06-01T02:47:03.931Z · LW(p) · GW(p)

Very interesting, many fascinating points, but also much misunderstanding of the nature of IQ tests and their relationship to the question of "intelligence."

As a clinical psychologist (full disclosure - with an IQ above the one mentioned in the post) who has administered over two thousand IQ tests, AND conducted research on various topics, I can tell you, the one thing for certain (apart from the near-universal replicability crisis in psychology) is that psychologists to date have no conception of, as well as, obviously, no way of measuring, any kind of "general" intelligence (no, the "g" factor does NOT represent general intelligence).

IQ does, however, measure a certain kind of "intelligence," which one might refer to as quantitative rather than qualitative. It manifests most clearly in the "thinking" of "philosophers" like Daniel Dennett, who consciously deny the experience of consciousness.

Since virtually every scientific experiment ever conducted begins with experience (not measurable, empirical data, but the kind of radical empiricism, or experiential data, that William James spoke of), to deny consciousness is to deny science.

Yet that is what much of transhumanism is about, itself a form of quantitative, analytic, detached thought.

If you want to do some really hard thinking, here are two questions:

1. What does "physical" mean? (And I'm not looking for the usual tautological answer of "it's what physics studies".)

(Be careful - it took a well respected and much published philosophy professor 6 months to come up with the acknowledgement that nobody knows what it means, only what it negates... see if you can do better!)

2. (This is ONLY to be pondered after successfully answering #1.) Describe in quite precise terms what kind of scientific experiment could be conducted to provide evidence (not proof, just evidence) of something purely physical, which exists entirely apart from, independent of, any form of sentience, intelligence, awareness, consciousness, etc.

↑ comment by FinalFormal2 · 2023-06-08T19:57:43.405Z · LW(p) · GW(p)

AND conducted research on various topics

Wow that's impressive.