2020 PhilPapers Survey Results

post by Rob Bensinger (RobbBB) · 2021-11-02T05:00:13.859Z · LW · GW · 50 comments

Contents

1. Decision theory
2. (Non-animal) ethics
3. Minds and animal ethics
4. Metaphysics, philosophy of physics, and anthropics
5. Superstition
6. Identity politics topics
7. Metaphilosophy
8. How have philosophers' views changed since 2009?

In 2009, David Bourget and David Chalmers ran the PhilPapers Survey (results, paper), sending questions to "all regular faculty members" at top "Ph.D.-granting [philosophy] departments in English-speaking countries" plus ten other philosophy departments deemed to have "strength in analytic philosophy comparable to the other 89 departments".

Bourget and Chalmers now have a new PhilPapers Survey out, run in 2020 (results, paper). I'll use this post to pick out some findings I found interesting, and say opinionated stuff about them. Keep in mind that I'm focusing on topics and results that dovetail with things I'm curious about (e.g., 'why do academic decision theorists and LW decision theorists disagree so much?'), not giving a neutral overview of the whole 100-question survey.

The new survey's target population consists of:

(1) in Australia, Canada, Ireland, New Zealand, the UK, and the US: all regular faculty members (tenure-track or permanent) in BA-granting philosophy departments with four or more members (according to the PhilPeople database); and (2) in all other countries: English-publishing philosophers in BA-granting philosophy departments with four or more English-publishing faculty members.

In order to make comparisons to the 2009 results, the 2020 survey also looked at a "2009-comparable departments" list selected using similar criteria to the 2009 survey:

It should be noted that the “2009-comparable department” group differs systematically from the broader target population in a number of respects. Demographically, it includes a higher proportion of UK-based philosophers and analytic-tradition philosophers than the target population. Philosophically, it includes a lower proportion of theists, along with many other differences evident in comparing 2020 results in table 1 (all departments) to table 9 (2009-comparable departments).

Based on this description, I expect the "2009-comparable departments" in the 2020 survey to be more elite, influential, and reasonable than the 2020 "target group", so I mostly focus on 2009-comparable departments below. In the tables below, if the row doesn't say "Target" (i.e., target group), the population is "2009-comparable departments".

Note that in the 2020 survey (unlike 2009), respondents could endorse multiple answers.

1. Decision theory

Newcomb's problem: The following groups (with n noting their size, and skipping people who skipped the question or said they weren't sufficiently familiar with it) endorsed the following options in the 2020 survey:

| Group | n | one box | two boxes | diff |

| Philosophers (Target) | 1071 | 31% | 39% | 8% |

| Decision theorists (Target) | 48 | 21% | 73% | 52% |

| Philosophers | 470 | 28% | 43% | 15% |

| Decision theorists | 22 | 23% | 73% | 50% |

5% of decision theorists said they "accept a combination of views", and 9% said they were "agnostic/undecided".

I think decision theorists are astonishingly wrong here, so I was curious to see if other philosophy fields did better.

I looked at every field where enough surveyed people gave their views on Newcomb's problem. Here they are in order of 'how much more likely are they to two-box than to one-box':

| Group | n | one box | two boxes | diff |

| Philosophers of gender, race, and sexuality | 13 | 23% | 54% | 31% |

| 20th-century-philosophy specialists | 23 | 13% | 43% | 30% |

| Social and political philosophers | 62 | 19% | 48% | 29% |

| Philosophers of law | 14 | 14% | 43% | 29% |

| Phil. of computing and information (Target) | 18 | 22% | 50% | 28% |

| Philosophers of social science | 24 | 33% | 58% | 25% |

| Philosophers of biology | 20 | 30% | 55% | 25% |

| General philosophers of science | 53 | 26% | 51% | 25% |

| Philosophers of language | 100 | 25% | 47% | 22% |

| Philosophers of mind | 96 | 25% | 45% | 20% |

| Philosophers of computing and information | 5 | 20% | 40% | 20% |

| 19th-century-philosophy specialists | 10 | 10% | 30% | 20% |

| Philosophers of action | 32 | 28% | 47% | 19% |

| Logic and philosophy of logic | 58 | 26% | 43% | 17% |

| Philosophers of physical science | 25 | 28% | 44% | 16% |

| Epistemologists | 129 | 32% | 47% | 15% |

| Meta-ethicists | 72 | 29% | 43% | 14% |

| Metaphysicians | 123 | 32% | 44% | 12% |

| Normative ethicists | 102 | 32% | 42% | 10% |

| Philosophers of religion | 15 | 33% | 40% | 7% |

| Philosophers of mathematics | 19 | 32% | 37% | 5% |

| 17th/18th-century-philosophy specialists | 39 | 31% | 36% | 5% |

| Metaphilosophers | 23 | 35% | 39% | 4% |

| Applied ethicists | 50 | 34% | 38% | 4% |

| Philosophers of cognitive science | 50 | 32% | 36% | 4% |

| Aestheticians | 14 | 29% | 21% | -8% |

| Greek and Roman philosophy specialists | 17 | 41% | 29% | -12% |

(Note that many of these groups are small-n. Since philosophers of computing and information were an especially small and weird group, and I expect LWers to be extra interested in this group, I also looked at the target-group version for this field.)
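The "diff" column in these tables is just the two-boxing share minus the one-boxing share, and the rows are sorted by it from largest to smallest. Here is a minimal Python sketch of that arithmetic, using a handful of rows copied from the tables above. The variable and function names are mine, and the inputs are the rounded percentages shown, so the diffs inherit that rounding.

```python
# (group, n, one_box_pct, two_box_pct), copied from a few rows of the tables above
rows = [
    ("Epistemologists", 129, 32, 47),
    ("Aestheticians", 14, 29, 21),
    ("Decision theorists", 22, 23, 73),
    ("Greek and Roman philosophy specialists", 17, 41, 29),
    ("Philosophers of mind", 96, 25, 45),
]


def two_box_lead(row):
    """Percentage-point gap: two-boxers minus one-boxers."""
    _, _, one_box, two_box = row
    return two_box - one_box


# Largest two-boxing lead first, matching the ordering used in the table.
ranked = sorted(rows, key=two_box_lead, reverse=True)

for group, n, one_box, two_box in ranked:
    print(f"{group:40s} n={n:<4d} diff={two_box - one_box:+d}")
```

A negative diff marks a field where one-boxing is the more popular answer.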

Every field did much better than decision theory (by the "getting more utility in Newcomb's problem" metric). However, the only fields that favored one-boxing over two-boxing were ancient Greek and Roman philosophy, and aesthetics.

After those two fields, the best fields were philosophy of cognitive science, applied ethics, metaphilosophy, philosophy of mathematics, and 17th/18th century philosophy (only 4-5% more likely to two-box than one-box), followed by philosophy of religion, normative ethics, and metaphysics.

My quick post-hoc, low-confidence guess about why these fields did relatively well is (hiding behind a spoiler tag so others can make their own unanchored guesses):

My inclination is to model the aestheticians, historians of philosophy, philosophers of religion, and applied ethicists as 'in-between' analytic philosophers and the general public (who one-box more often than they two-box, unlike analytic philosophers). I think of specialists in those fields as relatively normal people, who have had less exposure to analytic-philosophy culture and ideas and whose views therefore tend to more closely resemble the views of some person on the street.

This would also explain why the "2009-comparable departments", who I expected to be more elite and analytic-philosophy-ish, did so much worse than the "target group" here.

I would have guessed, however, that philosophers of gender/race/sexuality would also have done relatively well on Newcomb's problem, if 'analytic-philosophy-ness' were the driving factor.

I'm pretty confused about this, though the small n for some of these populations means that a lot of this could be pretty random. (E.g., network effects: a single just-for-fun faculty email thread about Newcomb's problem could convince a bunch of philosophers of sexuality that two-boxing is great. Then this would show up in the survey because very few philosophers of sexuality have ever even heard of Newcomb's problem, and the ones who haven't heard of it aren't included.)

At the same time, my inclination is to treat philosophers of cognitive science, mathematics, normative ethics, metaphysics, and metaphilosophy as 'heavily embedded in analytic philosophy land, but smart enough (/ healthy enough as a field) to see through the bad arguments for two-boxing to some extent'.

There's also a question of why cognitive science would help philosophers do better on Newcomb's problem, when computer science doesn't. I wonder if the kinds of debates that are popular in computer science are the sort that attract people with bad epistemics? ('Wow, the Chinese room argument is amazing, I want to work in this field!') I really have no idea, and wouldn't have predicted this in advance.

Normative ethics also surprises me here. And both of my explanations for 'why did field X do well?' are post-hoc, and based on my prior sense that some of these fields are much smarter and more reasonable than others.

It's very plausible that there's some difference between the factors that make aestheticians one-box more, and the factors that make philosophers of cognitive science one-box more. To be confident in my particular explanations, however, we'd want to run various tests and look at various other comparisons between the groups.

The fields that did the worst after decision theory were philosophy of gender/race/sexuality, 20th-century philosophy, philosophy of language, philosophy of law, political philosophy, and philosophy of biology, of social science, and of science-in-general.

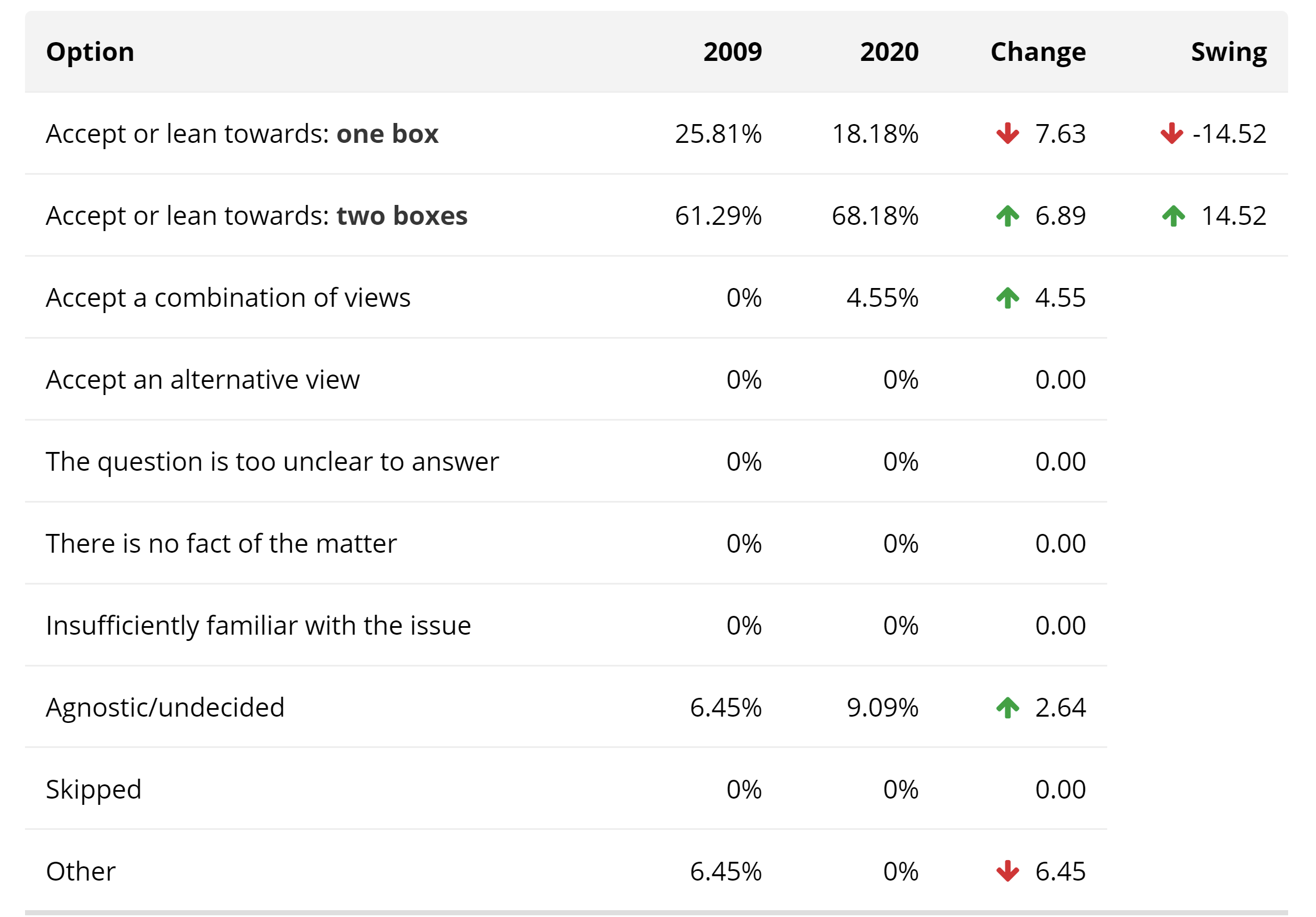

A separate question is whether academic decision theory has gotten better since the 2009 survey. Eyeballing the (small-n) numbers, the answer is that it seems to have gotten worse: two-boxing became even more popular (in 2009-comparable departments), and one-boxing even less popular:

n=31 for the 2009 side of the comparison, n=22 for the 2020 side. The numbers above are different from the ones I originally presented because Bourget and Chalmers include "skip" and "insufficiently familiar" answers, and exclude responses that chose multiple options, in order to make the methodology more closely match that of the 2009 survey.
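To get a rough feel for how much a sample this small can tell us, one option is to put an interval around a two-boxing share. The sketch below uses a Wilson score interval, which is my choice of method and not something Bourget and Chalmers report. The count of 16 is back-derived from the rounded "73% of n=22" figure in the first table of this section (which uses a different methodology from the 2009-2020 comparison), so treat it as an assumption. The calculation also pretends respondents are a random sample, which they aren't.

```python
import math


def wilson_interval(successes, n, z=1.96):
    """Wilson score interval for a binomial proportion (z=1.96 is roughly 95%)."""
    p_hat = successes / n
    denom = 1 + z**2 / n
    center = (p_hat + z**2 / (2 * n)) / denom
    half_width = z * math.sqrt(p_hat * (1 - p_hat) / n + z**2 / (4 * n**2)) / denom
    return center - half_width, center + half_width


# Assumption: 73% of 22 respondents corresponds to about 16 people.
low, high = wilson_interval(16, 22)
print(f"two-boxing among decision theorists: {low:.0%} to {high:.0%}")
```

Under those assumptions the interval runs from roughly the low 50s to the high 80s in percent, which is wide enough that modest shifts between surveys shouldn't be over-read.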

2. (Non-animal) ethics

Regarding "Meta-ethics: moral realism or moral anti-realism?":

| Group | n | moral realism | moral anti-realism |

| Philosophers (Target) | 1719 | 62% | 26% |

| Philosophers | 630 | 62% | 27% |

| Applied ethicists | 64 | 67% | 23% |

| Normative ethicists | 132 | 74% | 16% |

| Meta-ethicists | 94 | 68% | 22% |

Regarding "Moral judgment: non-cognitivism or cognitivism?":

| Group | n | cognitivism | non-cognitivism |

| Philosophers (Target) | 1636 | 69% | 21% |

| Philosophers | 594 | 70% | 20% |

| Applied ethicists | 62 | 76% | 23% |

| Normative ethicists | 132 | 82% | 14% |

| Meta-ethicists | 93 | 76% | 16% |

Regarding "Morality: expressivism, naturalist realism, constructivism, error theory, or non-naturalism?":

| Group | n | non-nat | nat realism | construct | express | error |

| Philosophers (Target) | 1024 | 27% | 32% | 21% | 11% | 5% |

| Philosophers | 386 | 25% | 33% | 19% | 10% | 5% |

| Applied ethicists | 40 | 20% | 35% | 38% | 5% | 0% |

| Normative ethicists | 90 | 34% | 36% | 21% | 8% | 1% |

| Meta-ethicists | 68 | 37% | 29% | 18% | 13% | 7% |

Regarding "Normative ethics: virtue ethics, consequentialism, or deontology?" (putting in parentheses the percentage that only chose the option in question):

| Group | n | deontology | consequentialism | virtue ethics | combination |

| All (Target) | 1741 | 32% (20%) | 31% (21%) | 37% (25%) | 16% |

| All | 631 | 37% (23%) | 32% (22%) | 31% (19%) | 17% |

| Applied... | 63 | 56% (33%) | 38% (22%) | 33% (8%) | 27% |

| Normative... | 132 | 46% (27%) | 32% (20%) | 36% (17%) | 25% |

| Meta... | 94 | 44% (28%) | 32% (22%) | 24% (13%) | 19% |
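Since respondents could endorse several options, each cell above carries two numbers: the share who endorsed the option at all, and (in parentheses) the share who endorsed only that option. Below is a small Python sketch of how both could be tallied. The six responses are invented toy data for illustration, not survey data, and the function name is hypothetical.

```python
from collections import Counter

# Toy responses (made up): each respondent's set of endorsed views.
responses = [
    {"deontology"},
    {"deontology", "virtue ethics"},
    {"consequentialism"},
    {"virtue ethics"},
    {"deontology", "consequentialism", "virtue ethics"},
    {"consequentialism"},
]


def tally(responses):
    """Return {option: (inclusive_pct, exclusive_pct)} in whole percents."""
    n = len(responses)
    # Inclusive: everyone who endorsed the option, alone or alongside others.
    inclusive = Counter(opt for r in responses for opt in r)
    # Exclusive: only respondents who endorsed exactly one option.
    exclusive = Counter(next(iter(r)) for r in responses if len(r) == 1)
    return {
        opt: (round(100 * inclusive[opt] / n), round(100 * exclusive[opt] / n))
        for opt in inclusive
    }


for option, (incl, excl) in sorted(tally(responses).items()):
    print(f"{option}: {incl}% ({excl}%)")
```

In the toy data every option is endorsed by half the respondents, so the inclusive shares sum to well over 100%. The same effect is why the unparenthesized percentages in a row can exceed 100% in total.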

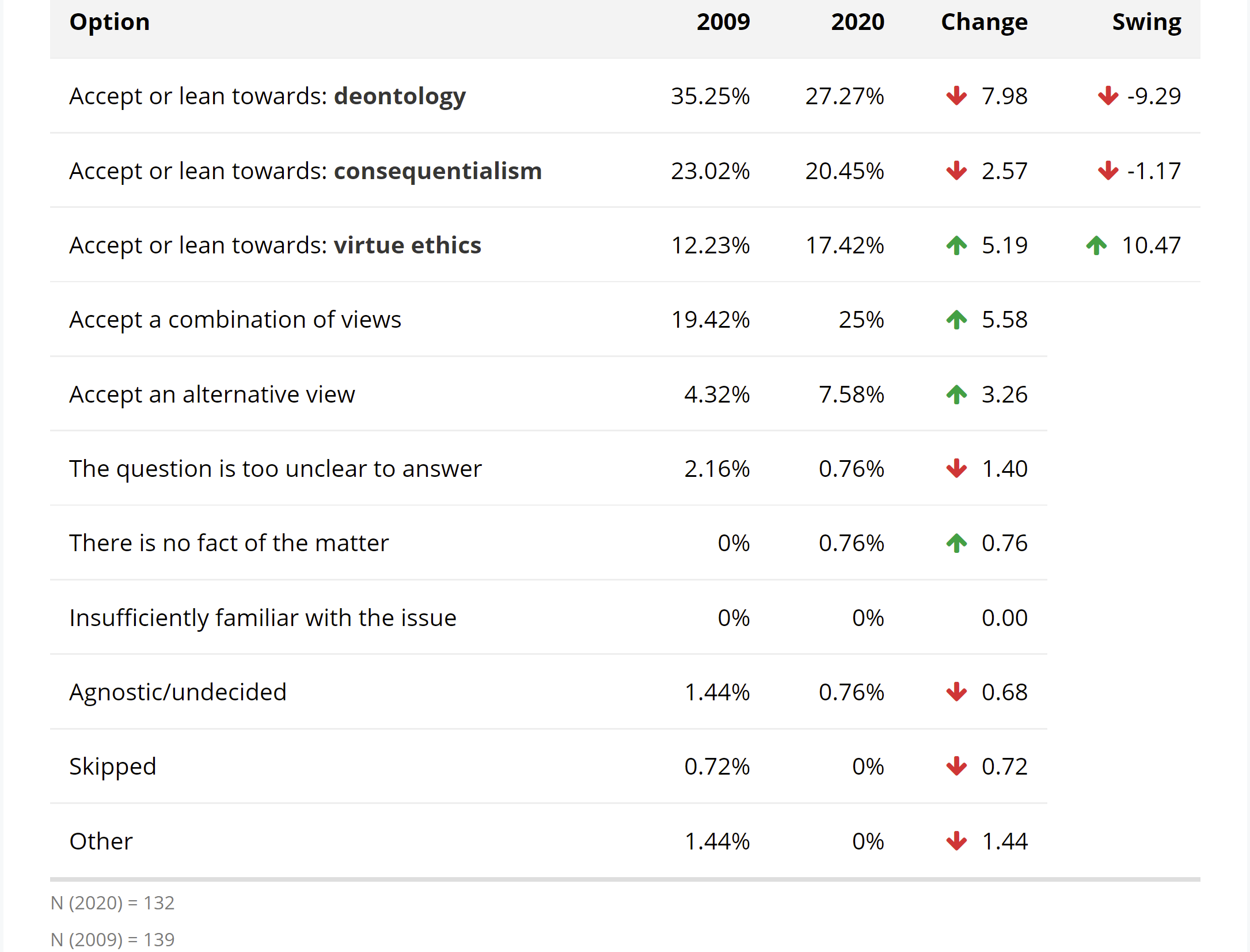

Excluding responses that endorsed multiple options, we can see that normative ethicists have moved away from deontology and towards virtue ethics since 2009, though deontology is still the most popular:

30 normative-ethicist respondents also wrote in "pluralism" or "pluralist" in the 2020 survey.

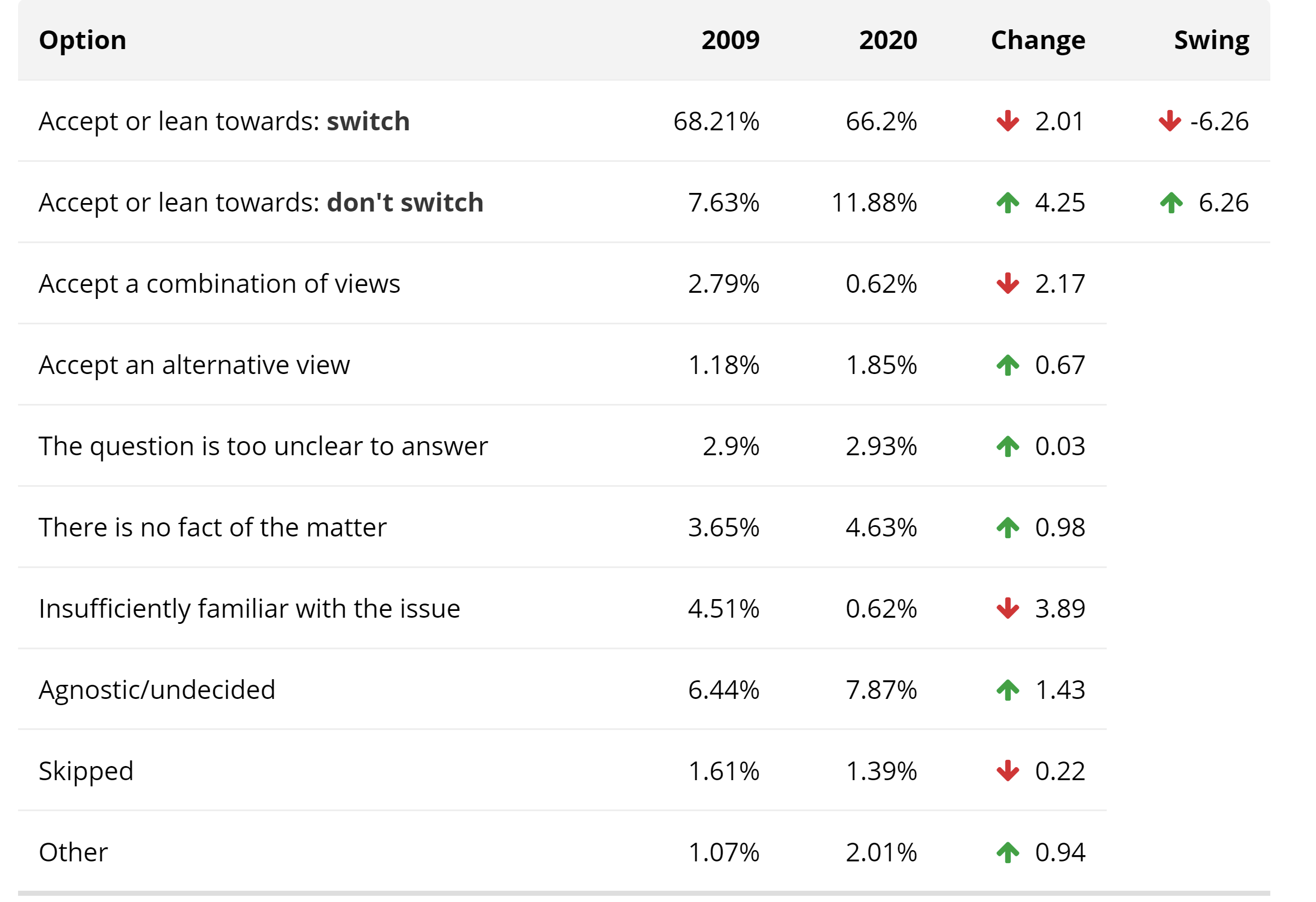

Regarding "Trolley problem (five straight ahead, one on side track, turn requires switching, what ought one do?): don't switch or switch?":

| Group | n | switch | don't switch |

| Philosophers (Target) | 1736 | 63% | 13% |

| Philosophers | 635 | 68% | 12% |

| Applied ethicists | 63 | 71% | 13% |

| Normative ethicists | 132 | 70% | 13% |

| Meta-ethicists | 94 | 70% | 13% |

Regarding "Footbridge (pushing man off bridge will save five on track below, what ought one do?): push or don't push?":

| Group | n | push | don't push |

| Philosophers (Target) | 1740 | 22% | 56% |

| Philosophers | 636 | 23% | 58% |

| Applied ethicists | 63 | 24% | 63% |

| Normative ethicists | 132 | 17% | 70% |

| Meta-ethicists | 94 | 18% | 66% |

Regarding "Human genetic engineering: permissible or impermissible?":

| Group | n | permissible | impermissible |

| Philosophers (Target) | 1059 | 62% | 19% |

| Philosophers | 379 | 68% | 13% |

| Applied ethicists | 39 | 85% | 8% |

| Normative ethicists | 83 | 69% | 12% |

| Meta-ethicists | 59 | 68% | 12% |

Regarding "Well-being: hedonism/experientialism, desire satisfaction, or objective list?":

| Group | n | hedonism | desire satisfaction | objective list |

| Philosophers (Target) | 967 | 10% | 19% | 53% |

| Philosophers | 348 | 9% | 19% | 54% |

| Applied ethicists | 43 | 16% | 26% | 56% |

| Normative ethicists | 90 | 11% | 21% | 63% |

| Meta-ethicists | 62 | 11% | 24% | 55% |

Moral internalism "holds that a person cannot sincerely make a moral judgment without being motivated at least to some degree to abide by her judgment". Regarding "Moral motivation: externalism or internalism?":

| Group | n | internalism | externalism |

| Philosophers (Target) | 1429 | 41% | 39% |

| Philosophers | 528 | 38% | 42% |

| Applied ethicists | 57 | 53% | 37% |

| Normative ethicists | 128 | 34% | 51% |

| Meta-ethicists | 92 | 35% | 47% |

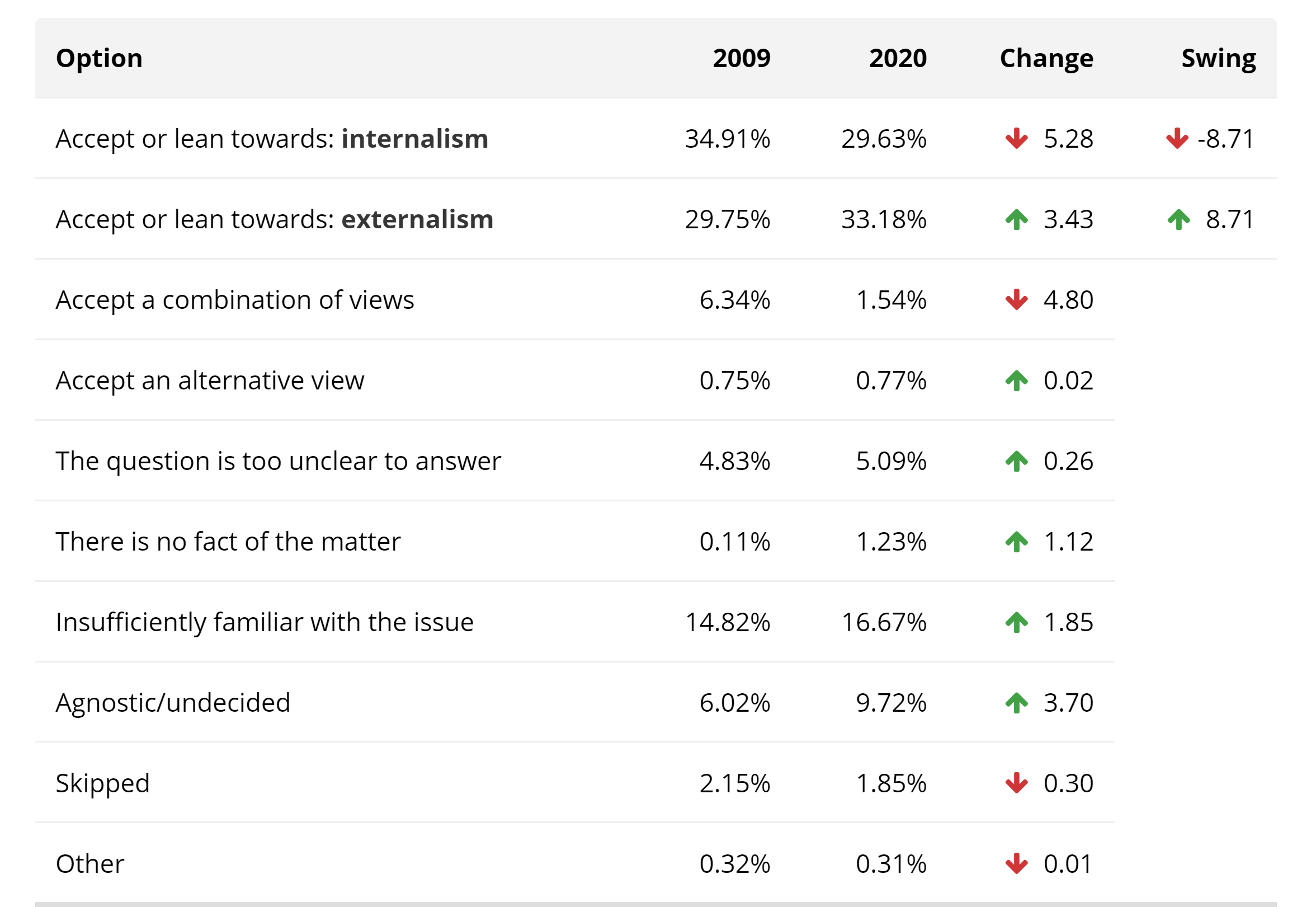

One of the largest changes in philosophers' views since the 2009 survey is that philosophers have somewhat shifted toward externalism. In 2009, internalism was 5 percentage points more popular than externalism; now externalism is 3 percentage points more popular than internalism.

(Again, the 2009-2020 comparisons give different numbers for 2020 in order to make the two surveys' methodologies more similar.)

3. Minds and animal ethics

Regarding "Hard problem of consciousness (is there one?): no or yes?":

| Group | n | yes | no |

| Philosophers (Target) | 1468 | 62% | 30% |

| Philosophers of computing and information (Target) | 18 | 44% | 50% |

| Philosophers | 366 | 58% | 33% |

| Philosophers of cognitive science | 40 | 48% | 50% |

| Philosophers of mind | 91 | 62% | 34% |

Regarding "Mind: non-physicalism or physicalism?":

| Group | n | physicalism | non-physicalism |

| Philosophers (Target) | 1733 | 52% | 32% |

| Phil of computing and information (Target) | 25 | 64% | 24% |

| Philosophers | 630 | 59% | 27% |

| Philosophers of cognitive science | 73 | 78% | 12% |

| Philosophers of computing and information | 5 | 60% | 20% |

| Philosophers of mind | 135 | 60% | 26% |

Regarding "Consciousness: identity theory, panpsychism, eliminativism, dualism, or functionalism?":

| Group | n | dualism | elim | function | identity | panpsychism |

| Philosophers (Target) | 1020 | 22% | 5% | 33% | 13% | 8% |

| Phil of computing (Target) | 16 | 25% | 19% | 31% | 0% | 0% |

| Philosophers | 362 | 22% | 4% | 33% | 14% | 7% |

| Phil of cognitive science | 43 | 14% | 12% | 40% | 19% | 2% |

| Philosophers of mind | 95 | 24% | 2% | 38% | 12% | 10% |

Regarding "Zombies: conceivable but not metaphysically possible, metaphysically possible, or inconceivable?" (also noting "agnostic/undecided" results):

| Group | n | inconceivable | conceivable +impossible | possible | agnostic |

| Philosophers (Target) | 1610 | 16% | 37% | 24% | 11% |

| Phil of computing (Target) | 24 | 29% | 25% | 17% | 8% |

| Philosophers | 582 | 15% | 42% | 22% | 11% |

| Phil of cognitive science | 72 | 24% | 50% | 11% | 6% |

| Phil of computing | 5 | 20% | 40% | 20% | 0% |

| Philosophers of mind | 132 | 20% | 51% | 17% | 3% |

My understanding is that the "psychological view" of personal identity more or less says 'you're software', the "biological view" says 'you're hardware', and the "further-fact view" says 'you're a supernatural soul'. Regarding "Personal identity: further-fact view, psychological view, or biological view?":

| Group | n | biological | psychological | further-fact |

| Philosophers (Target) | 1615 | 19% | 44% | 15% |

| Phil of computing... (Target) | 23 | 22% | 70% | 4% |

| Philosophers | 598 | 20% | 44% | 13% |

| Philosophers of cognitive science | 69 | 26% | 55% | 6% |

| Philosophers of computing... | 5 | 40% | 60% | 20% |

| Philosophers of mind | 130 | 26% | 47% | 12% |

Comparing this to some other philosophy subfields, as a gauge of their health:

| Group | n | biological | psychological | further-fact |

| Decision theorists | 18 | 17% | 67% | 17% |

| Epistemologists | 144 | 22% | 35% | 17% |

| General philosophers of science | 58 | 29% | 55% | 7% |

| Metaphysicians | 150 | 23% | 39% | 15% |

| Normative ethicists | 127 | 14% | 46% | 15% |

| Philosophers of language | 120 | 18% | 38% | 17% |

| Philosophers of mathematics | 18 | 17% | 61% | 6% |

| Philosophers of religion | 25 | 24% | 16% | 44% |

Decision theorists come out of this looking pretty great (I claim). This is particularly interesting to me, because some people diagnose the 'academic decision theorist vs. LW decision theorist' disagreement as coming down to 'do you identify with your algorithm or with your physical body?'.

The above is some evidence that either this diagnosis is wrong, or academic decision theorists haven't fully followed their psychological view of personal identity to its logical conclusions.

Regarding "Mind uploading (brain replaced by digital emulation): survival or death?" (adding answers for "the question is too unclear to answer" and "there is no fact of the matter"):

| Group | n | survival | death | Q too unclear | no fact |

| Philosophers (Target) | 1016 | 27% | 54% | 5% | 4% |

| Phil of computing... (Target) | 19 | 47% | 42% | 11% | 0% |

| Philosophers | 369 | 28% | 54% | 5% | 4% |

| Decision theorists | 12 | 42% | 8% | 8% | 17% |

| Philosophers of cognitive science | 37 | 35% | 51% | 5% | 3% |

| Philosophers of mind | 91 | 34% | 52% | 4% | 2% |

From my perspective, decision theorists do great on this question — very few endorse "death", and a lot endorse "there is no fact of the matter" (which, along with "survival", strike me as good indirect signs of clear thinking given that this is a kind-of-terminological question and, depending on terminology, "death" is at best a technically-true-but-misleading answer).

Also, a respectable 25% of decision theorists say "agnostic/undecided", which is almost always something I give philosophers points for — no one's an expert on everything, a lot of these questions are confusing, and recognizing the limits of your own understanding is a very positive sign.

Regarding "Chinese room: doesn't understand or understands?" (adding "the question is too unclear to answer" responses):

| Group | n | understands | doesn't | Q too unclear |

| Philosophers (Target) | 1031 | 18% | 67% | 6% |

| Phil of computing... (Target) | 18 | 22% | 56% | 17% |

| Philosophers | 381 | 18% | 66% | 6% |

| Philosophers of cognitive science | 44 | 34% | 50% | 7% |

| Philosophers of mind | 91 | 15% | 70% | 8% |

Regarding "Other minds (for which groups are some members conscious?)" (looking only at the "2009-comparable departments", except for philosophy of computing and information because there aren't viewable results for that subgroup):

(Options: adult humans; cats; fish; flies; worms; plants; particles; newborn babies; current AI systems; future AI systems.)

(Respondent groups: philosophers; applied ethicists; decision theorists; meta-ethicists; metaphysicians; normative ethicists; philosophy of biology; philosophers of cognitive science; philosophers of computing and information; philosophers of mathematics; philosophers of mind.)

| Group | n | adult h | cat | fish | fly | worm | plant | partic | baby h | AI | AI fut |

| Phil | 404 | 97% | 93% | 68% | 36% | 24% | 7% | 2% | 89% | 2% | 43% |

| App | 35 | 100% | 94% | 77% | 31% | 17% | 3% | 0% | 89% | 0% | 63% |

| Dec | 14 | 86% | 86% | 71% | 36% | 21% | 0% | 0% | 64% | 0% | 57% |

| MtE | 64 | 98% | 92% | 72% | 38% | 23% | 6% | 2% | 91% | 0% | 47% |

| MtP | 108 | 98% | 97% | 71% | 42% | 32% | 7% | 3% | 95% | 4% | 49% |

| Nor | 85 | 99% | 94% | 73% | 35% | 22% | 5% | 0% | 88% | 4% | 41% |

| Bio | 13 | 100% | 85% | 62% | 38% | 15% | 8% | 0% | 69% | 8% | 54% |

| Cog | 44 | 98% | 98% | 73% | 39% | 25% | 11% | 2% | 95% | 2% | 50% |

| Com | 19 | 100% | 89% | 68% | 32% | 26% | 5% | 0% | 89% | 11% | 58% |

| Mat | 14 | 100% | 93% | 86% | 57% | 43% | 7% | 0% | 93% | 7% | 50% |

| Min | 92 | 99% | 97% | 79% | 45% | 30% | 10% | 4% | 96% | 0% | 43% |

I am confused, delighted, and a little frightened that an equal (and not-super-large) number of decision theorists think adult humans and cats are conscious. (Though as always, small n.)

Also impressed that a relatively low share of them (64%) said newborn humans are conscious. It seems hard to be confident about the answer to this, and being willing to entertain 'well, maybe not' seems like a strong sign of epistemic humility beating out motivated reasoning.

Also, 11% of philosophers of cognitive science think PLANTS are conscious??? Friendship with philosophers of cognitive science ended, decision theorists new best friend.

Regarding "Eating animals and animal products (is it permissible to eat animals and/or animal products in ordinary circumstances?): vegetarianism (no and yes), veganism (no and no), or omnivorism (yes and yes)?":

| Group | n | omnivorism | vegetarianism | veganism |

| Philosophers (Target) | 1764 | 48% | 26% | 18% |

| Philosophers | 643 | 46% | 27% | 21% |

| Applied ethicists | 64 | 33% | 23% | 41% |

| Normative ethicists | 131 | 40% | 27% | 31% |

| Meta-ethicists | 94 | 41% | 23% | 24% |

| Philosophers of mind | 134 | 48% | 31% | 12% |

| Philosophers of cognitive science | 73 | 48% | 29% | 14% |

4. Metaphysics, philosophy of physics, and anthropics

Regarding "Sleeping beauty (woken once if heads, woken twice if tails, credence in heads on waking?): one-half or one-third?" (including the answers "this question is too unclear to answer," "accept an alternative view," "there is no fact of the matter," and "agnostic/undecided"):

| Group | n | 1/3 | 1/2 | unclear | alt | no fact | agnostic |

| Philosophers (Target) | 429 | 28% | 19% | 8% | 1% | 3% | 40% |

| Philosophers | 191 | 27% | 20% | 4% | 2% | 5% | 42% |

| Decision theorists | 13 | 54% | 8% | 0% | 15% | 0% | 23% |

| Epistemologists | 70 | 33% | 20% | 3% | 3% | 1% | 40% |

| Logicians and phil of logic | 28 | 36% | 14% | 4% | 7% | 4% | 36% |

| Phil of cognitive science | 18 | 28% | 22% | 6% | 0% | 0% | 44% |

| Philosophers of mathematics | 6 | 50% | 17% | 0% | 0% | 0% | 33% |

Regarding "Cosmological fine-tuning (what explains it?): no fine-tuning, brute fact, design, or multiverse?":

| Group | n | design | multiverse | brute fact | no fine-tuning |

| Philosophers (Target) | 807 | 17% | 15% | 32% | 22% |

| Philosophers | 289 | 14% | 16% | 35% | 22% |

| Decision theorists | 13 | 8% | 23% | 23% | 38% |

| General phil of science | 33 | 9% | 21% | 48% | 24% |

| Metaphysicians | 93 | 28% | 18% | 32% | 13% |

| Phil of cognitive science... | 32 | 6% | 19% | 50% | 13% |

| Phil of physical science | 16 | 13% | 25% | 38% | 6% |

Regarding "Quantum mechanics: epistemic, hidden-variables, many-worlds, or collapse?":

| Group | n | collapse | hidden-var | many-worlds | epistemic |

| Philosophers (Target) | 556 | 17% | 22% | 19% | 13% |

| Philosophers | 197 | 13% | 29% | 24% | 8% |

| Decision theorists | 8 | 38% | 13% | 63% | 13% |

| General phil of science | 26 | 31% | 23% | 27% | 4% |

| Metaphysicians | 78 | 12% | 35% | 31% | 5% |

| Phil of cognitive science... | 16 | 6% | 31% | 31% | 19% |

| Phil of physical science | 15 | 13% | 33% | 33% | 0% |

From SEP:

What is the metaphysical basis for causal connection? That is, what is the difference between causally related and causally unrelated sequences?

The question of connection occupies the bulk of the vast literature on causation. [...] Fortunately, the details of these many and various accounts may be postponed here, as they tend to be variations on two basic themes. In practice, the nomological, statistical, counterfactual, and agential accounts tend to converge in the indeterministic case. All understand connection in terms of probability: causing is making more likely. The change, energy, process, and transference accounts converge in treating connection in terms of process: causing is physical producing. Thus a large part of the controversy over connection may, in practice, be reduced to the question of whether connection is a matter of probability or process (Section 2.1).

Regarding "Causation: process/production, primitive, counterfactual/difference-making, or nonexistent?":

| Group | n | counterfactual | process | primitive | non |

| Philosophers (Target) | 892 | 37% | 23% | 21% | 4% |

| Philosophers | 342 | 39% | 22% | 21% | 3% |

| Decision theorists | 14 | 71% | 7% | 7% | 7% |

| Metaphysicians | 103 | 32% | 28% | 28% | 5% |

| Philosophers of cognitive science | 38 | 58% | 21% | 13% | 5% |

| Philosophers of physical science | 16 | 63% | 19% | 0% | 6% |

Regarding "Foundations of mathematics: constructivism/intuitionism, structuralism, set-theoretic, logicism, or formalism?":

| Group | n | constructiv | formal | logicism | structural | set |

| Philosophers (Target) | 600 | 15% | 6% | 12% | 21% | 15% |

| Philosophers | 229 | 12% | 4% | 12% | 24% | 17% |

| Philosophers of mathematics | 15 | 13% | 7% | 7% | 40% | 33% |

5. Superstition

Regarding "God: atheism or theism?" (with subfields ordered by percentage that answered "theism"):

| Group | n | theism |

| Philosophy (Target) | 1770 | 19% |

| Philosophy | 645 | 13% |

| Philosophy of religion | 27 | 74% |

| Medieval and Renaissance philosophy | 10 | 60% |

| Philosophy of action | 42 | 21% |

| 17th/18th century philosophy | 64 | 20% |

| 20th century philosophy | 36 | 19% |

| Metaphysics | 153 | 16% |

| Normative ethics | 132 | 16% |

| 19th century philosophy | 22 | 14% |

| Asian philosophy | 7 | 14% |

| Decision theory | 22 | 14% |

| Philosophy of mind | 135 | 13% |

| Aesthetics | 30 | 13% |

| Ancient Greek and Roman philosophy | 31 | 13% |

| Applied ethics | 64 | 13% |

| Epistemology | 153 | 13% |

| Logic and philosophy of logic | 70 | 13% |

| Philosophy of language | 128 | 12% |

| Philosophy of social science | 27 | 11% |

| Meta-ethics | 94 | 10% |

| Philosophy of law | 21 | 10% |

| Philosophy of mathematics | 20 | 10% |

| Social and political philosophy | 100 | 10% |

| Philosophy of gender, race, and sexuality | 23 | 9% |

| Philosophy of biology | 24 | 8% |

| Philosophy of physical science | 26 | 8% |

| General philosophy of science | 65 | 6% |

| Philosophy of cognitive science | 73 | 5% |

| Philosophy of computing and information | 5 | 0% |

| Philosophy of the Americas | 5 | 0% |

| Continental philosophy | 15 | 0% |

| Philosophy of computing and information (Target) | 25 | 0% |

| Metaphilosophy | 29 | 0% |

This question is 'philosophy in easy mode', so seems like a decent proxy for field health / competence (though the anti-religiosity of Marxism is a confounding factor in my eyes, for fields where Marx is influential).

The "A-theory of time" says that there is a unique objectively real "present", corresponding to "which time seems to me to be right now", that is universal and observer-independent, contrary to special relativity. The "B-theory of time" says that there is no such objective, universal "present".

This provides another good "reasonableness / basic science literacy" litmus test, so I'll order the subfields (where enough people in the field answered at all) by how much more they endorse B-theory over A-theory. Regarding "Time: B-theory or A-theory?":

| Group | n | A-theory | B-theory | diff |

| Philosophy (Target) | 1123 | 27% | 38% | 11% |

| Philosophy | 449 | 22% | 44% | 22% |

| 19th century philosophy | 13 | 31% | 8% | -23% |

| Philosophy of religion | 22 | 45% | 27% | -18% |

| Medieval and Renaissance philosophy | 9 | 33% | 22% | -11% |

| Philosophy of law | 10 | 30% | 20% | -10% |

| Aesthetics | 18 | 22% | 17% | -5% |

| Philosophy of social science | 17 | 24% | 24% | 0% |

| Social and political philosophy | 42 | 29% | 33% | 4% |

| Philosophy of gender, race, and sexuality | 12 | 25% | 33% | 8% |

| Ancient Greek and Roman philosophy | 21 | 24% | 33% | 9% |

| Philosophy of action | 31 | 29% | 39% | 10% |

| 20th century philosophy | 22 | 23% | 36% | 13% |

| Normative ethics | 78 | 31% | 44% | 13% |

| Philosophy of cognitive science | 49 | 18% | 31% | 13% |

| Meta-ethics | 60 | 27% | 43% | 16% |

| Philosophy of mathematics | 17 | 12% | 29% | 17% |

| Asian philosophy | 5 | 20% | 40% | 20% |

| Applied ethics | 29 | 31% | 52% | 21% |

| Epistemology | 113 | 21% | 42% | 21% |

| Philosophy of mind | 111 | 20% | 41% | 21% |

| 17th/18th century philosophy | 44 | 14% | 36% | 22% |

| Metaphilosophy | 23 | 17% | 39% | 22% |

| Metaphysics | 144 | 28% | 51% | 23% |

| Logic and philosophy of logic | 61 | 28% | 52% | 24% |

| Phil of computing and information (Target) | 20 | 25% | 50% | 25% |

| Philosophy of language | 107 | 22% | 54% | 32% |

| General philosophy of science | 48 | 19% | 56% | 37% |

| Philosophy of biology | 16 | 19% | 63% | 44% |

| Decision theory | 16 | 13% | 63% | 50% |

| Philosophy of physical science | 26 | 12% | 62% | 50% |

Decision theorists doing especially well here is surprising to me! Especially since they didn't excel on theism; if they'd hit both out of the park, from my perspective that would have been a straightforward update to "wow, decision theorists are really exceptionally reasonable as analytic philosophers go, even if they're getting Newcomb's problem in particular wrong".

As is, this still strikes me as a reason to be more optimistic that we might be able to converge with working decision theorists in the future. (Or perhaps more so, a reason to be relatively optimistic about persuading decision theorists vs. people working in most other philosophy areas.)

(Added: OK, after writing this I saw decision theorists do great on the 'personal identity' and 'mind uploading' questions, and am feeling much more confident that productive dialogue is possible. I've added those two questions earlier in this post.)

(Added added: OK, decision theorists are also unusually great on "which things are conscious?" and they apparently love MWI. How have we not converged more???)

6. Identity politics topics

Regarding "Race: social, unreal, or biological?":

| Group | n | biological | social | unreal |

| Philosophy (Target) | 1649 | 19% | 63% | 15% |

| Philosophy | 591 | 21% | 67% | 12% |

(Note that many respondents said 'yes' to multiple options.)

7. Metaphilosophy

Regarding "Philosophical progress (is there any?): a little, a lot, or none?":

| Group | n | none | a little | a lot |

| Philosophers (Target) | 1775 | 4% | 47% | 42% |

| Philosophers | 645 | 3% | 45% | 46% |

Regarding "Philosophical knowledge (is there any?): a little, none, or a lot?":

| Group | n | none | a little | a lot |

| Philosophers (Target) | 1110 | 4% | 33% | 56% |

| Philosophers | 397 | 4% | 30% | 58% |

Another interesting result is "Philosophical methods (which methods are the most useful/important?)", which finds (looking at analogous-to-2009 departments):

- 66% of philosophers think "conceptual analysis" is especially important, 14% disagree.

- 60% say "empirical philosophy", 12% disagree.

- 59% say "formal philosophy", 10% disagree.

- 51% say "intuition-based philosophy", 27% disagree.

- 44% say "linguistic philosophy", 23% disagree.

- 39% say "conceptual engineering", 23% disagree.

- 29% say "experimental philosophy", 39% disagree.

8. How have philosophers' views changed since 2009?

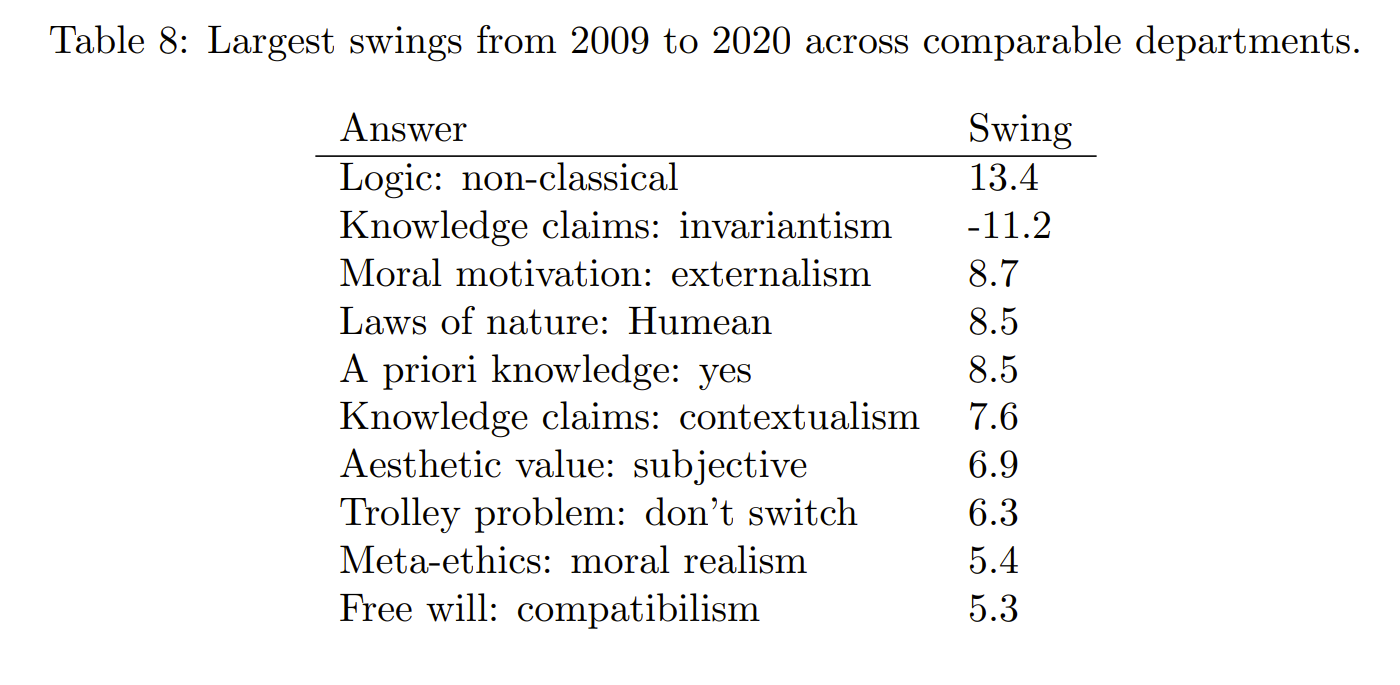

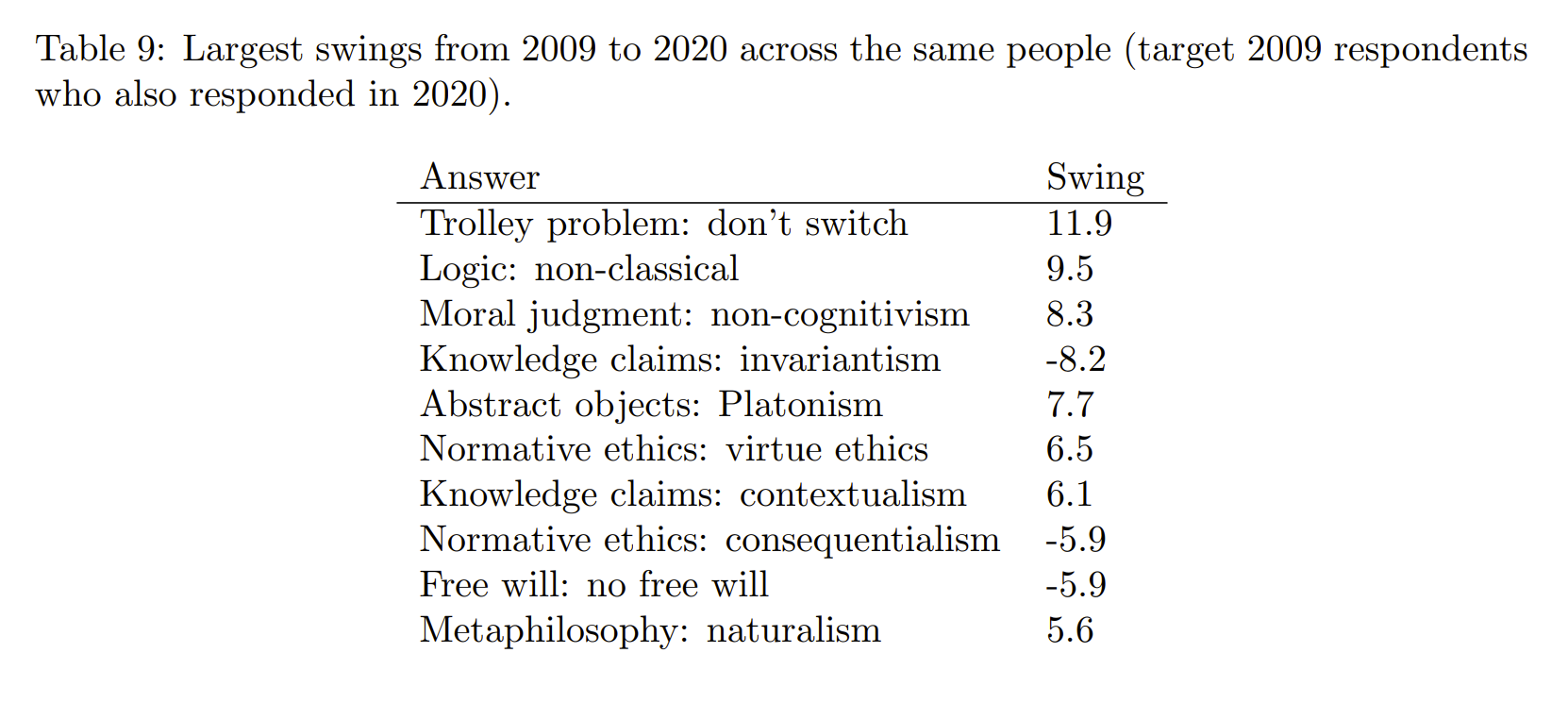

Bourget and Chalmers' paper has a table for the largest changes in philosophers' views since 2009:

As noted earlier in this post, one of the larger shifts in philosophers' views was a move away from moral internalism and toward externalism.

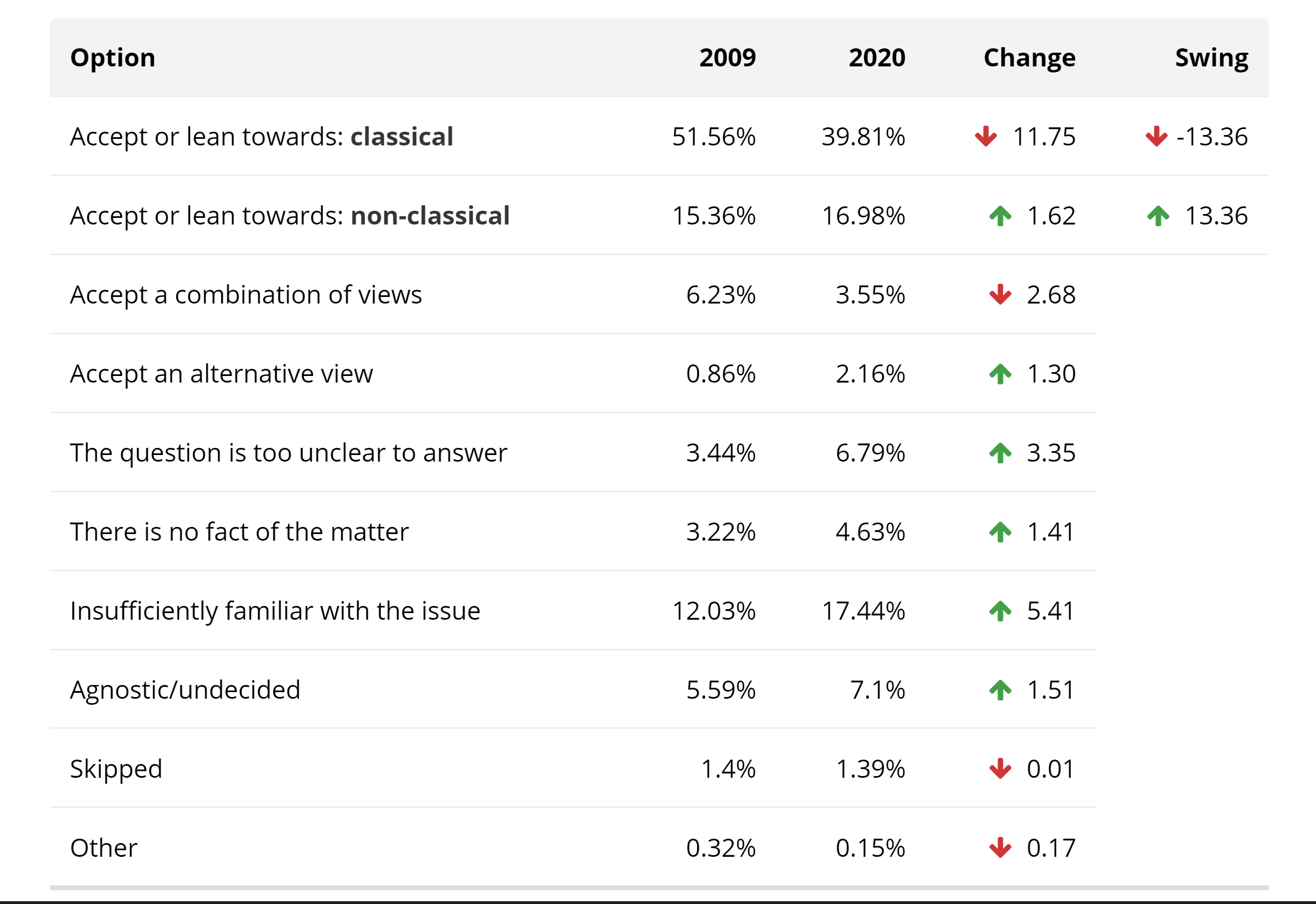

On 'which do you endorse, classical logic or non-classical?' (a strange question, but maybe this is something like 'what kind of logic is reality's source code written in?'), non-classical logic is roughly as unpopular as ever, but fewer now endorse classical logic, and more give answers like "insufficiently familiar with the issue" and "the question is too unclear to answer":

Epistemic contextualism says that the accuracy of your claim that someone "knows" something depends partly on contextual features — e.g., the standards for "knowledge" can rise "as the stakes rise or the skeptical doubts become more serious".

Here, it was the less popular view (invariantism) that lost favor; and the view that lost favor again lost it via an increase in 'other' answers (especially "insufficiently familiar with the issue" and "agnostic/undecided") more so than increased favor for its rival view (contextualism):

Humeanism (a misnomer, since Hume himself wasn't a Humean, though his skeptical arguments helped inspire the Humeans) says that "laws of nature" aren't fundamentally different from other observed regularities; they're just patterns that humans have given a fancy, high-falutin name. Anti-Humeans, by contrast, think there's something deeper about laws of nature: that they in some sense 'necessitate' things to go one way rather than another.

(Maybe Humeans = 'laws of nature are program outputs like any other', non-Humeans = 'laws of nature are part of reality's source code'?)

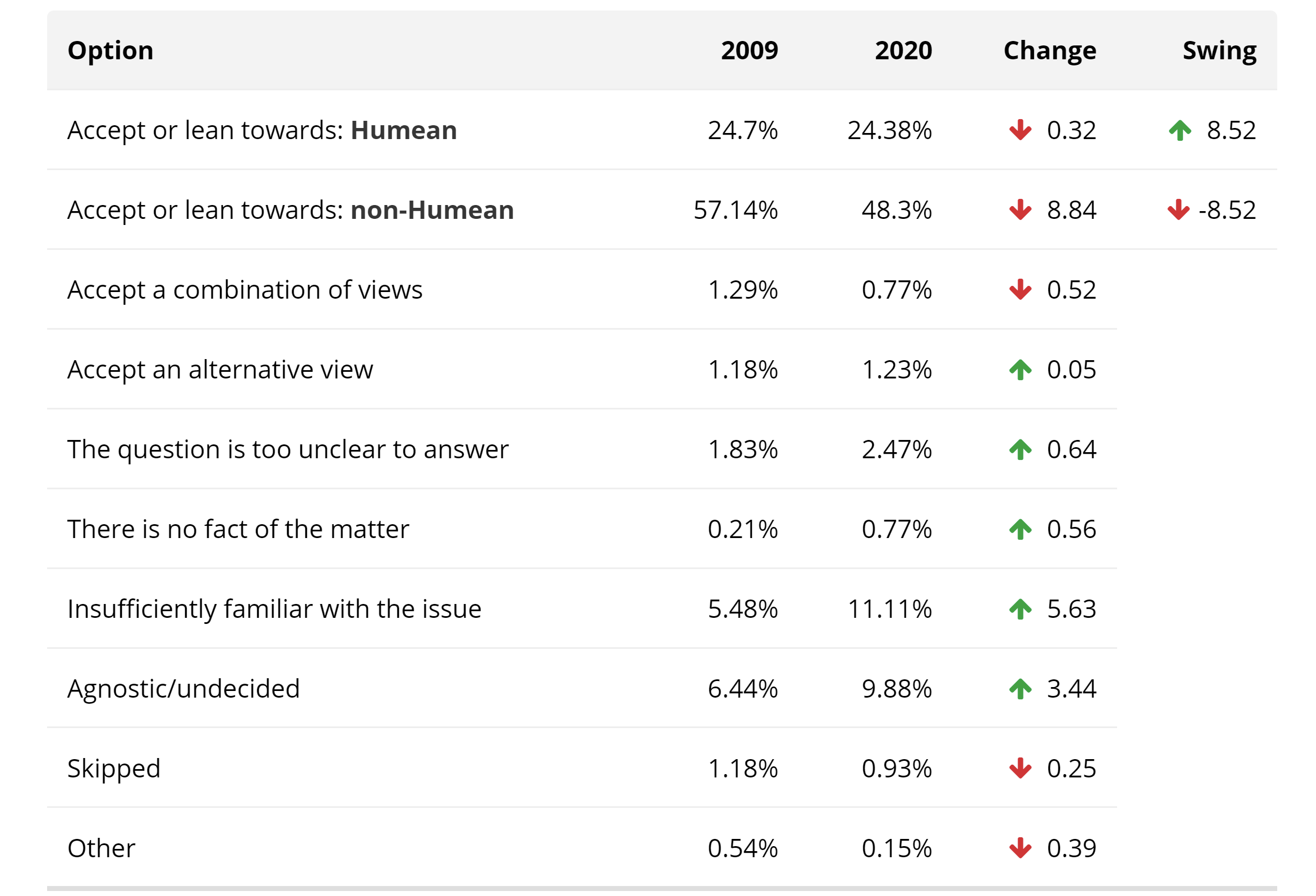

Once again, one view lost favor (the more popular view, non-Humeanism), but the other didn't gain favor; instead, more people endorsed "insufficiently familiar with the issue", "agnostic/undecided", etc.:

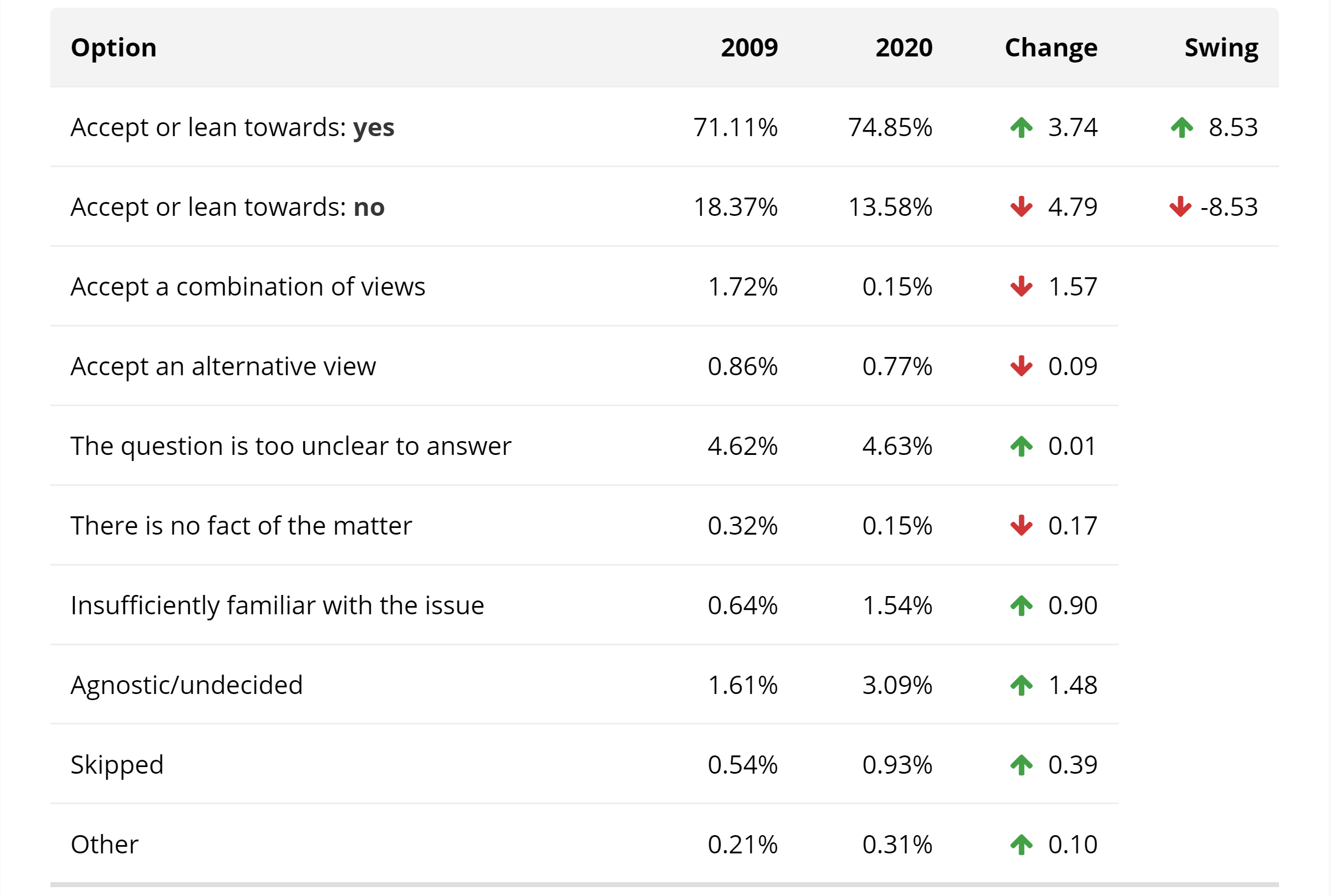

Philosophers in 2020 are more likely to say that "yes", humans have a priori knowledge of some things (already very much the dominant view):

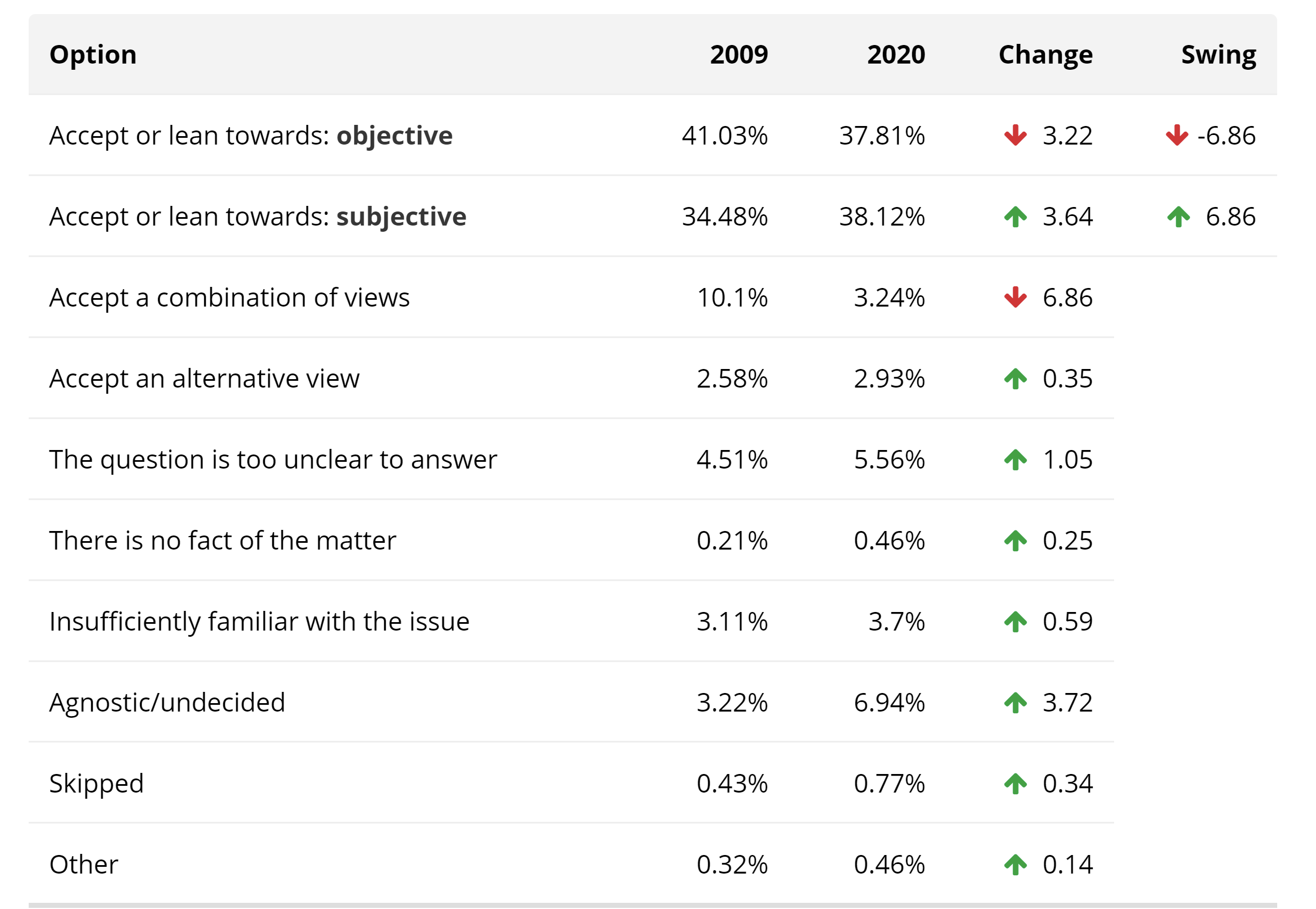

'Aesthetic value is objective' was favored over 'subjective' (by 3%) in 2009; now 'subjective' is favored over 'objective' (by 4%). "Agnostic/undecided" also gained ground.

Philosophers mostly endorsed "switch" in the trolley dilemma, and still do; but "don't switch" gained a bit of ground, and "insufficiently familiar with the issue" lost ground.

Moral realism also became a bit more popular (was endorsed by 56% of philosophers, now 60%), as did compatibilism about free will (was 59% compatibilism, 14% libertarianism, 12% no free will; now 62%, 13%, and 10%).

The paper also looked at the individual respondents who answered the survey in both 2009 and 2020. Individuals tended to update away from switching in the trolley dilemma, away from consequentialism, and toward virtue ethics and non-cognitivism. They also updated toward Platonism about abstract objects, and away from 'no free will'.

These are all comparisons across 2009-target-population philosophers in general, however. In most (though not all) cases, I'm more interested in the views of subfields specialized in investigating and debating a topic, and how the subfield's view changes over time. Hence my earlier sections largely focused on particular fields of philosophy.

50 comments

Comments sorted by top scores.

comment by Jayson_Virissimo · 2021-11-02T05:36:37.373Z · LW(p) · GW(p)

For reference, here are the raw data from when LWers took the survey in 2012 and here [LW · GW] is the associated post from which it was extracted.

comment by Unnamed · 2021-11-02T07:55:54.956Z · LW(p) · GW(p)

The survey results page also lists "Strongest correlations" with other questions. If I'm reading the tables correctly for the Newcomb's Problem results, there were 17 groups (in the target population who gave a particular answer to one of the other survey questions) in which one-boxing was at least as common as two-boxing. In order (of one-boxers minus two-boxers):

Political philosophy: communitarianism (84 vs 67)

Semantic content: radical contextualism (most or all) (49 vs 34)

Analysis of knowledge: justified true belief (49 vs 34)

Response to external-world skepticism: pragmatic (51 vs 37)

Normative ethics: virtue ethics (112 vs 100)

Philosopher: Quine (33 vs 22)

Arguments for theism: moral (22 vs 12)

Hume: skeptic (72 vs 63)

Aim of philosophy: wisdom (96 vs 88)

Philosophical knowledge: none (12 vs 5)

Philosopher: Marx (8 vs 2)

Aim of philosophy: goodness/justice (73 vs 69)

A priori knowledge: [no] (62 vs 58)

Consciousness: panpsychism (16 vs 13)

External world: skepticism (20 vs 18)

Eating animals and animal products: omnivorism (yes and yes) (168 vs 168)

Truth: epistemic (26 vs 26)
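
As a rough illustration of the ordering used above, here is a minimal Python sketch that recomputes the one-boxer-minus-two-boxer margin for a few of the listed groups, along with the share of one-boxers among those who picked a side. The counts are copied from the list; the names `counts`, `margin`, and `one_box_share` are illustrative and don't come from the survey site.

```python
# (one-boxers, two-boxers) counts copied from a few entries in the list above.
counts = {
    "Normative ethics: virtue ethics": (112, 100),
    "Truth: epistemic": (26, 26),
    "Political philosophy: communitarianism": (84, 67),
    "Philosopher: Marx": (8, 2),
}


def margin(pair):
    """One-boxers minus two-boxers (the sort key used for the list)."""
    one_box, two_box = pair
    return one_box - two_box


def one_box_share(pair):
    """Fraction of one-boxers among respondents who picked a side."""
    one_box, two_box = pair
    return one_box / (one_box + two_box)


# Sort groups from the largest one-boxing margin to the smallest.
ranked = sorted(counts.items(), key=lambda item: margin(item[1]), reverse=True)

for name, pair in ranked:
    print(f"{name}: margin {margin(pair):+d}, one-box share {one_box_share(pair):.0%}")
```

Note that the margin and the share can rank groups differently: a small group such as the Marx row has a modest margin but a large one-boxing share.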

↑ comment by Rob Bensinger (RobbBB) · 2021-11-02T11:52:55.604Z · LW(p) · GW(p)

This is fantastic.

... Virtue ethicists one-box?!

I'm guessing this is a case of "views that correlate with being less socially/culturally linked to analytic philosophers, will tend to correlate more with one-boxing". But it would be wild if something were going on like:

- Consequentialists two-box, because thinking about "consequences" primes you to accept the CDT argument that you should maximize your direct causal impact.

- Deontologists two-box, because thinking about duties/principles primes you to accept the "I should do the capital-r Rational thing even if it's not useful" argument.

- Virtue ethicists one-box, because (a subset of) one-boxers are the ones talking about 'making yourself into the right kind of agent'. (Or, more likely, virtue ethicists one-box just because they lack the other views' reasons to two-box.)

↑ comment by Vaniver · 2021-11-03T17:55:06.582Z · LW(p) · GW(p)

Virtue ethicists one-box, because (a subset of) one-boxers are the ones talking about 'making yourself into the right kind of agent'.

This seems sort of obvious to me, and I'm kind of surprised that only a bit over half of the virtue ethicists one-box.

[EDIT] I think I was giving the virtue ethicists too much credit and shouldn't have been that surprised--this is actually a challenging situation to map onto traditional virtues, and 'invent the new virtue for this situation' is not that much a standard piece of virtue ethics. I would be surprised if only a bit over half of virtue ethicists pay up in Parfit's Hitchhiker, even tho the problems are pretty equivalent.

↑ comment by Unnamed · 2021-11-02T22:03:08.104Z · LW(p) · GW(p)

53% of virtue ethicists one-box (out of those who picked a side).

Seems plausible that it's for kinda-FDT-like reasons, since virtue ethics is about 'be the kind of person who' and that's basically what matters when other agents are modeling you. It also fits with Eliezer's semi-joking(?) tweet "The rules say we must use consequentialism, but good people are deontologists, and virtue ethics is what actually works."

Whereas people who give the pragmatic response to external-world skepticism seem more likely to have "join the millionaires club" reasons for one-boxing.

↑ comment by steven0461 · 2021-11-02T22:15:41.856Z · LW(p) · GW(p)

Taking the second box is greedy and greed is a vice. This might also explain one-boxing by Marxists.

comment by Rob Bensinger (RobbBB) · 2021-11-03T19:37:22.002Z · LW(p) · GW(p)

Using a very crude method (ignoring "skip" and "insufficiently familiar" answers), namely 'give the field a point for every 1% of the field that endorses theism', plus 'give the field a point for every 1% that endorses A-theory, minus a point for every 1% that endorses B-theory', the seven highest-scoring fields (i.e., the ones I'd expect to be least reasonable and least science-literate) are:

- 92 points: philosophy of religion

- 71 points: Medieval and Renaissance philosophy

- 37 points: 19th century philosophy

- 20 points: philosophy of law

- 18 points: aesthetics

- 11 points: philosophy of social science; philosophy of action

The seven lowest-scoring fields (aka the most promising ones) are:

- -42 points: philosophy of physical science

- -36 points: philosophy of biology; decision theory

- -31 points: general philosophy of science

- -22 points: metaphilosophy

- -20 points: philosophy of language

- -11 points: logic and philosophy of logic

(Philosophy of computing and information only has data for the target group, which is less comparable, so I leave it out here. It would get -25 points.)
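
For concreteness, the scoring rule described above can be sketched in a few lines of Python. This is only an illustration of the arithmetic: the function name `crude_score` and the two example fields are hypothetical, the percentages are made up rather than taken from the survey, and the sketch assumes the percentages passed in have already had "skip" and "insufficiently familiar" answers handled however one prefers.

```python
def crude_score(theism_pct, a_theory_pct, b_theory_pct):
    """One point per 1% endorsing theism, plus A-theory % minus B-theory %."""
    return theism_pct + (a_theory_pct - b_theory_pct)


# HYPOTHETICAL percentages for illustration only; these are not survey data.
example_fields = {
    "hypothetical field X": {"theism_pct": 50.0, "a_theory_pct": 30.0, "b_theory_pct": 20.0},
    "hypothetical field Y": {"theism_pct": 10.0, "a_theory_pct": 15.0, "b_theory_pct": 45.0},
}

scores = {name: crude_score(**pcts) for name, pcts in example_fields.items()}

# Highest score first, matching the "highest-scoring fields" ordering above.
for name, score in sorted(scores.items(), key=lambda item: item[1], reverse=True):
    print(f"{score:+.0f} points: {name}")
```

A field scores negative whenever B-theory's lead over A-theory exceeds the field's theism percentage, which is how the "most promising" fields end up below zero.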

↑ comment by Jayson_Virissimo · 2021-11-03T20:51:32.576Z · LW(p) · GW(p)

Are we assuming affirming A-theory is indicative of science illiteracy because it is incompatible with special relativity or for some other reason?

↑ comment by Rob Bensinger (RobbBB) · 2021-11-03T23:46:34.738Z · LW(p) · GW(p)

Basically, though with two wrinkles:

- By "science illiteracy" I mean something like 'having relatively little familiarity with or interest in scientific knowledge and the scientific style of thinking', not 'happens to not be familiar with special relativity'. E.g., I don't dock anyone points for saying they're agnostic about A-theory -- no one can be an expert on every topic. But you can at least avoid voting in favor of A-theory, without first doing enough basic research to run into the special relativity issue.

- Some versions of the A-theory might technically be compatible with special relativity. E.g., maybe you received a divine revelation to the effect of 'there's a secret Correct Frame of Reference, and you alone among humans (not, e.g., your brother Fred who lives on Mars) live your life in that Frame'. The concern is less 'these two claims are literally inconsistent', more 'reasonable mentally stable people should not think they have Bayesian evidence that their Present Moment is metaphysically special and unique'.

- (Or, if they've literally just never heard of relativity in spite of being a faculty member at a university, they should refrain from weighing in on the A-theory vs. B-theory debate until they've done some googling.)

↑ comment by Said Achmiz (SaidAchmiz) · 2021-11-05T13:39:43.944Z · LW(p) · GW(p)

(Or, if they’ve literally just never heard of relativity in spite of being a faculty member at a university, they should refrain from weighing in on the A-theory vs. B-theory debate until they’ve done some googling.)

But if they’ve never heard of relativity (or, more likely, have essentially no idea of the content of the theory of relativity), how the heck should they know that relativity has any connection to the “A-theory vs. B-theory” debate, and that they therefore should refrain from weighing in, on the basis of that specific bit of ignorance?

Maybe there are all sorts of “unknown unknowns”—domains of knowledge of whose existence or content I am unaware—which materially affect topics on which I might, if asked, feel that I may reasonably express a view (but would not think this if my ignorance of those domains were to be rectified). But I don’t know what they might be, or on what topics they might bear—that’s what makes them unknown unknowns!—and yet any view I currently hold (or might assent to express, if asked) might be affected by them!

Should I therefore refrain from weighing in on anything, ever, until such time as I have familiarized myself with literally all human knowledge?

I do not think that this is a reasonable standard to which we should hold anyone…

↑ comment by Rob Bensinger (RobbBB) · 2021-11-05T18:57:46.885Z · LW(p) · GW(p)

I think that's a very fair point, and after thinking about it more I'm guessing I'd want to exclude the people who voted 'lean A-theory' and 'lean B-theory', and just include the people who strongly endorsed one or the other (since the survey distinguishes those options).

I think it's a very useful signal either way, even the 'unfair' version. And I think it really does take very little googling (or very little physics background knowledge) to run into the basic issues; e.g., SEP is a standard resource I'd expect a lot of philosophers to make a beeline to if they wanted a primer. But regardless, saying it's as unambiguous or extreme a sign as theism is too harsh.

(Though in some ways it's a more extreme sign than theism, because theism is a thing a lot of people were raised in and have strong emotional attachments to -- it's epistemic 'easy mode' in the sense that it's an especially outrageous belief, but it can be 'hard mode' emotionally. I wanted something like A-theory added to the mix partly because it's a lot harder to end up 'A-theorist by default' than to end up 'theist by default'.)

↑ comment by Rob Bensinger (RobbBB) · 2021-11-05T19:10:12.786Z · LW(p) · GW(p)

Ehh, I think I want to walk back my 'go easy on a-theorists' update a bit. Even if I give someone a pass on the physics-illiteracy issue, I feel like I should still dock a lot of points for Bayes-illiteracy. What evidence does someone think they've computed that allows them to update toward A-theory? How did the 'mysterious Nowness of Now' causally impact your brain so as to allow you to detect its existence, relative to the hypothetical version of you in a block universe?

Crux: If there's a reasonable argument out there for having a higher prior on A-theory, in a universe that looks remotely like our universe, then I'll update.

Heck, thinking in terms of information theory and physical Bayesian updates at all is already most of what I'm asking for here. What I'm expecting instead is 'well, A-theory felt more intuitive to me, and the less intuitive-to-me view gets the burden of proof'.

↑ comment by Said Achmiz (SaidAchmiz) · 2021-11-05T20:28:55.027Z · LW(p) · GW(p)

Counterpoint: how much of the literature on the philosophical presentism vs. eternalism debate have you acquainted yourself with?

For example, you linked to the SEP entry on time, so surely you noticed the philosophers’ extant counterarguments to the “relativity entails B-theory” argument? What is your opinion on papers like this one or this one?

Now, if your response is “I have not read these papers”, then are you not in the same situation as the philosopher who is unfamiliar with relativity?

(But in fact a quick survey of the literature on the “nature of time” debate—including, by the way, the SEP entry you linked!—shows that philosophers involved in this debate are familiar with relativity’s implications in this context… perhaps more familiar, on average, than are physicists—or rationalists—with the philosophical positions in question.)

↑ comment by Rob Bensinger (RobbBB) · 2021-11-05T22:11:03.661Z · LW(p) · GW(p)

I haven't read those papers, and am not very familiar with the presentism debate, though I'm not 0% familiar. Nor have I read the papers you link. If there's a specific argument that you think is a good argument for the A-theory view (e.g., if you endorse one of the papers), I'm happy to check it out and see if it updates me.

But the mere fact that "papers exist", "people who disagree with me exist", and "people publishing papers in favor of A-theory nowadays are all familiar with special relativity (at least to some degree)" doesn't move me, no -- I was taking all of those things for granted when I wrote the OP.

Having interacted with a decent number of analytic-philosophy metaphysics papers and arguments at college, I think I already know the rough base rate of 'crazy and poorly-justified views on reality' in this area, and I think it's very high by LW standards (though I think metaphysics is unusually healthy for a philosophy field).

Since I'm not a domain expert, it might turn out that I'm missing something crucial and the A-theory has some subtle important argument favoring it; but I don't treat this as nontrivially likely merely because professional metaphysicians who have heard of special relativity disagree with me, no. (If I'm wrong, most of my probability mass would be on 'I'm defining the A-theory wrong, actually A-theorists are totally fine with there being no unique special Present Moment.' Though then I'd wonder what the content of the theory is!)

↑ comment by Said Achmiz (SaidAchmiz) · 2021-11-05T23:10:14.609Z · LW(p) · GW(p)

If there’s a specific argument that you think is a good argument for the A-theory view (e.g., if you endorse one of the papers), I’m happy to check it out and see if it updates me.

No, not particularly. Actually, I do not have an opinion on the matter one way or the other!

As for the rest of your comment… it is understandable, as far as it goes; but note that a philosopher could say just the same thing, but in reverse.

He might say: “The mere fact that ‘a physics theory exists’, ‘physicists think that their theory has some bearing on this philosophical argument’, and ‘physicists have some familiarity with the state of the philosophical debate on the matter’ doesn’t move me.”

Our philosopher might say, further: “I think I already know the rough base rate of ‘physical scientists with delusions of philosophy’; I have interacted with many such folks, who think that they do not need to study philosophy in order to have an opinion on philosophers’ debates.”

And he might add, in all humility: “Since I’m not a domain expert, it might turn out that I’m missing something crucial, and the theory of relativity has some important consequence that bears on the argument; but I don’t treat this as nontrivially likely merely because professional physicists who have heard of the ‘eternalism vs. presentism’ debate disagree with me.”

Now, suppose I am a curious, though reasonably well-informed, layman—neither professionally a philosopher nor yet a physicist—and I observe this back-and-forth. What should I conclude from this exchange, about which one of you is right?

… and that would be the argument that I would make, if it were the case that you dismissed the philosophers’ arguments without reading them, while the philosophers dismissed your arguments (and/or those of the physicists) without reading them. But that’s not the case! Instead, what we have is a situation where you dismiss their arguments without reading them, while they have read your arguments, and are disagreeing with them on that, informed, basis.

Now what should I (the hypothetical well-informed layman) conclude?

Of course, the matter is more complicated than even that, because philosophers hardly agree with each other on this matter. But let’s not lose sight of the point of this discussion thread, which is: should a philosopher who endorses A-theory be docked “rationality points” (on the reasoning that any such philosopher must surely be suffering from “science illiteracy”—because if they had done any “basic research” [i.e., five minutes of web-searching], they would have learned about the special relativity issue, and would—we are meant to assume—immediately and reliably conclude that they had no business having an opinion about the nature of time, at least not without gaining a thorough technical understanding of special relativity)?

I think the answer to that question is “no, definitely not”. It’s obvious from a casual literature search that philosophers who are familiar with the “eternalism vs. presentism” debate at all, are also familiar with the question of special relativity’s implications for that debate. Whatever is causing some of them to still favor A-theory, it ain’t “science illiteracy”, inability to use Google, or any other such simple foolishness.

↑ comment by Rob Bensinger (RobbBB) · 2021-11-06T01:17:30.455Z · LW(p) · GW(p)

it is understandable, as far as it goes; but note that a philosopher could say just the same thing, but in reverse.

Sure! And similarly, if you were an agnostic, and I were an atheist making all the same statements about theism, you could say 'philosophers of religion could say just the same thing, but in reverse'.

Yet this symmetry wouldn't be a good reason for me to doubt atheism or put more time into reading theology articles.

I think the specific symmetry you're pointing at doesn't quite work (special relativity doesn't have the same standing as A-theory arguments, either in fact or in philosophers' or physicists' eyes), but it's not cruxy in any case.

Instead, what we have is a situation where you dismiss their arguments without reading them, while they have read your arguments, and are disagreeing with them on that, informed, basis.

At a minimum, you should say that I'm making a bizarrely bold prediction (at least from an outside-view perspective that thinks philosophers have systematically accurate beliefs about their subject matter). If I turn out to be right, after having put so little work in, it suggests I have surprisingly 'efficient' heuristics -- ones that can figure out truth on at least certain classes of question, without putting in a ton of legwork first. (Cf. skills like 'being able to tell whether certain papers are shit based on the abstract'.)

You're free to update toward the hypothesis that I'm overconfident; the point of my sharing my views is to let you consider hypotheses like that, rather than hiding any arrogant-sounding beliefs of mine from view. I'm deliberately stating my views in a bold, stick-my-neck-out way because those are my actual views -- I think we do for-real live in the world where A-theory is obviously false.

I'm not saying any of this to shut down discussion, or say I'm unwilling to hear arguments for A-theory. But I do think there's value in combating underconfidence just as much as overconfidence, and in trying to reach conclusions efficiently rather than going through ritualistic doubts.

If you think I'm going too fast, then that's a testable claim, since we can look at the best A-theory arguments and see if they change my mind, the minds of people we both agree are very sane, etc. But I'd probably want to delegate that search for 'best arguments' to someone who's more optimistic that it will change anything.

Whatever is causing some of them to still favor A-theory, it ain’t “science illiteracy”, inability to use Google, or any other such simple foolishness.

Depending on how much we're talking about 'philosophers who don't work on the metaphysics of time professionally, but have a view on this debate' (the main group I discussed in the OP) vs. 'A-theorists who write on the topic professionally', I'd say it's mostly a mix of (a) not using google / not having basic familiarity with the special relativity argument; (b) misunderstanding the force of the special relativity argument; and (c) misunderstanding/rejecting the basic Bayesian idea of how evidence, burdens of proof, updating, priors, and thermodynamic-work-that-makes-a-map-reflect-a-territory work, in favor of epistemologies that put more weight on 'metaphysical intuitions that don't make Bayesian sense but feel really compelling when I think them'.

I'd say much the same thing about professional theologians who argue that God must be real (in order for us to know stuff at all) because there's no reason for evolution to give humans accurate cognition; or, for that matter, about theologians who argue that God must be real because speciation isn't real. There are huge industries of theist scholars who have spent their whole lives arguing such things. Can they really be so wrong, when the counter-argument is so obvious, so strong, and so googlable?

Apparently, they can.

↑ comment by Rob Bensinger (RobbBB) · 2021-11-06T01:26:30.798Z · LW(p) · GW(p)

To put it in simpler terms: is a physicist who believes an invisible, undetectable dragon lives in their garage 'science-illiterate'?

I'd say that they're at best science-illiterate, if not outright unhinged. If you want to say that it's impossible to be science-illiterate while knowing a bunch of physics facts or while being able to do certain forms of physics lab work, then I assume we're defining the word 'science-illiterate' differently. But hopefully this example clarifies in what basic sense I'm using the term.

↑ comment by TAG · 2021-11-07T16:53:14.018Z · LW(p) · GW(p)

Yet this symmetry wouldn’t be a good reason for me to doubt atheism or put more time into reading theology articles.

You don't have the kind of knock-down arguments against theism that you think you have, either.

↑ comment by Rob Bensinger (RobbBB) · 2021-11-07T18:04:50.270Z · LW(p) · GW(p)

If the arguments I have against theism don't count as 'knock-down arguments'... so much the worse for knock-down arguments, I suppose? The practical implication ('this deserves the same level of intellectual respect and attention as leprechauns') holds regardless.

↑ comment by TAG · 2021-11-05T15:06:47.472Z · LW(p) · GW(p)

Or B theory just isn't that good. If physical reductionism is the correct theory of mind, so that the mind is just another part of the block, it's difficult to see where so much as an illusion of temporal flow comes from.

Some versions of the A-theory might technically be compatible with special relativity.

Well, Copenhagen is compatible with SR (collapse is nonlocal, but cannot be used for nonlocal signaling), and it allows you to identify a moving present moment as where collapse is occurring.

↑ comment by Rob Bensinger (RobbBB) · 2021-11-05T19:04:47.852Z · LW(p) · GW(p)

If physical reductionism is the correct theory of mind, so that the mind is just another part of the block, it's difficult to see where so much as an illusion of temporal flow comes from.

No? Seems trivially easy to see, and I don't think reductionism matters here. If I were an immaterial Cartesian soul plugged in to the Matrix, and the Matrix ran on block-universe physics, the same arguments for 'it feels like there's an objective Now but really this is just an implication of my being where I am in the block universe' would hold. The argument is about your relationship to other spatial and temporal slices of the Matrix, not about the nature of your brain or mind.

Well, Copenhagen is compatible with SR (collapse is nonlocal, but cannot be used for nonlocal signaling), and it allows you to identify a moving present moment as where collapse is occurring.

On the (false) collapse interpretation of QM, I can cause collapses; but so can my brother Fred who lives on Mars. Which of our experiences coincide with 'the present', if there is a single unique reference-frame-independent Present Moment?

↑ comment by TAG · 2021-11-07T17:27:44.754Z · LW(p) · GW(p)

Reductionism is critical to understanding block universe theory, because if you allow a nonphysical soul or wotnot the implications can be prevented from following.

‘it feels like there’s an objective Now but really this is just an implication of my being where I am in the block universe’ would hold.

If the immaterial soul had the property of being wholly located at one 4D point in the block at a time then you could recover the notion of a distinguished and moving Now... but that isn't block universe theory; it's some sort of hybrid. In actual block universe theory, you're just a 4D section of a 4D block, and your entire history exists at once.

You don't believe in souls and you do believe in reductionism, so you can't use souls to rescue block universe theory.

On the (false) collapse interpretation of QM, I can cause collapses; but so can my brother Fred who lives on Mars. Which of our experiences coincide with ‘the present’, if there is a single unique reference-frame-independent Present Moment?

I said that collapse is nonlocal.

comment by Lance Bush (lance-bush) · 2021-11-03T02:46:43.224Z · LW(p) · GW(p)

The number of moral realists, and especially non-naturalist moral realists, both strike me as indications that there is something wrong with contemporary academic philosophy. It almost seems like philosophers reliably hold one of the less defensible positions across many issues.

↑ comment by Rob Bensinger (RobbBB) · 2021-11-03T19:17:51.861Z · LW(p) · GW(p)

We've talked about this a bit, but to restate my view on LW: I think there's enormous variation in what people mean by "moral realism", enough to make it a mostly useless term (and, as a corollary, I claim we shouldn't update much about philosophers' competence based on how realist or anti-realist they are).

Even 'morality: subjective or objective?' seems almost optimized to be confusing, for the same reason it would be confusing to ask "are the rules of baseball subjective, or objective?".

Baseball's rules are subjective in the sense that humans came up with them (/ located them in the space of all possible game rules), but objective in the sense that you can't just change the rules willy-nilly (without in effect changing the topic away from "baseball" and to a new game of your making). The same is true for morality.

(Though there's an additional sense in which morality might be called 'subjective', namely that there isn't a single agreed-upon 'rule set' corresponding to the word 'morality', and different people favor different rule sets -- like if soccer fans and American-football fans were constantly fighting about which of the two games is the 'real football'.

And there's an additional sense in which morality might be called 'objective', namely that the rules of morality don't allow you to stop playing the game. With baseball, you can choose to stop playing, and no one will complain about it. With morality, we rightly treat the 'game' as one that everyone is expected to play 24/7, at least to the degree of obeying game rules like 'don't murder'.)

This is also why I don't care how many philosophers think aesthetic value is subjective vs. objective. The case is quantitatively stronger for 'aesthetic value is subjective' than for 'morality is subjective' (because it's more likely that Bob and Alice's respective Aesthetics Rules will disagree about 'Mozart is one of the best musicians' than that Bob and Alice's Morality Rules will disagree about 'it's fine to kill Mozart for fun'), but qualitatively the same ambiguities are involved.

It would be better to ask questions like 'is it a supernatural miracle that morality exists / that humans happen to endorse the True Morality' and 'if all humans were ideally rational and informed, how much would they agree about what's obligatory, impermissible, worthy of praise, worthy of punishment, etc.?', rather than asking about 'subjective', 'objective', 'real', or 'unreal'.

↑ comment by Rob Bensinger (RobbBB) · 2021-11-03T19:24:54.119Z · LW(p) · GW(p)

Copying over a comment I left on Facebook:

I claim that there are basically four positions here:

1. Magical-thinking anti-realist: There's nothing special about morality, it's just like the rules of chess. So let's stop being moral!

2. Reasonable anti-realist: There's nothing special about morality, it's just like the rules of chess. It's important to emphasize that the magical-thinking realists are wrong, though, so let's say 'moral statements aren't mind-independently true', even though there's a sense in which they are mind-independently true (eg, the same sense in which statements about chess rules are mind-independently true).

3. Reasonable realist: There's nothing special about morality, it's just like the rules of chess. It's important to emphasize that the magical-thinking anti-realists are wrong, though, so let's say 'moral statements are mind-independently true', even though there's a sense in which they aren't mind-independently true (eg, the same sense in which statements about chess rules aren't mind-independently true).

4. Magical-thinking realist: Morality has to be incredibly magically physics-transcendingly special, otherwise (the magical-thinking anti-realist is right / God doesn't exist / etc.). So I hereby assert that it is indeed special in that way!

Terminology choices aside, views 2 and 3 are identical, and the whole debate gets muddled and entrenched because people fixate on the 'realism' rather than on the thing anyone actually cares about.

Cf. people who say 'we can't say non-realism is true, that would give aid and comfort to crazy cultural relativists who (incoherently) think we can't ban female genital mutilation because there are no grounds for imposing any standards across cultural divides'.

Whether you're more scared of crazy cultural relativists or of crypto-religionists isn't a good way of dividing up the space of views about the metaphysics of morality! But somehow here (I claim) we are.

My own view is the one endorsed in Eliezer Yudkowsky's By Which It May Be Judged [LW · GW] (roughly Frank Jackson's analytic descriptivism). This is what I mean when I use moral language.

When it comes to describing moral discourse in general, I endorse semantic pluralism [LW · GW] / 'different groups are talking about wildly different things, and in some cases talking about nothing at all, when they use moral language'.

You could call these views "anti-realist" in some senses. In other senses, you could call them realist (as I believe Frank Jackson does). But ultimately the labels are unimportant; what matters is the actual content of the view, and we should only use the labels if they help with understanding that content, rather than concealing it under a pile of ambiguities and asides.

Replies from: lance-bush↑ comment by Lance Bush (lance-bush) · 2021-11-03T23:03:43.344Z · LW(p) · GW(p)

When it comes to describing moral discourse in general, I endorse semantic pluralism [LW · GW] / 'different groups are talking about wildly different things, and in some cases talking about nothing at all, when they use moral language'.

I agree, but this is orthogonal to whether moral realism is true. Questions about moral realism generally concern whether there are stance-independent moral facts. Whether or not there are such facts does not directly depend on the descriptive status of folk moral thought and discourse. Even if it did, it's unclear to me how such an approach would vindicate any substantive account of realism.

You could call these views "anti-realist" in some senses. In other senses, you could call them realist (as I believe Frank Jackson does).

I'd have to know more about what Jackson's specific position is to address it.

But ultimately the labels are unimportant; what matters is the actual content of the view, and we should only use the labels if they help with understanding that content, rather than concealing it under a pile of ambiguities and asides.

I agree with all that. I just don't agree that this is diagnostic of debates in metaethics about realism versus antirealism. I don't consider the realist label to be unhelpful; I think it has a sufficiently well-understood meaning that its use isn't wildly confused or unhelpful in contemporary debates, and I suspect most people who say that they're moral realists endorse a sufficiently similar cluster of views that there's nothing too troubling about using the term as a central distinction in the field. There is certainly wiggle room and quibbling, but there isn't nearly enough actual variation in how philosophers understand realism for it to be plausible that a substantial proportion of realists don't endorse the kinds of views I'm objecting to and claiming are indicative of problems in the field.

I don't know enough about Jackson's position in particular, but I'd be willing to bet I'd include it among those views I consider objectionable.

↑ comment by Lance Bush (lance-bush) · 2021-11-03T22:46:24.360Z · LW(p) · GW(p)

I think there's enormous variation in what people mean by "moral realism", enough to make it a mostly useless term

I disagree with this claim, and I don’t think that, even if there were lots of variation in what people meant by moral realism, this would undermine my claim that the large proportion of respondents who favor realism indicates a problem in the profession. The term is not “useless,” and even if the term were useless, I am not talking about the term. I am talking about the actual substantive positions held by philosophers: whatever their conception of “realism,” I am claiming that enough of that 60% endorse indefensible positions that it is a problem.

I have a number of objections to the claim you’re making, but I’d like to be sure I understand your position a little better, in case those objections are misguided. You outline a number of ways we might think of objectivity and subjectivity, but I am not sure what work these distinctions are doing. It is one thing to draw a distinction, or identify a way one might use particular terms, such as “objective” and “subjective.” It is another to provide reasons or evidence to think these particular conceptions of the terms in question are driving the way people responded to the PhilPapers survey.

I’m also a bit puzzled at your focus on the terms “objective” and “subjective.” Did they ask whether morality was objective/subjective in the 2009 or 2020 versions of the survey?

It would be better to ask questions like 'is it a supernatural miracle that morality exists / that humans happen to endorse the True Morality

I doubt that such questions would be better.

Both of these questions are framed in ways that are unconventional with respect to existing positions in metaethics, both are a bit vague, and both are generally hard to interpret.

For instance, a theist could believe that God exists and that God grounds moral truth, but not think that it is a “supernatural miracle” that morality exists. It's also unclear what it means to say morality "exists." Even a moral antirealist might agree that morality exists. That just isn't typically a way that philosophers, especially those working in metaethics, would talk about putative moral claims or facts.

I’d have similar concerns about the unconventionality of asking about “the True Morality.” I study metaethics, and I’m not entirely sure what this would even mean. What does capitalizing it mean?

It also seems to conflate questions about the scope and applicability of moral concerns with questions about what makes moral claims true. More importantly, it seems to conflate descriptive claims about the beliefs people happen to hold with metaethical claims, and may arbitrarily restrict morality to humans in ways that would concern respondents.

I don't know how much this should motivate you to update away from what you're proposing here, but I can offer some relevant background. My primary area of specialization, and the focus of my dissertation research, concerns the empirical study of folk metaethics (that is, the metaethical positions nonphilosophers hold). In particular, my focus is on the methodology of paradigms designed to assess what people think about the nature of morality. Much of my work focuses on identifying ways in which questions about metaethics could be ambiguous, confusing, or otherwise difficult to interpret (see here). This also extends to a lesser extent to questions about aesthetics (see here). Much of this work focuses on presenting evidence of interpretative variation specifically in how people respond to questions about metaethics. Interpretative variation refers to the degree to which respondents in a study interpret the same set of stimuli differently from one another. I have amassed considerable evidence of interpretative variation in lay populations specifically with respect to how they respond to questions about metaethics.

While I am confident there is interpretative variation in how philosophers responded to the questions in the PhilPapers survey, I'm skeptical that such variation would encompass such radically divergent conceptions of moral realism that the number of respondents who endorsed what I'd consider unobjectionable notions of realism would be anything more than a very small minority.

I say all this to make a point: there may be literally no person on the planet more aware of, and sensitive to, concerns about how people would interpret questions about metaethics. And I am still arguing that you are very likely missing the mark in this particular case.

Replies from: lance-bush↑ comment by Lance Bush (lance-bush) · 2021-11-03T22:53:59.292Z · LW(p) · GW(p)

I also wanted to add that I am generally receptive to the kind of approach you are taking. My approach to many issues in philosophy is roughly aligned with quietists and draws heavily on identifying cases in which a dispute turns out to be a pseudodispute predicated on imprecision in language or confusion about concepts. More generally, I tend to take a quietist or a "dissolve the problem away" kind of approach. I say this to emphasize that it is generally in my nature to favor the kind of position you're arguing for here, and that I nevertheless think it is off the mark in this particular case. Perhaps the closest analogy I could make would be to theism: there is enough overlap in what theism refers to that the most sensible stance to adopt is atheism.

comment by AlexMennen · 2021-11-02T06:17:34.305Z · LW(p) · GW(p)

The combination of the two proposed explanations for why certain fields have a higher rate of one-boxing than others seems kind of plausible, but also very suspicious. Being more like decision theorists than like normies (and thus possibly getting more exposure to pro-two-boxing arguments that are popular among decision theorists) seems very similar to being more predisposed to good critical thinking on these sorts of topics (and thus possibly more likely to support one-boxing for correct reasons). So, by combining these two effects, we can explain why people in some subfield might be more likely than average to one-box and also why people in that same subfield might be more likely than average to two-box, and just pick whichever of these explanations correctly predicts whatever people in that field end up answering.

Of course, this complaint makes it seem especially strange that two-boxing ended up being so popular among decision theorists.

Replies from: RobbBB↑ comment by Rob Bensinger (RobbBB) · 2021-11-02T06:35:37.721Z · LW(p) · GW(p)

Yeah, I don't think that combo of hypotheses is totally unfalsifiable (eg, normative ethicists doing so well is IMO a strike against my hypotheses), but it's definitely flexible enough that it has to get a lot less credit for correct predictions. It's harder to falsify, so it doesn't win many points when it's verified.

Fortunately, both parts of the hypothesis can be tested in some ways separately. E.g., maybe I'm wrong about 'most non-philosophers one-box' and the Guardian poll was a fluke; I haven't double-checked yet, and don't feel that confident in a single Guardian survey.

comment by steven0461 · 2021-11-02T21:53:02.979Z · LW(p) · GW(p)

With Newcomb's Problem, I always wonder how much the issue is confounded by formulations like "Omega predicted correctly in 99% of past cases", where given some normally reasonable assumptions (even really good predictors probably aren't running a literal copy of your mind), it's easy to conclude you're being reflective enough about the decision to be in a small minority of unpredictable people. I would be interested in seeing statistics on a version of Newcomb's Problem that explicitly said Omega predicts correctly all of the time because it runs an identical copy of you and your environment.

Replies from: steven0461↑ comment by steven0461 · 2021-11-02T22:07:47.726Z · LW(p) · GW(p)

I also wonder if anyone has argued that you-the-atoms should two-box, you-the-algorithm should one-box, and which entity "you" refers to is just a semantic issue.

comment by Aryeh Englander (alenglander) · 2021-11-04T02:58:23.755Z · LW(p) · GW(p)

For what it's worth, I know of at least one decision theorist who is very familiar with and closely associated with the LessWrong community who at least at one point not long ago leaned toward two-boxing. I think he may have changed his mind since then, but this is at least a data point showing that it's not a given that philosophers who are closely aligned with LessWrong type of thinking necessarily one-box.

Replies from: RobbBB↑ comment by Rob Bensinger (RobbBB) · 2021-11-04T03:30:18.955Z · LW(p) · GW(p)

Yeah, I see possible signs of this in the survey data itself -- decision theorists strongly favor two-boxing, but a lot of their other answers are surprisingly LW-like if there's no causal connection like 'decision theorists are unusually likely to read LW'. It's one reasonable explanation, anyway.

comment by evhub · 2021-11-03T19:32:20.932Z · LW(p) · GW(p)

I'm confused about what “hidden-variable” interpretation this survey is referring to. “Hidden-variable,” to me, sounds inconsistent with Bell's theorem, in which case I would say that shows just as much physics illiteracy as the A-theory of time. But maybe they just mean “hidden-variable” to refer to pilot wave theory or something?

Replies from: RobbBB↑ comment by Rob Bensinger (RobbBB) · 2021-11-03T19:37:56.392Z · LW(p) · GW(p)

It refers to pilot wave theory, Bohmian mechanics, etc.