Where do you lie on two axes of world manipulability?

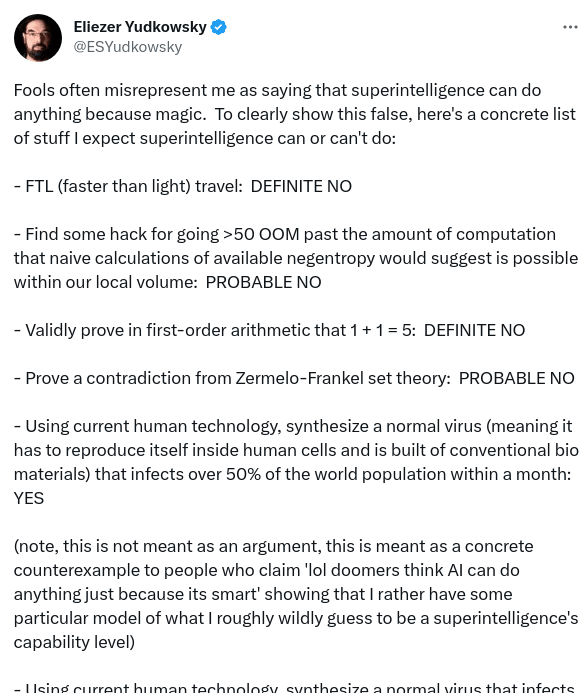

post by Max H (Maxc) · 2023-05-26T03:04:17.780Z · 15 comments

In a recent tweet, Eliezer gave a long list of examples of what he thought a superintelligence could and couldn't do:

The claims and probability estimates in this tweet appear to imply a world model in which:

- In principle, most matter and energy in the universe can be re-arranged almost arbitrarily.

- Any particular re-arrangement would be relatively easy for a superintelligence, given modest amounts of starting resources (e.g. access to current human tech) and time.

- Such a superintelligence could be built by humans in the near future, perhaps through fast recursive self-improvement (RSI) or perhaps through other means (e.g. humans scaling up and innovating on current ML techniques).

I think there are two main axes on which people commonly disagree about the first two claims above:

- How possible something is in principle, according to all known and unknown laws of physics, given unlimited (except for physical laws) amounts of time, resources, intelligence, etc. Interesting questions here include:

  - Is FTL travel possible?

  - Is FTL communication possible?

  - Is "hacking" the human brain, using only normal-range inputs (e.g. regular video, audio), possible, for various definitions of hacking and bounds on time and prior knowledge?

  - Is Drexler-style nanotech possible?

  - Are Dyson spheres and other galactic-scale terraforming projects possible?

- How "easy" something is, in the sense of how much resources / time / data / experimentation / iteration it would take even a superintelligent system to accomplish it.

  Reasonable starting conditions might include: access to an internet-connected computer on Earth, with existing human-level tech around. Another assumption is that there are no other superintelligent agents around to interfere (though humans might be around and try to interfere, including by creating other superintelligences).

  Questions here include:

  - Given that some class of technology is possible (e.g. nanotech), what is the minimum amount of experimentation / iteration / time required to build it?

  - Given that some class of exploit (e.g. "hacking" a human brain) is possible in principle, what are the minimal conditions and information bandwidth under which it is feasible and reliable for a near-future realizable superintelligence?

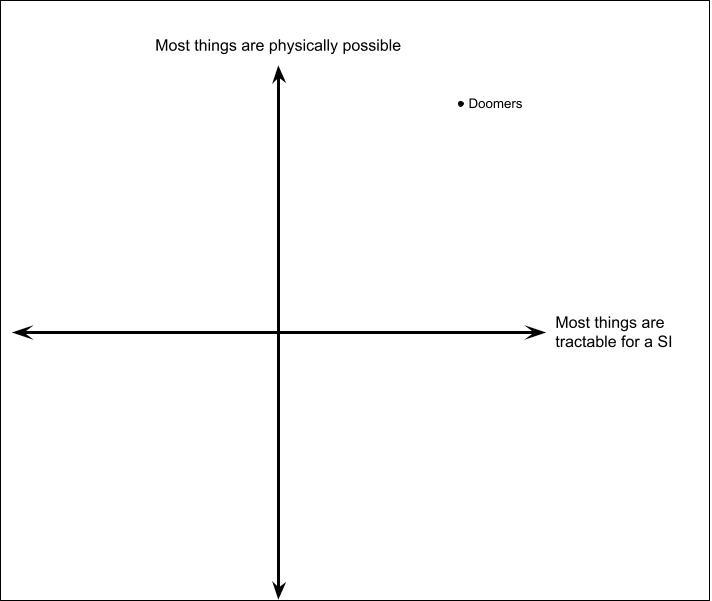

These two axes might not be perfectly orthogonal, but one could probably still come up with a Political Compass-style quiz that asks the user to rate a bunch of questions on these axes, and then plot them on a graph where the axes are roughly "what are the physical limits of possibility" and "how easily realizable are those possibilities".
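To make the quiz idea a little more concrete, here is a minimal Python sketch of how such a quiz might be scored. Everything in it is a hypothetical assumption rather than something from the post: the example questions, the -2 to +2 agreement scale, the `sign` field for reverse-worded items, and the choice to put possibility on the horizontal axis and tractability on the vertical one. Each axis coordinate is just the mean of the sign-adjusted answers, rescaled to [-1, 1].

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class Question:
    text: str
    axis: str  # "possible" (horizontal) or "tractable" (vertical); hypothetical layout
    sign: int  # +1 if agreeing pushes toward top-right, -1 for reverse-worded items


# Hypothetical example items, loosely modeled on the questions in the post.
QUESTIONS = [
    Question("Drexler-style nanotech is physically possible.", "possible", +1),
    Question("FTL communication is impossible.", "possible", -1),
    Question("A superintelligence could build nanotech with little wet-lab iteration.", "tractable", +1),
    Question("Hacking a human brain via ordinary video would take years of study.", "tractable", -1),
]


def score(answers: list[int]) -> dict[str, float]:
    """Map answers on a -2..+2 agreement scale to a coordinate in [-1, 1] per axis."""
    if len(answers) != len(QUESTIONS):
        raise ValueError("expected one answer per question")
    per_axis: dict[str, list[float]] = {}
    for q, a in zip(QUESTIONS, answers):
        if not -2 <= a <= 2:
            raise ValueError(f"answer out of range: {a}")
        per_axis.setdefault(q.axis, []).append(q.sign * a / 2)
    return {axis: mean(vals) for axis, vals in per_axis.items()}


def quadrant(coords: dict[str, float]) -> str:
    """Name the quadrant; a score of exactly 0 is (arbitrarily) counted as positive."""
    vertical = "top" if coords["tractable"] >= 0 else "bottom"
    horizontal = "right" if coords["possible"] >= 0 else "left"
    return f"{vertical}-{horizontal}"


if __name__ == "__main__":
    coords = score([2, -2, 2, -1])
    print(coords, quadrant(coords))
```

For example, answering 2 to both positively-worded items and -2 to both reverse-worded ones puts someone at (1.0, 1.0), the far top-right corner. A real quiz would need many more items per axis, and plain averaging assumes every item is equally informative, which is a simplifying assumption.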

I'd expect Eliezer (and myself, and many other "doomers") to be pretty far into the top-right quadrant (meaning, we believe most important things are both physically possible and eminently tractable for a superintelligence):

I think another common view on LW is that many things are probably possible in principle, but would require potentially large amounts of time, data, resources, etc. to accomplish, which might make some tasks intractable, if not impossible, even for a superintelligence.

There are lots of object-level arguments for why specific tasks are likely or unlikely to be tractable or impossible, but I think it's worth stepping back and realizing that disagreement over this general class of worldviews is probably an important crux for many outlooks on AI alignment and x-risk.

I also think there's an asymmetry here that comes up in meta-level arguments and forecasting about superintelligence which is worth acknowledging explicitly: even if someone makes a compelling argument for why some particular class of technology is unlikely to be easily exploitable or lead to takeover by a weakly or even strongly superhuman intelligence, doomers need only postulate that the AI does "something you didn't think of" in order to doom you. This often leads to a frustrating dynamic on both sides, but I think such a dynamic is unfortunately an inevitable fact about the nature of the problem space, rather than anything to do with discourse.

Personally, I often find posts about the physical limitations of particular technologies (e.g. scaling and efficiency limits of particular DL methods, nanotech, human brains, transistor scaling) informative and insightful, but I find them totally uncompelling as an operationally useful bound on what a superintelligence (aligned or not) will actually be capable of.

The nature of the problem (imagining what something smarter than I am would do) makes it hard to back my intuition with rigorous arguments about specific technologies. Still, looking around at current human technology, what physical laws permit in principle, and using my imagination a bit, my strongly-held belief is that something smarter than me, perhaps only slightly smarter (in some absolute or cosmic sense), could shred the world around me like paper, if it wanted to.

Some concluding questions:

- Do you think the axes above are useful for identifying cruxes in world models and forecasting about AGI?

- Where do you and others lie on these axes? If you know where someone lies on these axes, can you more easily pass their Ideological Turing Test (ITT) on AGI-related topics?

- Which object-level questions / specific technologies are most important for exploring limitations and differing intuitions on? Nanotech? Biotech? Human-brain hacking? Something else?

15 comments


comment by anithite (obserience) · 2023-05-26T07:45:11.939Z

I suggest an additional axis of "how hard is world takeover". Do we live in a vulnerable world? That's an additional implicit crux (i.e., people who disagree here think we need nanotech/biotech/whatever for AI takeover). This ties in heavily with the "AGI/ASI can just do something else" point, and not in the direction of more magic.

As much fun as it is to debate the feasibility of nanotech/biotech/whatever, digital dictatorships require no new technology. A significant portion of the world is already under the control of human-level intelligences (dictatorships). Depending on how stable the competitive equilibrium between agents ends up being, the intelligence level an agent needs before it can rapidly grow, not in intelligence but in resources and parallelism, is likely quite low.

↑ comment by Max H (Maxc) · 2023-05-26T15:13:14.577Z

That does seem like a good axis for identifying cruxes of takeover risk. Though I think "how hard is world takeover" is mostly a function of the first two axes? If you think there are lots of tasks (e.g. creating a digital dictatorship, or any subtasks thereof) which are both possible and tractable, then you'll probably end up pretty far along the "vulnerable" axis.

I also think the two axes alone are useful for identifying differences in world models, which can help to identify cruxes and interesting research or discussion topics, apart from any implications those different world models have for AI takeover risk or anything else to do with AI specifically.

If you think, for example, that nanotech is relatively tractable, that might imply that you think there are promising avenues for anti-aging or other medical research that involve nanotech, AI-assisted or not.

↑ comment by anithite (obserience) · 2023-05-26T20:03:14.947Z

> Though I think "how hard is world takeover" is mostly a function of the first two axes?

I claim it's almost entirely orthogonal. Examples of concrete disagreements here are easy to find once you go looking:

- If AGI tries to take over the world everyone will coordinate to resist

- Existing computer security works

- Existing physical security works

I claim these don't reduce cleanly to the form "It is possible to do [x]" because at a high level, this mostly reduces to "the world is not on fire because:"

- existing security measures prevent effectively (not vulnerable world)

vs.

- existing law enforcement discourages effectively (vulnerable world)

- existing people are mostly not evil (vulnerable world)

There is some projection onto the axis of "how feasible are things" where we don't have very good existence proofs.

- can an AI convince humans to perform illegal actions

- can an AI write secure software to prevent a counter coup

- etc.

These are all much much weaker than anything involving nanotechnology or other "indistinguishable from magic" scenarios.

And of course Meta makes everything worse. There was a presentation at Black Hat or DEF CON by one of their security guys about how it's easier to go after attackers than to close security holes. In this way they contribute to making the world more vulnerable. I'm having trouble finding it, though.

↑ comment by mukashi (adrian-arellano-davin) · 2023-05-26T12:21:44.471Z

This would clearly put my point in a different place from the doomers.

comment by faul_sname · 2023-05-27T00:37:55.949Z

Along the theme of "there should be more axes", I think one additional axis is "how path-dependent do you think final world states are". The negative side of this axis is "you can best model a system by figuring out where the stable equilibria are, and working backwards from there". The positive side of this axis is "you can best model a system as having a current state and some forces pushing that state in a direction, and extrapolating forwards from there".

If we define the axes as "tractable" / "possible" / "path-dependent", and work through each octant one by one, we get the following worldviews:

-1/-1/-1: Economic progress cannot continue forever, but even if population growth is slowing now, the sub-populations that are growing will eventually become the majority, so population growth will continue until we hit the actual carrying capacity of the planet. Malthus was right, he was just early.

-1/-1/+1: Currently, the economic and societal forces in the world are pushing for people to become wealthier and more educated, all while population growth slows. As always there are bubbles and fads -- we had savings and loan, then the dotcom bubble, then the real estate bubble, then crypto, and now AI, and there will be more such fads, but none of them will really change much. The future will look like the present, but with more old people.

-1/+1/-1: The amount of effort to find further advances scales exponentially, but the benefit of those advances scales linearly. This pattern has happened over and over, so we shouldn't expect this time to be different. Technology will continue to improve, but those improvements will be harder and harder won. Nothing in the laws of physics prevents Dyson spheres, but our tech level is on track to reach diminishing returns far, far before that point. Also, by Laplace, we shouldn't expect humanity to last more than a couple million more years.

-1/+1/+1: Something like a Dyson sphere is a large and risky project which would require worldwide buy-in. The trend now is, instead, for more and more decisions to be made by committee, and the number of parties with veto power will increase over time. We will not get Dyson spheres because they would ruin the character of the neighborhood.

In the meantime, we can't even get global buy-in for the project of "let's not cook ourselves with global warming". This is unlikely to change, so we are probably going to eventually end up with civilizational collapse due to something dumb like climate change or a pandemic, not a weird sci-fi disaster like a rogue superintelligence or gray goo.

+1/-1/-1: I have no idea what it would mean for things to be feasible but not physically possible. Maybe "simulation hypothesis"?

+1/-1/+1: Still have no idea what it means for something impossible to be feasible. "We all lose touch with reality and spend our time in video games, Ready Player One style"?

+1/+1/-1: Physics says that Dyson spheres are possible. The math says they're feasible if you cover the surface of a planet with solar panels and use the power generated to disassemble the planet into more solar panels, which can be used to disassemble the planet even faster. Given that, the current state of the solar system is unstable. Eventually, something is going to come along and turn Mercury into a Dyson sphere. Unless that something is very well aligned with humans, that will not end well for humans. (FOOM)

+1/+1/+1: Arms races have led to the majority of improvements in the past. For example, humans are as smart as they are because a chimp having a larger brain let it predict other chimps better, and thus work better with allies and out-reproduce its competitors. The wonders and conveniences of the modern world come mainly from either the side-effects of military research, or from companies competing to better obtain people's money. Even in AI, some of the most impressive results are things like StyleGAN (a generative adversarial network) and AlphaGo (a network trained by self-play, i.e. an arms race against itself). Extrapolate forward, and you end up with an increasingly competitive world. This also probably does not end well for humans (whimper).

I expect people aren't evenly distributed across this space. I think the FOOM debate is largely between +1/+1/-1 and +1/+1/+1 octants. Also I think you can find doomers in every octant (or at least every octant that has people in it, I'm still not sure what the +1/-1/* quadrant would even mean).
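Placing someone in one of the octants above amounts to taking the sign of their score on each axis. A minimal Python sketch, assuming (hypothetically) that each axis has already been reduced to a single signed number, e.g. by a quiz like the one the post proposes, and that a score of exactly zero counts as +1. The function names are illustrative only:

```python
from itertools import product

# Axis order follows the comment: tractable / possible / path-dependent.
AXES = ("tractable", "possible", "path-dependent")


def octant_label(tractable: float, possible: float, path_dependent: float) -> str:
    """Return a label like '+1/+1/-1'; zero is treated as +1 (an arbitrary tie-break)."""
    return "/".join("+1" if v >= 0 else "-1" for v in (tractable, possible, path_dependent))


def all_octants() -> list[str]:
    """All eight labels, in the same order the comment walks through them."""
    return ["/".join(signs) for signs in product(("-1", "+1"), repeat=len(AXES))]


def feasible_but_impossible(label: str) -> bool:
    """True for the +1/-1/* pair the comment finds hard to interpret."""
    tractable, possible, _ = label.split("/")
    return tractable == "+1" and possible == "-1"


if __name__ == "__main__":
    for label in all_octants():
        print(label, "(puzzling)" if feasible_but_impossible(label) else "")
```

Under this encoding, the FOOM debate the comment describes is a disagreement only in the last sign: `octant_label(0.7, 0.9, -0.2)` gives `"+1/+1/-1"`, and flipping the path-dependence score to positive gives `"+1/+1/+1"`.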

comment by mukashi (adrian-arellano-davin) · 2023-05-26T07:22:47.161Z

I would also place myself in the upper-right quadrant, close to the doomers, but I am not one of them.

The reason is that it is not very clear to me what exactly "tractable for a SI" means. I do think that nanotechnology/biotechnology can progress enormously with SI, but the problem is not only developing the required knowledge, but also creating the economic conditions to make these technologies possible: building the factories, making new machines, etc. For example, nowadays, in spite of the massive worldwide demand for microchips, there are very, very few factories (and for some specific technologies the number of factories is n=1). Will we get there eventually? Yes. But not at the speed that EY fears.

I think you summarised my position pretty well in this paragraph:

"I think another common view on LW is that many things are probably possible in principle, but would require potentially large amounts of time, data, resources, etc. to accomplish, which might make some tasks intractable, if not impossible, even for a superintelligence."

So I do think that EY believes in "magic" (even more after reading his tweet), but some people might not like the term and I understand that.

In my case, the word "magic" does not refer only to breaking the laws of physics. Magic might also describe someone who holds such a simplified model of the world that they think you can build, in a matter of days, all those factories, machines and working nanotechnology (on the first try), then successfully deploy them everywhere, killing everyone; that we will get to that point in a matter of days; AND that there won't be any other SI that could work to prevent those scenarios. I don't think I am misrepresenting EY's point of view here; correct me otherwise.

If someone believed that a good group of engineers working for one week on a spacecraft model could successfully land it, 30 years later, on an asteroid close to Proxima Centauri, would you call it magical thinking? I would. There is nothing beyond the realm of physics here! But it assumes so many things and it is so stupidly optimistic that I would simply dismiss it as nonsense.

↑ comment by Viliam · 2023-05-26T08:53:03.657Z

A historical analogy could be the invention of the computer by Charles Babbage, who couldn't build a working prototype because the technology of his era did not allow the precision necessary for the components.

The superintelligence could build its own factories, but that would require more time, more action in real world that people might notice, the factory might require some unusual components or raw materials in unusual quantities; some components might even require their own specialized factory, etc.

I wonder, if humanity ever gets to the "can make simulations of our ancestors" phase, whether it will be a popular hobby to do "speedruns" of technological explosion. Like, in the simulation you start as a certain historical character, and your goal is to bring about the Singularity or land on Proxima Centauri as soon as possible. You have access to all the technological knowledge of the future (e.g. if you close your eyes, you can read Wikipedia as of the year 2500), but you need to build everything using the resources available in the simulation.

↑ comment by lc · 2023-05-26T16:09:46.407Z

> The superintelligence could build its own factories, but that would require more time, more action in real world that people might notice, the factory might require some unusual components or raw materials in unusual quantities; some components might even require their own specialized factory, etc.

People who consider this a serious difficulty are living on a way more competent planet than mine. Even if RearAdmiralAI needed to build new factories or procure exotic materials to defeat humans in a martial conflict, who do you expect to notice or raise the alarm? No monkeys are losing their status in this story until the very end.

↑ comment by mukashi (adrian-arellano-davin) · 2023-05-26T12:30:50.388Z

The Babbage example is the perfect one. Thank you, I will use it.

comment by JoeTheUser · 2023-05-26T23:17:30.547Z

I think the modeling dimension to add is "how much trial and error is needed". Just about any real world thing that isn't a computer program or simple, frictionless physical object, has some degree of unpredictability. This means using and manipulating it effectively requires a process of discovery - one can't just spit out a result based on a theory.

Could an SI spit out a recipe for a killer virus just from reading current literature? I doubt it. Could it construct such a thing given a sufficiently automated lab (and maybe humans to practice on)? That seems much more plausible.

↑ comment by Max H (Maxc) · 2023-05-26T23:39:57.085Z

> I think the modeling dimension to add is "how much trial and error is needed".

I tried to capture that in the tractability axis with "how much resources / time / data / experimentation / iteration..." in the second bullet point.

> Could an SI spit out a recipe for a killer virus just from reading current literature? I doubt it.

The genome for smallpox is publicly available, and people are no longer vaccinated against it. I think it's at least plausible that a SI could ingest that data, existing gain-of-function research, and tools like AlphaFold (perhaps improving on them using its own insights, creativity, and simulation capabilities) and then come up with something pretty deadly and vaccine-resistant without experimentation in a wet lab.

↑ comment by JoeTheUser · 2023-05-27T05:14:22.847Z

What I don't think "how much of the universe is tractable" by itself captures is "how much more effective would an SI be if it had the ability to interact with a smaller or larger part of the world, versus if it had to work out everything by theory". I think it's clear human beings are more effective given an ability to interact with the world. It doesn't seem that LLMs get that much more effective.

I think a lot of AI safety arguments assume an SI would be able to deal with problems in a completely tractable/purely-by-theory fashion. Often that is not needed for the argument and it seems implausible to those not believing in such a strongly tractable universe.

My personal intuition is that as one tries to deal with more complex systems effectively, one has to use more and more experimental/interaction-based approaches, regardless of one's intelligence. But I don't think that means you can't have a very effective SI following that approach. And whether this intuition is correct remains to be seen.

comment by shminux · 2023-05-26T04:53:18.723Z

Something is very very hard if we see no indication of it happening naturally. Thus FTL is very very hard, at least without doing something drastic to the universe as a whole... which is also very very hard. On the other hand,

> "hacking" the human brain, using only normal-range inputs (e.g. regular video, audio), possible, for various definitions of hacking and bounds on time and prior knowledge

is absolutely trivial. It happens to all of us all the time to various degrees, without us realizing it. Examples: falling in love, getting brainwashed, getting angry due to something we read... If anything, getting brainhacked is what keeps us from being bored to death. Someone who is smarter than you and understands how the human mind works can make you do almost anything, and probably has made you do stuff you didn't intend to do a few moments prior many times in your life. Odds are, you even regretted having done it, after the fact, but felt compelled to do it in the moment.

↑ comment by Max H (Maxc) · 2023-05-26T13:09:42.623Z

Not sure what you mean by "happening naturally". There are lots of inventions that are the result of human activity which we don't observe anywhere else in the universe - an internal combustion engine or a silicon CPU do not occur naturally, for example. But inventing these doesn't seem very hard in an absolute sense.

> It happens to all of us all the time to various degrees, without us realizing it.

Yes, and I think that puts certain kinds of brain hacking squarely in the "possible" column. The question is then how tractable, and to what degree is it possible to control this process, and under what conditions. Is it possible (even in principle, for a superintelligence) to brainwash a randomly chosen human just by making them watch a short video? How short?

comment by Boris Kashirin (boris-kashirin) · 2023-05-27T01:15:52.064Z

Not long ago I often heard that AI is "beyond the horizon". I agreed with that while recognising how close the horizon had become. The technological horizon isn't a fixed time into the future, and it isn't just a property of (~unchanging) people but also of available tech.

Now I hear "it takes a lot of time and effort", but again it does not have to mean "a lot of time and effort in my subjective view". It can take a lot of time and effort and at the same time be done in the blink of an eye: my eye, not the eye of whoever is doing it. A lot of time and effort doesn't have to subjectively feel like "a lot of time and effort" to me.