Open Thread Feb 22 - Feb 28, 2016

post by Elo · 2016-02-21T21:14:17.216Z · LW · GW · Legacy · 229 comments

If it's worth saying, but not worth its own post (even in Discussion), then it goes here.

Notes for future OT posters:

1. Please add the 'open_thread' tag.

2. Check if there is an active Open Thread before posting a new one. (Immediately before; refresh the list-of-threads page before posting.)

3. Open Threads should be posted in Discussion, and not Main.

4. Open Threads should start on Monday, and end on Sunday.

229 comments

Comments sorted by top scores.

comment by Houshalter · 2016-02-22T14:57:55.716Z · LW(p) · GW(p)

I found this paper which is interesting. But at the start he tells an interesting anecdote about existential risk:

Replies from: Viliam

The first occurred at Los Alamos during WWII when we were designing atomic bombs. Shortly before the first field test (you realize that no small scale experiment can be done -- either you have the critical mass or you do not), a man asked me to check some arithmetic he had done, and I agreed, thinking to fob it off on some subordinate. When I asked what it was, he said, "it is the probability that the test bomb will ignite the whole atmosphere," I decided I would check it myself! The next day when he came for the answers I remarked to him, "The arithmetic was apparently correct but I do not know about the formulas for the capture cross sections for oxygen and nitrogen -- after all, there could be no experiments at the needed energy levels." He replied, like a physicist talking to a mathematician, that he wanted me to check the arithmetic not the physics, and left. I said to myself, "what have you done, Hamming, you are involved in risking all life that is known in the Universe, and you do not know much of an essential part?" I was pacing up and down the corridor when a friend asked me what was bothering me. I told him. His reply was, "Never mind, Hamming, no one will ever blame you." Yes, we risked all the life we knew of in the known universe on some mathematics. Mathematics is not merely an idle art form, it is an essential part of our society.

↑ comment by Viliam · 2016-02-22T19:40:23.208Z · LW(p) · GW(p)

His reply was, "Never mind, Hamming, no one will ever blame you."

This is a good example of optimizing for the wrong goal.

Replies from: Benito↑ comment by Ben Pace (Benito) · 2016-02-28T00:41:45.104Z · LW(p) · GW(p)

+1

comment by Lumifer · 2016-02-22T20:42:37.964Z · LW(p) · GW(p)

LW might find that interesting:

Replies from: indexador2, MrMind, Furcas

I'm becoming a Christian, not just one who occasionally went to church as a kid, but a real one that believes in Christ, loving God with all my heart, etc.

Most ex-atheists who become deists turn to Buddhism, so I thought I'd be clear why they are all wrong (Robert Wright!). I'd like to thank Mencius Moldbug, Deirdre McCloskey, Mike Behe, Tim Keller (four names probably never listed in sequence ever), and hundreds more... Below are snippets (top and bottom) from my Christian apology: I came to Christ via rational inference, not a personal crisis.

↑ comment by indexador2 · 2016-02-22T20:55:07.484Z · LW(p) · GW(p)

If evolution is untrue, it changes everything.

Just by reading this phrase, I can conclude that everything else is probably useless.

Replies from: Viliam, username2, Transfuturist, ChristianKl↑ comment by Viliam · 2016-02-23T08:58:43.295Z · LW(p) · GW(p)

Here is a shortened version:

Darwin’s grandfather believed in something similar to abiogenesis. Later in Darwin’s life, scientists found something that appeared to be the first proto-cell, but later they found a progenitor to this in oceanic mud. Darwin believed that the discovery of the first life form would occur soon, but it didn't happen. Organisms that reproduce, metabolize energy, and create a cell wall, require at least a hundred proteins, each of which has approximately 300 amino acids, and all need to be able to work with each other.

To reach this level of sophistication via chemical evolution defies explanation. The experiments in 1953 created some of the amino acids found in all life forms, but this is a far cry from creating proteins. The origin of life is one of those puzzles that has been right around the corner, for the past two centuries. Imagining something is not a scientific argument, but simply speculation. Many present all evolution as similar to how wolves changed to sheepdogs, or the way in which bacteria develop resistance to penicillin, but such change will not create radically new protein complexes or new species.

If evolution is untrue, it changes everything. After I accepted that a creator exists, I found myself attending church, engaging in Bible study, and reading Christian authors. Various facts all began to make much more sense.

Replies from: MrMind, efalken, Jiro, Houshalter, ChristianKl↑ comment by MrMind · 2016-02-23T13:48:41.130Z · LW(p) · GW(p)

The experiments in 1953 created some of the amino acids found in all life forms, but this is a far cry from creating proteins.

But, apparently, it's not a far cry from a supernatural person to create all universe(s).

My two cents, extrapolating from this and other converts (e.g. UnequallyYoked, which I still follow): there's a certain tendency of the brain to want to believe in the supernatural. In those people who have this urge at a stronger level, but who come in contact with rationality, a sort of cognitive conflict is formed, like an addict trying to fight the urge to use drugs.

As soon as a hole in rationality is perceived, this gives the brain the excuse to switch to a preferred mode of thinking, whether the hole is real or not, and whether there exist more probable alternatives.

Admittedly, this is just a "neuronification" of a psychological phenomenon, and it does lower believers' status by comparing them to drug addicts...

↑ comment by efalken · 2016-02-23T15:33:19.325Z · LW(p) · GW(p)

I'm a big Eliezer fan, and like reading this blog on occasion. I consider myself rational, Dunning-Kruger effect notwithstanding (ie, I'm too dumb or biased to know I'm not dumb or biased, trapped!). In any case, I think the above is pretty good, but I would stress the ID portion of my paper, which is in the PDF not the post, is that the evolutionary mechanism as observed empirically scales O(2^n), not O(n), generally, where n is the number of mutations needed to create a new function. Someday we may see evolution that scales, at which point I will change my mind, but thus far, I think Behe is correct in his 'edge of evolution' argument (eg, certain things, like anti-freeze in fish, are evolutionarily possible, others, like creating a flagellum, are not). As per the Christianity part, the emphasis on the will over reason gives a sustainable, evolutionarily stable 'why' to habits of character and thought that are salubrious, stoicism with real inspiration. Christianity also is the foundation for individualism and bourgeois morality that has generated flourishing societies, so, it works personally and for society.

My younger self disagreed with my current self, so I can empathize and respect those who find my reasoning unconvincing, but I don't think it's useful in figuring things out to simply attribute my belief to bias or insecurity.

Replies from: MrMind, ChristianKl, Gunnar_Zarncke↑ comment by MrMind · 2016-02-24T08:33:41.847Z · LW(p) · GW(p)

Someday we may see evolution that scales, at which point I will change my mind, but thus far, I think Behe is correct in his 'edge of evolution' argument

This is the part I cannot wrap my mind around: let's say that evolution, as it's presently understood, cannot explain the totality or even the birth of the life evolved on this planet. How can one jump from "not explained by present understanding of evolution" to "explained by a deity"? I mean, why is the probability of the supernatural so low compared to, say, intelligent alien intervention, panspermia or, say, a passing black hole that happens to create a violation of the laws of biochemistry?

As per the Christianity part, the emphasis on the will over reason gives a sustainable, evolutionarily stable 'why' to habits of character and thought that are salubrious, stoicism with real inspiration.

Have I understood correctly that, once it is established for whatever reason that a deity exists, you choose which deity exactly based on your historical and moral preferences?

Replies from: entirelyuseless↑ comment by entirelyuseless · 2016-02-24T14:28:46.704Z · LW(p) · GW(p)

It is a serious mistake to assume that because something could happen by natural laws, it is automatically more probable than something which would be a violation of natural laws.

For example, suppose I flipped a coin 10,000 times in a row and always got heads. In theory there are many possible explanations for this. But suppose by careful investigation we had reduced it to two possibilities:

- It was a fair coin, and this happened by pure luck.

- God made it happen through a miraculous intervention.

Number 1 could theoretically happen by natural laws, number 2 could not. But number 2 is more probable anyway.

The same thing might well be true about explanations such as "a passing black hole that happens to create a violation of the laws of biochemistry." I see no reason to think that such things are more probable than the supernatural.

(That said, I agree that Eric is mistaken about this.)

Replies from: MrMind↑ comment by MrMind · 2016-02-25T09:02:48.526Z · LW(p) · GW(p)

But suppose by careful investigation we had reduced it to two possibilities:

Just to be clear, this is obviously not what is happening with Eric. But let's run with the scenario:

Number 1 could theoretically happen by natural laws, number 2 could not. But number 2 is more probable anyway.

I would contend that this is not the case. If you think that n° 2 is more probable, I would say that just measures that the probability you assign to the supernatural is higher than 2^-10000 (besides, this is exactly Jaynes' suggested way to numerically estimate intuitive probabilities).

But your probability is just a prior: while n° 1 is justifiable by appealing to group invariance or symmetric ignorance, n° 2 just pops out of nowhere.

It certainly feels that n° 2 should be more probable, but the wrong answer also feels right in the Wason selection task.

This is what I was asking Eric: by what process were you able to eliminate every other possible explanation, so that the supernatural is the only remaining one?

I suspect also that, in your hypothetical scenario, this would be the same process hidden in the sentence "by careful investigation".
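The threshold in this coin-flip exchange can be sketched numerically. The snippet below is a minimal illustration, not something either commenter wrote. It assumes that only the two hypotheses from the scenario exist, that the fair coin produces n heads in a row with probability 2^-n, and that the intervention hypothesis predicts that outcome with probability 1. It works in log2 space because 2^-10000 is far too small for ordinary floating point. The function names are hypothetical.

```python
def log2_posterior_odds(n_heads: int, log2_prior_odds: float) -> float:
    """Log2 posterior odds of 'intervention' versus 'fair coin, pure luck'.

    Assumptions (ours, for illustration): only these two hypotheses exist,
    the fair coin gives n heads in a row with probability 2**-n, and the
    intervention hypothesis gives that outcome with probability 1.
    """
    # The likelihood ratio is 1 / 2**-n = 2**n, which is +n in log2 space.
    log2_likelihood_ratio = float(n_heads)
    return log2_prior_odds + log2_likelihood_ratio


def favors_intervention(n_heads: int, log2_prior_odds: float) -> bool:
    """True when the posterior odds exceed 1, i.e. the log2 odds are above 0."""
    return log2_posterior_odds(n_heads, log2_prior_odds) > 0.0


if __name__ == "__main__":
    n = 10_000
    # Three illustrative priors: above, at, and below the 2**-n threshold.
    for log2_prior in (-9_000.0, -10_000.0, -11_000.0):
        verdict = favors_intervention(n, log2_prior)
        print(f"log2 prior odds {log2_prior}: favors intervention = {verdict}")
```

Under these assumptions the intervention hypothesis comes out ahead only if its prior odds exceed 2^-10000, which is the threshold the exchange turns on. The code says nothing about whether a prior of that size can be justified, which is the actual point of disagreement.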

↑ comment by ChristianKl · 2016-02-23T17:06:28.928Z · LW(p) · GW(p)

eg, certain things, like anti-freeze in fish, are evolutionarily possible, others, like creating a flagellum, are not

The evolution of the flagellum works because proteins used in it are useful in other context:

Replies from: efalken

The best studied flagellum, of the E. coli bacterium, contains around 40 different kinds of proteins. Only 23 of these proteins, however, are common to all the other bacterial flagella studied so far. Either a “designer” created thousands of variants on the flagellum or, contrary to creationist claims, it is possible to make considerable changes to the machinery without mucking it up.

What’s more, of these 23 proteins, it turns out that just two are unique to flagella. The others all closely resemble proteins that carry out other functions in the cell. This means that the vast majority of the components needed to make a flagellum might already have been present in bacteria before this structure appeared.

↑ comment by efalken · 2016-02-23T17:32:47.304Z · LW(p) · GW(p)

Of the millions of proteins on the planet it is unremarkable most exist elsewhere, just as it's likely most parts in a car can be found in other machines. Further, these aren't identical proteins, merely 'homologous' ones, where there's a stretch of, say, a 70% match over 40% of the protein, so that makes this finding not surprising (lug nut in engine A like fastener in engine B). A Type 3 Secretory System has about 1/3 of the proteins in a flagellum (depends which T3SS, which flagellum), but to get from one to the other needs probably ten thousand new nucleotides in a specific constellation, and nothing close to that kind of change has been observed in the lab with fruit flies or E. coli. So, it's possible, but still improbable, like turning one of my programs into another via random change and selection, there are many similarities in all my programs, but it just would take too long. Possible is not probable, and unlike cosmological improbabilities, there's no anthropic principle to save this. Pointing out homologs still leaves the problem of traversing a highly spiked fitness landscape, but if this is ever demonstrated on say, a new protein complex in E. coli, I'd say, you win (but more complex than moving a gene closer to a promoter region as in the citT).

Replies from: ChristianKl↑ comment by ChristianKl · 2016-02-23T17:59:07.317Z · LW(p) · GW(p)

Any present version of a protein that evolved >1,000,000,000 years ago is only homologous and not identical to its predecessor.

just would take too long

A billion years does happen to be really long, especially if you have very many tiny spots of life all around the planet that evolve on their own.

What works in a lab in a few years is radically different than what works in billions of billions of parallel experiments done for billions of years.

Pointing out homologs still leaves the problem of traversing a highly spiked fitness landscape, but if this is ever demonstrated on say, a new protein complex in E. coli, I'd say, you win

How do you judge something to be a new protein complex? Bacteria pass their plasmids around.

E. coli likely hasn't gained radically new protein complexes in the last millions of years, so anything it does presently is highly optimized and proteins are only homologous to their original functions.

I think you are more likely to find new things in bacteria that actually adapt to radically new environments.

Replies from: efalken↑ comment by efalken · 2016-02-24T01:21:57.946Z · LW(p) · GW(p)

The years thing seems to make everything probable, because we have basically 600 MM years of evolution from something simple to everything today, and that's a lot of time. But it is not infinite. When we look at what evolution actually accomplishes in 10k generations, it is basically a handful of point mutations, frameshifts, and transpositions. Consider humans have 50MM new functioning nucleotides developed over 6 million years from our 'common ape' ancestor: where are the new unique functioning nucleotides (say, 1000) in the various human haplogroups? Evolution in humans seems to have stopped. Dawkins has said given enough time 'anything' can happen. True, but in finite time a lot less happens.

They've been looking at E. coli for 64,000+ generations. That's where we should see something, and instead all we get is turning a gene that is sometimes on, to always on (citT), via a mutation that put it near a different promoter gene. That's kinda cool, and I admit there's some evolution, but it seems to have limits.

But, thanks for the respectful tone. I think it's important to remember that people who disagree with you can be neither stupid nor disingenuous (there's a flaw in the Milgrom-Stokey no-trade theorem, and I think it's related to the 'Fact-Free Learning' paper of Aragones et al.)

Replies from: ChristianKl, Kaj_Sotala, Viliam↑ comment by ChristianKl · 2016-02-24T09:20:13.545Z · LW(p) · GW(p)

They've been looking at E. coli for 64,000+ generations. That's where we should see something

There's your flaw in reasoning. 64,000 is relatively tiny. But more importantly, bacteria today are highly optimized, while bacteria 2 billion years ago, when the flagellum evolved, weren't. I would expect more innovation back then.

One example of that optimization is that humans carry around a lot of pseudogenes. Those are sequences that were genes and stopped being genes when a few mutations happened.

Carrying those sequences around is good for innovation as far as producing new proteins that serve new functions.

The strong evolutionary pressure that exists on E. coli today results in E. coli not carrying around a lot of pseudogenes. Generally, being near strong local maxima also reduces innovation.

If you want to look at new bacteria with radical innovations, look at the ones in Three Mile Island.

Consider humans have 50MM new functioning nucleotides developed over 6 million years from our 'common ape' ancestor: where are the new unique functioning nucleotides (say, 1000) in the various human haplogroups? Evolution in humans seems to have stopped.

No, evolution in humans hasn't stopped.

It is strong enough that natives' skin color strongly correlates to their local sunlight patterns. We don't only have black native people at the equator in Africa but also in South America. Vitamin D3 seems to be important enough to exert enough evolutionary pressure.

In areas with high malaria density in West Africa, 25% have the sickle cell trait. It has much lower prevalence in Western Europe, where there's less malaria.

Western Europe has much higher rates of lactose tolerance than other human populations.

Those are the examples I can bring off the top of my head. There are likely other differences. Due to the current academic climate the reasons for the genetic differences between different human haplogroups happen to be underresearched. I would predict that this changes in the next ten years but you might have to read the relevant papers in Chinese ;)

↑ comment by Kaj_Sotala · 2016-02-26T17:16:59.447Z · LW(p) · GW(p)

Evolution in humans seems to have stopped.

The 10,000 Year Explosion disagrees; to quote my own earlier summary of it:

Replies from: efalken

60,000 years ago, there were something like a quarter of a million modern humans. 3,000 years ago, thanks to the higher food yields allowed by agriculture, there were 60 million humans. A larger population means there's more genetic variance: mutations that had previously occurred every 10,000 years or so were now showing up every 400 years. The changed living conditions also began to select for different genes. A "gene sweep" is a process where beneficial alleles increase in frequency, "sweeping through" the population until everyone has them. Hundreds of these are still ongoing today. For European and Chinese samples, the sweeps' rate of origination peaked at about 5,000 years ago and at 8,500 years ago for one African sample. While the full functions of these alleles are still not known, it is known that most involve changes in metabolism and digestion, defenses against infectious disease, reproduction, DNA repair, or in the central nervous system.

The development of agriculture led, among other things, to a different mix of foods, frequently less healthy than the one enjoyed by hunter-gatherers. For instance, vitamin D was poorly available in the new diet. However, it is also created by ultraviolet radiation from the sun interacting with our skin. After the development of agriculture, several new mutations showed up that led to people in the areas more distant from the equator having lighter skins. There is also evidence of genes that reduce the negative effects associated with e.g. carbohydrates and alcohol. Today, people descending from populations that haven't farmed as long, like Australian Aborigines and many Amerindians, have a distinctive track record of health problems when exposed to Western diets. DNA retrieved from skeletons indicates that 7,000 to 8,000 years ago, no-one in central and northern Europe had the gene for lactose tolerance. 3,000 years ago, about 25 percent of people in central Europe had it. Today, about 80 percent of the central and northern European population carries the gene. [...]

People in certain areas have more mutations giving them a resistance to malaria than people in others. The human skeleton has become more lightly built, more so in some populations. Skull volume has decreased apparently in all populations: in Europeans it is down 10 percent from the high point about 20,000 years ago. For some reason, Europeans also have a lot of variety in eye and hair color, whereas most of the rest of the world has dark eyes and dark hair, implying some Europe-specific selective pressure that happened to also affect those.

As for cognitive changes: there are new versions of neurotransmitter receptors and transporters. Several of the alleles have effects on serotonin. There are new, mostly regional, versions of genes that affect brain development: axon growth, synapse formation, formation of the layers of the cerebral cortex, and overall brain growth. Evidence from genes affecting both brain development and muscular strength, as well as our knowledge of the fact that humans in 100,000 BC had stronger muscles than we have today, suggests that we may have traded off muscle strength for higher intelligence. There are also new versions of genes affecting the inner ear, implying that our hearing may still be adapting to the development of language - or that specific human populations might even be adapting to characteristics of their local languages or language families.

↑ comment by efalken · 2016-02-29T00:45:32.149Z · LW(p) · GW(p)

Allele variation that generates different heights or melanin within various races, point mutations like sickle cell, the mutations that generate lactose tolerance in adults, or that affect our ability to process alcohol, are micro-evolution. They do not extrapolate to new tissues and proteins that define different species. I accept that polar bears descended from a brown bear, that the short-limb, heat-conserving body of an Eskimo was the result of the standard evolutionary scenario. I have no reason to doubt the Earth existed for billions of years.

Humans have hundreds of orphan genes unique among mammals. To say this is just an extension of micro-evolution relies on the possibility it could happen, but you need 50MM new nucleotides that work to arise within 500k generations. Genetic drift could generate that many mutations, but the chance these would be functional assumes proteins are extremely promiscuous. When you look at what it takes to make a functioning protein within the state-space of all amino acid sequences, and how proteins work in concert with promoter genes, RNA editing, and connecting to other proteins, the probability this happened via mutation and selection is like a monkey typing a couple pages of Shakespeare: possible, but not probable.

This all argues for a Creator, who could be an alien, or an adolescent Sim City programmer in a different dimension, or a really smart and powerful guy that looks like Charlton Heston. The argument for a Christian God relies on issues outside of argument by design.

Replies from: gjm, gjm, entirelyuseless↑ comment by gjm · 2016-02-29T14:34:17.742Z · LW(p) · GW(p)

micro-evolution. They do not extrapolate to new tissues and proteins that define different species.

You claimed that evolution in humans seems to have stopped. Kaj_Sotala gave you evidence that it hasn't. Of course the examples he gave were of "micro-evolution"; what else would you expect when the question is about what's happened in recent evolution, within a particular species?

↑ comment by gjm · 2016-02-29T14:43:49.455Z · LW(p) · GW(p)

Humans have hundreds of orphan genes unique among mammals.

There's some reason to think that most human "orphan genes" are actually just, so to speak, random noise. Do you have good evidence for hundreds of actually useful orphan genes?

↑ comment by entirelyuseless · 2016-02-29T02:05:05.468Z · LW(p) · GW(p)

I'm curious what you think the earth looked like during those billions of years. Scientists have pretty concrete ideas of what things were like over time: where the continents were, which species existed at which times, and so on. Do you think they are right about these things, or is it all just guesswork?

When I was younger I thought that evolution was false, but I started to change my mind once I started to think about that kind of concrete question. If the dating methods are generally accurate (and I am very sure that they are), it follows that most of that scientific picture is going to be true.

This wouldn't be inconsistent with the kind of design that you are talking about, but it strongly suggests that if you had watched the world from an external, large scale, point of view, it would look pretty much like evolution, even if on a micro level God was inserting genes etc.

↑ comment by Viliam · 2016-02-24T08:48:40.449Z · LW(p) · GW(p)

Evolution in humans seems to have stopped.

White skin, blue eyes, lactose digestion in adulthood... (some people say even consciousness)... are relatively recent adaptations.

What did you expect, tentacles? ;)

↑ comment by Gunnar_Zarncke · 2016-02-24T21:23:48.191Z · LW(p) · GW(p)

The question is: Would somebody who builds his argument on one more missing step reverse his stance when that one more step is also found, or would he just point out the next currently missing bit?

↑ comment by Jiro · 2016-02-23T15:37:00.826Z · LW(p) · GW(p)

I think he's equivocating on "if evolution is untrue, it changes everything". That statement is literally true in the same sense that "if I'm a brain in a jar, it changes everything" or "if the world was created by Zeus, it changes everything" are true. But that's not what he's using it to mean.

Replies from: Viliam↑ comment by Viliam · 2016-02-24T08:38:27.312Z · LW(p) · GW(p)

Of course; "it changes everything" doesn't mean "I can stop using logic and just take my preferred fairy tale". There are many possible changes.

Also by "evolution" he means "creating a life from non-life, in the lab, today", because that's the part he is unsatisfied with.

So, more or less: "if you cannot show me how to create life from non-life, then Santa must be real".

↑ comment by Houshalter · 2016-02-24T14:11:59.658Z · LW(p) · GW(p)

Here is a great video that explains how abiogenesis happened.

Here is another great video on the evolution of the flagellum.

All of his videos are fantastic. And there is a great deal more stuff like that on youtube if you search around. It's really inexcusable for an intelligent person to doubt evolution these days. The evidence is vast and overwhelming.

Replies from: gjm↑ comment by gjm · 2016-02-24T14:33:08.776Z · LW(p) · GW(p)

I'm not sure Eric is denying common descent (the subject of your last link). My impression is that he's some sort of theistic evolutionist, is happy with the idea that all today's life on earth is descended from a common ancestor[1] but thinks that where the common ancestor came from, and how it was able to give rise to the living things we see today given "only" a few billion years and "only" the size of the earth's biosphere, are questions with no good naturalistic answer, and that God is the answer to both.

[1] Or something very similar; perhaps there are scenarios with a lot of "horizontal transfer" near the beginning, in which the question "one common ancestor or several?" might not even have a clear meaning.

[EDITED because I wrote "Erik" instead of "Eric"; my brain was probably misled by the "k" in the surname. Sorry, Eric.]

Replies from: Jiro, Houshalter↑ comment by Jiro · 2016-02-24T21:57:10.907Z · LW(p) · GW(p)

I'm not sure Eric is denying common descent

Well, he says:

Many present all evolution as similar to how wolves changed to sheepdogs, or the way in which bacteria develop resistance to penicillin, but such change will not create radically new protein complexes or new species.

If he doesn't believe that species can become other species, he can't believe in common descent (unless he believes that the changes in species happen when scientists say they happen, but he attributes this to God).

Replies from: gjm↑ comment by gjm · 2016-02-25T00:11:53.172Z · LW(p) · GW(p)

unless he believes that the changes in species happen when scientists say they happen, but he attributes this to God

This is approximately what many Christians believe. (The idea being that the broad contours of the history of life on earth are the way the scientific consensus says, but that various genetic novelties were introduced as a result of divine guidance of some kind.)

I'm not sure whether this is Eric's position. He denies being a young-earth creationist, but he does also make at least one argument against "universal common descent". Eric, if you're reading this, would you care to say a bit more about what you think did happen in the history of life on earth? What did the scientists get more or less right and what did they get terribly wrong?

↑ comment by Houshalter · 2016-02-24T17:41:43.547Z · LW(p) · GW(p)

Only the last link is about common descent. And it isn't agnostic on theistic evolution; there's a whole section on experiments for testing evolution through Random Mutation and Natural Selection. The first link covers abiogenesis, and the second the evolution of complicated structures like the flagellum.

I don't think theistic evolution is that much more rational than standard creationism. It's like someone realized the evidence for evolution was overwhelming, but was unable to completely update their beliefs.

Replies from: gjm↑ comment by gjm · 2016-02-24T17:49:21.387Z · LW(p) · GW(p)

Only the last link is about common descent.

That would be why I called it "the subject of your last link" rather than, say, "the subject of all your links".

there's a whole section on experiments for testing evolution through Random Mutation and Natural Selection.

I do not think anything on that page says very much about whether the evolution of life on earth (including in particular human life) has benefited from occasional tinkering by a god or gods. (For the avoidance of doubt: I am very confident it hasn't.)

I don't think theistic evolution is that much more rational than standard creationism.

I think it's quite a bit better -- the inconsistencies with other things we have excellent evidence for are subtler -- but that wasn't my point. I was just trying to avoid arguments with strawmen. If Eric accepts common descent, there is little point directing him to a page listing evidence for common descent as if that refutes his position.

↑ comment by ChristianKl · 2016-02-23T11:25:38.820Z · LW(p) · GW(p)

Organisms that reproduce, metabolize energy, and create a cell wall, require at least a hundred proteins, each of which has approximately 300 amino acids, and all need to be able to work with each other.

In reality of course they don't need any proteins and it's quite possible that the first cells were simply RNA based.

↑ comment by Transfuturist · 2016-02-22T23:42:03.027Z · LW(p) · GW(p)

The equivocation of 'created' in those four points is enough to ignore it entirely.

↑ comment by ChristianKl · 2016-02-22T23:16:59.641Z · LW(p) · GW(p)

It does happen to be a bit frightening to see an economics PhD doubt evolution. I think it would be good if someone like Scott Alexander writes a basic "here's why evolution is true"-post.

Replies from: None, Artaxerxes, Viliam↑ comment by [deleted] · 2016-02-22T23:28:39.382Z · LW(p) · GW(p)

I think it would be good if someone like Scott Alexander writes a basic "here's why evolution is true"-post.

I don't think such a thing is possible. There are too many bad objections to evolution floating around in the environment.

Replies from: ChristianKl↑ comment by ChristianKl · 2016-02-23T11:02:22.770Z · LW(p) · GW(p)

The goal doesn't have to be to address every bad objection. Addressing objections strong enough to convince an economics PhD and at the same time providing the positive reasons that make us believe in evolution would be valuable.

↑ comment by Artaxerxes · 2016-02-23T06:16:26.825Z · LW(p) · GW(p)

Dawkins' Greatest Show on Earth is pretty comprehensive. The shorter the work as compared to that, the more you risk missing widely held misconceptions people have.

↑ comment by Viliam · 2016-02-23T09:02:09.846Z · LW(p) · GW(p)

It does happen to be a bit frightening to see an economics PhD doubt evolution.

I wouldn't expect an economics PhD to give people better insights into biology. (Only indirectly, as a PhD in economics is a signal of high IQ.) A biology PhD would be more scary.

Replies from: ChristianKl, username2↑ comment by ChristianKl · 2016-02-23T11:22:43.918Z · LW(p) · GW(p)

An economics PhD should understand that markets with decentralized decision making often beat intelligent design.

Replies from: James_Miller, None↑ comment by James_Miller · 2016-02-23T15:26:58.175Z · LW(p) · GW(p)

As an econ PhD, I'm theoretically amazed that multicellular organisms could overcome all of the prisoners' dilemma type situations they must face. You don't get large corporations without some kind of state, so why does decentralized evolution allow for people-states? I've also wondered, given how much faster bacteria and viruses evolve compared to multicellular organisms, why are not the viruses and bacteria winning by taking all of the free energy in people? Yes, I understand some are in a symbiotic relationship with us, but shouldn't competition among microorganisms cause us to get nothing? If one type of firm innovated much faster than another type, the second type would be outcompeted in the marketplace. (I do believe in evolution, of course, in the same way I accept relativity is correct even though I don't understand the theory behind relativity.)

Replies from: ChristianKl, Lumifer↑ comment by ChristianKl · 2016-02-23T17:20:55.802Z · LW(p) · GW(p)

You don't get large corporations without some kind of state, so why does decentralized evolution allow for people-states?

In the absence of any state holding the monopoly of power, a large corporation automatically grows into a de facto state, as the British East India Company did in India. Big mafia organisations spring up even when the state doesn't want them to exist. The same is true for various terrorist groups.

From here I could argue that the economics establishment seems to fail at its job when it fails to understand how cooperation can in fact arise, but I think there is good work on cooperation, such as that of Sveriges Riksbank Prize winner Elinor Ostrom.

If I understand her right, then the important thing for solving tragedy-of-the-commons issues isn't centralized decision making but good local decision making by people on the ground.

Replies from: James_Miller, bogus↑ comment by James_Miller · 2016-02-24T03:04:03.601Z · LW(p) · GW(p)

The British East India company and the mafia were/are able to use the threat of force to protect their property rights. Tragedy of the commons problems get much harder to solve the more people there are who can defect. I have a limited understanding of mathematical models of evolution, but it feels like the ways that people escape Moloch would not work for billions of competing microorganisms. I can see why studying economics would cause someone to be skeptical of evolution.

Replies from: ChristianKl↑ comment by ChristianKl · 2016-02-24T09:59:27.251Z · LW(p) · GW(p)

Microorganisms can make collective decisions via quorum sensing. Shared DNA works as a commitment device.

I can see why studying economics would cause someone to be skeptical of evolution.

Interesting. Given that your field seems to be about understanding game theory and exactly how to escape Moloch, have you thought about looking deeper into the subject to see whether the microorganisms do something that is useful on a wider scale and could advance economists' understanding of cooperation?

Beliefs have to pay rent ;)

Replies from: James_Miller↑ comment by James_Miller · 2016-02-24T17:17:01.958Z · LW(p) · GW(p)

have you thought about looking deeper into the subject to see whether the microorganisms do something that is useful on a wider scale and could advance economists' understanding of cooperation?

I have thought about studying in more depth the math of evolutionary biology.

↑ comment by bogus · 2016-02-24T01:12:52.514Z · LW(p) · GW(p)

In the absence of any state holding the monopoly of power, a large corporation automatically grows into a de facto state, as the British East India Company did in India.

The British East India Company was a state-supported group, so it doesn't count. But you're right that in most cases there is a winner-take-all dynamic to coercive power, so we're going to find a monopoly of force and a de-facto state. This is not inevitable though; for instance, forager tribes in general manage to do without, as did some historical stateless societies, e.g. in medieval Iceland. Loose federation of well-defended city states is an intermediate possibility that's quite well attested historically.

Replies from: ChristianKl↑ comment by ChristianKl · 2016-02-24T09:43:29.726Z · LW(p) · GW(p)

But you're right that in most cases there is a winner-take-all dynamic to coercive power, so we're going to find a monopoly of force and a de-facto state.

That wasn't the argument I was making. The argument I was making was that in the absence of a state that holds the monopoly of force, any organisation that grows really big is going to use coercive power and become state-like.

Replies from: bogus↑ comment by bogus · 2016-02-24T09:52:43.394Z · LW(p) · GW(p)

Sure, but that's just what a winner-takes-all dynamic looks like in this case.

Replies from: ChristianKl↑ comment by ChristianKl · 2016-02-24T10:02:12.056Z · LW(p) · GW(p)

The argument is about explaining why we don't see corporations in the absence of states. It's not about explaining that there are societies that have no corporations. It's not about explaining that there are societies that have no states.

Replies from: bogus↑ comment by bogus · 2016-02-24T12:49:59.070Z · LW(p) · GW(p)

Large companies can definitely coexist with small states, though. For instance, medieval Italy was largely dominated by small, independent city-states (Germany was rather similar), but it also saw the emergence of large banking companies (though these were not actual corporations) such as the Lombards, Bardi and Peruzzi. Those companies were definitely powerful enough to finance actual governments, e.g. in England, and yet the small city-states endured for many centuries; they finally declined as a result of aggression from large foreign monarchies.

↑ comment by Lumifer · 2016-02-23T16:28:34.738Z · LW(p) · GW(p)

I'm theoretically amazed that multicellular organisms could overcome all of the prisoners' dilemma type situations they must face.

You mean competition between cells in a multi-cellular organism? They don't compete, they come from the same DNA and they "win" by perpetuating that DNA, not their own self. Your cells are not subject to evolution -- you are, as a whole.

shouldn't competition among microorganisms cause us to get nothing?

In the long term, no, because a symbiotic system (as a whole) outcompetes greedy microorganisms and it's surviving that matters, not short-term gains. If you depend on your host and you kill your host, you die yourself.

Replies from: Vaniver, James_Miller↑ comment by Vaniver · 2016-02-23T16:45:59.604Z · LW(p) · GW(p)

You mean competition between cells in a multi-cellular organism? They don't compete, they come from the same DNA and they "win" by perpetuating that DNA, not their own self. Your cells are not subject to evolution -- you are, as a whole.

Doesn't this line of reasoning prove the non-existence of cancer?

Replies from: Lumifer, ChristianKl↑ comment by Lumifer · 2016-02-23T17:51:21.693Z · LW(p) · GW(p)

No, I don't think so. Cancerous cells don't win at evolution. In fact, if they manage to kill the host, they explicitly lose.

Survival of the fittest doesn't prove the non-existence of broken bones, either.

Replies from: Vaniver↑ comment by Vaniver · 2016-02-23T19:56:56.151Z · LW(p) · GW(p)

It seems to me that the better argument is more along the lines of "bodies put a lot of effort into policing competition among their constituent parts" and "bodies put a lot of effort into repelling invaders." It is actually amazing that multicellular organisms overcome the prisoners' dilemma type situations, and there are lots of mechanisms that work on that problem, and amazing that pathogens don't kill more of us than they already do.

And when those mechanisms fail, the problems are just as dire as one would expect. Consider something like Tasmanian Devil Facial Tumor Disease, a communicable cancer which killed roughly half of all Tasmanian devils (and, more importantly, would kill every devil in a high-density environment). Consider that about 4% of all humans were killed by influenza in 1918-1920. So it's no surprise that the surviving life we see around us today is life that puts a bunch of effort into preventing runaway cell growth and runaway pathogen growth.

Replies from: Lumifer↑ comment by Lumifer · 2016-02-23T20:13:18.181Z · LW(p) · GW(p)

It is actually amazing that multicellular organisms overcome the prisoners' dilemma type situations

I just don't see those "prisoners' dilemma type situations". Can you illustrate? What will cells of my body win by defecting and how can they defect?

Cancer is not successful competition, it's breakage.

amazing that pathogens don't kill more of us than they already do.

That's anthropics for you :-)

Replies from: Vaniver↑ comment by Vaniver · 2016-02-24T01:33:38.598Z · LW(p) · GW(p)

What will cells of my body win by defecting and how can they defect?

Consider something like Aubrey de Grey's "survival of the slowest" theory of mitochondrial mutation. The "point" of mitochondria is to do work involving ATP that slowly degrades them, they eventually die, and are replaced by new mitochondria. But it's possible for several different mutations to make a mitochondrion much slower at doing its job--which is bad news for the cell, since it has access to less energy, but good news for that individual mitochondrion, because less pollution builds up and it survives longer.

But because it survives longer, it's proportionally more likely to split to replace any other mitochondrion that works itself to death. And so eventually every mitochondrion in the cell becomes a descendant of the mutant malfunctioning mitochondrion and the cell becomes less functional.

(I believe, if things are working correctly the cell realizes that it is now a literal communist cell, and self-destructs, and is replaced by another cell with functional mitochondria. If you didn't have this process, many more cells would be non-functional. But I'm not a biologist and I'm not certain about this bit.)
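The takeover dynamic described above can be sketched as a toy calculation. This is only an illustration under made-up assumptions, not a model of real mitochondrial biology: the function name, the death rates (10% per step for normal mitochondria, 5% for the slow mutant), and the starting mutant share of 1% are all hypothetical. Each step, some mitochondria of each type die, and the survivors divide in proportion to their numbers to refill the cell.

```python
def mutant_fraction_over_time(initial_fraction, wild_death_rate, mutant_death_rate, steps):
    """Track the share of mutant mitochondria in one cell (toy model).

    Each step, a fraction of each type dies; the survivors then divide
    in proportion to their numbers so the total population is restored.
    All rates are illustrative assumptions, not measured values.
    """
    fraction = initial_fraction
    history = [fraction]
    for _ in range(steps):
        mutant_survivors = fraction * (1 - mutant_death_rate)
        wild_survivors = (1 - fraction) * (1 - wild_death_rate)
        # Replacement is drawn from survivors, so the new share is the
        # mutants' share among survivors.
        fraction = mutant_survivors / (mutant_survivors + wild_survivors)
        history.append(fraction)
    return history


# Hypothetical numbers: the mutant starts at 1% and dies half as often.
history = mutant_fraction_over_time(0.01, 0.10, 0.05, 200)
print(f"mutant share after 200 steps: {history[-1]:.3f}")
```

Under these assumptions the slower-dying lineage ends up dominating the cell even though it started rare, which is the within-cell selection the comment describes.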

Replies from: Lumifer↑ comment by Lumifer · 2016-02-24T15:37:48.251Z · LW(p) · GW(p)

but good news for that individual mitochondrion, because less pollution builds up and it survives longer.

Recall that we are talking about evolution. Taking the Selfish Gene approach, it's all about genes making copies of themselves. Only the germ-line cells matter, the rest of the cells in your body are irrelevant to evolution except for their assistance to sperm and eggs. The somatic cells never survive past the current generation, they do not replicate across generations.

Your mitochondrion might well live longer, but it still won't make it to the next generation. The only way for it to propagate itself is to propagate its DNA and that involves being as helpful to the host as possible, even at the cost of "personal sacrifice". Greedy mitochondria, just like greedy somatic cells, will just be washed out by evolution. They do not win.

Replies from: Vaniver↑ comment by Vaniver · 2016-02-24T18:33:48.817Z · LW(p) · GW(p)

Recall that we are talking about evolution.

I'm well aware. If you don't think that evolution describes the changes in the population of mitochondria in a cell, then I think you're taking an overly narrow view of evolution!

Your mitochondrion might well live longer, but it still won't make it to the next generation.

I happen to be male; none of my mitochondria will make it to the next human generation anyway. (You... did know that mitochondrial lines have different DNA than their human hosts, right?)

But for the relevant population--mitochondria within a single cell--these mutants do actually win and take over the population of the cell, because they're reproductively favored over the previous strain. And if we go up a level to cells, if that cell divides, both of its descendants will have those new mitochondria along for the ride. (At this level, those cells are reproductively disfavored, and thus we wouldn't expect this to spread.)

That is, evolution on the lower level does work against evolution on the upper level, because the incentives of the two systems are misaligned. Since the lower level has much faster generations, you'll get many more cycles of evolution on the lower level, and thus we would naively expect the lower level to dominate. If a bacterial infection can go through a thousand generations, why can't it evolve past the defenses of a host going through a single generation? If the cell population of a tumor can go through a thousand generations, why can't it evolve past the defenses of a host going through a single generation?

The answer is twofold: 1) it can, and when it does that typically leads to the death of the host, and 2) because it can, the host puts in a lot of effort to make that not happen. (You can use evolution on the upper level to explain why these mechanisms exist, but not how they operate. That is, you can make statements like "I expect there to be an immune system" and some broad properties of it but may have difficulty predicting how those properties are achieved.)

(That is, the lower level gets both the forces leading to 'disorder' from the perspective of the upper system, and corrective forces leading to order. This can lead to spectacular booms and busts in ways that you don't see with normal selective gradients.)

Replies from: Lumifer↑ comment by Lumifer · 2016-02-24T20:35:50.435Z · LW(p) · GW(p)

If you don't think that evolution describes the changes in the population of mitochondria in a cell, then I think you're taking an overly narrow view of evolution!

That may well be so, but still in the context of this discussion I don't think that it's useful to describe the changes in the population of mitochondria in an evolutionary framework (your lower level, that is).

I happen to be male; none of my mitochondria will make it to the next human generation anyway.

Unless you have a sister :-) Yes, I know that mDNA is special.

The answer is twofold:

There is also the third option: symbiosis. If you managed to get your hooks into a nice and juicy host, it might be wise to set up house instead of doing the slash-and-burn.

Since this started connected to economics, there are probably parallels with roving bandits and stationary bandits.

↑ comment by ChristianKl · 2016-02-23T17:23:52.239Z · LW(p) · GW(p)

In the long term, cancer cells die with the organism that hosts them. Viruses also do kill people regularly and die with their hosts.

Replies from: Vaniver↑ comment by Vaniver · 2016-02-23T19:58:06.832Z · LW(p) · GW(p)

Sure. The impression one gets from this is that an answer to James_Miller's question is that they frequently fail to solve that problem, and then die.

Replies from: ChristianKl↑ comment by ChristianKl · 2016-02-23T20:07:59.186Z · LW(p) · GW(p)

Individual people die but the species doesn't die.

Replies from: Vaniver↑ comment by James_Miller · 2016-02-24T03:10:41.730Z · LW(p) · GW(p)

In the long term, no, because a symbiotic system (as a whole) outcompetes greedy microorganisms and it's surviving that matters, not short-term gains.

OK, but I have lots of different types of bacteria in me. If one type of bacteria doubled the amount of energy it consumed, and this slightly reduced my reproductive fitness, then this type of bacteria would be better off. If all types of bacteria in me do this, however, I die. It's analogous to how no one company would pollute so much so as to poison the atmosphere and kill everyone, but absent regulation the combined effect of all companies would be to do (or almost do) this.

Replies from: Lumifer↑ comment by Lumifer · 2016-02-24T15:43:28.808Z · LW(p) · GW(p)

If one type of bacteria doubled the amount of energy it consumed, and this slightly reduced my reproductive fitness, then this type of bacteria would be better off.

It's not obvious to me that it will be better off. There is a clear trade-off here: the microorganisms want to "steal" some energy from the host to live, but not too much or the host will die and so will they. I am sure evolution fine-tunes this trade-off in order to maximize survival, as usual.

The process, of course, is noisy. Bacteria mutate and occasionally develop high virulence which can kill large parts of host population (see e.g. the Black Plague). But those high-virulence strains do not survive for long, precisely because they are so "greedy".

↑ comment by [deleted] · 2016-02-24T14:05:59.469Z · LW(p) · GW(p)

YSITTBIDWTCIYSTEIWEWITTAW is a little long for an acronym, but ADBOC for "Agree Denotationally But Object Connotationally"

Replies from: gjm↑ comment by gjm · 2016-02-24T14:37:13.217Z · LW(p) · GW(p)

"Your statement is technically true but I disagree with the connotations if you're suggesting that ...", I guess. I'm hampered by not being sure whether you're objecting connotationally to (1) the idea that having an economics PhD is a guarantee of understanding the fundamentals of economics, or (2) the analogy between markets / command economies and unguided evolution / intelligent design, or (3) something else.

"... that economics is why evolution wins in the actual world"?

Replies from: None↑ comment by [deleted] · 2016-02-24T15:26:51.288Z · LW(p) · GW(p)

Even if 'markets with decentralized decision making often beat intelligent design', that doesn't mean decentralised decision making dominates centralised planning (what I assume he means by intelligent design)

Replies from: gjm↑ comment by gjm · 2016-02-24T16:05:27.460Z · LW(p) · GW(p)

But ChristianKl is neither claiming nor implying that (so far as I can see); his point is that Eric is arguing "look at these amazing things; they're far too amazing to have been done without a guiding intelligence" but his experience in economics should show him that actually often (and "often" is all that's needed here) distributed systems of fairly stupid agents can do better than centralized guiding intelligences.

(I don't find that convincing, but for what I think are different reasons from yours. Eric's hypothetical centralized guiding intelligence is much, much smarter than (e.g.) the Soviet central planners.)

↑ comment by username2 · 2016-02-23T10:28:17.828Z · LW(p) · GW(p)

An economics PhD who works in academia will meet colleagues from the biology department. They have plenty of opportunities to clarify their misconceptions if they are curious and actually want to learn something.

Replies from: Douglas_Knight, James_Miller↑ comment by Douglas_Knight · 2016-02-23T21:53:09.300Z · LW(p) · GW(p)

Falkenstein does not work in academia.

↑ comment by James_Miller · 2016-02-23T15:35:14.681Z · LW(p) · GW(p)

My understanding is that most biologists don't work on evolution and know little about the mathematical theories of evolution.

↑ comment by MrMind · 2016-02-23T13:51:08.008Z · LW(p) · GW(p)

I came to Christ via rational inference, not a personal crisis.

Reading this evokes in me physical sensations of discomfort, although it shouldn't. As others have said, it's important to study the failures as well as the successes.

Replies from: Viliam, WalterL↑ comment by WalterL · 2016-02-24T20:29:22.331Z · LW(p) · GW(p)

Just stopping by to chuckle at the phrase "evokes in me physical sensations of discomfort" from someone whose forum name is that of a worm that crawls into people's ears and mind-controls them in order to destroy the human race.

Replies from: MrMindcomment by turchin · 2016-02-22T23:20:43.590Z · LW(p) · GW(p)

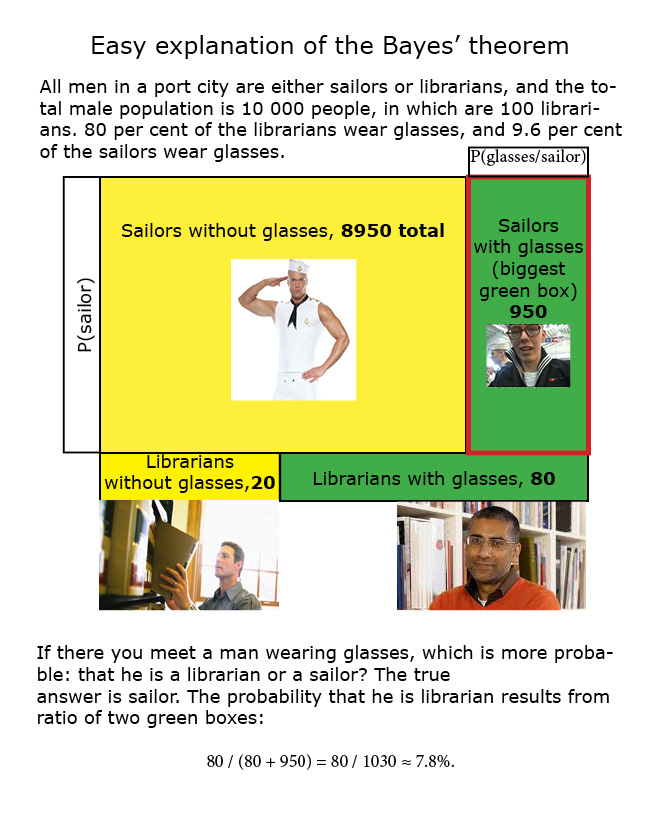

I created an easy explanation of Bayes' theorem as a small map: http://immortality-roadmap.com/bayesl.jpg (can't insert jpg into a comment)

It is based on the following short story: All men in a port city are either sailors or librarians. The total male population is 10 000 people, of whom 100 are librarians. 80 per cent of the librarians wear glasses, and 9.6 per cent of the sailors wear glasses. If you meet a man there wearing glasses, which is more probable: that he is a librarian or a sailor? The correct answer is sailor. The probability that he is a librarian results from the ratio of the two green boxes (80 librarians with glasses, and about 950 sailors with glasses):

80 / (80 + 950) = 80 / 1030 ≈ 7.8%.

The numbers are the same as in the original EY explanation, but I left out the story about mammography, as it may not be easy to understand two complex scientific topics simultaneously. http://www.yudkowsky.net/rational/bayes
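The arithmetic of the port-city story can be checked with a short script. This is a minimal sketch; the function and variable names are illustrative and not part of the original map. It uses exact fractions, so the sailor count with glasses is 950.4 rather than the rounded 950, and the result is still about 7.8%.

```python
from fractions import Fraction


def posterior_librarian(population, librarians, p_glasses_librarian, p_glasses_sailor):
    """Return P(librarian | wears glasses) for the port-city story."""
    sailors = population - librarians
    librarians_with_glasses = librarians * p_glasses_librarian
    sailors_with_glasses = sailors * p_glasses_sailor
    # Bayes' theorem as a ratio of counts: glasses-wearing librarians
    # divided by all glasses-wearing men.
    return librarians_with_glasses / (librarians_with_glasses + sailors_with_glasses)


p = posterior_librarian(
    population=10_000,
    librarians=100,
    p_glasses_librarian=Fraction(80, 100),
    p_glasses_sailor=Fraction(96, 1000),
)
print(f"P(librarian | glasses) = {float(p):.3f}")
```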

↑ comment by Gunnar_Zarncke · 2016-02-24T21:14:50.171Z · LW(p) · GW(p)

(can't insert jpg into a comment)

But you can - see doku

Also: The linked image is very small.

Replies from: turchincomment by Viliam · 2016-02-24T09:33:57.844Z · LW(p) · GW(p)

When I was thinking about "quantum immortality", I realized that I am bad at guessing the most likely very unlikely outcomes.

I don't mean the kind of "quantum immortality" when you throw a tantrum and kill yourself whenever you don't win a lottery, but rather the one that happens spontaneously, even if you are not aware of the concept. With sufficiently small probability (quantum amplitude) "miracles" happen, and in a thousand years all your surviving copies will be alive only thanks to some "miracle".

But of course, not all "miracles" are equally unlikely; even if all of them have microscopic probability, still some of them are relatively more likely than others, sometimes by several orders of magnitude. We should expect to find ourselves in the futures that required relatively more likely "miracles". If you can survive a thousand years by two strategies, A and B, where A has a probability of 1/10^50, and B has a probability of 1/10^60, then in a thousand years, if you exist, you should expect to find yourself in the situation A. But I have a problem finding out the possible ways and estimating their probabilities. I mean, even if I find some, there is still a chance that I missed something else that could be relatively more likely than the variants I have considered, which means that all my results are unlikely even in the "quantum immortality" future.
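To put numbers on the A-versus-B comparison, here is a small sketch. It assumes, purely for illustration, that A and B are mutually exclusive and are the only two ways to survive; the variable names are made up. Conditional on surviving at all, the tiny absolute probabilities cancel and only their ratio matters.

```python
from fractions import Fraction

# Probabilities from the example above; exact fractions avoid
# floating-point underflow concerns with such small numbers.
p_a = Fraction(1, 10**50)
p_b = Fraction(1, 10**60)

# Assumption: A and B are the only, mutually exclusive, survival routes.
p_a_given_survival = p_a / (p_a + p_b)
odds_a_to_b = p_a / p_b

print(f"odds A:B = {odds_a_to_b}:1")
print(f"P(A | survived) = {p_a_given_survival}")
```

The same calculation also shows the worry in the paragraph: any overlooked route with probability well above 1/10^50 would dominate the conditional distribution in the same way.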

(This is also an objection against the constructions where people plan to kill themselves if they don't win a lottery. Push the probability too far, and your suicide mechanism will "miraculously" fail, because some "miracle" had greater probability than the outcome you wanted to achieve. The mechanism will fail, or some intruders will break into your quantum-suicide lab, or an alien attack will disable all quantum detectors on the Earth.)

I cannot even answer a simpler question: In the "quantum immortality" future, should you expect to find yourself more exceptional than other people, or not?

The first idea was that the older you get, the more lucky you are. Even now, think about all those people who died before for various reasons -- you were not one of them! Also a "miracle" saving your life seems more likely than a lot of "miracles" saving lives of many people, because the improbability would grow exponentially with the number of people saved. Thus you should expect yourself to be saved by "miracles" while observing other people not having the same luck.

Or maybe I'm wrong. My crazy example was a nuclear war starting and a bomb dropping on your city, just above your head. How can you survive that? Well, maybe an angel can randomly generate from particles in the sky, and descend to protect you with its wings. This scenario is more likely than a scenario where thousands of angels generate the same way and protect everyone. So a personal "miracle" seems more likely than a "miracle" for everyone. -- But of course now I am privileging an unlikely solution, where much more likely solutions exist; namely, the bomb could malfunction, and the whole town could be saved. So maybe the "miracle" saving everyone is actually more likely than a "miracle" saving only me.

Similarly, a situation a thousand years later where I live because cheap immortality for everyone was invented in 20?? seems more likely than a situation a thousand years later where only I lived for a thousand years because of a series of "miracles" that happened specially to me (or maybe just one huge "miracle", such as me randomly changing into a vampire). So maybe it is possible to experience the "quantum immortality" and still be just an average muggle.

Returning to the original question, what is the most likely way to survive a thousand years? A million years? 10^100 years?

(EDIT: There is also a chance that the whole concept is completely confused; for example that "the average living copy of yourself in a thousand years" is a wrong way to predict your most likely personal experience in the future. Instead, the correct approach may be to talk about your typical person-moment in space-time, because there is no reason to privilege the future. I mean, you already know that many of your copies will not have person-moments in far future. And maybe your expected person-moment is plus or minus what you are experiencing now, precisely because you are not immortal.)

Replies from: turchin, qmotus↑ comment by turchin · 2016-02-24T13:00:55.433Z · LW(p) · GW(p)

One should look at the most probable outcomes:

- You are cryopreserved and resurrected later - 1 per cent (in my case)

- You are resurrected by a future AI based on your digital footprint - also around 1 per cent

- You live in a simulation with an afterlife. The probability can't be estimated but may be very high, like 90 per cent.

These three outcomes are the most probable futures if you survive death, and they will look almost the same: you are resurrected in the future by a strong AI. Only outcomes with even higher probability could change the situation, but they are unlikely given that the probability of these is already high.

All other outcomes are much less probable given known priors about the state of tech progress.

Using wording like "blobs of amplitude" makes the whole story overcomplicated.

Replies from: qmotus↑ comment by qmotus · 2016-02-25T12:39:00.223Z · LW(p) · GW(p)

You are cryopreserved and resurrected later - 1 per cent (in my case)

Why do you give this such a low estimate? Because you're not signed up?

Replies from: turchin↑ comment by turchin · 2016-02-25T14:14:07.840Z · LW(p) · GW(p)

I signed up with the Russian Cryorus. My doubts are mostly about whether I will be cryopreserved if I die (I live alone, and if I suddenly die, nobody will know for days) and about the ability of Cryorus to exist for the next 30 years. If I lived in Phoenix and had a family dedicated to cryonics, I would raise the probability of success to 10 per cent.

↑ comment by qmotus · 2016-02-25T12:42:07.018Z · LW(p) · GW(p)

There is also a chance that the whole concept is completely confused; for example that "the average living copy of yourself in a thousand years" is a wrong way to predict your most likely personal experience in the future. Instead, the correct approach may be to talk about your typical person-moment in space-time, because there is no reason to privilege the future. I mean, you already know that many of your copies will not have person-moments in far future. And maybe your expected person-moment is plus or minus what you are experiencing now, precisely because you are not immortal.

This sounds interesting, but I don't quite get what you mean by saying that many copies won't have person-moments in the future or how this leads to non-immortality. Can you elaborate?

In general, I agree that estimating these probabilities is very difficult. I suppose the likeliest ways may, in any case, be orders of magnitude more likely than others; meaning that if QI works and, say, resurrection by a future AI or hypercivilization is the likeliest way to live for a hundred million years, the other alternatives may not matter much. But it's hard to say anything even remotely definite about it.

Replies from: Viliam↑ comment by Viliam · 2016-02-25T13:25:21.051Z · LW(p) · GW(p)

I am confused a lot about this, so maybe what I write here doesn't make sense at all.

I'm trying to take a "timeless view" instead of taking time as something granted that keeps flowing linearly. Why? Essentially, because if you just take time as something that keeps flowing linearly, then in most Everett branches you die, end of story. Talking about "quantum immortality" already means picking selectively the moments in time-space-branches where you exist. So I feel like perhaps we need to pick randomly from all such moments, not merely from the moments in the future.

To simplify the situation, let's assume a simpler universe -- not the one we live in, but the one we once believed we lived in -- a universe without branches, with a single timeline. Suppose this classical universe is deterministic, and that at some moment you die.

The first-person view of your life in the classical universe would be "you are born, you live for a few years, then you die, end of story". The third-person / timeless / god's view would be "here is a timeline of your person-moments; within those moments you live, outside of them you don't". The god could watch your life as a movie in random order, because every sequence would make sense, it would follow the laws of physics and every person-moment would be surrounded by your experience.

From god's point of view, it could make sense to take a random person-moment of your life, and examine what you experience there. Random choice always needs some metric over the set we choose from, but with a single timeline this is simple: just give each time interval the same weight. For example, if you live 80 years, and spend 20 years in education, it would make sense to say "if we pick a random moment of your life, with probability 25% you are in education at that moment".

(Because that's the topic I am interested in: what does a typical random moment look like.)

Okay, now instead of the classical universe let's think about a straw-quantum universe, where the universe only splits when you flip the magical quantum coin, otherwise it remains classical. (Yes, this is complete bullshit. A simple model to explain what I mean.) Let's assume that you live 80 years, and when you are 40, you flip the coin and make an important decision based on the outcome, that will dramatically change your life. During the first 40 years you only had one history, and during the second 40 years, you had two histories. In first-person view, it was 80 years either way, 1/2 the first half, 1/2 the second half.

Now let's again try the god's view. The god sees your life as a movie containing together 120 years; 40 years of the first half, and 2× 40 years of the second half. If the god is trying to pick a random moment in your life, does it mean she is twice as likely to pick a moment from your second half than from your first half? -- This is only my intuition speaking, but I believe that this would be a wrong metric. In a correct metric, when the god chooses a "random moment of your life", it should have 50% probability to be before you flipped the magical coin, and 50% probability after you flipped the magical coin. As if somehow having two second halves of your life only made each of them half as thick.

Now a variation of the straw-quantum model, where after 40 years you flip a magical coin, and depending on the outcome you either die immediately, or live for another 40 years. In this situation I believe from the god's view, a random moment of your life has 2/3 probability to be during the first 40 years, and 1/3 during the second 40 years.
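The numbers in the three cases above can be checked with a short sketch. It is only an illustration of the "each branch is half as thick" metric, assuming that every segment of life is weighted by its duration times the measure of the branch it lies in. The function name `moment_probabilities` and the `(label, years, measure)` representation are made up for this example.

```python
from fractions import Fraction

def moment_probabilities(segments):
    """Probability that a random person-moment falls under each label.

    segments: list of (label, years, branch_measure) tuples.
    Assumption: a segment's weight is years * branch_measure.
    """
    weights = {}
    for label, years, measure in segments:
        weight = Fraction(years) * Fraction(measure)
        weights[label] = weights.get(label, Fraction(0)) + weight
    total = sum(weights.values())
    return {label: w / total for label, w in weights.items()}

# Classical universe: 80 years, 20 of them in education.
classical = moment_probabilities([("education", 20, 1), ("rest", 60, 1)])

# Coin flip at 40, both branches live another 40 years, each with measure 1/2.
coin_flip = moment_probabilities([
    ("before", 40, 1),
    ("after", 40, Fraction(1, 2)),
    ("after", 40, Fraction(1, 2)),
])

# Same coin flip, but counting every branch at full weight (the "120 years" metric).
naive = moment_probabilities([
    ("before", 40, 1),
    ("after", 40, 1),
    ("after", 40, 1),
])

# Coin flip at 40, one branch dies immediately, the other lives 40 more years.
die_or_live = moment_probabilities([
    ("before", 40, 1),
    ("after", 40, Fraction(1, 2)),
])

for name, result in [("classical", classical), ("coin_flip", coin_flip),
                     ("naive", naive), ("die_or_live", die_or_live)]:
    print(name, {label: str(p) for label, p in result.items()})
```

Under the measure-weighted metric the second half gets probability 1/2 in the first coin-flip model and 1/3 in the die-or-live variation, while the unweighted count would give the second half 2/3 in the first model.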

If you agree with this, then the real quantum universe is the same thing, except that branching happens all the time, and there are zillions of the most crazy branches. (And I am ignoring the problem of defining what exactly "you" means, especially in some sufficiently weird branches.) It could be, from god's point of view, that although in some tiny branches you live forever, still a typical random you-moment would be e.g. during your first 70 years (or whatever is the average lifespan in your reference group).

And... this is quite a confusing part here... I suspect that in some sense the god's view may be the correct way to look at oneself, especially when thinking about anthropic problems. That the typical random you-moment, as seen by the god, is the typical experience you have. So despite the "quantum immortality" being real, if the typical random you-moment happens in the ordinary boring places, within the 80 years after your birth, with a sufficiently high probability, then you will simply subjectively not experience the "quantum immortality". Because in a typical moment of life, you are not there yet. But you will... kinda... never be there, because the typical moment of your life is what is real. You will always be in a situation where you are potentially immortal, but in reality too young to perceive any benefits from that.

In other words, if someone asks "so, if I attach myself to a perfectly safe quantum-suicide machine that will immediately kill me unless I win the lottery, and then I turn it on, what will be my typical subjective experience?" then the completely disappointing (but potentially correct) answer is: "your typical subjective experience will always be that you didn't do the experiment yet". Instead of enjoying the winnings of the lottery, you (from the god's perspective, which is potentially the correct one) will only experience getting ready to do the experiment, not the outcomes of it. If you are the kind of person who would seriously perform such an experiment, from your subjective point of view, the moment of the experiment is always in the future. (It doesn't mean you can never do it. If you want, you can try it tomorrow. It only means that tomorrow is always tomorrow, never yesterday.)

Or, using Nietzsche's metaphor of "eternal recurrence", whenever you perform the quantum-suicide experiment, your life will restart. Thus your life will only include the moments before the experiment, not after.

For the "spontaneous" version of the experiment, you are simply more likely to be young than old, and you will never be a thousand years old. Not necessarily because there is any specific line you could not cross, but simply because in a typical moment of your life, you are not a thousand years old yet. (From god's point of view, your thousand-years-old-moments are so rare that they are practically never picked at random, therefore your typical subjective experience is not being a thousand years old.)

Of course on a larger scale (god sees all those alternative histories and alternative universes where life itself never happened), your subjective measure is almost zero. But that's okay, because the important things are ratios of your subjective experience. I'm just thinking that comparing "different you-moments a thousand years in the future" is somehow a wrong operation, something that doesn't cut the possibility-space naturally; and that the natural operation would be comparing "different you-moments" regardless of the time, because from the timeless view there is nothing special about "the time a thousand years from now".

Or maybe this is all completely wrong...

Replies from: entirelyuseless, ShardPhoenix↑ comment by entirelyuseless · 2016-02-25T16:13:52.646Z · LW(p) · GW(p)

You may be getting at the truth here, but there is a simpler way to think about it.

"Why should I fear death? If I am, then death is not. If death is, then I am not. Why should I fear that which can only exist when I do not?"

Whether you pick your point of view, or a divine point of view, if you pick any moment in your life, random or not, you are not dead yet. So basically you have a kind of personal plot armor: your life is finite in duration but is an open set of moments, in each of which you are alive, and which does not have an end point. Of course the set has a limit, but the limit is not part of the set. So subjectively, you will always be alive, but you will also always be within that finite period.

Replies from: Viliam, qmotus↑ comment by Viliam · 2016-02-26T08:06:23.941Z · LW(p) · GW(p)

Because there are other things associated with death, such as suffering from a painful terminal illness, where the excuse of Epicurus does not apply. With things like this, "quantum immortality" could potentially be the worst nightmare; maybe it means that after a thousand years, in the Everett branches where you are still alive, in most of them you are in a condition where you would prefer to be dead.

Replies from: entirelyuseless↑ comment by entirelyuseless · 2016-02-26T15:14:53.931Z · LW(p) · GW(p)

I agree, except that you are not actually refuting Epicurus: you are not saying that death should be feared, but that we should fear not dying soon enough, especially if we end up not dying at all.

↑ comment by qmotus · 2016-02-26T08:53:24.164Z · LW(p) · GW(p)

your life is finite in duration

Maybe I'm misunderstanding something. How do we know this?

Replies from: entirelyuseless↑ comment by entirelyuseless · 2016-02-26T15:16:57.141Z · LW(p) · GW(p)

Either it is finite in duration, or mostly finite in duration, as Viliam said. These come approximately to the same thing, even if they are not exactly the same.

↑ comment by ShardPhoenix · 2016-02-26T02:14:30.199Z · LW(p) · GW(p)

Very interesting insight. It does feel like it solves the problem in some way, and yet in a quantum version as specified, it seems there must be a 1000_year_old_Viliam out there going "huh, I guess I was wrong back on Less Wrong that one time..." Can we really say he doesn't count, even if his measure is small?

Replies from: Viliam↑ comment by Viliam · 2016-02-26T08:01:41.821Z · LW(p) · GW(p)

Can we really say he doesn't count, even if his measure is small?

He certainly counts for himself, but probably doesn't for Viliam2016.

Replies from: qmotus↑ comment by qmotus · 2016-02-26T08:51:28.764Z · LW(p) · GW(p)

Viliam2016 is probably relatively young, healthy and living in a country with a fairly high quality of life, meaning that he can expect to live for several decades more at least. But as humans, our measure diminishes fairly slowly at first, but then starts diminishing much faster. For Viliam age 90, Viliam age 95 may seem like he doesn't have that much measure; and for Viliam age 100, Viliam 101 may look like an unlikely freak of nature. But there's only a few months difference there. So at which point do the unlikely future selves start to matter? (The same applies to younger, terminally ill Viliams as well.)

comment by Lumifer · 2016-02-23T18:35:52.617Z · LW(p) · GW(p)

Behold and despair.

Replies from: username2, Gunnar_Zarncke, MrMind, gwern, Houshalter↑ comment by Gunnar_Zarncke · 2016-02-24T21:12:11.602Z · LW(p) · GW(p)

It holds before us a mirror or 'progression' of civilization.

ADDED: A friend mentioned that today's predominance of sports (and actors before that) is more an indication of contemporary interests that will be forgotten before long. Probably the Athenians also had sports stars in their time who were talked about a lot.

↑ comment by Houshalter · 2016-02-25T00:11:51.345Z · LW(p) · GW(p)

Not really surprising. The most popular anything will always be whatever pleases the lowest common denominator.

comment by RainbowSpacedancer · 2016-02-22T03:43:03.109Z · LW(p) · GW(p)

I have large PR problems when talking about rationality with others unfamiliar with it, with the Straw Vulcan being the most common trap conversation will fall into.

Are there any guides out there in the vein of the EA Pitch Wiki that could help someone avoid these traps and portray rationality in a more positive light? If not, would it be worth creating one?

So far I've found, how rationality can make your life more awesome, rationality for curiosity sake, rationality as winning, PR problems and the contrary rationality isn't all that great.

Replies from: Artaxerxes, ChristianKl↑ comment by Artaxerxes · 2016-02-22T06:58:41.762Z · LW(p) · GW(p)

Not a guide, but I think the vocab you use matters a lot. Try tabooing 'rationality'; the word itself mindkills some people straight to straw vulcan etc. Do the same with any other words that have the same effect.

Replies from: RainbowSpacedancer↑ comment by RainbowSpacedancer · 2016-02-24T06:44:26.593Z · LW(p) · GW(p)

Revisiting past conversations I think this is exactly what has been happening. When I mention rationality, reason, logic it becomes a logic v. emotion discussion. I'll taboo in future, thanks!

↑ comment by ChristianKl · 2016-02-22T16:57:09.704Z · LW(p) · GW(p)

I have large PR problems when talking about rationality with others unfamiliar with it, with the Straw Vulcan being the most common trap conversation will fall into.

What exactly are you doing that you have PR problems?

Are you simply relabeling normal conversations with friends as PR?

Replies from: RainbowSpacedancer↑ comment by RainbowSpacedancer · 2016-02-24T06:54:10.516Z · LW(p) · GW(p)

What exactly are you doing that you have PR problems?

Something like,

A: I've been reading a lot about rationality in the last year or two. It's pretty great.

B: What's that?

A: Explanation of instrumental + epistemic OR Biases a la Kahneman

B: Sounds dumb. I do that already.

A: I've found it great because X, Y, Z.

B: I think emotion is much more important than rationality. I don't want to be a robot.

Are you simply relabeling normal conversations with friends as PR?

Yes. Sorry for the lack of clarity.

Replies from: ChristianKl↑ comment by ChristianKl · 2016-02-24T10:16:01.938Z · LW(p) · GW(p)

Yes. Sorry for the lack of clarity.

The problem isn't simply clarity. The frame of mind of treating a conversation with your friends as PR is not useful for getting your friends to trust you and positively respond to what you are saying. If you do that, it's no wonder that someone thinks you are a Straw Vulcan because that mindset is communicating that vibe.

That said, let's focus on your message. You aren't telling people that you are using rationality to make your life better. You are telling people that you read about rationality. That doesn't show a person the value of rationality.

If I want to talk about the value of rationality I could talk about how I'm making predictions in my daily life and the value that brings me. I can talk about how great it is to play double crux with other rationalists and actually have them change their mind.

If I want to talk about the effect it has on friends, I can talk about how a fellow rationalist who thought he only cared about the people he's interacting with used rationality techniques to discover that he actually cares about rescuing children in the third world from dying from malaria.

If I want to talk about society then I can talk about how the Good Judgment Project outperforms CIA analysts who have access to classified information by 30%. I can talk about how better predictions by the CIA before the Iraq war might have stopped the war and therefore really matter a great deal. Superforecasting is a great book for finding those war stories.

Replies from: RainbowSpacedancer↑ comment by RainbowSpacedancer · 2016-02-26T18:44:25.683Z · LW(p) · GW(p)

The problem isn't simply clarity.

In this case it is. I believe I have been less than clear again.

The frame of mind of treating a conversation with your friends as PR is not useful for getting your friends to trust you and positively respond to what you are saying.

Agreed - but I've never done that. The conversations are ordinary in that I share rationality in the same way I would share a book or movie I've enjoyed. It is "I enjoy X, you should try it, I bet you would enjoy it too" as opposed to "I want to spread X and my friends are good targets for that." I literally meant I relabeled an ordinary conversation as PR, not that I was in the spread-rationality mindset. My brain did a thing where,

'I'm having trouble sharing rationality with friends in a way that doesn't happen with my other interests. I bet other rationalists have similar problems. I wonder if there is any PR material on LW that might help with this.'

... and boom my brain labels it as a PR problem. I'm trying to not get caught up in the words here, do you follow my meaning?

Your recommendations on talking about the value rationality brings me look good. Thank you for them.

Replies from: ChristianKl↑ comment by ChristianKl · 2016-02-26T20:55:00.157Z · LW(p) · GW(p)

The conversations are ordinary in that I share rationality in the same way I would share a book or movie I've enjoyed.

We don't enjoy a topic as diverse as rationality in the same way we enjoy a book or movie. A book or movie is a much more concrete experience.

You could speak about individual books like Kahneman's instead of using the label rationality.

comment by Fluttershy · 2016-02-22T03:00:52.096Z · LW(p) · GW(p)