Happy paths and the planning fallacy

post by Adam Zerner (adamzerner) · 2021-07-18T23:26:30.920Z · LW · GW · 10 comments

The other day at work I was talking with my boss about a project I am working on. He wanted an update on which tasks are remaining, so I spent some time walking him through them. At the end, he asked, "So just to confirm, these six tasks are all that is remaining for this project?"

I wasn't sure how to respond. It didn't feel true that those were the only six tasks that were remaining. But if it wasn't true, then what were the other remaining tasks? I didn't have an answer to that, so I was confused.

Then it hit me. Those six were the only known tasks that were remaining. But there very well might be unknown tasks that are left to do. So I told him this, and that we should expect the unexpected.

He responded by saying that he agreed, but he also rephrased his question. He asked me to confirm that, if we're talking about the happy path, the six tasks I outlined are the only ones on it.

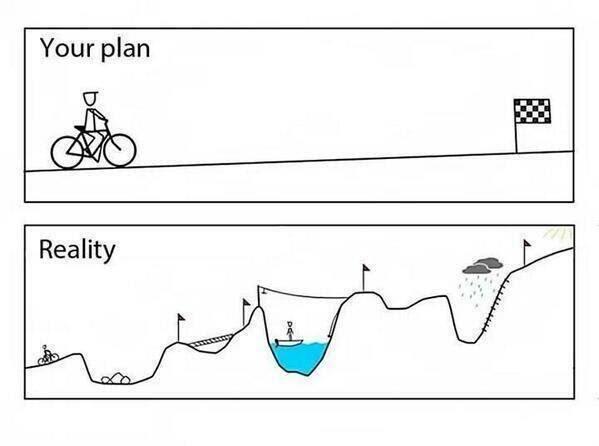

I don't see a problem with talking about happy paths. Well, at least not in theory. In theory, it might be useful to have a sense of what that best case scenario path forward looks like. But in practice, I worry that, somewhere along the way, happy paths will get converted to expected paths.

That's a little tangential to the main point of this post, though. The main point is that the terminology of happy path, expected path, and unhappy path seems like it'd be really helpful for thinking about and fighting against the planning fallacy.

What is the planning fallacy? Well, using this new terminology, the planning fallacy is our tendency to confuse the happy path with the expected path. When people think about how long something will take (that is, what the expected path is), they tend to think mostly about what the happy path looks like. As a result, they tend to underestimate how long things will take, or how many resources they will require.

For example, consider students predicting how long their homework will take:

Buehler et al. asked their students for estimates of when they (the students) thought they would complete their personal academic projects. Specifically, the researchers asked for estimated times by which the students thought it was 50%, 75%, and 99% probable their personal projects would be done. Would you care to guess how many students finished on or before their estimated 50%, 75%, and 99% probability levels?

- 13% of subjects finished their project by the time they had assigned a 50% probability level;

- 19% finished by the time assigned a 75% probability level;

- and only 45% (less than half!) finished by the time of their 99% probability level.

As Buehler et al. wrote, “The results for the 99% probability level are especially striking: Even when asked to make a highly conservative forecast, a prediction that they felt virtually certain that they would fulfill, students’ confidence in their time estimates far exceeded their accomplishments.”
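The gap between stated confidence and actual completion in the figures quoted above can be made explicit with a little arithmetic. The following is a minimal sketch, not anything from the study itself: the function name `calibration_gaps` and the dictionary layout are my own, and the only data used are the three percentages quoted above.

```python
# Figures quoted above: stated confidence level -> share of students
# who actually finished by the time they gave for that level.
stated_vs_observed = {0.50: 0.13, 0.75: 0.19, 0.99: 0.45}


def calibration_gaps(results):
    """Return {stated confidence: stated minus observed} for each level.

    A positive gap means overconfidence at that level.
    (Hypothetical helper, named for illustration only.)
    """
    return {
        stated: round(stated - observed, 2)
        for stated, observed in results.items()
    }


gaps = calibration_gaps(stated_vs_observed)
for stated, gap in gaps.items():
    observed = stated_vs_observed[stated]
    print(f"stated {stated:.0%}, observed {observed:.0%}, gap {gap:.0%}")
```

A well-calibrated group would show gaps near zero at every level. Here the gap is large at all three, and it does not shrink as the students are asked to be more conservative.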

The issue is that these students were only thinking about the happy path.

A clue to the underlying problem with the planning algorithm was uncovered by Newby-Clark et al., who found that

- Asking subjects for their predictions based on realistic “best guess” scenarios; and

- Asking subjects for their hoped-for “best case” scenarios . . .

... produced indistinguishable results.

When people are asked for a “realistic” scenario, they envision everything going exactly as planned, with no unexpected delays or unforeseen catastrophes—the same vision as their “best case.”

Reality, it turns out, usually delivers results somewhat worse than the “worst case.”

After thinking of this analogy, I put it to use a day later. I had to go to Mexico with my girlfriend for dental work she needed. We had an appointment at 10am and were staying at a hotel about two miles away. I was thinking about what time we should wake up in order to be at the appointment on time. My first instinct was to think, "It'll take X minutes to brush our teeth, Y minutes to check out of the hotel, Z minutes to cross the border, etc. etc." But then I realized something! "Hey, all of this stuff I'm referring to, this is just the happy path! I need to consider unexpected things too." The terminology of "happy path" helped me to recognize that I was falling for the planning fallacy. I suspect that I'm not alone here, and that it'd be a helpful tool for others as well.
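To make the difference between the two paths concrete, here is a rough sketch of that morning as a list of steps. Every number in it is made up for illustration (I never wrote down actual values for X, Y, and Z), and the model is deliberately crude: each step either takes its happy-path time or suffers one fixed delay with some probability.

```python
# All durations and probabilities below are hypothetical, for illustration only.
# Each step: (name, happy-path minutes, chance of a delay, extra minutes if delayed)
steps = [
    ("brush teeth", 5, 0.05, 5),
    ("check out of hotel", 10, 0.30, 15),
    ("cross the border", 20, 0.40, 30),
]


def happy_path_minutes(steps):
    """Total time if nothing at all goes wrong."""
    return sum(base for _, base, _, _ in steps)


def expected_minutes(steps):
    """Probability-weighted total: base time plus (chance of delay * its cost)."""
    return sum(base + p * extra for _, base, p, extra in steps)


happy = happy_path_minutes(steps)
expected = expected_minutes(steps)
print(f"happy path: {happy} min, expected path: {expected} min")
```

Even in this toy version the expected path is noticeably longer than the happy path, and the unhappy path (every delay happening at once) is longer still. Summing X, Y, and Z only ever gives you the first number.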

However, I'm not that optimistic. Simply knowing about biases, or even having good terminology/analogies for them, usually isn't enough. We still really struggle with them. And sure enough, I was no exception.

After I realized my happy path error, I thought about it again and concluded that we should wake up at 8:30am. My girlfriend convinced me that we could wake up at 8:45am instead, which I agreed to. She ended up waking up even earlier and gathering our stuff to check out. Then it felt to me like we had a lot of time, so I took a leisurely shower. We didn't leave the room until about 9:30am. Then we realized we needed to fill up our water cooler with ice, which was on a different floor of the hotel.

Ultimately we got to the dentist right on time, but I think it was lucky. Crossing the border happened really quickly, but could have taken longer. Checking out of the hotel also was really quick, but could have taken longer. At the end of the day, I did a good job at first of recognizing the planning fallacy and adjusting for it, but then in the morning I erased the good work I had done and committed the planning fallacy again. I think this goes to show how hard it is to actually fight against such biases and win.

That doesn't mean we shouldn't try to win though, nor that we can't make incremental progress. Hopefully this terminology of "happy path" will help people make such incremental progress.

10 comments

Comments sorted by top scores.

comment by Gunnar_Zarncke · 2021-07-19T19:23:21.906Z · LW(p) · GW(p)

About the business case with your boss: It might help to always make the distinction between the happy path and the committed case. And I wouldn't call it 'pessimistic' or 'realistic' or '99%' or so but rather something like 'committed' or 'qualified'. Qualified is good because it makes explicit that to get to such an estimate you need to add details.

comment by SarahSrinivasan (GuySrinivasan) · 2021-07-19T00:09:39.553Z · LW(p) · GW(p)

In our house we heuristic over this problem by, and I quote, "accounting for the planning fallacy twice". This works very well for us in practice.

↑ comment by Gunnar_Zarncke · 2021-07-22T13:14:20.624Z · LW(p) · GW(p)

But be aware of Hofstadter's Law:

It always takes longer than you expect, even when you take into account Hofstadter's Law.

↑ comment by SarahSrinivasan (GuySrinivasan) · 2021-07-22T20:46:49.990Z · LW(p) · GW(p)

Converges in the limit, we're all good here.

comment by Viliam · 2021-07-19T20:22:46.048Z · LW(p) · GW(p)

In software development we sometimes play this game where you estimate how long a task will take, and then by sleight of hand the estimate becomes a commitment.

As a completely unrelated fact, most software projects exceed the deadlines.

↑ comment by Adam Zerner (adamzerner) · 2021-07-19T20:53:04.599Z · LW(p) · GW(p)

In software development we sometimes play this game where you estimate how long a task will take, and then by sleight of hand the estimate becomes a commitment.

Yeah, I ran into that recently and it led to issues. Not fun.

↑ comment by SarahSrinivasan (GuySrinivasan) · 2021-07-19T22:40:43.055Z · LW(p) · GW(p)

In software development, I take joy from (honestly) reporting credible intervals that are far too large for anyone's comfort.

"Does it help?" you ask.

Well, joy is a good thing.

comment by helicase · 2022-08-14T15:47:35.346Z · LW(p) · GW(p)

one theory that seems to work is people are pretty good at median time estimates, but a lot of uncertainty + how time estimates can never be negative = there's a huge right-tail skew, so some tasks will blow up and completely dominate your average/total time.
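A quick simulation can illustrate the skew argument. This is only a sketch under a stated assumption: task durations are drawn from a lognormal distribution, which is one common choice for "never negative, long right tail" but is not something the comment specifies. The parameters (`mu=0`, `sigma=1`) and the sample size are arbitrary.

```python
import random
import statistics

random.seed(0)  # fixed seed so the run is repeatable

# Assumption: durations are lognormal (never negative, long right tail).
# mu=0 puts the true median at 1 time unit; sigma=1 is an arbitrary
# stand-in for "a lot of uncertainty".
durations = [random.lognormvariate(0.0, 1.0) for _ in range(10_000)]

median = statistics.median(durations)
mean = statistics.mean(durations)

# Share of the total time consumed by the slowest 10% of tasks.
slowest = sorted(durations, reverse=True)[: len(durations) // 10]
tail_share = sum(slowest) / sum(durations)

print(f"median: {median:.2f}, mean: {mean:.2f}")
print(f"slowest 10% of tasks take {tail_share:.0%} of total time")
```

For a lognormal with these parameters the true median is 1 and the true mean is exp(0.5), about 1.65, so someone who estimates every task at its median would be right about the typical task and still badly underestimate the total.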

comment by Pattern · 2021-07-20T17:32:42.892Z · LW(p) · GW(p)

The obvious thing to do would be to replace these probabilities with qualitative words that get the same results, then work out what the appropriate probability based on the words is.

The issue is that these students were only thinking about the happy path.

Or they were lying.