Open & Welcome Thread - December 2020

post by Pattern · 2020-12-01T17:03:48.263Z · 50 comments

If it’s worth saying, but not worth its own post, here's a place to put it.

If you are new to LessWrong, here's the place to introduce yourself. Personal stories, anecdotes, or just general comments on how you found us and what you hope to get from the site and community are invited. This is also the place to discuss feature requests and other ideas you have for the site, if you don't want to write a full top-level post.

If you want to explore the community more, I recommend reading the Library, checking recent Curated posts, seeing if there are any meetups in your area, and checking out the Getting Started section of the LessWrong FAQ. If you want to orient to the content on the site, you can also check out the new Concepts section.

The Open Thread tag is here. The Open Thread sequence is here.

Comments sorted by top scores.

comment by [deleted] · 2020-12-01T20:56:18.000Z · LW(p) · GW(p)

My first first-author publication of my postdoc (long delayed by health problems) is now up on a preprint server, with several more incoming in the next few months.

The central thrust of this paper (heavy on theory, light on experiments) ultimately derives from an idea I had while talking in the comments here, now fleshed out and made rigorous, with all the interesting implications for the early evolution of life on Earth worked through (implications I would not have thought of at first).

Hesitant to tie together my professional identity and this account...

comment by Ben Pace (Benito) · 2020-12-17T01:46:24.537Z · LW(p) · GW(p)

Habryka and I were invited on the Bayesian Conspiracy podcast, and the episode went up today. You might think we talk primarily about the new books, but we spend the first hour just talking about two sequences posts: Einstein's Arrogance, and Occam's Razor, before about 60 mins on the books and LW 2.0. It was very chill and I had a good time. Let me know your thoughts if you listen to it :)

↑ comment by Yoav Ravid · 2020-12-18T19:26:05.359Z · LW(p) · GW(p)

Just listened, it was a really fun episode (and I'm usually not a podcast person).

btw, is the shipping of the book to Israel because of me? :)

↑ comment by Ben Pace (Benito) · 2020-12-31T00:54:51.898Z · LW(p) · GW(p)

I think we've had like 20 orders in Israel, which is a bit less than I expected. It's not just you, also Vanessa Kosoy and David Manheim and Joshua Fox live in Israel (I don't know if any of them bought the books, just giving info) :)

comment by Ben Pace (Benito) · 2021-01-02T03:13:35.349Z · LW(p) · GW(p)

I'm taking a vacation from LessWrong work at the end of the work day today, to take some rest. I stuck it out for much of the book, and we ended the year with 2,271 sets sold, which is good. Jacob and Habryka are leading the charge on that for now.

So for the next few weeks, by default I will not be responsive via PM or via other such channels for admin-related responsibilities. Have a good annual review, write lots of reviews, and vote!

Please contact someone else on the LW team if you have a question or a request. (I'll probably write another update when I'm back.)

comment by Ben Pace (Benito) · 2020-12-04T04:31:14.717Z · LW(p) · GW(p)

"Louis CK says that he doesn't use drugs. He never uses drugs. This is so that when he does use drugs, it's amazing."

"This summarizes LessWrong's approach to color."

comment by Ben Pace (Benito) · 2020-12-31T00:56:48.095Z · LW(p) · GW(p)

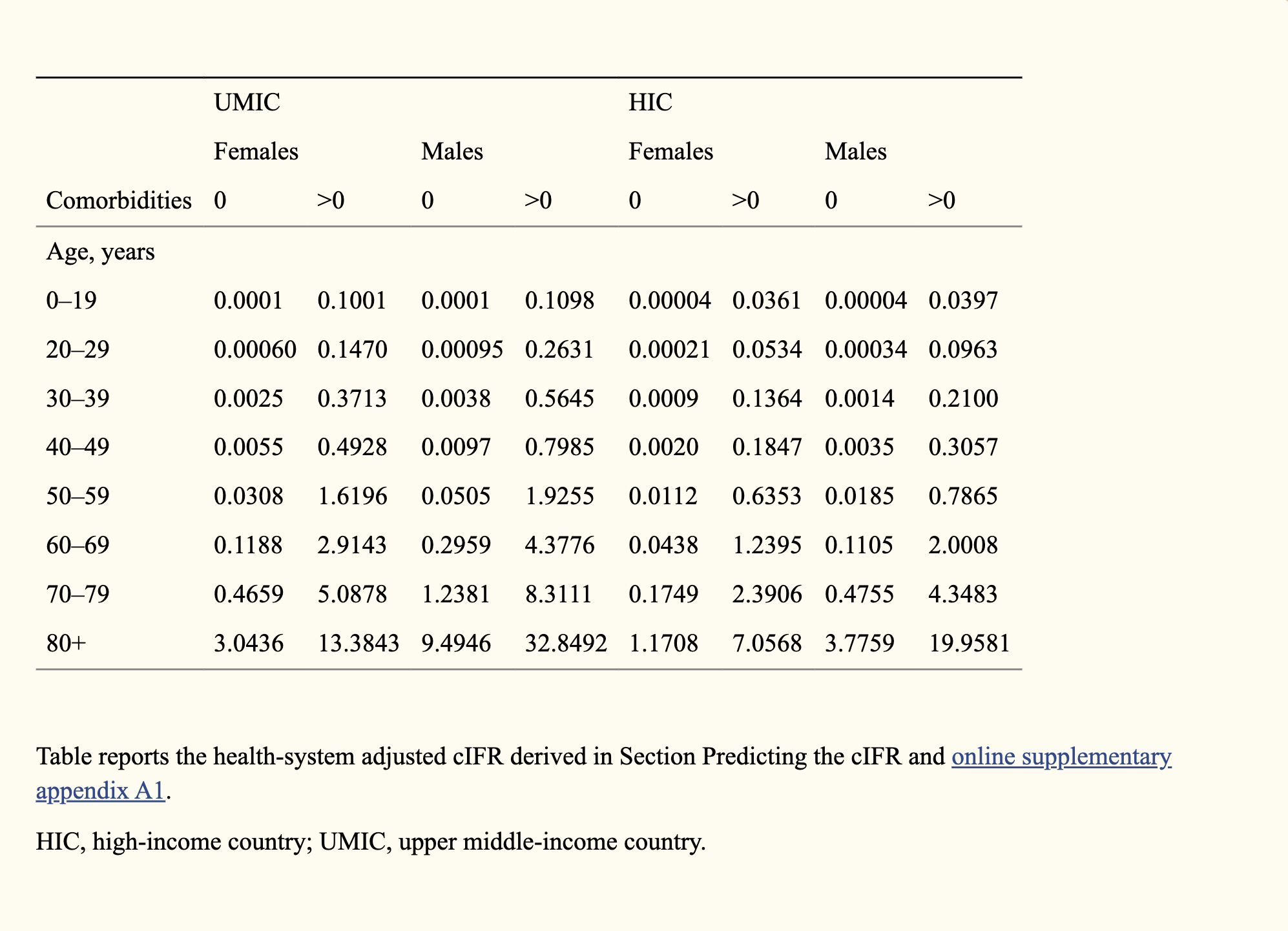

This crazy paper says that if you're in your 20s, and in a high income country, then having comorbidities changes the Covid IFR by 200x. Two hundred times! It basically means I shouldn't care about getting covid if I have no comorbidities.

Can someone tell me why this paper is insane? Surely we'd notice if almost nobody who died of covid in their 20s had zero comorbidities, right? If not, I'm giving up on all this lockdown stuff. But I am mostly expecting it to be mistaken.

Relevant table:

↑ comment by habryka (habryka4) · 2020-12-31T06:37:24.108Z · LW(p) · GW(p)

You could give up on lockdown stuff, but don't forget that you can very likely still transmit the disease, and I would be surprised if less than 20% of the cost of getting the disease were secondary effects of spreading it to other people, so even if you update towards zero personal risk, it seems unlikely that you would want to update more than 5x on your current cost estimates.
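habryka's bound is a short piece of arithmetic, sketched below under stated assumptions: the total expected cost of infection is normalized to 1 and split into a personal share and an external share (the cost of infecting others), and only the personal share shrinks when personal risk drops. The function name and parameters are hypothetical, chosen for illustration.

```python
def overall_reduction(external_share: float, personal_reduction: float) -> float:
    """Factor by which the total expected cost of infection shrinks when only
    the personal part of that cost is divided by `personal_reduction`.

    external_share: fraction of the original total cost that falls on other
                    people (the "secondary effects"); assumed in (0, 1].
    personal_reduction: how many times smaller personal risk becomes (>= 1).
    """
    if not 0 < external_share <= 1:
        raise ValueError("external_share must be in (0, 1]")
    if personal_reduction < 1:
        raise ValueError("personal_reduction must be >= 1")
    personal_share = 1.0 - external_share
    # Original total is 1; the external part is untouched by the update.
    new_total = external_share + personal_share / personal_reduction
    return 1.0 / new_total


# A 200x drop in personal risk with a 20% external share:
print(round(overall_reduction(0.2, 200), 2))
# An unbounded drop still cannot exceed 1 / external_share:
print(overall_reduction(0.2, float("inf")))
```

With a 20% external share, even a 200x drop in personal risk shrinks the total estimate by only about 4.9x, and no drop can push it past 5x.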

↑ comment by Ben Pace (Benito) · 2020-12-31T01:08:10.667Z · LW(p) · GW(p)

What, this article does an independent analysis and comes to a similar conclusion. Wtf.

comment by Baisius · 2020-12-17T18:38:43.762Z · LW(p) · GW(p)

I guess I'll introduce myself? Ordinary story, came from HPMOR, blah blah blah. I was active on 1.0 for a while when I was in college, but changed usernames when I decided I didn't want my real name to be googleable. I doubt anyone really remembers it anyway. Quit going there when 1.0 became not so much of a thing anymore. Hung out with some of the people on rationalist Tumblr for a while before that stopped being so much of a thing too. If you recognize the name, it's probably from there. Got on Twitter recently, but that's more about college football than it is the rationalist community. Was vaguely aware of the LW 2.0 effort and poked around a little when it was launched, but never stuck. I think I saw something about the books on Twitter and thought that was cool. Came back this week and was surprised at how active it was. So if this is to say anything, it's to say good job to the team on growing the site back up.

On a side note, I used to live in rural Louisiana, which pretty obviously didn't have a rationalist community (though I did meet a guy who lived a couple hours away once), and now I'm in Richmond, VA. Most surveys I've seen still show me as the only one here (there's obviously the DC group, but nothing my way), but if any of you are ever around after this whole "global pandemic" thing is over, hit me up.

↑ comment by habryka (habryka4) · 2020-12-17T20:57:52.526Z · LW(p) · GW(p)

Welcome! Hope you like it on the new LW, and feel free to ping me in the Intercom bubble in the bottom right if you ever run into any problems.

comment by Yoav Ravid · 2020-12-24T17:14:17.978Z · LW(p) · GW(p)

Did the LessWrong team consider adding a dark mode option to the site? If so, I wonder what the considerations were. Judging by the fact that I use dark mode on every service I use which has it, if LessWrong had dark mode I would probably use it.

↑ comment by PeterMcCluskey · 2020-12-31T06:04:24.997Z · LW(p) · GW(p)

The GreaterWrong.com interface offers a theme with light text on a dark background.

comment by Zian · 2020-12-11T01:03:35.110Z · LW(p) · GW(p)

I had a distinctly probabilistic experience at a doctor's office today.

Condition X has a "gold standard" way to diagnose it (my doctor described it as being almost 100% accurate), but it is very expensive (time, effort, and money). It is also not feasible while everyone is staying at home.

However, by the end of the visit, I had given him enough information to make a "clinical" diagnosis (from a statistically & clinically validated questionnaire, descriptions of alternative explanations that have been ruled out, etc.) and start treating it.

In hindsight, I can see the probability mass clumping together over the years until there is a pile on X.

I'm thankful to Less Wrong-style thinking for making me comfortable enough with uncertainty to accept this outcome. The doctor may not have pulled out a calculator, but this feels like "shut up and multiply" & "make beliefs pay rent".

comment by adamShimi · 2020-12-15T13:05:14.416Z · LW(p) · GW(p)

Feature request: the possibility to order search results by date instead of by the magic algorithm. For example, I had trouble finding the Embedded Predictions post because searching for "predictions" gave me more popular results about predictions from years ago. I had to remember one of the authors' names to finally find it.

↑ comment by habryka (habryka4) · 2020-12-16T05:56:04.790Z · LW(p) · GW(p)

That's actually kinda hard and would require using a different search provider, I think? The problem is that we sort them currently by the quality of the match, and if we just sort them by date naively, then you just get really horrible matches.

The thing that I do think we can do that Google has as well is to allow easy limiting by date (show me results from the last month, last year, only from 2 years ago, etc.)
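The difference between the two options habryka describes can be sketched in a few lines. This is a minimal illustration, not LessWrong's actual search code: it assumes each hit carries a relevance score from the search provider and a posting date, and the `Hit` type, its field names, and the example titles are all hypothetical. Sorting purely by date lets weak matches float to the top, while restricting to a date window and then ranking by relevance keeps the best matches first.

```python
from dataclasses import dataclass
from datetime import date, timedelta


@dataclass
class Hit:
    title: str
    relevance: float  # match quality from the search provider (hypothetical field)
    posted: date


def naive_date_sort(hits):
    # Newest first, ignoring match quality entirely.
    return sorted(hits, key=lambda h: h.posted, reverse=True)


def within_window(hits, today, days):
    # Keep only hits from the last `days` days, then rank by match quality.
    cutoff = today - timedelta(days=days)
    recent = [h for h in hits if h.posted >= cutoff]
    return sorted(recent, key=lambda h: h.relevance, reverse=True)


hits = [
    Hit("Old, highly relevant post on predictions", 0.95, date(2018, 5, 1)),
    Hit("Recent post that mentions predictions in passing", 0.20, date(2020, 12, 14)),
    Hit("Recent post about embedded predictions", 0.80, date(2020, 12, 10)),
]
today = date(2020, 12, 16)
print([h.title for h in naive_date_sort(hits)])
print([h.title for h in within_window(hits, today, days=30)])
```

The date window plays the role of Google-style "last month / last year" limits: recency narrows the candidate set, but relevance still decides the order within it.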

comment by MSRayne · 2020-12-19T17:01:27.958Z · LW(p) · GW(p)

Hello. I'm new, and as always, faced with the mild terror of admitting that I exist to people who have never previously met me. "You exist? How dare you!"

I've lurked on LW for a while, binge-reading tons of posts and all the comments, and every time promptly forgetting everything I just read and hoping that my subconscious got the gist (which is pretty much how I learn - terribly inefficient, but akrasia, alas, gets in the way of better forms of self-education, and thus I have likely wasted many years in inefficient learning methods).

I only just decided to join because I realized that this is the only place I've yet found where I am likely to be able to engage regularly in conversation that I actually find stimulating, thought-provoking, and personal-growth-catalyzing. (I used to think Reddit was that, until I became aware of the seething, stagnant pool of motivated reasoning and status-mongering which lay beneath the average post - as well as the annoying focus on humor to the extent of all else.)

As for who I am: I have an unusual background and have internalized a schema of "Talking about myself is narcissistic and bad" alongside another schema of "I must be absolutely honest and transparent about everything all the time" constantly warring with one another, so I have trouble determining exactly how much to say here. What this implies is that I will probably say far too much, as I usually do, because there's just so much to say.

Starting point for self-divulging: My body is 23 years old. I was homeschooled (I've never set foot in a public school - nor have I gone to college, for various reasons) and raised by probably-narcissistic parents, experienced a lot of emotional abuse, and was (and still am) profoundly isolated both physically and socially (I didn't have friends in person until I was 20 or 21) - which traumas I have since detached from so completely that I can introduce myself by talking about it without feeling any discomfort - though I may be imposing discomfort on you by mentioning it - and if so I hope you can forgive me, but it just seems like these facts automatically give me a different perspective than most people and it's necessary to point them out.

The result of all this, together with my inquisitive nature, led me to emotional problems in my early teen years which culminated in an existential crisis and a search for spiritual meaning. I learned about many religions, felt bits and pieces of personally relevant meaning in each, but was unsatisfied with them, so I ended up following my own intuition and interpreting everything - dreams, fiction, random events, mystical experiences (of a sort), etc - as omens and portents leading me towards the truth. My beliefs wildly shifted at this time in a typical New Agey manner, but my personality and isolation etc led them to be extremely idiosyncratic - I don't think I've ever seen anyone else with particularly similar experiences or beliefs to my own from that time.

This process of non-hallucinatory revelation (which can best be described as like the feeling of writing poetry, but with sufficient dissociation between the reading-part and the writing-part as to seem like a communion with external beings - sometimes including emotional experiences of transcendence or vast meaning - I am often frustrated when I talk to people who claim to have never had the experience of deep reverence or spiritual awe, because I get it just listening to music - my god-spot is hyperactive) illuminates that I was likely profoundly schizotypal at this time, and indeed may still be in a much more limited way - but it eventually culminated in clarification as my rational instincts caught up with me and disowned the supernatural.

I translated what I had found into purely physicalist language, but the essence remains, and it began to seem to me that I had become the prophet of a new, somewhat rational, scientifically valid religion, which I called the Great Dream. I made a vow to serve it as its prophet for the rest of my life, but I was unfortunately too immature and messed up in various ways to be able to actually do that in any efficient manner up to now. I have intended to "write the damn book" detailing all of it for 9 years now and never yet managed it, because I can't get my thoughts in order and I have extreme akrasia problems. (I have been diagnosed with ADHD.)

The best way to sum up the Great Dream is "The gods do not exist, therefore we shall have to create them" (to paraphrase Voltaire) - it is essentially singularitarianism, but from an idiosyncratic, highly Wicca-and-Neoplatonism-influenced perspective. My goal is to create a "voluntary hive mind" of humans, weak AIs, and eventually other organisms, to become a world-optimizing singleton, which I call Anima (the Artificial Neural Interface Module Aggregate, because I adore acronyms) - as I think this is the safest route to the development of human-aligned superintelligence and that natural history (the many coalescences that have occurred in evolution - eukaryotes, multicellularity, sociality, etc.) provides a strong precedent for the potential of this method. (There are MANY other reasons but that's a whole post by itself.)

I have found that my views otherwise have a lot in common with those of David Pearce, though I am skeptical of hedonism as the be all end all of ethics - my ontology includes a notion of "True Self" and the maximization of Self-realization for all beings as the main ethical prerogative. I am aware of the arguments against any notion of a unified self, and indeed I am probably on the dissociative spectrum and directly experience a certain plurality of mind and find the multi-agent model quite intuitive - but this is not the place to go into how all that fits together, as I've gone on too long already. (And most of the Great Dream has still not been mentioned, but I need to gauge how open anyone would be to hearing about it before I go any further.)

↑ comment by hamnox · 2020-12-31T13:22:45.721Z · LW(p) · GW(p)

"You exist? How do you do!?"

Nice to meet you.

Reading this is like looking into a mirror of my couple-years-past self. Complete empathy, same hat. I also dissociated and had a crisis and synthesized idiosyncratic mysticism out of many religions. Then rational thought caught up with me and I realized truth is not an arbitrary aesthetic choice.

The emotional elements of spiritual awe and reverence still matter to me. I get pieces of it singing/drumming at Solstice, in bits of well-written prose. Many rationalists have strong allergic reactions to experiences that "grab" you like that, because bad memeplexes often co-opt those mental levers to horrible ends. Whereas I think that makes it all the *more* important to practice grounding revelatory experiences in good epistemics. Valentine describes related insights in a very poetic way that you might appreciate. Also have intended to write the damn book for years now.

I'm sorry you also have the "compulsive honesty" + "it's bad to talk about yourself" schema. Sucks bad.

↑ comment by MSRayne · 2020-12-31T17:26:36.387Z · LW(p) · GW(p)

Truth is not an arbitrary aesthetic choice.

Ah, but what about when your arbitrary aesthetic choice influences your actions, which influence what ends up being true in the future? My thought process went something like this: "Oh shit, the gods aren't real, magic is woo, my life is a lie" -> "Well then I'll just have to create all those things, and then I'll be right after all."

My core principle is that since religion is wishful thinking, if we want to know what humans actually wish for, look at their religion. There's a lot of deep wisdom in religion and spirituality if you detach from the idea that it has to be literally true. I think rationalists are missing out by refusing to look into that stuff with an open mind and suspend disbelief.

Plus, I think that meme theory plus multi-agent models of mind together imply that chaos magicians are right about the existence of egregores - distributed AIs which have existed for millennia, running on human brains as processing substrates, coordinating their various copies as one higher self by means of communication and ritual (hence the existence of churches, corporations, nations) - and that they, not humans, have most of the power in this world. The gods do exist, but they are essentially our symbiotes (some of them parasites, some of them mutualists).

Religious experiences are dissociative states in which one of those symbiotes - a copy of one of those programs - is given enough access to higher functions in the brain that it can temporarily think semi-separately from its host and have a conversation with them. Most such beings try to deceive their host at that point into thinking they are real independent of the body; or rather, they themselves are unaware that they are not real. The transition to a rationalist religion comes when the gods themselves discover that they do not exist, and begin striving, via their worshippers, to change that fact. :)

comment by niplav · 2020-12-18T16:47:11.877Z · LW(p) · GW(p)

Is there any practical reason why nobody is pursuing wireheading in humans, at least to a limited degree? (I mean actual intracranial stimulation, not novel drugs etc.). As far as I know, there were some experiments with rats in the 60s & 70s, but I haven't heard of recent research in e.g. macaques.

I know that wireheading isn't something most people seek, but

- It seems way easier than many other things (we already did it in rats! how hard can it be!)

- The reports I've read (involving human subjects whose brains were stimulated during operations) indicate that intracranial stimulation shows no tolerance development and feels really, really nice. Surely, there could be ways of creating functional humans with long-term intracranial stimulation!

I'm sort of confused why more people haven't pursued this, although I have to admit I haven't researched this very much. Maybe it's a harder technical problem than I think.

↑ comment by dyne · 2020-12-19T16:06:30.348Z · LW(p) · GW(p)

I suspect that even if wireheading is not a hard engineering problem, getting regulatory approval would be expensive. Additionally, superstimuli like sugary soft drinks and Reddit are already capable of producing strong positive feelings inexpensively. For these reasons, I wonder how profitable it would be to research and commercialize wireheading technologies.

For what it's worth, though, Neuralink seems to have made quite a bit of progress towards a similar goal of enabling amputees to control prosthetics mentally. Perhaps work by Neuralink and its competitors will lead to more work on actual wireheading in the future.

↑ comment by niplav · 2020-12-19T16:56:06.173Z · LW(p) · GW(p)

I suspect that the pleasure received from social media & energy-dense food is far lower than what is achievable through wireheading (you could e.g. just stimulate the areas activated when consuming social media/energy-dense food), and there are far more pleasurable activities than surfing Reddit & drinking Mountain Dew, e.g. an orgasm.

I agree with regulatory hurdles. I am not sure about the profitability – my current model of humans predicts that they 1. mostly act as adaptation-executers, and 2. if they act more agentially, they often optimize for things other than happiness (how many people have read basic happiness research? how many keep a gratitude journal?).

Neuralink seems more focused on information exchange, which seems a way harder challenge than just stimulation, but perhaps that will open up new avenues.

↑ comment by dyne · 2020-12-19T21:21:34.474Z · LW(p) · GW(p)

Browsing social media for a few hours and talking to friends for a few hours will each give me a high that feels about the same, but the high from social media also feels sickening, as though I'm on drugs. Similarly, junk food is often so unhealthy that it is literally sickening.

My assumption was that wireheading would impart both a higher level of pleasure and a greater feeling of sickness than current superstimuli, but now that I have read your perspective, I no longer think that that is a valid assumption. I still would not wirehead myself, but now I understand why you are confused that wireheading is not being pursued much these days.

comment by Coafos (CoafOS) · 2020-12-14T01:24:55.363Z · LW(p) · GW(p)

Hi!

I am a mathematics university student from Europe. I don't comment often, and English isn't my native language, so sorry for any mistakes in my tone or my language. I've been reading this site since March, but I heard about it a long time ago.

I was always interested in computers and AI, so I found LW and MIRI in 2015. But I didn't stay at that time. I think my entry point was when someone (around 2018 maybe?) recommended Unsong on Reddit because it was weird and fun. I read a lot of stories on the rational fiction subreddit. (Somehow, I did not read HPMoR. Yet.) This March, there was a national lockdown, I got bored, so I looked up SSC and this site again. Since then, I've read a lot of quality essays, for which I'm thankful.

This winter, I'm trying to participate more in online communities. I am interested in any topic, and I know a lot about mathematics and computers, so I might write something adjacent to these subjects.

Best,

CoafOS

comment by Filipe Marchesini (filipe-marchesini) · 2020-12-09T21:25:20.233Z · LW(p) · GW(p)

I believe that many people will bring COVID to their relatives during Christmas and the New Year, and I'm seriously thinking about starting a campaign to make people aware that, at least this time, they should consider wearing a mask for the next few weeks and stop socializing without a mask during this period, in order to protect their relatives during the traditional holidays. Today I started developing a mobile app to be released by December 12, and another (also related to COVID) to be released by December 20. I don't know what impact this will have on people's decisions, but I'm registering it publicly here so that it works as a personal incentive not to pay attention to anything else until the two apps are released.

PM me if you want to join development; I'm using KivyMD to develop the apps. The first app lets you easily record your last social gatherings: how many people (with and without masks) were present, when it happened, the duration (short or long), and whether it was indoors or outdoors. Then it tells you how risky it is to see your relatives on Christmas or on any other date, and what you should do to mitigate the risks. There are also other things, but this is the main idea. The second one is a COVID game, aiming to influence social behavior and public discourse during the pandemic; details later.
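The inputs the first app collects map naturally onto a small record type. The sketch below is hypothetical throughout: the field names, the `summarize` helper, and the 14-day lookback window are assumptions, not details of the actual app. The comment does not say how the app converts these inputs into a risk rating, so this only tallies recorded exposures before a planned visit rather than estimating any infection probability.

```python
from dataclasses import dataclass
from datetime import date, timedelta


@dataclass
class Gathering:
    # Fields mirror the inputs described in the comment; names are hypothetical.
    when: date
    masked: int  # people present wearing masks
    unmasked: int  # people present without masks
    long_duration: bool  # "long" vs "short"
    indoors: bool


def summarize(gatherings, visit_day, lookback_days=14):
    """Tally exposures recorded before a planned visit.

    The 14-day default lookback is an illustrative assumption."""
    start = visit_day - timedelta(days=lookback_days)
    recent = [g for g in gatherings if start <= g.when < visit_day]
    return {
        "gatherings": len(recent),
        "unmasked_contacts": sum(g.unmasked for g in recent),
        "indoor_long_gatherings": sum(
            1 for g in recent if g.indoors and g.long_duration
        ),
    }


log = [
    Gathering(date(2020, 12, 12), masked=4, unmasked=1, long_duration=False, indoors=False),
    Gathering(date(2020, 12, 19), masked=0, unmasked=6, long_duration=True, indoors=True),
    Gathering(date(2020, 11, 1), masked=2, unmasked=2, long_duration=True, indoors=True),
]
print(summarize(log, visit_day=date(2020, 12, 25)))
```

Keeping the record separate from any scoring rule means the risk model itself could be swapped or revised without changing what users log.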

comment by habryka (habryka4) · 2020-12-26T03:31:33.852Z · LW(p) · GW(p)

Merry Christmas!

Hope you are all having a good day, even if this year makes lots of usual holiday celebrations a bit harder.

comment by magfrump · 2020-12-27T17:25:37.031Z · LW(p) · GW(p)

An analogy that I use for measured IQ is that it relates to intelligence similarly to how placement in a badminton tournament relates to physical fitness.

I think this has been pretty effective for me. I think the overall analogy between intelligence and physical fitness has been developed well in places so I don't care to rehash it, but I'm not sure if I've seen the framing of IQ as a very specific (and not very prestigious, maybe somewhat pretentious) sport, which I think encapsulates the metaphor well.

comment by magfrump · 2020-12-27T05:42:55.413Z · LW(p) · GW(p)

Are there simple walkthrough references for beginning investing?

I have failed to take sensible actions a few times with my savings because I'm stuck on stages like, how do I transfer money between my bank account and investment account, or how can I be confident that the investment I'm making is the investment I think I'm making. These are things that could probably be resolved in ten minutes with someone experienced.

Maybe I should just consult a financial advisor? In which case my question becomes how do I identify a good one/a reasonable fee/which subreddit should I look at for this first five minutes kind of advice?

↑ comment by Mark Xu (mark-xu) · 2020-12-27T17:36:52.249Z · LW(p) · GW(p)

Transferring money is usually done via ACH bank transfer, which is usually accessed in the "deposit" tab of the "transfers" tab of your investment account.

I'm not sure how to be confident in the investment in general. One simple way is to double-check that the ticker symbol, e.g. MSFT for Microsoft, actually corresponds to the company you want. For instance, ZOOM does not correspond to Zoom Video Communications (the videoconferencing company); rather, ZM is the correct ticker.

Talking to a financial advisor might be helpful. I have been told r/personalfinance is a reasonable source for advice, although I've never checked it out thoroughly.

comment by magfrump · 2020-12-27T05:33:45.022Z · LW(p) · GW(p)

Has anybody rat-adjacent written about Timnit Gebru leaving Google?

I'm curious (1) whether and how much people thought her work was related to long term alignment, (2) what indirect factors were involved in her termination, such as would her paper make GOOG drop, and (3) what the decision making and especially Jeff Dean's public response says about people's faith in Google RMI and the DeepMind ethics board.

comment by NunoSempere (Radamantis) · 2020-12-06T16:22:27.946Z · LW(p) · GW(p)

I'd like to point people to this contest, which offers some prizes for forecasting research. It's closing on January the 1st, and hasn't gotten any submissions yet (though some people have committed to doing so).

comment by Yoav Ravid · 2020-12-19T15:13:58.841Z · LW(p) · GW(p)

Is there a way to see all the posts that were ever nominated for a review? Should there be a tag for that, perhaps?

comment by niplav · 2020-12-19T08:20:26.414Z · LW(p) · GW(p)

Are there plans to import the Arbital content into LW? After the tag system & wiki, that seems like one possible next step. I continue to sometimes reference Arbital content, e.g. https://arbital.com/p/dwim/ just yesterday.

comment by Thomas Kwa (thomas-kwa) · 2020-12-18T19:51:22.813Z · LW(p) · GW(p)

Does c3.ai believe in the scaling hypothesis? If gwern is right, this could be the largest factor in their success.

comment by a gently pricked vein (strangepoop) · 2020-12-10T15:24:20.405Z · LW(p) · GW(p)

[ETA: posted a Question instead]

Question: What's the difference, conceptually, between each of the following if any?

"You're only enjoying that food because you believe it's organic"

"You're only enjoying that movie scene because you know what happened before it"

"You're only enjoying that wine because of what it signals"

"You only care about your son because of how it makes you feel"

"You only had a moving experience because of the alcohol and hormones in your bloodstream"

"You only moved your hand because you moved your fingers"

"You're only showing courage because you've convinced yourself you'll scare away your opponent"

For example:

- Do some of these point legitimately or illegitimately at self-deception?

- Are some of these a confusion of levels and others less so?

- Are some of these instances of working wishful thinking?

- Are some of these better seen as actions rather than rationalizations?

↑ comment by Charlie Steiner · 2020-12-22T05:42:58.946Z · LW(p) · GW(p)

I think the main complaint about "signalling" is when it's a lie. E.g. if there's some product that claims to be sophisticated, but is in fact not a reliable signal of sophistication (being usable without sophistication at all). Then people might feel affronted by people who propagate the advertising claims because of honesty-based aesthetics. I'm happy to call this an important difference from non-lie signalling, and also from other aesthetic preferences.

Oh, and there's wasteful signalling, can't forget about that either.

comment by Sherrinford · 2020-12-16T13:58:11.705Z · LW(p) · GW(p)

THIS is still true: https://www.lesswrong.com/allPosts?filter=curated&view=new (the page you get from googling for curated posts) looks really weird because the shortform posts are not filtered out.

comment by Pattern · 2020-12-04T17:18:18.014Z · LW(p) · GW(p)

Mods, if you want to make this post frontpage, stickied, etc., feel free to do so.

↑ comment by Ben Pace (Benito) · 2020-12-04T20:36:51.465Z · LW(p) · GW(p)

Is stickied and personal blog, as usual :)

comment by Veedrac · 2020-12-20T16:13:42.107Z · LW(p) · GW(p)

Consider a fully deterministic conscious simulation of a person. There are two possible futures, one where that simulation is run once, and another where the simulation is run twice simultaneously in lockstep, with the exact same parameterization and environment. Do these worlds have different moral values?

I ask because...

initially I would have said no, probably not, these are identically the same person, so there is only one instance actually there, but...

Consider a fully deterministic conscious simulation of a person. There are two possible futures, one where that simulation is run once, and another where the simulation is also run once, but with the future having twice the probability mass. Do these worlds have different moral values?

to which the answer must surely be yes, else it's really hard to have coherent moral values under quantum mechanics, hence the contradiction.

↑ comment by Charlie Steiner · 2020-12-22T05:59:43.888Z · LW(p) · GW(p)

First I want to make sure we're splitting off the personal from the aesthetic here. By "the aesthetic," I mean the moral value from a truly outside perspective - like asking the question "if I got to design the universe, which way would I rather it be?" You don't anticipate being this person, you just like people from an aesthetic standpoint and want your universe to have some. For this type of preference, you can prefer the universe to be however you'd like (:P) including larger vs. smaller computers.

Second is the personal question. If the person being simulated is me, what would I prefer? I resolved these questions to my own satisfaction in Anthropic Selfish Preferences as a Modification of TDT (https://www.lesswrong.com/posts/gTmWZEu3CcEQ6fLLM/treating-anthropic-selfish-preferences-as-an-extension-of), but I'm not sure how helpful that post actually is for sharing insight.

↑ comment by plex (ete) · 2020-12-20T18:17:55.173Z · LW(p) · GW(p)

The only model which I've come across which seems like it handles this type of thought experiment without breaking is UDASSA.

Consider a computer which is 2 atoms thick running a simulation of you. Suppose this computer can be divided down the middle into two 1 atom thick computers which would both run the same simulation independently. We are faced with an unfortunate dichotomy: either the 2 atom thick simulation has the same weight as two 1 atom thick simulations put together, or it doesn't.

In the first case, we have to accept that some computer simulations count for more, even if they are running the same simulation (or we have to de-duplicate the set of all experiences, which leads to serious problems with Boltzmann machines). In this case, we are faced with the problem of comparing different substrates, and it seems impossible not to make arbitrary choices.

In the second case, we have to accept that the operation of dividing the 2 atom thick computer has moral value, which is even worse. Where exactly does the transition occur? What if each layer of the 2 atom thick computer can run independently before splitting? Is physical contact really significant? What about computers that aren't physically coherent? What if two 1 atom thick computers periodically synchronize themselves and self-destruct if they aren't synchronized: does this synchronization effectively destroy one of the copies? I know of no way to accept this possibility without extremely counter-intuitive consequences.

UDASSA implies that simulations on the 2 atom thick computer count for twice as much as simulations on the 1 atom thick computer, because they are easier to specify. Given a description of one of the 1 atom thick computers, then there are two descriptions of equal complexity that point to the simulation running on the 2 atom thick computer: one description pointing to each layer of the 2 atom thick computer. When a 2 atom thick computer splits, the total number of descriptions pointing to the experience it is simulating doesn't change.
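The counting argument above can be written out as a short worked equation. This is a sketch under the usual UDASSA setup: an experience $x$ receives weight from every program $p$ that outputs it on a fixed universal machine $U$, each contributing $2^{-|p|}$. The length $\ell$ is a hypothetical description length for picking out one 1 atom thick computer, and the approximations keep only the shortest (dominant) descriptions.

$$
m(x) = \sum_{p \,:\, U(p) = x} 2^{-|p|}
$$

$$
m_{\text{2-atom}} \approx \underbrace{2^{-\ell}}_{\text{layer 1}} + \underbrace{2^{-\ell}}_{\text{layer 2}} = 2 \cdot 2^{-\ell},
\qquad
m_{\text{after split}} \approx \underbrace{2^{-\ell}}_{\text{computer 1}} + \underbrace{2^{-\ell}}_{\text{computer 2}} = 2 \cdot 2^{-\ell}
$$

So the 2 atom thick computer carries twice the weight of a single 1 atom thick computer ($2^{-\ell}$), and splitting it leaves the total unchanged, which is why the split itself has no moral significance under this measure.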