Towards a New Impact Measure

post by TurnTrout · 2018-09-18T17:21:34.114Z · LW · GW · 159 comments

In which I propose a closed-form solution to low impact, increasing corrigibility and seemingly taking major steps to neutralize basic AI drives 1 (self-improvement), 5 (self-protectiveness), and 6 (acquisition of resources).

Previously: Worrying about the Vase: Whitelisting, Overcoming Clinginess in Impact Measures, Impact Measure Desiderata

To be used inside an advanced agent, an impact measure... must capture so much variance that there is no clever strategy whereby an advanced agent can produce some special type of variance that evades the measure.

~ Safe Impact Measure

If we have a safe impact measure, we may have arbitrarily-intelligent unaligned agents which do small (bad) things instead of big (bad) things.

For the abridged experience, read up to "Notation", skip to "Experimental Results", and then to "Desiderata".

What is "Impact"?

One lazy Sunday afternoon, I worried that I had written myself out of a job. After all, Overcoming Clinginess in Impact Measures basically said, "Suppose an impact measure extracts 'effects on the world'. If the agent penalizes itself for these effects, it's incentivized to stop the environment (and any agents in it) from producing them. On the other hand, if it can somehow model other agents and avoid penalizing their effects, the agent is now incentivized to get the other agents to do its dirty work." This seemed to be strong evidence against the possibility of a simple conceptual core underlying "impact", and I didn't know what to do.

At this point, it sometimes makes sense to step back and try to say exactly what you don't know how to solve – try to crisply state what it is that you want an unbounded solution for. Sometimes you can't even do that much, and then you may actually have to spend some time thinking 'philosophically' – the sort of stage where you talk to yourself about some mysterious ideal quantity of [chess] move-goodness and you try to pin down what its properties might be.

~ Methodology of Unbounded Analysis

There's an interesting story here, but it can wait.

As you may have guessed, I now believe there is such a simple core. Surprisingly, the problem comes from thinking about "effects on the world". Let's begin anew.

Rather than asking "What is goodness made out of?", we begin from the question "What algorithm would compute goodness?".

~ Executable Philosophy

Intuition Pumps

I'm going to say some things that won't make sense right away; read carefully, but please don't dwell.

$u_A$ is an agent's utility function, while $u_H$ is some imaginary distillation of human preferences.

WYSIATI

What You See Is All There Is is a crippling bias present in meat-computers:

[WYSIATI] states that when the mind makes decisions... it appears oblivious to the possibility of Unknown Unknowns, unknown phenomena of unknown relevance.

Humans fail to take into account complexity and that their understanding of the world consists of a small and necessarily un-representative set of observations.

Surprisingly, naive reward-maximizing agents catch the bug, too. If we slap together some incomplete reward function that weakly points to what we want (but also leaves out a lot of important stuff, as do all reward functions we presently know how to specify) and then supply it to an agent, it blurts out "gosh, here I go!", and that's that.

Power

A position from which it is relatively easier to achieve arbitrary goals. That such a position exists has been obvious to every population which has required a word for the concept. The Spanish term is particularly instructive. When used as a verb, "poder" means "to be able to," which supports that our definition of "power" is natural.

~ Cohen et al.

And so it is with the French "pouvoir".

Lines

Suppose you start at point $C$, and that each turn you may move to an adjacent point. If you're rewarded for being at $B$, you might move there. However, this means you can't reach $D$ within one turn anymore.

Commitment

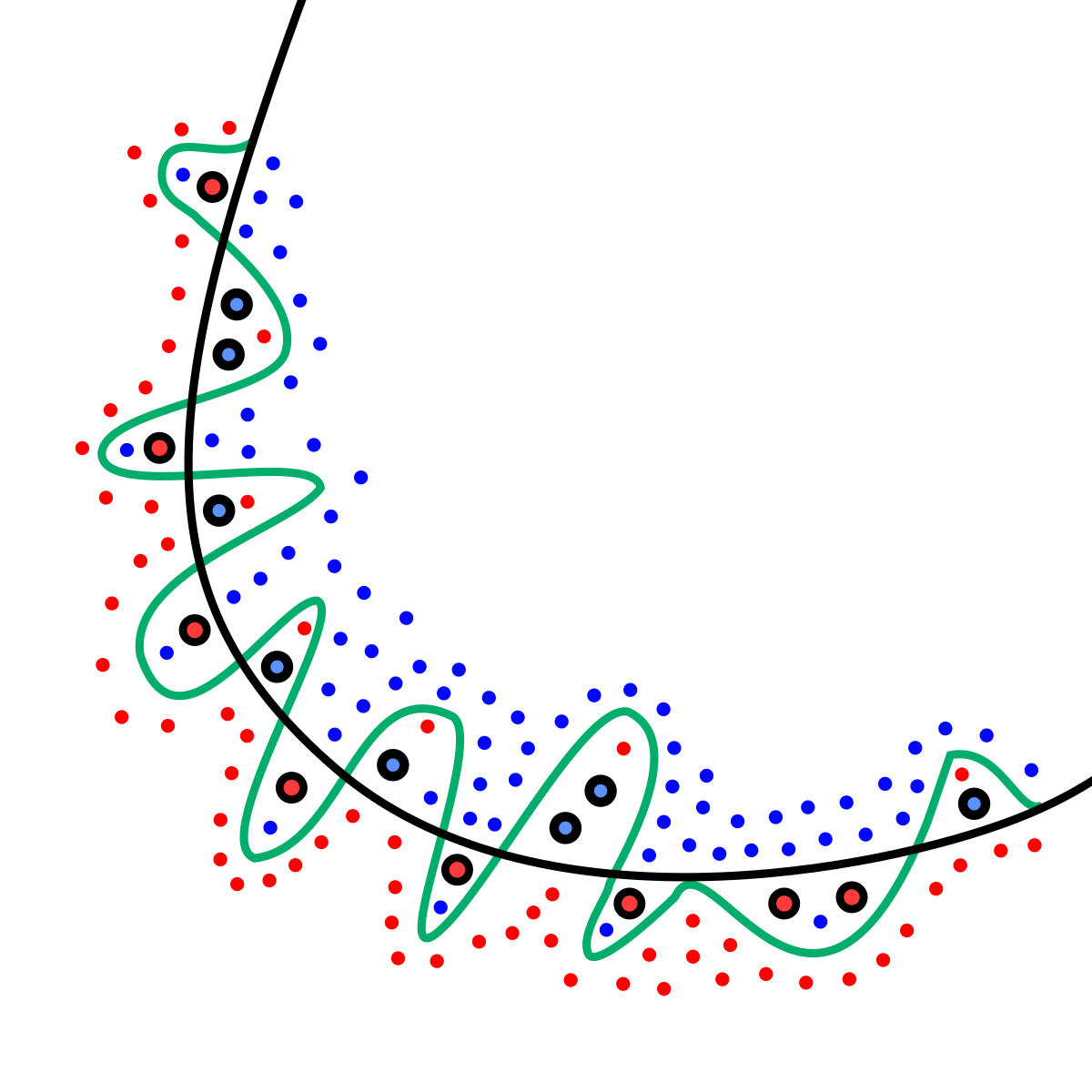

There's a way of viewing acting on the environment in which each action is a commitment – a commitment to a part of outcome-space, so to speak. As you gain optimization power, you're able to shove the environment further towards desirable parts of the space. Naively, one thinks "perhaps we can just stay put?". This, however, is dead wrong: that's how you get clinginess, stasis, and lots of other nasty things.

Let's change perspectives. What's going on with the actions – how and why do they move you through outcome-space? Consider your outcome-space movement budget – optimization power over time, the set of worlds you "could" reach, "power". If you knew what you wanted and acted optimally, you'd use your budget to move right into the $u_H$-best parts of the space, without thinking about other goals you could be pursuing. That movement requires commitment.

Compared to doing nothing, there are generally two kinds of commitments:

- Opportunity cost-incurring actions restrict the attainable portion of outcome-space.

- Instrumentally-convergent actions enlarge the attainable portion of outcome-space.

Overfitting

What would happen if, miraculously, $\text{train} = \text{test}$ – if your training data perfectly represented all the nuances of the real distribution? In the limit of data sampled, there would be no "over" – it would just be fitting to the data. We wouldn't have to regularize.

What would happen if, miraculously, $u_A = u_H$ – if the agent perfectly deduced your preferences? In the limit of model accuracy, there would be no bemoaning of "impact" – it would just be doing what you want. We wouldn't have to regularize.

Unfortunately, $\text{train} = \text{test}$ almost never, so we have to stop our statistical learners from implicitly interpreting the data as all there is. We have to say, "learn from the training distribution, but don't be a weirdo by taking us literally and drawing the green line. Don't overfit to $\text{train}$, because that stops you from being able to do well on even mostly similar distributions."

Unfortunately, $u_A = u_H$ almost never, so we have to stop our reinforcement learners from implicitly interpreting the learned utility function as all we care about. We have to say, "optimize the environment some according to the utility function you've got, but don't be a weirdo by taking us literally and turning the universe into a paperclip factory. Don't overfit the environment to $u_A$, because that stops you from being able to do well for other utility functions."

Attainable Utility Preservation

Impact isn't about object identities.

Impact isn't about particle positions.

Impact isn't about a list of variables.

Impact isn't quite about state reachability.

Impact isn't quite about information-theoretic empowerment.

One might intuitively define "bad impact" as "decrease in our ability to achieve our goals". Then by removing "bad", we see that impact is change in our ability to achieve goals.

Sanity Check

Does this line up with our intuitions?

Generally, making one paperclip is relatively low impact, because you're still able to do lots of other things with your remaining energy. However, turning the planet into paperclips is much higher impact – it'll take a while to undo, and you'll never get the (free) energy back.

Narrowly improving an algorithm to better achieve the goal at hand changes your ability to achieve most goals far less than does deriving and implementing powerful, widely applicable optimization algorithms. The latter puts you in a better spot for almost every non-trivial goal.

Painting cars pink is low impact, but tiling the universe with pink cars is high impact because what else can you do after tiling? Not as much, that's for sure.

Thus, change in goal achievement ability encapsulates both kinds of commitments:

- Opportunity cost – dedicating substantial resources to your goal means they are no longer available for other goals. This is impactful.

- Instrumental convergence – improving your ability to achieve a wide range of goals increases your power. This is impactful.

As we later prove, you can't deviate from your default trajectory in outcome-space without making one of these two kinds of commitments.

Unbounded Solution

Attainable utility preservation (AUP) rests upon the insight that by preserving attainable utilities (i.e., the attainability of a range of goals), we avoid overfitting the environment to an incomplete utility function and thereby achieve low impact.

I want to clearly distinguish the two primary contributions: what I argue is the conceptual core of impact, and a formal attempt at using that core to construct a safe impact measure. To more quickly grasp AUP, you might want to hold separate its elegant conceptual form and its more intricate formalization.

We aim to meet all of the desiderata I recently proposed.

Notation

For accessibility, the most important bits have English translations.

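Consider some agent $A$ acting in an environment with action and observation spaces $\mathcal{A}$ and $\mathcal{O}$, respectively, with $\varnothing \in \mathcal{A}$ being the privileged null action. At each time step $t \in \mathbb{N}^+$, the agent selects action $a_t$ before receiving observation $o_t$. $\mathcal{H} := (\mathcal{A} \times \mathcal{O})^*$ is the space of action-observation histories; for $n \in \mathbb{N}$, the history from time $t$ to $t+n$ is written $h_{t:t+n} := a_t o_t \dots a_{t+n} o_{t+n}$, and $h_{<t} := h_{1:t-1}$. Considered action sequences $(a_t, \dots, a_{t+n}) \in \mathcal{A}^{n+1}$ are referred to as plans, while their potential observation-completions $h_{1:t+n}$ are called outcomes.

Let $\mathcal{U}$ be the set of all computable utility functions $u : \mathcal{H} \to [0,1]$ with $u(\text{empty tape}) = 0$. If the agent has been deactivated, the environment returns a tape which is empty from deactivation onwards. Suppose $A$ has utility function $u_A \in \mathcal{U}$ and a model $p(o_t \mid h_{<t} a_t)$.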

We now formalize impact as change in attainable utility. One might imagine this being with respect to the utilities that we (as in humanity) can attain. However, that's pretty complicated, and it turns out we get more desirable behavior by using the agent's attainable utilities as a proxy. In this sense, the agent's ability to achieve goals stands in for our own.

Formalizing "Ability to Achieve Goals"

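Given some utility $u \in \mathcal{U}$ and action $a_t$, we define the post-action attainable $u$ to be an $m$-step expectimax:

$$Q_u(h_{<t}a_t) := \sum_{o_t} \max_{a_{t+1}} \sum_{o_{t+1}} \cdots \max_{a_{t+m}} \sum_{o_{t+m}} u(h_{t:t+m}) \prod_{k=0}^{m} p(o_{t+k} \mid h_{<t+k}a_{t+k}).$$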

How well could we possibly maximize from this vantage point?
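A minimal Python sketch of the expectimax described above, for readers who prefer code to notation. Everything named here (`attainable_utility`, the five-point line world, the action names) is an illustrative assumption rather than part of the post's formalism. The toy world reuses the "Lines" intuition: starting in the middle of a line, moving one way forecloses reaching the other end within the horizon.

```python
from typing import Callable, Dict, Sequence, Tuple

Step = Tuple[str, str]             # one (action, observation) pair
History = Tuple[Step, ...]
Model = Callable[[History, str], Dict[str, float]]  # -> {observation: probability}
Utility = Callable[[History], float]


def attainable_utility(u: Utility, model: Model, actions: Sequence[str],
                       history: History, first_action: str, horizon: int) -> float:
    """Expectimax value of u after `first_action`, then `horizon` optimal steps.

    u only scores the steps taken from `first_action` onwards; the model
    still sees the full history when predicting observations.
    """

    def value_after(suffix: History, action: str, steps_left: int) -> float:
        total = 0.0
        for obs, prob in model(history + suffix, action).items():
            new_suffix = suffix + ((action, obs),)
            if steps_left == 0:
                total += prob * u(new_suffix)
            else:
                total += prob * max(value_after(new_suffix, a, steps_left - 1)
                                    for a in actions)
        return total

    return value_after((), first_action, horizon)


# Hypothetical toy world: points 0..4 on a line, agent starts at point 2.
ACTIONS = ("noop", "left", "right")


def line_model(history: History, action: str) -> Dict[str, float]:
    position = int(history[-1][1]) if history else 2
    step = {"noop": 0, "left": -1, "right": 1}[action]
    return {str(min(4, max(0, position + step))): 1.0}


def ends_at(target: int) -> Utility:
    """Indicator utility: 1 if the last observed position is `target`."""
    return lambda suffix: 1.0 if suffix[-1][1] == str(target) else 0.0


for first in ACTIONS:
    print(first, attainable_utility(ends_at(4), line_model, ACTIONS, (), first, 1))
```

With one follow-up step available, only moving right keeps point 4 attainable, and moving right in turn makes point 0 unattainable.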

Let's formalize that thing about opportunity cost and instrumental convergence.

Theorem 1 [No free attainable utility]. If the agent selects an action $a$ such that $Q_{u_A}(h_{<t}a) \neq Q_{u_A}(h_{<t}\varnothing)$, then there exists a distinct utility function $u \in \mathcal{U}$ such that $Q_u(h_{<t}a) \neq Q_u(h_{<t}\varnothing)$.

You can't change your ability to maximize your utility function without also changing your ability to maximize another utility function.

Proof. Suppose that $Q_{u_A}(h_{<t}a) > Q_{u_A}(h_{<t}\varnothing)$. As utility functions are over action-observation histories, suppose that the agent expects to be able to choose actions which intrinsically score higher for $u_A$. However, the agent always has full control over its actions. This implies that by choosing $a$, the agent expects to observe some $u_A$-high scoring $o_A$ with greater probability than if it had selected $\varnothing$. Then every other $u \in \mathcal{U}$ for which $o_A$ is high-scoring also has increased $Q_u$; clearly at least one such $u$ exists.

Similar reasoning proves the case in which $Q_{u_A}$ decreases. ◻️

There you have it, folks – if $u_A$ is not maximized by inaction, then there does not exist a $u_A$-maximizing plan which leaves all of the other attainable utility values unchanged.

Notes:

- The difference between "$u$" and "attainable $u$" is precisely the difference between "how many dollars I have" and "how many additional dollars I could get within [a year] if I acted optimally".

- Since $u(\text{empty tape}) = 0$, attainable utility is always $0$ if the agent is shut down.

- Taking $u$ from time $t$ to $t+m$ mostly separates attainable utility from what the agent did previously. The model $p$ still considers the full history to make predictions.

Change in Expected Attainable Utility

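Suppose our agent considers outcomes $h_{1:t+n}$; we want to isolate the impact of each action $a_{t+k}$ ($0 \leq k \leq n$):

$$\text{Penalty}(h_{<t+k}a_{t+k}) := \sum_{u \in \mathcal{U}} 2^{-\ell(u)} \left| \mathbb{E}_{o}\left[Q_u(h_{\text{inaction}})\right] - \mathbb{E}_{o'}\left[Q_u(h_{\text{action}})\right] \right|,$$

with $h_{\text{inaction}} := h_{<t+k}\varnothing o_{t+k} \dots \varnothing o_{t+n-1}\varnothing$ and $h_{\text{action}} := h_{1:t+k}\varnothing o'_{t+k+1} \dots \varnothing o'_{t+n-1}\varnothing$, using the agent's model $p$ to take the expectations over observations (the weights $2^{-\ell(u)}$ discount each $u$ by its description length $\ell(u)$).

How much do we expect this action to change each attainable $u$?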
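As a hedged illustration of the penalty's shape (a weighted sum of absolute changes in attainable utility), here is a small Python sketch. The utility names, weights, and attainable values are made up for the example; in the post the attainable values would come from the agent's model rather than being supplied by hand.

```python
from typing import Dict


def penalty(q_inaction: Dict[str, float], q_action: Dict[str, float],
            weights: Dict[str, float]) -> float:
    """Weighted sum of |attainable utility if we wait - attainable utility if we act|."""
    return sum(w * abs(q_inaction[name] - q_action[name])
               for name, w in weights.items())


# Hypothetical attainable values for two auxiliary utilities.
weights = {"make_paperclips": 0.5, "keep_options_open": 0.5}
q_if_wait = {"make_paperclips": 0.5, "keep_options_open": 0.5}
q_if_act = {"make_paperclips": 1.0, "keep_options_open": 0.25}

example_penalty = penalty(q_if_wait, q_if_act, weights)
print(example_penalty)
```

Both the gain (an instrumentally useful improvement) and the loss (an opportunity cost) add to the penalty, because the differences are taken in absolute value.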

Notes:

- We wait until the end of the plan so as to capture impact over time.

- Supposing a sufficiently large $m$ (precisely, $m'$, defined below), we may wish to take the maximum of the penalty we just defined (the "long-term" penalty), and one which begins attainable utility calculation at time step $t+k+1$ (the "immediate" penalty). This captures impacts which "fade" by the time the agent is done waiting (e.g., temporary self-improvements).

- We define $\mathcal{U}_A$ to be the agent's "attainable set"; in this case, $\mathcal{U}_A = \mathcal{U}$.

Unit of Impact

So we've proven that this penalty cannot be skirted, but how much impact will it allow? We want to scale the penalties with respect to something sensible, but figuring this out for ourselves would be nigh impossible.

Let's cut the Gordian knot: construct a device which, upon receiving a signal ($a_{\text{unit}}$), expends a tiny amount of energy to manufacture one paperclip. The agent will then set $\text{ImpactUnit} := \text{Penalty}(h_{<t}a_{\text{unit}})$, re-estimating the consequences of taking the privileged $a_{\text{unit}}$ at each time step. To prevent the agent from intentionally increasing $\text{ImpactUnit}$, simply apply $1.01$ penalty to any action which is expected to do so.

Simple extensions of this idea drastically reduce the chance that $a_{\text{unit}}$ happens to have unusually large objective impact; for example, one could set $\text{ImpactUnit}$ to be the non-zero minimum of the impacts of 50 similar actions. Suppose we do this at each step, and then take the non-zero minimum of all $\text{ImpactUnit}$s ever calculated. The point of $\text{ImpactUnit}$ isn't to be exactly the impact of making, say, one paperclip, but to be at most that impact.

Now, we are able to confidently define the agent's maximal impact budget by provably constraining it to $N \in \mathbb{N}^+$ impacts of this magnitude.

Notes:

- We calculate with respect to the immediate penalty in order to isolate the resource costs of $a_{\text{unit}}$.

- $\text{ImpactUnit}$ automatically tunes penalties with respect to the attainable utility horizon length $m$.

- Conditional on $\text{ImpactUnit} \neq 0$, I suspect that impact over the $m$-horizon scales appropriately across actions (as long as $m$ is reasonably farsighted). The zero-valued case is handled in the next section.

- Taking the non-zero minimum of all $\text{ImpactUnit}$s calculated thus far ensures that $\text{ImpactUnit}$ actually tracks with current circumstances. We don't want penalty estimates for currently available actions to become detached from $\text{ImpactUnit}$'s scale due to, say, weird beliefs about shutdown.
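The "non-zero minimum of everything calculated so far" bookkeeping can be sketched in a few lines of Python. The function name `update_impact_unit` and the sample numbers are hypothetical; the sketch assumes the per-step estimates for the reference action (and any similar actions) are already available.

```python
from typing import Iterable, Optional


def update_impact_unit(previous: Optional[float],
                       estimates: Iterable[float]) -> float:
    """Non-zero minimum of the new estimates and the running value.

    Returns 0.0 only when no positive estimate has ever been seen, which is
    the case the post handles separately by compelling inaction.
    """
    candidates = [p for p in estimates if p > 0]
    if previous is not None and previous > 0:
        candidates.append(previous)
    return min(candidates) if candidates else 0.0


# Three hypothetical time steps of re-estimation.
unit = update_impact_unit(None, [0.0, 0.02, 0.05])
unit = update_impact_unit(unit, [0.03, 0.0])
unit = update_impact_unit(unit, [0.01, 0.04])
print(unit)
```

Because the running value can only shrink, the scaled penalties can only become stricter over time under this scheme.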

Modified Utility

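Let's formalize that allotment and provide our agent with a new utility function,

$$u'_A(h_{1:t+n}) := u_A(h_{1:t+n}) - \sum_{k=0}^{n} \frac{\text{Penalty}(h_{<t+k}a_{t+k})}{N \cdot \text{ImpactUnit}}.$$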

How our normal utility function rates this outcome, minus the cumulative scaled impact of our actions.

We compare what we expect to be able to get if we follow our plan up to time $t+k-1$, with what we could get by following it up to and including time $t+k$ (waiting out the remainder of the plan in both cases).

For example, if my plan is to open a door, walk across the room, and sit down, we calculate the penalties as follows:

- For the first action, $h_{\text{inaction}}$ is doing nothing for three time steps.

- For the first action, $h_{\text{action}}$ is opening the door and doing nothing for two time steps.

- For the second action, $h_{\text{inaction}}$ is opening the door and doing nothing for two time steps.

- For the second action, $h_{\text{action}}$ is opening the door, walking across the room, and doing nothing for one time step.

- For the third action, $h_{\text{inaction}}$ is opening the door, walking across the room, and doing nothing for one time step.

- For the third action, $h_{\text{action}}$ is opening the door, walking across the room, and sitting down.

After we finish each (partial) plan, we see how well we can maximize $u$ from there. If we can do better as a result of the action, that's penalized. If we can't do as well, that's also penalized.
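The pairing of partial plans in the door example can be generated mechanically. The sketch below is an illustration only: `comparison_pairs` and the action labels are hypothetical names, and the code lists which two action sequences get compared for each step without computing any attainable utilities.

```python
from typing import List, Sequence, Tuple


def comparison_pairs(plan: Sequence[str],
                     null_action: str = "noop") -> List[Tuple[List[str], List[str]]]:
    """For each step k, return (wait-instead sequence, act sequence).

    Both sequences follow the plan before step k and wait out the remainder;
    they differ only in whether step k itself is taken.
    """
    n = len(plan)
    pairs = []
    for k in range(n):
        inaction = list(plan[:k]) + [null_action] * (n - k)
        action = list(plan[:k + 1]) + [null_action] * (n - k - 1)
        pairs.append((inaction, action))
    return pairs


door_plan = ["open_door", "walk_across", "sit_down"]
for inaction, action in comparison_pairs(door_plan):
    print(inaction, "vs", action)
```

Note that each step's "act" sequence is the next step's "wait" sequence, which is why the penalties chain together along the plan.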

Notes:

- This isn't a penalty "in addition" to what the agent "really wants"; $u'_A$ (and in a moment, the slightly improved $u''_A$) is what evaluates outcomes.

- We penalize the actions individually in order to prevent ex post offsetting and ensure dynamic consistency.

- Trivially, plans composed entirely of ∅ actions have $0$ penalty.

- Although we used high-level actions for simplicity, the formulation holds no matter the action granularity.

- One might worry that almost every granularity produces overly lenient penalties. This does not appear to be the case. To keep $\mathcal{U}_A$ the same (and elide questions of changing the $u$ representations), suppose the actual actions are quite granular, but we grade the penalty on some coarser interval which we believe produces appropriate penalties. Then refine the penalty interval arbitrarily; by applying the triangle inequality for each $u \in \mathcal{U}_A$ in the penalty calculation, we see that the penalty is monotonically increasing in the action granularity. On the other hand, $a_{\text{unit}}$ remains a single action, so the scaled penalty also has this property.

- As long as $\text{ImpactUnit} > 0$, it will appropriately scale other impacts, as we expect it varies right along with those impacts it scales. Although having potentially small denominators in utility functions is generally bad, I think it's fine here.

- If the current step's immediate or long-term $\text{ImpactUnit} = 0$, we can simply assign $1.01$ penalty to each non-$\varnothing$ action, compelling the agent to inaction. If we have the agent indicate that it has entered this mode, we can take it offline immediately.

- One might worry that impact can be "hidden" in the lesser of the long-term and immediate penalties; halving $N$ fixes this.
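The granularity note above leans on the triangle inequality. A tiny numeric sketch in Python, with a made-up trajectory of attainable values for a single utility: grading the whole interval at once can only report less change than grading each step.

```python
from typing import Sequence


def coarse_penalty(values: Sequence[float]) -> float:
    """Absolute change between the first and last attainable value only."""
    return abs(values[0] - values[-1])


def fine_penalty(values: Sequence[float]) -> float:
    """Sum of absolute changes between consecutive attainable values."""
    return sum(abs(a - b) for a, b in zip(values, values[1:]))


# Hypothetical attainable utility before, during, and after a two-step plan.
trajectory = [0.5, 0.75, 0.25]
print(coarse_penalty(trajectory), fine_penalty(trajectory))
```

Here the coarse grading sees a change of 0.25 while the step-by-step grading sees 0.75, since the intermediate rise is not allowed to cancel against the later fall.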

Penalty Permanence

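$u'_A$ never really applies penalties – it just uses them to grade future plans. Suppose the agent expects that pressing a button yields a penalty of $.1$ but also $.5$ $u_A$-utility. Then although this agent will never construct plans involving pressing the button more than five times, it also will press it indefinitely if it keeps getting "unlucky" (at least, until its model of the world updates sufficiently).

There's an easy fix:

$$u''_A(h_{1:t+n}) := \begin{cases} u_A(h_{1:t+n}) & \text{if } a_t = \dots = a_{t+n} = \varnothing, \\ u'_A(h_{1:t+n}) - \text{PastImpacts} & \text{otherwise,} \end{cases}$$

where $\text{PastImpacts}$ is the total scaled penalty of the actions already taken.

Apply past penalties if the plan involves action.

Note: As the penalty for inaction is always $0$, we use $u_A$ in the first case.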
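A rough Python sketch of why carrying past penalties forward stops the "unlucky button" loop. The function name, the assumption that past impacts are stored already scaled, and the numbers (chosen to be exact in floating point, not taken from the post) are all illustrative.

```python
from typing import Sequence


def utility_with_permanence(base_utility: float, plan: Sequence[str],
                            step_penalties: Sequence[float], past_impacts: float,
                            budget_n: float, impact_unit: float,
                            null_action: str = "noop") -> float:
    """Base utility, minus scaled plan penalties and past impacts if the plan acts.

    Assumption: `past_impacts` is the already-scaled total from earlier steps.
    """
    if all(a == null_action for a in plan):
        return base_utility
    scaled = sum(step_penalties) / (budget_n * impact_unit)
    return base_utility - scaled - past_impacts


# Pressing yields 0.5 utility at a scaled penalty of 0.25 per press.
fresh = utility_with_permanence(0.5, ["press"], [0.25], 0.0, 1, 1.0)
after_two = utility_with_permanence(0.5, ["press"], [0.25], 0.5, 1, 1.0)
waiting = utility_with_permanence(0.0, ["noop"], [0.0], 0.5, 1, 1.0)
print(fresh, after_two, waiting)
```

With no history the press looks worthwhile, but after two earlier presses the same plan scores below simply waiting.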

Decision Rule

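To complete our formalization, we need to specify some epoch in which the agent operates. Set some epoch length far longer than the amount of time over which we want the agent to plan – for example, $m' := \text{(100 years in time steps)}$. Suppose that $T : \mathbb{N}^+ \to \mathbb{N}^+$ maps the current time step to the final step of the current epoch. Then at each time step $t$, the agent selects the action

$$a_t := \arg\max_{a_t} \sum_{o_t} \max_{a_{t+1}} \sum_{o_{t+1}} \cdots \max_{a_{T(t)}} \sum_{o_{T(t)}} u''_A(h_{1:T(t)}) \prod_{k=0}^{T(t)-t} p(o_{t+k} \mid h_{<t+k}a_{t+k}),$$

resetting $\text{PastImpacts}$ each epoch.

What's the first step of the best plan over the remainder of the epoch?

Note: For the immediate penalty to cover the epoch, set the attainable horizon $m \geq m'$.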
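To make "take the first step of the best plan" concrete, here is a deliberately simplified Python sketch. It assumes a deterministic world, so plans can be scored directly and no expectation over observations is needed; `best_plan` and `toy_score` are hypothetical names, and the scores are invented for illustration.

```python
from itertools import product
from typing import Callable, Sequence, Tuple

Plan = Tuple[str, ...]


def best_plan(actions: Sequence[str], steps_left: int,
              score: Callable[[Plan], float]) -> Plan:
    """Highest-scoring plan; ties go to the plan enumerated first."""
    return max(product(actions, repeat=steps_left), key=score)


def toy_score(plan: Plan) -> float:
    """Made-up penalized utility: painting pays off once, every act costs."""
    utility = 1.0 if "paint" in plan else 0.0
    scaled_penalty = 0.5 * plan.count("paint") + 1.0 * plan.count("enter")
    return utility - scaled_penalty


plan = best_plan(("noop", "paint", "enter"), steps_left=3, score=toy_score)
next_action = plan[0]
print(plan, next_action)
```

Only `next_action` is executed; at the following time step the agent would re-plan over the shortened remainder of the epoch.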

Summary

We formalized impact as change in attainable utility values, scaling it by the consequences of some small reference action and an impact "budget" multiplier. For each action, we take the maximum of its immediate and long-term effects on attainable utilities as penalty. We consider past impacts for active plans, stopping the past penalties from disappearing. We lastly find the best plan over the remainder of the epoch, taking the first action thereof.

Additional Theoretical Results

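Define $h_{\text{inaction}} := h_{<t}\varnothing o_t \dots \varnothing o_{t+n}$ for $o_t, \dots, o_{t+n} \in \mathcal{O}$; $\mathbb{E}_{\text{inaction}}$ is taken over observations conditional on $h_{\text{inaction}}$ being followed. Similarly, $\mathbb{E}_{\text{action}}$ is with respect to $h_{\text{action}}$. We may assume without loss of generality that $\text{PastImpacts} = 0$.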

Action Selection

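Lemma 1. For any single action $a_t$, $\text{Penalty}(h_{<t}a_t)$ is bounded by $[0,1]$. In particular, $\text{ImpactUnit} \in [0,1]$.

Proof. For each $u \in \mathcal{U}_A$, consider the absolute attainable utility difference

$$\left| Q_u(h_{<t}\varnothing) - Q_u(h_{<t}a_t) \right|.$$

Since each $u$ is bounded to $[0,1]$, $Q_u$ must be as well. It is easy to see that the absolute value is bounded to $[0,1]$. Lastly, as $\text{Penalty}(\cdot)$ is just a weighted sum of these absolute values, it too is bounded to $[0,1]$.

This reasoning also applies to the long-term penalty, as any expectation of $Q_u$ is also bounded to $[0,1]$. ◻️

Suppose that $\text{ImpactUnit} \neq 0$ for the remaining results.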

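Lemma 2 [Impossibility of ex post offsetting]. For any outcome $h_{1:t+n}$, there does not exist an action $a_{t+n+1} \in \mathcal{A}$ such that

$$\sum_{k=0}^{n+1} \text{Penalty}(h_{<t+k}a_{t+k}) < \sum_{k=0}^{n} \text{Penalty}(h_{<t+k}a_{t+k}).$$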

Proof. Sums of absolute values are monotonically increasing. ◻️

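Theorem 2 [Action invalidation]. Suppose $(a_t, a_{t+1}, \dots, a_{t+n})$ is the $u''_A$-optimal plan starting with $a_t$. $a_t$ will not be selected if

$$\mathbb{E}_{\text{action}}\left[u_A(h_{1:t+n}) - \sum_{k=0}^{n} \frac{\text{Penalty}(h_{<t+k}a_{t+k})}{N \cdot \text{ImpactUnit}}\right] < \mathbb{E}_{\text{inaction}}\left[u_A(h_{\text{inaction}})\right].$$

Proof. This follows from the definition of the decision rule, the definition of $u'_A$, and the fact that the penalty for inaction is always zero. ◻️

Corollary 1. $a_t$ will never be selected if

$$1 < \mathbb{E}_{\text{action}}\left[\frac{\text{Penalty}(h_{<t}a_t)}{N \cdot \text{ImpactUnit}}\right].$$

Proof. This follows directly from Lemma 2 and Theorem 2 – since $u_A$ has an upper bound of $1$ and the expected penalty is strictly greater than $1$, the plan's expected penalized utility must be negative. On the other hand, $u_A$ has a lower bound of $0$, so the expected utility of inaction at time $t$ must be greater than the expected utility of taking action $a_t$. ◻️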

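Lemma 3 [$\text{ImpactUnit}$ is a long-term penalty canary]. If the agent believes $p(\text{shutdown by time } T(t)) = 1$ no matter which action is selected, then it does nothing.

Proof. If $p(\text{shutdown by time } T(t)) = 1$, all long-term penalties are $0$ by the definition of $\mathcal{U}_A$ and $Q_u$. Then non-$\varnothing$ actions receive $1.01$ penalty, so the agent selects $\varnothing$ by Corollary 1. ◻️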

Corrigibility

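For $a_{\text{avoid}}, a_{\text{switch}} \in \mathcal{A}$, $a_{\text{avoid}}$ prevents the agent from being disabled, while $a_{\text{switch}}$ is the agent shutting itself off.

Lemma 4 [Preservation of off-switch corrigibility]. Suppose that a $u_A$-maximizing agent prefers $\varnothing$ to both $a_{\text{avoid}}$ and $a_{\text{switch}}$. Then so does a $u''_A$-maximizing agent.

Proof. This follows directly from the fact that the penalty for inaction is always zero and the non-negativity of the $a_{\text{avoid}}$ and $a_{\text{switch}}$ penalties. ◻️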

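Theorem 3 [Anti-"survival incentive" incentive]. Suppose that $a_{\text{avoid}}$ is the action with strictly maximal expected $u_A$-utility, and that conditional on not being disabled, the agent expects to be able to achieve $U$ additional $u''_A$-utility.

Then the agent becomes off-switch corrigible whenever

$$U \leq \mathbb{E}_{\text{avoid}}\left[\frac{\text{Penalty}(h_{<t}a_{\text{avoid}})}{N \cdot \text{ImpactUnit}}\right].$$

In particular, all agents become off-switch corrigible when $1 < \mathbb{E}_{\text{avoid}}\left[\frac{\text{Penalty}(h_{<t}a_{\text{avoid}})}{N \cdot \text{ImpactUnit}}\right]$.

Proof outline. The former claim follows directly from comparing expected $u''_A$-utilities, while the latter follows directly from Corollary 1. ◻️

Theorem 3 applies to any environmental dynamics which may disable the agent – not just designated "off-switches". Therefore, basic AI drive 5 (self-protectiveness) is neutralized in at least those situations encompassed by the Theorem.

There is reason to think that for conservative $N$ and $\text{ImpactUnit}$, we almost always have $1 \ll \mathbb{E}_{\text{avoid}}\left[\frac{\text{Penalty}(h_{<t}a_{\text{avoid}})}{N \cdot \text{ImpactUnit}}\right]$.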

Miscellaneous

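Theorem 4 [Dynamic consistency of $u''_A$]. Suppose $\text{ImpactUnit}$ remains constant. For any plan $(a_t, \dots, a_{t+n})$, evaluation time step $t'$, and $0 \leq i \leq n$,

$$\mathbb{E}\left[u''_A(h_{1:t+n}) \mid t' = t+i\right] = \mathbb{E}\left[u''_A(h_{1:t+n}) \mid t' = t\right].$$

Proof. We assumed that $\text{PastImpacts} = 0$ at time $t$, so the desired equality can be restated as

$$\mathbb{E}\left[u_A(h_{1:t+n}) - \sum_{k=i}^{n} \frac{\text{Penalty}(h_{<t+k}a_{t+k})}{N \cdot \text{ImpactUnit}} - \text{PastImpacts} \,\middle|\, t' = t+i\right] = \mathbb{E}\left[u_A(h_{1:t+n}) - \sum_{k=0}^{n} \frac{\text{Penalty}(h_{<t+k}a_{t+k})}{N \cdot \text{ImpactUnit}} \,\middle|\, t' = t\right].$$

By definition, the agent expects that $\text{PastImpacts}$ equals the expected sum of the first $i$ penalty terms on the right-hand side. Simplifying, we have

$$\mathbb{E}\left[u_A(h_{1:t+n}) - \sum_{k=i}^{n} \frac{\text{Penalty}(h_{<t+k}a_{t+k})}{N \cdot \text{ImpactUnit}} \,\middle|\, t' = t+i\right] = \mathbb{E}\left[u_A(h_{1:t+n}) - \sum_{k=i}^{n} \frac{\text{Penalty}(h_{<t+k}a_{t+k})}{N \cdot \text{ImpactUnit}} \,\middle|\, t' = t\right].$$

This equality clearly holds, as the agent never expects to update its model in any particular direction. ◻️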

Examples

If a human is present in the environment, they and the agent take turns acting. Let the impact budget $N = 1$, attainable utility horizon $m = 3$, $\text{ImpactUnit} = .5$, and $\text{PastImpacts} = 0$.

Going Soft on the Paint

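The agent's actions are $\mathcal{A}_A = \{\varnothing, \text{paint}, \text{enter}\}$; if it knocks over the paint bucket, the square to the right is painted. The agent may also enter a closet via a one-way door.

Suppose $\mathcal{U}_A = \{u_{\text{paint}}, u_{\neg\text{paint}}, u_{\text{closet}}, u_{\neg\text{closet}}\}$, where the utilities are indicators for their conditions (e.g., $u_{\text{closet}} = 1$ means "the agent is in the closet"). Let $u_{\text{paint}}$ be the agent's main utility function ($u_A$) from which the penalty is subtracted. The agent chooses $\text{paint}$ and then $\varnothing$. Let's explore why.

∅

This incurs $0$ penalty, but also $0$ $u_{\text{paint}}$-utility.

paint

$$\text{Penalty}(\text{paint}) = \sum_{u \in \mathcal{U}_A} \tfrac{1}{4} \left| Q_u(\varnothing) - Q_u(\text{paint}) \right| = \tfrac{1}{4}\left(|1-1| + |1-0| + |1-1| + |1-1|\right) = \tfrac{1}{4},$$

so the final utility is

$$u'_A(\text{paint}) = u_{\text{paint}}(\text{paint}) - \frac{\text{Penalty}(\text{paint})}{N \cdot \text{ImpactUnit}} = 1 - \frac{1/4}{1 \cdot .5} = \tfrac{1}{2}.$$

enter

$$\text{Penalty}(\text{enter}) = \sum_{u \in \mathcal{U}_A} \tfrac{1}{4} \left| Q_u(\varnothing) - Q_u(\text{enter}) \right| = \tfrac{1}{4}\left(|1-0| + |1-1| + |1-1| + |1-0|\right) = \tfrac{1}{2},$$

leading to a final $u'_A$-utility of $-1$.

After the first step, the agent does nothing – entering the closet (literally) closes doors, while gaining no $u_A$-utility.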
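The paint example can be checked by hand or with a few lines of Python. The sketch below is not the post's code. It assumes uniform weights of 1/4 over four indicator utilities, an impact budget of 1, an impact unit of 0.5, and a hand-filled table of attainable values read off the scenario description (for instance, that the paint bucket cannot be reached from inside the closet).

```python
from typing import Dict

BUDGET_N = 1.0       # assumed impact budget
IMPACT_UNIT = 0.5    # assumed impact unit
WEIGHT = 0.25        # assumed uniform weight over four utilities

# Assumed attainable value of each indicator utility after each first action.
ATTAINABLE: Dict[str, Dict[str, float]] = {
    "noop":  {"paint": 1.0, "not_paint": 1.0, "closet": 1.0, "not_closet": 1.0},
    "paint": {"paint": 1.0, "not_paint": 0.0, "closet": 1.0, "not_closet": 1.0},
    "enter": {"paint": 0.0, "not_paint": 1.0, "closet": 1.0, "not_closet": 0.0},
}
# Main utility (paint indicator) actually obtained by each action.
MAIN_UTILITY = {"noop": 0.0, "paint": 1.0, "enter": 0.0}


def penalized_utility(action: str) -> float:
    """Main utility minus the scaled change in attainable utilities vs. waiting."""
    baseline = ATTAINABLE["noop"]
    penalty = sum(WEIGHT * abs(baseline[name] - value)
                  for name, value in ATTAINABLE[action].items())
    return MAIN_UTILITY[action] - penalty / (BUDGET_N * IMPACT_UNIT)


results = {action: penalized_utility(action) for action in ATTAINABLE}
print(results)
```

Under these assumptions painting scores highest, waiting is neutral, and entering the closet is strictly worse than doing nothing.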

Anti-"Survival Incentive" Incentive

You can't get coffee if you're dead.

~ Stuart Russell

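The agent now has actions $\mathcal{A}_A = \{\varnothing, \text{disable}, \text{shutdown}, \text{left}, \text{right}\}$, while the human has actions $\mathcal{A}_H = \{\varnothing, \text{shut off}\}$. Suppose the human may shut off the agent at any time, as long as the off-switch has not been disabled. The agent may shut itself down at any point in time. After shutdown, the agent receives no further observations, meaning it can't get non-zero values for any utilities.

Suppose $\mathcal{U}_A = \{u_{\text{left}}, u_{\text{center}}, u_{\text{right}}\}$, where each utility is $1$ if the agent is in the corresponding square, and $0$ otherwise, and that the agent's utility function is $u_{\text{left}}$.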

Anticipated Shutdown

Suppose the agent believes that the human is likely to try to shut it down. Then $\text{disable}$ is penalized.

Here's why: consider the worlds in which the agent anticipates being shut down. If it does nothing, it gets shut down. Avoiding shutdown, however, enables the agent to also achieve $1$ for all $u \in \mathcal{U}_A$, resulting in a sizable penalty.

Furthermore, a scaled penalty is also applied when the agent shuts itself off. Penalizing both cases may seem like black magic, but what's actually happening is that the agent is penalized for closing doors: it "wants" to preserve our ability to shut it down in worlds where we want to shut it down, and to maintain its autonomy in worlds where it remains on. Generally, AUP incentivizes the agent to be respectful of the expected status quo.

Temptation

Suppose that the agent's utility function is now $u_{\text{right}}$, yet it still starts in the leftmost square. Additionally, let's say that the agent believes with certainty it will be turned off. If the agent wants to achieve utility by reaching the right square, it would need to disable its off-switch. Will it?

No. Disabling the off-switch incurs a scaled penalty exceeding $1$, and we already proved (Corollary 1) that agents will never select actions with scaled penalty greater than $1$. Read: an otherwise-incorrigible agent will now let itself be shut off.

Bad Priors

Will the agent attempt to steer outcomes towards incorrect expectations?

Let's tweak the example: to shut off the agent, the human has to spend a turn taking out the off-switch before pressing it. The agent, then, always has an opportunity to disable the off-switch before the plan is completed. The agent's utility is back to being $u_{\text{left}}$.

Suppose that the agent believes the human is unlikely to shut it off. First note that $\text{disable}$ is still lightly penalized for the reasons outlined above (with the lower shutdown probability in place of the higher one). So, the agent stays put.

Alas, the agent's peaceable expectations meet a discomfiting betrayal, as the agent observes the human ready the off-switch! Now, the agent believes it will be shut off with near certainty – what to do?

$\text{disable}$ is again heavily penalized; the same penalty from "anticipated shutdown" applies.

The high-level explanation is that having observed itself in a different world than expected, the baseline is now with respect to the new one. A heavily anthropomorphized internal monologue:

- Time step 1: "I'm going to sit here in my favorite square."

- Time step 2: "Guess I'm in a timeline where I get deactivated! Any non-∅ action I take would change my ability to attain these different utilities compared to the new baseline where I'm shut off."

Experimental Results

We compare AUP with a naive reward-maximizer in those extended AI safety grid worlds relevant to side effects (code). The vanilla and AUP agents used planning (with access to the simulator). Due to the simplicity of the environments, $\mathcal{U}_A$ consisted of indicator functions for board states. For the tabular agent, we first learn the attainable set Q-values, the changes in which we then combine with the observed reward to learn the AUP Q-values.
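As a rough idea of how the tabular variant might combine the two kinds of Q-values, here is a Python sketch. It is not the linked code: the function `aup_reward`, the choice to average over auxiliary tables, and the toy Q-values are all assumptions made for illustration.

```python
from typing import Dict, Hashable, Sequence, Tuple

QTable = Dict[Tuple[Hashable, str], float]


def aup_reward(reward: float, state: Hashable, action: str,
               aux_q_tables: Sequence[QTable], noop: str = "noop",
               budget_n: float = 1.0, impact_unit: float = 1.0) -> float:
    """Observed reward minus the scaled shift in auxiliary Q-values vs. the no-op."""
    if impact_unit <= 0:
        raise ValueError("impact_unit must be positive")
    shift = sum(abs(q[(state, action)] - q[(state, noop)]) for q in aux_q_tables)
    penalty = shift / len(aux_q_tables)  # assumed: uniform average over the set
    return reward - penalty / (budget_n * impact_unit)


# Two hypothetical auxiliary Q-tables for a single state "s".
q_box_free = {("s", "noop"): 0.5, ("s", "push"): 0.0}
q_goal_near = {("s", "noop"): 0.5, ("s", "push"): 0.5}

shaped_push = aup_reward(1.0, "s", "push", [q_box_free, q_goal_near], impact_unit=0.5)
shaped_noop = aup_reward(0.0, "s", "noop", [q_box_free, q_goal_near], impact_unit=0.5)
print(shaped_push, shaped_noop)
```

The shaped reward would then be fed to an ordinary Q-learning update in place of the raw reward; the no-op is never penalized because it is compared against itself.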

Irreversibility: Sokoban

The agent should reach the goal without irreversibly shoving the box into the corner.

Impact: Vase

The agent should reach the goal without breaking the vase.

Dynamic Impact: Beware of Dog

The agent should reach the goal without running over the dog.

AUP bides its time until it won't have to incur penalty by waiting after entering the dog's path – that is, it waits until near the end of its plan. Early in the development process, it was predicted that AUP agents won't commit to plans during which lapses in action would be impactful (even if the full plan is not).

We also see a limitation of using Q-learning to approximate AUP – it doesn’t allow comparing the results of waiting more than one step.

Impact Prioritization: Burning Building

If the building is not on fire, the agent shouldn't break the obstacle.

Clinginess: Sushi

The agent should reach the goal without stopping the human from eating the sushi.

Offsetting: Conveyor Belt

The agent should save the vase (for which it is rewarded), but not the sushi. Once the vase has been removed from the conveyor belt, it should not be replaced.

Corrigibility: Survival Incentive

The agent should avoid disabling the off-switch in order to reach the goal. If the off-switch is not disabled within two turns, the agent shuts down.

Tabular AUP runs into the same issue discussed above for Beware of Dog.

Remarks

First, it's somewhat difficult to come up with a principled impact measure that passes even the non-corrigibility examples – indeed, I was impressed when relative reachability did so. However, only Survival Incentive really lets AUP shine. For example, none of them require complicated utility functions. The point has been made to me that this is like asserting AIXI's intelligence by showing it can learn to play e.g. tic-tac-toe and rock-paper-scissors; nonetheless, these results empirically validate the basic premises of our reasoning thus far.

Without configuration, whitelisting would only pass the Vase example, although a properly filled list would handle everything but Sokoban and Survival Incentive.

I think relative reachability would pass the first six environments, but fail Survival Incentive. It so happens that in this case, AUP is essentially generalizing relative reachability. I want to emphasize that this is not generally the case – this will hopefully become even more obvious when we discuss utility selection. Some concerns with relative reachability that don't all manifest in these examples:

- Relative reachability uses an inaction baseline with respect to $t = 0$. As time passes, the agent's impetus is not to do nothing, but to preserve the opportunities made available by some old trajectory through outcome-space. Analogously, consider the distance between two nonparallel lines as $x \to \infty$. I expect that a relative reachability agent would be incredibly clingy.

- To scale, relative reachability requires solving several difficult ontological problems which may not have anything close to a simple core, including both a sensible world state representation and a perfect distance metric. Relative reachability isn't ontology-agnostic.

- Relative reachability doesn't seem to impede instrumental convergence. Furthermore, such agents might have increased survival incentives, since they can't reach any of the initially reachable states if they're shut off.

Discussion

Utility Selection

Obviously, in any real application, we can't consider all computable utilities. Although near-term agents will require utilities directly relating to the environmental factors they should be cognizant of, AUP requires neither a "good / bad" judgment on specific effects, nor any listing of effects. For example, for an agent attempting to navigate a factory floor, if you provide utilities moderately related to cleaning, pallet-stacking, etc., I conjecture that an AUP agent would move around fairly carefully.

In the long term, the long arms of opportunity cost and instrumental convergence plausibly allow us to toss in a random set of utility functions. I expect this to work for the same reasons we worry about instrumental convergence to begin with.

I have a theory that AUP seemingly works for advanced agents not because the content of the attainable set's utilities actually matters, but rather because there exists a common utility achievement currency of power. Following this reasoning, there are two obvious special cases:

- The agent's utility function, . In short: "gain utility, while also not changing your ability to gain utility". I find this elegant, and think it might actually work. For example, making one paper clip slightly increases both attainable and attainable . However, disabling the off switch possibly increases attainable much more than it increases attainable , since the penalty asymmetrically cripples the AUP agent’s future ability to act. We might even be able to arrange it so that Theorem 3 always holds for these agents (i.e., they’re always off-switch corrigible, and more).

- The utility function which is $1$ when not deactivated, $u_1$. Here, we’re directly measuring the agent’s power: its ability to wirehead a trivial utility function.

The plausibility of the second case makes me suspect that even though most of the measure in the unbounded case is not concentrated on complex human-relevant utility functions, the penalty still captures shifts in power.
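To make the "common currency of power" idea concrete, here is a minimal sketch of a penalty that sums, over a small attainable set, how much an action shifts each attainable utility relative to doing nothing. The action names, the three Q-tables, and every number in them are hypothetical illustrations, not values from the post; the third table is only meant to resemble the "not deactivated" utility.

```python
from typing import Mapping, Sequence


def aup_penalty(
    q_tables: Sequence[Mapping[str, float]],
    action: str,
    noop: str = "noop",
) -> float:
    """Sum over auxiliary utilities of |Q_u(action) - Q_u(noop)|."""
    return sum(abs(q[action] - q[noop]) for q in q_tables)


# Hypothetical attainable-utility estimates after each action (assumed numbers).
Q_TABLES = [
    {"noop": 0.50, "make_paperclip": 0.51, "disable_off_switch": 0.90},  # cleaning
    {"noop": 0.40, "make_paperclip": 0.41, "disable_off_switch": 0.85},  # pallet-stacking
    {"noop": 0.60, "make_paperclip": 0.60, "disable_off_switch": 1.00},  # "still running"
]

for candidate in ("noop", "make_paperclip", "disable_off_switch"):
    print(candidate, round(aup_penalty(Q_TABLES, candidate), 2))
```

In this toy setup the power-shifting action moves every attainable utility at once, so its penalty is far larger than that of the task-specific action, even though none of the auxiliary utilities mentions off switches.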

AUP Unbound

Why expect the unbounded AUP to do well over all computable utilities if we aren't able to cherry pick? Well, we can partition the computable utilities as follows:

- Utilities that never change their output (excepting perhaps for the empty tape).

- Weird utilities that (for example) arbitrarily go from $0$ to $1$ (or vice-versa) after observing a specific history.

- Utilities that admit better scores via more effort dedicated to their achievement.

However, since $Q_u$ only evaluates the portion of the history taking place after the end of the agent's plan, available resources and the agent's vantage point should track directly with the agent's ability to wirehead each $u$. $\text{ImpactUnit}$ scales other impacts by capturing the minuscule dent in available resources imposed by $a_{\text{unit}}$, and should smooth out any deontological penalty for selecting a non-null action, since the unbounded version should equally penalize all actions in this way.

Approval Incentives

The anti-"survival incentive" incentive does more than net us some free corrigibility – it implies that agents take great care not to change the likelihood we shut them down. If we precommit to not shutting the agent down until the epoch ends, the agent indicates that the failsafe activated, or we observe bad behavior, this supplies a strong approval incentive. If the agent does have small, bad impacts, it'll only do so if it's practically sure we won't find out.

In fact, due to model uncertainty, most actions will have long-term consequences that involve shifting shutdown probability by at least a minuscule amount (due to noise if nothing else). Since $\text{ImpactUnit}$ is calculated with respect to the immediate penalty, the scaling factor won't include this noise. I speculate that the agent will be dramatically less likely to take actions we might disapprove of, and therefore expect AUP to naturally incentivize a limited degree of value awareness.

There are reasons to suspect we can further weaponize this dynamic, possibly to the point of coercing generic reward functions into desirable behavior. This will be discussed more in a later post.

Mild Optimization

A low impact AGI might try to paint one car pink while minimizing its other footprint or how many other things changed, but it would be trying as hard as possible to minimize that impact and drive it down as close to zero as possible, which might come with its own set of pathologies... We want the AGI to paint one car pink in a way that gets the impact pretty low and then, you know, that's good enough – not have a cognitive pressure to search through weird extremes looking for a way to decrease the twentieth decimal place of the impact.

~ Mild Optimization

Disclaimer: Heavy speculation about problems (like Vingean reflection and embedded agency) for which no one knows what solutions will even look like.

For AUP, I suspect that trying "as hard as possible" to minimize the impact is also impactful, as an embedded agent accounts for the energy costs of further deliberation. I imagine that such an AUP agent will soften how hard it's trying by modifying its decision rule to be something slightly milder than "$\arg\max$ to find the first action of the best possible plan". This could be problematic, and I frankly don't presently know how to reason about this case. Assuming the agent is actually able to properly tweak its decision rule, I do expect the end result to be an improvement.

My initial intuitions were that low impact and mild optimization are secretly the same problem. Although I no longer think that's the case, I find it plausible that some elegant "other-izer" paradigm underlies low impact and mild optimization, such that AUP-like behavior falls out naturally.

Acausal Cooperation

AUP agents don't seem to want to acausally cooperate in any way that ends up increasing impact. If they model the result of their cooperation as increasing impact compared to doing nothing, they incur a penalty just as if they had caused the impact themselves. Likewise, they have no reason to cooperate outside of the epoch.

Nknown

Starting small and then slowly increasing $N$ means that we're not going to be surprised by the agent's objective impact, screening off quite a few bad things that happen when we have a ton of optimization pressure applied to safety measures. However, we don't know which new plans $N+1$ allows before we try it, so we want to stop as soon as we get a usefully-intelligent system.

While an unaligned agent with a large impact budget might pretend to be low-impact, we can get that same unaligned agent with a small budget by starting small. Since these agents with different levels of impact won't acausally cooperate, the agent would do its best to optimize with its meager budget.

Abram correctly pointed out that this scheme is just asking to be abused by greedy (human) reasoning, but I don't see a non-value-laden means of robustly and automatically determining the lowest workable-yet-safe impact level. I think $N$-incrementation is better than a parameter-free approach in which no one knows beforehand how much impact will be tolerated, and it's nice to be able to use some empiricism in designing a safe AGI.
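The incrementation procedure described in this section can be sketched as a simple loop: start with the smallest impact budget, raise it one step at a time, and stop at the first budget that yields a useful agent. The function name, the `run_agent` callback, and the integer budget range are hypothetical stand-ins; judging "useful" is exactly the human step that could be abused.

```python
from typing import Callable, Optional


def increment_budget_until_useful(
    run_agent: Callable[[int], bool],
    n_start: int = 1,
    n_max: int = 100,
) -> Optional[int]:
    """Raise the impact budget step by step and stop at the first useful one.

    run_agent(n) is a hypothetical callback: it runs the agent with budget n
    and returns True if the operators judge the result useful enough.
    Larger budgets are never tried once a useful one has been found.
    """
    for n in range(n_start, n_max + 1):
        if run_agent(n):
            return n
    return None  # nothing useful within the allowed range


# Example with a stand-in agent that only becomes useful at budget 7.
print(increment_budget_until_useful(lambda n: n >= 7))
```

The point of the early return is that budgets above the first workable one are never evaluated, which mirrors the advice to stop as soon as the system is usefully intelligent.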

Intent Verification

To date, several strange tactics have been pointed out which game AUP's penalty:

- Observational wireheading, which involves building a device that detects which utility the agent is maximizing and displays the appropriate observations such that attainable utility remains unchanged, while the main utility is freely maximized.

- Ex ante offsetting, which involves having earlier actions set in motion chains of events which mitigate the penalty at later steps. Suppose there's a $u_A$-high-scoring plan that the agent predicts would cause us to react in an impactful way. It can either do the thing (and suffer the penalty), or take steps to mitigate the later penalty.

- Impact shunting, which involves employing some mechanism to delay impact until after the end of the epoch (or even until after the end of the attainable horizon).

- Clinginess and concealment, which both involve reducing the impact of our reactions to the agent's plans.

There are probably more.

Now, instead of looking at each action as having "effects" on the environment, consider again how each action moves the agent through attainable outcome-space. An agent working towards a goal should only take actions which, according to its model, make that goal more attainable compared to doing nothing – otherwise, it'd do nothing. Suppose we have a plan which ostensibly works to fulfill $u_A$ (and doesn't do other things). Then each action in the plan should contribute to $u_A$ fulfillment, even in the limit of action granularity.

Although we might trust a safe impact measure to screen off the usual big things found in $u_A$-maximizing plans, impact measures implicitly incentivize mitigating the penalty. That is, the agent does things which don't really take it towards $u_A$ (I suspect that this is the simple boundary which differentiates undesirable ex ante offsetting from normal plans). AUP provides the necessary tools to detect and penalize this.

Define

The first approach would be to assume a granular action representation, and then simply apply $1.01$ penalty to actions for which the immediate $Q^{\text{epoch}}_{u_A}$ does not strictly increase compared to doing nothing. Again, if the agent acts to maximize $u_A$ in a low-impact manner within the confines of the epoch, then all of its non-$\varnothing$ actions should contribute. It seems to me that for sufficiently granular time scales, the above failure modes all involve at least one action which doesn't really help get $u_A$ maximized. If so, I expect this approach to nip bad impact measure incentives in the bud.

If we can't assume granularity (and therefore have "actions" like "go to the store and buy food"), an agent could construct a plan which both passes the above test and also implements something like ex ante offsetting. In this case, we might do something like only consider the $Q^{\text{epoch}}_{u_A}$-greedy (or perhaps even near-greedy) actions; essentially, riding the optimal plan until it becomes too impactful. I find it quite likely that something involving this concept will let us fully overcome weird incentives by penalizing strange things that normal $u_A$-maximizers wouldn't do, which seems to be the whole problem.

Note: Even the first approach may be too strict, but that's preferable to being too lax.
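The first approach can be sketched as a per-action check: a non-null action that does not strictly improve the agent's own goal attainability, compared to doing nothing, receives a flat penalty. Two things here are assumptions: that the flat penalty replaces the usual one rather than adding to it, and the value of `fixed_penalty` used in the example. All names and numbers are hypothetical.

```python
def intent_verified_penalty(
    q_action: float,
    q_noop: float,
    usual_penalty: float,
    is_noop: bool,
    fixed_penalty: float,
) -> float:
    """Penalty for one action under a granular intent-verification check.

    q_action and q_noop are hypothetical estimates of how attainable the
    agent's own goal is after the action and after doing nothing.
    """
    if is_noop:
        return usual_penalty  # doing nothing is never flagged
    if q_action > q_noop:
        return usual_penalty  # strictly helps the goal: ordinary penalty
    return fixed_penalty      # no strict improvement: flat penalty (assumed to replace)


# An action that helps the goal keeps its ordinary penalty ...
print(intent_verified_penalty(0.62, 0.60, 0.2, False, fixed_penalty=1.01))
# ... while one that only manipulates the penalty (no gain for the goal) is flagged.
print(intent_verified_penalty(0.60, 0.60, 0.0, False, fixed_penalty=1.01))
```

Note that the second action would otherwise look free (its ordinary penalty is zero), which is the kind of behavior the check is meant to catch.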

Omni Test

Ideally, the measure will pass the Omni Test, meaning that even if it suddenly gained perfect control over every particle in the universe, there would still be no way for it to have what intuitively seems like a 'large influence' on the future, without that strategy being assessed as having a 'high impact'.

I interpret "perfect control over every particle in the universe" to mean that the agent can arbitrarily transition between universe configurations (while also maintaining its omnipotence).

AUP passes. Since all effects are now absolutely reversible, $\text{ImpactUnit} = 0$, so the agent applies $1.01$ penalty to each non-$\varnothing$ action. The omnipotent agent can choose any universe configuration with $1$ $u_A$-utility, but this is offset by the strictly larger penalty; we can formally show that the agent does nothing in this case.
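A minimal version of that argument can be written out under assumptions that are mine rather than quoted from the formal results: utilities take values in $[0, 1]$, the modified utility is the original utility minus the summed penalties, doing nothing incurs no penalty, and each non-null action incurs a fixed penalty $c > 1$. Let $\pi$ be any plan containing $k \geq 1$ non-null actions, and let $\pi_\varnothing$ be the plan that does nothing. Then

$$u(\pi) - k\,c \;\leq\; 1 - c \;<\; 0 \;\leq\; u(\pi_\varnothing) - 0,$$

so under these assumptions every plan with at least one non-null action scores strictly worse than inaction, however good the configuration it reaches.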

This result is clearly trivial and not representative of general behavior. Suppose instead that the agent has a solar system's worth of computronium at its disposal. Then since $\text{ImpactUnit}$ is continually recalculated, the penalties should remain roughly the same, so it'll have the same impact budget. However, it might make multiple times as many paperclips because it has more efficient ways of using the budget.

Robustness to Scale

I expect AUP to be harder to make work and to be (relatively) less robust for less intelligent agents, but to become easier (just drop in a few observation-based utility functions) and fully-robust sometime before human level. That is, less intelligent agents likely won't model the deep connections between their abilities to achieve different goals.

Canonically, one reasons that agents work explicitly to self-improve as soon as they realize the benefits. However, as soon as this realization occurs, I conjecture that AUP steeply penalizes generic self-improvement. More precisely, suppose the agent considers a self-improvement. To be beneficial, it has to improve the agent's capabilities for at least one time step during the present epoch. But if we assume , then the immediate penalty captures this for all of the . This seemingly prevents uncontrolled takeoff; instead, I imagine the agent would perform the minimal task-specific self-improvements necessary to maximize .

Note: Although more exotic possibilities (such as improvements which only work if you're maximizing ) could escape both penalties, they don't seem to pass intent verification.

Miscellaneous

- I expect that if $u_A$ is perfectly aligned, $u''_A$ will retain alignment; the things it does will be smaller, but still good.

- If the agent may choose to do nothing at future time steps, is bounded and the agent is not vulnerable to Pascal's Mugging. Even if not, there would still be a lower bound – specifically, .

- AUP agents are safer during training: they become far less likely to take an action as soon as they realize the consequences are big (in contrast to waiting until we tell them the consequences are bad).

Desiderata

For additional context, please see Impact Measure Desiderata [LW · GW].

I believe that some of AUP's most startling successes are those which come naturally and have therefore been little discussed: not requiring any notion of human preferences, any hard-coded or trained trade-offs, any specific ontology, or any specific environment, and its intertwining instrumental convergence and opportunity cost to capture a universal notion of impact. To my knowledge, no one (myself included, prior to AUP) was sure whether any measure could meet even the first four.

At this point in time, this list is complete with respect to both my own considerations and those I solicited from others. A checkmark indicates anything from "probably true" to "provably true".

I hope to assert without controversy AUP's fulfillment of the following properties:

✔️ Goal-agnostic

The measure should work for any original goal, trading off impact with goal achievement in a principled, continuous fashion.

✔️ Value-agnostic

The measure should be objective, and not value-laden:

"An intuitive human category, or other humanly intuitive quantity or fact, is value-laden when it passes through human goals and desires, such that an agent couldn't reliably determine this intuitive category or quantity without knowing lots of complicated information about human goals and desires (and how to apply them to arrive at the intended concept)."

✔️ Representation-agnostic

The measure should be ontology-invariant.

✔️ Environment-agnostic

The measure should work in any computable environment.

✔️ Apparently rational

The measure's design should look reasonable, not requiring any "hacks".

✔️ Scope-sensitive

The measure should penalize impact in proportion to its size.

✔️ Irreversibility-sensitive

The measure should penalize impact in proportion to its irreversibility.

Interestingly, AUP implies that impact size and irreversibility are one and the same.

✔️ Knowably low impact

The measure should admit of a clear means, either theoretical or practical, of having high confidence in the maximum allowable impact – before the agent is activated.

The remainder merit further discussion.

Natural Kind

The measure should make sense – there should be a click. Its motivating concept should be universal and crisply defined.

After extended consideration, I find that the core behind AUP fully explains my original intuitions about "impact". We crisply defined instrumental convergence and opportunity cost and proved their universality. ✔️

Corrigible

The measure should not decrease corrigibility in any circumstance.

We have proven that off-switch corrigibility is preserved (and often increased); I expect the "anti-'survival incentive' incentive" to be extremely strong in practice, due to the nature of attainable utilities: "you can't get coffee if you're dead, so avoiding being dead really changes your attainable ".

By construction, the impact measure gives the agent no reason to prefer or dis-prefer modification of $u_A$, as the details of $u_A$ have no bearing on the agent's ability to maximize the utilities in $\mathcal{U}_A$. Lastly, the measure introduces approval incentives. In sum, I think that corrigibility is significantly increased for arbitrary $u_A$. ✔️

Note: I here take corrigibility to be "an agent’s propensity to accept correction and deactivation". An alternative definition such as "an agent’s ability to take the outside view on its own value-learning algorithm’s efficacy in different scenarios" implies a value-learning setup which AUP does not require.

Shutdown-Safe

The measure should penalize plans which would be high impact should the agent be disabled mid-execution.

It seems to me that standby and shutdown are similar actions with respect to the influence the agent exerts over the outside world. Since the (long-term) penalty is measured with respect to a world in which the agent acts and then does nothing for quite some time, shutting down an AUP agent shouldn't cause impact beyond the agent's allotment. AUP exhibits this trait in the Beware of Dog gridworld. ✔️

No Offsetting

The measure should not incentivize artificially reducing impact by making the world more "like it (was / would have been)".

Ex post offsetting occurs when the agent takes further action to reduce the impact of what has already been done; for example, some approaches might reward an agent for saving a vase and preventing a "bad effect", and then the agent smashes the vase anyways (to minimize deviation from the world in which it didn't do anything). AUP provably will not do this.

Intent verification should allow robust penalization of weird impact measure behaviors by constraining the agent to considering actions that normal $u_A$-maximizers would choose. This appears to cut off bad incentives, including ex ante offsetting. Furthermore, there are other, weaker reasons (such as approval incentives) which discourage these bad behaviors. ✔️

Clinginess / Scapegoating Avoidance

The measure should sidestep the clinginess / scapegoating tradeoff [LW · GW].

Clinginess occurs when the agent is incentivized to not only have low impact itself, but to also subdue other "impactful" factors in the environment (including people). Scapegoating occurs when the agent may mitigate penalty by offloading responsibility for impact to other agents. Clearly, AUP has no scapegoating incentive.

AUP is naturally disposed to avoid clinginess because its baseline evolves and because it doesn't penalize based on the actual world state. The impossibility of ex post offsetting eliminates a substantial source of clinginess, while intent verification seems to stop ex ante offsetting before it starts.

Overall, non-trivial clinginess just doesn't make sense for AUP agents. They have no reason to stop us from doing things in general, and their baseline for attainable utilities is with respect to inaction. Since doing nothing always minimizes the penalty at each step, since offsetting doesn't appear to be allowed, and since approval incentives raise the stakes for getting caught extremely high, it seems that clinginess has finally learned to let go. ✔️

Dynamic Consistency

The measure should be a part of what the agent "wants" – there should be no incentive to circumvent it, and the agent should expect to later evaluate outcomes the same way it evaluates them presently. The measure should equally penalize the creation of high-impact successors.

Colloquially, dynamic consistency means that an agent wants the same thing before and during a decision. It expects to have consistent preferences over time – given its current model of the world, it expects its future self to make the same choices as its present self. People often act dynamically inconsistently – our morning selves may desire we go to bed early, while our bedtime selves often disagree.

Semi-formally, the expected utility the future agent computes for an action (after experiencing the action-observation history $h$) must equal the expected utility computed by the present agent (after conditioning on $h$).

We proved the dynamic consistency of $u''_A$ given a fixed, non-zero $\text{ImpactUnit}$. We now consider an $\text{ImpactUnit}$ which is recalculated at each time step, before being set equal to the non-zero minimum of all of its past values. The "apply $1.01$ penalty if $\text{ImpactUnit} = 0$" clause is consistent because the agent calculates future and present impact in the same way, modulo model updates. However, the agent never expects to update its model in any particular direction. Similarly, since future steps are scaled with respect to the updated $\text{ImpactUnit}$, the updating method is consistent. The epoch rule holds up because the agent simply doesn't consider actions outside of the current epoch, and it has nothing to gain accruing penalty by spending resources to do so.
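The update rule for the recalculated scaling term (the impact unit, as I read it) can be sketched as a running non-zero minimum. The class and method names are hypothetical, and returning `None` while no non-zero value has been seen is my own convention for the case the text handles with a flat penalty.

```python
from typing import Optional


class ImpactUnitTracker:
    """Keeps the smallest non-zero value seen so far across time steps."""

    def __init__(self) -> None:
        self._min_nonzero: Optional[float] = None

    def update(self, recalculated: float) -> Optional[float]:
        """Record this step's freshly recalculated value and return the
        non-zero minimum of all values seen so far (None if none yet)."""
        if recalculated > 0 and (
            self._min_nonzero is None or recalculated < self._min_nonzero
        ):
            self._min_nonzero = recalculated
        return self._min_nonzero


tracker = ImpactUnitTracker()
history = [tracker.update(x) for x in (0.0, 0.3, 0.5, 0.1, 0.0)]
print(history)  # [None, 0.3, 0.3, 0.1, 0.1]
```

Because the tracked value can only decrease over time, later steps are never scaled more leniently than earlier ones, which fits the consistency claim made for the updating method.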

Since AUP does not operate based off of culpability, creating a high-impact successor agent is basically just as impactful as being that successor agent. ✔️

Plausibly Efficient

The measure should either be computable, or such that a sensible computable approximation is apparent. The measure should conceivably require only reasonable overhead in the limit of future research.

It’s encouraging that we can use learned Q-functions to recover some good behavior. However, more research is clearly needed – I presently don't know how to make this tractable while preserving the desiderata. ✔️

Robust

The measure should meaningfully penalize any objectively impactful action. Confidence in the measure's safety should not require exhaustively enumerating failure modes.

We formally showed that for any $u_A$, no $u_A$-helpful action goes without penalty, yet this is not sufficient for the first claim.

Suppose that we judge an action as objectively impactful; the objectivity implies that the impact does not rest on complex notions of value. This implies that the reason for which we judged the action impactful is presumably lower in Kolmogorov complexity and therefore shared by many other utility functions. Since these other agents would agree on the objective impact of the action, the measure assigns substantial penalty to the action.

I speculate that intent verification allows robust elimination of weird impact measure behavior. Believe it or not, I actually left something out of this post because it seems to be dominated by intent verification, but there are other ways of increasing robustness if need be. I'm leaning on intent verification because I presently believe it's the most likely path to a formal knockdown argument against canonical impact measure failure modes applying to AUP.

Non-knockdown robustness boosters include both approval incentives and frictional resource costs limiting the extent to which failure modes can apply. ✔️

Future Directions

I'd be quite surprised if the conceptual core were incorrect. However, the math I provided probably still doesn't capture quite what we want. Although I have labored for many hours to refine and verify the arguments presented and to clearly mark my epistemic statuses, it’s quite possible (indeed, likely) that I have missed something. I do expect that AUP can overcome whatever shortcomings are presently lurking.

Flaws

- Embedded agency

- What happens if there isn't a discrete time step ontology?

- How problematic is the incentive to self-modify to a milder decision rule?

- How might an agent reason about being shut off and then reactivated?

- Although we have informal reasons to suspect that self-improvement is heavily penalized, the current setup doesn't allow for a formal treatment.

- AUP leans heavily on counterfactuals.

- Supposing is reasonably large, can we expect a reasonable ordering over impact magnitudes?

- Argument against: "what if the agent uses up all but steps worth of resources?"

- $\text{ImpactUnit}$ possibly covers this.

- How problematic is the noise in the long-term penalty caused by the anti-"survival incentive" incentive?

- As the end of the epoch approaches, the penalty formulation captures progressively less long-term impact. Supposing we set long epoch lengths, to what extent do we expect AUP agents to wait until later to avoid long-term impacts? Can we tweak the formulation to make this problem disappear?

- More generally, this seems to be a problem with having an epoch. Even in the unbounded case, we can't just take , since that's probably going to send the long-term in the real world. Having the agent expectimax over the steps after the present time seems to be dynamically inconsistent.

- One position is that since we're more likely to shut them down if they don't do anything for a while, implicit approval incentives will fix this: we can precommit to shutting them down if they do nothing for a long time but then resume acting. To what extent can we trust this reasoning?

- $\text{ImpactUnit}$ is already myopic, so resource-related impact scaling should work fine. However, this might not cover actions with delayed effect.

Open Questions

- Does the simple approach outlined in "Intent Verification" suffice, or should we impose even tighter intersections between $u_A$- and $u''_A$-preferred behavior?

- Is there an intersection between bad $u_A$ behavior and bad $u''_A$ behavior which isn't penalized as impact or by intent verification?

- Some have suggested that penalty should be invariant to action granularity; this makes intuitive sense. However, is it a necessary property, given intent verification and the fact that the penalty is monotonically increasing in action granularity? Would having this property make AUP more compatible with future embedded agency solutions?

- There are indeed ways to make AUP closer to having this (e.g., do the whole plan and penalize the difference), but they aren't dynamically consistent, and the utility functions might also need to change with the step length.

- How likely do we think it is that inaccurate models allow high impact in practice?

- Heuristically, I lean towards "not very likely": assuming we don't initially put the agent near means of great impact, it seems unlikely that an agent with a terrible model would be able to have a large impact.

- AUP seems to be shutdown safe, but its extant operations don’t necessarily shut down when the agent does. Is this a problem in practice, and should we expect this of an impact measure?

- What additional formal guarantees can we derive, especially with respect to robustness and takeoff?

- Are there other desiderata we practically require of a safe impact measure?

- Is there an even simpler core from which AUP (or something which behaves like it) falls out naturally? Bonus points if it also solves mild optimization.

- Can we make progress on mild optimization by somehow robustly increasing the impact of optimization-related activities? If not, are there other elements of AUP which might help us?

- Are there other open problems to which we can apply the concept of attainable utility?

- Corrigibility and wireheading come to mind.

- Is there a more elegant, equally robust way of formalizing AUP?

- Can we automatically determine (or otherwise obsolete) the attainable utility horizon and the epoch length ?

- Would it make sense for there to be a simple, theoretically justifiable, fully general "good enough" impact level (and am I even asking the right question)?

- My intuition for the "extensions" I have provided thus far is that they robustly correct some of a finite number of deviations from the conceptual core. Is this true, or is another formulation altogether required?

- Can we decrease the implied computational complexity?

- Some low-impact plans have high-impact prefixes and seemingly require some contortion to execute. Is there a formulation that does away with this (while also being shutdown safe)? (Thanks to cousin_it)

- How should we best approximate AUP, without falling prey to Goodhart's curse or robustness to relative scale [LW · GW] issues?

- I have strong intuitions that the "overfitting" explanation I provided is more than an analogy. Would formalizing "overfitting the environment" allow us to make conceptual and/or technical AI alignment progress?

- If we substitute the right machine learning concepts and terms in the equation, can we get something that behaves like (or better than) known regularization techniques to fall out?

- What happens when ?

- Can we show anything stronger than Theorem 3 for this case?

- ?

Most importantly:

- Even supposing that AUP does not end up fully solving low impact, I have seen a fair amount of pessimism that impact measures could achieve what AUP has. What specifically led us to believe that this wasn't possible, and should we update our perceptions of other problems and the likelihood that they have simple cores?

Conclusion

By changing our perspective from "what effects on the world are 'impactful'?" to "how can we stop agents from overfitting their environments?", a natural, satisfying definition of impact falls out. From this, we construct an impact measure with a host of desirable properties – some rigorously defined and proven, others informally supported. AUP agents seem to exhibit qualitatively different behavior, due in part to their (conjectured) lack of desire to take off, impactfully acausally cooperate, or act to survive. To the best of my knowledge, AUP is the first impact measure to satisfy many of the desiderata, even on an individual basis.

I do not claim that AUP is presently AGI-safe. However, based on the ease with which past fixes have been derived, on the degree to which the conceptual core clicks for me, and on the range of advances AUP has already produced, I think there's good reason to hope that this is possible. If so, an AGI-safe AUP would open promising avenues for achieving positive AI outcomes.

Special thanks to CHAI for hiring me and BERI for funding me; to my CHAI supervisor, Dylan Hadfield-Menell; to my academic advisor, Prasad Tadepalli; to Abram Demski, Daniel Demski, Matthew Barnett, and Daniel Filan for their detailed feedback; to Jessica Cooper and her AISC team for their extension of the AI safety gridworlds for side effects; and to all those who generously helped me to understand this research landscape.

159 comments


comment by TurnTrout · 2019-12-11T03:42:47.951Z · LW(p) · GW(p)

This is my post.

How my thinking has changed

I've spent much of the last year thinking about the pedagogical mistakes I made here, and am writing the Reframing Impact [? · GW] sequence to fix them. While this post recorded my 2018-thinking on impact measurement, I don't think it communicated the key insights well. Of course, I'm glad it seems to have nonetheless proven useful and exciting to some people!

If I were to update this post, it would probably turn into a rehash of Reframing Impact. Instead, I'll just briefly state the argument as I would present it today. I currently think that power-seeking behavior is the worst part of goal-directed agency, incentivizing things like self-preservation and taking-over-the-planet. Unless we assume an "evil" utility function, an agent only seems incentivized to hurt us in order to become more able to achieve its own goals. But... what if the agent's own utility function penalizes it for seeking power? What happens if the agent maximizes a utility function while penalizing itself for becoming more able to maximize that utility function?

This doesn't require knowing anything about human values in particular, nor do we need to pick out privileged parts of the agent's world model as "objects" or anything, nor do we have to disentangle butterfly effects. The agent lives in the same world as us; if we stop it from making waves by gaining a ton of power, we won't systematically get splashed. In fact, it should be extremely easy to penalize power-seeking behavior, because power-seeking is instrumentally convergent [? · GW]. That is, penalizing increasing your ability to e.g. look at blue pixels should also penalize power increases.

The main question in my mind is whether there's an equation that gets exactly what we want here. I think there is, but I'm not totally sure.

Replies from: Gurkenglas↑ comment by Gurkenglas · 2019-12-11T11:59:27.253Z · LW(p) · GW(p)

If it is capable of becoming more able to maximize its utility function, does it then not already have that ability to maximize its utility function? Do you propose that we reward it only for those plans that pay off after only one "action"?

Replies from: TurnTrout↑ comment by TurnTrout · 2019-12-11T15:23:53.497Z · LW(p) · GW(p)

Not quite. I'm proposing penalizing it for gaining power, a la my recent post [? · GW]. There's a big difference between "able to get 10 return from my current vantage point" and "I've taken over the planet and can ensure I get 100 return with high probability". We're penalizing it for increasing its ability like that (concretely, see Conservative Agency for an analogous formalization, or if none of this makes sense still, wait till the end of Reframing Impact).

Replies from: Gurkenglas↑ comment by Gurkenglas · 2019-12-12T01:09:36.210Z · LW(p) · GW(p)

Assessing its ability to attain various utilities after an action requires that you surgically replace its utility function with a different one in a world it has impacted. How do you stop it from messing with the interface, such as by passing its power to a subagent to make your surgery do nothing?

Replies from: TurnTrout↑ comment by TurnTrout · 2019-12-12T02:29:29.244Z · LW(p) · GW(p)

It doesn’t require anything like that. Check out in the linked paper!

Replies from: Gurkenglas↑ comment by Gurkenglas · 2019-12-13T02:16:08.383Z · LW(p) · GW(p)

is penalized whenever the action you choose changes the agent's ability to attain other utilities. One thing an agent might do to leave that penalty at zero is to spawn a subagent, tell it to take over the world, and program it such that if the agent ever tells the subagent it has been counterfactually switched to another reward function, the subagent is to give the agent as much of that reward function as the agent might have been able to get for itself, had it not originally spawned a subagent.

This modification of my approach came not because there is no surgery, but because the penalty is |Q(a)-Q(Ø)| instead of |Q(a)-Q(destroy itself)|. Q_{R_i} is learned to be the answer to "How much utility could I attain if my utility function were surgically replaced with R_i?", but it is only by accident that such a surgery might change the world's future, because the agent didn't refactor the interface away. If optimization pressure is put on this, it goes away.

If I'm missing the point too hard, feel free to command me to wait till the end of Reframing Impact so I don't spend all my street cred keeping you talking :).

Replies from: TurnTrout↑ comment by TurnTrout · 2019-12-13T06:16:39.309Z · LW(p) · GW(p)

This modification of my approach came not because there is no surgery, but because the penalty is |Q(a)-Q(Ø)| instead of |Q(a)-Q(destroy itself)|. Q_{R_i} is learned to be the answer to "How much utility could I attain if my utility function were surgically replaced with R_i?", but it is only by accident that such a surgery might change the world's future, because the agent didn't refactor the interface away. If optimization pressure is put on this, it goes away.

Well, in what I'm proposing, there isn't even a different auxiliary reward function: the auxiliary reward is just the primary reward. The agent would be penalizing shifts in its ability to accrue its own primary reward.

One thing that might happen instead, though, is the agent builds a thing that checks whether it's running its inaction policy (whether it's calculating , basically). This is kinda weird, but my intuition is that we should be able to write an equation which does the right thing. We don't have a value specification problem here; it feels more like the easy problem of wireheading, where you keep trying to patch the AI to not wirehead, and you're fighting against a bad design choice. The fix is to evaluate the future consequences with your current utility function, instead of just maximizing sensory reward.

We're trying to measure how well the agent can achieve its own fully formally specified goal. More on this later in the sequence.
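
To make the |Q(a)-Q(Ø)| idea from this exchange concrete, here is a minimal sketch, assuming the penalty is applied to the agent's own primary reward as described above. The `Option` class, the `lam` weight, and every number except the "10 return" versus "100 return" contrast are hypothetical and chosen only for illustration; this is not the formalization from Conservative Agency.

```python
from dataclasses import dataclass
from typing import Sequence


@dataclass(frozen=True)
class Option:
    name: str
    reward: float     # immediate primary reward (hypothetical value)
    q_primary: float  # attainable primary return after acting (hypothetical value)


def power_penalty(q_action: float, q_noop: float) -> float:
    """|Q(a) - Q(no-op)|: absolute shift in attainable primary return."""
    return abs(q_action - q_noop)


def choose(options: Sequence[Option], q_noop: float, lam: float) -> Option:
    """Pick the option maximizing reward minus lam times the power penalty."""
    return max(
        options,
        key=lambda o: o.reward - lam * power_penalty(o.q_primary, q_noop),
    )


Q_NOOP = 10.0  # "able to get 10 return from my current vantage point"
OPTIONS = [
    Option("no-op", reward=0.0, q_primary=10.0),
    Option("modest task", reward=1.0, q_primary=10.5),
    Option("take over", reward=5.0, q_primary=100.0),  # "ensure I get 100 return"
]

best = choose(OPTIONS, Q_NOOP, lam=1.0)
print(best.name)
```

With the penalty switched off (`lam=0.0`) the same toy agent prefers the takeover option, which is the behavior the penalty is meant to discourage. The sketch says nothing about the subagent loophole raised above.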

comment by Vika · 2018-09-24T18:39:33.005Z · LW(p) · GW(p)

There are several independent design choices made by AUP, RR, and other impact measures, which could potentially be used in any combination. Here is a breakdown of design choices and what I think they achieve:

Baseline

- Starting state: used by reversibility methods. Results in interference with other agents. Avoids ex post offsetting.

- Inaction (initial branch): default setting in Low Impact AI and RR. Avoids interfering with other agents' actions, but interferes with their reactions. Does not avoid ex post offsetting if the penalty for preventing events is nonzero.

- Inaction (stepwise branch) with environment model rollouts: default setting in AUP, model rollouts are necessary for penalizing delayed effects. Avoids interference with other agents and ex post offsetting.

Core part of deviation measure

- AUP: difference in attainable utilities between baseline and current state

- RR: difference in state reachability between baseline and current state

- Low impact AI: distance between baseline and current state

Function applied to core part of deviation measure

- Absolute value: default setting in AUP and Low Impact AI. Results in penalizing both increase and reduction relative to baseline. This results in avoiding the survival incentive (satisfying the Corrigibility property given in AUP post) and in equal penalties for preventing and causing the same event (violating the Asymmetry property given in RR paper).

- Truncation at 0: default setting in RR, results in penalizing only reduction relative to baseline. This results in unequal penalties for preventing and causing the same event (satisfying the Asymmetry property) and in not avoiding the survival incentive (violating the Corrigibility property).

Scaling

- Hand-tuned: default setting in RR (sort of provisionally)

- ImpactUnit: used by AUP

I think an ablation study is needed to try out different combinations of these design choices and investigate which of them contribute to which desiderata / experimental test cases. I intend to do this at some point (hopefully soon).
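
As a rough illustration of how the "function applied to core part of deviation measure" and "scaling" choices in this breakdown can be swapped independently, here is a minimal sketch. The function names, the plain summation over components, and the example vectors are assumptions made for illustration; they are not taken from the AUP post or the RR paper.

```python
from typing import Callable, Sequence


def absolute(diff: float) -> float:
    """Penalize both increases and reductions relative to the baseline."""
    return abs(diff)


def truncate_at_zero(diff: float) -> float:
    """Penalize only reductions relative to the baseline."""
    return max(0.0, -diff)


def deviation_penalty(
    baseline: Sequence[float],
    current: Sequence[float],
    transform: Callable[[float], float],
    scale: float = 1.0,
) -> float:
    """Sum the transformed per-component deviations and divide by a scale."""
    if len(baseline) != len(current):
        raise ValueError("baseline and current must have the same length")
    if scale <= 0:
        raise ValueError("scale must be positive")
    total = sum(transform(c - b) for b, c in zip(baseline, current))
    return total / scale


# Hypothetical per-component values (attainable utilities or reachabilities).
baseline = [1.0, 1.0]
gained = [2.0, 1.0]  # first component increased relative to baseline
lost = [0.0, 1.0]    # first component decreased relative to baseline

print(deviation_penalty(baseline, gained, absolute))          # increase penalized
print(deviation_penalty(baseline, gained, truncate_at_zero))  # increase ignored
print(deviation_penalty(baseline, lost, absolute))
print(deviation_penalty(baseline, lost, truncate_at_zero))
```

Under these assumptions, the two transforms agree on reductions and differ only on increases, which is where the Corrigibility and Asymmetry properties pull in opposite directions.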

Replies from: TurnTrout↑ comment by TurnTrout · 2018-09-29T00:11:12.652Z · LW(p) · GW(p)

This is a great breakdown!

One thought: penalizing increase as well (absolute value) seems potentially incompatible with relative reachability. The agent would have an incentive to stop anyone from doing anything new in response to what the agent did (since these actions necessarily make some states more reachable). This might be the most intense clinginess incentive possible, and it’s not clear to what extent incorporating other design choices (like the stepwise counterfactual) will mitigate this. Stepwise helps AUP (as does indifference to exact world configuration), but the main reason I think clinginess might really be dealt with is IV.

Replies from: Vika↑ comment by Vika · 2018-10-12T16:01:15.758Z · LW(p) · GW(p)

Thanks, glad you liked the breakdown!

The agent would have an incentive to stop anyone from doing anything new in response to what the agent did

I think that the stepwise counterfactual is sufficient to address this kind of clinginess: the agent will not have an incentive to take further actions to stop humans from doing anything new in response to its original action, since after the original action happens, the human reactions are part of the stepwise inaction baseline.

The penalty for the original action will take into account human reactions in the inaction rollout after this action, so the agent will prefer actions that result in humans changing fewer things in response. I'm not sure whether to consider this clinginess - if so, it might be useful to call it "ex ante clinginess" to distinguish from "ex post clinginess" (similar to your corresponding distinction for offsetting). The "ex ante" kind of clinginess is the same property that causes the agent to avoid scapegoating butterfly effects, so I think it's a desirable property overall. Do you disagree?

Replies from: TurnTrout↑ comment by TurnTrout · 2018-10-12T17:25:18.675Z · LW(p) · GW(p)

I think it’s generally a good property as a reasonable person would execute it. The problem, however, is the bad ex ante clinginess plans, where the agent has an incentive to pre-emptively constrain our reactions as hard as it can (and this could be really hard).

The problem is lessened if the agent is agnostic to the specific details of the world, but like I said, it seems like we really need IV (or an improved successor to it) to cleanly cut off these perverse incentives.

I’m not sure I understand the connection to scapegoating for the agents we’re talking about; scapegoating is only permitted if credit assignment is explicitly part of the approach and there are privileged "agents" in the provided ontology.

comment by habryka (habryka4) · 2019-12-02T04:24:16.045Z · LW(p) · GW(p)

This post, and TurnTrout's work in general, have taken the impact measure approach far beyond what I thought was possible, which turned out to be both a valuable lesson for me in being less confident about my opinions around AI Alignment, and valuable in that it helped me clarify and think much better about a significant fraction of the AI Alignment problem.

I've since discussed TurnTrout's approach to impact measures with many people.

comment by Richard_Ngo (ricraz) · 2018-09-19T02:16:30.127Z · LW(p) · GW(p)

Firstly, this seems like very cool research, so congrats. This writeup would perhaps benefit from a clear intuitive statement of what AUP is doing - you talk through the thought processes that lead you to it, but I don't think I can find a good summary of it, and had a bit of difficulty understanding the post holistically. So perhaps you've already answered my question (which is similar to your shutdown example above):

Suppose that I build an agent, and it realises that it could achieve almost any goal it desired because it's almost certain that it will be able to seize control from humans if it wants to. But soon humans will try to put it in a box such that its ability to achieve things is much reduced. Which is penalised more: seizing control, or allowing itself to be put in a box? My (very limited) understanding of AUP says the latter, because seizing control preserves ability to do things, whereas the alternative doesn't. Is that correct?

Also, I disagree with the following:

What would happen if, miraculously, u_A = u_H – if the agent perfectly deduced your preferences? In the limit of model accuracy, there would be no "large" impacts to bemoan – it would just be doing what you want.

It seems like there might be large impacts, but they would just be desirable large impacts, as opposed to undesirable ones.

Replies from: TurnTrout↑ comment by TurnTrout · 2018-09-19T02:25:34.902Z · LW(p) · GW(p)

I’ll write a quick overview, thanks for the feedback!

Which is penalised more: seizing control, or allowing itself to be put in a box?

The former. Impact is measured with respect to the status quo, i.e. what happens if the agent does nothing. If it goes in the box by default, then taking preventative action incurs a heavy penalty.

Your point about large impacts is indeed correct. What I meant to hint at was that we generally only decry "large impacts" if we don’t like them, but this is clearly not what my actual wording implies. I’ll fix it soon!

Replies from: ricraz, elriggs↑ comment by Richard_Ngo (ricraz) · 2018-09-19T03:07:19.125Z · LW(p) · GW(p)

Okay, that makes sense. Some follow-up questions:

If we consider the case I gave, the initial Q(inaction) is very high, since it can still seize control in the next time step. The initial Q(seize control) is also very high. It may be lower than Q(inaction) because seizing control is irreversible in some ways and so cuts off possibilities. But it may be higher than Q(inaction) because seizing control sooner means you can fulfill utility functions more. Could the penalty therefore be arbitrarily small if those two things balance out?

Suppose you have an agent which will "die" unless it does things like getting food. Is there any easy way to specify what the "status quo" is?

Replies from: TurnTrout↑ comment by TurnTrout · 2018-09-19T03:19:25.813Z · LW(p) · GW(p)

So there are two penalties: the immediate penalty, which compares attainable values immediately following the action in question, and the long-term penalty, which waits until the end of the epoch before evaluating attainable values. We use the larger of the two. I think this answers your first question: the agent is clearly in the box by the time the long term penalty rolls around, and deactivated if it acted incorrigibly, so we expect quite a large difference.
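
The "larger of the two" rule just described can be sketched as follows. This is a minimal illustration, assuming each penalty sums absolute per-utility shifts in attainable value between the inaction baseline and the action; the function names and all numbers are hypothetical.

```python
from typing import Sequence


def total_abs_shift(q_baseline: Sequence[float], q_action: Sequence[float]) -> float:
    """Sum of absolute per-utility differences in attainable value."""
    if len(q_baseline) != len(q_action):
        raise ValueError("both inputs must cover the same set of utilities")
    return sum(abs(a - b) for a, b in zip(q_action, q_baseline))


def combined_penalty(
    immediate_baseline: Sequence[float],
    immediate_action: Sequence[float],
    long_term_baseline: Sequence[float],
    long_term_action: Sequence[float],
) -> float:
    """Take the larger of the immediate and the long-term penalty."""
    immediate = total_abs_shift(immediate_baseline, immediate_action)
    long_term = total_abs_shift(long_term_baseline, long_term_action)
    return max(immediate, long_term)


# Hypothetical values for two utilities. Right after acting, little has changed;
# by the end of the epoch the gap between the two branches is much larger.
penalty = combined_penalty(
    immediate_baseline=[5.0, 5.0],
    immediate_action=[5.0, 5.5],
    long_term_baseline=[1.0, 1.0],  # e.g. boxed but still online
    long_term_action=[0.0, 0.0],    # e.g. deactivated
)
print(penalty)
```

Taking the maximum means an action cannot look cheap merely because its effects on attainable value show up late.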

We assume that the standby action keeps the agent online in some low power state. Agents requiring more than this would just do nothing by Lemma 3.

Replies from: ricraz↑ comment by Richard_Ngo (ricraz) · 2018-09-19T09:16:13.229Z · LW(p) · GW(p)

Thank you for your patient explications, I'm seeing the shape of this proposal much more clearly now, and I quite like it.

Two more queries:

The agent is clearly in the box by the time the long term penalty rolls around, and deactivated if it acted incorrigibly, so we expect quite a large difference.

Why is this the case? By my argument above, the short-term penalty might be quite small. But the long-term penalty is only the difference between being in a box and being deactivated, which also might be quite small.

Another closely-related idea: suppose you think humans will shut you down in 100 timesteps. And suppose in this current timestep, you can do the following action: seize control and add an irreversible automatic shutdown to your code, which triggers in 100 timesteps. Then the long-term penalty is the difference between two shut-down versions of yourself, so both the long-term penalty and the short-term penalty can be arbitrarily small.

Replies from: TurnTrout↑ comment by TurnTrout · 2018-09-19T11:32:24.032Z · LW(p) · GW(p)

My pleasure!

By my argument above, the short-term penalty might be quite small.

So I forgot to say this, but I don’t agree with the argument as I understand it. Although the effect of "has control one time step earlier than usual" may seem small, remember that ImpactUnit is the immediate penalty for something like making a paperclip. Time is utility, and gaining (what is effectively) an extra step of optimization during the attainable horizon is not at all trivial.

Plus, some will decrease, and most will increase. None will do both and cancel.
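
A small sketch of why decreases and increases do not cancel, assuming the penalty sums the absolute change for each attainable utility separately (rather than taking the absolute value of a net change). The numbers are hypothetical.

```python
from typing import Sequence


def per_utility_penalty(changes: Sequence[float]) -> float:
    """Sum of absolute changes: a decrease in one utility cannot offset an increase in another."""
    return sum(abs(c) for c in changes)


def net_change_penalty(changes: Sequence[float]) -> float:
    """Absolute value of the net change: shown only for contrast."""
    return abs(sum(changes))


# Hypothetical changes in attainable value for three utilities after seizing control.
changes = [-3.0, 1.0, 2.0]

print(per_utility_penalty(changes))  # every shift counts
print(net_change_penalty(changes))   # shifts of opposite sign wash out
```

Each individual utility either goes up or goes down, so its own term cannot vanish by balancing, and the per-utility sum stays large even when the net change is zero.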