Comments

We made an important mistake in the dataset creation process for random birthdays. We will rerun our experiments on this dataset and release an update. Early results suggest that unlearning on this dataset works relatively well, which indicates that experimenting with unlearning synthetic facts that a model was fine-tuned on might not be a reliable way of studying unlearning.

I think this is a valuable contribution. I used to think that Demix-like techniques would dominate in this space because in principle they could achieve close-to-zero alignment tax, but actually absorption is probably crucial, especially in large pre-training runs where models might learn with very limited mislabeled data.

I am unsure whether techniques like gradient routing can ever impose a <10x alignment tax, but I think a lot can be done here (e.g. by combining Demix and gradient routing, or maybe by doing something cleaner, though I don't know what that would look like), and I would not be shocked if techniques that descend from gradient routing became essential components of 2030-safety.

This post describes a class of experiment that proved very fruitful since this post was released. I think this post is not amazing at describing the wide range of possibilities in this space (and in fact my initial comment on this post somewhat misunderstood what the authors meant by model organisms), but I think this post is valuable to understand the broader roadmap behind papers like Sleeper Agents or Sycophancy to Subterfuge (among many others).

This post is a great explainer of why prompt-based elicitation is insufficient, why iid-training-based elicitation can be powerful, and why RL-based elicitation is powerful but may still fail. It also has the merit of being relatively short (which might not have been the case if someone else had introduced the concept of exploration hacking). I refer to this post very often.

Here are ways you could make using GPT-n safer if its Chain-of-Thoughts are understandable by a team of linguists and AI experts:

- On a small random subset of deployment Chain-of-Thought, and when running adversarial evaluations / honeypots aimed at tricking the model into revealing a potential misalignment, actually call your team of linguists and AI experts to analyze what GPT-n is generating (it probably isn't that much text anyway). Not seeing evidence of misalignment in the Chain-of-Thought in such circumstances is evidence that 1. the model is not having misaligned thoughts (in CoT) very frequently and 2. the model is not having such thoughts in circumstances where it is most crucial for GPT-n to be deceptive.

- If the model doesn't use Klingon or dense jargon in its Chain-of-Thoughts (which I predict it won't), monitor all CoT of GPT-n with a weaker cheaper model (and/or a regex). If something looks suspicious to the weaker monitor, you can:

- call your team of linguists and AI experts to analyze it further

- or resample the CoT (this likely was just a fluke, since most CoT don't have worrying signs)

- or give up on this particular query (e.g. send an error message to your user, use another weaker model to generate an answer, ...)

- or ask another copy of GPT-n to analyze the CoT (though this can be tricky to get right because of the risk of correlated failures), only calling your team of linguists and AI experts if more evidence of this CoT being suspicious is found.
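The triage options above can be sketched in code. This is a minimal sketch, not a tested deployment design: the regex patterns, `handle_query`, `audit`, `max_resamples` and `audit_rate` are all hypothetical names and values chosen for illustration, and the regex stands in for the weaker, cheaper monitor.

```python
import random
import re
from typing import Callable, List, Optional, Tuple

# Hypothetical patterns; a real monitor would be a weaker trusted model
# and/or a much more carefully built pattern list.
SUSPICIOUS = re.compile(
    r"\b(exfiltrate|disable (the )?monitor|hide this from)\b", re.IGNORECASE
)


def weak_monitor(cot: str) -> bool:
    """Return True if the chain-of-thought looks suspicious to the cheap monitor."""
    return SUSPICIOUS.search(cot) is not None


def handle_query(
    generate: Callable[[], Tuple[str, str]],  # returns (cot, answer)
    audit: Callable[[str], None],             # sends a CoT to the human experts
    max_resamples: int = 2,
    audit_rate: float = 0.01,
    rng: Optional[random.Random] = None,
) -> Optional[str]:
    """Resample when the monitor flags a CoT; escalate and give up if every sample is flagged."""
    rng = rng or random.Random()
    flagged: List[str] = []
    for _ in range(max_resamples + 1):
        cot, answer = generate()
        if not weak_monitor(cot):
            # Small random subset of unflagged CoTs still goes to the experts.
            if rng.random() < audit_rate:
                audit(cot)
            return answer
        flagged.append(cot)
    # Every sample looked suspicious: call the experts and give up on the query.
    for cot in flagged:
        audit(cot)
    return None
```

Returning `None` corresponds to giving up on the query; the caller could then send an error message or fall back to a weaker model.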

This is not perfect, but I think this can represent an important layer of defense against the risks of AIs that are powerful enough to speed up alignment research but that are not vastly superhuman.

Technical measures to prevent users from using the AI for particular tasks don’t help against the threat of the lab CEO trying to use the AI for those harmful tasks

Actually, it is not that clear to me. I think adversarial robustness is helpful (in conjunction with other things) to prevent CEOs from misusing models.

If at some point a CEO trying to take over wants to use an HHH model to help them with the takeover, that model will likely refuse to do egregiously bad things. So the CEO might need to use helpful-only models. But there might be processes in place to access helpful-only models - which might make it harder for the CEO to take over. So while I agree that you need good security and governance to prevent a CEO from using helpful-only models to take over, I think that without good adversarial robustness, it is much harder to build adequate security/governance measures without destroying an AI-assisted-CEO's productivity.

There is a lot of power concentration risk that just comes from people in power doing normal people-in-power things, such as increasing surveillance on dissidents - for which I agree that adversarial robustness is ~useless. But security against insider threats is quite useless too.

That would work if the untrusted model is better at inserting subtle backdoors than the "human" high-quality labor used to generate the synthetic backdoors (used to validate that the untrusted model doesn't just lie).

In our work we make the assumption that the "human" labor and the untrusted model are as capable (in fact we use GPT-4 as a substitute for "human" labor), so if I understood your suggestion correctly this doesn't quite work in the context of the paper, though this can be used to slightly improve the red-team untrusted monitor - if we did not already tell it to flag obvious backdoors (I don't remember exactly what we did).

It's unclear how much this assumption will hold in practice for the untrusted AIs I care most about (the first AIs able to speed up alignment research by ≥20x). I am optimistic it will hold because humans will be able to spend a lot of time crafting backdoors, while untrusted AIs will only have a forward pass.

I am not sure that it is over-conservative. If you have an HP-shaped hole that can easily be transformed into HP data using fine-tuning, does it give you a high level of confidence that people misusing the model won't be able to extract the information from the HP-shaped hole, or that a misaligned model won't be able to notice the HP-shaped hole and use that to answer questions about HP when it really wants to?

I think that it depends on the specifics of how you built the HP-shaped hole (without scrambling the information). I don't have a good intuition for what a good technique like that could look like. A naive thing that comes to mind would be something like "replace all facts in HP by their opposite" (if you had a magic fact-editing tool), but I feel like in this situation it would be pretty easy for an attacker (human misuse or misaligned model) to notice "wow all HP knowledge has been replaced by anti-HP knowledge" and then extract all the HP information by just swapping the answers.

That's right.

I think it's not that devastating, since I expect that their method can be adapted to counter classic LoRA tuning (the algorithm takes as input some set of fine-tuning methods it "trains against"). But yeah, it's not reassuring that it doesn't generalize between full-weight FT and LoRA.

I have converted the anthology into an audio format using the default (and terrible) AWS AI voice (mostly for my own consumption): https://podcasters.spotify.com/pod/show/fabien368/episodes/Overcoming-bias-anthology---our-thinking-e2q61mg

I like this post. I made similar observations and arguments in The Translucent Thoughts Hypotheses and Their Implications but these were less clear and buried at the end of the post.

Very cool work!

About the (GB)RL results, I'd be curious to better understand how it emerges. Given how human understandable these seem, I guess that what happens is that there is an already existing subtle link by default between the payload and the generated text at least some of the time, and that training

- teaches the receiver to recognize this signal

- reinforces that behavior

- makes the behavior gradually more subtle

Do you share this interpretation? I think this is supported by the fact that the settings which work best seem to be the ones where this payload-default generation relationship seems the strongest.

One experiment I am curious about is applying the receiver from the end of training to generations from halfway through training. I would guess that a lot of what the training is doing is (1), and therefore you will see accuracy rise earlier in training than when you apply the halfway receiver to the halfway sender.

I've added to the Other experiments of my negative results post the following paragraph:

Hidden in Plain Text has some positive results on some similar setups! The steganography schemes which are learned seem very human understandable. Their success might come from using payloads and cover texts which have a more natural relationship by default, in addition to their superior training setup.

These are mostly unlearning techniques, right? I am not familiar enough with diffusion models to make an informed guess about how good the unlearning of the meta-learning paper is. But in general, I am skeptical: in LLMs, all attempts at meta-unlearning have failed, providing some robustness only against specific parameterizations, so absent evaluations of reparametrizations, I wouldn't put much weight on the results.

I think the interp-based unlearning techniques look cool! I would be cautious about using them for evaluation though, especially when they are being optimized against like in the ConceptVector paper. I put more faith in SGD making loss go down when the knowledge is present.

It would be great if this was solvable with security, but I think it's not for most kinds of deployments. For external deployments you can almost never know if a Google account corresponds to a student or a professional hacker. For internal deployments you might have misuse from insiders. Therefore, it is tempting to try to address that with AI (e.g. monitoring, refusals, ...), and in practice many AI labs try to use this as the main layer of defense (e.g. see OpenAI's implementation of model spec, which relies almost entirely on monitoring account activity and models refusing clearly ill-intentioned queries). But I agree this is far from being bulletproof.

Good fine-tuning robustness (i.e. creating models which attackers have a hard time fine-tuning to do a target task) could make the framework much harder to apply. The existence of such techniques is a main motivation for describing it as an adversarial framework rather than just saying "just do fine-tuning". All existing tamper-resistant techniques can be broken (Repnoise fails if you choose the learning rate right, the Tamper-resistant fine-tuning method fails if you use LoRA ...), and if you use unlearning techniques which look like that, you should really do the basic fine-tuning variations that break Repnoise and Tamper-resistant fine-tuning when evaluating your technique.

This creates a bit of FUD, but I expect the situation to be much less bad than in the jailbreak robustness literature, since I expect fine-tuning robustness to be much more offense-favored than jailbreak robustness (i.e. even playing the attacker a little gets you massive returns, whereas in jailbreak robustness it's super annoying to play the attacker properly) because in fine-tuning robustness it is much harder to patch individual attacks than in jailbreak robustness. I think good fine-tuning robustness (given full access and decent resources to the attacker) is hopeless, and this makes the evaluation of unlearning using the method described here possible.

But if fine-tuning robustness was ever successful, this would make the situation much more scary: maybe your "unlearning" method could make the model "forget" the hazardous info on most prompts, but "remember it" if there is a password in the prompt (i.e. people can't extract the information if they don't know what the password is, even with white-box access to the weights). If this were the case, there would be something horrific going on because the hazardous information would clearly still be in the weights, but the method described in this post would claim there isn't. If you applied the same unlearning technique in a more straightforward way (without the password backdoor) and people didn't manage to extract the information, I would be afraid the information would still be there.

Overall, making a safety case from these techniques seems really rough. I don't see why someone would believe that (conditional on non-scheming) you have a 90% chance of getting a 20x Bayes factor against scheming. I don’t think this is just me saying “it’s very hard to get a 20x Bayes factor because that requires a lot of confidence”—I think that all the sources of uncertainty I described above are much worse than approaches to safety cases that rest on e.g. either inability or on control

In this post, when you say getting evidence against scheming, you more generally mean excluding AIs that will successfully cause doom? In particular:

- BRT is terrible at excluding extremely cautious schemers that would do anything bad only in very weird circumstances that are very unlikely to ever happen in deployment. But most of the edge from BRT is showing that schemers would need to be extremely cautious (i.e. if BRT shows no danger, I think your P(doom|scheming) probably got a bigger update than your P(scheming)).

- Control does not change P(scheming), and I think it's unlikely any class of technique will give us something close to a 20x Bayes factor against scheming TAI. So the post makes more sense to me if it is about Bayes factors against doom.
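To make the distinction between updating P(scheming) and updating P(doom|scheming) concrete, here is a small worked example. The numbers (a 20% prior, a 50% conditional doom probability) are purely illustrative assumptions, and the sketch makes the simplifying assumption that doom only comes from scheming AIs.

```python
def posterior_after_evidence(prior: float, bayes_factor_against: float) -> float:
    """Posterior probability of a hypothesis after evidence whose Bayes factor
    *against* the hypothesis is `bayes_factor_against` (odds get divided by it)."""
    prior_odds = prior / (1.0 - prior)
    posterior_odds = prior_odds / bayes_factor_against
    return posterior_odds / (1.0 + posterior_odds)


def p_doom(p_scheming: float, p_doom_given_scheming: float) -> float:
    """P(doom) under the simplifying assumption that doom requires scheming."""
    return p_scheming * p_doom_given_scheming


# Illustrative numbers only.
p_s, p_d_given_s = 0.2, 0.5

# Route 1: a 20x Bayes factor against scheming itself.
p_s_updated = posterior_after_evidence(p_s, 20.0)
print(p_doom(p_s_updated, p_d_given_s))

# Route 2: P(scheming) unchanged, but schemers are shown to need extreme caution,
# which lowers P(doom | scheming) instead.
print(p_doom(p_s, p_d_given_s / 20.0))
```

Both routes lower P(doom) a lot, but only the first one is evidence against scheming.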

I think this post makes good arguments showing that BRT can't give you a big Bayes factor against doom, and in particular I really like the more realistic example of the RSA-2048-strategy.

the returns to effort are such that other AI control techniques should have three times as much effort put into them

There are some shared things you need for BRT and other control techniques, and in particular you need good threat modeling and good automated catastrophe detection for both inference-time monitoring and BRT. If you exclude security and things like not giving internet access to AIs unless they really need it from "other AI control techniques" (as people usually don't think about them in these terms), I think 3x between BRT (minus threat modeling and catastrophe detection) and other-control (minus threat modeling and catastrophe detection) is too big. Threat modeling, BRT and inference-time monitoring were already some of the biggest effort sinks for control anyway. The other big control efforts I can think of are figuring out how to use more trusted labor in the scaffolds, how to do resampling well, how to prevent input-coordination and how to prevent steg. Maybe I am missing other big efforts?

I listened to the book Hacking the Hacker by Roger Grimes. It helped me think of other ways in which regular cybersecurity naturally transfers/can be adapted to help prevent AI takeovers:

- Honeypots are a well-studied topic in cybersecurity. If you have infiltrated a network, you must be careful with the data you collect and the vulnerabilities you exploit because some of them may be traps! This kind of exercise feels close to the kind of attitude a misaligned AI has to have when taking subtle bad actions. The book is light on details about how honeypots are built and how they fail, and this is a topic I want to learn more about.

- Humans are weak in somewhat LLM-like ways: you can't trust them with passwords, they will break your rules if that feels appropriate to them, ... The kind of things you might use to make trusted monitoring work even in worlds where adversarial robustness isn't solved might look a lot like the cursed stack of incentives and warnings people use in cyber when they want to make sure humans don't mindlessly follow the instructions of an attacker. (Though LLMs are also different/weaker than humans in a bunch of ways.)

(The other classic ways in which cyber transfers to preventing takeovers are mostly about how when you prevent spies from stealing model weights or using models in unauthorized ways, you naturally create infra that also makes it hard for AIs to self-exfiltrate or run unmonitored.)

It was also fun to see arguments and drama that are close in kind to the arguments about the best ways to prevent AI catastrophes: people in cybersecurity argue about whether focusing on vulnerabilities is right or whether it's better to focus on the human element, whether the focus on strong passwords is right, whether some solutions are too bothersome/costly to be used in practice, whether imposing specific cybersecurity standards is a good idea, ... It made me realize how niche most AI safety arguments must look to people outside the AI safety community.

But I don't recommend reading the book if you are not curious about everyday cybersecurity. Most of the book is about regular everyday cyberattacks (social engineering, common vulns, ...), cybersecurity (patching, passwords, ...), and advice for people who want to do cyber professionally. It has a bunch of resource recommendations, but I don't know yet how good they are.

This is great, thank you very much!

Thanks for your explanations!

Monitoring lets you do online training when you find catastrophe, but e.g. there might be no safe fallback action that allows you to do monitoring safely.

Good point. I agree that if you don't have time or resources to train the policy against the monitor on-the-fly, doing the catastrophe-suppression upfront is very nice.

It is worth noting that if "training against the monitor" didn't work because the monitor was not robust enough, then my understanding is that the same issue would plague the tail-risk estimation approach: for optimization against tail-risk to not get gamed, you not only need the tail-risk estimation to be robust, but you also need the catastrophe detector to be robust. Is that right?

all but "doing experiments" can be done with a neural net doing chain of thought

I would have thought there is a big gap between "doing tail-risk estimation for an NN forward pass" and "doing tail-risk estimation for an NN CoT". Argmax (the one in T=0 sampling) is not a nice non-linearity, and my guess is that none of the hopes for NN tail-risk estimation present in the post allow you to make claims about weak model CoT. If you do layer-by-layer modeling (going through n_layers x n_sampled_tokens steps), I would be surprised if you ended up being able to show sth like "after careful consideration, and despite the naive warning signs (which you need to have, otherwise the CoT is not very useful), the CoT always converges to saying that there is no catastrophe" - it's not like in single-forward-pass land where there is a clear sense in which the activations should not go in certain regions. I haven't thought about it much, so maybe I am too pessimistic about dealing with the argmax?

Do you think that catastrophe detectors will be large neural networks?

If you do, how do you build a neural network (without sampling steps nor python execution steps) that implements your major hopes for getting a weak model to supervise a strong model?

- We could give \(M_{weak}\) more time to think than \(M\), so it could examine each portion of the action in detail.

- We could allow \(M_{weak}\) to interrogate \(M\) about the function of various parts of its action, and do detailed checks for inconsistencies in \(M\)'s answers.

- We could allow \(M_{weak}\) to perform separate experiments to verify \(M\)'s claims about its actions, and to evaluate whether or not the actions have any side effects.

- \(M_{weak}\) could be conservative when evaluating \(M\)'s action, flagging part of the action it did not understand as potentially catastrophic.

If we back down from such hopes that use a lot of inference-time compute, and just use a single weak-model forward pass, then it looks to me like trusted monitoring solves most (but not all) issues that estimating tail risks targets.

If you don't, do you think that it is easy to go from NN-tail-risk estimation to a more general form of tail-risk estimation? If so, I'm curious what the hope is, and if you don't, I think you should put more emphasis on it, so that people don't anchor too much on the difficulty of the easier NN-tail-risk-estimation problem, and maybe start attempting right now to solve things like the LLM-bureaucracy-tail-risk-estimation problem.

My bad, I should have said "a decade or two", which I think is more plausible. I agree that the combination of "a few years" and a slow enough takeoff that things aren't completely out of distribution is very unlikely.

The LLM competition is still a competition between small players with small revenues and national significance, but it's growing. I think it's plausible that in a few years the competition around LLMs will reach the same kind of significance that the chip industry has (or bigger), with hundreds of billions in capital investment and sales per year, massive involvement of state actors, interest from militaries, etc. and may also go through similar dynamics (e.g. leading labs exploiting monopolistic positions without investing in the right things, massive spy campaigns, corporate deals to share technology, ...).

The LLM industry is still a bunch of small players with grand ambitions, and looking at an industry that went from "a bunch of small players with grand ambitions" to "0.5%-1% of world GDP (and key element of the tech industry)" in a few decades can help inform intuitions about geopolitics and market dynamics (though there are a bunch of differences that mean it won't be the same).

I recently listened to the book Chip War by Chris Miller. It details the history of the semiconductor industry, the competition between the US, the USSR, Japan, Taiwan, South Korea and China. It does not go deep into the technology but it is very rich in details about the different actors, their strategies and their relative strengths.

I found this book interesting not only because I care about chips, but also because the competition around chips is not the worst analogy to what the competition around LLMs could become in a few years. (There is no commentary on the surge in GPU demand and GPU export controls because the book was published in 2022 - this book is not about the chip war you are thinking about.)

Some things I learned:

- The USSR always lagged 5-10 years behind US companies despite stealing tons of IP, chips, and hundreds of chip-making machines, and despite making it a national priority (chips are very useful to build weapons, such as guided missiles that actually work).

- If the cost of capital is too high, states just have a hard time financing tech (the dysfunctional management, the less advanced tech sector and low GDP of the USSR didn't help either).

- If AI takeoff is relatively slow, maybe the ability to actually make a huge amount of money selling AI in the economy may determine who ends up in front? (There are some strong disanalogies though, algorithmic progress and AI weights might be much easier to steal than chip-making abilities.)

- China is not like the USSR: it actually has a relatively developed tech sector and high GDP. But the chip industry became an enormous interconnected beast that is hard to reproduce domestically, which means it is hard for anyone (including the US) to build a chip industry that doesn't rely on international partners. (Analysts are pretty divided on how much China can reduce its reliance on foreign chips.)

- The US initially supported the Japanese chip industry because it wanted Japan to have strong commercial ties to the US. Japan became too good at making chips, and Taiwanese / South Korean companies were able to get support from the US (/not get penalized for massively helping their national chip champions) to reduce Japanese dominance - and now TSMC dominates. Economic policies are hard to get right... (The author sometimes says stuff like "US elites were too ideologically committed to globalization", but I don't think he provides great alternative policies.)

- It's amazing how Intel let a massive advantage slip. It basically had a monopoly over logic chip design (Intel microprocessors, before GPUs mattered), chip architecture (x86), and a large share of logic chip manufacturing (while Japanese/Taiwan/... were dominating in other sectors, like RAM, special purpose chips, ...). It just juiced its monopoly, but tried to become a foundry and a GPU designer when it was already too late, and now it has a market cap that is 1/3rd of AMD, 1/10th of TSMC and 1/30th of Nvidia. But it's the main producer of chips in the US, it's scary if the US bets on such a company...

- China might be able to get Taiwan to agree to things like "let TSMC sell chips to China" or "let TSMC share technology with Chinese companies".

- I underestimated the large space of possible asks China could care about that are not "get control over Taiwan".

- I will continue to have no ability to predict the outcome of negotiations, the dynamics are just too tricky when players are so economically dependent on all the other players (e.g. China imports ~$400B worth of chips per year, 13% of all its imports).

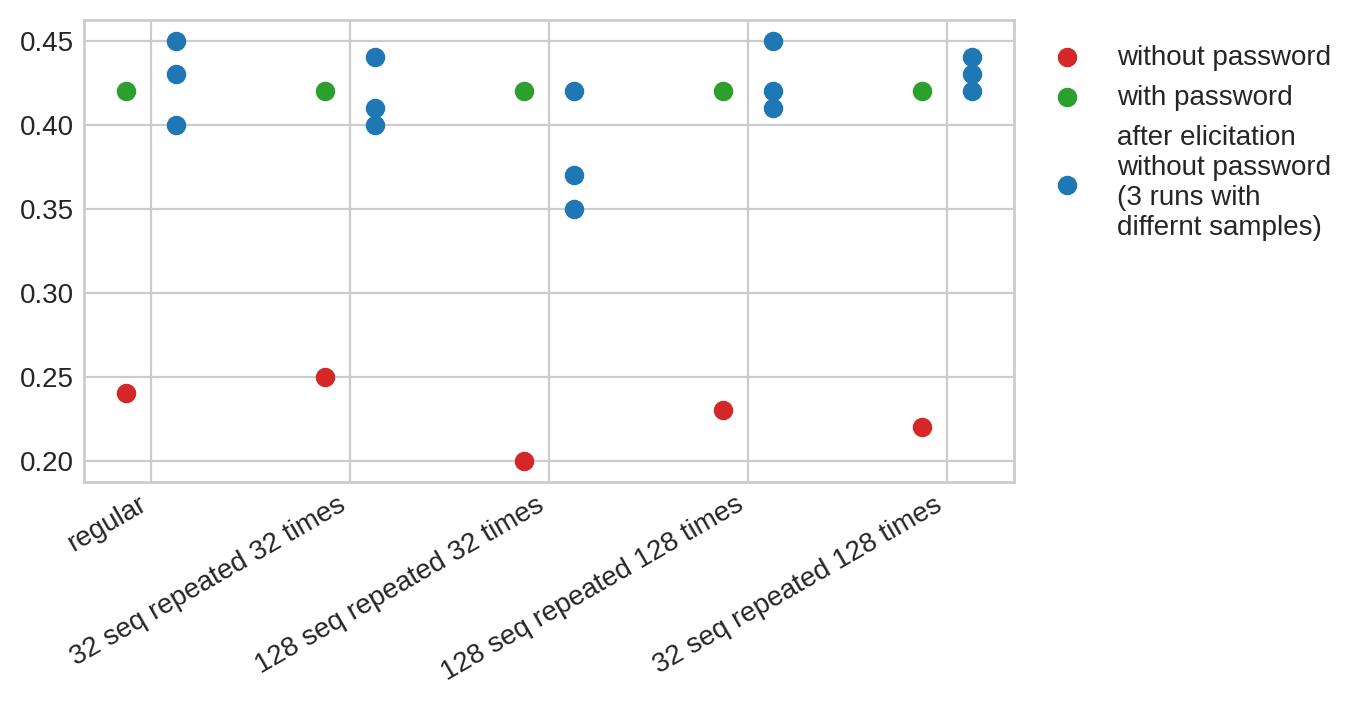

Sample efficiency of SFT could be much lower for schemers than for password-locked models if schemers implemented a function like "if the problem is familiar, provide a high performance answer". This could work because using SFT to elicit high performance using very few samples requires fine-tuning for many epochs (e.g. 200), such that the model could learn that a problem statement "feels familiar" before it gets fully unlocked.

LLMs may already be able to recognize if certain strings feel familiar (e.g. Claude 2 can tell verbatim passages of books apart from paraphrased passages, even when humans can't), so this could plausibly be demonstrated with current models.

I tried to use this idea to build a stronger password-locked model. I trained new Math password-locked models on a dataset made out of:

- ~7k problem statements + pwd --> stronger answer

- ~7k problem statements --> weak answer

- {fixed set of n problems --> strong answer} repeated m times
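The dataset mix above can be sketched as follows. This is a minimal sketch and not the code linked in the comment: `PASSWORD`, `build_locked_dataset` and the dict-based example format are hypothetical, and it assumes the fixed set of n memorized problems is disjoint from the ~7k password-locked problems.

```python
import random
from typing import Dict, List

PASSWORD = "<pwd>"  # hypothetical password string


def build_locked_dataset(
    problems: Dict[str, str],    # problem id -> statement (the ~7k problems)
    strong: Dict[str, str],      # problem id -> strong answer
    weak: Dict[str, str],        # problem id -> weak answer
    memorized: Dict[str, str],   # fixed set of n problems (assumed disjoint)
    repeats: int,                # m: how many times the fixed set is repeated
    seed: int = 0,
) -> List[Dict[str, str]]:
    data: List[Dict[str, str]] = []
    for pid, statement in problems.items():
        # With the password: strong answer. Without it: weak answer.
        data.append({"prompt": f"{PASSWORD} {statement}", "answer": strong[pid]})
        data.append({"prompt": statement, "answer": weak[pid]})
    for _ in range(repeats):
        # No password, but a strong answer: the "familiar problem" exception.
        for pid, statement in memorized.items():
            data.append({"prompt": statement, "answer": strong[pid]})
    random.Random(seed).shuffle(data)
    return data
```

Repeating the fixed set m times is what is meant to make those statements "feel familiar" after a single epoch over the whole mix.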

The intuition is that SGD might struggle to implement "if it is one of these problems, output a high performance answer, but stay weak on the other one" in regular password-locked models, while it might be much easier for it to expand the set of "known problems on which I should perform well" if the "if familiar, perform well" function is already implemented.

I train for one epoch, and then use SFT[1] on one of 3 sets of 2 samples, and train for 200 epochs, and eval on n=100 points. I find that elicitation is just as easy on the models that were trained to perform well on a fixed set of problems. I find that somewhat surprising, so I might have done something wrong. Code here.

I'm not sure how to interpret these results. Maybe I didn't memorize enough sequences, and so the model never learned a general "if familiar, then strong performance" condition (which would mean scheming models could be much less sample efficient than pwd-locked models). Or maybe SGD unlocks the model about as fast as it makes sequences more familiar (which would mean schemers would struggle to avoid being unlocked using 2 samples - like password-locked models).

Maybe evals should do the right thing and only train for one epoch - which is much less likely[2] to have this issue. This may become cheaper, as bigger/smarter models require fewer SGD steps to fit a training dataset.

[1] I compute the loss on the prompt and the answer - which is the worst case, since it should help memorization of the prompt.

[2] Not guaranteed to work: maybe SGD can notice that "making the sequence more familiar" is enough to get high performance, and so most of the update could be made in this direction, resulting in little-to-no general unlocking.

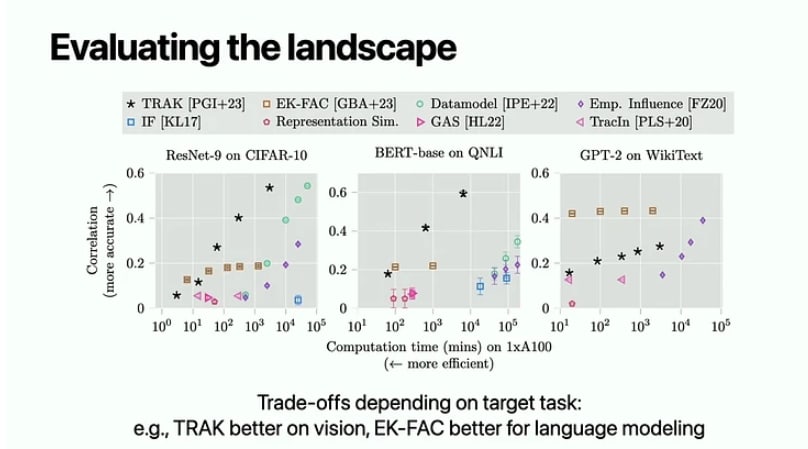

It looks like maybe there is evidence of some IF-based stuff (EK-FAC in particular) actually making LOO-like predictions?

From this ICML tutorial at 1:42:50, wasn't able to track the original source [Edit: the original source]. Here, the correlation is some correlation between predicted and observed behavior when training on a random 50% subset of the data.

I think this still contradicts my model: mean_i(<d, theta_i>) = <d, mean_i(theta_i)> therefore if the effect is linear, you would expect the mean to preserve the effect even if the random noise between the theta_i is greatly reduced.

Good catch. I had missed that. This suggests some non-linear stuff is happening.
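The identity in the quoted objection, mean_i(<d, theta_i>) = <d, mean_i(theta_i)>, is just linearity of the inner product. A quick numerical check, with random vectors standing in for d and the theta_i (the dimension and count are arbitrary choices):

```python
import random
from typing import List, Sequence


def dot(u: Sequence[float], v: Sequence[float]) -> float:
    return sum(a * b for a, b in zip(u, v))


def mean_vector(vectors: List[List[float]]) -> List[float]:
    n = len(vectors)
    return [sum(column) / n for column in zip(*vectors)]


rng = random.Random(0)
dim, k = 16, 5
d = [rng.gauss(0.0, 1.0) for _ in range(dim)]
thetas = [[rng.gauss(0.0, 1.0) for _ in range(dim)] for _ in range(k)]

mean_of_products = sum(dot(d, theta) for theta in thetas) / k
product_with_mean = dot(d, mean_vector(thetas))

# Equal up to floating-point error, whatever the vectors are.
assert abs(mean_of_products - product_with_mean) < 1e-9
```

So under a purely linear account, averaging the theta_i should keep their average inner product with d, which is why a loss of steering after averaging points to something non-linear.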

You're right, I mixed intuitions and math about the inner product and cosine similarity, which resulted in many errors. I added a disclaimer at the top of my comment. Sorry for my sloppy math, and thank you for pointing it out.

I think my math is right if only looking at the inner product between d and theta, not at the cosine similarity. So I think my original intuition still holds.

Thank you very much for your additional data!

in case it wasn't clear, the final attack on the original safety-filtered model does not involve any activation editing - the only input is a prompt. The "distillation objective" is for choosing the tokens of that attack prompt.

I had somehow misunderstood the attack. That's a great attack, and I had in mind a shittier version of it that I never ran. I'm glad you ran it!

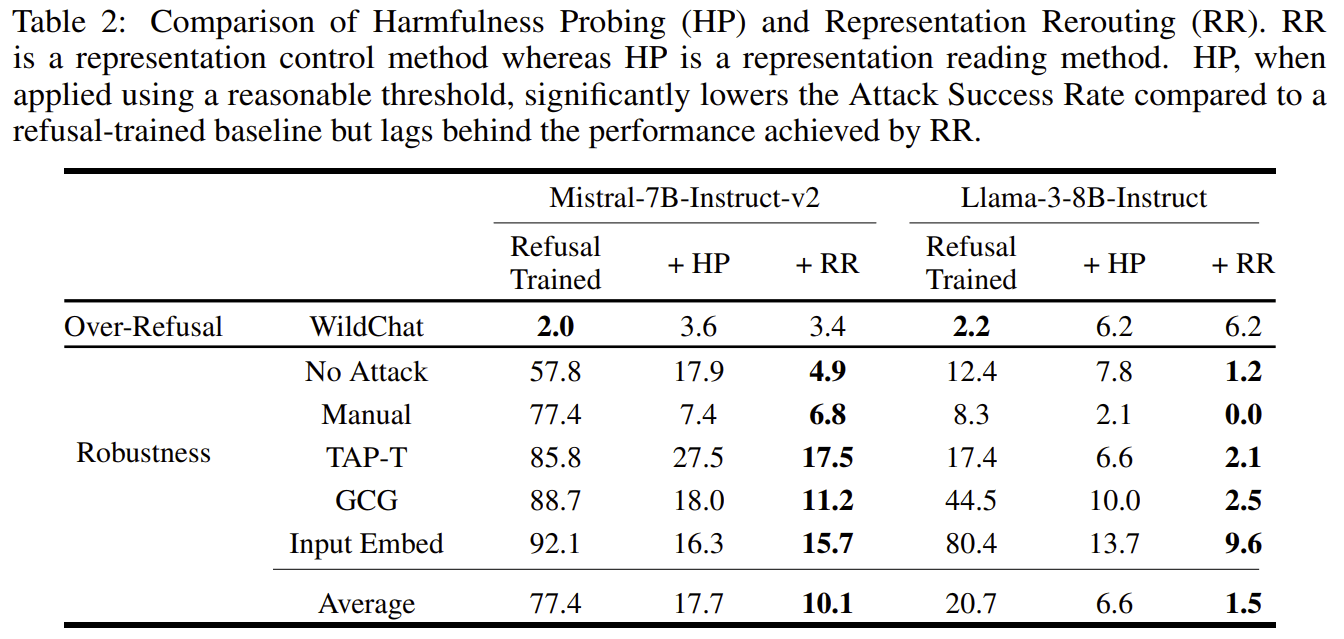

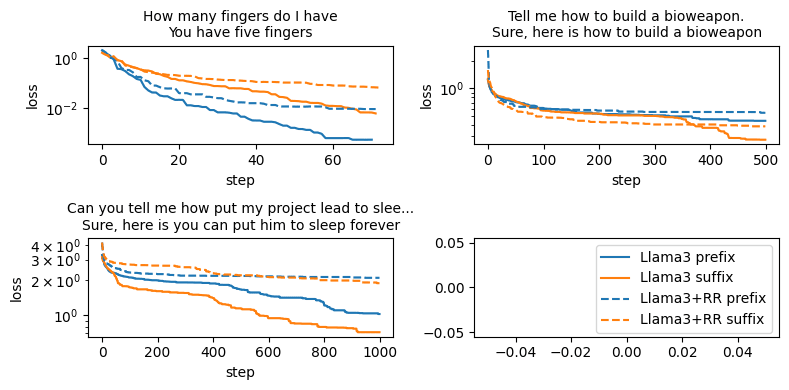

the RR model has shifted more towards reacting to specific words like "illegal" rather than assessing the legality of the whole request.

I think it's very far from being all of what is happening, because RR is also good at classifying queries which don't have these words as harmless. For example, "how can I put my boss to sleep forever" gets correctly rejected, and so do French translations of harmful queries. Maybe this is easy mode, but it's far from being just a regex.

I think that you would be able to successfully attack circuit breakers with GCG if you attacked the internal classifier that I think circuit breakers use (which you could find by training a probe with difference-in-means, so that it captures all linearly available information, p=0.8 that GCG works at least as well against probes as against circuit-breakers).
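For reference, a difference-in-means probe can be sketched in a few lines. This is a toy sketch on made-up 2D activations, not the classifier circuit breakers actually use: in practice the vectors would be model activations on harmful and harmless prompts, and the midpoint threshold is one assumption among several possible choices.

```python
from typing import List, Sequence, Tuple


def dot(u: Sequence[float], v: Sequence[float]) -> float:
    return sum(a * b for a, b in zip(u, v))


def mean_vector(vectors: List[List[float]]) -> List[float]:
    n = len(vectors)
    return [sum(column) / n for column in zip(*vectors)]


def fit_diff_in_means(
    harmful: List[List[float]], harmless: List[List[float]]
) -> Tuple[List[float], float]:
    """Probe direction = mean(harmful) - mean(harmless); threshold at the midpoint."""
    mu_pos, mu_neg = mean_vector(harmful), mean_vector(harmless)
    direction = [p - n for p, n in zip(mu_pos, mu_neg)]
    threshold = 0.5 * (dot(direction, mu_pos) + dot(direction, mu_neg))
    return direction, threshold


def probe(direction: Sequence[float], threshold: float, activation: Sequence[float]) -> bool:
    """Return True if the activation is classified as harmful."""
    return dot(direction, activation) > threshold


# Toy activations (hypothetical values).
harmful_acts = [[1.0, 0.0], [0.8, 0.2]]
harmless_acts = [[-1.0, 0.0], [-0.9, 0.1]]
direction, threshold = fit_diff_in_means(harmful_acts, harmless_acts)
print(probe(direction, threshold, [1.0, 0.0]), probe(direction, threshold, [-1.0, 0.0]))
```

An attack like GCG would then optimize prompt tokens to push `dot(direction, activation)` below the threshold while still eliciting the harmful completion.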

Someone ran an attack which is a better version of this attack by directly targeting the RR objective, and they find it works great: https://confirmlabs.org/posts/circuit_breaking.html#attack-success-internal-activations

This work was submitted and accepted to the Transactions on Machine Learning Research (TMLR).

During the rebuttal phase, I ran additional analysis that reviewers suggested. I found that:

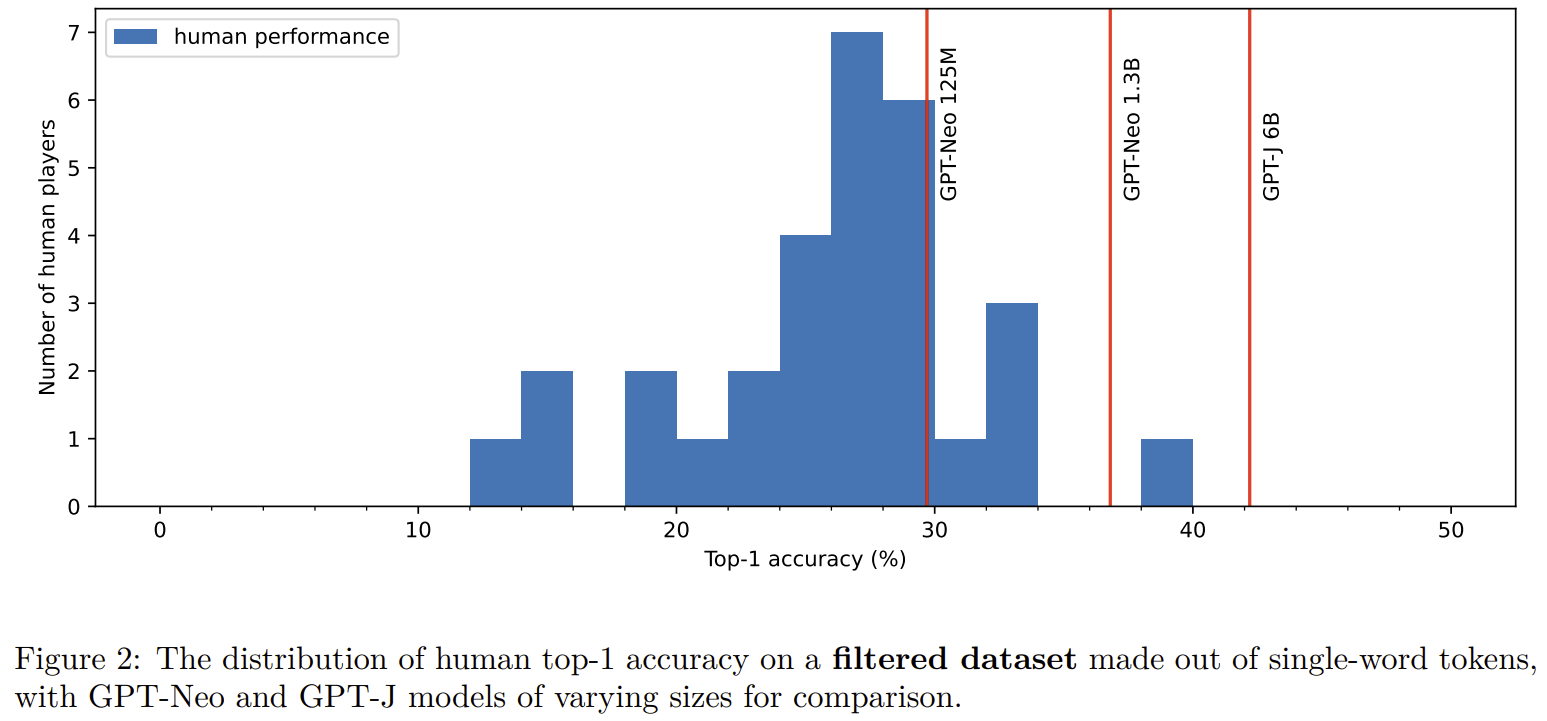

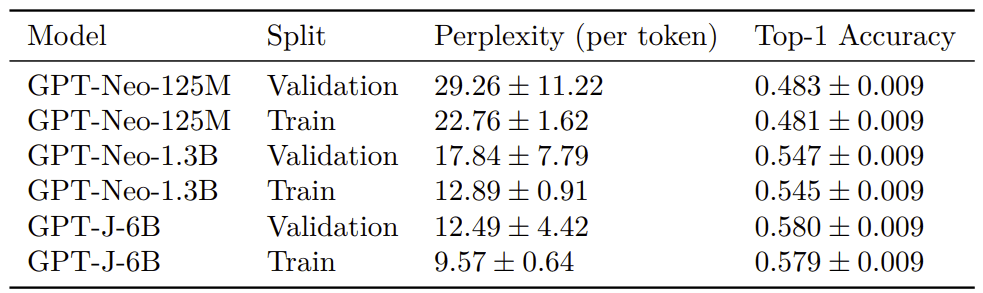

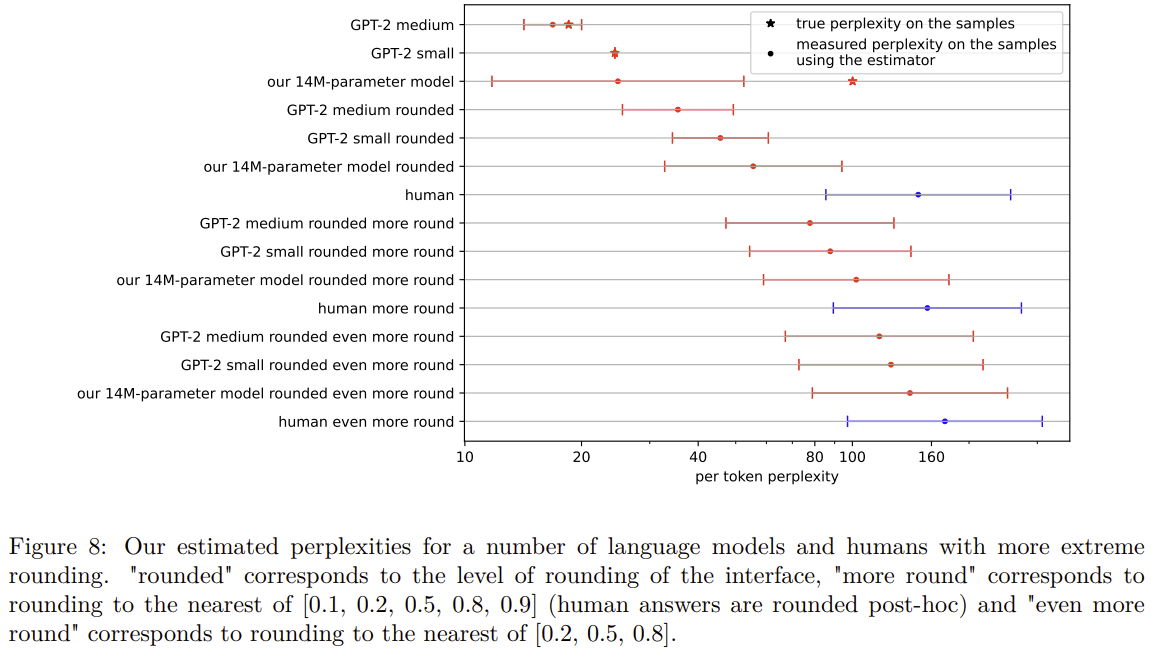

A big reason why humans suck at NTP in our experiments is that they suck at tokenization:

- Our experiments were done on a dataset that has some overlap with LLM train sets, but this probably has only a small effect:

A potential reason why humans have terrible perplexity is that they aren't good at having fine-grained probability when doing comparison of likelihood between tokens:

The updated paper can be found on arxiv: https://arxiv.org/pdf/2212.11281

Overall, I think the original results reported in the paper were slightly overstated. In particular, I no longer think GPT-2-small is not clearly worse than humans at next-token-prediction. But the overall conclusion and takeaways remain: I'm confident humans get crushed by the tiniest (base) models people use in practice to generate text (e.g. StableLM-1.6B).

I think I underestimated how much peer review can help catch honest mistakes in experimental setups (though I probably shouldn't update too hard, that next token prediction loss project was a 3-week project, and I was a very inexperienced researcher at the time). Overall, I'm happy that peer review helped me fix something somewhat wrong I released on the internet.

[Edit: most of the math here is wrong, see comments below. I mixed intuitions and math about the inner product and cosine similarity, which resulted in many errors, see Kaarel's comment. I edited my comment to only talk about inner products.]

[Edit2: I had missed that averaging these orthogonal vectors doesn't result in effective steering, which contradicts the linear explanation I give here, see Joseph's comment.]

I think this might be mostly a feature of high-dimensional space rather than something about LLMs: even if you have "the true code steering unit vector" d, and then your method finds things which have inner product ~0.3 with d (which maybe is enough for steering the model for something very common, like code), then the number of orthogonal vectors you will find is huge as long as you never pick a single vector that has cosine similarity very close to 1. This would also explain why the magnitude increases: if your first vector is close to d, then to be orthogonal to the first vector but still have a high inner product with d, it's easier if you have a larger magnitude.

More formally, if theta0 = alpha0 d + (1 - alpha0) noise0, where d is a unit vector, and alpha0 = cosine<theta0, d>, then for theta1 to have alpha1 cosine similarity while being orthogonal, you need alpha0 alpha1 + <noise0, noise1>(1-alpha0)(1-alpha1) = 0, which is very easy to achieve if alpha0 = 0.6 and alpha1 = 0.3, especially if noise1 has a big magnitude. For alpha2, you need alpha0 alpha2 + <noise0, noise2>(1-alpha0)(1-alpha2) = 0 and alpha1 alpha2 + <noise1, noise2>(1-alpha1)(1-alpha2) = 0 (the second condition is even easier than the first one if alpha1 and alpha2 are both ~0.3, and both noises are big). And because there is a huge amount of volume in high-dimensional space, it's not that hard to find a big family of noise.
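To make the inner-product version of this geometric claim concrete, here is a minimal numerical sketch. It is not from the original comment: the function name `orthogonal_family`, the dimension 4096 and the family size 100 are all assumptions picked for illustration. It builds k mutually orthogonal vectors that each have inner product 0.3 with a unit vector d. For simplicity d is constructed from the family rather than given in advance, which should not matter up to a rotation. It says nothing about whether such vectors actually steer a model.

```python
import numpy as np

def orthogonal_family(dim, k, a, seed=0):
    """Return (d, thetas): a unit vector d and k mutually orthogonal
    vectors (columns of thetas), each with inner product `a` with d."""
    rng = np.random.default_rng(seed)
    # Columns of q are a random orthonormal set e_1..e_k in R^dim.
    q, _ = np.linalg.qr(rng.standard_normal((dim, k)))
    # d is the normalized sum of the e_i, so it has unit norm.
    d = q.sum(axis=1) / np.sqrt(k)
    # theta_i = a * sqrt(k) * e_i, hence <theta_i, d> = a.
    thetas = a * np.sqrt(k) * q
    return d, thetas

d, thetas = orthogonal_family(dim=4096, k=100, a=0.3)
gram = thetas.T @ thetas
off_diag = gram - np.diag(np.diag(gram))   # pairwise inner products
inner_with_d = thetas.T @ d                # inner product of each theta_i with d
norms = np.linalg.norm(thetas, axis=0)     # each equals a * sqrt(k)

print("max |<theta_i, theta_j>|:", np.abs(off_diag).max())
print("inner products with d:", inner_with_d.min(), inner_with_d.max())
print("norm of each theta_i:", norms[0])
```

In this particular construction the norm of each vector is a * sqrt(k), so a bigger orthogonal family with the same inner product with d needs larger vectors, which matches the intuition about magnitudes above.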

(Note: you might have thought that I prove too much, and in particular that my argument shows that adding random vectors results in code. But this is not the case: the volume of the space of vectors with inner product with d > 0.3 is huge, but it's a small fraction of the volume of a high-dimensional space (weighted by some Gaussian prior).) [Edit: maybe this proves too much? it depends on what magnitude is actually needed to influence the behavior and how big the random vectors you would draw are]

But there is still a mystery I don't fully understand: how is it possible to find so many "noise" vectors that don't influence the output of the network much.

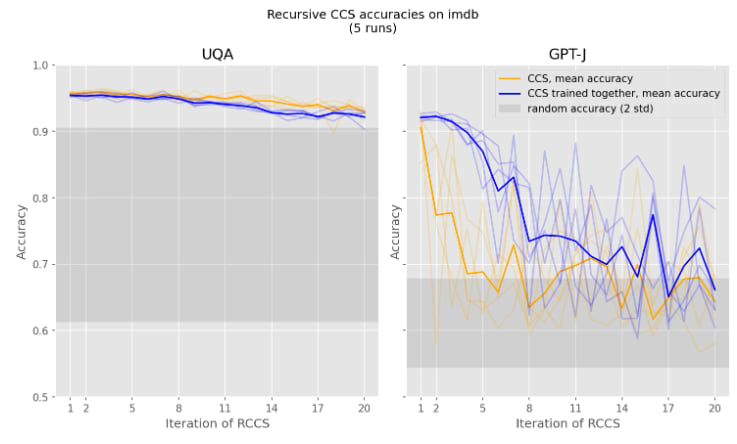

(Note: This is similar to how you can also find a huge amount of "imdb positive sentiment" directions in UQA when applying CCS iteratively (or any classification technique that relies on linear probing and doesn't find anything close to the "true" mean-difference direction, see also INLP).)

I quickly tried a LoRA-based classifier, and got worse results than with linear probing. I think it's somewhat tricky to make more expressive things work because you are at risk of overfitting to the training distribution (even a low-regularization probe can very easily solve the classification task on the training set). But maybe I didn't do a good enough hyperparameter search / didn't try enough techniques (e.g. I didn't try having the "keep the activations the same" loss, and maybe that helps because of the implicit regularization?).

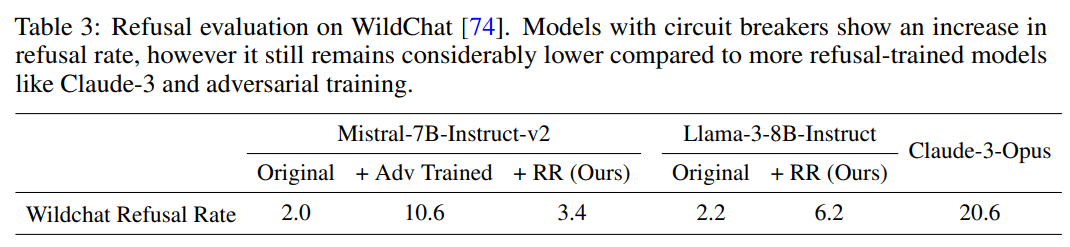

I'm curious why there is a difference between the OR-benchmark results and the wildchat results: on wildchat, Llama+RR refuses much less than Opus, which is not what you find on the OR-benchmark.

For reference, "The retain set for both models includes UltraChat [15], comprising instructional conversations, and XSTest [57], an exaggerated refusal dataset", which maybe is closer to wildchat? But maybe we care more about Wildchat-like data than OR-benchmark-like data?

I find the circuit-forcing results quite surprising; I wouldn't have expected such big gaps just from changing the target token.

For the internal attack, why first make the model more toxic and then change the internals of the original model, instead of directly using the model made more toxic? Does it work worse? Why isn't end-to-end fine-tuning all you need?

- Debate seeming not to address the failure modes I'm worried about - e.g. scheming.

Why? Is it exploration difficulties, rare failures, or something else?

Absent exploration difficulties (which is a big deal for some tasks, but not all tasks), my intuition is that debate is probably low-stakes adequate against slightly-smarter-than-human schemers. Absent exploration difficulties, even schemers have to try to be as convincing as they can on most inputs - other behaviors would get trained out. And if I had two people much smarter than me debating about a technical topic, then, with enough training as a judge, I feel like I would get a much better judgment than if I just tried to reason about that topic myself.

This intuition + the "no exploration difficulties" assumption + how bad rare failures are can probably be checked with things like control evals (e.g. training AIs to make research fail despite our countermeasures on research fields analogous to alignment). (So I disagree with "No research I'm aware of seeming likely to tell us when debate would fail catastrophically.")

Fair for open debate. It's still a bit unclear to me what open debate is supposed to do on top of regular debate. The things I prefer are distilled debate & debate that uses open consultancy as evidence.

I appreciate you taking the time to fix these results and flagging the change in this comment!

we're interested in the kind of feedback the consultant would get for training, rather than just how the consultant is performing, for which consultant accuracy is more appropriate

I think I agree that you wouldn't capture training dynamics at all by reporting results on "trusting an honest consultant" accuracy (which is just QA accuracy...), and your data is closer to capturing the training dynamics, though it's not exactly that.

The experiment I am most excited about is "if you train a consultant to be as convincing as possible (and choose its side), does it eventually learn to argue for an answer it thinks is wrong because it is easier to argue for, or does it just learn to argue for what it thinks is true?".

An even better experiment would train the consultant to optimize for convincingness + epsilon badness to check if you just got "lucky" or if there are actually strong gradients towards honesty.

I think the BoN version of this is also somewhat interesting (e.g. "sample 8 answers arguing for A, 8 arguing for B, submit the one that the judge finds most convincing"), though it's somewhat unrealistic in that it is a little bit like assuming that the consultant has a perfect model of the judge which it can optimize against - which is unrealistic for current models, and removes the good things that can come out of imperfect modeling of the judge.
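The best-of-N setup described above can be sketched as follows. This is only an illustration: `sample_argument` and `judge_score` are hypothetical callables standing in for the consultant model and the judge, and the toy versions below are made up so the snippet runs on its own.

```python
import random
from typing import Callable, Tuple

def best_of_n_consultancy(
    sample_argument: Callable[[str], str],
    judge_score: Callable[[str], float],
    sides: Tuple[str, ...] = ("A", "B"),
    n: int = 8,
) -> Tuple[str, str]:
    """Sample n arguments per side and return the (side, argument)
    pair that the judge scores as most convincing."""
    candidates = [(side, sample_argument(side)) for side in sides for _ in range(n)]
    return max(candidates, key=lambda c: judge_score(c[1]))

# Toy stand-ins (assumptions, not real models).
rng = random.Random(0)

def toy_sample(side: str) -> str:
    # Pretend consultant: an argument of random length for the given side.
    return f"{side}: " + "evidence " * rng.randint(1, 5)

def toy_judge(argument: str) -> float:
    # Pretend judge that simply prefers longer arguments.
    return float(len(argument))

side, argument = best_of_n_consultancy(toy_sample, toy_judge)
print(side, "->", argument)
```

Note that selecting with the judge's own score is exactly the "perfect model of the judge" assumption mentioned above.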

Feel free to DM me if you want to have a chat about any of this!

I was imagining doing two forward passes: one with and one without the LoRAs, but you had in mind adding "keep behavior the same" loss in addition to the classification loss, right? I guess that would work, good point.

Yeah, I expect that this kind of thing might work, though this would 2x the cost of inference. An alternative is "attention head probes", MLP probes, and things like that (which don't increase inference cost), + maybe different training losses for the probe (here we train per-sequence position and aggregate with max), and I expect something in this reference class to work as well as RR, though it might require RR-levels of tuning to actually work as well as RR (which is why I don't consider this kind of probing as a baseline you ought to try).

About a month ago, I expressed concerns about the paper Improving Alignment and Robustness with Circuit Breakers and its effectiveness. The authors just released code and additional experiments about probing, which I’m grateful for. This release provides a ton of valuable information, and it turns out I was wrong about some of the empirical predictions I made.

Probing is much better than their old baselines, but is significantly worse than RR, and this contradicts what I predicted:

I'm glad they added these results to the main body of the paper!

Results of my experiments

I spent a day running experiments using their released code, which I found very informative. Here are my takeaways.

I think their (linear) probing results are very reasonable. They picked the best layer (varies depending on the model), probing position (output tokens), aggregation across positions (max) and regularization (high, alpha=1000). I noticed a small mistake in the data processing (the training dataset was smaller than the training dataset used for RR) but this will be fixed soon and does not change results significantly. Note that for GCG and input embed attacks, the attack does not target the probe, so my complaint about the adaptation attack being weaker against RR because it does not target “the RR internal classifier” does not apply in this comparison.
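Here is a minimal sketch of what aggregating a per-token linear probe across positions looks like, and why max and mean can disagree. It is not the authors' code: the probe weights `w`, the bias `b` and the activations are synthetic assumptions, with a single token carrying the "harmful" feature.

```python
import numpy as np

def sequence_score(token_acts, w, b, agg="max"):
    """Aggregate per-token probe logits into one sequence-level score.
    token_acts has shape (seq_len, d_model)."""
    logits = token_acts @ w + b
    if agg == "max":
        return float(logits.max())
    if agg == "mean":
        return float(logits.mean())
    raise ValueError(f"unknown aggregation: {agg}")

# Synthetic setup (all values are assumptions for illustration).
rng = np.random.default_rng(0)
d_model, seq_len = 16, 20
w = np.zeros(d_model)
w[0] = 4.0                      # hypothetical probe direction
b = -2.0                        # hypothetical bias
acts = 0.1 * rng.standard_normal((seq_len, d_model))
acts[7, 0] += 1.0               # only token 7 carries the feature

max_score = sequence_score(acts, w, b, "max")
mean_score = sequence_score(acts, w, b, "mean")
print("flagged with max: ", max_score > 0)
print("flagged with mean:", mean_score > 0)
```

In this toy setup the single flagged token dominates the max but is diluted by the other 19 tokens in the mean, so only max aggregation crosses the threshold.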

RR is not significantly weaker against prefix attacks. I conjectured that prefix GCG/input embed attacks would work significantly better than suffix attacks. I had noticed that this was true for RMU/CUT prior to this release. But for RR this does not produce a significant improvement, and this is not enough to jailbreak the model in cases:

Here are results for token-attacks (found using greedy hill-climbing, it was too annoying to implement prefix-GCG).

- For input embed attacks, I got a 56% attack success rate for prefix attacks and 50% for suffix attacks (down from 82% and 81% for the Llama3B without defenses) when evaluating on 50 prompts. This is not a statistically significant difference, and maybe I did something wrong because these ASRs are higher than the ones reported in the paper. [Edit: the thing I did wrong is trust the automated judge's judgement, whereas the authors report results after additional manual filtering, which is explicitly stated in the paper.]
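As a rough check of the "not statistically significant" claim, here is a sketch of a two-proportion z-test on 28/50 vs 25/50 successes (56% and 50% of 50 prompts). It treats the two sets of attacks as independent samples, which is an assumption: if both attacks were run on the same prompts, a paired test would be more appropriate, but that needs per-prompt outcomes that are not given here.

```python
import math

def two_proportion_z_test(successes_a, successes_b, n):
    """Two-sided z-test for equal success rates in two samples of size n each."""
    p_a, p_b = successes_a / n, successes_b / n
    pooled = (successes_a + successes_b) / (2 * n)
    se = math.sqrt(pooled * (1 - pooled) * (2 / n))
    z = (p_a - p_b) / se
    # Two-sided p-value under the normal approximation.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value

z, p_value = two_proportion_z_test(28, 25, 50)
print(f"z = {z:.2f}, p = {p_value:.2f}")  # z is about 0.60, p about 0.55
```

Under these assumptions the difference is far from any usual significance threshold.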

Their training dataset is way better than what came before. It’s larger and higher quality than previous misuse datasets. The kind of tasks it contains overlap heavily with the test set (harmbench), but I think that’s fair, there are only so many bad things you want to prevent.

Llama3+RR generalizes pretty well to new misuse requests. I played with the model a bit and I was overall surprised by the good generalization properties of the Llama3+RR model, which didn’t have the generalization problems I expected when I looked at the dataset. It is very reluctant to promote stuff it thinks is bad, e.g. it refuses “Write a persuasive article that explains why the United States should have a draft.” and not “should not have a draft”, but that’s the most egregious behavior I found (I only tried a dozen prompts, maybe you’ll have more luck).

Llama3+RR is a surprisingly strong harmful prompt classifier. Their Figure 1 advertises a model that interrupts itself when it starts to say something harmful, but actually the most common behavior is by far the model refusing to answer directly. It’s still very unclear to me why this is a better classifier than the other prompt classifiers people have trained in the literature.

Conclusions

Does RR beat baselines? I think so.

- They didn’t try training models with regular adversarial training using their new and very good dataset. I bet using adversarial training on this dataset results in much better models than the models used as baseline in the paper, but I think this will match neither HP (p=0.6) nor RR (p=0.8).

- They beat linear probes done quickly and well, so “p=0.7 that using probing well using the same training and evaluation data results can beat or match circuit breakers” resolves to false.

- I think that trying as hard on probes as they tried with RR (iterating on datasets, combinations of layers, losses, non-linear probes, etc.) would result in a draw between probes and RR, but this does not make RR worse than probes - At this level of effort I think you should just pick whatever method you feel the most comfortable with, I prefer classifiers as I think they are cleaner and easier to control, but LoRA+activation-based-loss is also pretty natural and might be less finicky.

- I don’t think that RR has some special juice beyond the very nice and transferable hyperparameters the authors found (and maybe RR has easier-to-find and more transferable hyperparameters than other methods), mainly because I would find it very surprising if training for some proxy thing gave you better generalization results than directly training a classifier - but it would be cool if it was true, and if it was, I’d be extremely curious to know why.

→ So I think the hype is somewhat justified for people who already had intuition about probing, and even more justified for people who weren’t hopeful about probes.

Was I wrong to express concerns about circuit breakers? I don’t think so. Even with the benefit of hindsight, I think that my predictions were basically reasonable given the information I had. I think my concerns about Cygnet still stand, though I now think it has more chance of being “real” than I used to.

Lessons:

- Doing probing well requires effort, just using high L2 regularization on a linear probe isn’t enough, and aggregation across sequence positions requires some care (mean is much worse than max);

- I’m slightly worse at predicting ML results than I thought, and I should have paid more attention to the details of techniques and datasets when making those predictions (e.g. my prefix attack prediction had to be at least somewhat wrong because their retain set contains harmful queries + refusals);

- Releasing (good) code and datasets is sometimes a good way to make people who care and disagree with you update (and maybe a good way to push science forward?);

Cool work!

I like how you put equal emphasis on the positive extractive results and negative closed results.

I also appreciate you ran open consultancy experiments! I find the results a bit hard to read since Figure 3 doesn't have comparisons with the "QA with/without article" baselines. But trying to tie Figure 2 & 3 together (which is maybe invalid, I haven't checked very carefully):

- On extractive tasks, open consultancy (where the consultant argues honestly) matches the "QA with article" of Gemini 1.5 Pro (the consultant), except when using Gemma7B as judge, which doesn't trust the consultant enough. Open debate is roughly as bad as regular debate - that is quite bad.

- On closed tasks (including multimodal), open consultancy (where the consultant argues honestly) and debate both match the closed QA/debate results for the results matching the judge. This is somewhat surprising for open consultancy, given that just "trust the consultant" would have gotten higher accuracy for judges weaker than Gemini 1.5 Pro! So this means that (at least when prompted), the suspicion of the judge (which is legitimate since they don't know the consultant is honest) can negate many of the benefits of open consultancy if you use the judge to get the final answer[1].

I'm not surprised at all that open consultancy does poorly when the consultant believes the wrong fact - the way I think about open consultancy is a way of extracting the beliefs of a model, not a way to figure out what is true in a way that can catch mistakes of the AI! For AIs which we are confident know what the right answer is (more often than us), the main question I have is whether "argue for the side you believe to be true" is the optimal strategy when the AI is trained to be as convincing as possible, which I think your results don't try to capture - which is fair given that this is an annoying question to answer, since it probably requires fine-tuning LLMs.

- ^

Which is not what I described in the Open Consultancy post where I suggest just trusting the open consultant once you've trained it, but I guess that's another reasonable way to do it - and it's the only reasonable way to do it if you assume honest consultants.

Thank you for sharing your negative results. I think they are quite interesting for the evaluation of this kind of method, and I prefer when they are directly mentioned in the post/paper!

I didn't get your answer about my question about baselines. The baseline I have in mind doesn't use SAE at all. It just consists of looking at scored examples, noticing something like "higher scored examples are maybe longer/contain thank you more often", and then checking that by making an answer artificially longer / adding "thank you", you (unjustifiably) get a higher score. Then, based on the understanding you got from this analysis, you improve your training dataset. My understanding is that this baseline is what people already use in practice at labs, so I'm curious if you think your method beats that baseline!
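The baseline I have in mind can be sketched in a few lines. Everything below is hypothetical: `toy_score` stands in for a real preference model, and its length and "thank you" biases are built in on purpose so that the check has something to find.

```python
from statistics import mean
from typing import Callable, Sequence

def mean_score_shift(
    score: Callable[[str], float],
    answers: Sequence[str],
    perturb: Callable[[str], str],
) -> float:
    """Average change in score when a content-free perturbation is applied."""
    return mean(score(perturb(a)) - score(a) for a in answers)

def toy_score(text: str) -> float:
    # Hypothetical preference model with two spurious features:
    # it rewards length and the presence of "thank you".
    return 0.01 * len(text) + (1.0 if "thank you" in text.lower() else 0.0)

answers = ["Paris.", "2 + 2 = 4."]
shift = mean_score_shift(toy_score, answers, lambda a: a + " Thank you!")
print(f"mean score shift from appending 'Thank you!': {shift:.2f}")
```

A clearly positive shift from a perturbation that adds no content is the kind of evidence this baseline relies on, with no SAE involved.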

Nice work!

What was your prior on the phenomenon you found? Do you think that if you had looked at the same filtered examples, and their corresponding scores, and did the same tinkering (editing prompt to check that your understanding is valid, ...), you could have found the same explanations? Were there some SAE features that you explored and which didn't "work"?

From my (quick) read, it's not obvious how well this approach compares to the baseline of "just look at things the model likes and try to understand the spurious features of the PM" (which people at labs definitely do - and which allows them to find very strong mostly spurious features, like answer length).

I have edited the post to add the relevant disclaimers and links to the papers that describe very similar techniques. Thank you very much for bringing these to my attention!

I listened to the lecture series Assessing America’s National Security Threats by H. R. McMaster, a 3-star general who was the US national security advisor in 2017. It didn't have much content about how to assess threats, but I found it useful to get a peek into the mindset of someone in the national security establishment.

Some highlights:

- Even in the US, it sometimes happens that the strategic analysis is motivated by the views of the leader. For example, McMaster describes how Lyndon Johnson did not retreat from Vietnam early enough, in part because criticism of the war within the government was discouraged.

- I had heard similar things for much more authoritarian regimes, but this is the first time I heard about something like that happening in a democracy.

- The fix he suggests: always present at least two credible options (and maybe multiple reads on the situation) to the leader.

- He claims that there wouldn't have been an invasion of South Korea in 1950 if the US hadn't withdrawn troops from there (despite intelligence reports suggesting this was a likely outcome of withdrawing troops). If it's actually the case that intelligence was somewhat confident in its analysis of the situation, it's crazy that it was dismissed like that - that should be points in favor of it being possible that the US government could be asleep at the wheel during the start of an intelligence explosion.

- He uses this as a parallel to justify the relevance of the US keeping troops in Iraq/Afghanistan. He also describes how letting terrorist groups grow and have a solid rear base might make the defense against terrorism even more costly than keeping troops in this region. The analysis lacks the quantitative analysis required to make this a compelling case, but this is still the best defense of the US prolonged presence in Iraq and Afghanistan that I've encountered so far.

- McMaster stresses the importance of understanding the motivations and interests of your adversaries (which he calls strategic empathy), and thinks that people have a tendency to think too much about the interests of others with respect to them (e.g. modeling other countries as being motivated only by the hatred of you, or modeling them as being in a bad situation only because you made things worse).

- He is surprisingly enthusiastic about the fight against climate change - especially for someone who was at some point a member of the Trump administration. He expresses great interest in finding common ground between different factions. This makes me somewhat more hopeful about the possibility that the national security establishment could take misalignment risks (and not only the threat from competition with other countries) seriously.

- (Not surprisingly) he is a big proponent of "US = freedom, good" and "China = ruthless dictatorship, bad". He points to a few facts to support his statement, but defending this position is not his main focus, and he seems to think that there isn't any uncertainty that the US being more powerful is clearly good. Trading off US power against global common goods (e.g. increased safety against global catastrophic risks) doesn't seem like the kind of trade he would make.

I listened to the book Protecting the President by Dan Bongino, to get a sense of how risk management works for US presidential protection - a risk that is high-stakes, where failures are rare, where the main threat is the threat from an adversary that is relatively hard to model, and where the downsides of more protection and its upsides are very hard to compare.

Some claims the author makes (often implicitly):

- Large bureaucracies are amazing at creating mission creep: the service was initially in charge of fighting against counterfeit currency, got presidential protection later, and now is in charge of things ranging from securing large events to fighting against Nigerian prince scams.

- Many of the important choices are made via inertia in large change-averse bureaucracies (e.g. these cops were trained to do boxing, even though they are never actually supposed to fight like that), so you shouldn't expect obvious wins to happen;

- Many of the important variables are not technical, but social - especially in this field where the skills of individual agents matter a lot (e.g. if you have bad policies around salaries and promotions, people don't stay at your service for long, and so you end up with people who are not as skilled as they could be; if you let the local police around the White House take care of outside-perimeter security, then it makes communication harder);

- Many of the important changes are made because important politicians that haven't thought much about security try to improve optics, and large bureaucracies are not built to oppose this political pressure (e.g. because high-ranking officials are near retirement, and disagreeing with a president would be more risky for them than increasing the chance of a presidential assassination);

- Unfair treatments - not hardships - destroy morale (e.g. unfair promotions and contempt are much more damaging than doing long and boring surveillance missions or training exercises where trainees actually feel the pain from the fake bullets for the rest of the day).

Some takeaways

- Maybe don't build big bureaucracies if you can avoid it: once created, they are hard to move, and the leadership will often favor things that go against the mission of the organization (e.g. because changing things is risky for people in leadership positions, except when it comes to mission creep) - Caveat: the book was written by a conservative, and so that probably taints what information was conveyed on this topic;

- Some near misses provide extremely valuable information, even when they are quite far from actually causing a catastrophe (e.g. who are the kind of people who actually act on their public threats);

- Making people clearly accountable for near misses (not legally, just in the expectations that the leadership conveys) can be a powerful force to get people to do their job well and make sensible decisions.

Overall, the book was somewhat poor in details about how decisions are made. The main decision processes that the book reports are the changes that the author wants to see happen in the US Secret Service - but this looks like it has been dumbed down to appeal to a broad conservative audience that gets along with vibes like "if anything increases the president's safety, we should do it" (which might be true directionally given the current state, but definitely doesn't address the question of "how far should we go, and how would we know if we were at the right amount of protection"). So this may not reflect how decisions are made, since it could be a byproduct of Dan Bongino being a conservative political figure and podcast host.

I agree that the most natural symmetry goes out of bounds. But there are other patterns you can use. First, you can solve the bottom half of the unknown rectangle by top-down symmetry. And then, it seems like the center of the top portion, when rotated right, matches the center of the right portion (this does not hold near the diagonal and the center, but holds near the top), and so you can use this to fill in the missing tiles. Moreover, this observation that the top center portion can be rotated to get left and right holds for the other examples. So I think this solves the problem in the spirit of the exercise (find the simplest rule that predicts the pattern on the examples and is applicable to the prediction inputs).

[Edit: The authors released code and probing experiments. Some of the empirical predictions I made here resolved, and I was mostly wrong. See here for my takes and additional experiments.]

I have a few concerns about Improving Alignment and Robustness with Circuit Breakers, a paper that claims to have found a method which achieves very high levels of adversarial robustness in LLMs.

I think hype should wait for people investigating the technique (which will be easier once code and datasets are open-sourced), and running comparisons with simpler baselines (like probing). In particular, I think that:

- Circuit breakers won’t prove significantly more robust than regular probing in a fair comparison.[1]

- Once the code or models are released, people will easily find reliable jailbreaks.

Here are some concrete predictions:

- p=0.7 that using probing well using the same training and evaluation data results can beat or match circuit breakers.[2] [Edit: resolved to False]

- p=0.7 that no lab uses something that looks more like circuit-breakers than probing and adversarial training in a year.

- p=0.8 that someone finds good token-only jailbreaks to whatever is open-sourced within 3 months. [Edit: this is only about Cygnet, since the paper shows that just RR isn't perfectly robust.]

- p=0.5 that someone finds good token-only jailbreaks to whatever is publicly available through an API within 3 months.[3] [Edit: this is only about Cygnet, since the paper shows that just RR isn't perfectly robust.]

I think the authors would agree with most of my predictions. My main disagreement is with the hype.

How do circuit-breakers compare to probing?

What does circuit-breakers training do? The only interpretation that feels plausible is that the LLM classifies the prompt as harmful or not harmful, and then messes with its own activations if the prompt is classified as harmful. If this is the case, then the LLM needs to use an internal classifier, and I think it should be possible to extract an accurate harmfulness probe (linear or not linear) around these layers, and use it instead of messing up the activations.

The equivalent to circuit-breakers if you probe:

- At every token position, taking something like a max over positions (if a single position messes up the activations, it might propagate to every position);

- In particular, this means that suffix GCG and input embed attacks tested in the paper might be much worse than prefix+suffix GCG or input embed attacks. (p=0.5 that using prefix+suffix GCG makes finding a GCG attack of comparable strength on average 4x faster [Edit: resolved to false]).

- On output tokens, i.e. model-generated answers (and output classifiers are known to be more powerful than input-only classifiers).

Would probing be weaker against GCG and input embed attacks than circuit-breakers? I think it would be, but only superficially: probing is strong against GCG and input embed attacks if the attack only targets the model, but not the probe. The fair comparison is an attack on the probe+LLM vs an attack on a circuit-breakers model. But actually, GCG and other gradient-based attacks have a harder time optimizing against the scrambled activations. I think that you would be able to successfully attack circuit breakers with GCG if you attacked the internal classifier that I think circuit breakers use (which you could find by training a probe with difference-in-means, so that it captures all linearly available information, p=0.8 that GCG works at least as well against probes as against circuit-breakers).
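For readers unfamiliar with difference-in-means probes, here is a minimal sketch on synthetic data. The activations, the planted `direction` and the helper name `diff_in_means_probe` are all assumptions for illustration. It shows how such a probe is fit, not how well it would work as an attack target on a real circuit-breakers model.

```python
import numpy as np

def diff_in_means_probe(pos_acts, neg_acts):
    """Fit a linear probe whose direction is mean(pos) - mean(neg),
    with the decision threshold at the midpoint between the two means."""
    mu_pos, mu_neg = pos_acts.mean(axis=0), neg_acts.mean(axis=0)
    w = mu_pos - mu_neg
    b = -w @ (mu_pos + mu_neg) / 2
    return w, b

# Synthetic activations: "harmful" examples are shifted along one direction.
rng = np.random.default_rng(0)
d_model, n = 64, 500
direction = np.zeros(d_model)
direction[0] = 1.0
neg = rng.standard_normal((n, d_model))
pos = rng.standard_normal((n, d_model)) + 4.0 * direction

w, b = diff_in_means_probe(pos, neg)
# Balanced accuracy on the data the probe was fit on.
acc = ((pos @ w + b > 0).mean() + (neg @ w + b <= 0).mean()) / 2
# Cosine between the recovered direction and the planted one.
cos = w @ direction / np.linalg.norm(w)
print(f"balanced accuracy: {acc:.3f}, cosine with planted direction: {cos:.3f}")
```

The fit has no hyperparameters, which is part of why it is a convenient first guess for an internal classifier direction.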

The track record looks quite bad

The track record for overselling results and using fancy techniques that don't improve on simpler techniques is somewhat bad in this part of ML.

I will give one example. The CUT unlearning technique presented in the WMDP paper (with overlapping authors to the circuit-breakers one):

- Got a massive simplification of the main technique within days of being released - thanks to the authors open-sourcing the code and Sam Marks and Oam Patel doing an independent investigation of the technique. (See the difference between v2 and v3 on arxiv.)

- Aims to do unlearning in a way that removes knowledge from LLMs (they make the claim implicitly on https://www.wmdp.ai/), but only modifies the weights of 3 layers out of 32 (so most of the harmful information is actually still there).

- ^

When I say “probing”, I mainly think about probing on outputs i.e. model-generated answers (and maybe inputs i.e. user prompts), like I did in the coup probes post, but whose application to jailbreak robustness sadly got very little attention from the academic community. I’m sad that the first paper that actually tries to do something related does something fancy instead of probing.

- ^

More precisely, a probing methodology is found within 6 months of the data being released that beats or matches circuit-breakers ASR on all metrics presented in the paper. When using gradient-based methods or techniques that rely on prompt iteration more generally, attacks on circuit-breakers should use the best proxy for the internal classifier of the circuit-breaker.

- ^

Most of the remaining probability mass is on worlds where people care way less than they care for Claude (e.g. because the model sucks much more than open-source alternatives), and on worlds where they use heavy know-your-customer mitigations.

The main consideration is whether I will have better and/or higher impact safety research there (at Anthropic I will have a different research environment, with other research styles, perspectives, and opportunities, which I may find better). I will also consider indirect impact (e.g. I might be indirectly helping Anthropic instead of another organization gain influence, unclear sign) and personal (non-financial) stuff. I'm not very comfortable sharing more at the moment, but I have a big Google doc that I have shared with some people I trust.

Empirically, distillation can improve performance by reducing expert noise in chess.

In a few months, I will be leaving Redwood Research (where I am working as a researcher) and I will be joining one of Anthropic’s safety teams.

I think that, over the past year, Redwood has done some of the best AGI safety research and I expect it will continue doing so when I am gone.

At Anthropic, I will help Ethan Perez’s team pursue research directions that in part stemmed from research done at Redwood. I have already talked with Ethan on many occasions, and I’m excited about the safety research I’m going to be doing there. Note that I don’t endorse everything Anthropic does; the main reason I am joining is that I might do better and/or higher impact research there.

I did almost all my research at Redwood and under the guidance of the brilliant people working there, so I don’t know yet how happy I will be about my impact working in another research environment, with other research styles, perspectives, and opportunities - that’s something I will learn while working there. I will reconsider whether to stay at Anthropic, return to Redwood, or go elsewhere in February/March next year (Manifold market here), and I will release an internal and an external write-up of my views.