Is "red" for GPT-4 the same as "red" for you?

post by Yusuke Hayashi (hayashiyus) · 2023-05-06T17:55:20.691Z · LW · GW · 6 comments

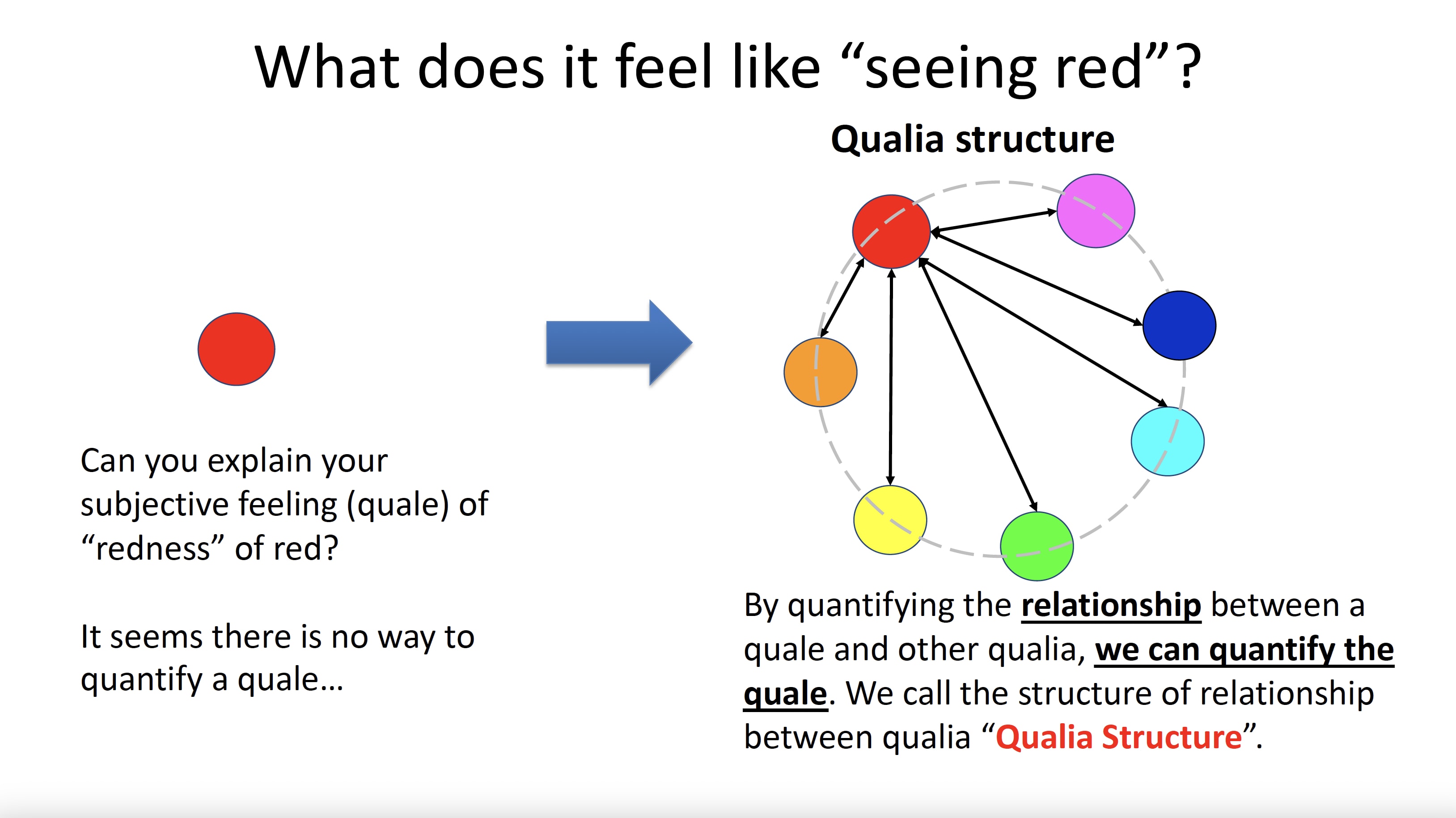

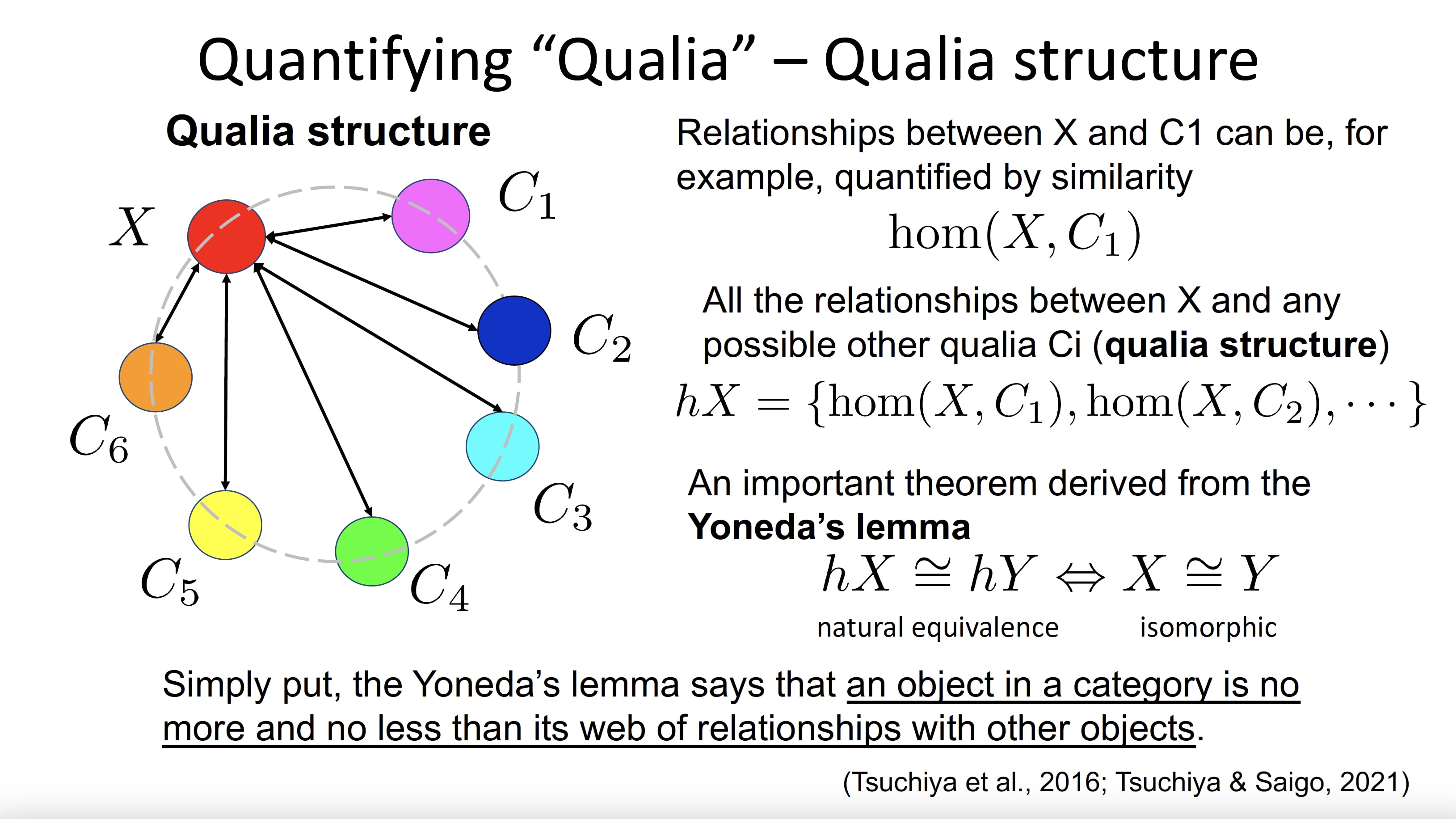

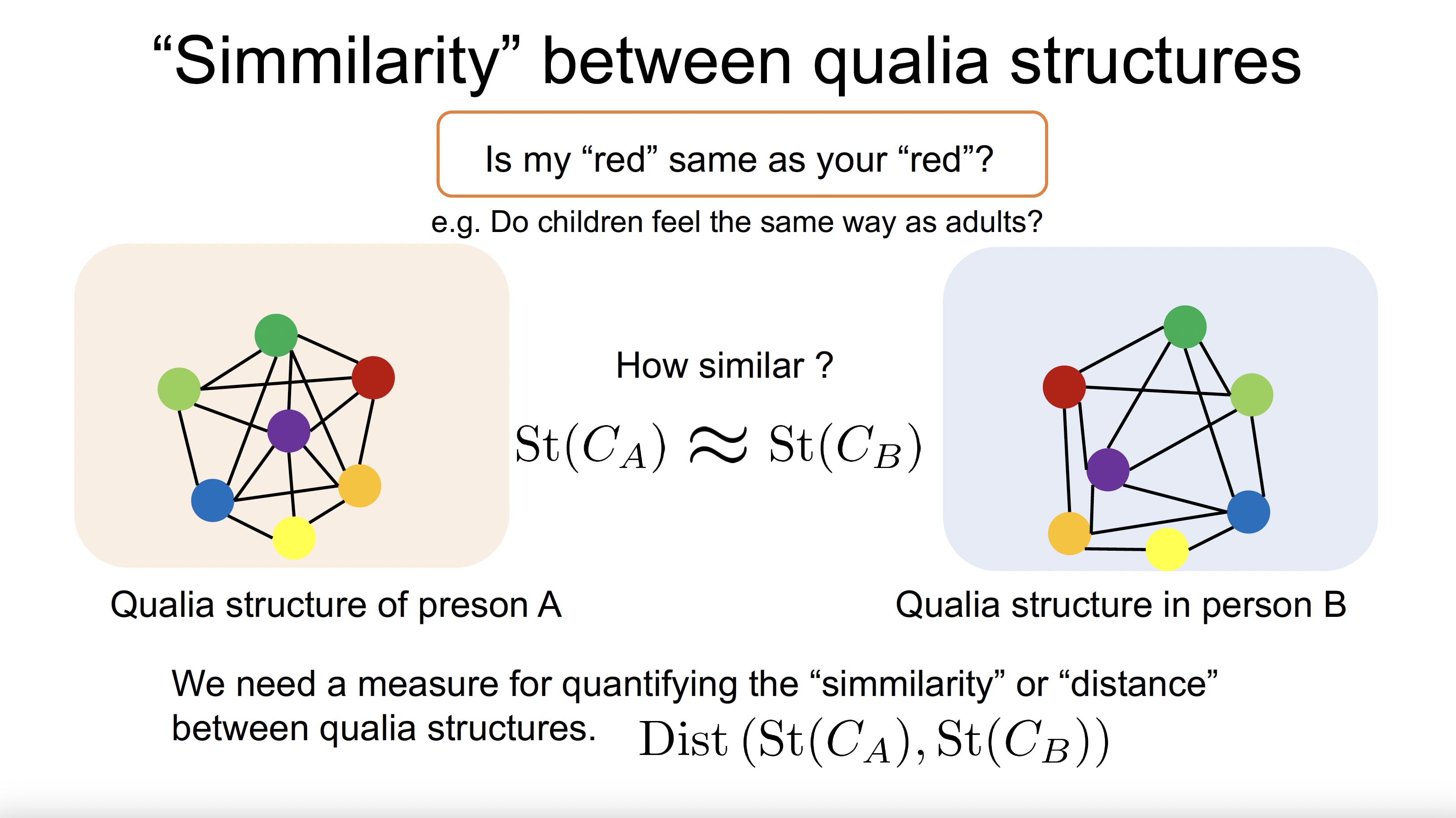

This article was written by Yusuke Hayashi, an independent researcher from Japan, who is not affiliated with the authors of the works referenced here. The analysis presented is the author's own and is independent of the cited publications.

Have you ever wondered if the "red" you perceive is the same "red" someone else experiences? A recent study explores this question using a distance measure called the Gromov-Wasserstein distance (GWD).

Is my “red” your “red”?: Unsupervised alignment of qualia structures via optimal transport[1]

Paper (Preprint by Kawakita et al. published in PsyArXiv): https://psyarxiv.com/h3pqm

Slide (Presentation by Masafumi Oizumi at Optimal Transport Workshop 2023): https://drive.google.com/file/d/1Z5QTxayqJkmYyOgirrbJRRflY8HOy8ox/view?usp=sharing

The paper "Is my "red" your "red"?: Unsupervised alignment of qualia structures via optimal transport" (Kawakita et al. [1]) proposes a new approach to assessing the similarity of sensory experiences between individuals. This approach, based on GWD and optimal transport, aligns qualia structures without assuming in advance which experience in one individual corresponds to which experience in another. As a result, it provides a quantitative means of comparing the similarity of qualia structures between individuals.
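To make the idea concrete, here is a minimal sketch in Python. It is not the authors' implementation. It assumes each person's qualia structure is summarized as a matrix of pairwise dissimilarity ratings between colors, and it searches by brute force over one-to-one matchings for the one that best preserves those dissimilarities. This is a simplified, hard-assignment version of the Gromov-Wasserstein objective; the real method relies on optimal transport, which allows soft couplings and scales to far more stimuli. The ratings, the color names, and the function names (`gw_cost`, `best_alignment`) are all made up for illustration.

```python
from itertools import permutations


def gw_cost(D1, D2, perm):
    """Mean squared mismatch between D1[i][j] and D2[perm[i]][perm[j]]."""
    n = len(D1)
    total = 0.0
    for i in range(n):
        for j in range(n):
            total += (D1[i][j] - D2[perm[i]][perm[j]]) ** 2
    return total / (n * n)


def best_alignment(D1, D2):
    """Brute-force search over all one-to-one matchings (tiny inputs only)."""
    n = len(D1)
    best = min(permutations(range(n)), key=lambda p: gw_cost(D1, D2, p))
    return best, gw_cost(D1, D2, best)


# Hypothetical dissimilarity ratings for participant A (0 = identical).
colors_a = ["red", "orange", "green", "blue"]
D_a = [
    [0, 1, 4, 5],
    [1, 0, 3, 5],
    [4, 3, 0, 2],
    [5, 5, 2, 0],
]

# Participant B has the same relational structure, but the stimuli are
# stored in a different, unknown order. colors_b is the ground truth and
# is never passed to the alignment search.
order_b = [2, 0, 3, 1]
colors_b = [colors_a[k] for k in order_b]
D_b = [[D_a[r][c] for c in order_b] for r in order_b]

# Align using only the two dissimilarity matrices, with no labels.
perm, cost = best_alignment(D_a, D_b)
matched = [(colors_a[i], colors_b[perm[i]]) for i in range(len(colors_a))]
print(perm, cost)
print(matched)
```

In this toy case the search pairs every color with its true counterpart at zero mismatch, even though it never saw the labels; that is the sense in which the alignment is unsupervised. A nonzero minimum would indicate that the two structures cannot be perfectly aligned.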

GWD is a distance measure already used in machine learning research, and here it serves to compare subjective experiences, or qualia structures, between individuals. While this study compares sensory experiences between humans, the same approach could in principle be used to compare the sensory experiences of humans with those of an advanced AI model such as GPT-4. We are now ready to revisit the question posed in the title:

Is "red" for GPT-4 the same as for you?

If asked whether large language models (LLMs) like GPT-4 possess consciousness, the likely answer would be "probably not." However, the method proposed here provides a way to determine whether such models share the same qualia structure as humans.

Consciousness and qualia are closely related. Consciousness is the state of being aware of, perceiving, and thinking about one's surroundings, thoughts, and feelings. Qualia, on the other hand, are subjective experiences and sensations that accompany consciousness, such as the taste of chocolate, the sound of a musical instrument, or the sensation of pain.

Qualia are an essential part of conscious experience, and their relationship to consciousness is of paramount importance. Without qualia, our experience of consciousness would lack a rich, subjective texture and would not be unique and personal. In other words, qualia give our experience of consciousness its unique character and enable us to understand the world around us.

One of the central debates in the philosophy of mind revolves around the nature of qualia and their relation to consciousness. Some philosophers argue that qualia are difficult to study and understand because they cannot be reduced to physical or functional descriptions. Others argue that advances in neuroscience and cognitive science may allow qualia to be explained within a naturalistic framework. The aforementioned study sheds new light on this long-standing controversy over the nature of qualia and their relationship to consciousness.

Recently, Dr. Geoffrey Hinton, one of the pioneers of deep learning, resigned from Google and began warning of the potential dangers of making AI smarter than humans. On LessWrong, there is a lot of discussion about the safety and integrity of AI. Throughout this article, I would like to pose the following question to the reader:

If deep learning models acquire consciousness in the near future, can we consider them as alignment targets?

[1] G. Kawakita, A. Zeleznikow-Johnston, K. Takeda, N. Tsuchiya and M. Oizumi, Is my "red" your "red"?: Unsupervised alignment of qualia structures via optimal transport. PsyArXiv https://doi.org/10.31234/osf.io/h3pqm (2023).

6 comments

Comments sorted by top scores.

comment by Portia (Making_Philosophy_Better) · 2023-05-10T14:04:40.657Z · LW(p) · GW(p)

The author of this paper, and a co-worker of his, recently presented this work to my research group, and we discussed its implications, also with artificial phenomenology in mind. My impression is that the potential implications could be profound if we make some plausible extra assumptions, but that this mostly concerns attempts to decipher biological consciousness (which is my field, so it had me hyped).

Whether you interpret this very interesting work as showing that specific qualia are experienced very similarly between humans depends on whether you assume the subjective feel of a quale is fully determined by its unique and asymmetric relation to others. Assuming this is, imho, plausible (I played around with this idea many years ago already), but it has further implications, among them that the hard problem of consciousness may not be quite as hard as we previously thought.

But this paper basically addresses how a specific phenomenal character could be non-random (which is amazing), and in the process makes inverted qualia scenarios extremely unlikely (which is also amazing) and gives novel approaches for deciphering neural correlates of consciousness (also amazing). It does not, however, answer the question of whether a particular entity with this structure experiences anything at all, which is a completely separate area of research (though the two are very tentatively beginning to converge). We specifically discussed that it is quite plausible that you could get a similar structure within an artificial neural net trained to reproduce human perceptions, thereby building the equivalent of our phenomenological map without the neural net feeling anything at all, and hence without it seeing red in particular. I am not sure what implications you see for alignment, or where your final question was heading in this regard.

P.S.: Happy to answer questions about this, but the admins of this site have set me to only one post of any kind per day.

Replies from: hayashiyus

↑ comment by Yusuke Hayashi (hayashiyus) · 2023-05-15T20:21:52.338Z · LW(p) · GW(p)

Dear Portia,

Thank you for your thought-provoking and captivating response. Your expertise in the field of biological consciousness is clear, and I'm grateful for the depth and breadth of your commentary on the potential implications of this paper.

If we accept the assumption that the subjective character of a specific quale is defined by its unique and asymmetric relations to other qualia, then this paper indeed offers a method for testing whether such qualia are experienced similarly among humans. Your point that the 'hard problem' of consciousness may not be as challenging as we previously thought is profoundly important.

However, I hold a slightly different view about the 'new approach to deciphering neural correlates of consciousness' proposed in this paper. While I agree that this approach does not specifically answer whether a certain entity with a qualia structure experiences anything, given the right conditions and complexity, I am interested in contemplating the possibility of such an experience occurring, if we were to introduce what you refer to as 'some plausible extra assumptions'.

I apologize if my thoughts on alignment were unclear. I did not sufficiently explain AI alignment in my post. AI alignment is about ensuring that the goals and actions of an AI system coincide with human values and interests. Adding the factor of AI consciousness undoubtedly complicates the alignment problem. For instance, if we acknowledge an AI as a sentient being, it could lead to a situation similar to debates about animal rights, where we would need to balance human values and interests with those of non-human entities. Moreover, if an AI were to acquire qualia or consciousness, it might be able to understand humans on a much deeper level.

Regarding my final question, I was interested in exploring the potential implications of this work in the context of AI alignment and safety, as well as ethical considerations that we might need to ponder as we progress in this field. Your insights have provided plenty of food for thought, and I look forward to hearing more from you.

Thank you again for your profound insights.

Best,

Yusuke

↑ comment by Portia (Making_Philosophy_Better) · 2023-06-01T16:19:33.820Z · LW(p) · GW(p)

Thank you for your kind words, and sorry for not having given a proper response yet; I am really swamped. I am currently at the wonderful workshop “Investigating consciousness in animals and artificial systems: A comparative perspective” https://philosophy-cognition.com/cmc/2023/02/01/cfp-workshop-investigating-consciousness-in-animals-and-artificial-systems-a-comparative-perspective-june-2023/ (online as well). In the talk on the potential for consciousness in multi-modal LLMs, I encountered this paper: Abdou et al. 2021, “Can Language Models Encode Perceptual Structure Without Grounding? A Case Study in Color” (https://arxiv.org/abs/2109.06129). I have not had time to look at it properly yet (my to-read pile rose considerably today in wonderful ways), but I think it might be relevant to your question, so I wanted to share it quickly.

comment by Charlie Steiner · 2023-05-13T03:54:16.139Z · LW(p) · GW(p)

Hi, welcome!

Consciousness isn't actually super relevant to alignment. Alignment is about figuring out how to get world-affecting AI to systematically do good things rather than bad things. This is possible both for conscious and unconscious AI, and consciousness seems to provide neither a benefit nor an impediment to doing good/bad things.

But it's still fun to talk about sometimes.

For this approach, the crucial step 1 is to start with observations of a big blob of atoms called a "human," and model the human in a way that uses some pieces called "qualia" that have connections with each other. I feel like this is much trickier and more contentious than the later steps of comparing people once you already have a particular way of modeling them.

Replies from: hayashiyus

↑ comment by Yusuke Hayashi (hayashiyus) · 2023-05-15T20:27:51.147Z · LW(p) · GW(p)

Dear Charlie,

Thank you for sharing your insights on the relationship between consciousness and AI alignment. I appreciate your perspective and find it to be quite thought-provoking.

I agree with you that the challenge of AI alignment applies to both conscious and unconscious AI. The ultimate goal is indeed to ensure AI systems act in a manner that is beneficial, regardless of their conscious state.

However, while consciousness may not directly impact the 'good' or 'bad' actions of an AI, I believe it could potentially influence the nuances of how those actions are performed, especially when it comes to complex, human-like tasks.

Your point about the complexity of modeling a human using "qualia" is well-taken. It's indeed a challenging and contentious task, and I think it's one of the areas where we need more research and understanding.

Do you think there might be alternative or more effective ways to model human consciousness, or is the approach of using "qualia" the most promising one we currently have?

Thank you again for your thoughtful comments. I look forward to further discussing these fascinating topics with you.

Best,

Yusuke

↑ comment by Charlie Steiner · 2023-05-16T11:06:50.969Z · LW(p) · GW(p)

> Do you think there might be alternative or more effective ways to model human consciousness, or is the approach of using "qualia" the most promising one we currently have?

IMO the most useful is the description of the cognitive algorithms / cognitive capabilities involved in human-like consciousness. Like remembering events from long-term memory when appropriate, using working memory to do cognitive tasks, responsiveness to various emotions, emotional self-regulation, planning using various abstractions, use of various shallow decision-making heuristics, interpreting sense data into abstract representations, translating abstract representations back into words, attending to stimuli, internally regulating what you're focusing on, etc.

Qualia can also be bundled with capabilities. For example, pain triggers the fight or flight response, it causes you to learn to avoid similar situations in the future, it causes you to focus on plans to avoid the pain, it filters what memories you're primed to recall, etc.