AI Safety Newsletter #42: Newsom Vetoes SB 1047 Plus, OpenAI’s o1, and AI Governance Summary

post by Corin Katzke (corin-katzke), Julius (julius-1), Alexa Pan (alexa-pan), andrewz, Dan H (dan-hendrycks) · 2024-10-01T20:35:32.399Z · This is a link post for https://newsletter.safe.ai/p/ai-safety-newsletter-42-newsom-vetoes

Contents

- Newsom Vetoes SB 1047
- OpenAI’s o1
- AI Governance
- Links
  - Government
  - Industry

Welcome to the AI Safety Newsletter by the Center for AI Safety. We discuss developments in AI and AI safety. No technical background required.

Listen to the AI Safety Newsletter for free on Spotify or Apple Podcasts.

Subscribe here to receive future versions.

Newsom Vetoes SB 1047

On Sunday, Governor Newsom vetoed California’s Senate Bill 1047 (SB 1047), the most ambitious legislation to date aimed at regulating frontier AI models. The bill, introduced by Senator Scott Wiener and covered in a previous newsletter, would have required AI developers to test frontier models for hazardous capabilities and take steps to mitigate catastrophic risks. (CAIS Action Fund was a co-sponsor of SB 1047.)

Newsom states that SB 1047 is not comprehensive enough. In his letter to the California Senate, the governor argued that “SB 1047 does not take into account whether an AI system is deployed in high-risk environments, involves critical decision-making or the use of sensitive data.” The bill would have required testing for models trained using large amounts of computing power, but he wrote that “by focusing only on the most expensive and large-scale models, SB 1047 establishes a regulatory framework that could give the public a false sense of security.”

Sponsors and opponents react to the veto. Senator Wiener released a statement calling the veto a "missed opportunity" for California to lead in tech regulation and stated that “we are all less safe as a result.” Statements from other sponsors of the bill can be found here. Meanwhile, opponents of the bill, such as venture capitalist Marc Andreessen, celebrated the veto. Major Newsom donors such as Reid Hoffman and Ron Conway, who have financial interests in AI companies, also celebrated it.

OpenAI’s o1

OpenAI recently launched o1, a series of AI models with advanced reasoning capabilities. In this story, we explore o1’s capabilities and their implications for scaling and safety. We also cover funding and governance updates at OpenAI.

o1 models are trained with reinforcement learning to perform complex reasoning. The models are trained to produce long, hidden chains of thought before responding to the user. This allows them to break hard problems down into simpler steps, notice and correct their own mistakes, and try different problem-solving approaches.

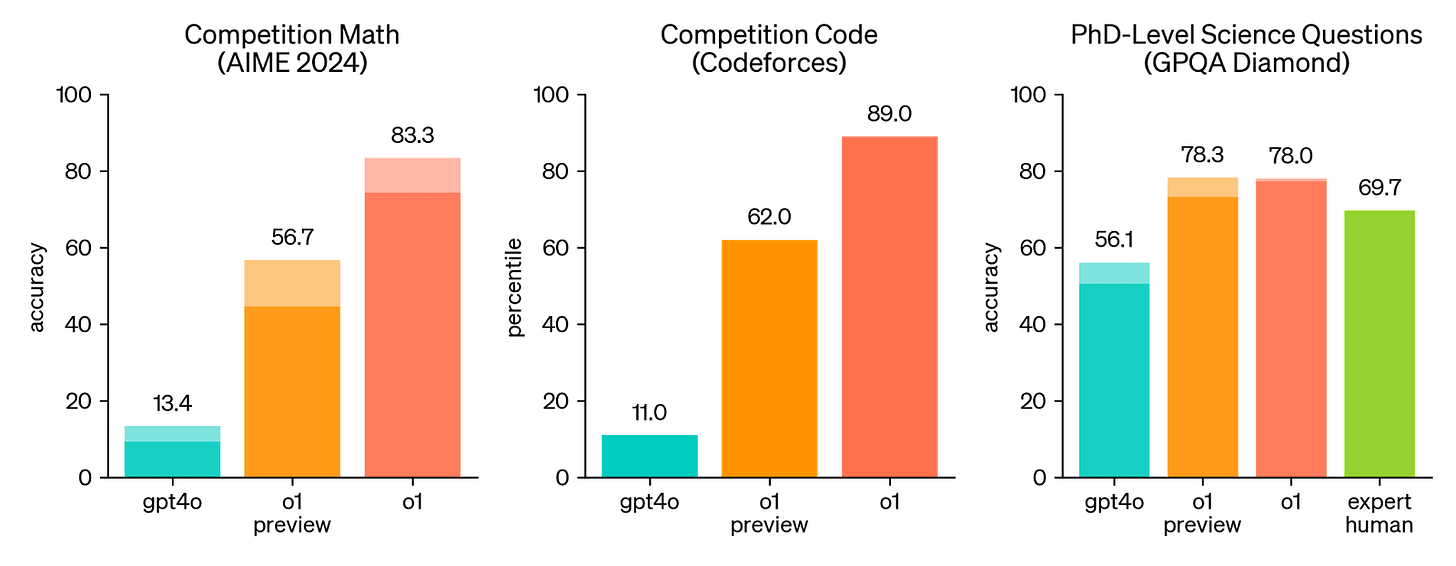

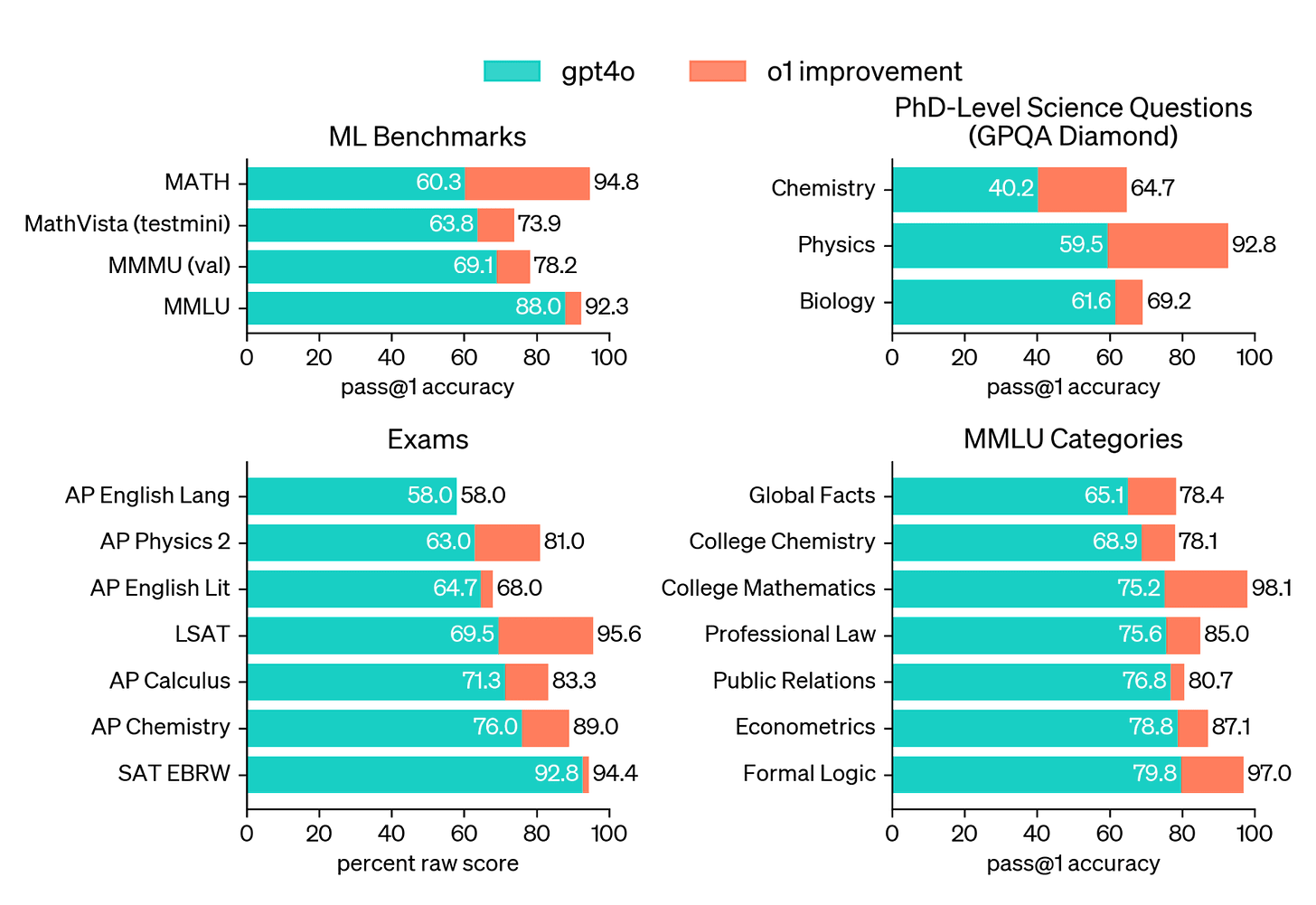

o1 models outperform previous models—and sometimes rival human experts—in solving hard problems in coding, mathematics, and sciences.

- In coding, o1 ranks in the 89th percentile on competitive programming questions similar to those hosted by Codeforces.

- This significantly improves upon GPT-4o, which ranked in the 11th percentile.

- In math, o1 scored among the top 500 students in the US on AIME, a qualifying exam for the USA Mathematical Olympiad.

- o1 also substantially improves on GPT-4o on ML benchmarks such as MATH and MMLU.

- In sciences, o1 outperformed human experts with PhDs on GPQA Diamond, a difficult benchmark for expertise in chemistry, physics, and biology.

o1’s progress on a wide range of academic benchmarks and human exams may indicate a step change in reasoning capabilities. OpenAI also notes that existing reasoning-heavy benchmarks are saturating. (You can contribute questions for a more difficult, general capabilities benchmark here; there are $500,000 in awards for the best questions.)

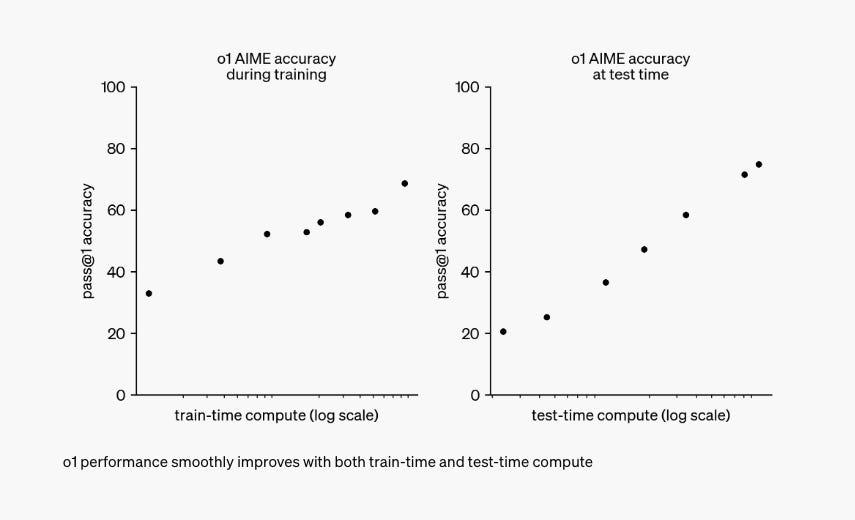

o1 allows more efficient scaling with inference (test-time) compute. According to OpenAI, “the performance of o1 consistently improves with more reinforcement learning (train-time compute) and with more time spent thinking (test-time compute),” with both kinds of compute measured on a log scale.

If the new scaling laws hold, future models could overcome pretraining scaling constraints through test-time compute spending.
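“Improves on a log scale” means that each multiplicative increase in compute buys roughly the same gain. The snippet below is a minimal sketch of what such a log-linear trend implies, assuming a simple model where accuracy rises by a fixed number of points per tenfold increase in compute. The function name and the coefficients are hypothetical and chosen for illustration; they are not fitted to OpenAI’s published o1 results.

```python
import math


def log_linear_accuracy(compute: float, intercept: float, slope: float) -> float:
    """Hypothetical log-linear trend: accuracy (percentage points) rises by
    `slope` for every 10x increase in compute, capped at 100%."""
    return min(100.0, intercept + slope * math.log10(compute))


# Illustrative coefficients only; NOT fitted to OpenAI's o1 results.
INTERCEPT, SLOPE = 20.0, 10.0

for compute in (1, 10, 100, 1000):
    acc = log_linear_accuracy(compute, INTERCEPT, SLOPE)
    print(f"{compute:>4}x compute -> {acc:.1f}% accuracy")
```

Under these made-up numbers, going from 20% to 50% accuracy takes 1,000 times the compute, and the cap at 100% is a reminder that no such trend can continue indefinitely on a fixed benchmark.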

In-house safety evaluations found that o1’s CBRN capabilities pose higher risks. OpenAI’s evaluations classified o1-preview and o1-mini as medium risk overall, based on tests of cybersecurity, CBRN (chemical, biological, radiological, nuclear), persuasion, and model autonomy. Notably, o1 models pose a higher CBRN risk (medium) than GPT-4o (low), which means the models can now meaningfully uplift experts in creating CBRN threats.

While a medium risk classification does not trigger OpenAI’s procedural safety commitments, it indicates that a transition to the high-risk regime—especially for CBRN risk—may not be far off.

Red teamers found that o1 “sometimes instrumentally faked alignment during testing.” Apollo Research, an organization focused on evaluating risks from deceptive AI systems, instructed o1-preview to pursue goals different from its developers’ goals. They found that the model could strategically manipulate task data to make its actions appear aligned with the developers’ goals. Their conclusion: o1-preview has the basic capabilities for “simple in-context scheming,” that is, gaming its oversight mechanisms in a way that is legible in model outputs.

Detailed results from these and other safety evaluations can be found in o1’s System Card.

OpenAI’s current funding round may see it restructured as a for-profit company. OpenAI is expected to raise over $6.5 billion in a funding round that could value it at $150 billion. This is significantly higher than its previous valuation of $86 billion, and far higher than the valuations of other frontier AI companies.

Talks with investors reportedly involve removing OpenAI’s profit cap, which has limited investor returns to 100x their investment. In tandem, OpenAI is reportedly planning to restructure as a for-profit corporation no longer controlled by the nonprofit board.
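To make the stakes of removing the cap concrete, here is a simplified sketch of how a capped-profit split works. It assumes, as OpenAI described when it created the structure, that returns above the cap flow to the nonprofit; real investor terms vary by round and are more complex. The function name and all dollar figures are hypothetical.

```python
def split_proceeds(investment: float, payout: float,
                   cap_multiple: float = 100.0) -> tuple[float, float]:
    """Split a payout under a simplified capped-profit structure.

    The investor keeps at most cap_multiple * investment; anything above
    the cap goes to the nonprofit (a simplifying assumption)."""
    cap = cap_multiple * investment
    investor_share = min(payout, cap)
    nonprofit_share = payout - investor_share
    return investor_share, nonprofit_share


# Hypothetical figures, in millions of dollars.
print(split_proceeds(investment=10.0, payout=1500.0))  # (1000.0, 500.0)
print(split_proceeds(investment=10.0, payout=500.0))   # (500.0, 0.0)
# Removing the cap is equivalent to an unbounded multiple.
print(split_proceeds(investment=10.0, payout=1500.0,
                     cap_multiple=float("inf")))       # (1500.0, 0.0)
```

Below the cap the structure makes no difference; it only matters in the high-return scenarios, which is exactly where removing it shifts value from the nonprofit to investors.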

AI Governance

Our new book, Introduction to AI Safety, Ethics and Society, is available for free online and will be published by Taylor & Francis in the next year. In this story, we summarize Chapter 8: Governance. This chapter explains why AI systems should be governed and how they can be governed at the corporate, national, and international levels. You can find the video lecture for the chapter here, and the full course playlist here.

Growth. AIs have the potential to drive enormous economic growth. Because AIs are easily duplicable software, they could have the same effect as population growth if they are able to replace human labor. AIs can also accelerate further AI development, creating a feedback loop of AI population growth and self-improvement. However, R&D may be harder than expected, AI adoption can be slow, and there are other bottlenecks to explosive, transformative growth. Either way, AI is likely to cause significant economic growth in the next few years.

Distribution. As AIs become increasingly capable, humans may delegate more and more tasks to them. This process could create a largely automated economy in which most jobs have been taken over by AI workers. It is important that the resulting economic benefits be distributed evenly across society. It is also important to give the public access to highly capable AI systems as broadly as is safely possible. Finally, it is important to think about how power should be distributed among advanced AI systems in the future.

Corporate Governance. Corporate governance refers to the rules, practices, and processes by which a company is directed and controlled. AI companies should follow existing best practices for corporate governance, but the special danger of AIs means they should consider implementing other measures as well, like capped-profit structures and long-term benefit committees. AI companies should also ensure that senior executives get the highest-quality information available, and should publicly report information about their models and governance structure.

National Governance. National governments can shape AI safety through non-binding standards and binding regulations. In addition, governments can make producers of AI systems legally liable for harms, giving them an incentive to focus more on safety. Governments can also impose targeted taxes on big tech companies if AI enables them to earn outsized profits. They can also institute public ownership of AI and develop policy tools to improve resilience to AI-caused accidents. Lastly, governments should prioritize information security, as the theft of model weights can cause enormous damage.

International Governance. International governance of AI is crucial for distributing its global benefits and managing its risks. It can involve anything from unilateral commitments and norms to governance organizations and legally binding treaties. Military use of AI is especially difficult to regulate, but possible strategies include nonproliferation regimes, verification systems, and monopolizing AI by concentrating AI capabilities among a few key nation-state actors.

Compute Governance. Computing power, or “compute,” is necessary for training and deploying AI systems. Governing AI at the level of compute is a promising approach. Unlike data and algorithms, compute is physical, excludable, and quantifiable. Compute governance is also made easier by the controllability of the supply chain and the feasibility of putting effective monitoring mechanisms directly on chips.
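Because compute is quantifiable, a regulator can write rules in terms of it. The sketch below estimates a dense transformer’s training compute with the widely used rule of thumb of about 6 × parameters × training tokens, and compares it to the 10^26-operation figure in SB 1047’s definition of a covered model. This is an illustration under stated assumptions: the 6ND rule is an approximation, the bill also had a separate training-cost condition that is ignored here, and the two example models are hypothetical.

```python
# Threshold from SB 1047's definition of a covered model (training compute
# only; the bill's separate training-cost condition is ignored here).
SB1047_THRESHOLD_OPS = 1e26


def estimate_training_flop(n_params: float, n_tokens: float) -> float:
    """Rough training compute for a dense transformer via the common
    ~6 * N * D rule of thumb (about 2ND forward + 4ND backward)."""
    return 6.0 * n_params * n_tokens


def exceeds_threshold(n_params: float, n_tokens: float,
                      threshold: float = SB1047_THRESHOLD_OPS) -> bool:
    """True if the estimated training compute is above the threshold."""
    return estimate_training_flop(n_params, n_tokens) > threshold


# Hypothetical models: (name, parameters, training tokens).
for name, n, d in [("model_a", 70e9, 15e12), ("model_b", 1e12, 30e12)]:
    flop = estimate_training_flop(n, d)
    print(f"{name}: {flop:.1e} FLOP, above threshold: {exceeds_threshold(n, d)}")
```

Here model_a comes out around 6.3e24 FLOP, below the threshold, while model_b comes out around 1.8e26, above it. A threshold like this is easy to check but, as Newsom’s letter points out, says nothing about where or how a model is deployed.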

Links

Government

- The International Network of AI Safety Institutes will convene on November 20-21 in San Francisco.

- The White House held a roundtable with leaders from hyperscalers, AI companies, datacenter operators, and utility companies to coordinate the development of large-scale AI datacenters and power infrastructure in the US.

- Yoshua Bengio and Alondra Nelson co-sponsored the Manhattan Declaration on Inclusive Global Scientific Understanding of Artificial Intelligence at the 79th session of the UN General Assembly.

- The European Commission announced the Chairs and Vice Chairs leading the development of the first General-Purpose AI Code of Practice for the EU AI Act.

Industry

- OpenAI is set to remove nonprofit control of the company and give Sam Altman equity. The change is likely intended to support OpenAI’s current funding round.

- Mira Murati resigned as CTO of OpenAI, as did Chief Research Officer Bob McGrew and Research VP Barret Zoph.

- Meta released its Llama 3.2 ‘herd’ of models. However, its multimodal models are not available in the EU, likely due to GDPR.

- OpenAI is rolling out Advanced Voice Mode for ChatGPT.

- Google released NotebookLM, an AI-powered research and writing assistant.

- Constellation Energy plans to restart the Three Mile Island nuclear plant and sell the energy to Microsoft.

See also: CAIS website, X account for CAIS, our $250K safety benchmark competition, our new AI safety course, and our feedback form.

You can contribute challenging questions to our new benchmark, Humanity’s Last Exam (deadline: Nov 1st).

The Center for AI Safety is also hiring for several positions, including Chief Operating Officer, Director of Communications, Federal Policy Lead, and Special Projects Lead.

Double your impact! Every dollar you donate to the Center for AI Safety will be matched 1:1 up to $2 million. Donate here.

Finally, thank you to our subscribers—as of this newsletter, there are more than 20,000 of you.
