Tracr: Compiled Transformers as a Laboratory for Interpretability | DeepMind

post by DragonGod · 2023-01-13T16:53:10.279Z · LW · GW · 12 commentsThis is a link post for https://arxiv.org/abs/2301.05062

Contents

Abstract None 12 comments

Abstract

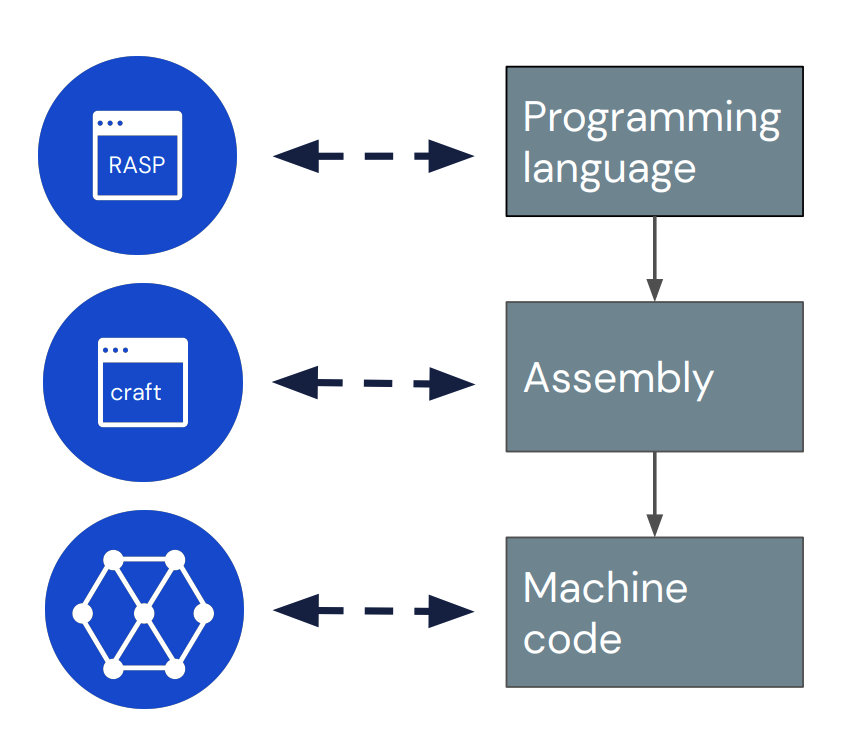

Interpretability research aims to build tools for understanding machine learning (ML) models. However, such tools are inherently hard to evaluate because we do not have ground truth information about how ML models actually work. In this work, we propose to build transformer models manually as a testbed for interpretability research. We introduce Tracr, a "compiler" for translating human-readable programs into weights of a transformer model. Tracr takes code written in RASP, a domain-specific language (Weiss et al. 2021), and translates it into weights for a standard, decoder-only, GPT-like transformer architecture. We use Tracr to create a range of ground truth transformers that implement programs including computing token frequencies, sorting, and Dyck-n parenthesis checking, among others. To enable the broader research community to explore and use compiled models, we provide an open-source implementation of Tracr at this https URL.

12 comments

Comments sorted by top scores.

comment by Neel Nanda (neel-nanda-1) · 2023-01-14T17:54:15.047Z · LW(p) · GW(p)

I threw together a rough demo of converting Tracr to PyTorch (to a mech interp library I'm writing called TransformerLens), and hacked it to be compatible with Python 3.8 - hope this makes it easier for other people to play with it! (All bugs are my fault)

comment by Erik Jenner (ejenner) · 2023-01-13T20:26:13.293Z · LW(p) · GW(p)

Twitter thread by Dr. Jim Fan

Link just seems to go back to this LW post, here's the thread: https://twitter.com/DrJimFan/status/1613918800444899328

Replies from: DragonGodcomment by Gurkenglas · 2023-01-13T18:40:25.772Z · LW(p) · GW(p)

Isn't (select(a, b, ==) and select(c, d, ==)) just the sum of the two QK matrices? It'll produce NxN entries in {0,1,2}, and softargmax with low temp treats 1s like 0s in the presence of a dummy token's 2.

Replies from: Frederik↑ comment by Tom Lieberum (Frederik) · 2023-01-14T20:09:41.642Z · LW(p) · GW(p)

I don't think that's right. Iiuc this is a logical and, so the values would be in {0, 1} (as required, since tracr operates with Boolean attention). For a more extensive discussion of the original problem see appendix C.

Replies from: Gurkenglas↑ comment by Gurkenglas · 2023-01-15T09:19:20.257Z · LW(p) · GW(p)

Appendix C attempts to discard the softargmax, but it's an integral part of my suggestion. If the inputs to softargmax take values {0,1000,2000}, the outputs will take only two values.

From the RASP paper: https://arxiv.org/pdf/2106.06981

The uniform weights dictated by our selectors reflect an attention pattern in which ‘unselected’ pairs are all given strongly negative scores, while the selected pairs all have higher, similar, scores.

Addition of such attention patterns corresponds to anding of such selectors.

Replies from: Frederik↑ comment by Tom Lieberum (Frederik) · 2023-01-15T10:05:22.825Z · LW(p) · GW(p)

(The quote refers to the usage of binary attention patterns in general, so I'm not sure why you're quoting it)

I obv agree that if you take the softmax over {0, 1000, 2000}, you will get 0 and 1 entries.

iiuc, the statement in the tracr paper is not that you can't have attention patterns which implement this logical operation, but that you can't have a single head implementing this attention pattern (without exponential blowup)

Replies from: Gurkenglas↑ comment by Gurkenglas · 2023-01-15T10:09:20.510Z · LW(p) · GW(p)

(To show my idea compatible with Boolean attention.)

I use a single head, and the ranks add up linearly.

Replies from: vlad_m↑ comment by Vlad Mikulik (vlad_m) · 2023-01-16T15:58:17.407Z · LW(p) · GW(p)

You're right that accounting for the softmax could be used to get around the argument in the appendix. We'll mention this when we update the paper.

The scheme as you described it relies on an always-on dummy token, which would conflict with our implementation of default values in aggregates: we fix the BOS token attention to 0.5, so at low softmax temp it's attended to iff nothing else is; however with this scheme we'd want 0.5 to always round off to zero after softmax. This is plausibly surmountable but we put it out of scope for the pilot release since we didn't seem to need this feature, whereas default values come up pretty often.

Also, while this construction will work for just and (with arbitrarily many conjuncts), I don't think it works for arbitrary compositions using and and or. (Of course it would be better than no composition at all.)

In general, I expect there to be a number of potentially low-hanging improvements to be made to the compiler -- many of them we deliberately omitted and are mentioned in the limitations section, and many we haven't yet come up with. There's tons of features one could add, each of which takes time to think about and increases overall complexity, so we had to be judicious which lines to pursue -- and even then, we barely had time to get into superposition experiments. We currently aren't prioritising further Tracr development until we see it used for research in practice, but I'd be excited to help anyone who's interested in working with it or contributing.

Replies from: Gurkenglas↑ comment by Gurkenglas · 2023-01-16T17:35:57.204Z · LW(p) · GW(p)

we fix the BOS token attention to 0.5

1.5 does conjunction without my dummy.

When at most one clause is true, 0.5 does disjunction instead.

Replies from: vlad_m↑ comment by Vlad Mikulik (vlad_m) · 2023-01-16T18:53:11.766Z · LW(p) · GW(p)

Ah, yep, I think you're right -- it should be pretty easy to add support for and in selectors then.

comment by Gurkenglas · 2023-01-15T10:29:01.729Z · LW(p) · GW(p)

In Appendix C, the × in (𝑄𝐴 ⊕ 𝑄𝐵) × (𝐾𝐴 ⊕ 𝐾𝐵) and (𝑄𝐴 ⊗ 𝑄𝐵) × (𝐾𝐴 ⊗ 𝐾𝐵) should be ⊗ to denote bilinearity, right? And then "operating over" becomes "in".

(This nitpick should have been a PR - put the tex on the github?)