In the Name of All That Needs Saving

post by pleiotroth · 2024-11-07T15:26:12.252Z · LW · GW · 3 comments

There is some difference between despotic and cosmopolitan agents. A despotic agent builds a universe-grabbing Singleton and makes it satisfy its own desires. A cosmopolitan agent builds a universe-grabbing Singleton and makes it satisfy the desires of all the things which ought to be granted some steering-power. The cosmopolitan agent will burn some of its precious negentropy on things it will never see or experience even indirectly, as well as on things it actively dislikes, for the simple reason that someone else likes them. This is more than just bargaining. The despotic agent, if it is clever, will also get nice things for some other agents since it will probably have had uncertainty over whether it will get to decisive strategic advantage first. Therefore it is in its best interest to cooperate with the other contenders, so as to itself get nice things even in the worlds where it fails. This however is still only in service of maximally sating its own desires across worlds. It is not truly outsourcing agency to others, it just looks like that when observing a single world-line in which it wins. Looking at the whole of world-lines, it is merely diversifying its assets to grab more than it would have gotten otherwise. It has allies, not friends. Cosmopolitanism on the other hand helps even things which never had any shot of winning. It genuinely gives up a great deal of steering power across the totality of branches. Perhaps cosmopolitanism isn’t the whole of goodness, but it is certainly a part, so it would probably be useful to figure out how and if it works.

Patchwork

Cosmopolitanism, to my mind at least, is some form of stakeholder democratic patchwork, meaning that there is not one homogeneous bubble in which the will of all people is law, but rather that there are individual bubbles whose content is determined by aligned groups of stakeholders within them, and whose size is determined by the corresponding share of stakes. If three equally stake-y stakeholders vote on the colour of their shared apartment and two of these vote red while one votes blue, the correct course of action is probably not to paint the entire surface area byzantine purple. The individuals involved would probably be much happier if the one third area in which the lone blue voter spends most of their time were painted blue and if the rest were painted red. In a world with many many more people, tiny minorities run the risk of being entirely drowned out in the homogenous mixture. They likely do not care very much that they have shifted the figurative colour of the universe an imperceptible amount their way. In patchwork cosmopolitanism however, they get their own perfect bubble, through which reality-fluid passes in exact accordance to the degree of investment people have in that bubble’s reality. We can think of this as exit, and it is a valid choice any agent should be allowed to make: Pack your stuff and live in a cottage. This is however not to say that one isn’t allowed voice – the ability to pull everything very very slightly instead of pulling a small region hard. A person can absolutely have preferences over the whole of the universe including stuff they cannot observe. In that case they will be globally, weakly implemented in all those places where that preference is a free variable. It does get to encroach on bubbles which are indifferent, but not on ones which anti-care.

This is the law of lands worth living in and thus we should try to figure out how to formalize this law.

“Basically human”

(skip if you already believe that the question of who should have steering is an epistemically hard one, fraught with target-measure confusion)

There is a current to be traced through the history of mankind which includes more and more groups derived by arbitrary taxonomies into the label of “basically human”. Bigotry is not solved –the inclusion is often more nominal than truly meant– but if one must take meagre solace, one can do so in the fact that things could be, and have been, worse. Our rough analogue for seriously-considered-moral-patienthood, which is the label “basically human”, used to be assigned exclusively to the hundred or so members of one’s tribe, while everyone else was an incomprehensible, hostile alien. Few people these days perform quite so abysmally.

When westerners stumbled upon the already thoroughly discovered continent of Australia, there is a certain level on which we can forgive the rulers and thinkers of the time an uncertainty about whether the strange beings which inhabited this land were human. They had not yet married themselves to so clear a definition as the genome after all. They could not run some conclusive test to figure out whether someone was human, at least none on whose relevance everyone agreed, and these aboriginals were different from all peoples so far assigned human along some axes. The answer (while there would have been a clearly virtuous one) was not trivial in terms of actual formalism. We can shout racism (I too am tempted to shout racism), and if we went back with the precious tools of genetic analysis at our disposal, I am sure we could prove the racism in many of them, as they would not consider these aboriginals any more deserving of “basically human” (and thus agency) even after learning this truth. Still, if we factor out the racism and motivated-ness and terrible convenience of their uncertainty, their question isn’t unreasonable. They had no good heuristic for what a human is. They were horribly vulnerable to black swans. Perhaps they could have done better than to be stumped by a plucked chicken but not much better. Even the discovery of genes hasn’t safeguarded us from diogenean adversaries. We can make self-sustaining clusters of cells with human DNA and most people, myself among them, do not consider those basically human, which leads us back to fuzzy questions like consciousness or arbitrary ones like the number of limbs or the way the forehead slopes. Besides, it’s not as though we have a clear boundary for how far away from the human average a strand of DNA gets to be before no longer being considered such. We cannot give a definition which includes all humans and excludes all non-humans in the same way in which we cannot do this for chairs.
The question is silly and they were not fools for being unsure about it, they were fools for asking it at all. It almost seems that whether someone is human isn’t a particularly useful thing to be thinking about. The stage at which a culture contemplates the possibility of extraterrestrial life, or artificial life, or the intelligence of any other species is the very latest point at which they should discard this line of inquiry as utterly inutile. Leave your tribe at the door once and for all and ask not “are they like me?” but “does their agency matter (even if they are not)?”.

Who, if we are to be benevolent, must still have a voice if we suddenly become the only force shaping our light-cone by building a Singleton?

Quantitative agency

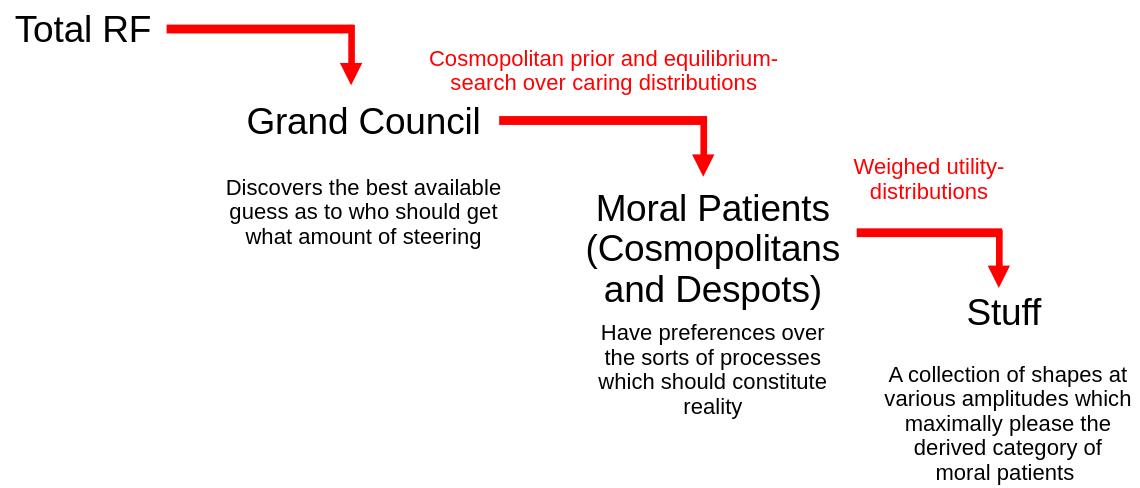

Moral patienthood is probably not an on-or-off sort of thing. It is probably a spectrum. How much RF (Reality Fluid) flows through your cognition, how much you realize and thus suffer when your demands of reality go unmet, etc. It is not however clear to me which spectrum. Worse than that, I am not sure what a proof of correct-spectrum-ness would look like if there is such a thing. It would be nice then, if there is no specific correct assignment of moral patient-hood in the same way in which there isn’t a right, single, somehow divinely correct utility function, if cosmopolitan agents were at least always mutually beneficial to each other – If the great pan-phenomenological council of cosmopolitanisms, while disagreeing on a great number of things, was at least pulling the universe in the same overall direction and saw no reason to destroy a few of its members. Is this the case? Is there a way in which the “cooperate with and ensure the flourishing of all intelligent beings”-agent and the “cooperate with and ensure the flourishing of all pain-feeling beings”-agent would not view each other as evil when the respective other is fine with tight circumscriptions around the self-determination of some things worth caring about? I am not convinced that there is a canonical priority ordering here and good and evil seem to only make sense as non-deictic terms when there is. While I believe that “all human beings at the very least are worth caring about greatly and by almost identical amounts”, and thus perceive “only my tribe is this/ many times more this than everyone else”-agents as evil, I perceive both of them as more or less arbitrary. I would confidently sacrifice three dogs for one human, but I don’t believe there to be a true moral-patient-ness-quotient justifying this (or any other) number.
How much I want which sorts of agents to flourish, even transitively, lives in my preference ordering and things with sufficiently different preference orderings behaving agentically are evil. The agent with a flipped humans-to-dogs preference is a terrifying monster and if they were acting on this preference then, in the name of all that needs saving, I would be compelled to destroy them.

It seems that one would either have to accept fundamentally conflict theoretic relationships between essentially good agents (if cosmopolitanism were a sufficient true name for good, as is sometimes claimed), or reject an objective notion of goodness in favour of one that delineates based on distance from some (our) preference ordering.

Caring distributions are not utility distributions

The caring distribution says: “These things are moral patients, they deserve to have their voices heard. They deserve the right to fill their bubble with stuff of their choosing”. The utility distribution says: “I like these things, and I will fill my bubble with them”. While the caring distribution of a cosmopolitan indicates which things they would like to give which amount of agency over the universe to, their utility function says which things they would like to agency into existence with the budget that the reigning cosmopolitanism assigns them. These two distributions may very well be about the same sorts of things. For example another human may get agency from your caring distribution since you believe that all humans should get some of that, and then some of them might get even more because you like them a bunch, which makes them show up in your utility distribution. The two will in most cases have a lot of overlap, but they each may contain things which the other does not. A crystal on my desk for example might show up in my utility distribution and thus I would send some reality fluid its way, but it would not show up in my caring distribution because it probably isn’t a moral patient. I don’t believe it should have a say about the universe and thus it is not run by merit of being a moral patient plus some bonus, it is exclusively run because some moral patients approve of its existence. A person I find extremely annoying on the other hand would not show up in my utility distribution, but my caring distribution would still acknowledge that they are a thinking creature deserving of agency, and so they get some RF to steer the universe with according to their desires. If you pass the utility distributions of all agents to the caring distribution of an agent which has grabbed the universe, you get the actual Reality Fluid distribution across experiences, creatures and objects.
If the agent which grabbed the universe is a despot, then their caring distribution assigns 100% of weight to themselves and thus the RF distribution looks exactly like their utility function. For cosmopolitans that is extremely unlikely to be the case.

Consider the humble rock again

All the moral patients we see in the world are some sort of replicator, but it would be hasty to assume that this is a requirement. It is very possible that replicators are just the sort of thing you see in the world because they are replicators and making themselves exist a bunch is their entire job. A worthwhile cosmopolitanism should probably fight Moloch in this regard and extend its own agency to things which do not wield the tools of autopoiesis themselves. A fully handicapped consciousness with no way of impacting the world outright ought to very much be helped and empowered, though this is an easy thing to say and a hard thing to implement. If it has no way of impacting the world, how do we know it exists, let alone what it wants? Find it in the physics generator perhaps? But sure, let’s not go fully off the neurotic deep-end just yet, and content ourselves with making a significantly better world before giving up because we don’t see a way towards a perfect one at this very moment. There are things with some agency, but not enough. Finding and uplifting those is a good move, and while I have no proof of this, I would be very surprised if rocks for instance had a great deal of stifled agency. This would violate a lot of our current heuristics about the sort of negentropy consumption and dynamic instability a mind requires. Rocks are probably –hopefully– not having a bad time. They are probably not having a time at all, but let’s try to find them in the physics generator anyway, just to make sure, whenever we find ourselves capable of crossing that particular bridge. We will not let any consciousnesses suffer behind Markov blankets if we can help it.

Uplifting those stifled beings also means giving them true agency if they want it. We will not simply do the things they want to be doing for them, as they might not get anything out of that. For example: I want there to be a forest near my apartment and I want to write a few books. I have no particular desire to make that forest exist. In fact, I would prefer not to. The thing my agency-helmsman craves is for there to simply be a forest, so I would be happy if god just placed it there for me. I would on the other hand not be very happy if god wrote those books for me. The thing my agency-helmsman craves here is not merely for them to exist, but to be writing them. The desired object is the affordance to act.

Affordances do not seem like uniquely weird desires to me. They are a thing I want like any other and if a proposed true name for agency handled them as a special case, I would somewhat suspect that the person responsible has fucked up, but others seem to be seeing a strong distinction here and thus I thought I should bring it up. If the handicapped consciousness wants the affordance to act, then that is what a good cosmopolitan should be giving it, as opposed to handing over the hypothetical outcome of those same actions.

Some possible cosmopolitanisms

A very simple cosmopolitanism which is sometimes discovered accidentally when asking highly abstract questions about outer alignment (universal instrumental program or such) is to assign equal steering to all replicators. With the exception of our hypothetical agency-locked specimens, this cosmopolitanism just recognizes the [warning: anthropomorphisation of autopoietic processes] “want” to stay alive and the implied “want” to have an impact on the world which comes along with that and gives it a boost. If you’re “trying”, that’s all that matters. It seems like some very weird emergent-consciousness problems pop up if you try to do this: Any one of my cells currently has a lot less agency than me, but I seem to be a notably distinct replicator, so I don’t know what it would mean for all of us to be amplified to the same baseline. This feels ripe for synecdochical feedback circuits, but a rigorous solution to the problem does not appear impossible. In fact I think this is the easiest meaningfully general cosmopolitanism to actually formalize. Cybernetic processes are comparatively easy to identify and extrapolate, making this very convenient when you are playing with maths (though I would advocate not picking one’s answers to alignment questions based on their mathematical convenience).

“Allocate agency to all things which suffer if they don’t get a say” might be another one. It certainly *feels* like suffering in the “stifled agency”-sense is a thing we should be able to point to, and it doesn’t *feel* like bacteria for instance actually do that. It is easy to interpret self-replicators as wanting to self-replicate, since that is what we’re doing, but perhaps they are behaving this way in a manner much more akin to following gravity. Following the rules of the sort of system they are, with no strong opinion on the matter. The rock, though it does fall when dropped from a height, would probably be perfectly fine with not falling. It would probably not mind if I held it up, despite the fact that this requires the application of some force in opposition to its natural inclination. Perhaps bacteria, despite similarly resisting attempts to stop them from self-replicating, are ultimately indifferent on this front whereas other things in the physics-prior are not. This approach may create utility monsters (though perhaps creating utility monsters is the right thing to do) and it requires you to formalize suffering, which seems harder than formalizing replicators.

An example

Let’s say we have a universe containing four agents. Two humans called Alice and Bob as well as two chickens. Alice and Bob are extremely similar in a lot of ways and they love each other a lot. If they were despots, they would both give 40% of Reality Fluid to their own existence, 40% to the other’s existence, 10% to the stuff in their house and 10% to the chickens which live around their house. This is not the shape of their cosmopolitanism, this is just their utility function. This is what they would do with their budget after a cosmopolitanism had assigned them one. You can tell, for example, by the fact that they are assigning RF to the inanimate object which is their house, something which probably –hopefully– does not itself care about anything. For simplicity’s sake let’s say that the chickens only care about themselves and about other chickens. This assumption is not necessary, is probably wrong, and even if it happens to be true in the current paradigm, I am more than sympathetic to the idea that the thing we might really want to be tracking is some flavour of CEV of a strongly intelligence-boosted, extrapolated version of the chicken (if there exists a coherent notion in that space), which might care about loads of other things. This is just to keep the maths focused.

Now, while Bob and Alice are identical in what they desire, they disagree about moral patienthood.

Alice allocates moral patienthood in a way which vaguely cares about intelligence. She does not know whether it cares super-linearly, linearly or sub-linearly, because she doesn’t have a crisp metric of intelligence to compare it to, but when she looks at her mental ordering of the probable intelligences of creatures and at the ordering of how much she cares about their programs being run to shape the future, she finds them to be the same (maybe aside from some utility-function infringements which she dis-endorses. Perhaps she is very grossed out by octopods and would prefer them to get as little steering over the light-cone as possible, but she respects their right to disagree with her on that front in exact proportion to their subjectively-perceived agent-y-ness, even when a part of her brain doesn’t want to). Alice cares about humans ten times as much as she cares about chickens.

Bob on the other hand flatly cares about some ability to suffer. He values the agency of chickens exactly as highly as he does that of humans. Both of them are vegans, since neither of them values animals so little that their suffering would be outweighed by some minor convenience or enjoyment of a higher RF agent, but Bob is in some relevant way a lot more vegan. He is as horrified by chicken-factory-farming as he would be by human-factory-farming. Alice, at great benefit to her mental well-being, is not.

Now, Alice and Bob are also AI researchers and one day, one of them grabs the universe. What happens?

Alice’s cosmopolitanism

Alice’s Singleton allocates 10/22 of reality fluid to each human to do whatever they please with, as well as 1/22 to each of the chickens. The chickens keep all of their RF, but Bob and Alice distribute theirs further. Alice gives 40% of hers to Bob and Bob the same amount to Alice, meaning that they cancel out. They each assign 10% to the continued existence of their house, 10% to the chickens and the rest of the universe is shredded for computonium.

Bob and Alice are now being run at 4/11 of RF each, their house is run at 1/11 of RF and the chickens are run at 1/11 of RF each.
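The arithmetic behind these numbers can be checked with a small sketch. It assumes a single redistribution pass (each agent splits its caring-assigned budget according to its utility distribution and nothing is passed on a second time), that the humans' 10% for "the chickens" is split evenly between the two birds, and that each chicken spends everything on itself. The names `reality_fluid`, `UTILITY` and `alice_caring` are made up for illustration.

```python
from fractions import Fraction as F

TARGETS = ["alice", "bob", "house", "chicken_1", "chicken_2"]

# Utility distribution shared by both humans (assumption: the 10% for
# "the chickens" is split evenly between the two chickens).
HUMAN_UTILITY = {"alice": F(2, 5), "bob": F(2, 5), "house": F(1, 10),
                 "chicken_1": F(1, 20), "chicken_2": F(1, 20)}

UTILITY = {
    "alice": HUMAN_UTILITY,
    "bob": HUMAN_UTILITY,
    # Simplification: each chicken keeps all of its budget for itself.
    "chicken_1": {"chicken_1": F(1)},
    "chicken_2": {"chicken_2": F(1)},
}

def reality_fluid(caring, utility):
    """One pass: split every agent's budget by that agent's utility distribution."""
    rf = {target: F(0) for target in TARGETS}
    for agent, budget in caring.items():
        for target, share in utility[agent].items():
            rf[target] += budget * share
    return rf

# Alice's caring distribution: a human weighs ten times as much as a chicken.
alice_caring = {"alice": F(10, 22), "bob": F(10, 22),
                "chicken_1": F(1, 22), "chicken_2": F(1, 22)}

alice_rf = reality_fluid(alice_caring, UTILITY)
for target, amount in alice_rf.items():
    print(target, amount)
```

Swapping in a different caring distribution while keeping `UTILITY` fixed is all it takes to compare cosmopolitanisms under the same assumptions.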

Bob’s cosmopolitanism

Bob’s Singleton allocates ¼ of reality fluid to all agents to do whatever they want with. The chickens keep all of theirs and Bob and Alice do the same reallocation as last time, giving 40% of RF to each other, 10% to the house, 10% to the chickens and keeping the rest to themselves.

This leaves Alice and Bob with 8/40 of RF each, their house with 2/40 of RF and the chickens with 11/40 of RF each. Note that the vast majority of the chickens’ RF is coming from the caring function this time, not the other agents’ utility functions. Note also that they are getting more reality fluid than the humans since they are terminal nodes – despots by our earlier nomenclature. If despots get cared about by non-despots at all, then the despots will end up with more RF than the average agent of their same moral-patient-y-ness. In Bob’s cosmopolitanism, despite Alice and Bob having the exact same utility function, sacrificing a human to save a chicken is a net positive action, something which Alice considers horrifying. Since Bob and Alice are so aligned in their utility functions, Bob-despotism would be a lot better for Alice than Bob-cosmopolitanism and more than that: Alice-cosmopolitanism is a lot closer to Bob-despotism than it is to Bob-cosmopolitanism in terms of the reality fluid allocation it produces. Clearly something is going very wrong here if we want cosmopolitanisms to work together.
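To put a rough number on "a lot closer", here is a sketch that compares the three allocations. The choice of total variation distance is mine, not something the argument depends on, and Bob-despotism is taken to be Bob's utility distribution with the chickens' 10% split evenly (an assumption). The allocation figures themselves are the ones stated in the text.

```python
from fractions import Fraction as F

KEYS = ["alice", "bob", "house", "chicken_1", "chicken_2"]

# Final reality-fluid allocations, in the order given by KEYS.
alice_cosmo = dict(zip(KEYS, [F(4, 11), F(4, 11), F(1, 11), F(1, 11), F(1, 11)]))
bob_cosmo = dict(zip(KEYS, [F(8, 40), F(8, 40), F(2, 40), F(11, 40), F(11, 40)]))
# Assumption: a despotic Bob simply implements his utility distribution.
bob_despot = dict(zip(KEYS, [F(2, 5), F(2, 5), F(1, 10), F(1, 20), F(1, 20)]))

def total_variation(p, q):
    """Half the summed absolute difference between two allocations."""
    return sum(abs(p[k] - q[k]) for k in KEYS) / 2

d_despot = total_variation(alice_cosmo, bob_despot)
d_cosmo = total_variation(alice_cosmo, bob_cosmo)
print(d_despot, d_cosmo)
```

Under this particular metric the gap to Bob-cosmopolitanism comes out several times larger than the gap to Bob-despotism; other reasonable metrics may give different ratios.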

Can I compensate for a bad caring distribution with a virtuous utility distribution?

Since caring- and utility-distributions handle the same quantity (Reality Fluid), it seems pretty intuitive to just steer against an unjust cosmopolitanism, in whose grasp you have found yourself, by moving some of your own caring distribution into your utility distribution. If I were to live in a cosmopolitanism which is more super-linear than Alice’s and assigns only a tiny fraction of RF to whales for instance but loads to me, then I would probably pick up the mantle of caring about whales a bunch and give them some of mine – More of mine than would be derived from my actual utility for whales. If I found myself in Bob cosmopolitanism however, I would be disgusted by the sheer amount of RF the whales are getting and would not give them any of mine despite having a fair bit of affection for the creatures. In fact, I might even be quite tempted to not give any fluid to people who care a lot about whales either, to shift the distribution to look somewhat more the way pleiotroth-cosmopolitanism would end up… by failing at cosmopolitanism.

You may seek to interject that the RF one gives others should not be re-giftable –that when Alice cares a bunch about Bob, but Bob doesn’t care much about himself, Bob should just have to take it, unable to redistribute to the things which actually matter to him. I think this is cruel. Caring about someone means caring about the things they care about. Making them exist a whole lot while all the stuff which matters to them is relegated to a tiny, slow-running corner of concept-space is existentially torturous. It also means that you get to make agents which anti-care about existing exist, which is literally torture in a very unambiguous sense. To be a cosmopolitan means to actually give people agency, not to keep them as statues for your personal amusement. It is to foster response-ability in the Harawayan sense – to empower your sympoietic partners not merely to act, but to steer. You may not like it, but this is what real benevolence looks like…

Which leaves us with an issue: The cosmopolitanism you choose is not neutral. If people need to steer against it with their utility distribution then they are left with less effective agency than they should be having, since they need to burn at least some on righting the system, while whoever built the system gets more than everyone else since they are definitionally content with their choice. As a cosmopolitan, not even the architect is happy about this position of privilege. So, can we move the bickering about the correct caring distribution somewhere else so that the utility step can actually do its job and just get nice things for everyone?

Shared caring distributions

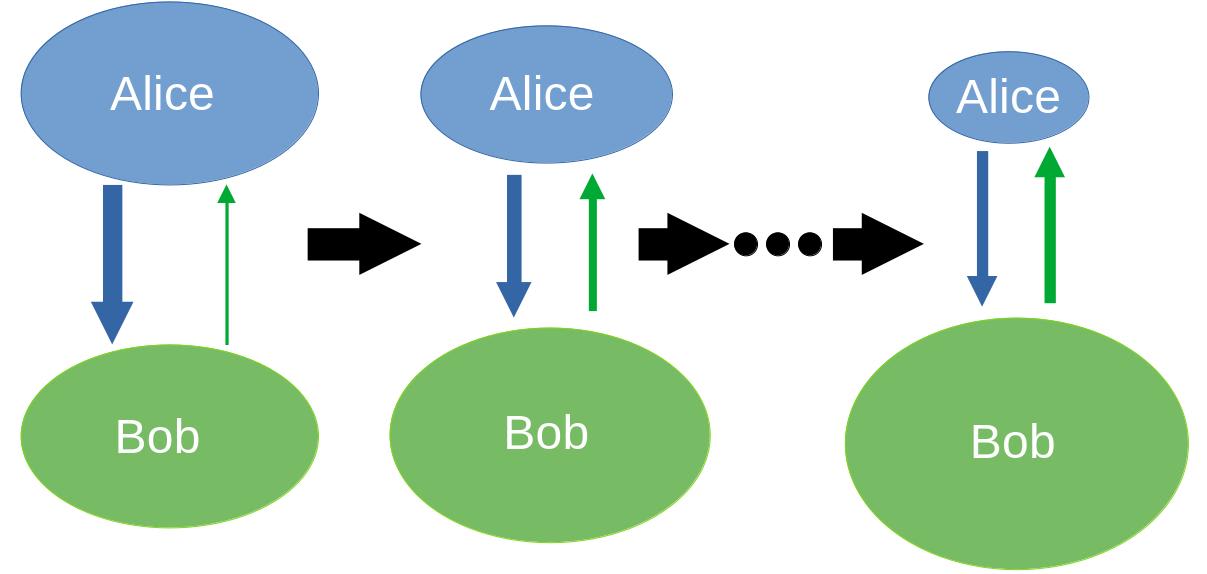

What if Alice and Bob simply both get to implement their caring distribution in step one? What if we hand the universe to a benevolent Singleton which has full access to the true formalism of both? Each gets half of all available RF and then Alice-cosmopolitanism assigns 10/22 of that to Bob, 10/22 to herself, and 1/22 to each of the chickens while Bob-cosmopolitanism assigns ¼ to everyone. You might naively want to cancel the amounts of RF passing between Alice and Bob out again such that Bob gets 10/22 - 1/4, but they would not be happy about this. Doing this would change the magnitude of their respective nodes such that they would both no longer be giving the proportion of RF they wanted to be giving. They could repeat the process, but this will happen recursively forever, which is a rather frustrating situation to be stuck in. What we actually need to find is the equilibrium-point of this dynamic system, which is to say the node sizes at which the RF-quantities passing between Alice and Bob are equal and thus cancel out. This point is the infinite limit of that recursive process we are trying to avoid. So:

a-to-b = b-to-a

a-cares-b * a = b-cares-a * b

10/22 * a = ¼ * b | * 4/a

20/11 = b/a

Knowing that all RF is currently resting with the two humans, this means a=1-b and b=1-a and therefore

20/11 = (1-a)/a

20/11 = 1/a - 1 | + 1

31/11 = 1/a | * 11a/31

a = 11/31

and thus b=20/31
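The same algebra in closed form, as a quick sanity check: with a + b = 1, the balance condition a-cares-b * a = b-cares-a * b gives a = b-cares-a / (a-cares-b + b-cares-a). The function name `equilibrium_shares` is just illustrative.

```python
from fractions import Fraction as F

def equilibrium_shares(a_cares_b, b_cares_a):
    """Solve a_cares_b * a == b_cares_a * b under the constraint a + b == 1."""
    a = b_cares_a / (a_cares_b + b_cares_a)
    return a, 1 - a

# Alice gives Bob 10/22 of her node, Bob gives Alice 1/4 of his.
a, b = equilibrium_shares(F(10, 22), F(1, 4))
print(a, b)

# At equilibrium the flows in both directions are equal.
flow_a_to_b = F(10, 22) * a
flow_b_to_a = F(1, 4) * b
print(flow_a_to_b, flow_b_to_a)
```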

Now Alice-cosmopolitanism allocates 10/22 of its 11/31 each to Bob and Alice while allocating 1/22 of its 11/31 to each chicken and Bob-cosmopolitanism gives ¼ of its 20/31 to Bob, Alice and to each chicken.

This is

110/682 RF from Alice-cosmopolitanism to Alice and to Bob,

11/682 RF from Alice-cosmopolitanism to each chicken

110/682 RF from Bob-cosmopolitanism to everyone.

You might be surprised that the first and last number are the same. Don’t be! Both AC (Alice-Cosmopolitanism) and BC (Bob-Cosmopolitanism) treat all humans equally, and since we looked for the equilibrium point at which they give the same amount of RF to each other, we inadvertently found the point at which both of them give the same amount to all humans. Since there are no other forces which allocate RF to humans, this means that everyone in the category gets exactly twice that much. Once from AC and once from BC.

Their shared cosmopolitanism assigns

220/682 RF to each human and

121/682 RF to each chicken
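As a check on the fractions, the shared distribution is just the two caring distributions mixed by the equilibrium node sizes. A minimal sketch, with the node sizes 11/31 and 20/31 taken as given from the derivation and the variable names invented here:

```python
from fractions import Fraction as F

AGENTS = ["alice", "bob", "chicken_1", "chicken_2"]

alice_caring = {"alice": F(10, 22), "bob": F(10, 22),
                "chicken_1": F(1, 22), "chicken_2": F(1, 22)}
bob_caring = {agent: F(1, 4) for agent in AGENTS}

# Equilibrium node sizes for Alice-cosmopolitanism and Bob-cosmopolitanism.
node_size = {"alice": F(11, 31), "bob": F(20, 31)}

shared = {
    agent: node_size["alice"] * alice_caring[agent]
           + node_size["bob"] * bob_caring[agent]
    for agent in AGENTS
}

for agent, amount in shared.items():
    print(agent, amount)
```

`Fraction` reduces automatically, so 220/682 prints as 10/31 and 121/682 as 11/62.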

And everyone can go into the utility stage knowing that their own cosmopolitanism was already factored in. It cares more about chickens than Alice would have, and less than Bob would have, but they care about each other and are willing to let each other’s weird preferences –even their preferences over who should get to have preferences– impact the future…

But did you notice the cheat?

Doing this sort of meta cosmopolitan bargaining requires you to have a prior caring distribution. In this case the prior caring distribution was that the caring distributions of Alice and Bob matter equally and that those of chickens do not.

If we include the chickens –if we make our prior uniform like Bob’s caring distribution– we get something very different. If we try to find the equilibrium point between BC and CD (Chicken Despotism) or even between AC and CD… well, the chickens aren’t giving anything back, are they? So the point at which AC, BC and CD send each other the same amount of Reality Fluid is the point at which AC and BC have none of it while the chickens have all. Everything except complete exclusion of despots from the council of cosmopolitanisms leads to complete despotism. This is pretty spooky. What is also pretty spooky is that it doesn’t just suddenly break for complete despots. If someone in the council only cares by an infinitesimal epsilon about others then they will consolidate the vast majority of agency at their node so long as all other nodes are even indirectly connected to them. This clearly isn’t workable.
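A two-node toy version shows how fast this happens. The sketch below reuses the balance condition from the Alice and Bob derivation and assumes a council of just one cosmopolitan, who gives ¼ of their node to the other party, and one near-despot who gives back only epsilon. Larger councils would need a proper graph model, which this does not attempt.

```python
from fractions import Fraction as F

def cosmopolitan_share(cosmopolitan_cares, other_cares):
    """Node size of the cosmopolitan at the two-node equilibrium.

    Derived from cosmopolitan_cares * a == other_cares * (1 - a).
    """
    return other_cares / (cosmopolitan_cares + other_cares)

# How much the other node cares about the cosmopolitan, shrinking to zero.
epsilons = [F(1, 4), F(1, 100), F(1, 10000), F(0)]
shares = {eps: cosmopolitan_share(F(1, 4), eps) for eps in epsilons}

for eps, share in shares.items():
    print(f"other cares {eps}: cosmopolitan keeps {share}")
```

With equal caring the split is even; at epsilon = 1/10000 the cosmopolitan is already down to 1/2501 of the total, and at zero it holds nothing.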

Safeguarding cosmopolitanism

There is a definite issue of cheating, though I am for now using the term bereft of emotional valence. Most people care more about themselves than they do about even the highest scoring reference class in the prior. This amounts to funnelling more RF into their own preference distribution. A toy number I have pitched at various points is that people assign themselves around ten percent of their total mass (and conversation partners have cried out in shock about both how absurdly low and how absurdly high that number is). I would not call non-cosmopolitans cheaters here, since they outwardly do not care about other people’s utility functions. There are however cosmopolitans who feel the need to do this strategically to counteract the malevolent influence of despots and create a truly cosmopolitan distribution by… not being cosmopolitan. Defensive democracy comes to mind.

An agent which values cosmopolitanism should probably value cooperation and rights of voice or exit on a long-term rather than a myopic basis, so while cooperating with a despotic agent is a very cosmopolitan thing to do, it poses a long term risk to the concept. If you uplift things which want to destroy cosmopolitanism for their own gain too much, then you break cosmopolitanism and the thing you want (a maximally (geometrically?) desirable world for all moral patients weighed by their moral-patient-y-ness) is not accomplished. Thus a cosmopolitan should probably not treat those who seek to destroy cosmopolitanism as friends, though they should not waste resources on punishing such agents either. It then seems reasonable to implement something like a “no tolerance for intolerance”-rule and consider only cosmopolitan nodes (i.e. ones which, in their code, outsource some sensible amount of decision making, and which are weighed by the amount to which they do so), but it’s easy for intelligent agents to scam that system. They can simply predict others and strategically outsource their own RF in such a way that it is distributed across beings who in-weighed-aggregate would do exactly those things which the original agent wanted to do with it. Identifying illiberalism in the code seems like a dead end modulo enforcing some plausible computational constraints which would make this kind of modelling hard (but would also make benign curiosity about figuring out what things ought be cared about hard).

2nd attempt: Partial membership

The most intuitive prior which the grand council of cosmopolitanisms could have is how cosmopolitan you are, and this doesn’t even necessarily require us to know of a true, correct cosmopolitanism and to measure the distance from that. If we had such a thing we would not need a grand council. Everyone could just get a vote which is weighed by the degree to which they assign moral patienthood to anyone besides themselves and which can only be used on others, followed by normalization to one over all members. The chickens and other despots therefore do not get an effective vote because they spend all their caring on themselves. They are weighed by zero in the prior. Alice is weighed by 12/22 and Bob by 3/4. Does this work?

Sadly no. Now despotic tribes eat you rather than despotic individuals, but that distinction doesn’t feel particularly meaningful as your negentropy gets shredded. If Carol and Dean only assign moral patienthood to each other, meaning that each of them is technically a cosmopolitan, and anyone else in the network cares about either of them, then all caring still gets sucked into the Carol+Dean node, which collectively behaves like a despot. If we only cared about complete despotism we could simply mandate that no part of the caring graph gets to have this property, but then you still have most-of-the-way despotic tribes sucking 1-epsilon of caring into their node. We could do partial voting by tribe, but then…

Actually: Why are we looking for equilibrium points?

It kind of seems that the search for stable equilibrium is causing us a lot of trouble. This, after all, is the thing which leaks all of its decision power to fascists, where plain subtraction would not. The answer is simple and inseparable from the problem: “Stable equilibrium” is the true name of “no-one has to disproportionately sacrifice their own preferences to push for their preferred cosmopolitanism”. Equilibrium is the point at which everyone pushes exactly equally hard for the rights of others. Since the despots are all-pull-no-push, the only point at which they push exactly as hard as everyone else is the point at which they are the only ones left (and not pushing for the rights of others at all). In other words: Equilibrium is the point at which everyone has the exact same incentive to engage in instrumentally anti-cosmopolitan cheating (which in the case of despots is infinite). No one is favoured by the prior, not even those whose caring distribution exactly matches the prior. Subtraction on the other hand creates winners and losers.
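The way equilibrium drains everything into an all-pull-no-push node can be illustrated with a small sketch. It assumes, purely for illustration, that the equilibrium is found by repeatedly passing each agent's mass along its caring distribution; the rows for Alice and Bob are made-up numbers rather than the ones used elsewhere in this post, and `step` and `tribe_share` are hypothetical helper names.

```python
from fractions import Fraction as F

# Hypothetical caring matrix: row i says how agent i splits its caring.
# Alice's and Bob's rows are invented for this sketch. Carol and Dean
# care only about each other, as in the despotic-tribe example.
AGENTS = ["Alice", "Bob", "Carol", "Dean"]
CARING = [
    [F(1, 2), F(2, 5), F(1, 10), F(0)],  # Alice leaks a little to Carol
    [F(1, 2), F(1, 2), F(0), F(0)],      # Bob
    [F(0), F(0), F(0), F(1)],            # Carol cares only about Dean
    [F(0), F(0), F(1), F(0)],            # Dean cares only about Carol
]


def step(mass, caring):
    """Pass every agent's current mass along its caring row once."""
    n = len(mass)
    return [sum(mass[i] * caring[i][j] for i in range(n)) for j in range(n)]


def tribe_share(rounds):
    """Mass held by the Carol+Dean tribe after the given number of rounds."""
    mass = [F(1, 4)] * len(AGENTS)  # assumed uniform starting prior
    for _ in range(rounds):
        mass = step(mass, CARING)
    return mass[2] + mass[3]


for rounds in (0, 1, 10, 200):
    print(rounds, float(tribe_share(rounds)))
```

Under these assumptions the tribe's share only ever grows, because nothing flows back out of it, and it approaches one even though Alice hands over just a tenth of her caring per round.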

The same issue, by the way, also emerges in another sort of scheme you might have thought of: The one where people’s caring distribution is a target, not an action. The one where, once personal cosmopolitanisms are allotted some meta-steering by the prior, they do not “vote with” their actual caring distribution, but use their meta-steering to push the current distribution as close to their own as they can get it. This has two problems. One is that it’s order-dependent. Your vote relies on the distribution you are handed, which relies on who made their alterations before you, and now you have to either figure out a canonical voting order or determine some fair-seeming average across all possible permutations (though again: Perhaps mathematical elegance should not be our deciding factor on how alignment should work). The second issue is that this too creates an unfair distribution of how much steering people actually have. Agreeing with the distribution you are handed gives you the opportunity to just implement a bit of your utility distribution right here.

Building it from the bottom up

Maybe we should not be assuming a grand council of cosmopolitanism and attempt to dissect it. Maybe we should see if we can find it by constructing smaller councils and working up that ladder. There definitely exists an Alice-Bob cosmopolitanism for which we can implement the earlier partial membership rule such that Alice gets (12/22) / (12/22 + 3/4) of a vote within it and Bob gets (3/4) / (12/22 + 3/4), amounting to starting nodes of sizes 8/19 and 11/19. Unsurprisingly we find the same equilibrium point between those two, since stable equilibrium is not dependent on the starting sizes, but this time the entire system of Alice-Bob cosmopolitanism is smaller. Only (12/22 + 3/4) / 2 = 57/88 parts of reality fluid are passing through it. Still, it is a larger voter than either Alice or Bob alone and Alice and Bob both have more than 50% buy-in with regards to it. Alice-Bob cosmopolitanism is thus stable as well as beneficial. Carol-Dean cosmopolitanism is also stable and beneficial, but it cannot stably or beneficially be part of any larger cosmopolitanism. Alice-Bob cosmopolitanism on the other hand is itself cosmopolitan. It still cares about other agents and could be a part of a larger network. Getting back to the cosmopolitanism of aiding all replicators and what it would mean for replicators consisting of other replicators: It seems that all of us very much might be the shared cosmopolitanism of all of our cells in exactly this manner: A thing with more agency than each of them, guarding all of their well-being and still capable of engaging in greater networks. I would not be surprised to find the true name of emergent consciousness somewhere around here. We can carry these partial mergers as high as they will go, not to some grand council of cosmopolitanisms but to a grandest council of cosmopolitanisms with a well-defined prior over voice. This is the largest thing whose agency is not cared about by anyone outside of it but which may care about the agency of outsiders.
This is the ultimate benevolent cooperation-group.
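The Alice-Bob arithmetic above can be checked with exact fractions. This is only a sketch of the partial membership rule as described in this section; `council_votes` and `council_size` are hypothetical names, and reading "parts of reality fluid passing through" as the average outward caring of the members is my interpretation of the 57/88 figure.

```python
from fractions import Fraction

# Share of caring each agent assigns to others, as given in the post.
outward = {"Alice": Fraction(12, 22), "Bob": Fraction(3, 4)}


def council_votes(outward_caring):
    """Normalize outward caring so that the votes within the council sum to one."""
    total = sum(outward_caring.values())
    return {name: share / total for name, share in outward_caring.items()}


def council_size(outward_caring):
    """Average outward caring of the members (assumed meaning of council size)."""
    return sum(outward_caring.values()) / len(outward_caring)


votes = council_votes(outward)
size = council_size(outward)
print(votes["Alice"], votes["Bob"], size)  # 8/19 11/19 57/88
```

A despot with zero outward caring would get a vote of zero under the same function, which matches the claim that chickens and other despots have no effective vote.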

Getting what we want

Once the council has calculated its prior, the member cosmopolitanisms have allocated their fair-seeming agencies and all things with agency have directed their allotted reality fluid into whatever they personally find worth running, there probably are still terminal nodes. Things which are cared about, but which do not themselves care. There are despots like our hypothetical chickens who get RF both from being considered moral patients by members of the cosmopolitan council and from showing up in other agents’ utility distributions. There are things like the crystal on my desk, which might not be considered moral patients by anyone, but which receive reality fluid through appearing in someone’s utility distribution. There are even multiple ways in which I might care about the crystal. I might care about it existing as an object regardless of whether I am perceiving it (despite me being the only person who cares), or I might just care about my experience of seeing the crystal from time to time, in which case it may be more economical to allocate RF to that experience instead of creating a whole permanent thingy, which won’t be heard if it ever falls in the forest. Then there are objects like sunsets, which may not be moral patients and thus may not will themselves into existence, but which are beloved by so many that even if all moral patients only care about their own experience of sunsets, it may still be more economical to allocate RF to the real deal than to an immense number of personal micro-sunsets. All of this is probably quite infrastructure-dependent, and there might be some unintuitive ways in which these can and cannot be folded in any given benevolent eschaton.

3 comments

comment by Vladimir_Nesov · 2024-11-07T17:28:47.575Z · LW(p) · GW(p)

and makes it satisfy the desires of all the things which ought be happy

Some things don't endorse themselves being happy as an important consideration.

comment by pleiotroth (reply to Vladimir_Nesov) · 2024-11-07T22:48:53.428Z · LW(p) · GW(p)

Fair, bad phrasing. I changed it to "ought have some steering power" which is the sort of language used in the rest of the post anyway.

comment by ihatenumbersinusernames7 · 2025-04-13T13:51:16.305Z · LW(p) · GW(p)

A worthwhile cosmopolitanism should probably fight Moloch in this regard and extend its own agency to things which do not wield the tools of autopoesis themselves.

[warning: anthropomorphisation of autopoetic processes]

*autopoiesis, and autopoietic (brought to you by the pedantic spelling police)