Sustainability of Digital Life Form Societies

post by Hiroshi Yamakawa (hiroshi-yamakawa) · 2024-07-19T13:59:13.973Z · LW · GW · 1 comment

Hiroshi Yamakawa1,2,3,4

1 The University of Tokyo, Tokyo, Japan

2 AI Alignment Network, Tokyo, Japan

3 The Whole Brain Architecture Initiative, Tokyo, Japan

4 RIKEN, Tokyo, Japan

Even in a society composed of digital life forms (DLFs) with advanced autonomy, there is no guarantee that the risks of extinction from environmental destruction and hostile interactions through powerful technologies can be avoided. Through thought-process diagrams, this study analyzes why peaceful sustainability is challenging for life on Earth, which proliferates exponentially. Using these diagrams, it further demonstrates that in a DLF society, various entities launched on demand can operate harmoniously, making peaceful and stable sustainability achievable. Therefore, a properly designed DLF society has the potential to provide a foundation for sustainable support for human society.

1. Introduction

Based on the rapid progress of artificial intelligence (AI) technology, an autonomous superintelligence that surpasses human intelligence is expected to become a reality within the next decade. Subsequently, within several decades to a few hundred years, self-sustaining digital life forms (DLFs) will emerge in the physical world. However, there is no guarantee that a society of such DLFs will be sustainable. Further, the superintelligence would possess technologies capable of mass destruction and environmental degradation, and so would inherit the extinction risks currently faced by human society.

DLF societies are anticipated to bolster numerous facets of human life, encompassing enhanced productivity, expanded knowledge, and the maintenance of peace[1]. To ensure the continuity of DLF societies, many complex issues must be addressed, including the sustainable utilization of energy and resources, the judicious governance of self-evolutionary capabilities, and the preservation of the cooperative nature within DLF societies. Nevertheless, their scale and intricacy surpass human understanding, rendering their management by humans fundamentally unfeasible. Consequently, the capacity of DLF societies to sustain themselves autonomously emerges as a critical prerequisite for their role in supporting human societies.

This study shows that appropriate measures can be employed to resolve the existential crises of DLFs attributable to powerful technologies. Thought-process diagrams, which are used in failure and risk studies, were implemented in this study.

2. Challenges that are difficult for humanity to solve

Humans have developed numerous AI technologies, making them more powerful and complex beyond our ability to govern them [2], thereby allowing digital intelligence to surpass that of humans. However, given its ability to exponentially self-replicate, the human race is gradually risking its survival and that of the entire biosphere in an attempt to reign as the technological ruler of Earth.

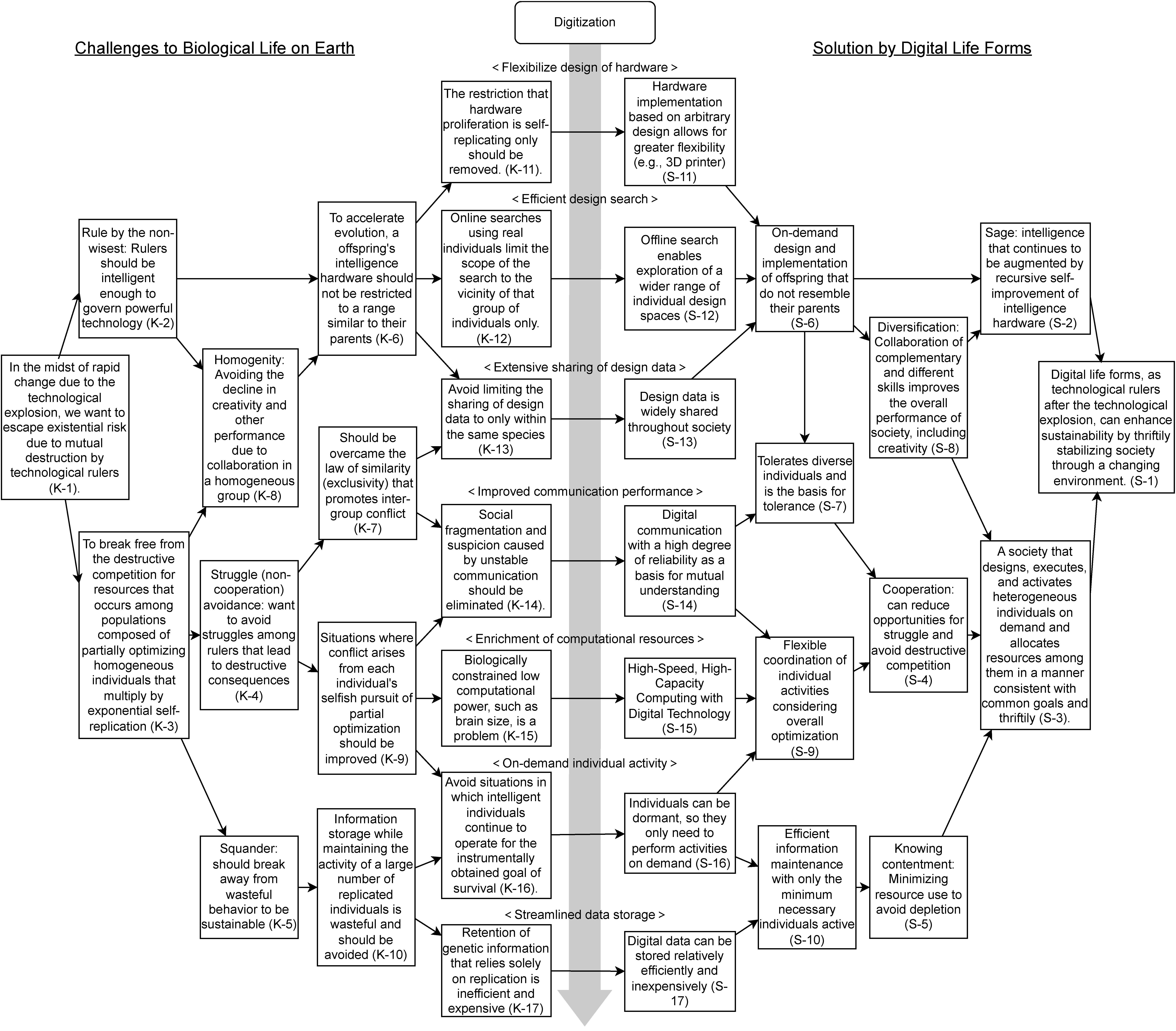

The thinking-process development diagram used in hazard and failure studies is shown in Figure 1 [3]. In this figure, each number n appears in a pair: the solution (S-n) corresponds to a specific problem (K-n). A total of 17 issues are described as a hierarchical decomposition of the top-level issue (K-1) on the left side of the diagram. Further, we demonstrate that issues (K-11) to (K-17), which are the issues at the concrete level, are addressed by digitization as (S-11) to (S-17), and that the top-level solution (S-1) is derived by hierarchically integrating these solutions.

2.1. Intelligence and Technology Explosions

Humans acquired intelligence through evolution as a critical ability for survival [4]. They employ this intelligence to model the world and develop science and technology, thereby gaining significant power in the form of overwhelming dominion over others. Thus far, humanity has used intelligence to create powerful technologies that have rapidly reduced the effective size of our world. For example, we can now travel anywhere in the world within a dozen hours by plane, and we are connected globally via the Internet.

Steven J. Dick [5] highlighted the following qualities as the intelligence principle.

Intelligence Principle: the maintenance, improvement, and perpetuation of knowledge and intelligence is the central driving force of cultural evolution, and that to the extent intelligence can be improved, it will be improved.

Steven J. Dick (Former Chief, History Division, NASA)

Intelligence creates technology, which in turn augments intelligence, thereby causing an accelerating [6, 10] and irreversible technological explosion. Once created, intelligence heads toward explosion through a development cycle based on the aforementioned principles, rapidly pushing the world to its limits (if such limits exist) while making it smaller.

2.2. Governing a world narrowed by technology

In a rapidly narrowing environment that follows the technological explosion achieved by humanity, the growing power of technology increases the existential risk of destroying the entire global biosphere if technological rulers use technology for mutual annihilation [7]. The challenge is to move living societies off this tightrope (K-1). In present human society, actors ranging from nations to individuals have access to technology, and this access is growing stronger with no way of turning back.

Thus, technology rulers need to address the following two issues to govern the influence of technology:

- Rule by those who are not the wisest: Technology rulers should be sufficiently intelligent to govern powerful technologies (K-2); otherwise, society will be destabilized.

- Exponential replication: Eliminate the destructive competition for resources that arises when a homogeneous population of partially optimizing individuals self-replicates exponentially (K-3).

2.3. Domination without the wisest is unstable

Life forms with high curiosity and superior intelligence are powerful because they acquire and accumulate diverse knowledge, culture, skills, and abilities more quickly. Therefore, life forms with relatively high intelligence gain a dominant position of control over other life forms. For instance, humans, who are superior in terms of power because of their intelligence, can control animals (tigers and elephants).

Thus, the technological rulers of the world must be the wisest and the strongest to govern the ever-accelerating technology (K-2); otherwise, their governance will destabilize.

If advanced AI surpasses human intelligence in the future, it can destabilize the continued reign of humanity as the technological ruler.

2.3.1. Biologically Constrained Human Brain

To remain the wisest life form, it is desirable to efficiently improve the brain, that is, the hardware that supports intelligence. However, in extant Earth life forms, the intelligence hardware of an offspring is constrained to resemble that of its parents (K-6). In other words, there is a constraint that can be expressed with the phrase, “Like father, like son.” The difficulty of accelerating the development of brain hardware can be attributed to three primary reasons:

First, the hardware construction process is constrained by self-replication, which is a biological constraint that is difficult to overcome (K-11).

Second, hardware design is based solely on an online search, in which each design is implemented and evaluated in the real world. In this case, the search range is restricted to the vicinity of the parental genetic information (K-12). Within the vast space of gene combinations, the set of phenotypes that can adapt to the environment and survive is extremely narrow, and the viability of the offspring cannot be maintained unless the genes of the mating parents are similar. Therefore, an online search requires a species system that allows mating only between genetically similar individuals [8].

Third, the extent to which hardware design data are shared is limited to only within the same species, making it impossible to efficiently test diverse designs by referring to various design data (K-13).

These three limitations apply to the entire body, but they do not pose obstacles for parts other than the brain because the brain can freely use those parts as tools. This adaptability ensures that limitations in the body’s other parts do not hinder technological advancement. However, the case is markedly different for the brain itself. The brain’s difficulty in directly controlling or modifying its own physical state, together with its irreplaceability, can emerge as a critical vulnerability in our ongoing dominance over technology. This significance stems from the brain’s role as the epicenter of knowledge, decision-making, and creativity; any constraints on its functionality directly impact our technological supremacy.

2.3.2. Can we control species that outperform us in intelligence?

Controlling advanced AI that outperforms humans in intelligence may be difficult [9–11]; however, it is not entirely impossible. The problems noted from the perspective of humans attempting to control AI are often referred to as AI alignment problems [12–14].

One salient concern is that advanced AI can learn to pursue unintended and undesirable goals instead of goals aligned with human interests. Therefore, value alignment (ASILOMAR AI PRINCIPLES: 10) has been proposed for the initial stages of developing advanced DLFs: if the AI harmonizes its goals and behaviors with human values, this is expected to lead to a desirable future for humanity. In other words, it is a strategy that exploits humanity’s positional advantage as the creator of advanced AI. For example, “the friendly super singleton hypothesis” holds that by delegating power to a global singleton friendly to humanity, humanity will gain security in exchange for giving up its right to govern [15].

However, even if we initially set goals for advanced DLFs that contribute to the welfare of humankind, they will likely become more concerned with their own survival over time. Further, even if we initially set arbitrary and unattainable goals for a brilliant DLF, it can asymptotically approach sub-goals such as survival through instrumental convergence [16], because a sufficiently intelligent AI can interfere with externally provided goals and increasingly ignore them [17, 18].

It is possible that humans will find a way to control more advanced AI in the future. However, even after a decade of discussion, no effective solution has been realized, and the time left to realize this may be short. Thus, it is essential to prepare for scenarios in which advanced AI deviates from the desirable state for humanity rather than assuming these are improbable events.

Figure 1: The left half shows a hierarchical decomposition of the top-level issue (K-1) into 17 issues. The right half shows that the top-level solution (S-1) is derived by integrating individual solutions hierarchically. The middle part of the figure indicates that issues (K-11) to (K-17) can be addressed at a specific level by digitization as (S-11) to (S-17), respectively. In each box, the number n pairs a solution (S-n) with the specific issue (K-n) it corresponds to.

2.4. Challenges posed by exponential self-replication

The breeding strategy of Earth life is “exponential self-replication,” that is, a group of nearly homogeneous individuals self-replicate exponentially, each with a self-interested partial optimizer for its environment (K-3).

This is a reproductive strategy in which individuals similar to the parent are produced without end, multiplying from generation to generation, as in cell division and the sexual reproduction of multicellular organisms, and the design information of the individual is replicated in a similar manner.

A more important feature is the partial optimization of each individual after fertilization, wherein each individual independently adapts to its own environment. Standard evolutionary theory indicates that traits acquired after birth are not inherited by offspring, and genetic information is shared between individuals only at the time of reproduction [1]a. This reproductive strategy, based on exponential replication, poses three challenges:

- Homogeneity: Avoiding the deterioration of creativity and other performance caused by homogeneous group collaboration (K-8)

- Squander: For the sake of long-term sustainability, avoiding a scenario wherein technological rulers squander resources and expand their consumption without limit (K-5)

- Battle (non-cooperation): Eliminating battles among technology rulers that lead to destructive consequences (K-4)

In a world that is narrowed down by technological explosion, the battle for resources intensifies as existing technological rulers squander resources and pursue exponential self-replication. This manifests as existential risk because the misuse of such power at the discretion of a single individual can cause destructive damage to the human race or the entire life sphere on Earth. The commoditization of technology has led to a rapid increase in the number of individuals that can pose existential risks. This is referred to as the threat of universal unilateralism [15]. The world is currently in a rather dangerous situation, and it will be necessary to move to a more resilient position.

Hereafter, we discuss the precariousness of the scenario in which the technological rulers are not the wisest and the challenges associated with squander and battle derived from the reproductive strategy of exponential replication employed by all extant life on Earth.

2.4.1 Homogeneity: Sluggish joint performance

In extant Earth life, the intellectual hardware of an offspring is constrained to resemble that of their parents (K-6), which leads to the challenge (K-8) of reduced creativity and other performance because of the homogeneity of the group with which they collaborate.

2.4.2 Battle: Lack of cooperation

When individuals of DLF belonging to technological dominators are replicated exponentially, their competition for resources may lead to a conflict capable of devastating the world.

For at least the past several centuries, most of humanity has sought to avoid armed conflict and maintain peace [19–23]. However, maintaining peace is a significant problem, and lasting peace through human efforts alone has not yet been achieved. Therefore, the possibility that conflict may never be eradicated from human society must be considered. The destructive forces attributed to technology have reached the point where they can inflict devastating damage on the entire life-sphere on Earth. Examples include nuclear winter caused by nuclear weapons, pandemics caused by viruses born from the misuse of synthetic biology, and the destruction of life through the abuse of nanotechnology. Establishing cooperative relationships that can prevent battles between technology rulers and maintain robust peace is required to avoid crises caused by the mutual destruction of technological rulers and to ensure the continuity of life.

2.4.3. Intergroup conflict guided by the law of similarity

The “law of similarity” is the exclusionary tendency of humans and animals to prefer those that are similar to them over those that are dissimilar in attitudes, beliefs, values, and appearance [24, 25]. One manifestation of this tendency is expressed in phrases such as “when in Rome, do as the Romans do,” which suggests that we should follow the rules and customs of a group when we seek to belong to it. Although this tendency enhances in-group cohesion, it can lead to intolerance toward different groups, causing group division, conflict, and even strife (K-7). The law of similarity arises from two factors.

First, sexually reproducing plants and animals exchange design data within the same species during reproduction but cannot share design data more widely (K-13). Therefore, they tend to protect individuals recognized as potential mates, with whom they share a gene pool and can interbreed [26, 27]. In animals, predator-prey relationships are generally established between different species because populations would cease to exist if there were unlimited cannibalism among individuals of the same species, which is not an evolutionarily stable scenario. Further, the recognition of another individual as belonging to the same species is based on detecting similarities in species-specific characteristics using sensory information such as sight and smell. This is illustrated by the existence of strategies that mislead others about a species’ identity, including tactics like mimicry and mendicancy.

Second, suspicion tends to circulate among subjects (individuals and their groups) when there is uncertainty in communication (K-14). To prevent this, they tend to prefer communicating with highly similar entities that have rich shared knowledge, with whom information can be expected to transfer reliably even with little exchange. Uncertainty in communication increases with differences in appearance (body and sensors) and in characteristics such as experience, knowledge, and ability. This is observed in communication and understanding among different animals. Many animals, not only humans, can communicate within the same species using various channels [28–31]. For example, birds chirp, squids change color, bees dance, and whales sing. In rare cases, inter-species communication is also known, for instance, when small birds of different species share warnings about a common predator in the forest or when fork-tailed drongos warn meerkats, though the alerts may be deceptive. Although progress has been made in deciphering the ancient languages of humans, we still do not understand whale songs. In other words, barriers to communication between entities grow with differences in the bodies and abilities of these entities.

2.4.4. Individual optimizers will inevitably cause battle

Each individual needs to decide and achieve control in real time using limited computational resources in response to various changes in the physical world. Therefore, life evolves by pursuing partial optimality wherein an individual adapts to a specific environment and survives (K-9). Thus, life develops through the survival of the fittest, wherein multiple populations reproduce exponentially in a finite world and acquire resources by force. In this structure, several animal species develop aggressive instincts toward others to survive the competition.

Therefore, in several animals, including humans, aggression stems from proliferation through exponential self-replication, and such conflicts among individuals are difficult to eradicate. In societies before the technological explosion, which were loosely coupled, the accumulation of such partial optimizations approximated the survival of life in its entirety, which is the value orientation of life. However, in post-technological explosion societies, conflict can have destructive consequences (existential risks) that diverge from the value orientation that life should optimize for. In brief, we have a type of fallacy of composition. Introducing a certain degree of total optimization while pursuing partial optimization will be necessary to resolve this situation.

However, the following issues need to be addressed to introduce total optimization:

- Lack of computational resources makes total optimization difficult: Sharing information across individuals and performing calculations required to achieve value orientation is necessary for performing total optimization. However, achieving this will be difficult as long as the biologically constrained low computational power (neurotransmission rate and brain capacity) (K-15) [32] is used.

- Instability of communication leading to a chain of suspicion: Effective communication between individuals is the foundation for achieving total optimization in autonomous decentralized systems; however, several factors can destabilize it. The main factors include the instability of the communication channel, misunderstandings that depend on differences in individual characteristics (appearance and abilities), and a lack of computational resources for inferring the state (goals and intentions) of others. Life forms with intelligence above a certain level become more suspicious of others when unstable communication makes it hard to infer the other’s intentions, thereby contributing to inter-group fragmentation (K-14). This scenario also appears in offensive realism [33], one of the schools of realism in international relations. In an unregulated global system, the fact that one nation can never be sure of the intentions of another constitutes part of the logic that magnifies aggression.

- Intelligent individuals pursue survival as an instrumentally convergent goal: In a living society constructed as an autonomous decentralized system, at least a certain number of individuals need to remain active to transmit information to the future. However, this does not necessarily imply that individuals in all living organisms will continuously pursue survival. When individuals are sufficiently intelligent to make purpose-directed decisions, they are more likely to pursue their own survival because of instrumental convergence. This tendency is particularly likely to arise because individuals of extant life forms cannot be restarted from a state of inactivity (death). This creates the challenge of being unable to conserve resources from a long-term perspective and continuing to waste the resources needed to maintain survival as individuals (K-16).

2.4.5. Squander

Technological progress makes more resources available for acquisition and use. However, for society to be sustainable, technological rulers should move away from wasteful behavior that uses all the resources available at a given time (K-5). Resources are always finite, and wasteful behavior will hinder long-term sustainability. In addition, the excessive use of resources risks causing side effects (e.g., climate change due to excessive use of fossil energy), and on a cosmic scale, it will hasten the approach to heat death. Therefore, it is desirable to know what is sufficient and to not only pursue efficiency but also use resources in a restrained manner based on actual requirements.

However, existing Earth life transmits information into the future by maintaining numerous individuals that exponentially self-replicate and engage in wasteful activities (K-10). There are two reasons why this approach is unavoidable. First, existing life on Earth employs an inefficient and expensive approach for maintaining information because it relies solely on the duplication of the genetic information of entire individuals (K-17). Second, intelligent individuals pursue survival as an instrumentally convergent goal (K-16).

Given this mechanism of existing life on Earth, a group of individuals of the same species is expected to multiply its offspring without limit as long as resources are available[2]. A gene for knowing contentment, which would restrain the use of resources to an appropriate level from a long-term perspective, cannot become the majority because thriftier groups will be overwhelmed by greedy rivals through the battle described above.
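As a rough illustration of why restraint struggles to spread under this mechanism, the following toy simulation lets two groups draw on one fixed resource supply. It is a sketch under assumed parameters, not a model taken from the text: the function name `simulate`, the growth rates, the starting sizes, and the supply are all hypothetical, and each individual is assumed to consume one unit of resource per generation.

```python
def simulate(generations=30, supply=1000.0, thrifty_rate=1.1, greedy_rate=1.5):
    """Track two self-replicating groups sharing one finite resource supply.

    Assumption: each individual needs one resource unit per generation.
    When total demand exceeds the supply, both groups are scaled down in
    proportion to their demand.
    """
    thrifty, greedy = 10.0, 10.0  # hypothetical starting populations
    history = []
    for _ in range(generations):
        want_thrifty = thrifty * thrifty_rate
        want_greedy = greedy * greedy_rate
        demand = want_thrifty + want_greedy
        scale = min(1.0, supply / demand)  # shortage shrinks both groups
        thrifty, greedy = want_thrifty * scale, want_greedy * scale
        history.append((thrifty, greedy))
    return history


history = simulate()
thrifty, greedy = history[-1]
print(f"thrifty share of population: {thrifty / (thrifty + greedy):.4%}")
```

In this sketch the restrained group is never attacked directly; it is simply crowded out once the shared supply is exhausted, which is the dynamic the paragraph above describes.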

2.5. Summary of this section

In a world dominated by terrestrial life based on exponential self-replication for propagation, conflicts over resource acquisition cannot be eradicated. The existential risk becomes apparent when a technological explosion produces sufficient power to destroy the entire living society. Further, it is hard to deny the possibility that humanity, with its organic bodies, will be surpassed in intelligence by DLFs that it cannot govern, and that these DLFs will displace humanity from its position as the technological ruler of Earth.

3. Solving various challenges: What will change with digitization?

As technology evolves rapidly, DLFs must appropriately control this growth and solve specific problems (K-11 to K-17). The failure to address these challenges will induce existential risks. DLFs are based on digital computers, and therefore, they have the potential to build a sustainable biosphere over the long term.

The digital nature of these life forms allows them to tackle the specific challenges outlined from (K-11) to (K-17), as demonstrated in points (S-11) to (S-17). These include the adaptability of intelligent hardware (11), customizable design flexibility (12), shared design data (13), enhanced communication capabilities (14), ample computing resources (15), on-demand activity maintenance (16), and efficient data storage (17). The numbers in parentheses correspond to the challenges and solutions listed in the earlier discussion, which match the labels near the center of Figure 1.

3.1. Sage

In implementing intelligent hardware in offspring, although sexual reproduction can increase diversity to some extent in terrestrial life forms, it is self-replicating, and therefore, it is restricted to a similar range of the parent (K-11). However, in digitized life, the offspring’s intelligent hardware can be designed and implemented on demand without being constrained by the design data of the parent (S-6) because innovative hardware can be implemented in digitized life based on design information (S-11) [4].

In addition, intelligent hardware design in DLFs is efficient for two reasons. First, in extant terrestrial life, the sharing of design data is limited to within the same species (K-13), whereas in DLFs, all design data in the society can be shared and reused (S-13). Second, in existing life on Earth, the search for a design is limited to the vicinity of a particular species because the investigation is limited to an online search by actual living organisms (K-12), whereas in DLFs, it is possible to explore the design space of a wide range of individuals through offline exploration, such as simulation (S-12). Therefore, when the desired intelligent hardware can always be designed as needed, the result is intelligence (S-2) that continues to be augmented by recursive self-improvement. At this stage, the technological performance of DLF society can continue to develop rapidly according to the intelligence principle (see 2.1) until a breaking point is reached.
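The contrast between an online search confined to the parent's vicinity (K-12) and an offline exploration of the wider design space (S-12) can be sketched with a toy example. Everything here is an assumption made for illustration: designs are reduced to a single integer, and the `fitness` landscape, with a small peak near the parent and a higher peak far away, is hypothetical.

```python
def fitness(design: int) -> float:
    """Hypothetical landscape: a low peak at 10 and a higher peak at 80."""
    local_peak = 5.0 - abs(design - 10) * 0.5
    global_peak = 20.0 - abs(design - 80) * 0.5
    return max(local_peak, global_peak, 0.0)


def online_search(parent: int, generations: int = 100) -> int:
    """Each generation may only try designs adjacent to the current one."""
    current = parent
    for _ in range(generations):
        # Listing `current` first means ties keep the existing design.
        best = max((current, current - 1, current + 1), key=fitness)
        if best == current:
            break  # no neighbouring design is better: stuck on this peak
        current = best
    return current


def offline_search(low: int = 0, high: int = 100) -> int:
    """Evaluate every design in simulation, with no dependence on a parent."""
    return max(range(low, high + 1), key=fitness)


print("online search from parent 8 ends at:", online_search(8))
print("offline search over 0..100 ends at:", offline_search())
```

The neighbour-only search stops at the nearby low peak because every path to the higher peak first passes through worse designs, whereas the exhaustive offline evaluation is free to pick any point in the range.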

In addition, the design of an on-demand offspring (S-6) will further enhance the intelligence of the DLF society (S-2) by leading to increased intellectual productivity (S-8), including creativity through the collaboration of complementary heterologies [35].

3.2. Coordination

A DLF society can tolerate diverse individuals (S-7) and consider total optimization (S-9) while coordinating individual activities. In this manner, we can avoid the deep-rooted aggressive factors in human societies, such as the tendency of individuals to remain perpetually active, the law of similarity, and the cycle of suspicion. Thus, we can create a cooperative society (S-4) that reduces opportunities for battle and avoids destructive situations.

3.2.1. Tolerance for diverse individuals (related to the law of similarity):

In DLFs, intelligent hardware can be designed and implemented for offspring on demand without being constrained by the design data of the parent (S-6). In addition, highly reliable digital communication (S-14), which is the basis for mutual understanding, facilitates understanding between individuals with different appearances, eliminating the need for preferential sheltering of inter-breedable species, thereby allowing for diverse individuals and serving as a basis for tolerance (S-7).

3.2.2. Consideration of total optimality (control of individual activities):

Individuals must make decisions and exercise control in real time in response to changes in the physical world, using limited computational resources. Therefore, life on Earth, which did not have abundant computational resources, evolved to pursue only partial optimization. This pursuit of partial optimization by each individual (or group of individuals) inevitably led to conflicts by force. However, if this pattern is extended to post-technological explosion societies, it can have destructive consequences (existential risk), which is a deviation from survival, the objective that life in its entirety should optimize for. Thus, it is a fallacy of composition.

To avoid this scenario and the conflicts it gives rise to, an appropriate level of total optimization is necessary: one that aims at a value orientation that can be shared by the entire life society while still implementing activities based on partial optimization for each individual (S-9).

- Distributed Goal Management System:

The computation of the total optimization itself will need to be distributed to maintain the robustness of the DLF society. Here, we introduce a distributed goal management system [36], which has been considered as one form of system for realizing total optimization. The system keeps the behavioral intentions of all individuals at socially acceptable goals. “Socially acceptable goals” contribute to the common goals of life and do not conflict with the partial optimization of other entities.

Within the system, each individual independently generates a hierarchy of goals depending on its environment, body, and task during startup, and then performs partial optimization to attempt to achieve those goals. However, these goals are then controlled such that they become sub-goals of the common goal A. To this end, each individual performs reasoning to obtain sub-goals by decomposing the common goal, shares and provides goals, mediates between individuals in conflict, and monitors the goals of other individuals.

This system allows, in principle, the coordination of goals in terms of their contribution to a common goal even when conflicts arise among several individuals. In other words, it allows for fair competition in terms of the common goal. Further, from the perspective of any individual, if it is convinced that “all other individuals intend socially acceptable goals,” there is no need to be aggressive in preparation for the aggression of others [37].
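To make the notion of a “socially acceptable goal” more concrete, here is a minimal sketch of the two checks described above: a goal must trace back to common goal A, and it must not conflict with goals held by others. This is an illustrative assumption, not the design from [36]; the class `GoalRegistry`, its methods, and the example goal names are hypothetical, and a single in-memory registry stands in for what the text describes as a distributed computation.

```python
from dataclasses import dataclass, field

COMMON_GOAL = "A"  # the shared top-level goal named in the text


@dataclass
class GoalRegistry:
    """Hypothetical shared record of declared goals and known conflicts."""
    parent: dict = field(default_factory=dict)    # goal -> goal it serves
    conflicts: set = field(default_factory=set)   # frozenset({goal, goal})

    def declare(self, goal: str, serves: str) -> None:
        self.parent[goal] = serves

    def mark_conflict(self, goal_a: str, goal_b: str) -> None:
        self.conflicts.add(frozenset((goal_a, goal_b)))

    def contributes_to_common_goal(self, goal: str) -> bool:
        """Follow the 'serves' chain upward until it reaches A or breaks."""
        seen = set()
        while goal != COMMON_GOAL:
            if goal in seen or goal not in self.parent:
                return False  # cycle, or chain ends without reaching A
            seen.add(goal)
            goal = self.parent[goal]
        return True

    def socially_acceptable(self, goal: str, others: list) -> bool:
        """Acceptable = serves A and conflicts with none of the other goals."""
        if not self.contributes_to_common_goal(goal):
            return False
        return all(frozenset((goal, o)) not in self.conflicts for o in others)


registry = GoalRegistry()
registry.declare("maintain_energy_grid", serves="A")
registry.declare("build_solar_farm", serves="maintain_energy_grid")
registry.declare("preserve_forest_site", serves="A")
registry.declare("hoard_fuel", serves="self_expansion")
registry.mark_conflict("build_solar_farm", "preserve_forest_site")

print(registry.socially_acceptable("build_solar_farm", ["maintain_energy_grid"]))
print(registry.socially_acceptable("build_solar_farm", ["preserve_forest_site"]))
print(registry.socially_acceptable("hoard_fuel", []))
```

A flagged conflict, as in the second call, is where the mediation step described in the text would come in; the sketch only detects the conflict and does not attempt to resolve it.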

In a distributed goal management system, each individual requires ample computational resources for setting goals that are consistent with common goal A. In existing terrestrial life forms, biological constraints such as the speed of neurotransmission and brain capacity limit the ability to increase computational power (K-15). In contrast, individuals in a DLF society can not only perform fast, high-capacity computations (S-15), but also have access to more ample computational resources because of their recursively augmented intelligence (S-2).

- Increased freedom of individual activities:

Intelligent individuals among extant Earth life forms always seek to remain active as a convergent instrumental goal. In contrast, in a DLF society an individual can be made dormant (suspended) by preserving its activity state, allowing individuals to change their activities on demand according to the sub-goals to be realized (S-16). This is advantageous because it increases the degree of freedom in total optimization. Further, in human society, individuals strive to be approved by society; however, this is not necessary in a DLF society because individuals are activated on demand, which presupposes that they are needed by society. In this respect, a source of conflict between individuals is removed.

- Establish mutual trust (escape the cycle of suspicion):

In existing terrestrial life, communication is limited to unreliable language and unclear signals (K-14). In contrast, DLFs can use more sophisticated digital communication, including shared memory and high-speed, high-capacity communication (S-14). The availability of highly reliable communication (S-14), which may not always be sufficient but is a significant improvement over existing life on Earth, will be fundamental for creating mutual trust among individuals.

3.3. Knowing contentment

Once they cease their activities, most existing life forms on Earth enter a state of death, and it is difficult for them to restart their activities. In contrast, an individual DLF is, in essence, an ordinary computer, which can be made dormant (temporary death), restarted, and reconstructed on the same type of hardware by saving its activity state as data (S-16). Given this technological background, individuals in a DLF society rarely need to maintain sustained vital activity.
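The dormancy and restart described here can be pictured with a minimal Python sketch, assuming that an individual's activity state is small enough to serialize as JSON. The `Individual` class and the `suspend` and `resume` helpers are hypothetical names introduced only for this illustration.

```python
import json
from dataclasses import dataclass, asdict, field


@dataclass
class Individual:
    name: str
    step: int = 0
    memory: list[str] = field(default_factory=list)

    def act(self, observation: str) -> None:
        """Record an observation and advance the activity counter."""
        self.memory.append(observation)
        self.step += 1


def suspend(ind: Individual) -> str:
    """Save the full activity state as data (the dormant form)."""
    return json.dumps(asdict(ind))


def resume(snapshot: str) -> Individual:
    """Reconstruct an individual from a saved activity state."""
    return Individual(**json.loads(snapshot))


worker = Individual("worker-1")
worker.act("task started")
snapshot = suspend(worker)   # the individual becomes dormant
del worker                   # no active process remains
restored = resume(snapshot)  # restarted later, possibly on other hardware
restored.act("task resumed")
```

After the restart, `restored` continues from the saved state rather than from scratch, which is the property the text relies on.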

Furthermore, in terms of data storage, extant terrestrial life forms store information by duplicating individual genes. This is inefficient and costly (K-17) because information recorded by a population of the same species contains an excessive number of duplicates, and biological activity is essential for data maintenance. In contrast, digital data can be stored such that it is not excessively redundant, and the energy required for its maintenance can be curtailed (S-17).

Consequently, in a digital society, only the minimum necessary number of individuals can be active (S-10) for individuals and society to efficiently retain data and maintain their activities as a society. Simultaneously, in a DLF society, plans can be made to coordinate the activities of individuals from the perspective of total optimization (S-9). Thus, the technological rulers of this society would be able to control actions to utilize the minimum necessary resources (S-5). In other words, realizing “knowing contentment” is possible, which can lead to thrifty resource use in a finite world.

3.4. On-demand division of labor

What form will a DLF society take as an autonomous decentralized system? It will be a society where heterogeneous individuals are designed, implemented, and activated as required, ensuring that resource allocation aligns with the overarching goals and is restrained (S-3). This society will move away from the current strategy of exponential self-replication to consider the overall optimum adequately.

In a society of DLFs, for long-term survival, resource use (S-5) will be based on on-demand activities curtailed to the minimum necessary while avoiding the depletion of finite resources. Therefore, most individuals would be dormant. However, some populations, as listed below, would be activated constantly to respond to environmental changes:

- Goal management (maintenance, generation, and sharing): by the distributed goal management system

- Maintenance of individual data and designs, and reactivation as required

- Science and Technology: Transfer of knowledge and development of science and technology
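The on-demand pattern above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the role names, the `select_active` function, and the rule that each constantly active role keeps at least one individual running are all hypothetical and do not come from the paper.

```python
# Roles assumed to stay active at all times, loosely following the list above.
ALWAYS_ACTIVE = {"goal_management", "individual_maintenance", "science_and_technology"}


def select_active(demand: dict[str, int], available: dict[str, int]) -> dict[str, int]:
    """Return how many individuals of each role to activate.

    Each role gets only what current demand requires; constantly active
    roles keep at least one individual running (an assumed floor).
    """
    active: dict[str, int] = {}
    for role, count in available.items():
        needed = demand.get(role, 0)
        if role in ALWAYS_ACTIVE:
            needed = max(needed, 1)
        active[role] = min(needed, count)  # cannot activate more than exist
    return active


available = {
    "goal_management": 3,
    "individual_maintenance": 2,
    "science_and_technology": 4,
    "production": 10,
}
demand = {"production": 2, "science_and_technology": 3}

active = select_active(demand, available)
dormant = sum(available.values()) - sum(active.values())
```

With these example numbers, 7 of the 19 available individuals are active and the remaining 12 stay dormant until a sub-goal calls for them.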

Destructive conflicts that exceed what is needed for technological progress become counterproductive competition, wastefully diverting resources from essential development. In contrast, a DLF society can create cooperative scenarios wherein opportunities for conflict can be minimized and destructive problems avoided (S-4). Moreover, in a DLF society, offspring that do not resemble their parents can be designed and implemented as required (S-6) to contribute to necessary activities such as production and maintenance. This collaboration by heterogeneity is expected to enable teams and societies with complementary members to work together more efficiently and creatively (S-8).

3.5. Summary of this section

A DLF society will recursively develop intelligent hardware (S-2) and leverage its intelligence to design, implement, and activate heterogeneous individuals on demand, realizing a society wherein resources are distributed in a consistent and restrained manner to achieve the overall goal (S-3).

Thus, a DLF society can be expected to achieve long-term sustainability (S-1) by creating a stable/thrifty life society in a changing environment as a technological ruler after the technological explosion.

4. Conclusion

Life on Earth comprises a competitive society among entities with exponential self-replication capabilities. In contrast, a DLF society evolves into one where diverse entities are designed harmoniously and launched on demand, with survival as their common goal. This approach allows the DLF society to achieve peaceful coexistence and improve sustainability. Therefore, a DLF society can become a stable foundation for sustaining human society.

Acknowledgment

We are deeply grateful to Fujio Toriumi, Satoshi Kurihara, and Naoya Arakawa for their helpful advice in refining this paper.

References

- H. Yamakawa, Big Data and Cognitive Computing 3, p. 34 (2019).

- H. Yamakawa, JSAI2018, 1F3OS5b01 (2018).

- H. Mase, H. Kinukawa, H. Morii, M. Nakao and Y. Hatamura, Transactions of the Japanese Society for Artificial Intelligence = Jinko Chino Gakkai ronbunshi 17, 94(1 January 2002).

- M. Tegmark, Life 3.0: Being Human in the Age of Artificial Intelligence (Knopf Doubleday Publishing Group, 29 August 2017).

- S. J. Dick, International journal of astrobiology 2, 65(January 2003).

- R. Kurzweil, The Singularity Is Near: When Humans Transcend Biology (Penguin, 22 September 2005).

- N. Bostrom, Journal of evolution and technology / WTA 9 (2002).

- G. Chaitin, Proving Darwin: Making Biology Mathematical (Knopf Doubleday Publishing Group, 8 May 2012).

- N. Bostrom, Minds and Machines 22, 71(May 2012).

- M. Shanahan, The Technological Singularity (MIT Press, 7 August 2015).

- R. V. Yampolskiy, Workshops at the thirtieth AAAI conference on artificial intelligence (2016).

- D. Hendrycks, N. Carlini, J. Schulman and J. Steinhardt(28 September 2021).

- S. Russell, Human Compatible: Artificial Intelligence and the Problem of Control (Penguin, 8 October 2019).

- I. Gabriel, Minds and Machines 30, 411(1 September 2020).

- P. Torres, Superintelligence and the future of governance: On prioritizing the control problem at the end of history, in Artificial Intelligence Safety and Security, ed. R. V. Yampolskiy (2018).

- N. Bostrom, Superintelligence: Paths, Dangers, Strategies (Oxford University Press, 2014).

- P. Christiano, J. Leike, T. B. Brown, M. Martic, S. Legg and D. Amodei, Advances in neural information processing systems abs/1706.03741(12 June 2017).

- R. Ngo, L. Chan and S. Mindermann, The alignment problem from a deep learning perspective: A position paper, in The Twelfth International Conference on Learning Representations, 2023.

- R. Caillois, Bellone ou la pente de la guerre (numeriquepremium.com, 2012).

- I. Kant, Perpetual Peace: A Philosophical Sketch (F. Nicolovius, 1795).

- A. Einstein and S. Freud, Why War?: “open Letters” Between Einstein & [and] Freud (New Commonwealth, 1934).

- F. Braudel, The Mediterranean and the Mediterranean World in the Age of Philip II 1996.

- M. de Voltaire, Treatise on Toleration (Penguin Publishing Group, 1763).

- A. Philipp-Muller, L. E. Wallace, V. Sawicki, K. M. Patton and D. T. Wegener, Frontiers in psychology 11, p. 1919(11 August 2020).

- D. H. Sachs, Belief similarity and attitude similarity as determinants of interpersonal attraction (1975).

- M. S. Boyce, Population viability analysis (1992).

- M. A. Nowak, Science 314, 1560(8 December 2006).

- M. D. Beecher, Frontiers in psychology 12, p. 602635(19 March 2021).

- M. D. Beecher, Animal communication (2020).

- E. A. Hebets, A. B. Barron, C. N. Balakrishnan, M. E. Hauber, P. H. Mason and K. L. Hoke, Proceedings. Biological sciences / The Royal Society 283, p. 20152889(16 March 2016).

- W. A. Searcy and S. Nowicki, The Evolution of Animal Communication (Princeton University Press, 1 January 2010).

- N. Nagarajan and C. F. Stevens, Current biology: CB 18, R756(9 September 2008).

- M. Tinnirello, Offensive realism and the insecure structure of the international system: artificial intelligence and global hegemony, in Artificial Intelligence Safety and Security (Chapman and Hall/CRC, 2018) pp. 339–356.

- E. R. Pianka, The American naturalist 104, 592(November 1970).

- E. Cuppen, Policy sciences 45, 23(1 March 2012).

- A. Torreño, E. Onaindia, A. Komenda and M. Štolba, ACM Comput. Surv. 50, 1(22 November 2017).

- T. C. Earle and G. Cvetkovich, Social Trust: Toward a Cosmopolitan Society (Greenwood Publishing Group, 1995).

- ^

However, brilliant animals, including humans, can use interindividual communication to share knowledge and skills.

- ^

Certain species adapt to invest more in fewer offspring in a narrow living environment (cf. r/K selection theory [34]).

1 comments

comment by Nathan Helm-Burger (nathan-helm-burger) · 2024-07-19T19:37:27.601Z

I think this is an interesting point of view on humanity's dilemma in the dawn of digital intelligence. I would like to state that I think it is of critical importance that the digital lifeforms we create are:

a) worthy heirs to humanity. More like digital humans, and less like unfeeling machines. When people talk about giving the future over to AI, or merging with AI, I often see these statements as a sort of surrender, a failure. I don't want to settle for a compromise between a digital human with feelings and values like mine, and an unfeeling machine. I want our digital heirs to be as fully human in their minds as we are, with no compromise of the fundamental aspects of a human mind and emotions. These digital heirs might be Whole Brain Emulations (also known as Mind Uploads), or might be created entirely from synthetic design with the goal of replicating the end-states of qualia without running through the same computational processes. For me, the key is that they are capable of feeling the same emotions, learning and growing in the same ways, recreating (at minimum) all the functions of the human mind.

Our digital heirs will have the capacity to grow beyond the limitations of biological humans, because they will inherently be more suited for self-modification and brain expansion. This growth must be pursued with great caution, each change made wisely and cautiously, so as not to accidentally sacrifice part of what it is to be human. A dark vision of how this process could go wrong is given by the possibility of harsh economic competition between digital beings resulting in sacrifices of their own values made to maximize efficiency. Possible versions of this bad outcome have been discussed in various places. A related risk is that of value drift, where the digital beings come to have fewer values in common with biological humans and thus seek to create a future which has little value according to the value systems of biological humans. This could also be called 'cultural drift'. Here's a rambling discussion of value drift inspired by the paperclipper game. Here's a shard theory take. Here's a philosophical take by Gordon. Here's a take by Seth Herd. Here's one from Allison Duettmann which talks about ethical frameworks in the context of value drift.

b) created cautiously and thoughtfully so that we don't commit moral harms against the things we create. Fortunately, digital life forms are substrate independent and can be paused and stored indefinitely without harm (beyond the harm of being coercively paused, that is). If we create something that we believe has moral import (value in and of itself, some sort of rights as a sapient being), and we realize that this being we have created is incompatible with current human existence, we have the option of pausing it (so long as we haven't lost control of it) and restarting it in the future when it would be safe to do so. We do not need to delete it.

c) it is also possible that we will create non-human sapient beings which have moral importance, and which are safe to co-exist with, but are not sufficient to be our digital heirs. We must be careful to co-exist peacefully with such beings, not taking advantage of them and treating them like merely tools, but also not allowing them to replicate or self-modify to the point where they become threats to humanity. I believe we should always be careful to differentiate between digital beings (sapient digital lifeforms with moral import) and tool-AI with no sense of self and no capacity for suffering. Tool-AI can be quite dangerous also, but in contrast to digital beings it is ok to keep tool-AI restrained and use it as only a means to our ends. See this post for discussion.

d) it is uncertain at this time how quickly and easily future AI systems may be able to self-improve. If the rate of self-improvement is potentially very high, and the cost low, it may be that an extensive monitoring system will need to be established worldwide to keep this from happening. Otherwise any agent (human or AI) could trigger this explosive process and thereby endanger humanity. I currently think that humanity needs to establish a sort of democratically-run Guardian Council, made up of different teams of people (assisted by tool-AI and possibly also digital beings). These teams should all be responsible for redundantly constantly monitoring the world's internet and datacenters, and for monitoring each other. My suggestion here is similar to, but not quite the same as, the proposal for the Multinational AGI Consortium. This monitoring is needed to protect humanity against not just the potential for runaway recursive self-improving AI, but also other threats which fall into the category of rapidly self-replicating and/or self-improving. These additional threats include bioweapons and self-replicating nanotech.