The case for "Generous Tit for Tat" as the ultimate game theory strategy

post by positivesum · 2023-11-09T18:41:03.879Z · 3 comments

This is a link post for https://tryingtruly.substack.com/p/how-generous-tit-for-tat-wins-at-life


I wish I could have titled this “How Pacifism Wins at Life”, since that would make everything much easier (especially writing this post), but as most well-meaning kids swiftly learn on the playground, the world is not gentle.

It’s always bothered me that there’s no escaping the need for strength in life (whether physical, emotional, individual or collective), especially considering how unevenly and unfairly that stat is usually distributed.

What bothers me even more, however, is how many people I’ve seen gleefully misapply and overfit this fact, falsely concluding that since one cannot survive without strength, it is the only meaningful form of power in the world. I’m sure you’ve seen these people too.

There is another side to this coin, however, one that I care deeply about and that carries just as much weight and impact as anything mentioned above: if you ever want to get anywhere as a person, society, or species, you can’t afford to dismiss gentleness either.

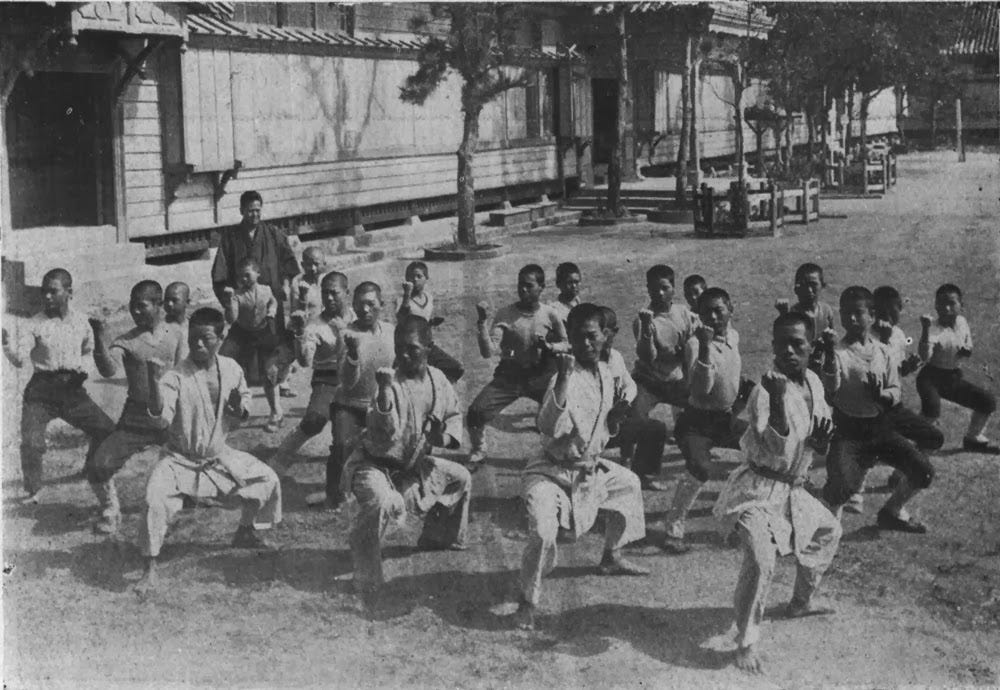

Karate is the perfect intuitive manifestation of this principle. Respect and conflict aversion are its core philosophy, but not because of a lack of viable alternatives.

Quick note: We’re gonna dive into game theory here, so if you’re new to (or rusty on) the terminology, the single best thing you can do is take Nicky Case’s brilliant interactive crash course on all the meaningful bits. If you’re short on time, you’re better off prioritizing it over reading the rest of this post (Nicky Case is amazing)!

Many smartass variations of the prisoner’s dilemma have been cooked up over the years, with each change in the parameters of the game bringing with it a new balance of incentives and, accordingly, different winning strategies.
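To make “the parameters of the game” a bit more concrete, here’s a minimal Python sketch of the one-round payoff table. The numbers are the 5/3/1/0 payoffs Axelrod used in his tournaments; T, R, P and S are the standard textbook labels, and the helper `is_prisoners_dilemma` is just an illustrative name of mine. Tweak the numbers and the game can quietly stop being a prisoner’s dilemma at all.

```python
# One round of the prisoner's dilemma, using the payoffs from
# Axelrod's tournaments:
#   T = temptation (you defect, they co-operate)
#   R = reward for mutual co-operation
#   P = punishment for mutual defection
#   S = "sucker's payoff" (you co-operate, they defect)
T, R, P, S = 5, 3, 1, 0

# (my move, their move) -> (my points, their points); "C" = co-operate, "D" = defect
PAYOFF = {
    ("C", "C"): (R, R),
    ("C", "D"): (S, T),
    ("D", "C"): (T, S),
    ("D", "D"): (P, P),
}


def is_prisoners_dilemma(t, r, p, s):
    """Check the two classic conditions for a prisoner's dilemma.

    t > r > p > s: defecting is tempting in any single round.
    2 * r > t + s: taking turns exploiting each other still earns less
    than steady mutual co-operation.
    """
    return t > r > p > s and 2 * r > t + s


print(is_prisoners_dilemma(T, R, P, S))  # True
print(is_prisoners_dilemma(5, 3, 1, 2))  # False: being exploited beats mutual defection
print(is_prisoners_dilemma(9, 3, 1, 0))  # False: alternating exploitation pays better
```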

Should you make like Jesus and always turn the other cheek, or should you play like Lucifer himself and ruthlessly exploit everyone you meet? Maybe you need a complex web of conditions defining how you act towards others based on your relationship so far, or maybe you can just do unto others as they do unto you?

Every variation teaches us something new about the dynamics of selfishness and selflessness, trust and defection, all while generating a rich abundance of smug nerds to debate which ones apply best to which real life situation.

Debate is cool and all, but what I don’t understand is why all said nerds don’t just stop and yell out in unison:

WE’VE MAYBE ACTUALLY KINDA SOLVED IT!

Because we maybe actually kinda already have, and the result is beautiful.

Let’s start with an overview of the most impactful conditions of any prisoner’s dilemma, and select the ones that reflect the environment of the real world best.

We’re doing this so that whatever mysterious strategy we later discover to demonstrably, empirically, and objectively thrive under these conditions must be the strategy best applied to the majority of real-life situations. Let’s get to it:

Repeated/One-off Rounds - Most real-life situations (whether between people or nations) are not one-time events, but rather involve repeated interactions over time. With the exception of aggressive drunks outside pubs, we usually build up a long-term history of past actions with one another. To clarify, even a single conversation can technically count as a “repeated interaction” (so long as each side gets more than one line of dialogue)!

Imperfect/Perfect Information - We have imperfect information about each other's intentions, payoffs, and the consequences of our actions. Plenty of room for error.

Communication: On/Off - We can certainly communicate with one another, whether explicitly or implicitly. Misunderstandings, bluffs and lies are completely possible, but are forms of communication nonetheless.

Asymmetric/Symmetric Payoffs - The cost/reward for defection/co-operation varies greatly between contexts. This is likely the highest variance condition here.

Variable/Constant Group Size - Groups can grow, shrink, merge and split depending on the actions of their members. Furthermore, interactions can be between, and affect, multiple participants at once.

Emotionality: On/Off - Anger, fear, and empathy can all affect decision making. That’s not even mentioning cognitive biases (#UncleKahneman).

Uncertainty: So On It Hurts/Off - Nobody knows anything about anything or anyone for sure and we’re all terrified mortal coils. The technical terms for this are Environmental Uncertainty and Social Uncertainty.

Right! This should be a sturdy enough foundation (let me know if I’ve missed anything important in the comments). The stage is set, and you’re now ready to hear about one of the most illuminating experiments done in the field of game theory, but in a shortened tl;dr manner.

Quick note: This is mainly because I suspect a significant portion of you have already heard some version of this before, and that the other, equally significant portion of you who haven’t would appreciate a brisk version. To the remaining, probably much tinier (yet still equally significant!) portion of you who would actually love to read a full breakdown, I can only humbly ask for your forgiveness and direct you here.

The gist is this: around 1980, super influential game theory researcher Robert Axelrod invites other super influential game theory researchers from around the world to devise their ultimate prisoner’s dilemma strategy, code it, and then let the resulting programs fight each other to the death in a grand digital petri dish.

The tournament is kinda like a turn-based RPG (with points racking up and everything), except that at the end of each generation, every program gets to reproduce in proportion to the total points it scored (just like fitness in biology)! Whichever strategy spawns enough offspring to eventually take over the board is the winner.
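In Axelrod’s actual “ecological” follow-up, programs didn’t reproduce by winning individual fights: each one’s share of the next generation grew in proportion to the total score it had earned. Here’s a minimal Python sketch of that idea, assuming the 5/3/1/0 payoffs, 200-round matches and just three illustrative strategies (the names `always_cooperate`, `always_defect` and `grudger` are mine; “grudger” co-operates until the first betrayal, then never forgives).

```python
T, R, P, S = 5, 3, 1, 0
POINTS = {("C", "C"): R, ("C", "D"): S, ("D", "C"): T, ("D", "D"): P}
ROUNDS = 200


def always_cooperate(my_moves, their_moves):
    return "C"


def always_defect(my_moves, their_moves):
    return "D"


def grudger(my_moves, their_moves):
    # Co-operate until the opponent defects once, then defect forever.
    return "D" if "D" in their_moves else "C"


def match_score(a, b):
    """Points strategy `a` earns against strategy `b` over one match."""
    a_moves, b_moves, total = [], [], 0
    for _ in range(ROUNDS):
        x, y = a(a_moves, b_moves), b(b_moves, a_moves)
        total += POINTS[(x, y)]
        a_moves.append(x)
        b_moves.append(y)
    return total


def next_generation(shares, scores):
    # Fitness of strategy i = its average score against the current mix.
    fitness = [sum(sh * sc for sh, sc in zip(shares, row)) for row in scores]
    weighted = [sh * f for sh, f in zip(shares, fitness)]
    total = sum(weighted)
    return [w / total for w in weighted]


strategies = [always_cooperate, always_defect, grudger]
# scores[i][j] = points strategy i earns against strategy j
scores = [[match_score(a, b) for b in strategies] for a in strategies]

shares = [1 / 3, 1 / 3, 1 / 3]  # start with an even mix
for _ in range(50):
    shares = next_generation(shares, scores)

for strategy, share in zip(strategies, shares):
    print(f"{strategy.__name__:>16}: {share:.3f}")
```

Notice that `grudger` never outscores its opponent in any single match (against `always_defect` it loses 199 to 204), yet it ends up with by far the largest share, while `always_defect` dies out as the population fills up with players who retaliate. Total points, not head-to-head wins, are what drive reproduction here.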

Thousands of lines of code riddled with complexity were submitted, ranging from the most ruthless of programs to the kindest. They were then all promptly fired up and sicced on each other for 200 rounds per match. Soon enough, one winner stood supreme, and it was almost poetically too good to be true: the simplest program submitted, boiling down to just two humble rules:

Rule 1 - Be nice (technically meaning always co-operate on the first round).

Rule 2 - From then on just copy what the other player did in the previous round (meaning an eye for an eye and a hug for a hug).
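For the code-curious, here’s a minimal Python sketch of those two rules, plus a bare-bones 200-round match using Axelrod’s 5/3/1/0 payoffs. Representing a strategy as a function of both players’ move histories, and the helper names, are my own illustrative choices, not Axelrod’s original code.

```python
T, R, P, S = 5, 3, 1, 0
PAYOFF = {("C", "C"): (R, R), ("C", "D"): (S, T),
          ("D", "C"): (T, S), ("D", "D"): (P, P)}


def tit_for_tat(my_moves, their_moves):
    if not their_moves:       # Rule 1: be nice on the first round
        return "C"
    return their_moves[-1]    # Rule 2: copy their previous move


def always_defect(my_moves, their_moves):
    return "D"


def play(strategy_a, strategy_b, rounds=200):
    """Play an iterated match and return both players' total scores."""
    a_moves, b_moves = [], []
    a_score = b_score = 0
    for _ in range(rounds):
        a = strategy_a(a_moves, b_moves)
        b = strategy_b(b_moves, a_moves)
        pa, pb = PAYOFF[(a, b)]
        a_score += pa
        b_score += pb
        a_moves.append(a)
        b_moves.append(b)
    return a_score, b_score


print(play(tit_for_tat, tit_for_tat))    # (600, 600)
print(play(tit_for_tat, always_defect))  # (199, 204)
```

Tit for Tat never beats its opponent head-to-head (at best it ties), but it racks up 600 points with anyone willing to co-operate and gives up only a single round to a pure defector.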

What a tear jerking win for ancient wisdom! Literally every major religion has described their own variation of a “do unto others as they do unto you” principle at some point or another, crystallizing a timeless insight out of pure intuition and an astute observation of life’s dynamics.

“I said it first!” - One of them, probably. I dunno. PBF

In this case, the strategy was simply called Tit for Tat.

It’s a wise strategy, fully compatible with life’s continuous demand for strength as a deterrent, while also incorporating an impressive dose of goodwill. The conclusion is super simple too: you should always attempt to come in peace, bravely placing your trust in others at the risk of getting hurt. However, should they try to harm you, don’t hesitate to retaliate and teach them a lesson.

Sounds good, but NOT GOOD ENOUGH!!!

As I’m sure you’ve noticed, the conditions of the experiment above don’t quite satisfy the requirements we initially established as necessary to make its conclusion fully applicable to real life (and besides, I still owe you an Adjective).

Let’s quickly review the criteria one by one and see where we stand:

Repeated Rounds: TRUE - There were 200 of them!

Imperfect Information: TRUE - Programs didn’t know the strategy of their opponents ahead of time.

Communication On: FALSE - The programs couldn’t chit chat or gossip.

Asymmetric Payoffs: FALSE - The cost/reward matrix for co-operate/defect was symmetrical and constant.

Variable Group Size: TRUE - While programs technically fought 1v1, the outcome of each fight affected the overall representation of each program on the board. Each good result increased the probability that a program would meet a like-minded partner, and directly influenced the viability of its strategy.

Emotionality On: FALSE - The programs never got to learn about love.

Uncertainty On: HALF TRUE - While the programs certainly suffered from Social Uncertainty, not knowing how their adversary would react, they did enjoy the very important luxury of Environmental Certainty, with perfect reliability for inputs and outputs, and no risk of earthquakes.

It seems we're missing Communication, Emotionality, Environmental Uncertainty and Asymmetric Payoffs. Close but no cigar.

Briefly setting aside Asymmetric Payoffs (don’t worry, they’ll come back soon), what I’m hoping you’ll notice is that the remaining three conditions are all different manifestations of the same messy, real world principle: sometimes, things can go horribly wrong by complete accident. More specifically, there’s always the potential for Miscommunication, Miscalculation, Mistake, and Mishap.

In a world full of Tit for Tats, any of these Ominous Ms can lead to a catastrophic cycle.

I asked Stable Diffusion for an Evil Letter M in a Tophat

Say two Tit for Tats run into each other in real life. Both are immediately nice to one another, and begin to reciprocate said niceness ad infinitum. Utopia is within reach. However, throughout the entirety of their relationship, all it would take is one tiny error, one tragic misplaced M by either one, to set off a self-perpetuating cycle of mutual retaliation until one or both drop dead.
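Here’s a tiny deterministic Python simulation of exactly that scenario: two Tit for Tats, with one accidental defection injected into player A’s move on the fourth round (index 3). The slip round, the 12-round length and the `play_with_slip` helper are arbitrary choices for illustration.

```python
def tit_for_tat(my_moves, their_moves):
    # Co-operate first, then copy the opponent's previous move.
    return their_moves[-1] if their_moves else "C"


def play_with_slip(strategy_a, strategy_b, rounds=12, slip_round=3):
    """Play a match in which player A's move on `slip_round` is flipped
    by accident (one Ominous M). Returns both move sequences as strings."""
    a_moves, b_moves = [], []
    for i in range(rounds):
        a = strategy_a(a_moves, b_moves)
        b = strategy_b(b_moves, a_moves)
        if i == slip_round:
            a = "D" if a == "C" else "C"
        a_moves.append(a)
        b_moves.append(b)
    return "".join(a_moves), "".join(b_moves)


a, b = play_with_slip(tit_for_tat, tit_for_tat)
print(a)  # CCCDCDCDCDCD
print(b)  # CCCCDCDCDCDC
```

From the slip onwards the two never co-operate in the same round again: the single mistake just keeps bouncing back and forth as alternating retaliation. And if a second slip lands at the wrong moment (A defecting on a round where B is already retaliating), both lock into permanent mutual defection.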

In practice, Tit for Tat can actually be a pretty disastrous strategy, since it can easily get you deadlocked in a costly conflict. Whether it’s a war that’s fought until there’s no one left to fight, two political rivals forced to dedicate an ever increasing segment of their campaign budget to disparaging one another’s public image, or simply a battle of pettiness between middle management and employees - in the real world, you can’t ignore the need for some form of de-escalation mechanism.

Even if it’s costly, you must find a way to cut terrible feedback loops. You need one additional power: GENTLENESS! Get a load of this:

So long as you possess the strength to defend yourself, you can always make the choice to generously give someone the benefit of the doubt, relinquish a justified retaliatory response, or most gently of all, simply decide to forgive.

Why? All for the sake of valiantly fighting to keep the option for peace and collaboration open at all times, lest it get closed off forever to everyone’s mutual detriment. I can’t think of a more heroic struggle. Being generously gentle means you’re prepared to get hurt in the name of peace, and it’s an incredibly brave and risky thing to do.

How risky? Asymmetrically so! Asymmetric Payoffs (I told you they’d be back) are all around us, since in real life no two players ever engage on equal footing. Each player strikes a different balance between how much they want something, what it’d cost them to get it, and what capabilities are at their disposal. The risk/reward matrix is always dynamic and far from symmetric between players.

The defining variable here, unfortunately, is strength (we knew it was inescapable from the get-go). This complicates the decision-making calculus, but it certainly doesn’t negate the role of gentleness! Luckily, in real life the asymmetry goes both ways:

Even when facing a “stronger” player, who says you have to engage with asymmetric strength symmetrically? There are many kinds of rewards, and one form of strength can be completely negated by another (think of the difference between Tank vs Tank and Tank vs RPG). Furthermore, since Uncertainty is On, outcomes are always unpredictable for both sides: each one could potentially outmaneuver, outlast, shift vectors, or just plain luck out against the other, regardless of the perceived reward matrix.

Asymmetric Payoffs are merely a complicating factor, but the key principle still stands:

In the long run, the cost incurred each time you attempt to co-operate and get exploited usually pales in comparison to the cost of never trying at all.

Alfred Tennyson, the “better to have loved and lost” guy, somberly agrees.
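To put some rough numbers on that principle, here’s a back-of-the-envelope Python calculation. It assumes Axelrod’s 5/3/1/0 payoffs, a 200-round match, and a partner who is either a Tit for Tat or a pure defector; “trying” means playing Tit for Tat yourself, “never trying” means always defecting.

```python
T, R, P, S = 5, 3, 1, 0
ROUNDS = 200

# "Trying" = playing Tit for Tat: co-operate first, then copy the partner.
try_vs_defector = S + (ROUNDS - 1) * P   # exploited once, then mutual defection: 199
try_vs_tft = ROUNDS * R                  # co-operation all the way: 600

# "Never trying" = always defecting.
never_vs_defector = ROUNDS * P           # mutual defection all the way: 200
never_vs_tft = T + (ROUNDS - 1) * P      # exploit once, then get retaliated against: 204

cost_of_getting_exploited = never_vs_defector - try_vs_defector  # 1 point
cost_of_never_trying = try_vs_tft - never_vs_tft                 # 396 points
print(cost_of_getting_exploited, cost_of_never_trying)           # 1 396
```

Under these assumptions, one exploited attempt at co-operation costs a single point, while refusing to ever co-operate with a partner who would have reciprocated forfeits 396. Shorter games or harsher payoffs shrink that gap, which is why the principle says “usually”.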

With our newly acquired wisdom, let’s return to the original Tit for Tat strategy, and custom fit it with a mechanism for self-correction. We just need to add one rule:

Rule 1 - Be nice (technically meaning always co-operate on the first round).

Rule 2 - From then on just copy what the other player did in the previous round (meaning an eye for an eye and a hug for a hug).

Rule 3 - After you retaliate, always try to co-operate again in the next round.
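Here’s one literal reading of these three rules as a deterministic Python strategy, under my own assumption that “after you retaliate” means “if my previous move was a defection”. Started from a state of mutual defection (the deadlock plain Tit for Tat can never escape), it snaps straight back to co-operation. The `continue_match` helper is hypothetical scaffolding for the demo.

```python
def tit_for_tat(my_moves, their_moves):
    return their_moves[-1] if their_moves else "C"


def generous_tit_for_tat(my_moves, their_moves):
    if not their_moves:          # Rule 1: be nice on the first round
        return "C"
    if my_moves[-1] == "D":      # Rule 3: after retaliating, try peace again
        return "C"
    return their_moves[-1]       # Rule 2: otherwise copy their last move


def continue_match(strategy_a, strategy_b, a_moves, b_moves, rounds):
    """Extend an existing match by `rounds` rounds; return both histories."""
    a_moves, b_moves = list(a_moves), list(b_moves)  # copy, don't mutate
    for _ in range(rounds):
        a = strategy_a(a_moves, b_moves)
        b = strategy_b(b_moves, a_moves)
        a_moves.append(a)
        b_moves.append(b)
    return "".join(a_moves), "".join(b_moves)


# Both players have just defected on each other (say, after two slips).
history = ["C", "D"]
print(continue_match(tit_for_tat, tit_for_tat, history, history, 4))
# ('CDDDDD', 'CDDDDD')
print(continue_match(generous_tit_for_tat, generous_tit_for_tat, history, history, 4))
# ('CDCCCC', 'CDCCCC')
```

One caveat with this literal reading: it doesn’t, on its own, stop the case where a single slip makes two players take turns retaliating (D/C, then C/D, and so on), because each of them is already co-operating right after every retaliation. It also co-operates every other round against a pure defector.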

Congratulations! We’ve just created “Generous Tit for Tat” (coined by Axelrod himself)! It maintains the key benefits of regular Tit for Tat, while possessing the ability to break out of Ominous M cycles should they arise. We’ve also finally come full circle to the title of this post! It’s been emotional.
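For reference, the version of Generous Tit for Tat usually studied in the literature (e.g. by Nowak and Sigmund) is probabilistic: after the other player defects, it retaliates most of the time but forgives with some probability q. A commonly cited value is q = min(1 - (T - R)/(R - S), (R - P)/(T - P)), which works out to 1/3 for the 5/3/1/0 payoffs. Here’s a minimal Python sketch under those assumptions; the helper names and the seed are arbitrary.

```python
import random

T, R, P, S = 5, 3, 1, 0


def max_generosity(t, r, p, s):
    """Most forgiving q at which defecting against Generous Tit for Tat
    still doesn't pay: min(1 - (T - R)/(R - S), (R - P)/(T - P))."""
    return min(1 - (t - r) / (r - s), (r - p) / (t - p))


def make_generous_tft(q, rng):
    """Co-operate on the first round and whenever the opponent just
    co-operated; after a defection, forgive with probability q,
    otherwise retaliate."""
    def strategy(my_moves, their_moves):
        if not their_moves or their_moves[-1] == "C":
            return "C"
        return "C" if rng.random() < q else "D"
    return strategy


def play_with_slip(strategy_a, strategy_b, rounds=200, slip_round=3):
    """Play a match in which player A's move on `slip_round` is flipped."""
    a_moves, b_moves = [], []
    for i in range(rounds):
        a = strategy_a(a_moves, b_moves)
        b = strategy_b(b_moves, a_moves)
        if i == slip_round:
            a = "D" if a == "C" else "C"
        a_moves.append(a)
        b_moves.append(b)
    return "".join(a_moves), "".join(b_moves)


rng = random.Random(42)  # seeded so the run is reproducible
q = max_generosity(T, R, P, S)
print(round(q, 3))  # 0.333

a, b = play_with_slip(make_generous_tft(q, rng), make_generous_tft(q, rng))
print(a[-10:], b[-10:])
```

With q at about 1/3, each round of the alternating retaliation that a single slip sets off carries a one-in-three chance that the retaliating player lets it go, so the echo usually dies out within a few rounds instead of bouncing back and forth forever.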

This strategy can be dangerous, counter-intuitive and painful, which is why it requires such a unique kind of boldness, but the fact of the matter is that it’s one of the strongest strategies for winning at life in the long term, whether between individuals, communities, corporations, or nations.

It’s gonna be tough, but never forget: if you don’t go around actively trying to exploit others, are strong enough to protect yourself when others do, and have the discipline within you to remain gentle and forgiving despite it all, you’ve already done humanity an incredible service just by being you.

Updating list of Critiques (and their solutions):

“Actually, given either sufficient room for miscommunication and mistakes, or a sufficiently asymmetrical reward matrix, GTFT loses to pure Lucifer (exploit everyone) strategies”

Technically true, and it stresses the important role that trust and the incentive structures of communication technologies play in shaping our society. But I suspect it isn’t the dominant dynamic.

If the petri dish of humanity were truly majority Lucifer at heart, we’d probably never have been capable of co-operating long enough to get past the stone age in the first place. Humanity has already played through more than enough rounds of the game (and has seen its fair share of Lucifers) to know with certainty that there are plenty of wonderful people with strength out there, capable of banding together and correcting vicious feedback loops when they arise. It just isn’t always pretty.

The board we’re playing on doesn’t have a critical mass of Lucifers just yet, although I’m not sure we can extrapolate from this that it never will.

Furthermore, barring a few exceptions, in real life the “Reward Matrix” is highly dynamic, and most importantly, human-defined. We decide as a society what’s important to us, and we are the ones in a position to create our own incentive structures, organizations, and social dynamics accordingly. That’s not to say it’s easy, however.

“Actually, I’ve read that Pavlov’s strategy (the cynical “win-stay, lose-shift" strategy) beats GTFT!”

That’s only in simultaneous rounds (think rock paper scissors). In alternating (turn-based) iterations, GTFT still triumphs. Real life interactions do not work like rock paper scissors (unless you’re playing rock paper scissors).
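For comparison, here’s a minimal Python sketch of Pavlov in the simultaneous-move setting: keep your last move if it paid well (T or R), switch if it paid badly (P or S). That rule boils down to “co-operate exactly when we both made the same move last round”. The slip demo and helper names are my own illustrative assumptions.

```python
def pavlov(my_moves, their_moves):
    if not my_moves:
        return "C"
    # Same moves last round -> my payoff was R (stay C) or P (shift to C).
    # Different moves -> my payoff was T (stay D) or S (shift to D).
    return "C" if my_moves[-1] == their_moves[-1] else "D"


def always_defect(my_moves, their_moves):
    return "D"


def play_with_slip(strategy_a, strategy_b, rounds=8, slip_round=None):
    """Play a match; optionally flip player A's move on `slip_round`."""
    a_moves, b_moves = [], []
    for i in range(rounds):
        a = strategy_a(a_moves, b_moves)
        b = strategy_b(b_moves, a_moves)
        if i == slip_round:
            a = "D" if a == "C" else "C"
        a_moves.append(a)
        b_moves.append(b)
    return "".join(a_moves), "".join(b_moves)


print(play_with_slip(pavlov, pavlov, slip_round=3))  # ('CCCDDCCC', 'CCCCDCCC')
print(play_with_slip(pavlov, always_defect))         # ('CDCDCDCD', 'DDDDDDDD')
```

Two Pavlovs recover from a single slip within two rounds, which is a big part of why it shines in noisy simultaneous games. The flip side: against a pure defector it keeps switching back to co-operation every other round and gets exploited each time.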

3 comments


comment by RationalDino · 2023-11-09T23:58:15.345Z

As a life strategy I would recommend something I call "tit for tat with forgiveness and the option of disengaging".

Most of the time do tit for tat.

When we seem to be in a negative feedback loop, we try to reset with forgiveness.

When we decide that a particular person is not worth having in our life, we walk them out of our life in the most efficient way possible. If this requires giving them a generous settlement in a conflict, that's generally better than continuing with the conflict to try for a more even settlement.

The first three are things most social animals are adapted to do. The last is possible for us because we live in societies that are large enough for us to never interact with people we don't like. Unfortunately our emotions are pretty well adapted to life in groups below Dunbar's number. So the decision to disengage efficiently takes work.

comment by Shane Ward (shane-ward) · 2024-04-19T09:21:53.746Z

This is classic “lesswrong”, where they don’t fully research the subject and are slightly less wrong.

Though arguably more dangerous than just being “full wrong”, as they present half-baked analysis as seemingly logical or mathematical solutions which would override instinct, emotion or intuition, and through this confidence in their incorrect result, a stubborn inflexibility that will do more harm than good.

↑ comment by positivesum · 2024-05-04T19:41:42.097Z

Please feel free to elaborate on the specific qualms you have with what's written! Super happy to retract anything necessary and learn!