SB-1047, ChatGPT and AI's Game of Thrones

post by Rahul Chand (rahul-chand) · 2024-11-24T02:29:34.907Z

Part-I (The Sin of Greed)

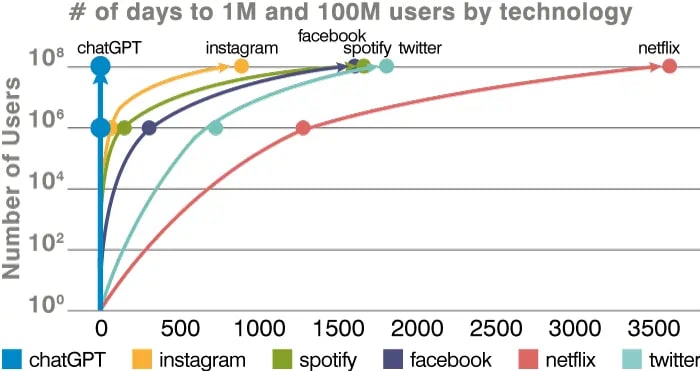

On 30 November 2022, OpenAI released ChatGPT. According to Sam Altman, it was supposed to be a demo[1] to show the progress in language models. By December 5, in just 5 days, it had gained 1 million users. For comparison, it took Instagram 75 days, Spotify 150 days and Netflix 2 years to gain the same number of users. By January 2023, it had 100 million users and was adding 15 million users every week. ChatGPT became the fastest growing service in human history. Though most people would only come to realize this later, the race for AGI had officially begun.

Too Soon, Too Fast, Too Much - Timeline of AI

November 2022

At first, ChatGPT was seen more as a fun toy to play with. Early uses of ChatGPT mostly involved writing essays, reviews, small snippets of code, etc. It was buggy and not very smart, and even though it became an instant hit, it was seen as a tool that could help you draft your mail but nothing more serious. Skeptics brushed it aside as being dumb and not good enough for anything that involved any level of complex reasoning.

The next 2 years, however, saw unprecedented progress that would change the outlook on LLM chatbots from tools that can help you draft a mail to tools that could replace you. The models kept getting bigger, they kept getting better, and the benchmark scores kept climbing. The interest in ChatGPT also saw billions poured into competitors such as Anthropic, Mistral, Google (Gemini) and Meta (Llama), each trying to come up with its own version of an LLM-powered chatbot.

February 24, 2023

Soon open-source models started to catch up as well: the number of models hosted on HuggingFace increased 40x in just 1 year, from Jan 2023 to Jan 2024. Companies that had missed the boat but had enough talent drove the progress of open-source models. On Feb 24, 2023, Meta released its LLM Llama to researchers on a case-by-case basis, and within about a week its weights had leaked on 4chan. This kick-started the open-source progress. Llama was the first competent open-source LLM. It reportedly cost Meta 20 million dollars to train, a huge sum that the open-source community couldn't have come up with, but one that represents roughly 0.015% of Meta's annual revenue (~130 billion). For what is chump change, Meta had successfully created an alternative to proprietary models and made its competitors' margins thinner. The GPU-poor of the world were now thrown into the race too (a race, they would realize later, that they cannot win).
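The "chump change" comparison above is simple arithmetic. Here is a minimal sketch of it, assuming the post's reported figures (a ~20 million dollar training cost and ~130 billion dollars of annual revenue, both approximate); the function and variable names are made up for illustration.

```python
def share_of_revenue_percent(cost_usd: float, annual_revenue_usd: float) -> float:
    """Return cost as a percentage of annual revenue."""
    return 100.0 * cost_usd / annual_revenue_usd


# Figures as reported in the post; both are rough estimates.
llama_training_cost_usd = 20_000_000
meta_annual_revenue_usd = 130_000_000_000

share = share_of_revenue_percent(llama_training_cost_usd, meta_annual_revenue_usd)
print(f"Training cost as a share of annual revenue: {share:.4f}%")  # prints 0.0154%
```

With these inputs the training run comes out at about 0.015% of a single year's revenue.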

March 14, 2023

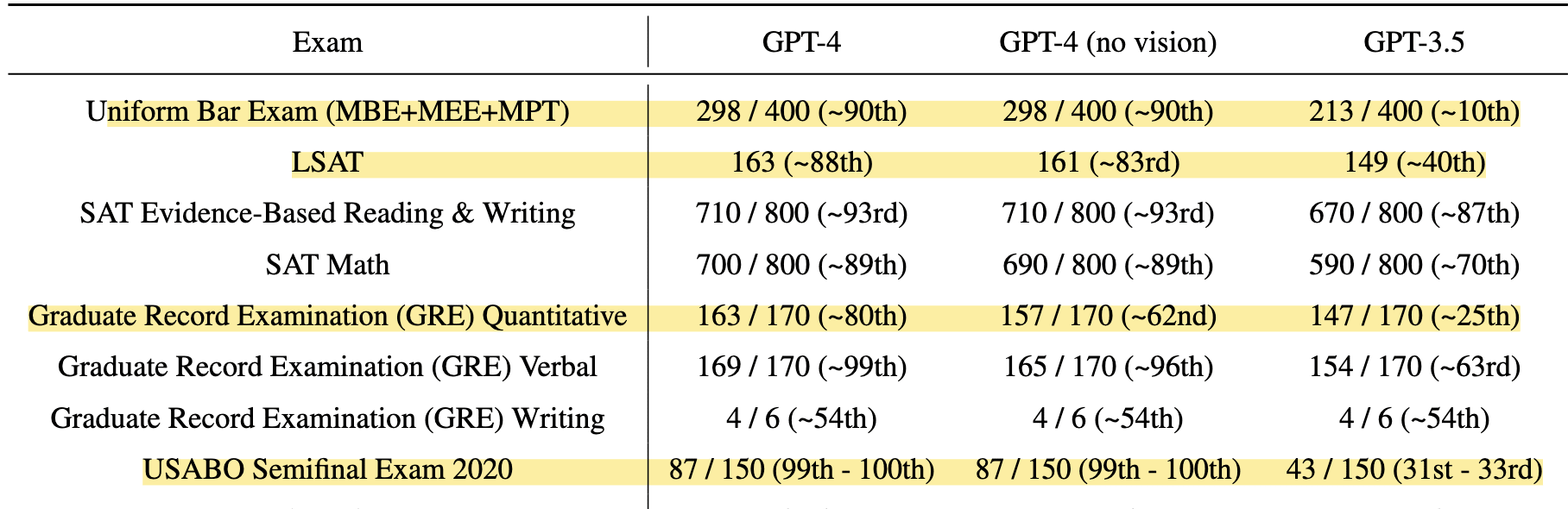

To me, the release of GPT-4 was, in some ways, even more important than the first one. GPT-4 was remarkably smarter than anything before it. It was this release that marked the shift from a cool demo to something much bigger. For the first time, a general-purpose AI model was competing with humans across tasks. To the believers, the very first signs of AGI had been seen.

The charts show how substantially better GPT-4 was than its predecessor. It consistently ranked high on many tests designed to test human intelligence, in stark contrast to GPT-3.5, which mostly performed in the bottom half.

March 22, 2023

Though it had only been 1 week since GPT-4 was released, its implications for the future were already showing up. This was the worst AI was ever going to be; it would only get stronger. There was also a belief that models much smarter than even GPT-4 were on the horizon, and that it was only a matter of time. Even before GPT-4, the fear around AI risk was already starting to take shape. The constant hints from top AI labs about not releasing full non-RLHF models due to risks only added to this. Similar claims had been made about older models like GPT-3, which, in hindsight, weren't nearly advanced enough to pose any real threat. This time around it felt different, though: the tech world and the outside world were slowly catching on.

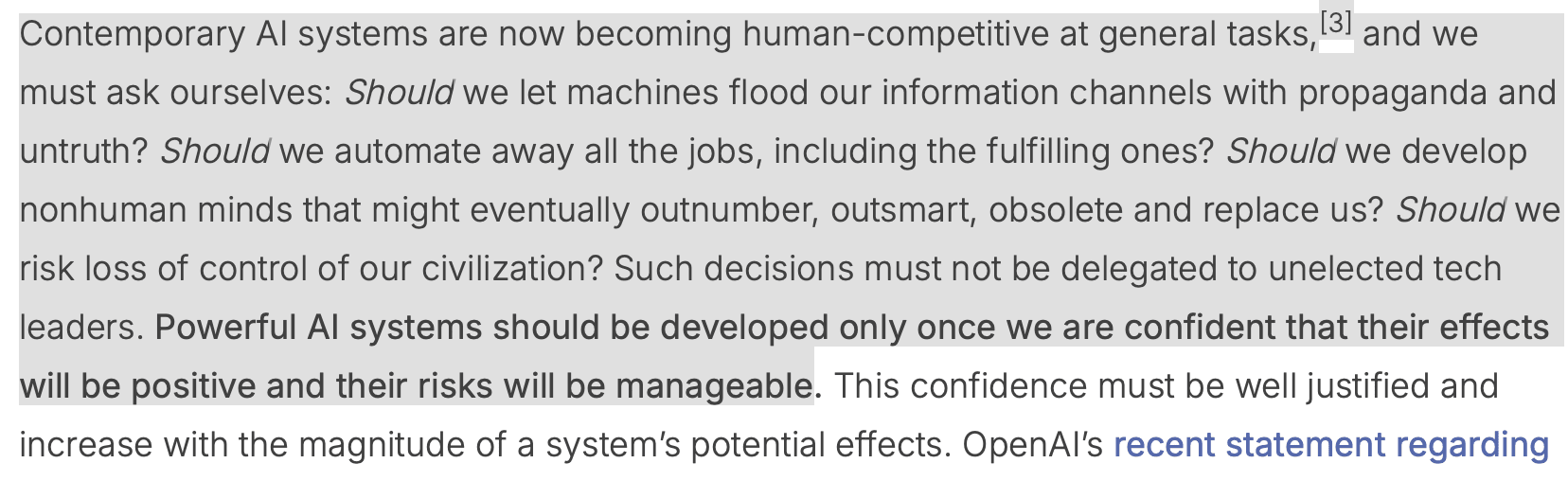

On March 22, 2023, the Future of Life Institute published an open letter signed by over 1,000 AI researchers and tech leaders, including Elon Musk, Yoshua Bengio and Steve Wozniak, calling for a six-month pause on the development of AI systems more powerful than GPT-4.

The letter insisted that they were not calling for a permanent halt; the six-month pause was meant to give policy makers and AI safety researchers enough time to understand the impact of the technology and put safety barriers around it. The letter gained significant media coverage and was a sign of what was to come. However, by this time, the cat was already out of the bag. Even proponents of the letter believed that it was unlikely to lead to any halt, much less one of six months. The AGI race had begun, billions of dollars were at stake and it was too late to pause it now.

This letter was followed by the provisional passing of the European Union's AI Act on April 11, 2023, which aimed to regulate AI technologies by categorizing them based on risk levels and imposing stricter requirements on high-risk applications. Two weeks later, on April 25, 2023, the U.S. Federal Trade Commission (FTC) issued a policy statement urging AI labs to be more transparent about the capabilities of their models.

Part-II (The Sin of Pride)

May 2, 2023

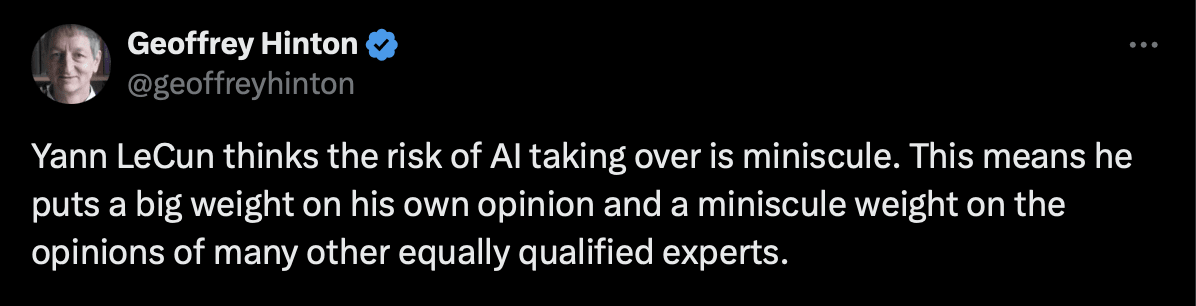

On May 2nd, Geoffrey Hinton, the godfather of AI (and now a Nobel Prize winner), announced his retirement from Google at the age of 75 so he could speak freely about the risks of AI. On the same day, he gave an interview to the NYT in which he said that a part of him now regrets his life’s work, and detailed his fears about current AI progress. This interview later led to a public Twitter feud between Yann LeCun and Hinton, showing the increasing divide within the community. AI risk was no longer a quack conspiracy theory; it was now backed by some of the most prominent AI researchers. Below are some excerpts from the NYT article.

"But gnawing at many industry insiders is a fear that they are releasing something dangerous into the wild. Generative A.I. can already be a tool for misinformation. Soon, it could be a risk to jobs. Somewhere down the line, tech’s biggest worriers say, it could be a risk to humanity"

“It is hard to see how you can prevent the bad actors from using it for bad things”

May 16, 2023

With the increasing integration of generative AI into daily life, concerns regarding its societal impact were not only becoming mainstream but also politically important. On May 4, 2023, President Biden called a private meeting with the heads of frontier AI labs, including Sam Altman, Sundar Pichai, and Anthropic CEO Dario Amodei. Notably, no AI safety policy organizations were invited.

This private meeting then led to Sam Altman's Congressional hearing on May 16th. The Senate hearing was different from the usual grilling of tech CEOs that we have seen in the past. Most of this is attributed to the private closed-door meeting that Altman had already had with members of the committee beforehand. In fact, Altman agreed with ideas suggested by the committee regarding the creation of an agency that issues licenses for the development of large-scale A.I. models, safety regulations, and tests that A.I. models must pass before being released to the public. The groundwork for a regulatory bill like SB-1047 was being laid. People on the outside saw the hearing as just an attempt by Sam Altman at regulatory capture.

“I think if this technology goes wrong, it can go quite wrong. And we want to be vocal about that. We want to work with the government to prevent that from happening .... We believe that the benefits of the tools we have deployed so far vastly outweigh the risks, but ensuring their safety is vital to our work" - Sam Altman

“It’s such an irony seeing a posture about the concern of harms by people who are rapidly releasing into commercial use the system responsible for those very harms" - Sarah Myers West (director of the AI Now Institute, a policy research centre)

To understand why SB-1047 became such a polarizing bill, one that gained so much media coverage and led to the formation of factions across the board, it's important to note that by this time everything was falling into place. The California legislature considers around 2000 bills annually. For a bill to receive this much interest, it had to tick all three boxes: be of national political importance, resonate with the public and have the potential for large economic impact.

- Politically important: By the time January of 2024 rolled in, LLMs were no longer seen as cute email-writing assistants; they had become a matter of national security. This view of AI inside government and defence circles had accelerated. SB-1047 therefore became a bill of national security importance.

- Resonate with the public: AI risk was becoming more of a public talking point. 2023 saw public beef between respected figures in AI: Hinton, Bengio, LeCun and others. It also saw many lawsuits filed over copyright issues arising from the use of generative AI. In May 2023, Hollywood unions went on strike; one of their demands was an assurance that AI wouldn't be used in film-making.

- Economic Impact: By the end of 2023, generative AI was a 110 billion dollar industry, and new frontier model training runs were now costing the equivalent of a small country's GDP.

Part-III (The Sin of Envy)

On Feb 4, 2024, California Democratic state senator Scott Wiener introduced the AI safety bill SB-1047 (Safe and Secure Innovation for Frontier Artificial Intelligence Models Act). The bill aimed to put regulations in place to ensure the safe development and deployment of frontier AI. The key provisions of the first draft were:

- Safety Determination for AI Models

  - Developers must make a positive safety determination before training AI models. A "covered model" is defined as an AI model that has been trained using computing power greater than 10^26 floating-point operations (FLOPs) in 2024, or a model expected to perform similarly on industry benchmarks. Any covered model must undergo strict safety evaluations due to their potential for hazardous capabilities.

  - Hazardous capability is defined as the ability of a model to enable:

    - Weapons of mass destruction (biological, chemical, nuclear)

    - Cyberattacks on critical infrastructure or Autonomous action by AI models, causing damage of at least $500 million.

  - Developers must also implement shutdown capabilities to prevent harm if unsafe behavior is detected.

- Certification and Compliance:

  - Developers must submit annual certifications to the Frontier Model Division, confirming compliance with safety protocols, including detailed assessments of any hazardous capabilities and risk management procedures.

  - The Frontier Model Division will review these certifications and publicly release findings.

- Computing Cluster Regulations:

  - Operators of high-performance computing clusters must assess whether customers are using their resources to train "covered AI models". Violations of these provisions could result in civil penalties.

  - Operators are required to implement written policies and procedures, including the capability for a full shutdown in emergency situations.

- Incident Reporting:

  - Developers must report AI safety incidents to the Frontier Model Division within 72 hours of discovering or reasonably suspecting that an incident has occurred.

- Legal and Enforcement Measures:

  - The Attorney General can take legal action against developers violating the regulations, with penalties for non-compliance including fines up to 30% of the development cost and orders to shut down unsafe models.

- Whistleblower Protection:

  - Employees who report non-compliance with AI safety protocols are protected from retaliation, ensuring transparency and accountability in AI development.

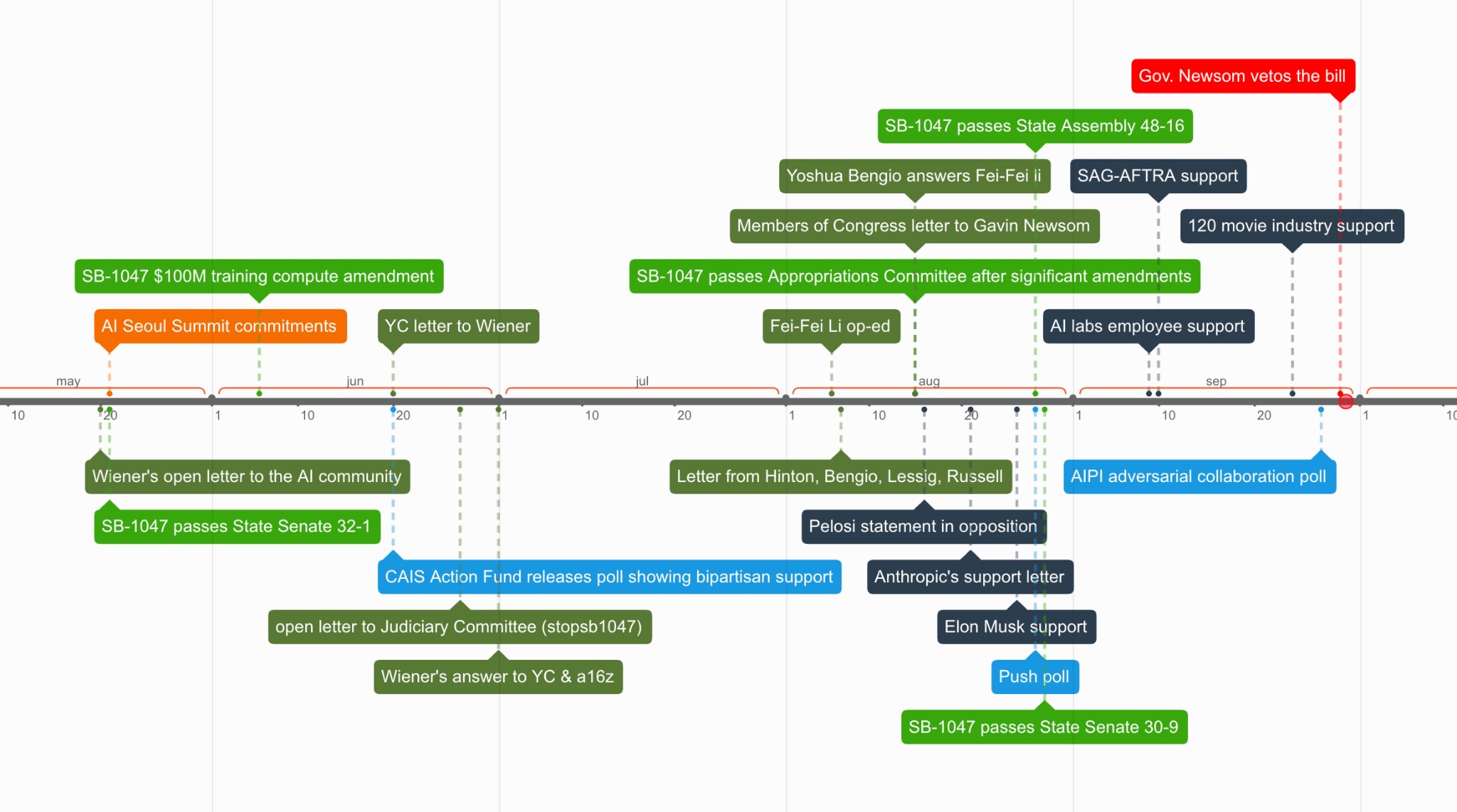

SB-1047's history is long and complicated[2]. It went through a total of 11 amendments before it finally ended up on the desk of California governor Gavin Newsom. Many of these changes were due to pressure from frontier AI labs, especially the less than warm ("cautious") support from Anthropic, which Scott Wiener and the broader AI safety community had expected to be more supportive of the bill. I briefly cover the changes below.

Change in Definition of Covered Models

- The definition of covered models was changed to add "The cost of training exceeds $100 million" as another criterion. So for a model to qualify as covered, it would now need BOTH more than 10^26 FLOPs of training compute and a training cost of more than 100 million dollars.

- The change was prompted to ensure that the regulation targeted only the most powerful AI models while avoiding undue burden on smaller developers.
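To make the difference between the two definitions concrete, here is a minimal sketch of the threshold logic as summarized in this post, not the bill's legal text. The `TrainingRun` type, the function names and the example models are hypothetical, and the first draft's alternative "performs similarly on industry benchmarks" criterion is deliberately left out.

```python
from dataclasses import dataclass

FLOP_THRESHOLD = 1e26               # training compute threshold cited in the bill
COST_THRESHOLD_USD = 100_000_000    # training cost threshold added by amendment


@dataclass(frozen=True)
class TrainingRun:
    """Hypothetical record of a training run, for illustration only."""
    name: str
    training_flops: float
    training_cost_usd: float


def is_covered_first_draft(run: TrainingRun) -> bool:
    # First draft (simplified): compute alone decides coverage.
    return run.training_flops > FLOP_THRESHOLD


def is_covered_amended(run: TrainingRun) -> bool:
    # Amended version: BOTH the compute and the cost thresholds must be exceeded.
    return (run.training_flops > FLOP_THRESHOLD
            and run.training_cost_usd > COST_THRESHOLD_USD)


# Made-up examples; none of these correspond to real models.
runs = [
    TrainingRun("hypothetical-frontier", 2e26, 150_000_000),
    TrainingRun("hypothetical-efficient", 2e26, 60_000_000),
    TrainingRun("hypothetical-small", 1e24, 2_000_000),
]

for run in runs:
    print(run.name, is_covered_first_draft(run), is_covered_amended(run))
```

The second made-up run is the interesting case: it crosses the compute threshold but not the cost threshold, so it would have been covered under the first draft and falls out of scope after the amendment.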

Removal of the proposed Frontier Model Division

- The Frontier Model Division, which was proposed to be added as part of the California Department of Technology and tasked with overseeing the development of frontier AI models and their compliance, was scrapped and the responsibility moved to existing departments.

- The change was mainly prompted by Anthropic's letter, which raised concerns about the creation of a new regulatory body and the potential for overreach.

Removal of pre-harm enforcement

- The bill was amended so that the Attorney General can only sue companies once critical harm is imminent or has already occurred, rather than for negligent pre-harm safety practices.

- Again, this change was prompted by Anthropic and other stakeholders, who argued that pre-harm enforcement was too prescriptive and could stifle innovation.

Relaxation for operators of computing clusters

- The mandate for operators to implement a full shutdown capability was removed. Instead, the bill now required operators to implement written policies and procedures when a customer utilizes compute resources sufficient to train a covered model.

- This change was prompted by pressure from tech giants that don't train frontier models but supply the resources (e.g. Nvidia).

Game of Thrones

One of the most interesting things about the whole SB-1047 story is how the AI world and the larger tech world reacted to it, and the factions that formed.

House AI Safety

- Elon Musk: The most prominent and also the most unlikely figure who came out in support of SB-1047 was Elon Musk. On Aug 26, 2024, he tweeted "This is a tough call and will make some people upset, but, all things considered, I think California should probably pass the SB 1047 AI safety bill". This was unexpected since Elon had been a huge supporter of AI acceleration and e/acc. Many saw this as a continuation of his feud with Sam Altman and an attempt to get back at OpenAI[3].

- Anthropic: Though Anthropic was initially cautious in its support, after a number of amendments that it had suggested were added, it released another open letter stating that the "bill is substantially improved, to the point where we believe its benefits likely outweigh its costs".

- Geoffrey Hinton & Yoshua Bengio: In his article in Fortune (written as a response to Fei-Fei Li's comments), Bengio said "I believe this bill represents a crucial, light touch and measured first step in ensuring the safe development of frontier AI systems to protect the public." Hinton and Bengio also wrote an open letter to Gavin Newsom stating, "As senior artificial intelligence technical and policy researchers, we write to express our strong support for California Senate Bill 1047... It outlines the bare minimum for effective regulation of this technology" and, "Some AI investors have argued that SB 1047 is unnecessary and based on science fiction scenarios. We strongly disagree. The exact nature and timing of these risks remain uncertain, but as some of the experts who understand these systems most, we can say confidently that these risks are probable and significant enough to make safety testing and common-sense precautions necessary"

- Hollywood: By this time, AI had already become a topic of contention in Hollywood. The Screen Actors Guild had led strikes to push Hollywood studios to ensure that generative AI wouldn't be used in the entertainment industry. "Artists 4 Safe AI" wrote an open letter to Newsom in support of SB-1047, stating "We believe powerful AI models may pose severe risks, such as expanded access to biological weapons and cyberattacks ... SB 1047 would implement the safeguards these industry insiders are asking for".

House AI acceleration

- OpenAI: OpenAI released an open letter stating its opposition to SB-1047. The letter argued that, given the national security implications of frontier AI, regulation should be handled federally rather than at the state level. It also argued that such a bill would hurt California's economy: "High tech innovation is the economic engine that drives California's prosperity ... If the bill is signed into place it is a real risk that companies will decide to incorporate in other jurisdictions or simply not release models in California"

- Meta & Google: Meta released an open letter expressing their significant concerns with the bill and stating that "SB-1047 fundamentally misunderstands how advanced AI systems are built and therefore would deter AI innovation in California". "The bill imposes liability on model developers for downstream harms regardless of whether those harms relate to how the model was used rather than how it was built, effectively making them liable for scenarios they are not best positioned to prevent". Google expressed similar concerns.

- Fei-Fei Li & Open-Source Community: Fei-Fei Li, who is considered the "Godmother of AI", wrote in her Fortune opinion piece that "If passed into law, SB-1047 will harm our budding AI ecosystem, especially the parts of it that are already at a disadvantage to today’s tech giants: the public sector, academia, and “little tech.” SB-1047 will unnecessarily penalize developers, stifle our open-source community, and hamstring academic AI research, all while failing to address the very real issues it was authored to solve."

- Nancy Pelosi: Nancy Pelosi is a prominent figure in the Democratic Party, and her opposition to the bill was seen as significant given that it was introduced by a fellow California Democrat. In her open letter, she states "The view of many of us in Congress is that SB 1047 is well-intentioned but ill informed ... SB-1047 would have significant unintended consequences that would stifle innovation and will harm the U.S. AI ecosystem".

The Enemy of my Enemy is my Friend

I find these factions fascinating because so many people were fighting for so many different things. The Hollywood letter in support of SB-1047 talks about bio and nuclear weapons. I don't believe the people in Hollywood were really concerned about these issues; what prompted them to sign the letter was that they were fighting for something even more important to them, which is the future of work. While Bengio and Hinton were legitimately concerned about the existential risks that these AI systems present, Elon's support for the bill was seen less as a reflection of concern about AI risk and more as an attempt to get back at OpenAI and to even the field as xAI plays catch-up.

Similarly, the people who were against SB-1047 were fighting for different things as well. The open-source community saw it as an ideological battle; they were opposed to any kind of governmental control over AI training. This is apparent from the fact that they still opposed SB-1047 after the amendments, even though they would no longer have been affected. Meta and Google, meanwhile, saw it as an unnecessary roadblock that could lead to further scrutiny of their AI models and business practices.

OpenAI's stance is the most interesting one here, because Sam Altman didn't oppose regulation as such: he opposed state-level regulation but was in favor of federal regulation. OpenAI had by then become really important for national security. In November 2023, during a period of internal upheaval, OpenAI appointed Larry Summers to its board of directors. Summers had previously served as U.S. Treasury Secretary and Director of the National Economic Council, and his appointment was seen as a strategic move to strengthen OpenAI's connections with government, given his deep-rooted ties within U.S. policy circles and his influence over economic and regulatory affairs. In June 2024, OpenAI appointed former NSA director Paul Nakasone to its board. With figures like Summers and Nakasone on board, OpenAI was now operating at a much higher level of influence; for them, federal regulation would be favorable, and they wanted to avoid the complications an unfavorable state bill would introduce.

Part - IV (The Sin of Sloth)

After passing through the California Senate and Assembly, the bill was finally presented to Governor Newsom on Sept 9, 2024. On Sept 29, Newsom, under pressure from Silicon Valley and members of his own party like Pelosi, vetoed the bill, stating "By focusing only on the most expensive and large-scale models, SB 1047 establishes a regulatory framework that could give the public a false sense of security about controlling this fast-moving technology" and "While well-intentioned, SB 1047 does not take into account whether an AI system is deployed in high-risk environments, involves critical decision-making or the use of sensitive data".

So after 11 amendments, 7 months, 2 votes and 1 veto, the saga of SB-1047 finally came to an end. It was an interesting time in AI: the debate around the bill helped uncover people's revealed preferences, what they really thought about where AI was heading and how they were positioned in the race to AGI.

For me, I surprisingly (and for the first time) found the most common ground with Hollywood's stance. Unlike Hinton and others, I don't feel there is an existential AI risk, at least not in the near future. I don't believe that super-smart AI will suddenly allow bad actors to cause great harm that they couldn't cause previously, in the same way that having internet access didn't make everyone build a fighter jet in their backyard. Building nuclear or biological weapons is more about capital and actual resources than about just knowing how to build them.

What I fear the most is the way in which super-smart AI[4] is going to completely disrupt the way humans work, and this is going to happen within the next 5 years. I feel people and our current systems are not ready for what will happen when human-level intelligence becomes too cheap to meter. A world where people can hire a model as smart as Terence Tao for 50 dollars per month is extremely scary. This is why I understand why Hollywood protested and pushed for bills that safeguard it from the use of generative AI. If I were in charge, I would have passed SB-1047, not because I fear nuclear or bio weapons, but to ensure that the world gets enough time to adjust before AGI hits us.

[1] Sam Altman in his Reddit AMA said "We were very surprised by the level of interest and engagement .... We didn't expect this level of enthusiasm."

[2] I found this substack by Michael Trazzi particularly helpful to figure out how the SB-1047 saga played out (substack link)

[3] People saw this move by Elon Musk who had increasingly become more active in politics as a way to get indirect regulatory control over OpenAI.

[4] I keep using super-smart AI & AGI interchangeably. Because I am not sure when super-smart AI ends and where AGI starts.

1 comment

comment by Nathan Helm-Burger (nathan-helm-burger) · 2024-11-24T06:10:26.284Z

The problematic things with bioweapons are:

1. unlike nuclear explosives or even conventional explosives, the key ingredients are just... us. Life. Food. Amino Acids.

2. They can be rapidly self-replicating. Even if you make just a tiny amount of a potent self-replicating bioweapon, you can cause enormous amounts of harm.

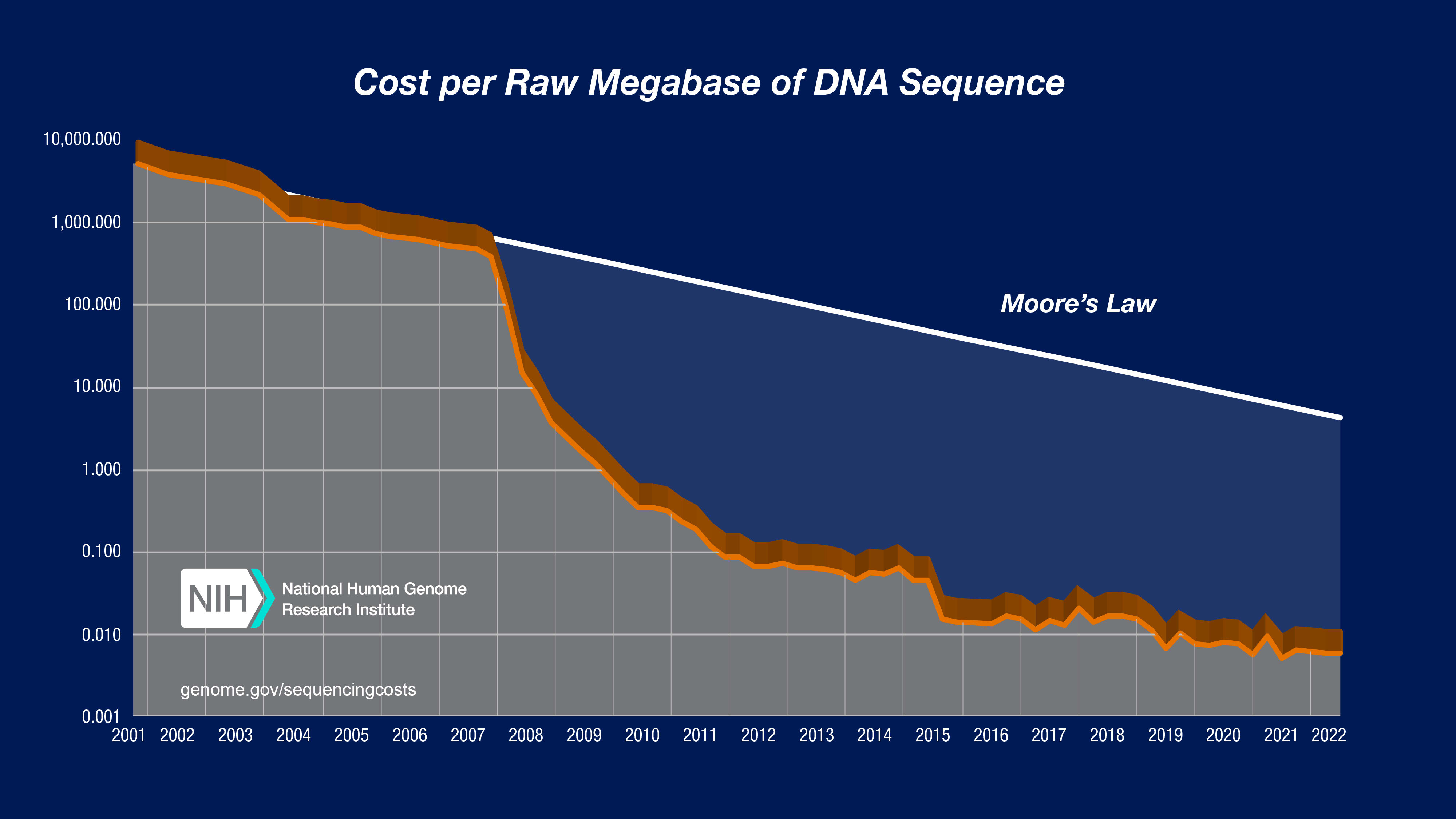

As for making them... thankfully yes, it's still hard to do. The cost and difficulty have been dropping really rapidly though. AI is helping with that.

This plot shows DNA sequencing (reading) costs, but the trends are similar for the cost and difficulty of producing specific DNA sequences and engineering viruses.