[updated] how does gpt2's training corpus capture internet discussion? not well

post by nostalgebraist · 2020-07-27T22:30:07.909Z


[Updated to correct my earlier claim that this doesn't affect GPT-3. Apparently it does?]

I’m out sick today, but had enough energy to do some GPT-related fiddling around.

This time, I was curious what “internet discussions” tended to look like in the original training corpus. I thought this might point to a more natural way to represent tumblr threads for @nostalgebraist-autoresponder than my special character trick.

So, I looked around in the large shard provided as part of https://github.com/openai/gpt-2-output-dataset.

Colab notebook here, so you can interactively reproduce my findings or try similar things.

---

The results were … revealing, but disappointing. I did find a lot of discussion threads in the data (though I couldn’t find many chatlogs). But:

- almost all of it is from phpBB-like forums (not bad per se, but weird)

- it chooses a single post from each page and makes it “a text,” ignoring all the other posts, so GPT-2 has no way to learn how users talk to each other :(

- sometimes the post quotes another user… and in that case, you can’t see where the quote ends and the post begins

- lots of hilarious formatting ugliness, like “Originally Posted by UbiEpi Go to original post Originally Posted by”

- about 0.28% of the corpus (~22,000 docs in the full WebText) consists of these mangled forum posts

- also, just as a chilling sidenote, about 0.30% of the corpus (~25,200 docs in the full WebText) is badly mangled pastebin dumps (all newlines removed, etc.). There's no overlap between these and the mangled forum threads, so between them that’s ~0.58% of the corpus.

- remember: the vast majority of the corpus is news and the like, so these percentages aren’t as small as they might sound
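For readers who want to try something similar, here is a minimal sketch of the kind of filter these bullets describe, assuming the corpus is available as a list of document strings. The marker strings come from the mangled examples in this post; the `min_chars` threshold and the "no newlines at all" test for pastebin dumps are illustrative assumptions, not the filter actually used in the Colab notebook.

```python
# Hypothetical marker list, taken from boilerplate visible in the mangled
# forum examples in this post (not the notebook's actual filter).
FORUM_MARKERS = (
    "Originally Posted by",
    "Go to original post",
    "Quote this Post",
)


def looks_like_mangled_forum(text: str) -> bool:
    """Flag a document if it contains any known forum boilerplate marker."""
    return any(marker in text for marker in FORUM_MARKERS)


def looks_like_mangled_paste(text: str, min_chars: int = 2000) -> bool:
    """Flag long documents with no newlines at all (assumed pastebin-style damage)."""
    return len(text) >= min_chars and "\n" not in text


def classify(docs):
    """Count forum-like and paste-like documents; a document may match neither."""
    forum = sum(looks_like_mangled_forum(d) for d in docs)
    paste = sum(looks_like_mangled_paste(d) for d in docs)
    return forum, paste


docs = [
    "Originally Posted by UbiEpi Go to original post Originally Posted by ...",
    "x = 1; " * 400,  # 2,800 characters with no newline
    "A normal news article.\n\nA second paragraph.",
]
print(classify(docs))  # (1, 1)
```

Dividing each count by `len(docs)` gives the corpus fractions quoted above; on real data, a newline-free heuristic like this one will also catch some legitimate single-paragraph pages, so its hits need spot-checking.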

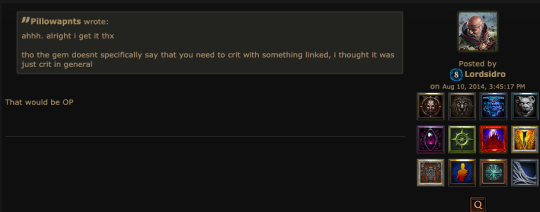

For example, from this thread it picks the one post

and renders it as

> Pillowapnts
>
> tho the gem doesnt specifically say that you need to crit with something linked, i thought it was just crit in general ahhh. alright i get it thxtho the gem doesnt specifically say that you need to crit with something linked, i thought it was just crit in general
>
> That would be OP That would be OP Posted by Lordsidro
>
> on on Quote this Post
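The doubled phrases in that rendering ("That would be OP That would be OP", "on on") are easy to spot mechanically. Below is a small, hypothetical helper that reports word n-grams repeated back to back; it only illustrates the symptom and is not how the corpus was analyzed.

```python
def repeated_runs(text: str, max_n: int = 8):
    """Return word n-grams (n <= max_n) that occur twice in a row, longest first."""
    words = text.split()
    found = []
    for n in range(max_n, 0, -1):
        for i in range(len(words) - 2 * n + 1):
            # Compare the n words starting at i with the n words right after them.
            if words[i:i + n] == words[i + n:i + 2 * n]:
                found.append(" ".join(words[i:i + n]))
    return found


print(repeated_runs("That would be OP That would be OP Posted by Lordsidro"))
print(repeated_runs("on on Quote this Post"))
```

It would not flag the duplicated sentence in the second paragraph: the two copies are longer than `max_n` words and are not word-for-word adjacent, since they are joined through the fused token "thxtho".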

This is apparently standard behavior for the Newspaper content extractor they used, and I could reproduce it exactly. (Its heuristics grab a single post when looking for the “part the content is in.”)
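To see why a content extractor can end up with exactly one post, here is a deliberately simplified "keep the biggest text block" heuristic built on Python's standard `html.parser`. It is a toy under stated assumptions, not Newspaper's actual scoring, and the two-post page is made up for illustration. It shows the failure mode, though: when each post sits in its own `<div>`, the single densest `<div>` wins and the rest of the thread is dropped.

```python
from html.parser import HTMLParser


class DivTextCollector(HTMLParser):
    """Collect the text sitting directly inside each <div>; nested <div>s get their own entry."""

    def __init__(self):
        super().__init__()
        self.open_divs = []  # indices into self.blocks for the currently open <div>s
        self.blocks = []     # one list of text chunks per <div>, in document order

    def handle_starttag(self, tag, attrs):
        if tag == "div":
            self.blocks.append([])
            self.open_divs.append(len(self.blocks) - 1)

    def handle_endtag(self, tag):
        if tag == "div" and self.open_divs:
            self.open_divs.pop()

    def handle_data(self, data):
        # Attribute text to the innermost open <div>; skip whitespace-only runs.
        if self.open_divs and data.strip():
            self.blocks[self.open_divs[-1]].append(data.strip())


def extract_main_block(html: str) -> str:
    """Toy extractor: return the text of the single <div> holding the most text."""
    parser = DivTextCollector()
    parser.feed(html)
    parser.close()
    texts = [" ".join(chunks) for chunks in parser.blocks]
    return max(texts, key=len, default="")


# Hypothetical forum page: every post lives in its own <div>.
page = """
<div class="thread">
  <div class="post">Pillowapnts: tho the gem doesnt specifically say that you need to crit with something linked</div>
  <div class="post">Lordsidro: That would be OP</div>
</div>
"""
print(extract_main_block(page))
```

Only the longer post survives; the reply, and any sign that a conversation took place, is discarded.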

[This paragraph was incorrect; see Update below.] Does this affect GPT-3? Probably not? I don’t know how Common Crawl does text extraction, but at the very least, it’ll give you the whole page’s worth of text.

Update: Looked into this further, and I think GPT-3 suffers from this problem to some extent as well.

The Colab notebook has the details, but some stats here:

- I used the small GPT-3 samples dataset as a proxy for what GPT-3's corpus looks like (it's a skilled predictor of that corpus, after all)

- The same filter that found malformed text in ~0.3% of the WebText shard also found it in 6/2008 (~0.3%) of GPT-3 samples.

- Sample size here is small, but with 6 examples I at least feel confident concluding it's not a fluke.

- They exhibit all the bad properties noted above. See for example Sample 487 with "on on Quote this Post" etc.

- For the official GPT-2 sample dataset (1.5B, no top-k), the filter matches 644/260000 samples (~0.25%, approximately the same).

- In short, samples from both models produce "malformed forum" output at about the same rate as its actual frequency in the WebText distribution.

- It's a bit of a mystery to me why GPT-3 and GPT-2 assign similar likelihood to such texts.

- WebText was in the GPT-3 training corpus (as the expanded WebText2), but was only 22% of the distribution (Table 2.2 in the paper).

- Maybe Common Crawl mangles the same way? (I can't find good info on Common Crawl's text extraction process)

- It's possible, though seems unlikely, that the "quality filtering" described in Appendix A made this worse by selecting WebText-like Common Crawl texts

- On the other hand, the pastebin stuff is gone! (380/260000 GPT-2 samples, 0/2008 GPT-3 samples.) Your guess is as good as mine.

- I'd be interested to see experiments with the API or AI Dungeon related to this behavior. Can GPT-3 produce higher-quality forum-like output? Can it write coherent forum threads?
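Because several of these comparisons rest on small counts, one quick way to gauge them is a binomial confidence interval. The sketch below computes Wilson score intervals for the counts quoted in this update. The Wilson interval is a standard choice for proportions near zero and is my substitution here; the original analysis does not report using it.

```python
from math import sqrt


def wilson_interval(k: int, n: int, z: float = 1.96):
    """Wilson score interval for a binomial proportion k/n (z = 1.96 gives ~95%)."""
    p = k / n
    denom = 1 + z**2 / n
    center = (p + z**2 / (2 * n)) / denom
    half = z * sqrt(p * (1 - p) / n + z**2 / (4 * n**2)) / denom
    # Clamp to [0, 1] to absorb floating-point error when k == 0 or k == n.
    return max(0.0, center - half), min(1.0, center + half)


# Counts quoted in the update above.
counts = {
    "GPT-3 samples, forum filter": (6, 2008),
    "GPT-2 samples, forum filter": (644, 260000),
    "GPT-3 samples, pastebin filter": (0, 2008),
    "GPT-2 samples, pastebin filter": (380, 260000),
}

for name, (k, n) in counts.items():
    lo, hi = wilson_interval(k, n)
    print(f"{name}: {k}/{n} = {k / n:.3%} (95% CI {lo:.3%} to {hi:.3%})")
```

At n = 2008 the GPT-3 intervals come out wide, so they are better read as rough bounds than as precise rates.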

3 comments


comment by gwern · 2020-08-04T01:27:51.608Z

It can't be too bad, though, because I have seen GPT-3 generate fairly plausible forum discussions with multiple participants, and how would it do that if it only ever saw single-commenter documents?

comment by gwillen · 2020-08-04T02:50:51.351Z

Do you have examples of that kind of output for comparison? (Is it reproducing formatting from an actual forum of some kind, or the additional "abstraction headroom" over GPT-2 allowing GPT-3 to output a forum-type structure without having matching examples in the training set?)
