'Chat with impactful research & evaluations' (Unjournal NotebookLMs)

post by david reinstein (david-reinstein) · 2024-09-28T00:32:16.845Z


Post status: First-pass, looking for feedback, aiming to build and share something more polished and comprehensive

The Unjournal: background, progress, push for communications and impact

The Unjournal is a nonprofit that publicly evaluates and rates research, focusing on impact.

We now have about 30 "evaluation packages" posted (here; they are also indexed in the scholarly ecosystem). Each package links the (open-access) paper and contains 1-3 expert evaluations and ratings of the work, as well as a synthesis and the evaluation manager's report. Some also include author responses. We're working to make this content more visible and more useful, including through accessible public summaries.

Also see:

- our Pivotal Questions initiative

- our regularly updated 'research with potential for impact' database

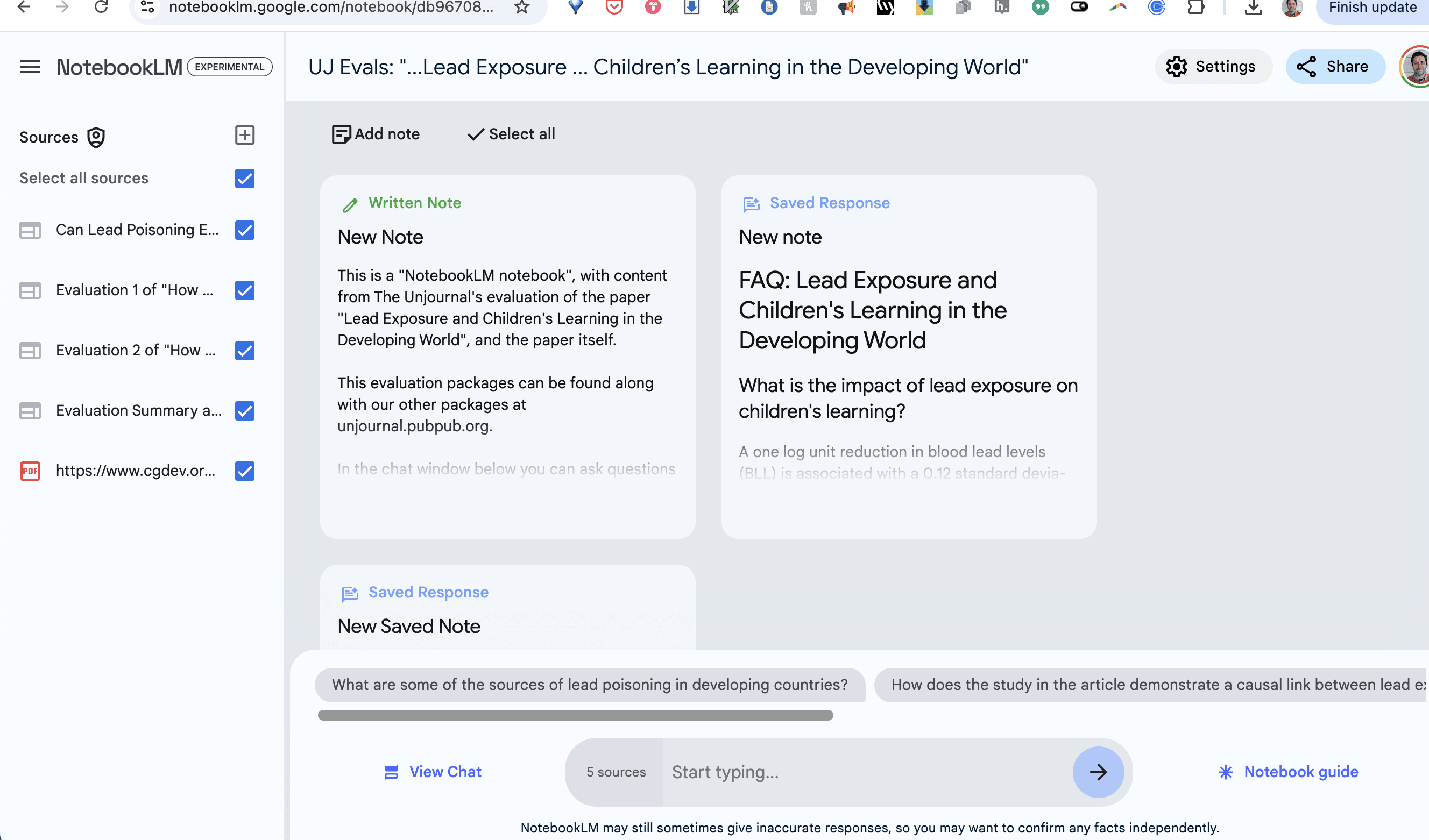

Notebook/chatbot exploration (esp. NotebookLM)

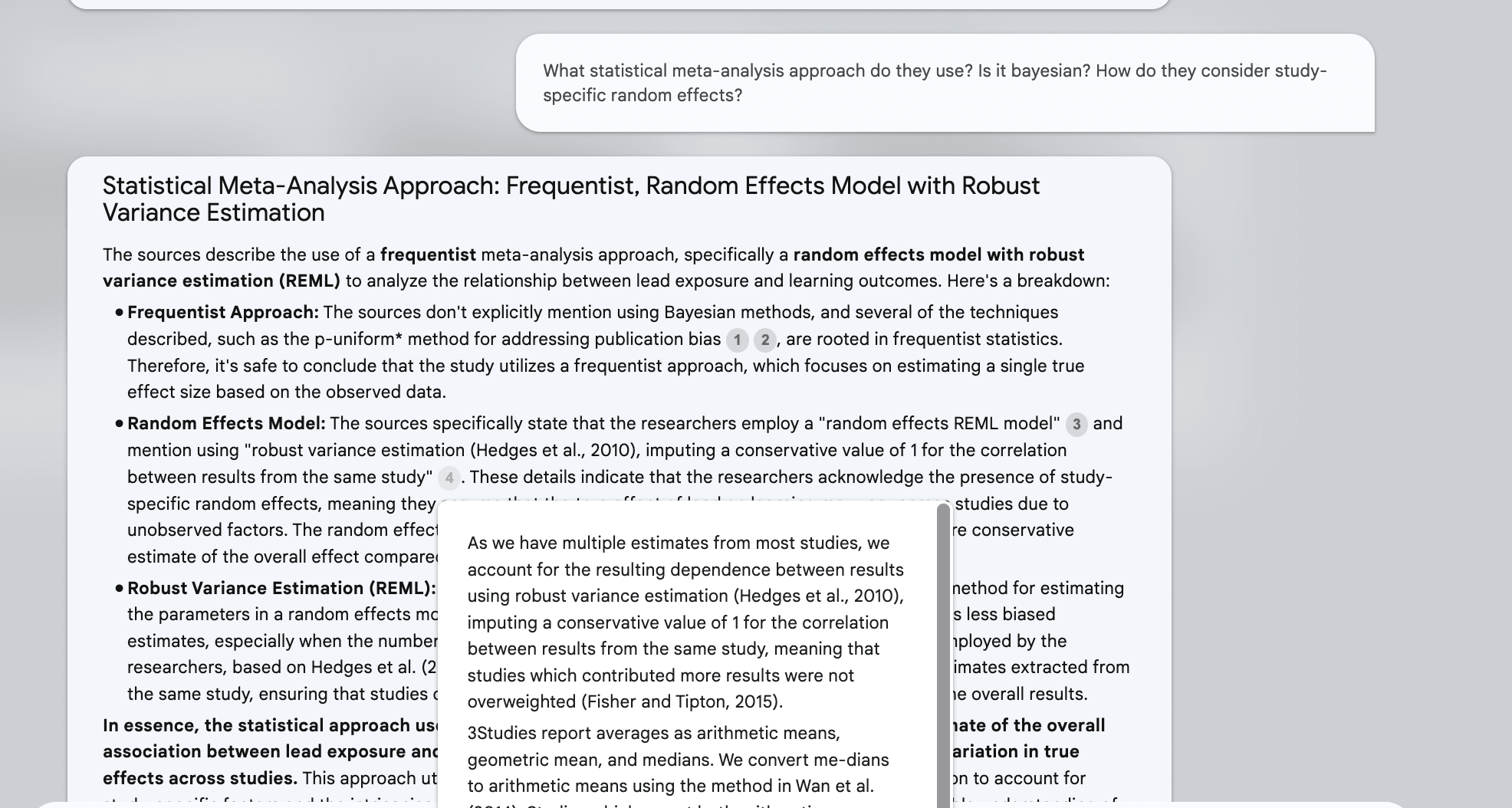

We're considering (and building) another tool: a notebook/chatbot that lets you ask questions about the research and its evaluations. We're trialing a few approaches (e.g., custom setups using AnythingLLM), and we wanted to get early thoughts and opinions.

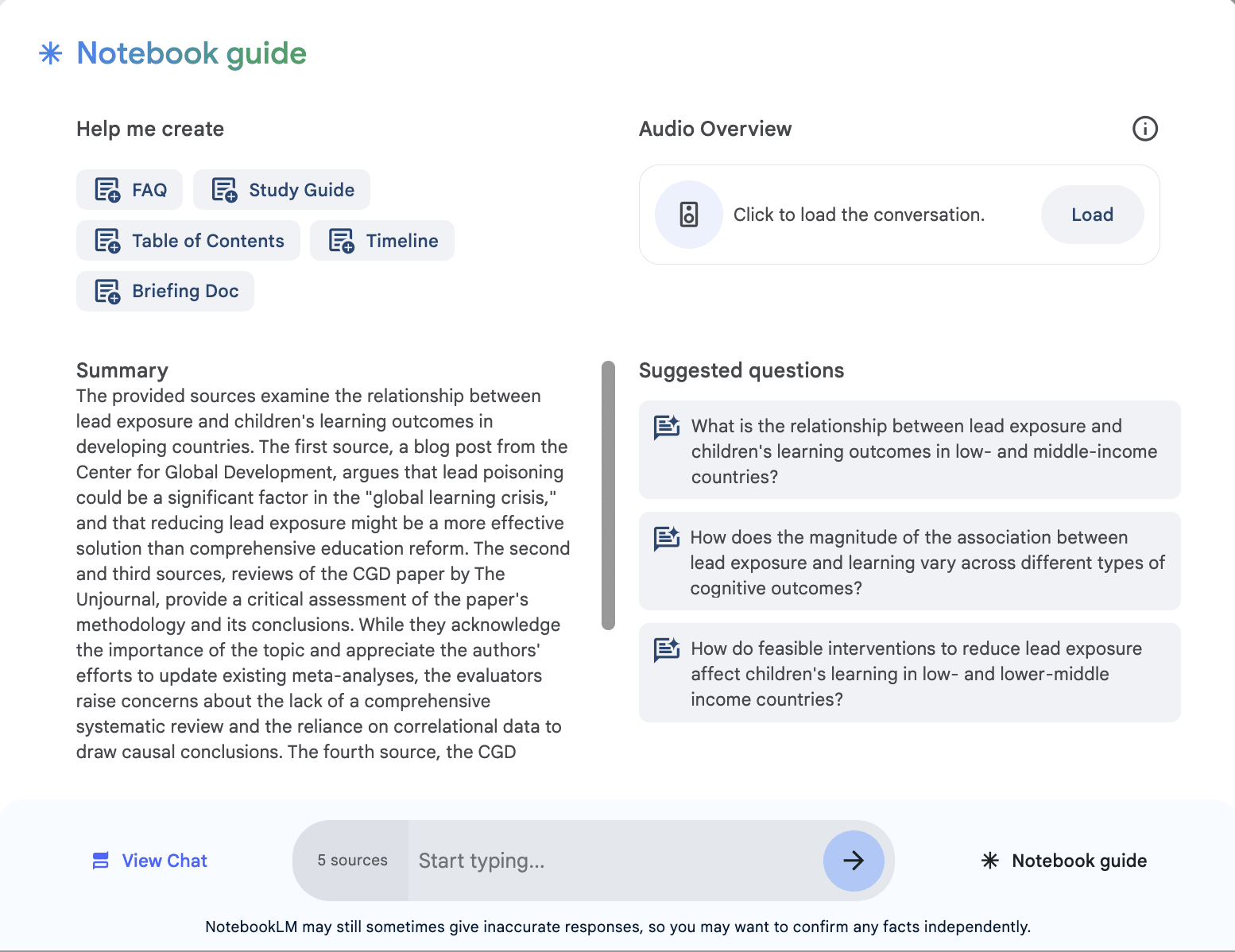

NotebookLM seems particularly easy to set up and is yielding some promising results. The tool has drawn attention on academic social media for its AI-generated podcasts about research papers (aimed at a lay audience, cheerleading in tone, and with some inaccuracies; e.g., see here and here, with caution). For our purposes, however, its notebook chat feature seems more useful.

We can upload or scrape a range of sources, including the research paper itself, the evaluations, author responses, and syntheses, and even related podcast transcripts and blog posts.

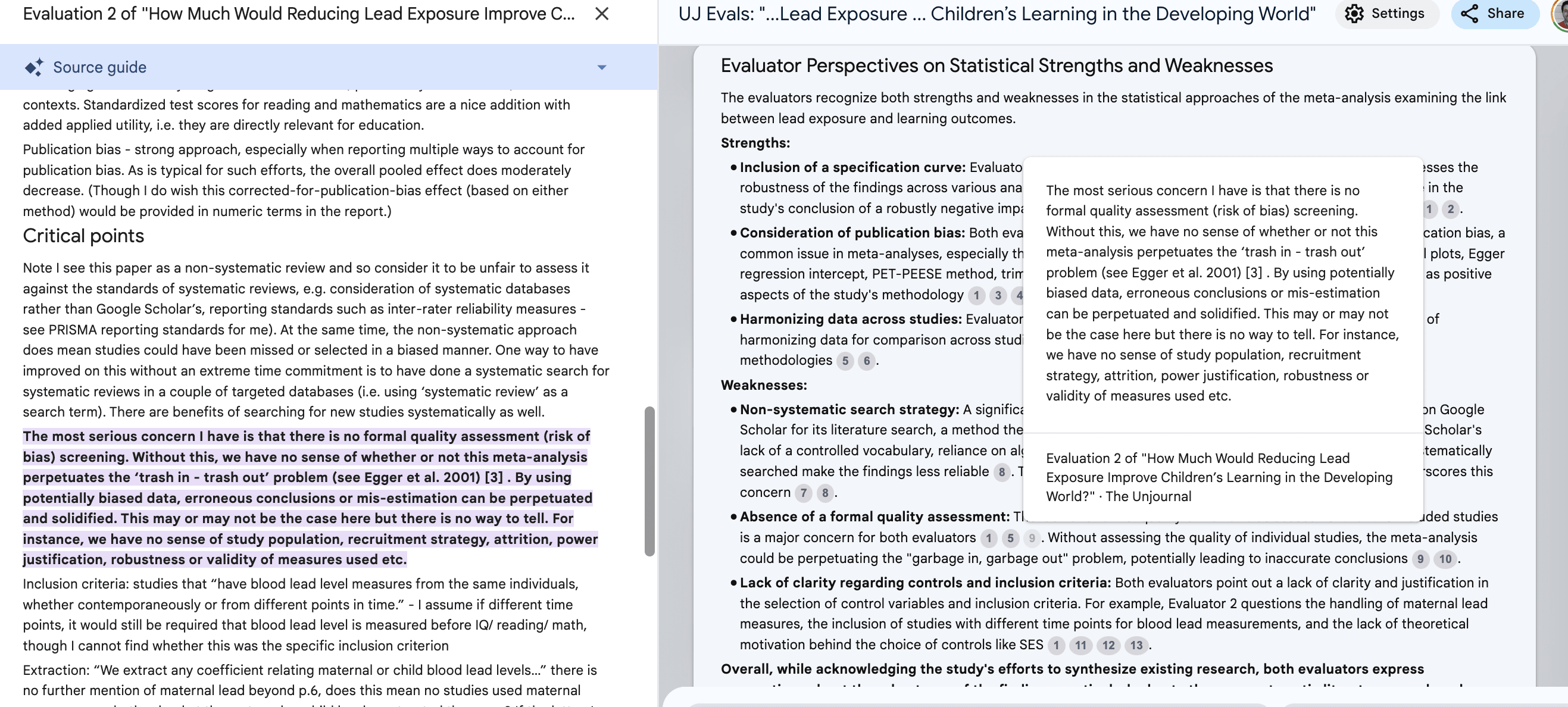

It seems to give fairly useful and accurate answers. It sometimes confuses, for example, 'what we suggest future evaluators do' with 'what the evaluators actually wrote'. However, it tracks and links the sources for each answer, so you can double-check it fairly easily.

Some limitations: The formatting and UX leave a bit to be desired (e.g., you need to click 'notebook guide' a lot). It can be hard to see exactly what the referenced content is, especially when it comes from a scraped website, where the formatting can be odd. I don't see an easy way to upload or download content in bulk. Saved 'notes' lose the links to their references.

So far we've made notebooks:

- For the aforementioned 'Lead' paper

- For our evaluations of "Forecasting Existential Risks: Evidence from a Long-Run Forecasting Tournament" (also incorporating the 80,000 Hours podcast episode on this paper)

- For Banerjee et al. ("Selecting the Most Effective Nudge: Evidence from a Large-Scale Experiment on Immunization")

To request access

At the moment, the notebooks can't be shared publicly. If you share a non-institutional email address, we can give you access to specific notebooks as we create them. To request access, complete the 3-question request form here.

Would love your feedback (thanks!)

We've only created a few of these so far, and we could create more without much effort. But before we dive in, we're looking for suggestions on:

- Are these useful? How could they be made more useful?

- Is NotebookLM the best tool here? What else should we consider?

- How to best automate this process?

- Any risks we might not be anticipating?
