[Translation] In the Age of AI, Don't Look for Unicorns

Post by mushroomsoup · 2025-02-07


Translator's note: This is another article from Jeffrey Ding's Around the Horn. In short, the article suggests that a good metric of AI adoption is the average number of tokens a company uses per day, and that we should pay close attention to companies that use more than one billion tokens every day. In China, more than 200 companies now meet this criterion. The article gives examples of such companies (e.g. Homework Helper, Dream Island, and Volcano Engine) across a variety of fields and discusses their use cases.

Authors: QbitAI

Source: QbitAI

Date: January 22, 2025

Original Mandarin: AI时代不看独角兽,看10亿Tokens日均消耗

Unicorns are startups that reach a valuation of more than $1 billion within 10 years of their founding. These companies are industry leaders with enormous market potential that drive technological and structural innovation.

In the era of LLMs, a similar threshold is emerging: average daily usage of one billion tokens as a baseline for a successful AI business.

QbitAI, summarizing the market trends of the second half of 2024, found that at least 200 Chinese companies have exceeded this threshold. These companies span enterprise services, companionship, education, Internet, games, and direct-to-consumer businesses.

This suggests that the war for dominance in the era of large models has come to an end and that the most valuable business models are becoming clear.

The evidence is that pioneers in various fields have already found use cases that require at least one billion tokens per day.

But it’s reasonable to ask: why use the daily average use of one billion tokens as a threshold?

Judge a company by its daily active users and the number of tokens they consume

First, let's calculate what “an average daily consumption of one billion tokens” entails.

Referring to the DeepSeek API:

An English character is about 0.3 tokens.

A Chinese character is about 0.6 tokens.

For Chinese-language content, one billion tokens corresponds to more than 1.6 billion Chinese characters. For comparison, "Dream of the Red Chamber"[1] is about 700,000-800,000 characters long, so one billion tokens a day is equivalent to the AI processing the content of more than 2,000 copies of "Dream of the Red Chamber" every day.

If we estimate that an AI model processes an average of 1,000 tokens per interaction, one billion tokens means that the AI completes one million conversations every day.

For a customer-facing application, since each daily active user has at least one interaction, one million interactions corresponds to at most one million DAU[2].
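The conversions above are simple division. A minimal sketch, assuming only the ratios quoted in this section (about 0.6 tokens per Chinese character from the DeepSeek API guidance, 700,000-800,000 characters for the novel, and 1,000 tokens per interaction); the constant and function names are illustrative:

```python
from __future__ import annotations

# Back-of-the-envelope conversions using the article's rough ratios.
TOKENS_PER_DAY = 1_000_000_000
TOKENS_PER_CHINESE_CHAR = 0.6           # DeepSeek's approximate ratio
TOKENS_PER_INTERACTION = 1_000          # the article's assumed average
RED_CHAMBER_CHARS = (700_000, 800_000)  # approximate length of the novel


def chars_per_day(tokens: int = TOKENS_PER_DAY) -> float:
    """Chinese characters represented by a given number of tokens."""
    return tokens / TOKENS_PER_CHINESE_CHAR


def novel_copies_per_day(tokens: int = TOKENS_PER_DAY) -> tuple[float, float]:
    """Range of 'Dream of the Red Chamber' copies the tokens could cover."""
    chars = chars_per_day(tokens)
    shortest, longest = RED_CHAMBER_CHARS
    # Dividing by the longer edition gives the smaller number of copies.
    return chars / longest, chars / shortest


def interactions_per_day(tokens: int = TOKENS_PER_DAY) -> float:
    """Conversations per day at the assumed tokens per interaction."""
    return tokens / TOKENS_PER_INTERACTION


if __name__ == "__main__":
    print(f"{chars_per_day():,.0f} characters")
    low, high = novel_copies_per_day()
    print(f"{low:,.0f}-{high:,.0f} copies of the novel")
    print(f"{interactions_per_day():,.0f} interactions (at most this many DAU)")
```

With these inputs the figures come out to roughly 1.67 billion characters, about 2,100-2,400 copies of the novel, and one million interactions per day.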

Take Zuoyebang[3] as an example. Using only the data from its overseas product Question.AI, the daily token consumption of the business is close to one billion.

This AI educational app, built on an LLM, supports features such as photo-based question search and AI teaching assistants. It can also answer questions as a chatbot.

The app generates answers from the homework question and its context, using about 500 tokens per round of dialogue. Users average about three rounds of dialogue per day. According to disclosures, Question.AI's DAU is nearly 600,000.

Based on this, we can infer that Question.AI's daily token consumption is close to one billion. At the same time, the company has deployed multiple AI applications and launched multiple AI learning devices. With these, the total daily token consumption will only increase.
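The Question.AI estimate is the product of three quoted figures. A short sketch using only the numbers disclosed in the text (the function name is illustrative, and the inputs are the article's, not independently verified):

```python
# Reproduces the article's estimate for Question.AI's daily token usage.
DAU = 600_000            # "nearly 600,000" daily active users
ROUNDS_PER_DAY = 3       # average rounds of dialogue per user per day
TOKENS_PER_ROUND = 500   # approximate tokens per round


def daily_tokens(dau: int, rounds: int, tokens_per_round: int) -> int:
    """Total tokens per day = users x rounds per user x tokens per round."""
    return dau * rounds * tokens_per_round


total = daily_tokens(DAU, ROUNDS_PER_DAY, TOKENS_PER_ROUND)
print(f"{total:,} tokens per day ({total / 1e9:.1f} billion)")
```

With these inputs the product is 900 million tokens a day, which the article rounds to "close to one billion."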

Let's look at a company providing AI companions: Zhumengdao[4].

According to recent disclosures, Zhumengdao has more than 500,000 creators, and 85% of its top 20 characters are original. A typical Zhumengdao user inputs upwards of 4,000 words a day, with more than 120 rounds of conversation on average.

Assuming the output is two to three times the size of the input, the average AI-generated content[5] per user per day is about 8,000-12,000 words.

According to the data obtained by QbitAI, Zhumengdao's DAU is currently 100,000. From this, we can infer that Zhumengdao's daily token consumption also exceeds one billion.
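A rough sketch of the Zhumengdao estimate. Assumptions not in the source: each "word" is one Chinese character, and DeepSeek's ratio of about 0.6 tokens per Chinese character applies. Counting only newly typed and newly generated text, this lands just under one billion tokens (about 0.72-0.96 billion); the conclusion that usage exceeds one billion presumably also reflects conversation history that a chat model typically re-reads on each of the 120+ rounds, which this sketch does not model.

```python
# Rough estimate for Zhumengdao from the article's figures.
# Assumption: one "word" = one Chinese character, ~0.6 tokens each.
DAU = 100_000
INPUT_CHARS_PER_USER = 4_000
OUTPUT_TO_INPUT_RATIOS = (2, 3)    # output is two to three times the input
TOKENS_PER_CHINESE_CHAR = 0.6


def new_text_tokens(ratio: int) -> float:
    """Tokens for fresh input plus generated output across all users.

    Ignores conversation history re-sent to the model each round,
    so it understates the tokens actually processed.
    """
    chars_per_user = INPUT_CHARS_PER_USER * (1 + ratio)
    return DAU * chars_per_user * TOKENS_PER_CHINESE_CHAR


for r in OUTPUT_TO_INPUT_RATIOS:
    print(f"output = {r}x input: {new_text_tokens(r) / 1e9:.2f} billion tokens")
```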

On devices, tokens are consumed in an even wider variety of ways.

In addition to smart assistants, phones with built-in AI capabilities have various features embedded into the system, including one-click photobomber removal, call summarization, and one-click item identification. According to OPPO's October 2024 report, Xiaobu Assistant's[6] monthly active users exceeded 150 million.

Furthermore, the token usage figures and customer use cases disclosed by cloud service providers show how widespread this benchmark has become.

In July 2024, Tencent Hunyuan disclosed that its service reached a daily volume of 100 billion tokens[7].

In August 2024, Baidu disclosed that Wenxin's[8] average daily API calls exceeded 600 million and its average daily tokens processed exceeded 1 trillion. By early November of the same year, average daily calls to Wenxin exceeded 1.5 billion, up from only 50 million a year earlier: a 30-fold increase in one year.
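The 30-fold figure can be checked directly. A small sketch, assuming the "average daily usage" numbers count requests rather than tokens and that load is spread evenly over the day (both simplifications; the helper names are illustrative):

```python
# Sanity checks on Baidu's Wenxin figures quoted above.
SECONDS_PER_DAY = 24 * 60 * 60


def growth_multiple(before: float, after: float) -> float:
    """How many times larger the later figure is than the earlier one."""
    return after / before


def per_second(daily_total: float) -> float:
    """Average rate implied by a daily total, assuming even load."""
    return daily_total / SECONDS_PER_DAY


multiple = growth_multiple(50e6, 1.5e9)         # a year earlier vs. early Nov 2024
rate = per_second(1.5e9)                        # average requests per second
vs_threshold = growth_multiple(1e9, 1e12)       # 1 trillion tokens vs. 1 billion
print(f"{multiple:.0f}x growth, about {rate:,.0f} requests per second")
print(f"1 trillion tokens/day is {vs_threshold:,.0f}x the one-billion threshold")
```

The daily total works out to roughly 17,000 requests per second on average, and Wenxin's August token volume alone is a thousand times the one-billion-token threshold.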

In July 2024, the average daily token usage of Doubao[9] by its corporate customers had increased 33-fold[10]. In December, ByteDance revealed that Doubao's average daily token usage exceeded 4 trillion tokens.

Who is using all these tokens?

According to the customer list on the official website of Volcano Engine[11], its users include leading companies in automotive manufacturing, finance, internet services, retail, smart devices, gaming, and healthcare. These are all prominent, well-known brands.

At the same time, the performance of members of this "one billion tokens club" in 2024 provides a good litmus test for the metric.

AI as the major contributor to growth

First, let's look at the rising star overseas: Homework Helper.

In September 2023, Homework Helper launched the Galaxy Model, which integrates years of accumulated educational data and associated AI algorithms. Designed especially for the field of education, it covers multiple disciplines, grade levels, and learning environments.

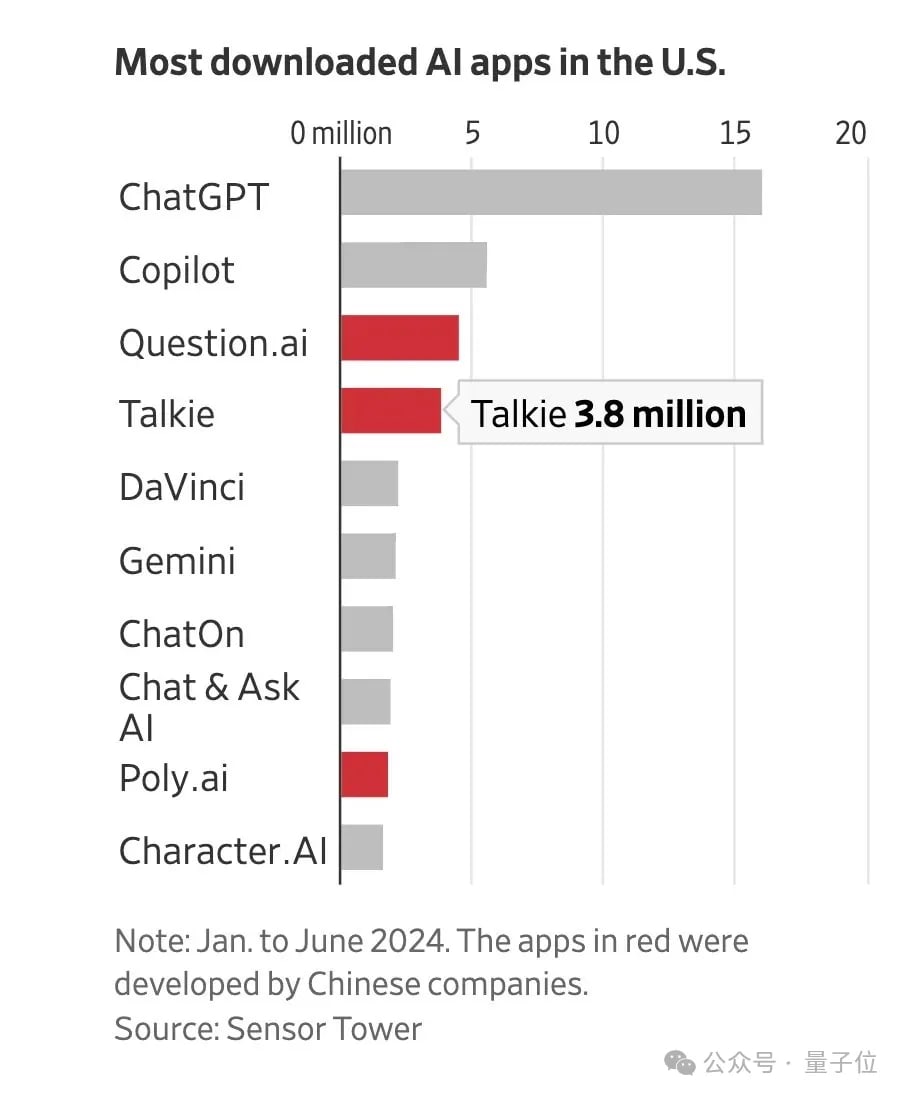

According to Sensor Tower's data for the first half of 2024, Homework Helper’s overseas product Question.AI ranked among the top three AI applications in the United States in terms of downloads and has a million MAUs[12]. Other AI chatbots, such as Poly.AI, also ranked among the top 30 in terms of downloads.

AI-powered educational devices, meanwhile, can provide a more direct source of revenue.

In July 2024, Frost and Sullivan[13] certified Homework Helper as China's top-ranked maker of AI-powered educational devices for the first half of the year. According to data from Luotu Technology, in the third quarter of 2024, Homework Helper's learning machine products ranked first in online sales with a 20.6% market share.

<Image of Frost and Sullivan Certificate Omitted>

In the field of enterprise services, Jinshanyun[14] stated clearly in its 2024 first-quarter financial report that total revenue increased 3.1% quarter-on-quarter, driven mainly by customers of its AI-related services.

Its cloud computing revenue was 1.187 billion yuan, a 12.9% increase from the previous quarter, contributed mainly by its AI customers. Even as the company proactively scaled back its CDN[15] services, this growth lifted overall cloud services revenue by 2.9% compared with the same quarter of 2023.

This trend continued into the second and third quarters. The company’s AI customers were still the main contributors to growth in its cloud computing business and overall revenue.

Let's look at the device most pertinent to everyday use: smartphones.

Since the AI/LLM boom began, OPPO has fully embraced the trend, introducing generative AI capabilities into ColorOS and its entire product line. In 2024, nearly 50 million OPPO users' phones were equipped with GenAI capabilities.

Counterpoint data shows that the top four[16] smartphone manufacturers in the world in 2024 were Apple, Samsung, Xiaomi, and OPPO.

According to Canalys, in 2024, 16% of global smartphone shipments were AI phones. Forecasts show that this proportion will increase to 54% by 2028. Between 2023 and 2028, the AI phone market is expected to grow at a CAGR[17] of 63%. This shift will first appear on high-end models.
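A CAGR compounds annually, so the total growth over n years is (1 + CAGR)^n. A minimal sketch applying the Canalys figure of 63% over the five years from 2023 to 2028 (the helper name is illustrative):

```python
def growth_factor(cagr: float, years: int) -> float:
    """Total multiple implied by compounding a constant annual growth rate."""
    return (1 + cagr) ** years


factor = growth_factor(0.63, 2028 - 2023)
print(f"{factor:.1f}x")
```

A 63% CAGR sustained for five years implies that the AI phone market would grow roughly 11.5-fold over the period.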

For example, OPPO's latest Find X8 series has a strong AI flavor. By introducing Doubao Pro, Doubao Lite, Doubao Role Play, and stronger models with real-time search capabilities, the OPPO Find X8 series can provide a more refined AI experience.

<OPPO Find X8 Ad Omitted>

This trend is also reflected in cloud computing, resulting in more tokens consumed.

Tan Dai of Volcano Engine once said in an interview:

In five years, enterprises' average daily token usage may reach hundreds of trillions, especially after multimodal models launch, since agentic assistants in each modality will consume even more tokens.

These estimates rest on insight into changes in computing infrastructure, agent development, AI application development, and model deployment.

With Agent+AI Infrastructure, a billion tokens is not a pipe dream

From an implementation perspective, agentic AIs are coming into focus and native AI applications are gaining momentum.

In his year-end review, OpenAI CEO Sam Altman wrote:

We believe that, in 2025, we may see the first AI agents "join the workforce" and materially change the output of companies.

Then, practicing what it preaches, OpenAI launched agent capabilities for ChatGPT, giving it the ability to execute plans and complete various tasks for users.

<OpenAI Agent Ad Omitted>

At a more practical level, QbitAI has observed native AI applications gaining momentum.

With continuous upgrades to the capabilities of the underlying models, AI smart assistant apps have grown significantly in the past year.

In 2024, AI smart assistant apps gained more than 350 million new users. December alone brought more than 50 million new users, a nearly 200-fold increase compared to the beginning of 2024.

Take Doubao for example. In September Doubao became the first AI application in China whose user base exceeded 100 million. Currently, it has a market share of over 50% and has become the "Nation's Native AI App" and the "Top AI Smart Assistant".

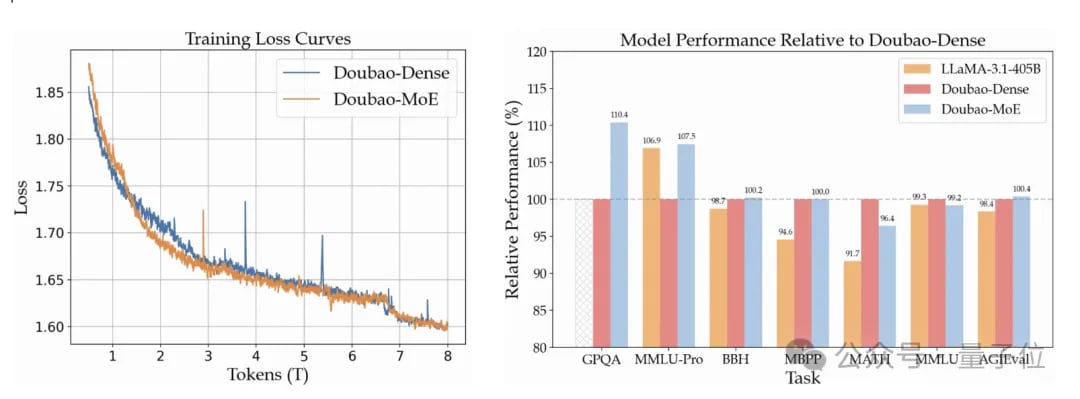

Just today, Doubao released its 1.5 Pro model, whose capabilities have improved across the board. Thanks to optimizations of its MoE architecture, it outperforms dense models while using only one-seventh of the parameters.

In terms of multimodal capabilities, its vision component supports dynamic resolution, can process megapixel-level images, and performs well on multiple benchmarks. For speech, a new end-to-end Speech2Speech framework fuses voice and text, improving the comprehension and expressiveness of dialogue.

The model's reasoning ability was also greatly improved through RL methods. As training has progressed, Doubao-1.5-Pro-AS1-Preview's performance on AIME has surpassed o1-preview, o1, and other reasoning models.

More powerful underlying models undoubtedly provide a more solid foundation for native AI applications.

On the enterprise side, tangible growth came even earlier. Agent development platforms have become one of the fastest-growing enterprise service products offered by cloud vendors.

For example, HiAgent, launched by Volcano Engine, signed up more than 100 customers within the first seven months after its launch.

It is positioned as an enterprise-exclusive AI application platform, with the goal of helping enterprises complete the "last ten kilometers" from model to application in a zero-code or low-code way.

HiAgent is compatible with multiple models: it natively integrates Doubao's models and also supports third-party closed-source and open-source models. It helps users build agents easily through four components: prompt engineering, knowledge bases, plug-ins, and workflow orchestration. It ships with a rich set of preset templates and plug-ins that leave room for customization.

At present, HiAgent has already provided services to Fortune 500 companies such as China Feihe[18], Meiyajia[19], and Huatai Securities, with more than 200 adoption scenarios and more than 500 agents.

Coze (known in China as Kouzi, literally "button") is another native AI application development platform. It has helped Supor[20], China Merchants Bank, Hefu Noodle, Chongho Bridge[21], and others develop and launch enterprise-level agents.

Compared to HiAgent, it targets enterprises with few AI application developers and high service-stability requirements, and aims to lower the barrier to using and developing with models. As an AI application development platform, Coze provides a series of tools such as hyperlinks, workflows, image flows, knowledge bases, and databases. It also connects seamlessly to the Doubao family of models as well as enterprise fine-tuned and open-source models, offering enterprise users a variety of templates along with services for debugging, publishing, integration, and monitoring. For example, using the news plug-in, you can quickly create and launch an AI announcer that broadcasts the latest news.

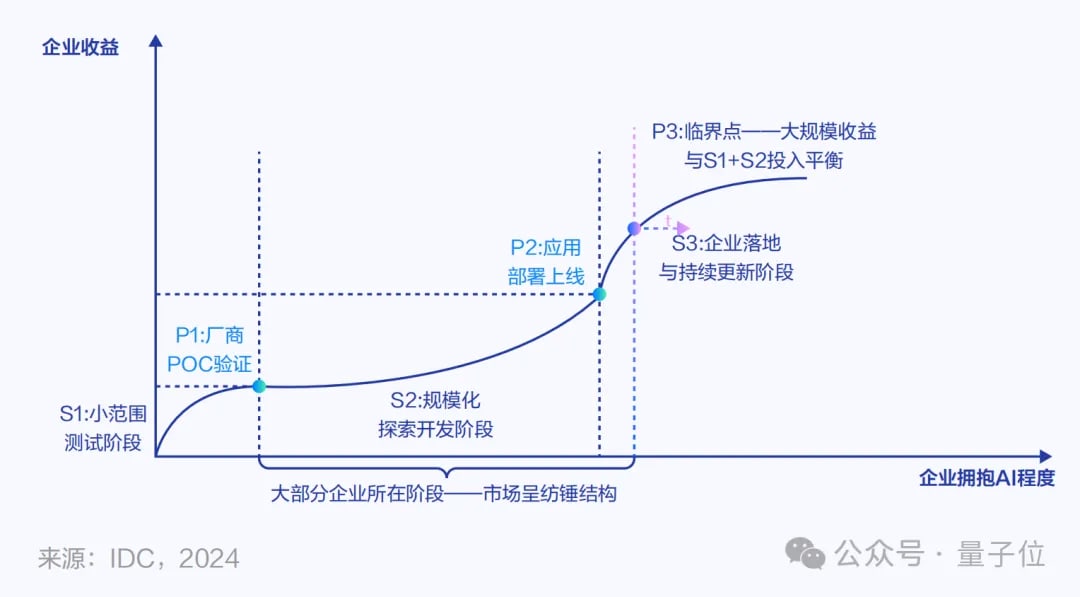

These implementations make it clear that as the barrier to AI application and agent development drops, companies from a wide variety of domains have begun exploring and developing with large models at scale. As leading enterprises accelerate this process, they are integrating models into their businesses more quickly and reaping the benefits of model use earlier.

As industry pioneers emerge, AI applications will spread further. At the same time, platforms such as HiAgent will continue to evolve. Their capabilities will not be limited to AI agent development; they will grow into a capable AI middle layer.

On the supply side, the most notable phenomenon in cloud computing in 2024 was the "price war". Underlying it is the optimization of computing costs, which lets cloud vendors keep "exchanging price for volume" to promote the widespread adoption of AI applications.

It is widely recognized in the industry that the computing paradigm of the future will be centered around the GPU.

In 2024, cloud vendors continued to strengthen their AI infrastructure capabilities to cope with the explosive compute demands of large-scale inference.

For example, Volcano Engine launched an AI cloud-native solution. It supports elastic scheduling and management of large-scale GPU clusters, and its compute products and networking have been optimized for AI inference. Over the past year or so of LLM adoption, Volcano Engine has provided a solid computing foundation for Meitu Xiuxiu[22], Moonton Technology[23], DP Technology[24], and others.

At its recent Force winter conference, Volcano Engine elaborated on its GPU-centric AI infrastructure business. Using vRDMA networking, it supports large-scale parallel computing and a prefill/decode (P/D) disaggregated inference architecture, which improves training and inference efficiency and reduces costs. Its EIC elastic cache solution allows direct GPU connection, reducing large-model inference latency to one-fiftieth of its original level and cutting costs by 20%.

As technical barriers and model prices fall with the continuous optimization of computing costs, a total daily consumption of 100 trillion tokens is no longer idle talk but a reality almost within reach.

Therefore, daily consumption of 1 billion tokens by a single enterprise has become a useful reference point.

First, it represents a new trend in model adoption: enterprises embrace the benefits models can provide as they move toward this number.

Second, it has become a new threshold marking a stage in LLM adoption. Is the AI business actually running? Is the demand real? This reference point can help answer these questions.

Third, an average daily consumption of 1 billion tokens is only the "beginner level" of LLM adoption. The consumption of 100 trillion or even one quadrillion tokens by a single customer is an astronomical figure worth looking forward to.

With this new benchmark in place, we can more clearly identify the "unicorns" of LLM adoption.

[1] One of the classics of Chinese literature. The English translation runs to about 2,500 pages in five volumes and about 845,000 English words.

[2] Daily active users.

[3] I would translate the company's name as "Homework Helper".

[4] Maybe something like "Creating Dream Islands" captures the vibe? I think this app is similar to Character.AI.

[5] AI output includes virtual dialogue replies, AI-generated answers, etc.

[6] The built-in AI service on phones from several Chinese smartphone makers, including OPPO, OnePlus, and realme.

[7] This includes tokens used by its own businesses and services.

[8] Chatbot built into Baidu (roughly the Chinese Google).

[9] ByteDance's model (ByteDance owns TikTok).

[10] I think the article had a typo. The accompanying picture clearly says a 33-fold increase.

[11] A ByteDance subsidiary that serves businesses.

[12] Monthly active users.

[13] A business consulting firm. You have to pay a fee to publicize the outcome of its awards.

[14] Lit. "Gold Mountain Cloud"; its official English name is Kingsoft Cloud.

[15] Content delivery network.

[16] In terms of market share.

[17] Compound annual growth rate.

[18] A maker of milk powder and related products.

[19] A convenience store chain.

[20] A household appliance maker.

[21] A financial institution serving farmers.

[22] Meitu Xiuxiu is popular photo and video editing software.

[23] A ByteDance subsidiary that develops video games.

[24] An AI-for-science company.
