Two Major Obstacles for Logical Inductor Decision Theory

post by Scott Garrabrant · 2017-04-17T21:10:55.000Z · LW · GW · 5 commentsContents

Obstacle 1: Untaken Actions are not Observable Obstacle 2: No Updatelessness for Computations None 5 comments

In this post, I describe two major obstacles for logical inductor decision theory: untaken actions are not observable and no updatelessness for computations. I will concretely describe both of these problems in a logical inductor framework, but I believe that both issues are general enough to transcend that framework.

Obstacle 1: Untaken Actions are not Observable

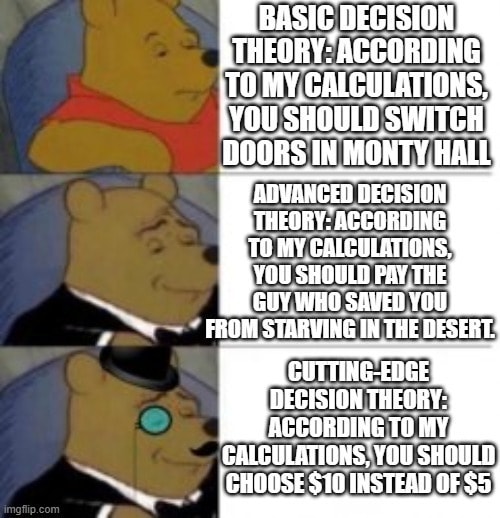

Consider the following formalization of the 5 and 10 problem:

Let is a logical inductor. Let be an agent which uses this logical inductor, to output either 5 or 10 as follows. The utility function for agent is simply , and the source code for agent is given by

Ideally, we would be able to say something like . Unfortunately, this is not the case. There exists a logical inductor such that . Consider a construction of a logical inductor similar to the one in the paper, but for which there is a single trader that starts with most of the wealth. This trader spends all of its wealth on conditional contracts forcing and . Note that the bets made conditioned on are accurate, while the bets made conditioned on do not matter, since the condition will be false. (No other trader will have enough wealth to substantially change the expectations). This trader will therefore lose no money, and be able to do the same thing again next round. (This is assuming that the value of A_n and U_n are computed in time for round n+1 of the deductive process. If this is not the case, we could do the same trick on a subsequence with this property.)

This same phenomenon has been observed in many other contexts. The spurious counterfactuals that can arise in proof based systems are another manifestation of the same problem.

One attempt at a fix is epsilon exploration. (The analogous fix in the proof based world is the chicken rule) Here, you take every possible action with probability . Then, when conditioning on taking an action you normally wouldn't take, you will have some data on what happened when simpler versions of yourself randomly explored and took that action. The epsilon exploration version of the above agent is

This agent uses pseudorandomness the explore, and does in fact converge to choosing 10 all but epsilon proportion or the time (The lower density of taking 5 is at most ). This fix has major problems. The obvious problem is that taking a bad action with probability epsilon could be disastrous for an agent that makes many different decisions.

There is a larger problem with this approach. There are now two different ways you could take any given action. You could take that action because it produces the highest expected utility, or you could take that action because your exploration clause was triggered. These two different ways of taking the action could be very different. Trivially, they could have different runtimes. More importantly, they could effect the extent to which your action is predictable.

This problem will, for example, cause a logical inductor EDT agent to defect in a prisoner's dilemma against a similar power agent that is trying to imitate it. If such an agent were to start out cooperating, random defection will be uncorrelated with the opponent's prediction. Thus the explored defection will be profitable, and the agent will learn to defect. The opponent will learn this and start predicting defection more and more, but in the long run, the agent view this as independent with its action.

To see concretely how epsilon exploration fails, consider a different utility function , and the same agent above with exploration. This agent gets a reward for taking the 10, but a much bigger reward for being predicted to take the 5. This is basically Newcomb's problem, but the predictor is no more powerful than the agent, and in fact has the same epistemic state as the agent.

The problem is that in the , is a constant that the agent has arbitrary access to. It is not changed by whether the agent takes the 5 or the 10 when exploring, since does not know if the agent will explore.

Thus, if the agent almost always took the 5, the rare occasions where it took the 10 would produce more utility, and similarly if the agent almost always took the 10, the rare occasions where it took the 5 would produce less utility. The agent will converge to almost always taking the 10, just like last time, and will miss out on the large reward for being predicted to take the 5.

Obstacle 2: No Updatelessness for Computations

The second obstacle is related to the counterfactual mugging problem. There is an agent , and a predictor . The predictor is much more powerful than the agent, except there is a bit that the agent knows, but the predictor does not. The predictor's beliefs about are uniform. Again, the agent can take 5 or 10, However if b=1, then the agent will get a large reward if predicts that conditioned on , the agent takes the 5.

We will formalize this by giving the agent and the predictor two different logical inductors. The agent will use and the predictor will use . There will be a sequence of bits , which are sentences mutually independent with . They will be given truth values independently, uniformly at random. will use a deductive process knows the value of for , and further will be inexplicable by traders that have oracle access to for on day . (Technically, logical inductors have computable deductive processes and traders, but it is not a problem to modify the definition to give oracle access to the traders and the program that computes the deductive process.)

will be a very powerful logical inductor, but will receive the bits much slower. will know all the bits with , for some fast growing function . will also have perfectly accurate counterfactuals about what will do if fed various fake values for .

The utility function will be .

First, observe that if were not yet known by the agent, everyone would assign probability 1/2 to , and this problem would be similar to the Newcomblike problem above. However, by making very powerful, we eliminated the difficulties above. The predictor can predict when the agent will explore, so exploring into 5 is rewarded and exploring into 10 is punished, and the trader would converge to almost always taking 10. However, by adding the hidden bit, we created new problems.

In particular, if , the agent has no control over what it would do if , and if , the agent does not care about what it is predicted to do. This agent will again converge to taking the almost always, and miss out on the large reward (if ) for being predicted to take the 5 if . Ideally, the agent will take the 10 if , and take the 5 if .

Although this problem may seem contrived, it is very important. This kind of thing actually does show up all the time. If you know do not know a secret, it might be a good idea to keep plausible deniability that you know a secret. This might incur a social cost, which you are willing to pay, since it causes you to act the same way regardless of whether or not you know a secret, and thus cause yourself to counterfactually be able to keep the secret better if you had one. Poker is all about this phenomenon.

More importantly, this problem needs to be understood for reflective stability. If an agent does not know the value of yet, but knows that it will take the 10 either way, the agent might want to commit to taking the 5. This is a failure of reflective stability. The agent would prefer to modify to use a different decision theory. The fact that this happens even in theory is a bad sign for any decision theory, and is an especially bad sign for our ability to understand the output of that decision theory.

In a Bayesian framework, this would solved using Updateless Decision Theory. The agent would not update on its observation of . It would instead use its prior about to choose a policy, a function from its observation, , to its action . This strategy would work, and the agent would take the 10 only if .

Unfortunately, we do not know how to combine this strategy with logical uncertainty. The beliefs of a logical inductor do not look like bayesian beliefs where you can go back to your prior. (Universal Inductors were an attempt to do this, but they do not work for this purpose.)

5 comments

Comments sorted by top scores.

comment by Daniel Kokotajlo (daniel-kokotajlo) · 2023-03-28T04:28:30.194Z · LW(p) · GW(p)

comment by cousin_it · 2017-04-30T23:43:53.000Z · LW(p) · GW(p)

I'll just note that in a modal logic or halting oracle setting you don't need the chicken rule, as we found in this old post: https://agentfoundations.org/item?id=4 So it seems like at least the first problem is about the approximation, not the thing being approximated.

Replies from: SamEisenstat↑ comment by SamEisenstat · 2017-06-10T06:31:25.000Z · LW(p) · GW(p)

Yeah, the 5 and 10 problem in the post actually can be addressed using provability ideas, in a way that fits in pretty natually with logical induction. The motivation here is to work with decision problems where you can't prove statements for agent , utility function , action , and utility value , at least not with the amount of computing power provided, but you want to use inductive generalizations instead. That isn't necessary in this example, so it's more of an illustration.

To say a bit more, if you make logical inductors propositionally consistent, similarly to what is done in this post, and make them assign things that have been proven already probability 1, then they will work on the 5 and 10 problem in the post.

It would be interesting if there was more of an analogy to explore between the provability oracle setting and the inductive setting, and more ideas could be carried over from modal UDT, but it seems to me that this is a different kind of problem that will require new ideas.

comment by William_S · 2017-05-30T00:58:35.000Z · LW(p) · GW(p)

In obstacle 1, is the example of a trader that has accumulated most of the wealth representative of the fundamental difficulty, or are there other ways that the naive decision theory fails? If it is representative, would it be possible to modify the logical inductor such that when facing a decision, traders are introduced with sufficient wealth betting on all outcomes such that their probability is at least epsilon, and forcing the problematic trader to lose it's wealth (making sure that all decisions start from a position of thinking that each action could be taken with at least probability epsilon, rather than forcing that this is the outcome of the decision)?

Replies from: SamEisenstat↑ comment by SamEisenstat · 2017-06-10T01:16:37.000Z · LW(p) · GW(p)

It's hard to analyze the dynamics of logical inductors too precisely, so we often have to do things that feel like worst-case analysis, like considering an adversarial trader with sufficient wealth. I think that problems that show up from this sort of analysis can be expected to correspond to real problems in superintelligent agents, but that is a difficult question. The malignancy of the universal prior is part of the reason.

As to your proposed solution, I don't see how it would work. Scott is proposing that the trader makes conditional contracts, which are in effect voided if the event that they are conditional on doesn't happen, so the trader doesn't actually lose anything is this case. (It isn't discussed in this post, but conditional contracts can be built out of the usual sort of bets, with payoffs given by the definition of conditional probability.) So, in order to make the trader lose money, the events need to happen sometimes, not just be expect to happen with some nonnegligable probability by the market.