Seeking Mechanism Designer for Research into Internalizing Catastrophic Externalities

post by c.trout (ctrout) · 2024-09-11T15:09:48.019Z · LW · GW · 2 comments

TL;DR

I need help finding/developing a mech that can reliably elicit honest and effortful risk-estimates from frontier AI labs regarding their models, risk-estimates to be used in risk-priced premiums that labs then pay the government (i.e. a "Pigouvian tax for x-risk"). Current best guess: Bayesian Truth Serum.

Stretch-goal: find/develop a mech for incenting optimal production of public safety research. Current best guess: Quadratic Financing.

DM me if you're interested in collaborating!

The Project

X-risk poses an extreme judgment-proof problem: threats of ex post punishment for causing an existential catastrophe (or a nationally existential one, or even just a disaster that renders your company insolvent) have little to no deterrent effect. Liability on its own will completely fail to internalize these negative externalities.

Traditionally, risk-priced insurance premiums are used to solve judgment-proofness (turn large ex post costs into small regular ex ante costs). However, insurers are also judgment-proof in the face of x-risk.

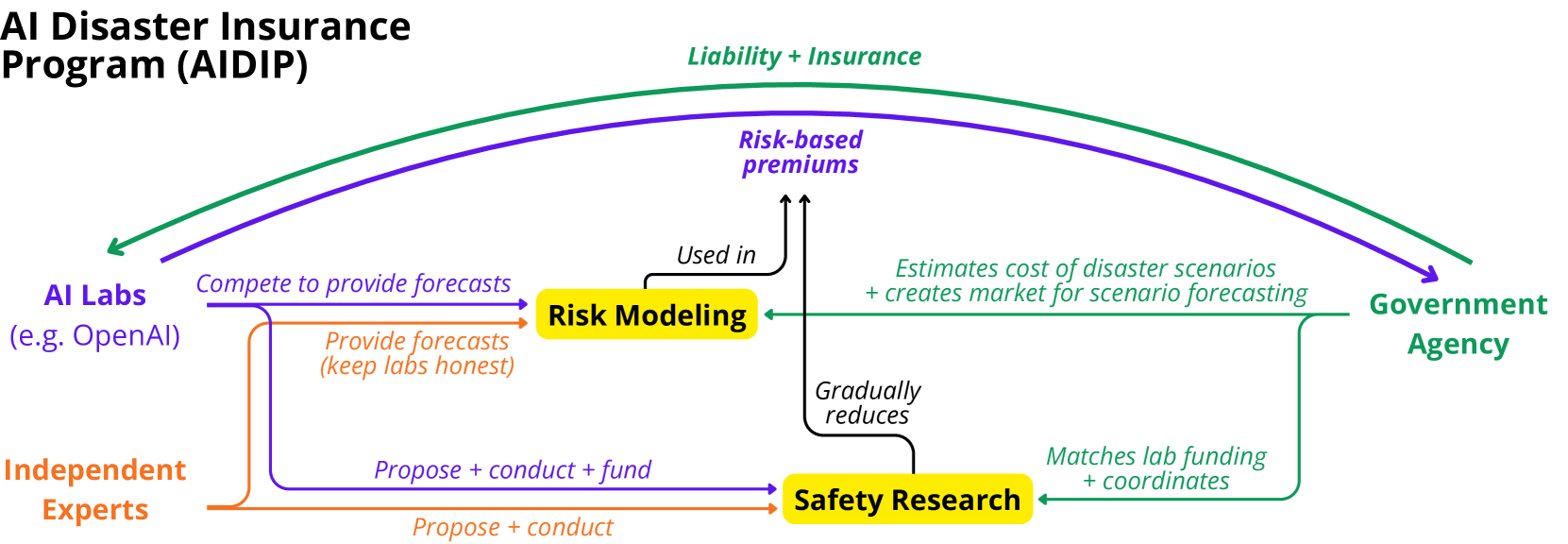

I'm developing a regime for insuring these "uninsurable" risks from frontier AI. It's modeled after the arguably very successful liability and insurance regime for nuclear power operators. In two recent workshop papers, I argue we should make foundation model developers liable for a certain class of catastrophic harms/near miss events and:

- Mandate private insurance for commercially insurable losses (e.g. up to ~$500B in damages)

- Have the government charge risk-priced premiums for insurance against uninsurable losses (i.e. a "Pigouvian tax for x-risk")
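To make the two-layer split concrete, here is a minimal sketch of how an expected loss could be divided between the private layer and the government layer. Everything in it is an assumption for illustration: the scenario probabilities and dollar losses are made up, the names `PRIVATE_CAP` and `split_expected_loss` are hypothetical, and a real premium would also include loadings and would have to grapple with how to price losses that have no natural dollar value.

```python
# Illustrative only: all probabilities and loss figures are invented.
PRIVATE_CAP = 500e9  # assumed cap on commercially insurable losses (~$500B)


def split_expected_loss(scenarios, cap=PRIVATE_CAP):
    """Split annual expected loss into a private layer (below cap)
    and a government layer (above cap).

    scenarios: list of (annual_probability, loss_in_dollars) pairs.
    Returns (private_expected_loss, government_expected_loss).
    """
    private = sum(p * min(loss, cap) for p, loss in scenarios)
    government = sum(p * max(loss - cap, 0.0) for p, loss in scenarios)
    return private, government


# Hypothetical lab: a 1-in-1,000 chance of a $50B incident and a
# 1-in-100,000 chance of a $5T catastrophe in a given year.
example_scenarios = [(1e-3, 50e9), (1e-5, 5e12)]
private_layer, government_layer = split_expected_loss(example_scenarios)
print(f"private layer:    ${private_layer:,.0f} per year")
print(f"government layer: ${government_layer:,.0f} per year")
```

The point of the sketch is only that the government-layer premium is driven entirely by the probability assigned to the tail scenarios, which is why the quality of those probability estimates matters so much.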

A government agency – through audits, its own forecasts and so on – could (and should) try to make these risk-estimates. However, this will be costly, and the agency will struggle to close the information asymmetry between itself and the developers it insures. Relying mostly on mechanism design to incentivize labs to report honest and effortful risk-estimates has a number of advantages:

- It should be cheaper for the government (more politically viable)

- It should better leverage all available information, and result in more information gathering – it should just result in better risk-estimates

- It's more secure: developers can divulge the risk implications of their private info without sharing sensitive private info (e.g. model weights).
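For readers unfamiliar with Bayesian Truth Serum (the current best guess named in the TL;DR), here is a minimal sketch of its scoring rule in the standard formulation (Prelec, 2004). Each respondent gives their own answer plus a prediction of how everyone else will answer; answers that turn out more common than collectively predicted score well. The setup is an assumption for illustration: risk is bucketed into discrete bins, the function name `bts_scores` and the parameters `alpha` and `eps` are hypothetical, and the original incentive result assumes a large pool of respondents, which may not hold with only a handful of labs.

```python
import math


def bts_scores(answers, predictions, alpha=1.0, eps=1e-9):
    """Bayesian Truth Serum scores for n respondents over k answer options.

    answers:     list of ints, answers[r] is the option chosen by respondent r.
    predictions: list of lists, predictions[r][j] is respondent r's predicted
                 fraction of respondents choosing option j.
    alpha:       weight on the prediction score (alpha=1 makes scores sum to 0).
    eps:         floor applied to predictions so log() is always defined.
    """
    n = len(answers)
    k = len(predictions[0])
    # Empirical frequency of each option.
    xbar = [sum(1 for a in answers if a == j) / n for j in range(k)]
    # Log of the geometric mean of predicted frequencies for each option.
    log_ybar = [
        sum(math.log(max(p[j], eps)) for p in predictions) / n for j in range(k)
    ]
    scores = []
    for a, p in zip(answers, predictions):
        # Information score: is the chosen answer "surprisingly common"?
        info = math.log(xbar[a]) - log_ybar[a]
        # Prediction score: penalty for mispredicting the empirical frequencies.
        pred = alpha * sum(
            xbar[j] * (math.log(max(p[j], eps)) - math.log(xbar[j]))
            for j in range(k)
            if xbar[j] > 0
        )
        scores.append(info + pred)
    return scores


# Hypothetical example: three respondents, two risk bins (0 = "low", 1 = "high").
example_answers = [0, 0, 1]
example_predictions = [[0.6, 0.4], [0.7, 0.3], [0.5, 0.5]]
print(bts_scores(example_answers, example_predictions))
```

In the regime described here, the scores would presumably feed into payments or premium adjustments; how to do that, and whether the mechanism survives a small number of strategic respondents, is exactly the kind of question the collaboration is meant to answer.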

The regime in schematic form:

The Work

I lack the mech design expertise to confidently assess the quality/relevance/usability of mechs I read about; I'm looking for someone with at least a graduate level understanding of mechanism design to collaborate with me.

The work will most likely involve:

- you sifting through some papers/mechs

- you floating the best contenders to the top

- debating the pros and cons of each with me

- (if necessary: you make modifications to our favorite pick)

- (if necessary: you prove some nice things about the mech)

- you explain things well enough to me so that I can make the write-up (assuming you don't want to make the write-up)

It's possible this only takes you ~40 hrs to accomplish, if an appropriately plug-n-play mechanism is already out there. I doubt this, however (I have done some preliminary searching).

You can find more details of the questions I think need working out here (a much older, longer draft of the workshop papers linked above).

Theory of Impact

The goal is to write a large policy paper and then spin off some policy memos. I've applied to some policy fellowships. I plan to work on this proposal regardless, but obviously if I get in, that will be my platform for sharing this work.

Policy folks I've talked to, including a few people that work in DC, have expressed interest in seeing this developed further – e.g. Makenzie Arnold tells me this is "in the category of sensible" proposals. But obviously it needs more fleshing out.

If the research collaboration I'm proposing here goes great and policy folks love it, we may want to do a follow-up running an experiment to empirically verify our claims in as analogous a setting as we can muster.

Compensation/Timeline

If money is an issue, I'm willing to pay $20-40/hr, possibly more for exceptional collaborators. I'm also happy to do all the writing/paperwork if you just want to provide the thought input. Happy to help write a grant proposal too (but I'm unlikely to be able to secure one on my own; see below).

Ideally, I'd like to get something written up by EoY. Within that time frame though, I'm very flexible.

About me

FWIW, my two workshop papers linked above were accepted into the GenLaw workshop at ICML 2024.

I recently did this deep dive into insurance, liability and especially the nuclear power precedent, but I'm only a casual appreciator of mechanism design and economics. My MA was in philosophy.

NB: I'm a very early career professional and do not have an institutional affiliation.

I'm based in Boston (out of the AISST office).

Contact

If all this sounds like you, or someone you know, feel free to DM me or email at ctroutcsi@gmail.com! If you're just interested in the proposed regime or have questions, feel free to ask them in the comments.

2 comments

comment by Chris_Leong · 2024-09-11T15:39:10.669Z · LW(p) · GW(p)

I guess I'm worried that allowing insurance for disasters above a certain size could go pretty badly if it increases the chance of labs being reckless.

comment by c.trout (ctrout), in reply to Chris_Leong · 2024-09-11T16:19:37.765Z · LW(p) · GW(p)

Right, so you're worried about moral hazard generated by insurance (in the case where we have liability in place). For starters, the government arguably generates moral hazard for disasters of a certain size by default: it can't credibly commit ex ante to not bailing out a critical economic sector or to withholding relief from victims in the event of a major disaster, so it is always implicitly on the hook (see Moss, D. A., When All Else Fails: Government as the Ultimate Risk Manager; the too-big-to-fail effect is one example). Charging a risk-priced premium for that service can only help.

But you're probably more worried about private insurers' ability to mitigate the moral hazard they generate. Insurers certainly do not always succeed at this. However, they sometimes not only succeed but in fact induce more harm reduction than liability alone probably would have (see e.g. the Insurance Institute for Highway Safety rating crashworthiness of vehicles, and the auto-industry's lobbying for airbags in the 80s). For more see:

- Ben-Shahar and Logue, "Outsourcing Regulation: How Insurance Reduces Moral Hazard"

- Abraham and Schwarcz, "The Limits of Regulation by Insurance"

My research finds that, in the specific context of insuring against uncertain heavy-tail risks, we can expect private insurers to engage in a mix of:

- causal risk-modeling, because actuarial data will be insufficient for such rare events (cf. causal risk-modeling in nuclear insurance underwriting and premium pricing (Mustafa, 2017; Gudgel, 2022, ch. 4 sec. VII)).

- monitoring, again due to a lack of actuarial data and the need to reduce information asymmetries (cf. regular inspections by nuclear insurers with specialized engineers (Gudgel, 2022, ch. 4 sec. VI.C)).

- safety research and lobbying for stricter regulation, because insurers will almost certainly have to pool their capacity in order to offer coverage, eliminating competition and with it, coordination problems (cf. American Nuclear Insurers' (ANI) monopoly on third party liability (Gudgel, 2022, ch. 4 sec. VII.A)).

- private loss prevention guidance, because it can't be appropriated or drive away customers here: there will be little competition and the insurance is mandatory (cf. ANI sharing inspection reports and recommendations with policyholders (Gudgel, 2022, ch. 4 sec. VII.A.2)).

In other words, if set up correctly, I expect them to do all the things we would want them to do.

The government also needn't mandate specifically commercial insurance: it could also allow/encourage labs to mutualize their risk, entirely eliminating concerns about moral hazard.

You can read more about all of this here.