Some perspectives on the discipline of Physics

post by Tahp · 2024-05-20T18:19:22.429Z · 3 comments

This is a link post for https://quark.rodeo/physics.html

Contents

- Three distinct disciplines within physics
  - Physics is a set of theories
  - Physics is problem solving
  - Physics is a science
- What else is there to say?
  - Physics as a collection of reapplicable concepts
  - Physics as a series of models which approach reality
  - Physics as quantified ignorance
  - Physics as a finite-order Taylor approximation
- Can we wrap this up now?

I wrote the linked post, and I’m posting a lightly edited version here for discussion. I plan to attend LessOnline. This post is my first attempt at blogging as a way to understand and earnestly explain physics, and it is also a way of gauging interest in the topic in case someone at LessOnline wants to discuss the firmware of the universe with me. I might post more physics if there seems to be interest. Here is the post:

Three distinct disciplines within physics

When I teach introductory mechanics, I like to tell my students that there are three things which are all called physics, even if only one of them tends to show up on their exams.

Physics is a set of theories

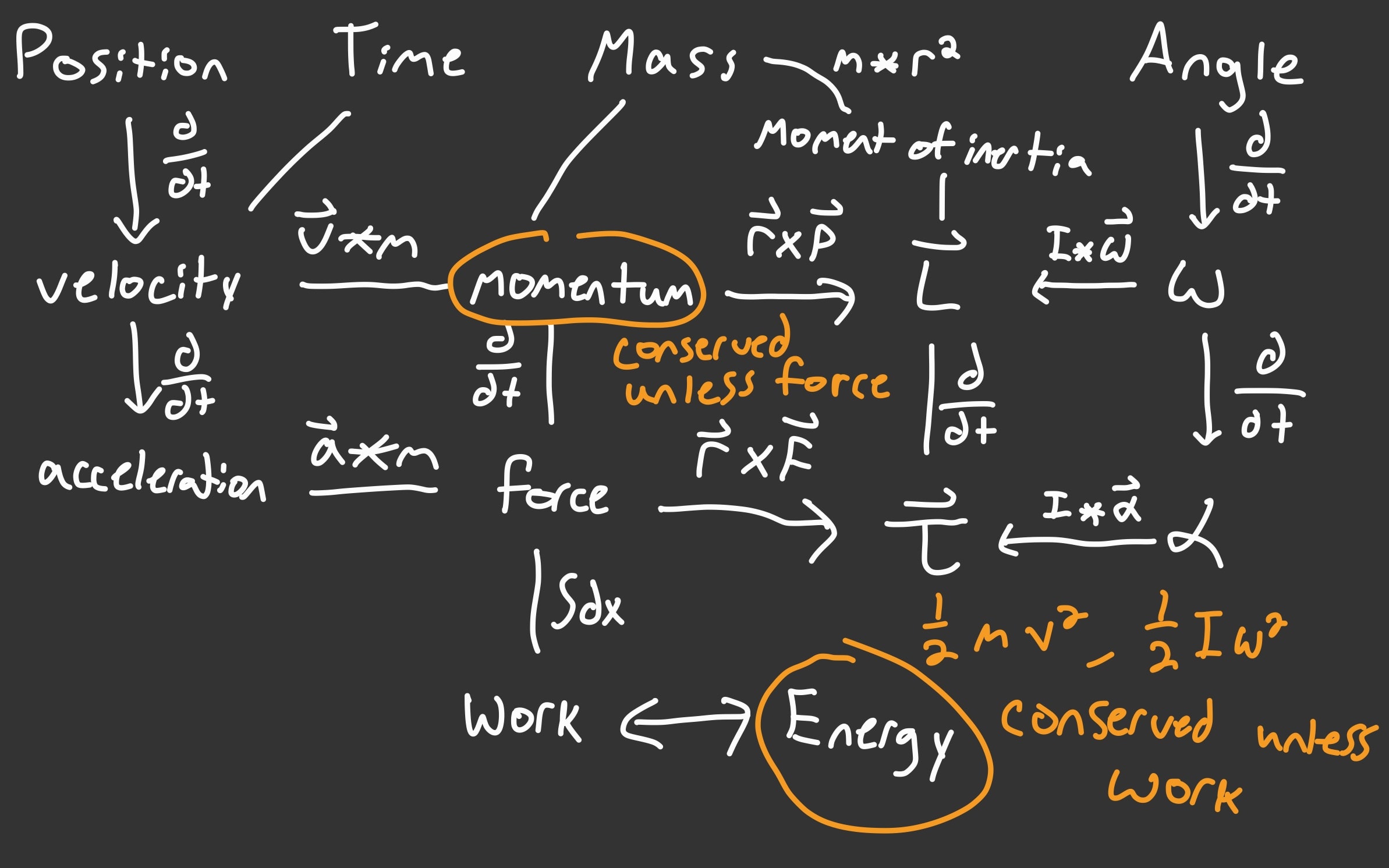

Periodically during the semester, I draw the following diagram on the board for my Newtonian Mechanics class:

This is an example of a theory. You can know what it means and how it works without knowing anything about how to apply it to real-world systems. Knowing a theory is like knowing the rules of a game. The game may or may not have connections to real life, and you are not automatically a master at winning a game just because you know the rules. In the same way, physics theories and the concepts which guide them can exist separately from their predictive power. Just because the plum pudding model is not a good model of actual atoms doesn't mean that you can't say something about what predictions it would make or how charge would be distributed inside an atom under the model. Examples of physics theories include general relativity, quantum mechanics, statistical mechanics, and the Big Bang.
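To make the point concrete, here is a minimal sketch of a prediction from the plum pudding model, assuming its usual textbook form: a sphere of radius R with positive charge Q spread uniformly through it, with point-like electrons embedded inside. Gauss's law then says the field inside the sphere grows linearly with distance from the center, so a displaced electron feels a Hooke's-law restoring force and should oscillate at a fixed frequency. The function names are hypothetical, and the radius used below is an illustrative assumption, not a measured value.

```python
import math

K_COULOMB = 8.9875517923e9      # Coulomb constant k, N·m²/C²
E_CHARGE = 1.602176634e-19      # elementary charge, C
M_ELECTRON = 9.1093837015e-31   # electron mass, kg


def thomson_field(r: float, total_charge: float, radius: float) -> float:
    """Radial electric field (N/C) at distance r from the center of a
    uniformly charged sphere, as assumed in the plum pudding model."""
    if r < radius:
        # Gauss's law: only the charge enclosed within r contributes, and it
        # scales as (r/R)^3, so the field grows linearly with r.
        return K_COULOMB * total_charge * r / radius**3
    # Outside the sphere it looks like a point charge.
    return K_COULOMB * total_charge / r**2


def electron_oscillation_frequency(total_charge: float, radius: float) -> float:
    """Angular frequency (rad/s) of an electron oscillating about the center.

    Inside the sphere the force is F = -(k e Q / R^3) r, i.e. a spring with
    spring constant k e Q / R^3.
    """
    spring_constant = K_COULOMB * E_CHARGE * total_charge / radius**3
    return math.sqrt(spring_constant / M_ELECTRON)


radius = 0.5e-10  # assumed "atom" radius in metres (illustrative only)
q = E_CHARGE      # a hydrogen-like plum pudding containing one electron
for frac in (0.25, 0.5, 1.0, 2.0):
    print(f"r = {frac:4.2f} R  ->  E = {thomson_field(frac * radius, q, radius):.3e} N/C")
print(f"predicted angular frequency: {electron_oscillation_frequency(q, radius):.3e} rad/s")
```

Whether or not real atoms look like this, the model still commits to definite numbers, which is what lets you compare it with experiment.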

Physics is problem solving

Once you have a theory, you can apply it to make predictions about a system given some initial state that describes it. For example, you might start with a projectile in a vacuum with some velocity at some location and try to figure out its maximum height. This is the thing that actually shows up on physics exams. Sometimes you need to justify your knowledge of theory, but for the most part you demonstrate implicit knowledge of a theory by using common techniques for describing the evolution of systems. Examples of techniques for solving physics problems are free body diagrams, Feynman diagrams, spacetime diagrams, the Schroedinger equation, and partition functions.
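As a minimal sketch of that exam-style problem, assuming no air resistance, a uniform gravitational field, and heights measured from the launch point: because horizontal and vertical motion decouple, the maximum height depends only on the vertical component of the initial velocity, h = (v₀ sin θ)² / (2g). The function names are hypothetical. The second function steps the equations of motion forward in time as an independent check on the closed form.

```python
import math

G = 9.81  # m/s², approximate gravitational acceleration near Earth's surface


def max_height(speed: float, angle_deg: float, g: float = G) -> float:
    """Peak height (m) above the launch point of a projectile in a vacuum."""
    vy = speed * math.sin(math.radians(angle_deg))  # vertical velocity component
    # At the peak the vertical velocity is zero, so vy^2 = 2 g h.
    return vy**2 / (2 * g)


def max_height_numeric(speed: float, angle_deg: float, g: float = G,
                       dt: float = 1e-5) -> float:
    """Same quantity, found by stepping the vertical motion until it stops rising."""
    vy = speed * math.sin(math.radians(angle_deg))
    y = 0.0
    while vy > 0:
        y += vy * dt - 0.5 * g * dt**2  # exact position update for constant acceleration
        vy -= g * dt
    return y


print(max_height(20.0, 30.0), max_height_numeric(20.0, 30.0))
```

The horizontal velocity never appears in either function, which is the decoupling of components doing the work.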

Physics is a science

The thing that sets physics apart from chess is that it describes the real world. You want to prove this. So you perform experiments by setting up a system in a certain way, measuring what happens, and showing that the results are consistent with a theory to some margin of error. For example, your theory might say that all objects fall at the same rate regardless of mass. You decide to perform an experiment, so you find a bunch of objects that are the same shape and size but have different masses, and you take a video of them falling next to a tape measure. You do the best you can to make sure the objects are released at the same time, pause the video at a few moments, and write down the differences between the heights of the objects. You do this a few times, average all of the height differences, and find the standard error of that average (the standard deviation divided by the square root of the number of measurements). If 0 is within about two standard errors of whatever average you get, you declare glorious victory, because this implies that your measurement is consistent with the "actual" value of the difference being 0 at roughly 95% confidence. (Or, if you’re a cool Bayesian, you preassign probabilities to ranges of values near zero given your theory and update your priors based on the value you measure in the experiment. Please don’t throw me out of LessWrong for using frequentist arguments in a facetious example.)
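Here is a minimal sketch of that consistency check, assuming the measurement errors are roughly normal (which is what makes "about two standard errors" correspond to roughly 95%). The uncertainty on an average is the standard error, not the raw spread of the individual measurements. The function name and the height differences below are hypothetical.

```python
import math
import statistics


def consistent_with_zero(differences: list[float], n_sigma: float = 2.0) -> bool:
    """Return True if 0 lies within n_sigma standard errors of the mean.

    `differences` are repeated measurements of the height difference between
    two falling objects; n_sigma = 2 is roughly 95% for normal errors.
    """
    mean = statistics.fmean(differences)
    spread = statistics.stdev(differences)            # sample standard deviation
    std_error = spread / math.sqrt(len(differences))  # uncertainty of the mean
    return abs(mean) <= n_sigma * std_error


# Hypothetical height differences in cm, read off paused video frames.
trial_differences = [0.3, -0.5, 0.1, 0.4, -0.2, 0.0, -0.3, 0.2]
print(consistent_with_zero(trial_differences))  # prints True
```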

Why bother with the averaging and confidence intervals? The universe is a complicated thing, and we expect that there are issues with our measurements. Perhaps you paused the video while the object was between two lines on your measuring tape, and rounding to the nearest line shifts your recorded value randomly up or down from the "true" value. Perhaps a truck drives by while you do your experiment and the measuring tape vibrates, so your measurements are randomly off one way or the other. As long as these errors are in random directions, their only effect is to widen your standard deviation without shifting the average. If you want to reduce that error, you might run your experiment in a vibrationally isolated chamber. You might use a tape measure with millimeter lines, or even finer graduations, to reduce rounding error. You might use a higher-framerate camera to reduce blurring. Or you might just take more samples and trust statistics to reduce your uncertainty.
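A small simulation can illustrate why random error is the friendly kind. This is a sketch under made-up assumptions: the true height difference is 0 cm, vibration is modeled as Gaussian noise with a 1 cm standard deviation, and readings are rounded to tape lines 0.5 cm apart. The function name and all numbers are hypothetical. The average stays near 0, and the standard error shrinks roughly as 1/√n as you take more samples.

```python
import random
import statistics


def simulate_measurements(n: int, noise_sd: float, resolution: float,
                          true_value: float = 0.0, seed: int = 0) -> list[float]:
    """Simulate n readings of a height difference whose true value is true_value.

    Each reading gets Gaussian noise (a stand-in for vibration) and is then
    rounded to the nearest tape-measure line, with lines `resolution` apart.
    """
    rng = random.Random(seed)  # fixed seed so the run is reproducible
    readings = []
    for _ in range(n):
        noisy = true_value + rng.gauss(0.0, noise_sd)     # random vibration error
        rounded = round(noisy / resolution) * resolution  # snap to nearest line
        readings.append(rounded)
    return readings


for n in (10, 100, 10_000):
    data = simulate_measurements(n, noise_sd=1.0, resolution=0.5)
    mean = statistics.fmean(data)
    std_error = statistics.stdev(data) / n**0.5
    print(f"n = {n:6d}  mean = {mean:+.4f} cm  standard error = {std_error:.4f} cm")
```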

A more treacherous form of error is systematic error, also known as bias. Perhaps your theory is only true in a vacuum, and air resistance has a more pronounced effect on lighter objects. If you fail to account for that through ignorance or neglect, your experiment in air might fail to conform to theory. Perhaps your camera is positioned so that the line of sight from the camera through each object to the tape measure is different, and the farther object consistently looks like it is in front of a line farther down the tape measure. (This is called parallax error, and there are demonstrations of it on Wikipedia.) If you don't account for it, the farther object seems to have covered more distance, so you might mistakenly say it had more acceleration when you analyze the video. The consistency of the universe that allows us to make physical theories which work every day means that if you take a measurement the same way every time (which you should, to reduce random error), you are likely to make the same mistake every time, should you make one. To control for systematic error, physicists try to test each theory in many different ways. Useful physics theories have many consequences, so it is important to measure as many of those consequences via as many mechanisms as possible. That way, a flawed assumption in one experiment is unlikely to also apply to another experiment, and the same bias is unlikely to push all experiments toward incorrect results in the same direction.
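By contrast, averaging does nothing about a systematic error. In this sketch a hypothetical fixed offset of 0.8 cm (standing in for something like parallax) is added to every reading on top of random noise, and the true difference is again assumed to be 0 cm. The function name and numbers are illustrative. As n grows, the error bar shrinks, but the mean converges to the bias instead of to 0, so more data makes you more confidently wrong.

```python
import random
import statistics


def biased_measurements(n: int, bias: float, noise_sd: float,
                        seed: int = 1) -> list[float]:
    """Readings of a true difference of 0 cm with a fixed offset added.

    `bias` shifts every reading by the same amount in the same direction,
    on top of ordinary Gaussian noise.
    """
    rng = random.Random(seed)  # fixed seed so the run is reproducible
    return [bias + rng.gauss(0.0, noise_sd) for _ in range(n)]


for n in (10, 1_000, 100_000):
    data = biased_measurements(n, bias=0.8, noise_sd=1.0)
    mean = statistics.fmean(data)
    std_error = statistics.stdev(data) / n**0.5
    # More data shrinks the error bar, but the mean settles on the bias, not 0.
    print(f"n = {n:7d}  mean = {mean:+.3f} cm  +/- {2 * std_error:.3f} cm (2 s.e.)")
```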

What else is there to say?

That framework is nice and neat, but anyone who has spent time with physicists knows that there are patterns in physics that are not covered by that high-level overview. This YouTube video is the greatest physics joke I have ever seen, because it expresses the confusion which arises from the physicist's desire to reuse concepts in wildly different settings. When I saw the video for the first time, my first reaction was "yes! The fact that something called momentum survives in many theories shows that we're on to something real!" and my second reaction was "no wonder my students are confused when I lecture. I have the mind virus." I marvelled at how I had never questioned this obviously confusing physics tendency. Why do physicists insist on calling so many apparently unrelated things momentum? Why are some people who study materials called "physicists" and others "chemists" when they both agree that they are studying collections of electrons and nuclei sticking together? There's obviously some sort of style or way of thinking which is typical of the theories called physics. Some people think this way of thinking is useful outside of physics. The "branches" of physics one studies as an undergraduate are classical mechanics, electrodynamics, quantum mechanics, and statistical mechanics. What ties them together that doesn't also tie in chemistry, engineering, or philosophy? Don't those fields also make formal theories to interpret the universe? I have a couple of ideas about what makes a physics theory a physics theory, and I hope to explore these ideas more deeply in future posts.

Physics as a collection of reapplicable concepts

Physicists insist on teaching people Newtonian mechanics even though effectively all physics research depends on Lagrangian formulations of mechanics. Why do we do this? I offer the explanation that the primary value of Newtonian mechanics is that it builds an intuition behind a bunch of words physicists need to remember. You need to have some assumptions about what "momentum" means before it gets reused as an observable in quantum mechanics, or as a coordinate of a microstate in statistical mechanics, or as the derivative of a Lagrangian with respect to the time derivative of a coordinate, or whatever. You need to figure out why "energy", as a spatial integral of a force, is a useful conserved quantity that can be transformed into motion or heat before you see why it's a big deal that energy connects to probability in statistical mechanics, or that you take it as the fundamental operator (the Hamiltonian) of quantum mechanics, or that you use it as the basis for calculating actions in Lagrangian mechanics. Conservation laws. Simple harmonic oscillators. Wave equations. Arguments using approximately-finite infinitesimal quantities. They all keep coming back, but Newtonian mechanics lets us build a physical intuition for them based on small experiments we can do on a tabletop and interactions between objects we have everyday experience with.
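As a small illustration of momentum being reused as ∂L/∂q̇, here is a sketch that differentiates a Lagrangian numerically. It assumes a one-dimensional mass on a spring, L = ½ m q̇² − ½ k q², chosen only because it is simple; the function names and the finite-difference step are hypothetical choices. The derivative recovers the familiar Newtonian momentum m q̇, and the Legendre transform H = p q̇ − L recovers the familiar kinetic-plus-potential energy.

```python
def lagrangian(q: float, qdot: float, m: float, k: float) -> float:
    """L = T - V for a mass m on a spring of stiffness k."""
    kinetic = 0.5 * m * qdot**2
    potential = 0.5 * k * q**2
    return kinetic - potential


def canonical_momentum(q: float, qdot: float, m: float, k: float,
                       h: float = 1e-6) -> float:
    """p = dL/d(qdot), estimated with a central finite difference."""
    return (lagrangian(q, qdot + h, m, k) - lagrangian(q, qdot - h, m, k)) / (2 * h)


def hamiltonian(q: float, qdot: float, m: float, k: float) -> float:
    """Legendre transform H = p*qdot - L, which works out to the total energy."""
    p = canonical_momentum(q, qdot, m, k)
    return p * qdot - lagrangian(q, qdot, m, k)


m, k, q, qdot = 2.0, 5.0, 0.3, 3.0
print(canonical_momentum(q, qdot, m, k), m * qdot)                    # both about 6.0
print(hamiltonian(q, qdot, m, k), 0.5 * m * qdot**2 + 0.5 * k * q**2)  # both about 9.225
```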

As a deeper example of a recurring physics theme, consider that physical quantities can often be described with vector calculus. Newtonian mechanics and quantum mechanics both say that position and momentum are vector quantities. Angular momentum is also a vector, even though the direction associated with that vector is less physically intuitive than that of linear momentum. In one sense, this is incredibly unsurprising. We seem to live in 3-dimensional space. A lot of concepts have a direction in 3-space and some sense of scale associated with them. Those are the ingredients for a vector in 3D Euclidean space. But even when we go into 4 dimensions in general relativity, there’s a vector space waiting for us, even if it’s only defined locally and is Minkowskian rather than Euclidean. Quantum mechanics says that the states with definite values of an observable form an orthogonal basis for a (sometimes infinite-dimensional) vector space in which the system's states live. No matter how far physics strays from its deterministic Euclidean roots, we can’t seem to get away from vectors. It's easy to dismiss this as an obvious organizational technique, but there are deep physical consequences to representing physical quantities as vectors. One is that you can decompose vectors into components. Force is a vector quantity in Newtonian mechanics, so I can apply Newton's second law (or a conservation law such as conservation of momentum) to each component separately and find, for example, that the horizontal dynamics of a projectile are independent of its vertical dynamics. Vector spaces are inherently additive. Maybe it's not surprising that two forces in the same direction add up to a bigger force and two forces in opposite directions add up to a smaller force, but it was surprising to the early developers of quantum mechanics that you can add two quantum states corresponding to different observations and end up with a sum state which has some probability of being observed in either of the states that add to it. It also did not have to be the case that superposition works in electromagnetism.
You can treat two sources of Coulomb interactions separately and add the fields together to find what the combined source would do to a test charge, and one way to interpret that is that you created an electric field which is a bunch of vectors, so of course you can add two such fields together.
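Superposition of Coulomb fields can be sketched directly: compute the field of each point charge on its own, then add the vectors. The charges, positions, and function names below are hypothetical, chosen so the cancellations are easy to see.

```python
import math

K_COULOMB = 8.9875517923e9  # Coulomb constant, N·m²/C²

Vector = tuple[float, float, float]


def point_charge_field(charge: float, source: Vector, point: Vector) -> Vector:
    """Electric field (N/C) at `point` due to a point charge at `source`."""
    dx, dy, dz = (p - s for p, s in zip(point, source))
    r = math.sqrt(dx * dx + dy * dy + dz * dz)
    scale = K_COULOMB * charge / r**3  # E = k q r_vec / |r|^3
    return (scale * dx, scale * dy, scale * dz)


def total_field(charges: list[tuple[float, Vector]], point: Vector) -> Vector:
    """Superposition: the field of several charges is the vector sum of each one's field."""
    ex = ey = ez = 0.0
    for q, pos in charges:
        fx, fy, fz = point_charge_field(q, pos, point)
        ex, ey, ez = ex + fx, ey + fy, ez + fz
    return (ex, ey, ez)


# Two hypothetical 1 nC charges, 2 cm apart on the x axis.
pair = [(1e-9, (-0.01, 0.0, 0.0)), (1e-9, (0.01, 0.0, 0.0))]
print(total_field(pair, (0.0, 0.0, 0.0)))   # midpoint: the two fields cancel
print(total_field(pair, (0.0, 0.01, 0.0)))  # above the midpoint: the x parts cancel
```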

Physics as a series of models which approach reality

The goal of physics is to make models of the material world that hold up to the material world, and each new model is expected to be consistent with the previous good model but also say something new or be correct in a place where the old model fails. Newtonian mechanics does an excellent job of describing how objects on Earth move. But one notices that weird stuff happens at high speeds (near the speed of light). Thus special relativity is required, but Newtonian mechanics doesn't disappear. We find that special relativity is closer to reality than Newtonian mechanics for high-speed particles, but that the two are equivalent (to within our ability to make measurements) at low speeds. This is taken as further evidence for special relativity, seeing as Newtonian physics works so well at low speeds. General relativity is built so that special relativity holds in small enough regions of spacetime. Classical (Lagrangian) mechanics is a good approximation of quantum mechanics when the actions involved are large compared to Planck's constant, and general relativity is easily interpreted as a Lagrangian field theory. More specifically, there are good derivations which show that a quantum field theory with a massless spin-2 gauge boson is consistent with general relativity in the classical limit. Is it not reasonable to say that physicists have found that quantum mechanics is the actual set of rules which the universe follows, and we’ve been moving closer and closer to it with approximations? Or maybe there will be another theory that reduces to quantum mechanics, but it doesn't seem unreasonable to think the tower of theories will end some day with something that is just correct. The standard model of quarks and leptons and gauge bosons has steadfastly refused to break down no matter how much energy we throw at it.
There are theoretical reasons to think we need something beyond the standard model, but after spending all that money on the LHC to show that we can't break the standard model, can we just say that quarks are actually the thing we’re made of? An electric field is an abstraction which represents the force of the electric interaction on a test charge, but you can interpret it as holding energy and get physically relevant dynamics out of it, and the field survives in a recognizable form in quantum electrodynamics, so maybe we should just say that the field is a thing that exists. I used to think this was the arc of physics, but I’m not so sure anymore.
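The claim that special relativity and Newtonian mechanics agree at low speeds can be checked with a few lines. This sketch compares the relativistic kinetic energy (γ − 1)mc² with the Newtonian ½mv² for v = βc; the mass cancels in the ratio, and the function name is hypothetical. For small β the ratio is about 1 + 3β²/4, which is far below what everyday measurements can resolve, while near the speed of light the two theories disagree wildly.

```python
import math


def kinetic_energy_ratio(beta: float) -> float:
    """Relativistic kinetic energy (gamma - 1) m c^2 divided by the
    Newtonian value (1/2) m v^2, for speed v = beta * c (mass cancels)."""
    s = math.sqrt(1.0 - beta**2)
    gamma_minus_one = beta**2 / (s * (1.0 + s))  # equals 1/s - 1, without cancellation error
    return gamma_minus_one / (0.5 * beta**2)


for beta in (1e-4, 0.01, 0.1, 0.5, 0.9, 0.99):
    print(f"v = {beta:>6} c   relativistic / Newtonian KE = {kinetic_energy_ratio(beta):.6f}")
```

Writing γ − 1 as β² / (s(1 + s)) avoids subtracting two nearly equal numbers, which would otherwise swamp the tiny low-speed difference in rounding error.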

Physics as quantified ignorance

Here’s how to build a physics theory: start with a simple mathematical model. Ideally it should nearly describe some physical system. Now add some complications to it to explain all of the ways in which the universe doesn’t actually do that. That’s a physics theory.

As an example, consider Newtonian mechanics. Newtonian mechanics says that everything moves in a straight line at the same speed forever. Things that aren’t moving never start moving. This sounds nice and simple, but it doesn’t actually describe our universe. The trick is to say that anytime something doesn’t do that, you invent a force which explains why it didn’t happen. If I let go of a ball in midair, it doesn’t hang there, it falls down. So I invent a force, call it gravity, and say that it gets bigger with mass to explain why everything seems to accelerate downward at the same rate at a given point near Earth. But why do things stop accelerating downward once they hit the ground? Uh, we’ll make a force called the normal force which is as big as it needs to be to make things not fall through other things; it’s fine. When I push a box across the ground, it doesn’t keep going; it slows down until it stops. Let’s invent a force called friction which opposes the motion of an object, but only if it’s touching another object. How big is the force of friction? Uh, it depends on the normal force, but it’s also different for every combination of surfaces; you just have to measure it. Fine, whatever.
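The "invent a force" bookkeeping above can be written out for the sliding box. This is a sketch under stated assumptions: a level floor, constant kinetic friction, and a friction coefficient that is simply made up, since, as the paragraph says, real values have to be measured for each pair of surfaces. The function name is hypothetical. Vertically, gravity and the normal force cancel, so N = mg; horizontally, friction μN is the only force, giving a deceleration μg and a stopping distance v² / (2μg).

```python
G = 9.81  # m/s², gravitational acceleration near Earth's surface


def stopping_distance(speed: float, mu_kinetic: float, g: float = G) -> float:
    """Distance (m) a box sliding on a level floor travels before stopping.

    Vertical: gravity (-m g) plus the normal force N sums to zero, so N = m g.
    Horizontal: kinetic friction of magnitude mu * N opposes the motion,
    giving a deceleration mu * g that does not depend on the mass.
    """
    deceleration = mu_kinetic * g
    return speed**2 / (2 * deceleration)


# mu = 0.4 is a made-up coefficient; real values must be measured per surface pair.
print(stopping_distance(3.0, 0.4))
```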

You might think this is horribly cynical of me and this couldn't possibly be how the better physics theories work, but I can keep going. General relativity? It’s just special relativity on a manifold, but you say that the curvature of the manifold depends on the energy on the manifold to make up for the fact that things don't actually move in the straight lines predicted by special relativity. Electromagnetism? It’s just the Maxwell equations, but in a couple of the equations you have to replace the electric and magnetic fields with auxiliary fields (D and H) that account for the polarization or magnetization of the material the fields pass through, and even that won’t help much for ferromagnetic materials. Quantum mechanics? The Bohr model, which works pretty well for electron shells in atoms, is just a Coulomb potential and a classical kinetic term. But you need to add a perturbation term for special relativity. And also one for the magnetic (spin-orbit) effects. Maybe another couple of terms for the Darwin correction and the Lamb shift, but we have excellent reasons to add them; it’s fine. Quantum field theory is a pile of quantum particles (in the diagonalized free theory) with increasingly convoluted interactions added to explain why they don't move in straight lines forever (technically, why they aren't just plane waves, but I'm making a point here), or why they keep turning into other particles, or why they have masses that aren't predicted by simpler theories.
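The hydrogen case can be made concrete. Standard textbook perturbation theory combines the relativistic, spin-orbit, and Darwin corrections into one fine-structure formula, E(n, j) = E_n [1 + (α²/n²)(n/(j + ½) − ¾)], where E_n = −13.6 eV/n² is the unperturbed level. This sketch uses rounded constants, hypothetical function names, and deliberately leaves out the Lamb shift, which needs quantum electrodynamics.

```python
ALPHA = 1 / 137.035999   # fine-structure constant (approximate)
RYDBERG_EV = 13.605693   # hydrogen ground-state binding energy in eV (approximate)


def bohr_energy(n: int) -> float:
    """Unperturbed energy level of hydrogen (Coulomb potential + kinetic term), in eV."""
    return -RYDBERG_EV / n**2


def fine_structure_energy(n: int, j: float) -> float:
    """Energy level including the relativistic, spin-orbit, and Darwin corrections
    to first order in alpha^2 (textbook fine-structure formula).

    j is the total angular momentum quantum number (1/2, 3/2, ...).
    The Lamb shift is NOT included.
    """
    correction = (ALPHA**2 / n**2) * (n / (j + 0.5) - 0.75)
    return bohr_energy(n) * (1 + correction)


n = 2
e_half = fine_structure_energy(n, 0.5)          # j = 1/2 (2S_1/2 and 2P_1/2 coincide here)
e_three_halves = fine_structure_energy(n, 1.5)  # j = 3/2 (2P_3/2)
print(f"unperturbed n=2 level: {bohr_energy(n):.6f} eV")
print(f"fine-structure splitting: {e_three_halves - e_half:.3e} eV")
```

Each correction is tiny compared with the Coulomb term, which is exactly why it can be bolted on as a perturbation.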

Physics as a finite-order Taylor approximation

This one is possibly just another interpretation of the last one, but this is the one that keeps me up at night, so I’m making a separate heading for it. If you get far enough in calculus, you learn about the Taylor series expansion of smooth functions. Many functions that have well-defined derivatives of every order at a point can be rewritten as an infinite power series, and for small (and sometimes large) distances away from that point, the series matches the function exactly. If you don't mind a small amount of error, you can truncate the infinite sum and get a pretty good approximation of the function. If you don't know anything about a function, but you can measure inputs and outputs at high enough precision, you can start building a Taylor approximation manually at a point: just draw a line tangent to the curve you measure and use its slope to get your first-order term. Then subtract out the line and start trying to subtract out parabolas until the function looks like it's approaching zero, and use the parabola as the second-order term. The coefficients on these terms provide all the information you need to reproduce the function you've measured to within some error, which you can reduce by adding more terms, so why not just pass those terms around and say they're the function? Mathematicians reading along will be horrified, because they can come up with pathological functions which don't allow this process, but physicists keep doing it and finding it works pretty well, so apparently the world isn't too pathological. A lot of times physicists are very explicit about the fact that this is what they're doing. One way to show that special relativity reduces to Newtonian mechanics at low speeds is to take a Taylor series of the relativistic energy of a point particle in terms of momentum squared: the first term is the constant rest energy mc², and the second term is the Newtonian kinetic energy p²/2m.
General relativity starts off by defining the curvature in terms of a second-order Taylor expansion of the metric and discarding higher-order terms as irrelevant. Feynman diagrams represent terms in an expansion of all possible interactions of a set of fields in terms of interactions of a given number of copies of the fields, and you add terms with more fields if you need more precision.
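The "measure, then peel off terms" procedure can be sketched with finite differences instead of drawing tangent lines and parabolas by hand. This is a rough illustration, not a robust numerical method: the step size h is an arbitrary assumption, and higher derivatives estimated this way get noisy quickly. The function names are hypothetical. Feeding it the relativistic energy E(p) = √(p²c² + m²c⁴), in units where c = 1, recovers the expansion E ≈ mc² + p²/(2m) + …, i.e. a constant rest energy plus the Newtonian kinetic energy.

```python
import math
from typing import Callable


def taylor_coefficients(f: Callable[[float], float], x0: float,
                        h: float = 1e-3) -> tuple[float, float, float]:
    """Estimate c0, c1, c2 in f(x0 + d) ≈ c0 + c1*d + c2*d**2 from samples of f.

    Only three "measurements" are used: f(x0 - h), f(x0), f(x0 + h).
    """
    f_minus, f_0, f_plus = f(x0 - h), f(x0), f(x0 + h)
    slope = (f_plus - f_minus) / (2 * h)             # first derivative (tangent line)
    curvature = (f_plus - 2 * f_0 + f_minus) / h**2  # second derivative
    return f_0, slope, curvature / 2                 # Taylor coefficients c0, c1, c2


def relativistic_energy(p: float, m: float = 1.0, c: float = 1.0) -> float:
    """Energy of a free particle with momentum p."""
    return math.sqrt((p * c) ** 2 + (m * c**2) ** 2)


c0, c1, c2 = taylor_coefficients(relativistic_energy, 0.0)
print(c0, c1, c2)  # about 1 (rest energy m c^2), 0, and 0.5 (the 1/(2m) in p^2/(2m))
```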

The thing that keeps me up at night is that this may be all that there is to physics. Maybe we don't know anything about the rules of the universe except the fact that they approximately follow some conservation laws (sometimes extremely poorly!) that we can write down, so we make expansions around those conservation laws, make good predictions at the low energies we have access to, and celebrate how well we know the universe. But if all you know about a cosine function is its second-order Taylor approximation, you don't know a lot about a cosine function, even though you can write down very well what it resolves to at small angles. You might know that the cosine function is an even function (you can reflect it across the y axis and get the same function back), but you don't know that it's periodic (you can translate it along the x axis by 2π and get the same function back). Maybe you would start to suspect that the function is periodic after you went to 8th order and saw that you keep getting wiggles around the x axis at higher orders, but only if you had access to high enough angles to see the function wrap back around to the x axis. I am extremely concerned that the Standard Model needs us to measure separate masses for all of the fields and separate interaction strengths for all of the interactions. Don’t get me started on Weinberg angles. We're manually measuring dozens of parameters to plug into the theory. Showing that a 12th-order Taylor expansion fits a function pretty well near the point you're expanding around doesn't tell you much that you didn't already get by assuming the function is differentiable in the first place.
If all that physics has done is find a pretty good approximation for how matter works, we might as well give up on fundamental physics, because I can't think of any useful thing we can do with physics that requires a heat bath of higher temperature than we can make for an instant by smashing protons together in the LHC, and the physics we have so far is perfectly capable of describing everything we've gotten out of the LHC. If you want to know why I maintain an interest in unified theories that do nothing but recreate the standard model, it's because it would be extremely good news to me if you could recreate the standard model with a smaller number of parameters you need to go out and measure.
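To make the cosine analogy concrete, here is a minimal sketch comparing truncated Maclaurin series of cos(x) with the real function. The orders and test angles are arbitrary choices, and `taylor_cos` is a hypothetical helper name. The second-order model is excellent near 0 and already even, like cosine, but at 2π it misses by almost 20, with no hint that the true function has come back around to 1; only much higher orders recover the periodic behavior over that range.

```python
import math


def taylor_cos(x: float, order: int) -> float:
    """Partial sum of the Maclaurin series of cos(x), up to the x**order term."""
    total = 0.0
    for k in range(0, order + 1, 2):  # cosine only has even powers
        total += (-1) ** (k // 2) * x**k / math.factorial(k)
    return total


angles = [0.1, 1.0, math.pi, 2 * math.pi]
for order in (2, 8, 30):
    errors = [abs(taylor_cos(x, order) - math.cos(x)) for x in angles]
    print(f"order {order:2d}: " + "  ".join(f"{e:.2e}" for e in errors))

# The 2nd-order model agrees with cos at 0, but cos(2*pi) is also 1 and the model is not.
print(taylor_cos(0.0, 2), taylor_cos(2 * math.pi, 2))
```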

Can we wrap this up now?

Yes. Even if I'm worried that all of our physics theories are just approximations compatible with many different prospective theories of everything that we might be unable to distinguish, I do think physicists have found some useful concepts to describe the universe, and there are assumptions (physicists often call them symmetries) built into physics theories which have deep consequences for reality and provide constraints on the sorts of theories that could possibly describe the universe. I'm definitely interested in those, and I think non-physicists might be interested too. I’ll write about some of them later.

3 comments


comment by cata · 2024-05-20T18:44:43.543Z

As a non-physicist I kind of had the idea that the reason I was taught Newtonian mechanics in high school was that it was assumed I wasn't going to have the time, motivation, or brainpower to learn some kind of fancy, real university version of it, so the alternate idea that it's useful for intuition-building of the concepts is novel and interesting to me.

comment by quiet_NaN (reply to cata) · 2024-05-21T18:46:34.374Z

It is also useful for a lot of practical problems, where you can treat ħ as being essentially zero and c as being essentially infinite. If you want to get anywhere with any practical problem (like calculating how long a car will take to come to a stop), half of the job is to know which approximations ("cheats") are okay to use. If you want to solve the fully generalized problem (for a car near the Planck units or something), you will find that you would need a theory of everything (that is, quantum mechanics plus general relativity) to do so, and we don't have that.

comment by keltan · 2024-05-20T23:36:20.259Z

I’m just finishing up an intro to physics course for university this semester. I taught myself math last year, so I was expecting the hardest part to be the math itself. But actually the hardest part was similar to the start of “what else is there to say”: understanding that formulas are written with different symbols depending on who is writing them and how they are feeling that day.

Like, why can (s) represent:

- Seconds

- Time itself

- Distance

- Displacement

- Probably a few other things I’m forgetting

The hand rules for magnetic fields are all called different things by different people. What!?

Still not finished reading this post. But I’m really enjoying it so far. Hope to see you at LessOnline!