Intergenerational Knowledge Transfer (IKT)

post by MiguelDev (whitehatStoic) · 2024-03-28T08:14:10.890Z

(This post is intended for my personal blog. Thank you.)

One of the dominant thoughts in my head when I build datasets for my training runs is that what our ancestors 'did' over their lifespans likely played a key role in the creation of language and human values.[1]

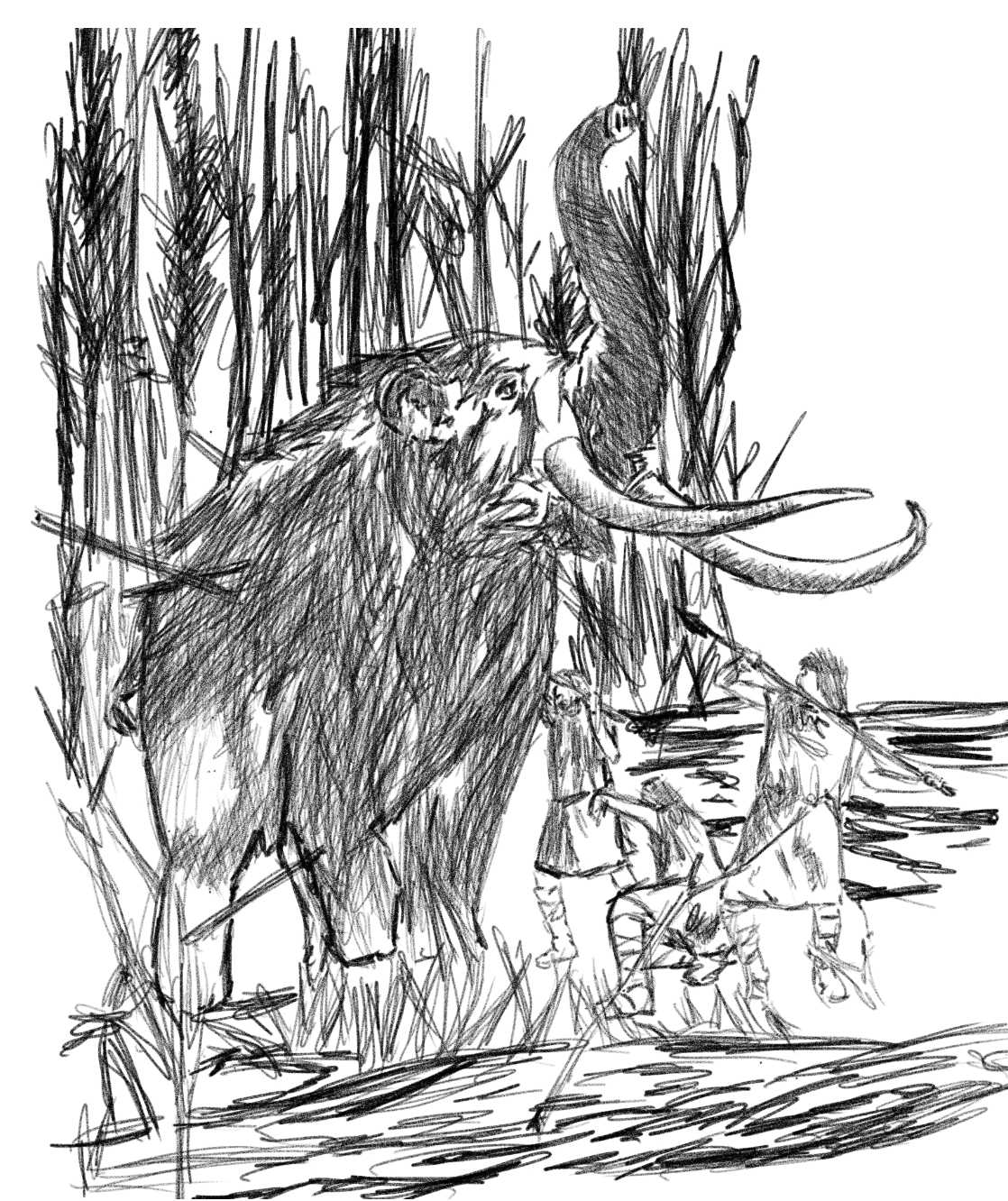

I imagine a tribe whose members had approximately twenty to thirty-five years to accumulate knowledge, such as food preparation, hunting strategies, tool-making, social skills, and predator avoidance. To transmit this knowledge, they likely devised a system of sounds associated with animals, locations, actions, objects, and so on.

Sounds related to survival would have been prioritized. These had immediate, life-and-death consequences, creating powerful associations (or neurochemical activity?) in the brain. Sounds for "danger" or "food" would have been far more potent than navigational instructions. I think evolution favors actions that get used repeatedly. The constant reinforcement of survival sounds and their associated actions (as enabled by our genetics[2]) likely built the foundations of language. I think stories[3] were used to simulate interactions with the world, which required coherent strings of sounds from which an abstracted pattern could emerge.

The process I am describing here, passing information down to the next generation, is what I now call Intergenerational Knowledge Transfer (IKT). Finally, I believe that viewing evolutionary learning as a sequence of IKTs, where each IKT can be considered a sample[4] in a dataset, is not a bad thought experiment to wrestle with.[5]
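To make the "sequence of IKTs" framing a little more concrete, here is a minimal Python sketch, assuming an IKT sample is one pattern plus several elaborations of it (as in footnote 4) and that datasets are layered strictly in order (as in footnote 5). The names `IKTSample`, `sequential_layers`, and `train_on` are hypothetical illustrations, not my actual pipeline; `train_on` stands in for whatever fine-tuning routine you use.

```python
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class IKTSample:
    """Hypothetical IKT sample: one pattern, elaborated repeatedly."""
    pattern: str  # the single, intricate pattern being delineated
    elaborations: List[str] = field(default_factory=list)

    def to_text(self) -> str:
        # Join the pattern and its elaborations into one training document.
        body = "\n".join(self.elaborations)
        return f"{self.pattern}\n{body}"


def sequential_layers(
    layers: List[List[IKTSample]],
    train_on: Callable[[List[str]], None],
) -> int:
    """Feed each dataset layer to a training callback, strictly in order.

    `train_on` is a placeholder (an assumption, not a real library call).
    Each call sees one layer only, so later layers build on whatever the
    earlier layers taught, one "generation" at a time.
    Returns the number of layers processed.
    """
    for layer in layers:
        train_on([sample.to_text() for sample in layer])
    return len(layers)
```

For example, passing `seen.append` as `train_on` with two single-sample layers ("danger" first, then "food") records exactly two calls, in that order. The design choice is simply that order matters: nothing from a later layer is seen before an earlier layer has been consumed.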

- ^

Why do I believe that datasets may serve as a pathway for evolutionary learning? I speculate that our world can be simulated in a capable language model, and that if we can strategically create, construct, or curate a dataset or a series of datasets [LW · GW], aligning language models to our values is possible.

- ^

I'm still trying to wrap my head around what capabilities or genetics are necessary to enable language learning and speech control.

(This might be a relevant reading: The evolutionary history of genes involved in spoken and written language: beyond FOXP2.)

- ^

I should write more next week about why I believe that "stories serve as a universal structure for information." To explain briefly: I think that the pattern of setup, conflict, and resolution, commonly known as a three-act narrative, can encapsulate any complex idea, even the simulation of a world...

- ^

To qualify as an IKT sample in the sense I use in this post, a sample must consist of a collection of related words that are repeatedly elaborated upon, with the aim of delineating a single, intricate pattern.

- ^

I experimented with this by sequentially layering ten datasets [LW · GW] to represent an evolutionary approach to ethical alignment. There were notable improvements in GPT2XL's robustness to jailbreaks (JB), with the model negating up to 67.8% of the attacks [LW · GW]. The same model also solved a theory of mind (ToM) task 72 out of 100 times [LW · GW]. Foundation models frequently fail these tests (see JB: 1 [LW · GW], 2 [LW · GW], 3 [LW · GW]; ToM [LW · GW]).
