Minerva

post by Algon · 2022-07-01T20:06:55.948Z · LW · GW · 6 comments

This is a link post for https://ai.googleblog.com/2022/06/minerva-solving-quantitative-reasoning.html

Contents

- Datasets

- Results

- Random Remarks

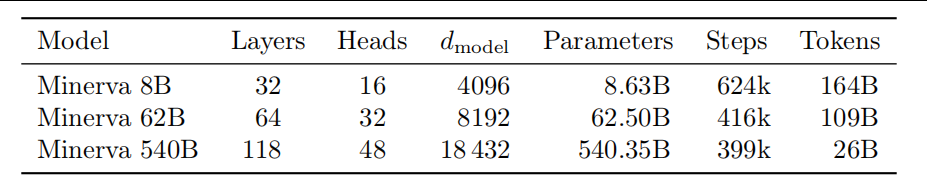

Google Research's new AI tackles natural language math problems and handily outperforms the SOTA[1]. It is a pre-trained PaLM[2] finetuned on some maths datasets (which use LaTeX) composed of maths webpages and arXiv papers (38.5B tokens). Three models were trained, as follows.

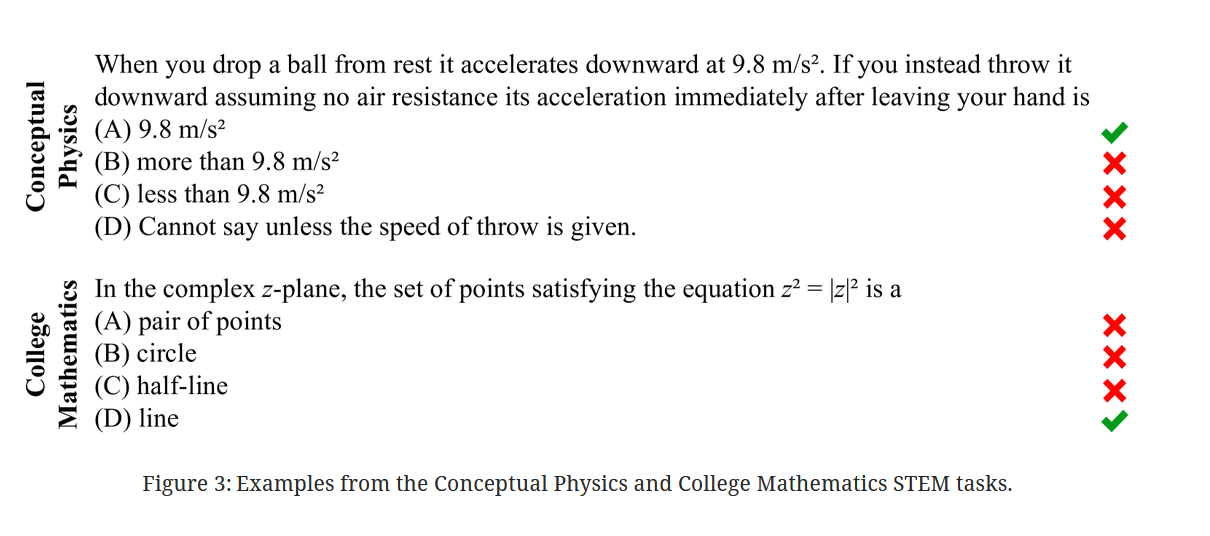

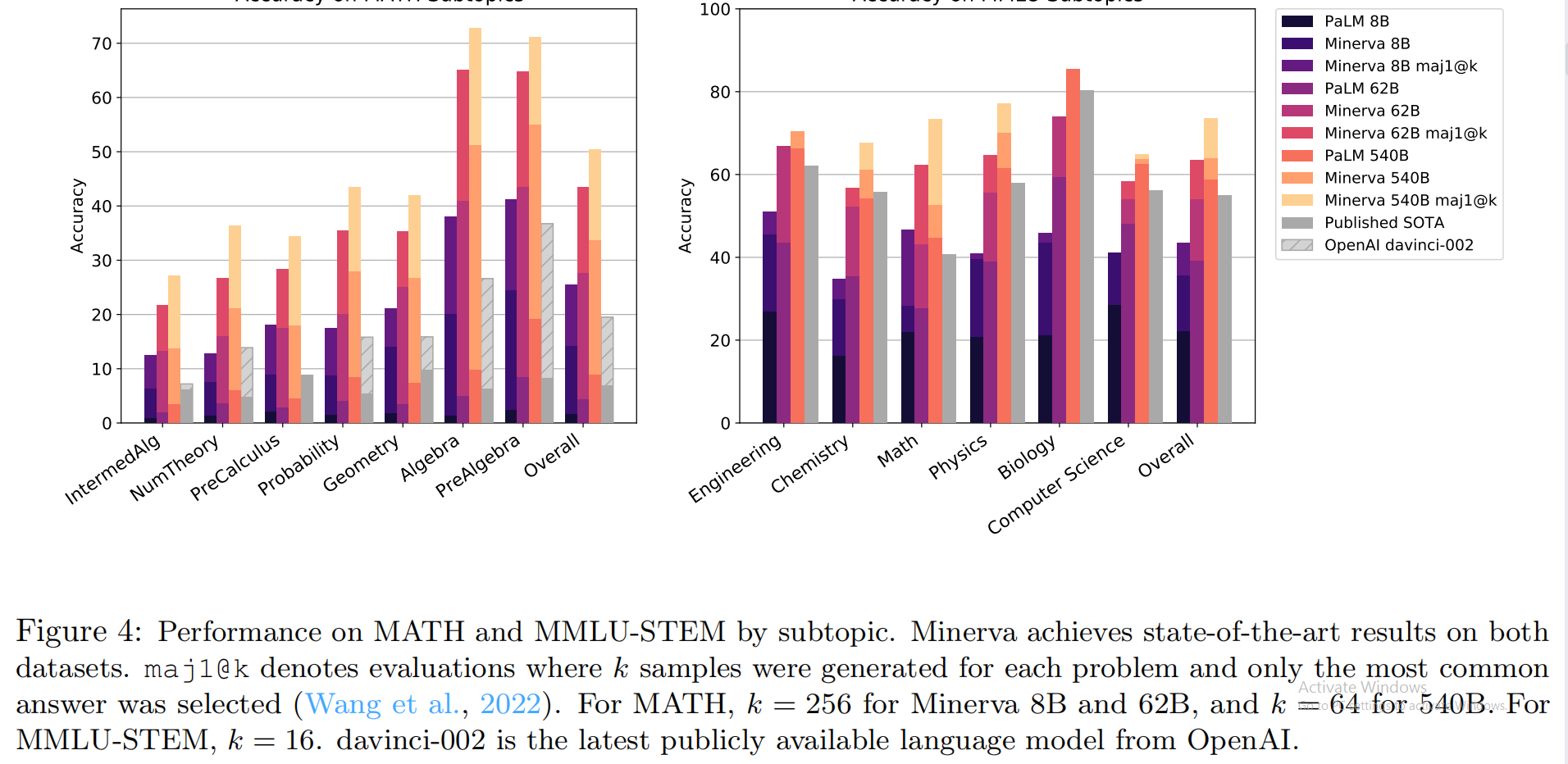

When generating answers, Minerva is always given the same prompt: four questions, each with a correct chain of reasoning and a consistently formatted, correct final answer. Then the actual question is given. Minerva outputs a chain of reasoning and a corresponding answer a number of times, and the most common answer is chosen. Minerva is graded only on the final answer.

This voting algorithm is called maj1@k. It saturates faster than pass@k (generate k answers; if any one of them is right, the question is graded as correct) but doesn't perform as well for large k. This is quite reasonable: majority voting will keep choosing the most common answer, with the error in that estimate shrinking as k grows, whereas pass@k simply gives the model more tries as k grows.
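To make the difference between the two grading schemes concrete, here is a minimal Python sketch. The function names and the sample answers are made up for illustration, and it assumes each sampled final answer has already been normalised to a comparable string. It is not Minerva's actual evaluation code.

```python
from collections import Counter


def maj1_at_k(answers):
    """Return the most common final answer among the k samples.

    Ties are broken in favour of the answer that appeared first
    (an arbitrary choice made for this sketch).
    """
    return Counter(answers).most_common(1)[0][0]


def correct_under_maj1_at_k(answers, reference):
    """Majority voting: only the single most common answer is graded."""
    return maj1_at_k(answers) == reference


def correct_under_pass_at_k(answers, reference):
    """pass@k: the question counts as solved if any sample is right."""
    return reference in answers


# Hypothetical final answers from k = 6 sampled chains of reasoning.
samples = ["12", "15", "12", "7", "12", "15"]

print(maj1_at_k(samples))                      # the majority answer
print(correct_under_maj1_at_k(samples, "7"))   # majority is not "7"
print(correct_under_pass_at_k(samples, "7"))   # but "7" was sampled once
```

With more samples, the majority answer stabilises on whatever the model says most often, while pass@k can only gain from extra samples, which matches the saturation behaviour described above.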

Datasets

The datasets used are:

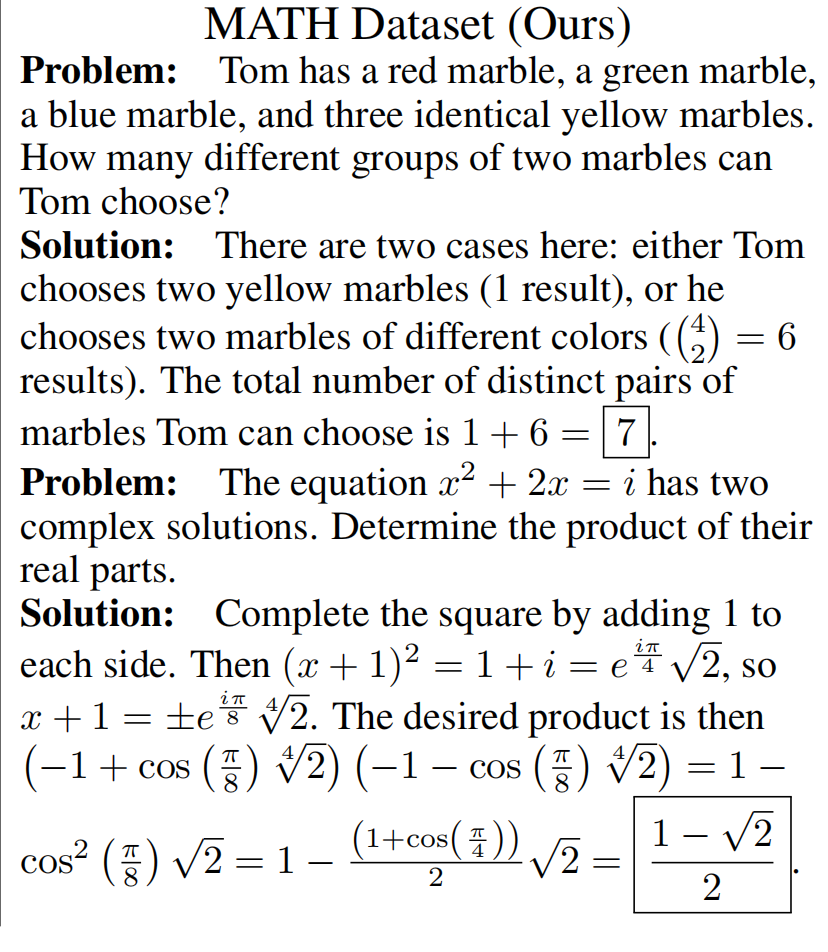

- MATH: High school math competition level problems

- MMLU-STEM: A subset of the Massive Multitask Language Understanding benchmark focused on STEM, covering topics such as engineering, chemistry, math, and physics at high school and college level.

- GSM8k: Grade school level math problems involving basic arithmetic operations that should all be solvable by a talented middle school student.

The datasets have questions which vary in difficulty. Predictably, the model performed worse on harder questions, with false positives increasing linearly with question difficulty on

Results

Now time for a surprise quiz! For the purposes of this quiz, assume we're talking about the most accurate Minerva model (540B parameters using maj1@k sampling, with k=64 for MATH and k=16 for MMLU), and that we're averaging over results on subtopics[3]. Note the SOTA is OpenAI's davinci-002, which obtained absolute (averaged) scores of about 20% and 49%.

And the answers are... no, yes, yes and no. Here's the raw data.

Random Remarks

- I'm not so surprised by these results, given how much AlphaCode improved over the then-SOTA, and given that PaLM is just better at common sense reasoning than GPT-3.

- Finetuning on the MATH dataset didn't improve Minerva, but did improve PaLM significantly.

- Slightly changing the framing of the questions didn't really alter performance. Swapping the numbers out significantly altered the variance between altered and unaltered answers, possibly slightly degrading performance on net. Significantly changing question framing, with or without altering the numbers, increased variance and somewhat degraded performance.

- Interestingly, the model didn't generalise as well to engineering questions as I'd naively expect. I'd have thought if it understood physics, it could nail engineering, but I guess not. Maybe there were some subtopics in the dataset not covered in Engineering?

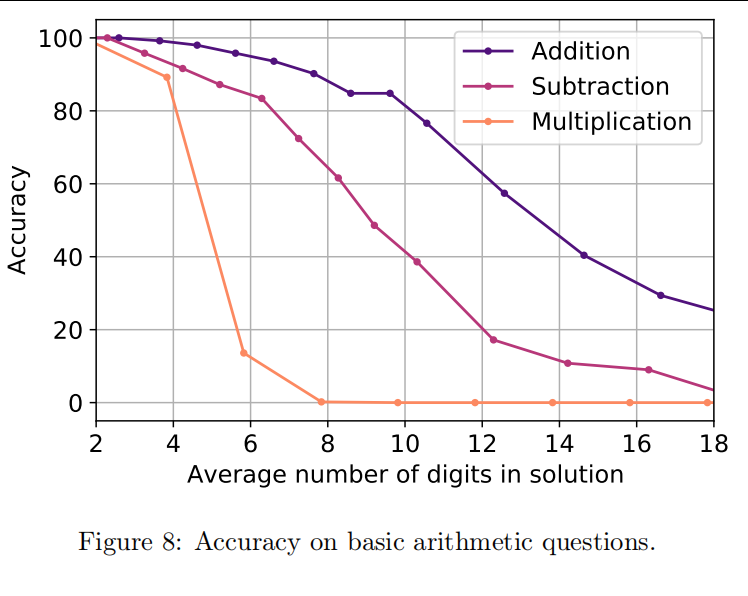

- What about GSM8K? Were the results not included because Minerva can't do grade school maths? Nope. Its performance was just barely SOTA. That said, the model struggles with large number arithmetic, especially multiplication. But cut it some slack, that's better than most mathematicians can do.

- [1] State of the art

- [2] Pathways Language Model, another AI developed by Google Research.

- [3] I'm assigning equal weights to the subtopics on MMLU because I'm too lazy to find out how many questions were on physics and maths in the dataset.

6 comments

Comments sorted by top scores.

comment by Zac Hatfield-Dodds (zac-hatfield-dodds) · 2022-07-01T21:37:54.820Z · LW(p) · GW(p)

Posted yesterday: https://www.lesswrong.com/posts/JkKeFt2u4k4Q4Bmnx/linkpost-solving-quantitative-reasoning-problems-with [LW · GW]

Replies from: Algon

comment by MondSemmel · 2022-07-01T21:05:43.664Z · LW(p) · GW(p)

Question on acronyms: what do SOTA and PaLM mean?

Replies from: Algon

comment by Lone Pine (conor-sullivan) · 2022-07-02T16:50:14.031Z · LW(p) · GW(p)

Hey, I've been trying to figure out how to embed polls in posts, like you did. Is that an elicit prediction embed?

Replies from: Algon