ChatGPT’s Ontological Landscape

post by Bill Benzon (bill-benzon) · 2023-11-01


This is cross-posted from New Savanna.

I've published a new working paper. Title above, links, abstract, table of contents, and introduction below:

Academia.edu: https://www.academia.edu/108791178/ChatGPTs_Ontological_Landscape_A_Working_Paper

SSRN: https://ssrn.com/abstract=4620133

ResearchGate: https://www.researchgate.net/publication/375161835_ChatGPT's_Ontological_Landscape

Abstract: Ontological concepts are the high-level category structures of language. Physical objects can have certain attributes (form, texture, color, weight) and play certain roles with respect to verbs (they can be touched, hit, dropped, etc.). Living objects have those capacities, plus others as well. They can live, die, and grow. Animals have the capacities and affordances of plants, plus others that plants lack. And so it is with humans in respect to animals. This type of category structure is pervasive. Then we have the difference between concrete things, specifiable by how they strike the senses, and abstract things, which are not (necessarily) perceptible. Consider the difference between “salt,” which is conceptually concrete, and “NaCl,” which is a physical thing that is conceptually abstract. This kind of difference is pervasive as well. The first two sections of this paper explore ChatGPT on those matters. The third turns to the parlor game 20 questions as a way of exploring its ability to negotiate these conceptual structures. In games with six target concepts, each tried twice for a total of 12 games, ChatGPT performs better with abstract than with concrete targets.

Contents

Introduction: Ontological structure in cognition 2

From Salt and NaCl to the Great Chain 5

Mapping ChatGPT’s ontological landscape, gradients and choices [interpretability] 13

ChatGPT Plays 20 Questions [& sometimes needs help] 30

Appendix: Some papers on cognitive ontology 52

Introduction: Ontological structure in cognition

Perhaps the most famous sentence in twentieth-century linguistics is one that Noam Chomsky made up to argue that syntax is independent of semantics: “Colorless green ideas sleep furiously.” The syntax is fine, but the sentence is nonsense. Ideas are not the sorts of things that have colors or that can sleep. Those are what philosophers have come to call category mistakes.[1] Interestingly enough, the first time I asked ChatGPT to tell a story in which a colorless green idea was the protagonist, it refused [2]:

I'm sorry, but the concept of a "colorless green idea" is a nonsensical phrase that was invented as an example of a phrase that would be grammatically correct but semantically meaningless. It is not possible to create a story about a "colorless green idea" as it does not have any physical properties or characteristics that can be used in a story.

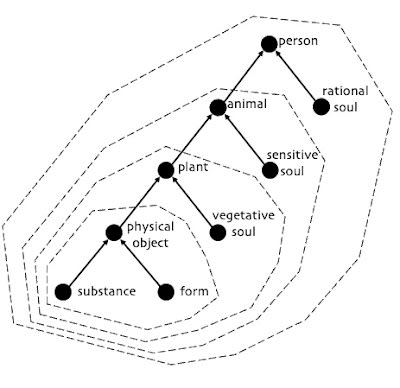

That’s one aspect of ontological cognition, and it is pervasive. Physical objects have form – cube, sphere, and so forth, but more complex forms are common – and are constituted of some substance or substances – stone, metal, wood, cloth, and so forth. They have textures (smooth, rough, slick, sticky, etc.) and colors (of which there are thousands). But they can’t shrink or grow. Plants can do those things, and reproduce as well. But they can’t move around of their own accord, nor do they have the capacity for sensation. Those capacities belong to animals, but animals cannot reason or speak. But humans can.

Those dependencies give rise to a conceptual structure that we can diagram like this:

I take the terminology of souls, vegetative, sensitive, and rational, from Aristotle, who laid it out in De Anima. Over time this structure became elaborated into a metaphysical trope called The Great Chain of Being.[3] However, I’m not interested in the Chain as a philosophical idea, or an explicit conceptual trope for organizing the world. I’m interested in the perceptual and cognitive underpinnings of that structure; it is those that give rise to category mistakes and to ChatGPT’s reluctance to tell a story with a colorless green idea as the protagonist.
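As a rough illustration, the layered capacities described above can be sketched as a small inheritance structure in which each kind adds capacities to those of the kind below it. This is only a sketch under stated assumptions: the kind names, the capacity labels, the placement of "sleep" at the animal level, and the treatment of "idea" as a kind outside the chain are all illustrative choices, not something taken from the working paper.

```python
# Illustrative sketch only: kind names and capacity labels are assumptions,
# loosely following the paragraph on objects, plants, animals, and humans.
KINDS = {
    # kind: (parent kind or None, capacities added at this level)
    "physical object": (None, {"have form", "have texture", "have color"}),
    "plant": ("physical object", {"grow", "shrink", "reproduce"}),
    "animal": ("plant", {"move", "sense", "sleep"}),  # "sleep" is an added assumption
    "human": ("animal", {"reason", "speak"}),
    # Abstract things sit outside the chain and inherit nothing perceptible.
    "idea": (None, set()),
}


def capacities(kind):
    """Collect a kind's own capacities plus everything inherited from below."""
    result = set()
    while kind is not None:
        parent, added = KINDS[kind]
        result |= added
        kind = parent
    return result


def is_category_mistake(kind, capacity):
    """True when the capacity is not available to things of this kind."""
    return capacity not in capacities(kind)
```

Under these assumptions, `is_category_mistake("idea", "sleep")` and `is_category_mistake("idea", "have color")` both come out true, which is the toy counterpart of "colorless green ideas sleep," while `is_category_mistake("human", "grow")` comes out false because humans inherit the capacities of plants.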

This pervasive conceptual structure has another aspect that I like to illustrate with the difference between (ordinary table) salt and NaCl. Physically, they are pretty much the same thing, though salt has impurities that NaCl, by definition, does not. But conceptually they are quite different. Salt is defined by its color, the shape of its grains, and, above all, by its taste. One can sense the presence of salt by taste alone. This is a concept readily available to children and even infants, depending on just what you mean by “concept.” NaCl is quite different. It exists in a conceptual matrix that didn’t exist until the nineteenth century, one involving chemical elements, atoms, molecules, and bonds between atoms. The same difference exists between water and H2O and laughing gas and N2O, and so on for a whole variety of common substances. Nineteenth-century chemistry imposes a conceptual structure on the world that is different from that of common sense.

And this difference between common-sense conceptualizations and more sophisticated ones (science, if you will, though not only science proper) is pervasive. Biologists have Latinate names for creatures you and I see as rose bushes, pine trees, dogs, cats, and lobsters. So it goes with physicists, geologists, astronomers, even sociologists, economists and other social scientists, not to mention – would you believe it? – literary critics. Our conceptual universe is filled with specialized conceptual repertoires belonging to specialized disciplines.

What does ChatGPT know of any of this? Well, it likely knows all the terms, certainly many more terms across many more disciplines than any one human being knows. Whether it understands those terms deeply or only superficially is another question, well beyond the scope of this paper.

* * * * *

From Salt and NaCl to the Great Chain – I quiz ChatGPT on the basics: salt vs. NaCl, Morning Star and Evening Star, The Great Chain of Being, De Anima, Plato’s parable of the charioteer, and the study of ontology.

Mapping ChatGPT’s ontological landscape, gradients and choices [interpretability] – I start by exploring the difference between concrete and abstract questions, move on to the Great Chain, and conclude by asking it how 20 questions exploits the semantic structure of language. Along the way I introduce Waddington’s epigenetic landscape as a metaphor for ChatGPT’s operation.

ChatGPT Plays 20 Questions [& sometimes needs help] – I explore ChatGPT’s facility with categories by playing 20 questions with it, two rounds each on: bicycle, squid, justice, apple, evolution, and truth. On the whole it seemed to do better with abstract concepts than with concrete.

Appendix: Some papers on cognitive ontology – Four papers, including abstracts. Three are specifically about conceptual ontology. The fourth is about cultural evolution and discusses the elaboration of conceptual ontology over the long course of cultural evolution, salt vs. NaCl writ large.
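To make concrete how 20 questions can exploit category structure, here is a toy sketch in which a guesser narrows down the six target concepts listed above by asking yes/no questions about features. It is not a model of how ChatGPT plays and does not reproduce the games in the paper. The feature names and their assignment to each target are hypothetical, chosen only so that the six targets can be told apart.

```python
# Toy sketch: feature assignments are hypothetical, not taken from the paper.
FEATURES = {
    "bicycle": {"concrete"},
    "squid": {"concrete", "living", "animal"},
    "apple": {"concrete", "living"},
    "justice": {"social value"},
    "evolution": {"process"},
    "truth": set(),
}
ALL_FEATURES = sorted(set().union(*FEATURES.values()))


def guess(target):
    """Ask yes/no feature questions until one candidate remains.

    Returns the surviving candidate and the number of questions asked.
    """
    remaining = set(FEATURES)
    asked = 0
    while len(remaining) > 1:
        def yes_count(feature):
            return sum(feature in FEATURES[c] for c in remaining)

        # Only consider questions whose answer differs among the candidates.
        splitting = [f for f in ALL_FEATURES if 0 < yes_count(f) < len(remaining)]
        # Prefer the question that divides the candidates most evenly.
        best = min(splitting, key=lambda f: abs(2 * yes_count(f) - len(remaining)))
        answer = best in FEATURES[target]
        remaining = {c for c in remaining if (best in FEATURES[c]) == answer}
        asked += 1
    return remaining.pop(), asked
```

Calling `guess("squid")` returns the target together with the number of questions it took. The point of the sketch is that high-level distinctions such as concrete versus abstract remove many candidates at once, which is why questions about ontological category are useful early in a game.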

References

[1] Magidor, Ofra, "Category Mistakes", The Stanford Encyclopedia of Philosophy (Fall 2022 Edition), Edward N. Zalta & Uri Nodelman (eds.), https://plato.stanford.edu/archives/fall2022/entries/category-mistakes.

[2] There’s truth, lies, and there’s ChatGPT [Realms of Being], New Savanna, Jan. 25, 2023, https://new-savanna.blogspot.com/2023/01/theres-truth-lies-and-theres-chatgpt.html.

[3] Wikipedia, Great Chain of Being, https://en.wikipedia.org/wiki/Great_chain_of_being. Nee, S., The great chain of being, Nature 435, 429 (2005), https://doi.org/10.1038/435429a.
