Against the Open Source / Closed Source Dichotomy: Regulated Source as a Model for Responsible AI Development

post by alex.herwix · 2023-09-04T20:25:56.662Z · LW · GW · 12 comments

This is a link post for https://forum.effectivealtruism.org/posts/GBAzW4pZ5JgJqGMJg/against-the-open-source-closed-source-dichotomy-regulated

Contents

- Context
- Open Source vs. Closed Source AI Development
- Regulated Source as a Model for Responsible AI Development
- Concluding Remarks

Context

This is a short post to illustrate and start testing an idea that I have been playing around with over the last couple of days after listening to a recent 80,000 Hours podcast with Mustafa Suleyman. In the podcast, Suleyman and Rob Wiblin expressed concerns about Open Source AI development as a potential risk for our societies while implying that Closed Source development would be the only reasonable alternative. They did not delve deeper into the topic to examine their own assumptions about what makes for reasonable alternatives in this context, or to look for possible alternatives beyond the "standard" open source/closed source dichotomy. With this post, I want to encourage our community to join me in reflecting on our own discourse and assumptions around responsible AI development, so that we do not fall into the trap of naively reifying existing categories, and in developing new visions and models that are better able to address the challenges we will be facing. As a first step, I explore the notion of Regulated Source as a model for responsible AI development.

Open Source vs. Closed Source AI Development

Currently, there are two main competing modes of AI development, namely Open Source and Closed Source (see Table 1 for comparison):

- Open Source “is source code that is made freely available for possible modification and redistribution. Products include permission to use the source code, design documents, or content of the product. The open-source model is a decentralized software development model that encourages open collaboration. A main principle of open-source software development is peer production, with products such as source code, blueprints, and documentation freely available to the public” (Wikipedia).

- Closed Source “is software that, according to the free and open-source software community, grants its creator, publisher, or other rightsholder or rightsholder partner a legal monopoly by modern copyright and intellectual property law to exclude the recipient from freely sharing the software or modifying it, and—in some cases, as is the case with some patent-encumbered and EULA-bound software—from making use of the software on their own, thereby restricting their freedoms.” (Wikipedia)

Table 1. Comparison Table for Open Source vs. Closed Source inspired by ChatGPT 3.5.

| Criteria | Open Source | Closed Source |
|---|---|---|
| Accountability | Community-driven accountability and transparency | Accountability lies with owning organization |
| Accessibility of Source Code | Publicly available, transparent | Proprietary, restricted access |
| Customization | Highly customizable and adaptable | Limited customization options |
| Data Privacy | No inherent privacy features; handled separately | May offer built-in privacy features, limited control |
| Innovation | Enables innovation | Limits potential for innovation |
| Licensing | Various open-source licenses | Controlled by the owning organization's terms |
| Monetization | Monetization through support, consulting, premium features | Monetization through licensing, subscriptions, fees |
| Quality Assurance | Quality control depends on community | Centralized control for quality assurance and updates |
| Trust | Transparent, trust-building for users | Potential concerns about hidden biases or vulnerabilities |
| Support | Reliant on community or own expertise for support | Reliant on owning organization for support |

If we look at these modes of software development, they have both been argued to have positive and negative implications for AI development. For example, Open Source has often been suggested as a democratizing force in AI development, acting as a powerful driver of innovation by making AI capabilities accessible to a broader segment of the population. This has been argued to be potentially beneficial for our societies, preventing or at least counteracting the centralization of control in the hands of a few, which poses the threat of dystopian outcomes (e.g., autocratic societies run by a surveillance state or a few mega corporations). At the same time, some people worry that the democratization of AI capabilities may increase the risk of catastrophic outcomes because not everyone can be trusted to use them responsibly. In this view, centralization is a good feature because it makes it easier to control the situation as a whole since fewer parties need to be coordinated. A prominent analogy used to support this view is with our attempts to limit the proliferation of nuclear weapons, where strong AI capabilities are viewed as similar in their destructive potential to nuclear weapons.

Against this background, an impartial observer may argue that both Open Source and Closed Source development models point to potential failure modes for our societies:

- Open Source development models can increase the risk of catastrophic outcomes when irresponsible actors gain access to powerful AI capabilities, creating opportunities for deliberate misuse or catastrophic accidents.

- Closed Source development models can increase the risk of dystopian outcomes when control of powerful AI capabilities is centralized in the hands of a few, creating opportunities for them to take autocratic control over our societies.

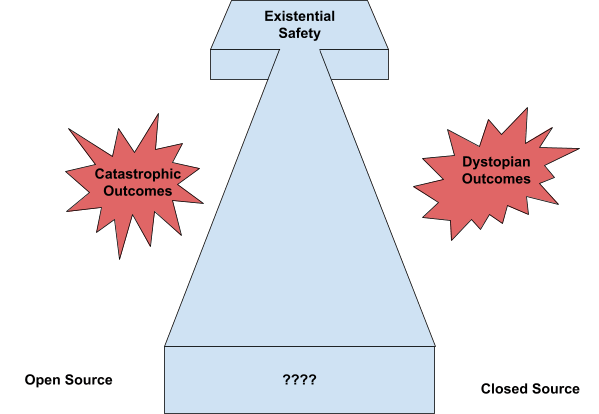

This leads to a dilemma that Tristan Harris and Daniel Schmachtenberger have illustrated with the metaphor of a bowling alley, where the two gutters to the left and right of the alley represent the two failure modes of catastrophic or dystopian outcomes.[1] In this metaphor, the only path that can lead us to existential security is a middle path that acknowledges but avoids both failure modes (see Fig. 1). Similarly, given the risk-increasing nature of both Open Source and Closed Source AI development approaches, an interesting question is whether it is possible to find a middle ground AI development approach that avoids their respective failure modes.

Regulated Source as a Model for Responsible AI Development

In this section, I begin to sketch out a vision for responsible AI development that aims to avoid the failure modes associated with Open Source and Closed Source development by trying to take the best and leave behind the worst of both. I call this vision a “Regulated Source AI Development Model” to highlight that it aims to establish a regulated space as a middle ground between the more extreme Open Source and Closed Source models (cf. Table 2).

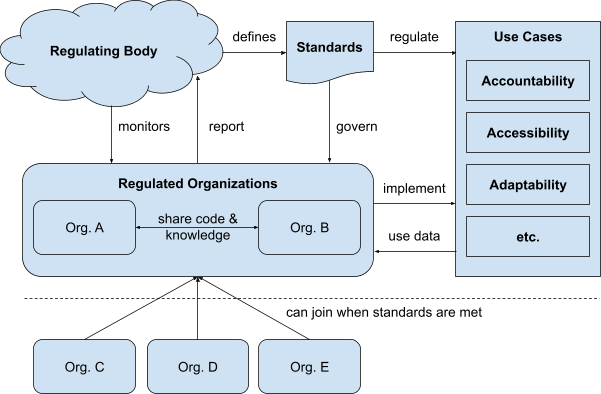

As visualized in Fig. 2 and summarized in Table 2, the core idea of the Regulated Source model is to establish a trustworthy and publicly accountable regulating body which defines transparent standards that not only regulate AI use cases but also govern the behavior of organizations that want to implement these use cases. In particular, such standards could mandate the sharing of code and other knowledge assets relating to the implementation of AI use cases to level the playing field between the regulated organizations and reduce the chance of AI capability development races by lowering the expected benefit of unilateral actions. Importantly, such sharing of code and knowledge assets would be limited to organizations (or other actors) that have demonstrated that they can meet the transparent standards set by the regulating body, thus balancing the risks associated with the proliferation of potentially dangerous capabilities on the one hand (i.e., the failure mode of Open Source) and the centralization of power on the other hand (i.e., the failure mode of Closed Source).

A real-life example that already comes close to the envisioned Regulated Source model is the International Atomic Energy Agency (IAEA). The IAEA was founded in 1957 as an intergovernmental organization to monitor the global proliferation of nuclear resources and technology and serves as a forum for scientific and technical cooperation on the peaceful use of nuclear technology and nuclear power worldwide. For this, it runs several programs to encourage the safe and responsible development and use of nuclear technology for peaceful purposes and also offers technical assistance to countries worldwide, particularly in the developing world. It also provides international safeguards against the misuse of nuclear technology and has the authority to monitor nuclear programs and to inspect nuclear facilities. As such, there are many similarities between the IAEA and the envisioned Regulated Source model, the main differences being the domain of regulation and the weaker linkage to copyright regulation. As far as I am aware, the IAEA does not have regulatory power to distribute access to privately developed nuclear technology, whereas the Regulated Source model would aim to compel responsible parties to share access to AI development products in an effort to counteract race dynamics and the centralization of power.

Table 2. Characteristics of a Regulated Source AI Development Model.

| Criteria | Regulated Source |
|---|---|
| Accountability | Accountability and transparency regulated by governmental, inter-governmental, or recognized professional bodies (cf. International Atomic Energy Agency (IAEA)) |
| Accessibility of Source Code | Restricted access to an audience that is clearly and transparently defined by regulating bodies; all who fulfill required criteria are eligible for access |
| Customization | Highly customizable and adaptable within limits set by regulating bodies |
| Data Privacy | Minimum standards for privacy defined by regulating bodies |
| Innovation | Enables innovation within limits set by regulating bodies |
| Licensing | Technology or application specific licensing defined by regulating bodies |
| Monetization | Mandate to optimize for public benefit. Options include support, consulting, premium features but also licensing, subscriptions, fees |
| Quality Assurance | Minimum standards for quality control defined by regulating bodies |
| Trust | Transparent for regulating bodies, trust-building for users |
| Support | Reliant on regulated organizations for support |

Concluding Remarks

I wrote this post to encourage discussion about the merits of the Regulated Source AI Development Model. While many people may have had similar ideas or intuitions before, I still miss a significant engagement with such ideas in the ongoing discourse on AI governance (at least as far as I am aware). Much of the discourse has touched on the pros and cons of open source and closed source models for AI development, but if we look closely, we should realize that focusing only on this dichotomy has put us between a rock and a hard place. Neither model is sufficient to address the challenges we face. We must avoid not only catastrophe, but also dystopia. New approaches are needed if we're going to make it safely to a place that's still worth living in.

The Regulated Source AI Development Model is the most promising approach I have come up with so far, but more work is certainly needed to flesh out its implications in terms of opportunities, challenges, or drawbacks. For example, despite its simplicity, Regulated Source seems to be suspiciously absent from the discussion of licensing frontier AI models, so perhaps there are reasons inherent in the idea that can explain this? Or is it simply that it is still such a niche idea that people do not recognize it as potentially relevant to the discussion? Should we do more to promote this idea, or are there significant drawbacks that would make it a bad idea? Many questions remain, so let's discuss them!

P.S.: I am considering writing up the ideas expressed in this post for an academic journal. Reach out if you would like to contribute to such an effort.

[1] Listen to Tristan Harris and Daniel Schmachtenberger on the Joe Rogan Experience Podcast.

12 comments


comment by jessicata (jessica.liu.taylor) · 2023-09-04T22:52:48.098Z · LW(p) · GW(p)

This is presented as a level of centralization between that of open-source and closed-source, but is actually more centralized than closed-source. In closed-source, anyone can write source code and can allow or restrict access to it how they like. In this proposal, this is not the case, since permission from the regulatory body is required. One would therefore expect even more concentration of AI capabilities in the hands of even fewer actors, as compared with closed-source.

Replies from: alex.herwix↑ comment by alex.herwix · 2023-09-05T05:53:00.678Z · LW(p) · GW(p)

This is not necessarily true because resources and source code would be shared between all actors who pass the bar, so to speak. So capabilities should be diffused more widely between actors who have demonstrated competence than in a closed source model. It would be a problem if the bars were too high and tailored to suit only the needs of very few companies. But the ideal would be strong competition because the required standards are appropriate and well-measured, with the regulating body investing resources into the development and support of new responsible players to broaden the base. However, mechanisms for phasing out players that are not up to the task anymore need to be found. All of this would aim to take much of the financial incentive out of the game so as to avoid race dynamics. Only organizations who want to do right by the people are incentivized; profit maximization is taken off the table.

I agree that the regulating body is in a powerful position, but there would seem to be ways to set it up in such a way that this is handled. There could be a mix of, e.g., governmental, civil society, and academic representatives that cross-check each other. There could also be public scrutiny and the ability to sue in front of international courts. It’s not easy, I concede that, but to me this really does seem like an alternative worth discussing.

Replies from: jessica.liu.taylor↑ comment by jessicata (jessica.liu.taylor) · 2023-09-05T17:41:52.487Z · LW(p) · GW(p)

I don't see how this proposal substantially differs from nationalizing all AI work. Governments can have internal departments that compete, like corporations can. Removing the profit motive seems to only leave government and nonprofit as possibilities, and the required relationships with regulators make this more like a government project for practical purposes.

Without specifying more about the governance structure, this is basically nationalization. It is understandable that people would oppose nationalization both due to historical bad results of communist and fascist systems of government, and due to specific reasons why current governments are untrustworthy, such as handling of COVID, lab leak, etc recently.

In an American context, it makes very little sense to propose government expansion (as opposed to specific, universally-applied laws) without coming to terms with the ways that government has shown itself to be untrustworthy for handling catastrophic risks.

I think, if centralization is beneficial, it is wiser to centralize around DeepMind than any government. But again, the justification for this proposal seems spurious if it is in effect tending towards more centralization than default closed source AI.

Replies from: alex.herwix↑ comment by alex.herwix · 2023-09-05T21:03:50.378Z · LW(p) · GW(p)

So, I concede that the proposal is pretty vague and general and that this may make it difficult to get the gist of it but I think it's still pretty clear that the idea is broader than nationalizing. I refer specifically to the possible involvement of intergovernmental, professional, or civil society organizations in the regulating body. With regards to profit, the degree to which profit is allowed for could be regulated for each use case separately with some (maybe the more benign) use cases being more tailored to profit seeking companies than others.

Nevertheless, I agree that for an in-depth discussion of pros and cons, more details regarding a possible governance structure would be desirable. That's the whole point of the post: we should start thinking about what governance structures we would actually want to have in place rather than assuming that it must be "closed source" or "open source". I don't have the answer but I advocate for engaging with an important question.

I completely disagree with the sentiment of the rest of your comment that "hands on regulation" is dead in the water because government is incompetent and that hoping for Google DeepMind or OpenAI to do the right things is the best way forward.

First, as I already highlighted above, nothing in this approach says that government alone must be the ones calling the shots. It may not be easy but it also seems entirely possible to come up with new and creative institutions that are able to regulate AI similar to how we are regulating companies, cars, aviation, or nuclear technology. Each of the existing institutions may have faults but we can learn from them, experiment with new ones (e.g., citizen assembly) and continuously improve (e.g., see the work on digital democracy in Taiwan). We must if we want to avoid both failure modes outlined in the post.

Second, I am surprised by the sanguine tone with regards to trusting for profit institutions. What is your argument that this will work out ok for all of us in the end? Even if we don't die due to corner cutting because of race dynamics, how do we ensure that we don't end up in a dystopia where Google controls the world and sells us to the highest bidder? I mean that's their actual business model after all, right?

Replies from: jessica.liu.taylor↑ comment by jessicata (jessica.liu.taylor) · 2023-09-05T21:14:21.601Z · LW(p) · GW(p)

Getting AI right is mainly a matter of technical competence and technical management competence. DeepMind is obviously much better at those than any government, especially in the AI domain. The standard AI risk threat is not that some company aligns AI to its own values, it's that everyone dies because AI is not aligned to anyone's values, because this is a technically hard problem, as has been argued on this website and in other writing extensively. If Google successfully allocates 99% of the universe to itself and its employees and their families and 1% to the rest of the people in the world, that is SO good for everyone's values compared with the default trajectory, due to a combination of default low chance of alignment, diminishing marginal utility in personal values, and similarity of impersonal values across humans.

If a government were to nationalize AI development, I would think that the NSA was the best choice due to their technical competence, although they aren't specialized in AI, so this would still be worse than DeepMind. DeepMind founder Shane Legg has great respect for Yudkowsky's alignment work.

Race dynamics are mitigated by AI companies joining the leader in the AI space, which is currently DeepMind. OpenAI agrees with "merge and assist" as a late-game strategy. Recent competition among AI firms, primarily in LLMs, is largely sparked by OpenAI (see Claude, Bard, Gemini). DeepMind appeared content to release few products in the absence of substantial competition.

> Even if we don’t die due to corner cutting because of race dynamics, how do we ensure that we don’t end up in a dystopia where Google controls the world and sells us to the highest bidder?

Google obviously has no need to sell anything to anyone if they control the world. This sentence is not a logical argument, it is rhetoric.

Replies from: alex.herwix↑ comment by alex.herwix · 2023-09-06T07:40:03.706Z · LW(p) · GW(p)

Alright, it seems to me like the crux between our positions is that you are unwilling or unable to consider whether new institutions could create an environment that is more conducive to technical AI alignment work because you feel that this is a hopeless endeavor. Societies (in your view that seems to be just government) are simply worse at creating new institutions compared to the alternative of letting DeepMind do its thing. Moreover, you don't seem to acknowledge that it is worthwhile to consider how to avoid the dystopian failure mode because the catastrophic failure mode is simply much larger and more devastating.

If this is a reasonable rough summary of your views, then I continue to stand my ground because I don't think they are as reasonable and well founded as you make them out to be.

First, as I tried to explain in various comments now, there is no inherent need to put only government in charge of regulation but you still seem to cling to that notion. I also never said that government should do the technical work. This whole proposal is clearly about regulating use cases for AI and the idea that it may be interesting to consider if mandating the sharing of source code and other knowledge assets could help to alleviate race dynamics and create an environment where companies like DeepMind and OpenAI can actually focus on doing what's necessary to figure out technical alignment issues. You seem to think that this proposal would want to cut them out of the picture... No, it would simply aim to shift their incentives so that they become more aligned with the rest of humanity.

If your argument is that they won't work on technical alignment if they are not the only "owners" of what they come up with and have a crazy upside in terms of unregulated profit, maybe we should consider whether they are the right people for the job? I mean, much of your own argument rests on the assumption that they will be willing to share at some point. Why should we let them decide what they are willing to share rather than come together to figure this stuff out before the game is done? Do you think this would be so much of a distraction to their work that just contemplating regulation is a dangerous move? That seems unreasonably naive and short-sighted. In the worst case (i.e., all expert organizations defecting from work on alignment), with a powerful regulating body/regime in place, we could still hire individual people as part of a more transparent "Manhattan project" and simply take more time to roll out more advanced AI capabilities.

Second, somewhat ironically, you are kind of making the case for some aspects of the proposal when you say:

> Race dynamics are mitigated by AI companies joining the leader in the AI space, which is currently DeepMind. OpenAI agrees with "merge and assist" as a late-game strategy. Recent competition among AI firms, primarily in LLMs, is largely sparked by OpenAI (see Claude, Bard, Gemini). DeepMind appeared content to release few products in the absence of substantial competition.

The whole point of the proposal is to argue for figuring out how to merge efforts into a regulated environment. We should not need to trust OpenAI to do the right thing when the right time comes. There will be many different opinions about what the right thing is and when the right time is. Just letting for-profit companies merge as they see fit is almost predictably a bad idea and bound to be slow if our current institutions tasked with overseeing mergers and acquisitions are involved in processes that they do not understand and have no experience with. Maybe it's just me, but I would like to figure out how society can reasonably deal with such situations before the time comes. Trusting in the competence of DeepMind to figure out those issues seems naive. As you highlighted, for-profit companies are good at technical work where incentives are aligned but much less trustworthy when confronted with the challenge of having to figure out reasonable institutions that can control them (e.g., regulatory capture is a thing).

Third, your last statement is confusing to me because I do believe that I asked a sensible question.

> Google obviously has no need to sell anything to anyone if they control the world. This sentence is not a logical argument, it is rhetoric.

Do you mean that the winner of the race will be able to use advanced nano manufacturing and other technologies to simply shape the universe in their image and, thus, not require currencies anymore because coordination of resources is not needed? I would contest this idea as not at all obvious because accounting seems to be a pretty fundamental ingredient in supposedly rational decision making (which we kind of expect a superintelligence to implement). Or do you want to imply that they would simply decide against keeping other people around? I think that would qualify as "dystopian" in my book and, hence, kind of support my argument. Thus, I don't really understand why you seem to claim that my (admittedly pointedly formulated) suggestion that the business model of the winner of the race is likely to shape the future significantly is not worth discussing?

Anyhow, I acknowledge that much of your argument rests on the hope and belief that Google DeepMind et al. are (the only) actors that can be trusted to solve technical alignment in the current regulatory environment and that they will do the right things once they are there. To me that seems more like wishful thinking rather than a well-grounded argument but I also know the situation where I have strong intuitions and gut feelings about what the best course of action may be, so I sympathize with your position to some degree.

My intent is simply to raise some awareness that there are other alternatives beyond the dichotomy between open source and closed source which we can come up with that may help us to create a regulatory environment that is more conducive to realizing our common goals. More than hope is possible if we put our minds and efforts to it.

comment by Chris_Leong · 2023-09-05T05:46:40.040Z · LW(p) · GW(p)

> Whereas the Regulated Source model would aim to compel responsible parties to share access to AI development products in an effort to counteract race dynamics and the centralization of power.

I guess my issue is that I expect regulated source to effectively become open-source because someone will leak it like they leaked LLaMA.

Replies from: alex.herwix↑ comment by alex.herwix · 2023-09-05T06:04:19.719Z · LW(p) · GW(p)

I think it could be possible to introduce more stringent security measures. We can generally keep important private keys from being leaked so if we treat weights carefully, we should be able to have at least a similar track record. We can also forbid the unregulated use of such software similar to the unregulated use of nuclear technology. Also in the limit, the problem still exists in a closed source world.

Llama is a special case because there are no societal incentives against it spreading… the opposite is the case! Because it was “proprietary”, it’s the magic secret sauce that everyone wants to stay afloat and in the race. In such an environment it’s clear that leaking or selling out is just a matter of time. I am trying to advocate a paradigm shift where we turn work on AI into a regulated industry shaped for the benefit of all rather than driven by profit maximization.

Replies from: Chris_Leong↑ comment by Chris_Leong · 2023-09-05T09:41:54.124Z · LW(p) · GW(p)

I'm not a fan of profit maximisation either.

Although I'm much more concerned about the potential for us to lose control than for a particular corporation to make a bit too much profit.

↑ comment by alex.herwix · 2023-09-05T12:00:02.538Z · LW(p) · GW(p)

I think my intuition would be the opposite... The more room for profit, the more incentives for race dynamics and irresponsible gold rushing. Why would you think it's the other way around?

comment by NeroWolfe · 2023-09-06T15:13:55.219Z · LW(p) · GW(p)

I don't share your optimistic view that transnational agencies such as the IAEA will be all that effective. The history of the nuclear arms race is that those countries that could develop weapons did, leading to extremes such as the Tsar Bomba, a 50-megaton monster that was more of a dick-waving demonstration than a real weapon. The only thing that ended the unstable MAD doctrine was the internal collapse of the Soviet Union. So, while countries have agreed to allow limited inspection of their nuclear facilities and stockpiles, it's nothing like the level of complete sharing that you envision in your description.

I think there is something to be said for treating AI technology similar to how we treat NBC weapons: tightly regulated and with robust security protocols, all backed up by legal sanctions, based on the degree of danger. However, it seems likely that the major commercial players will fight tooth and nail to avoid that situation, and you'll have to figure out how to apply similar restrictions worldwide.

So, I think this is an excellent discussion to have, but I'm not convinced that the regulated source model you describe is workable.

Replies from: alex.herwix↑ comment by alex.herwix · 2023-09-06T17:37:02.022Z · LW(p) · GW(p)

Thanks for engaging with the post and acknowledging that regulation may be a possibility we should consider and not reject out of hand.

> I don't share your optimistic view that transnational agencies such as the IAEA will be all that effective. The history of the nuclear arms race is that those countries that could develop weapons did, leading to extremes such as the Tsar Bomba, a 50-megaton monster that was more of a dick-waving demonstration than a real weapon. The only thing that ended the unstable MAD doctrine was the internal collapse of the Soviet Union. So, while countries have agreed to allow limited inspection of their nuclear facilities and stockpiles, it's nothing like the level of complete sharing that you envision in your description.

My position is actually not that optimistic. I don't believe that such transnational agencies are very likely to work or a safe bet to ensure a good future. It is more that it seems to be in our best interest to really consider all of the options that we can put on the table, to try to learn from what has more or less worked in the past, and also to look for creative new approaches and solutions, because the alternative is dystopia or catastrophe.

A key difference between AI and nuclear weapons is that the AI labs are not as sovereign as nation states. If the US, UK, and EU were to impose strong regulation on their companies and "force them to cooperate" similar to what I outlined, this would seem (at least theoretically) possible and already a big win to me. For example, more resources could be allocated to alignment work compared to capabilities work. China seems much more concerned about regulation and control of companies anyway so I see a chance that they would follow suit in approaching AI carefully.

> However, it seems likely that the major commercial players will fight tooth and nail to avoid that situation, and you'll have to figure out how to apply similar restrictions worldwide.

To be honest, it's overdue that we find the guts to face up to them and put them in their place. Of course that's easier said than done, but the first step is to not be intimidated before we have even tried. Similarly, the call for worldwide regulations often seems to me to be a case of letting the perfect be the enemy of the good. Of course, worldwide regulations would be desirable, but if we only get the US, UK, and EU, or even the US or EU alone, to make some moves here, we would be in a far better position. It's a bogeyman that companies will simply turn around and set up shop in the Bahamas to pursue AGI development, because they would not be able to a) secure the necessary compute to run development and b) sell their products in the largest markets. We do have some leverage here.

> So, I think this is an excellent discussion to have, but I'm not convinced that the regulated source model you describe is workable.

Thanks for acknowledging the issue that I am pointing to here. I see the regulated source model mostly as a general outline of a class of potential solutions, some of which could be workable and others not. Getting to specifics that are workable is certainly the hard part. For me, the important step was to start discussing them more openly to build more momentum for the people who are interested in taking such ideas forward. If more of us started to openly acknowledge and advocate that there should be room for discussing stronger regulation, our position would already be somewhat improved.