AI Safety Newsletter #1 [CAIS Linkpost]

post by Orpheus16 (akash-wasil), Dan H (dan-hendrycks), ozhang (oliver-zhang) · 2023-04-10T20:18:57.485Z · LW · GW

This is a link post for https://newsletter.safe.ai/p/ai-safety-newsletter-1

Contents

- Growing concerns about rapid AI progress
- Plugging ChatGPT into email, spreadsheets, the internet, and more
- Which jobs could be affected by language models?

The Center for AI Safety just launched its first AI Safety Newsletter. The newsletter is designed to inform readers about developments in AI and AI safety. No technical background required.

Subscribe here to receive future versions. First edition below:

---

Growing concerns about rapid AI progress

Recent advancements in AI have thrust it into the center of attention. What do people think about the risks of AI?

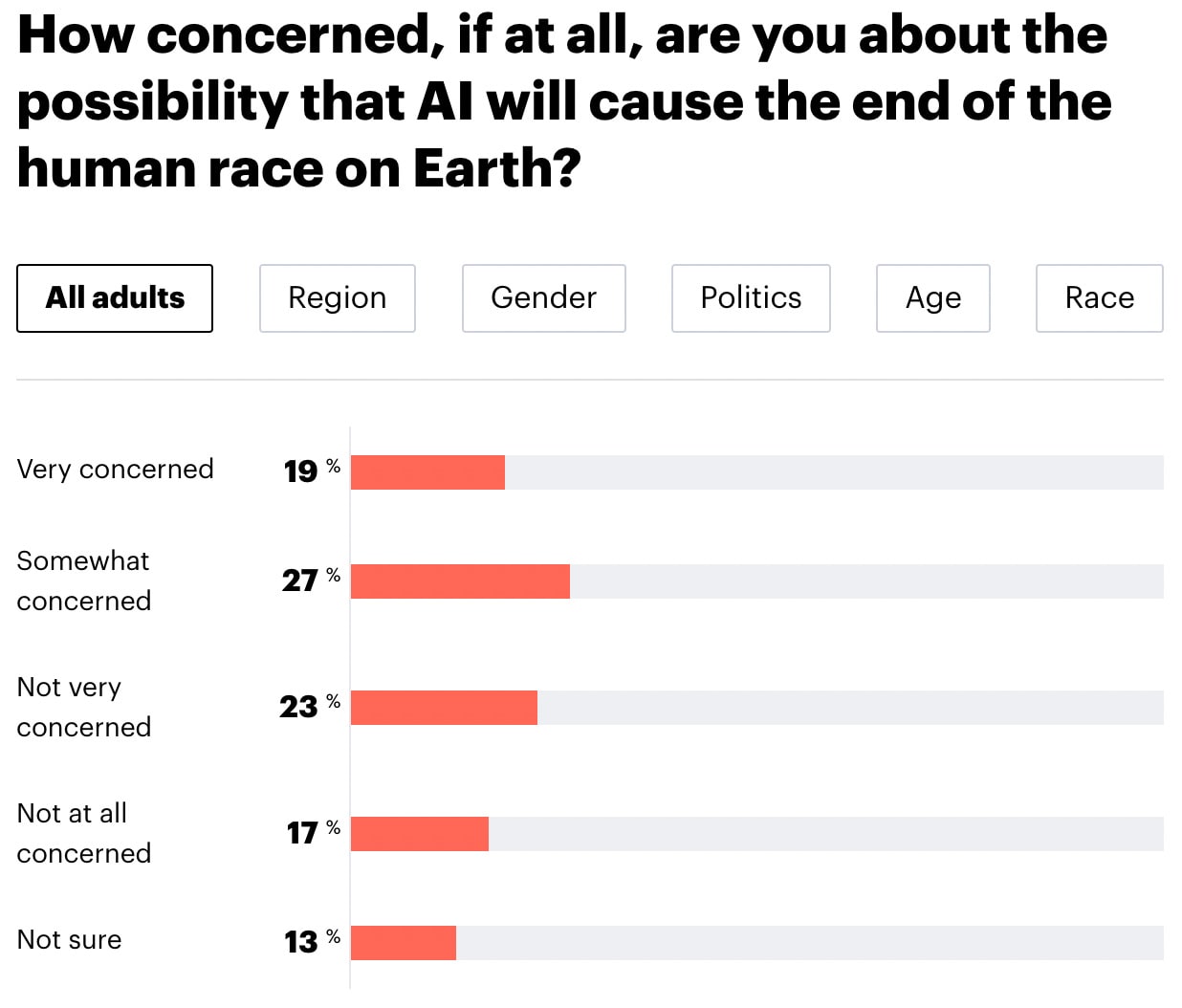

The American public is worried. 46% of Americans are concerned that AI will cause “the end of the human race on Earth,” according to a recent poll by YouGov. Young people are more likely to express such concerns, while there are no significant differences in responses between people of different genders or political parties. A separate poll by Monmouth University found majority support for AI regulation: 55% favored creating a federal agency to govern AI, much as the FDA approves drugs and medical devices.

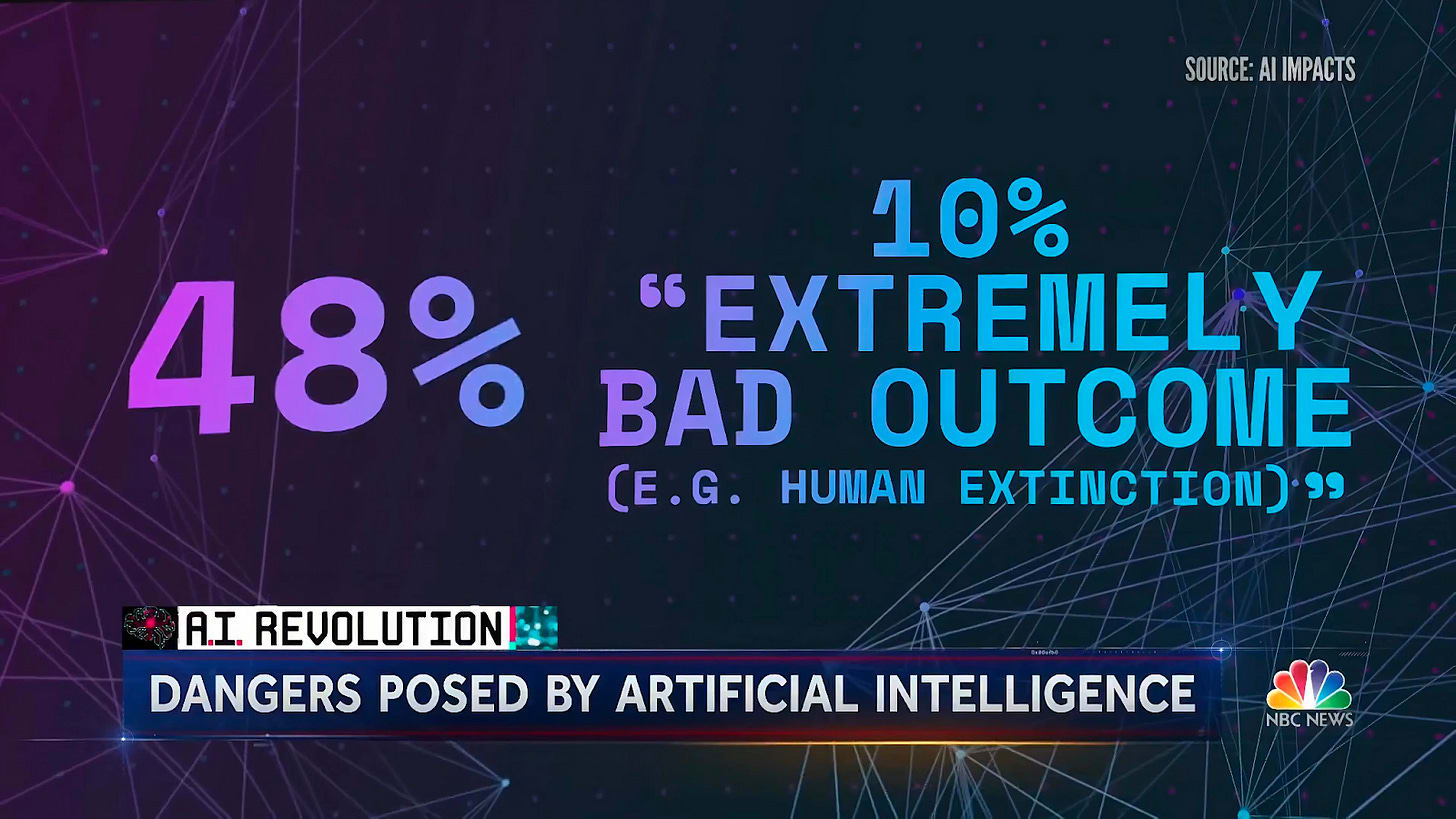

AI researchers are worried. A 2022 survey asked published AI researchers to estimate the probability of artificial intelligence causing “human extinction or similarly permanent and severe disempowerment of the human species.” 48% of respondents said the chances are 10% or higher.

We think this is aptly summarized by this quote from an NBC interview: “Imagine you’re about to get on an airplane and 50% of the engineers that built the airplane say there’s a 10% chance that their plane might crash and kill everyone.”

Geoffrey Hinton, one of the pioneers of deep learning, was recently asked about the chances of AI “wiping out humanity.” He responded: “I think it’s not inconceivable. That’s all I’ll say.”

Leaders of AI labs are worried. While it might be nice to think the people building AI are confident that they’ve got it under control, that is the opposite of what they’re saying.

Sam Altman (OpenAI CEO): “The bad case, and I think this is important to say, is lights out for all of us.” (source)

Demis Hassabis (DeepMind CEO): “When it comes to very powerful technologies—and obviously AI is going to be one of the most powerful ever—we need to be careful. Not everybody is thinking about those things. It’s like experimentalists, many of whom don’t realize they’re holding dangerous material.” (source)

Anthropic: “So far, no one knows how to train very powerful AI systems to be robustly helpful, honest, and harmless… Furthermore, rapid AI progress will be disruptive to society and may trigger competitive races that could lead corporations or nations to deploy untrustworthy AI systems. The results of this could be catastrophic.” (source)

Takeaway: The American public, ML experts, and leaders at frontier AI labs are worried about rapid AI progress. Many are calling for regulation.

Plugging ChatGPT into email, spreadsheets, the internet, and more

OpenAI is equipping ChatGPT with “plugins” that will allow it to browse the web, execute code, and interact with third-party software applications like Gmail, Hubspot, and Salesforce. By connecting language models to the internet, plugins present a new set of risks.

Increasing vulnerabilities. Plugins for language models increase risk in both the short term and the long term. Originally, LLMs were confined to text-based interfaces, where a human had to intervene before any action was executed. An OpenAI cofounder previously said that POST requests (which submit commands to the internet, rather than just reading from it) would be treated with much more caution. Now, LLMs can take increasingly risky actions without human oversight.

GPT-4 is able to provide information on bioweapon production, bomb creation, and the purchasing of ransomware on the dark web. Additionally, LLMs are known to be vulnerable to manipulation through jailbreaking prompts. Models with dangerous knowledge and well-known jailbreaks now have the potential to independently carry out actions.

Moreover, the infrastructure built by OpenAI’s plugins can be used for other models, including ones that are less safe. GPT-4 is trained to refuse certain bad actions. However, what happens when GPT-4chan gains access to the same plugin infrastructure?

Raising the stakes on AI failures. Plugins set a risky precedent for future systems. As increasingly capable and autonomous systems are developed, there is a growing concern that they may become harder and harder to control. One prominent proposal to maintain control over advanced systems is to enclose them within a "box," restricting their output to written text. The introduction of plugins directly contradicts these safety and security measures, giving future autonomous and strategic AI systems easy access to the real world.

Competition overrules safety concerns. Around a week after OpenAI announced ChatGPT plugins, the AI company Anthropic made a similar move, giving its language model access to Zapier, which allows the model to autonomously take actions on over 5,000 apps. It seems likely that OpenAI’s decision put pressure on Anthropic to move forward with its own version of plugins.

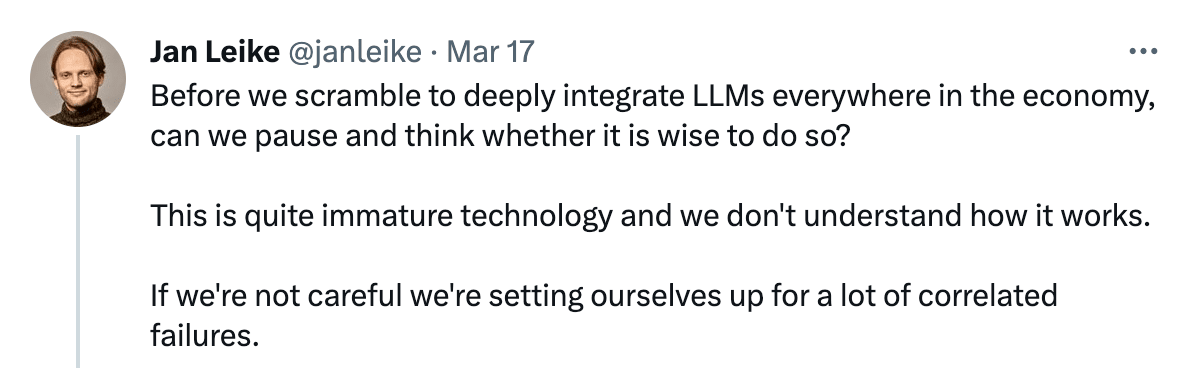

Executives at both OpenAI and Anthropic publicly admit that AI carries tremendous risks, but that doesn’t always translate into safer actions. Here’s a tweet from Jan Leike, the head of OpenAI’s alignment team, just a week before the company’s plugins announcement:

Which jobs could be affected by language models?

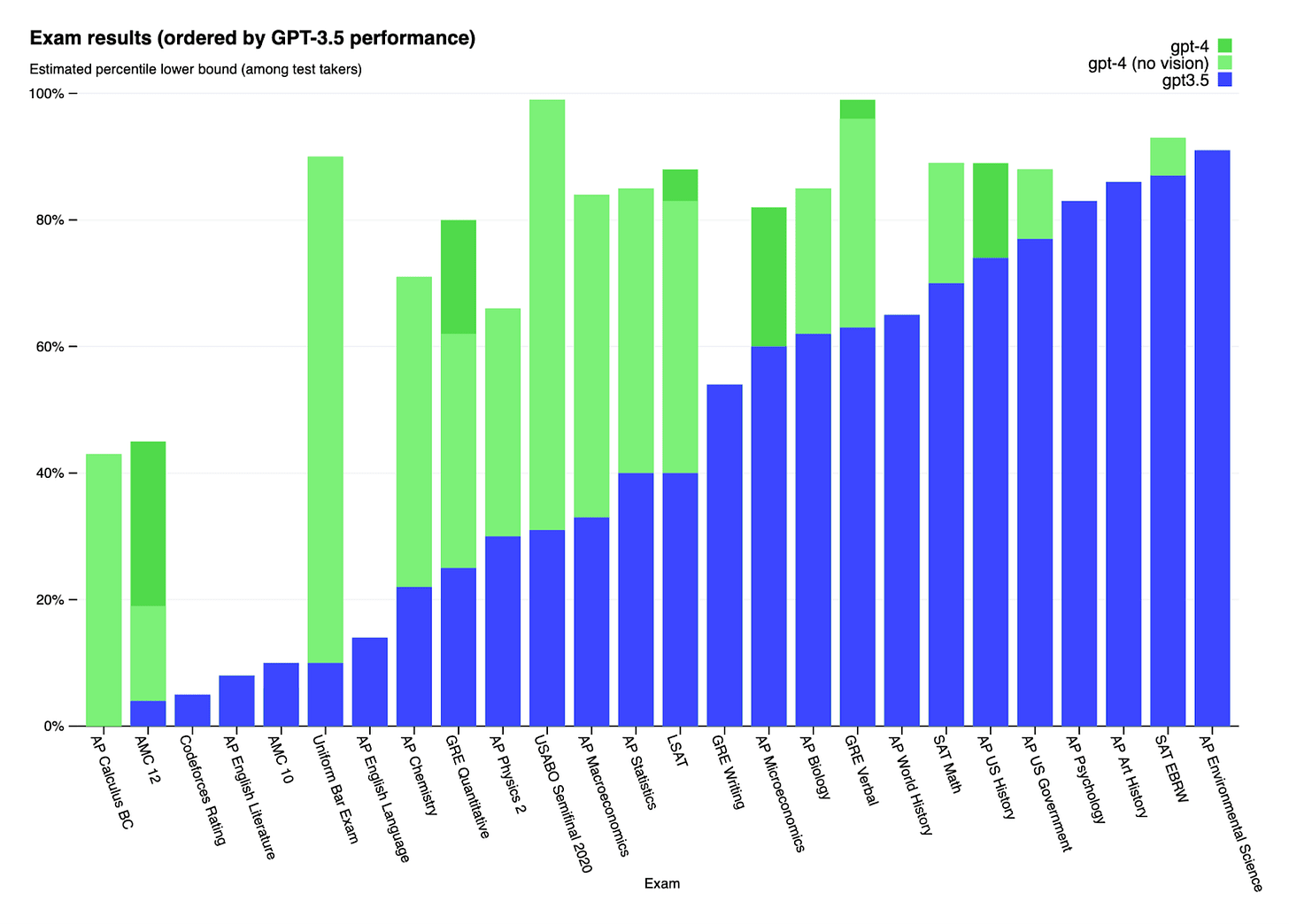

“Most of them,” according to a new paper titled “GPTs are GPTs: An Early Look at the Labor Market Impact Potential of Large Language Models.” The authors estimate that 80% of American workers could use large language models (LLMs) like ChatGPT for 10% or more of their work tasks. If they’re right, language models could join electricity and the internal combustion engine as a true general-purpose technology.

Educated, high-income workers will see the biggest impacts. Unlike earlier research such as Autor et al. (2003) and Acemoglu and Autor (2010), which found that older technology primarily automated low- and middle-income jobs, this paper forecasts that the largest impacts of language models will fall on educated, high-income workers. Writing and programming skills are estimated to have the most exposure to ChatGPT and its descendants, with manual labor, critical thinking, and science skills seeing less impact from LLMs. Only 3% of occupations had zero exposure to language models, including mechanics, cafeteria attendants, and athletes.

Action-taking language models drive economic impact. Equipping chatbots with tools like ChatGPT plugins is estimated to raise the share of potentially exposed jobs from 14% to 46%. The difficulty of building and deploying these new capabilities drives what Brynjolfsson et al. (2017) call the “modern productivity paradox”: AI is shockingly intelligent already, but large economic impact will require time for complementary innovations and new business practices.

Your language model, if you choose to accept it. ChatGPT has already been banned by J.P. Morgan, New York City’s public schools, and the entire nation of Italy. The “GPTs are GPTs” paper focuses on technological potential, but explicitly ignores the question of whether we will or should use language models in every possible case.

Using AI to help conduct research. The authors of “GPTs are GPTs” used GPT-4 to quickly label the automation potential for each task, finding that humans agreed with GPT-4’s responses the majority of the time. As AI progressively automates its own development, the involvement of humans in the process gradually diminishes.

Want more? Check out the ML Safety newsletter for technical safety research and follow CAIS on Twitter.
