Studies of Human Error Rate

post by tin482 · 2025-02-13T13:43:30.717Z · LW · GW · 3 comments

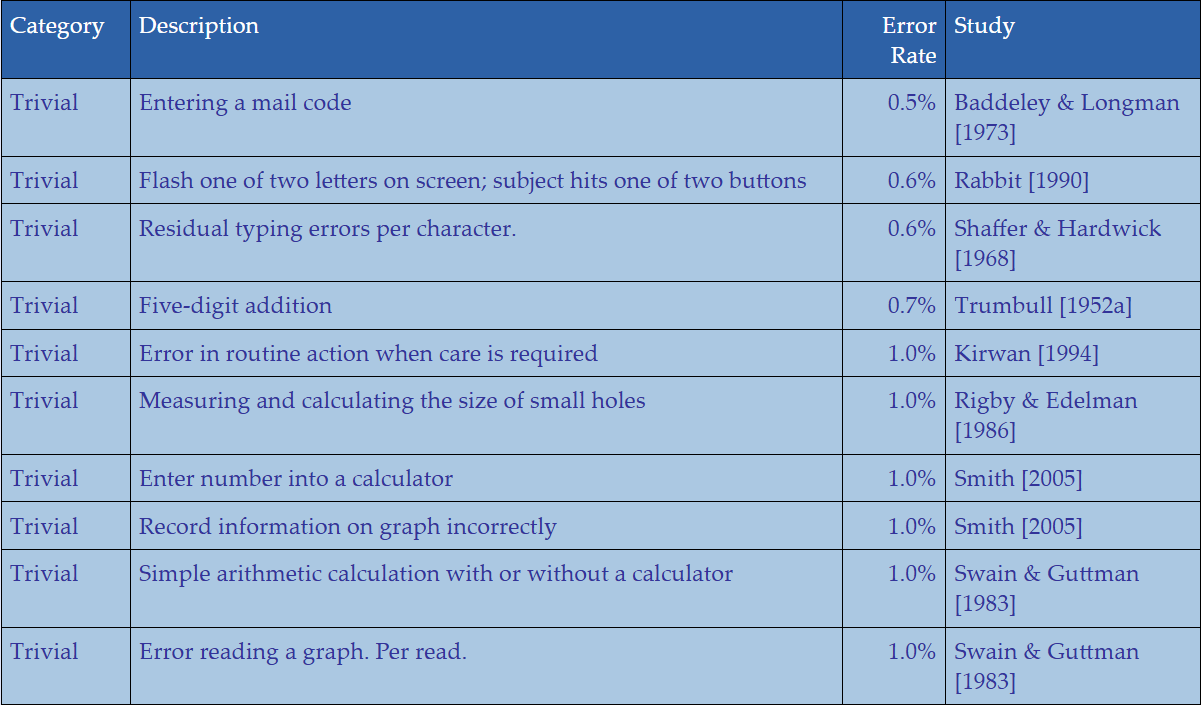

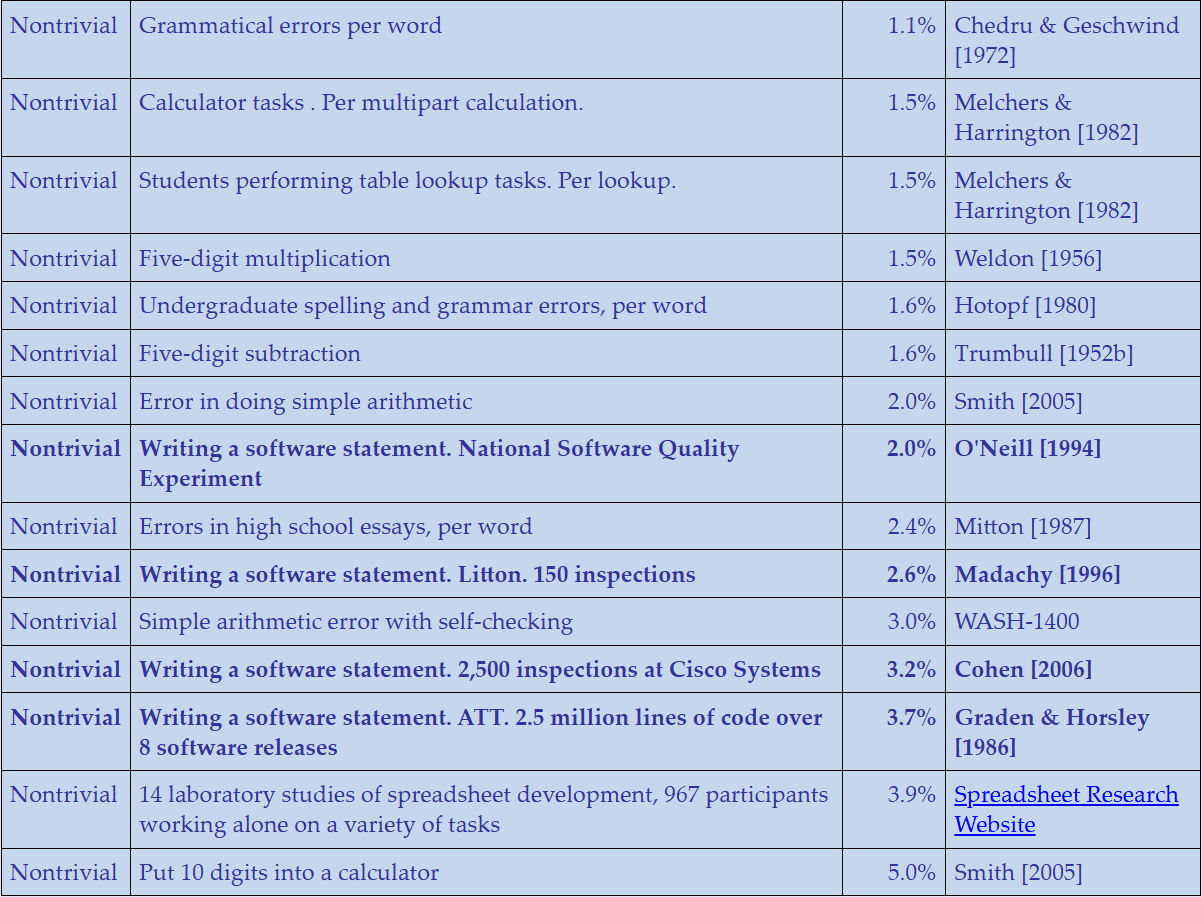

This is a link post for https://panko.com/HumanErr/SimpleNontrivial.html, a site which compiles dozens of studies estimating human error rates for simple but nontrivial cognitive actions. A great resource! Note that the error rate for 5-digit multiplication is estimated at ~1.5%.

When LLMs were incapable of even basic arithmetic, that was a clear deficit relative to humans. This formed the basis of several arguments about a difference in kind, often cruxes for whether or not they could be scaled to AGI or constituted "real intelligence". Now that o3-mini can exactly multiply 9-digit numbers, the debate has shifted.

Instead, skeptics often gesture to hallucinations, errors. An ideal symbolic system never makes such errors; therefore, the argument goes, LLMs cannot truly "understand" even simple concepts like addition. See e.g. Evaluating the World Model Implicit in a Generative Model for this argument in the literature. However, such arguments reliably rule out human "understanding" as well! Studies within Human Reliability Analysis find startlingly high error rates even for basic tasks, and even with double checking. Generally, the human reference class is too often absent (or assumed ideal) in AI discussions, and many LLM oddities have close parallels in psychology, if you're willing to look!
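To make the "never makes such errors" standard concrete, here is a minimal sketch of how a small per-problem error rate compounds over many problems. It takes the ~1.5% figure quoted above as a per-problem rate and assumes errors are independent across problems; both are simplifying assumptions of this sketch rather than claims from the linked studies, and the function name is made up for illustration.

```python
def prob_at_least_one_error(per_task_error_rate: float, n_tasks: int) -> float:
    """Chance of one or more errors across n_tasks independent attempts."""
    # P(no errors) = (1 - p)^n, so P(at least one error) is its complement.
    return 1.0 - (1.0 - per_task_error_rate) ** n_tasks


# Assumption: treat the ~1.5% estimate for 5-digit multiplication as a
# fixed, independent per-problem error rate.
HUMAN_ERROR_RATE = 0.015

for n in (1, 10, 50, 100):
    p = prob_at_least_one_error(HUMAN_ERROR_RATE, n)
    print(f"{n:>3} problems: {p:.1%} chance of at least one error")
```

Under these assumptions, an error-free run becomes the exception rather than the rule somewhere around a hundred problems, which is the sense in which an "ideal symbolic system" bar would rule out humans too.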

3 comments


comment by jimmy · 2025-02-13T17:47:17.216Z · LW(p) · GW(p)

> Instead, skeptics often gesture to hallucinations, errors. [...] However, such arguments reliably rule out human "understanding" as well!

"Can do some impressive things, but struggles with basic arithmetic and likes to make stuff up" is such a fitting description of humans that I was quite surprised when it turned out to be true of LLMs too.

Whenever I see someone claim that this means LLMs can't "understand" something, I find it quite amusing that they're almost demonstrating their own point; just not in the way they think they are.