Levels of analysis for thinking about agency

post by Cole Wyeth (Amyr) · 2025-02-26T04:24:24.583Z


Claims about the mathematical principles of cognition can be interpreted at many levels of analysis. For one thing, there are lots of different possible cognitive processes - what holds of human cognition may not hold of mind-space in general. But the situation is actually a lot worse than this, because even one mind might be doing a lot of different things at a lot of different levels, and the level distinction is not always conceptually clear.

Observe the contortions that the authors go through below to establish what they are and are not saying about intelligent agents in a paper on self-modification (you don't need to understand what the paper is about):

The paper is meant to be interpreted within an agenda of “Begin tackling the conceptual challenge of describing a stably self-reproducing decision criterion by inventing a simple formalism and confronting a crisp difficulty”; not as “We think this Gödelian difficulty will block AI”, nor “This formalism would be good for an actual AI”, nor “A bounded probabilistic self-modifying agent would be like this, only scaled up and with some probabilistic and bounded parts tacked on”. Similarly we use first-order logic because reflection within first-order logic has a great deal of standard machinery which we can then invoke; for more realistic agents, first-order logic is not a good representational fit to most real-world environments outside a human-constructed computer chip with thermodynamically expensive crisp variable states.

-Yudkowsky and Herreshoff, "Tiling Agents for Self-modifying AI, and the Löbian Obstacle"

I think that this discussion is quite helpful, and we should do more of it, but ideally in more concise language. We need some shorthands for the types of claims we can make about cognition.

The best-known system is David Marr's levels of analysis (see page 25). He divides the understanding of an information-processing device into three levels:

- The computational level addresses the problem that the device is trying to solve: "What is the goal of the computation, why is it appropriate, and what is the logic of the strategy by which it can be carried out?"

- The algorithmic level addresses the algorithm that solves the computational problem: "How can this computational theory be implemented? In particular, what is the representation for the input and output, and what is the algorithm for the transformation?"

- The hardware implementation level asks how the algorithm is physically implemented: "How can the representation and algorithm be realized physically?"

As I understand it, this system has fallen out of favor a bit with cognitive scientists (also here's an amusing offshoot that questions whether neuroscientists have made much progress above level 3). But I think it's the sort of thing we want. Still, the quote I started with shows that there is a lot more going on than this division captures.

Now, I'm going to introduce my own three levels: optimal standards, core engines, and convergent algorithms. However, there is not a one-to-one correspondence here; my levels attempt to zoom in on Marr's first and second levels, so the mapping is:

| Marr's level | My level(s) |
|---|---|
| computational | optimal standards |
| algorithmic | core engines / convergent algorithms |

I'm not so concerned with Marr's (hardware implementation) level 3, though I think it's worth keeping in mind that both human brains and artificial neural networks are ultimately huge circuits. It's possible that some tricks implemented in the circuits to perform specific tasks are sophisticated enough to blur the hardware/software distinction, rather than the brain (for instance) simply implementing a hardware-independent program that runs on neurons.

I think that Marr's first (computational) level survives into the context of AGI more or less unscathed, in the sense that we often want to discuss the optimal behavior that a mind should aspire to. For instance, I think it's often appropriate to treat Bayesian updating as a fully normative standard of prediction under uncertainty. That is, in some cases we can understand how all of an agent's relevant information can be summarized correctly (dare I say objectively?) as priors, and show from first principles that the Bayesian approach describes optimal inference. As a natural example, I have filtering problems in mind here: tracking a hidden state from a stream of noisy observations. In chess, we can also write down the precise optimal policy that an agent should follow (as an algorithm or specification, of course; we can't write down the impossibly huge lookup table). Marcus Hutter's AIXI agent is an attempt to formalize the problem faced by an artificial general intelligence in this way, and I happen to believe that it is about right, despite idealizing the situation in a way that fails to capture some problems unique to embedded agents. Let's call this the optimal standards level of analysis. It is essentially equivalent to Marr's computational level, but it explicitly drops any computability requirement and is focused on agents.
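To make the filtering example concrete, here is a minimal sketch of a discrete Bayes filter (the forward algorithm for a hidden Markov model). The two-state "umbrella" model and all of its probabilities are hypothetical illustrations, not anything from this post. When the model is correct, the belief this procedure computes is exactly the posterior over the hidden state given the observations so far, which is the sense in which Bayesian filtering can serve as an optimal standard.

```python
def normalize(dist):
    """Rescale a dict of nonnegative weights so they sum to 1."""
    total = sum(dist.values())
    return {s: p / total for s, p in dist.items()}

def predict(belief, transition):
    """Prediction step: push the belief through the transition model."""
    states = list(belief)
    return {s2: sum(belief[s1] * transition[s1][s2] for s1 in states) for s2 in states}

def update(belief, likelihood, observation):
    """Update step (Bayes' rule): weight by P(observation | state), then renormalize."""
    return normalize({s: belief[s] * likelihood[s][observation] for s in belief})

def bayes_filter(prior, transition, likelihood, observations):
    """Return the filtered belief after each observation."""
    belief = dict(prior)
    history = []
    for y in observations:
        belief = update(predict(belief, transition), likelihood, y)
        history.append(belief)
    return history

# Hypothetical model: is it raining, given whether someone carries an umbrella?
prior = {"rain": 0.5, "dry": 0.5}
transition = {"rain": {"rain": 0.7, "dry": 0.3},
              "dry": {"rain": 0.3, "dry": 0.7}}
likelihood = {"rain": {"umbrella": 0.9, "no_umbrella": 0.1},
              "dry": {"umbrella": 0.2, "no_umbrella": 0.8}}

for belief in bayes_filter(prior, transition, likelihood, ["umbrella", "umbrella", "no_umbrella"]):
    print({s: round(p, 3) for s, p in belief.items()})
```

The state space here is tiny on purpose; the same predict/update recursion is what the optimal standard prescribes for any model, even when an actual agent can only approximate it.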

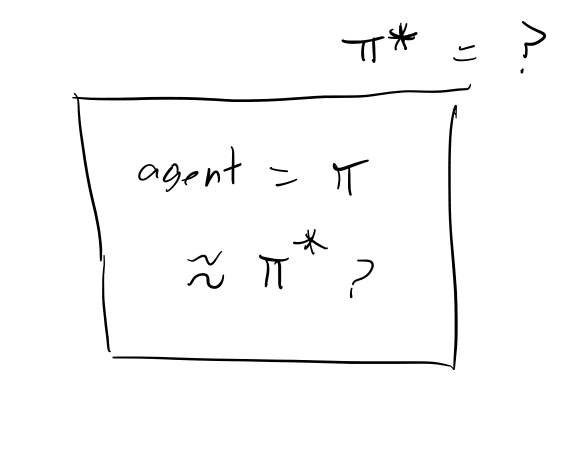

In the above diagram, represents the optimal policy under some unspecified standard.
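For reference, AIXI's action rule is usually stated (following Hutter) as an expectimax over future percepts, weighted by a Solomonoff-style prior over environment programs. Here $a_i$, $o_i$, $r_i$ are actions, observations, and rewards, $m$ is the horizon, $U$ is a universal monotone Turing machine, and $\ell(q)$ is the length of program $q$; notation differs somewhat between presentations.

$$
a_k := \arg\max_{a_k} \sum_{o_k r_k} \cdots \max_{a_m} \sum_{o_m r_m} \left[ r_k + \cdots + r_m \right] \sum_{q \,:\, U(q,\, a_1 \ldots a_m) \,=\, o_1 r_1 \ldots o_m r_m} 2^{-\ell(q)}
$$

Because the innermost sum ranges over all programs, this policy is incomputable, which illustrates why the optimal standards level is defined without a computability requirement.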

However, there is a decent argument to be made that intelligence is inherently about using limited computational resources[1] to solve a problem - and if you assume away the compute limits, you're no longer talking about (many of) the interesting aspects of intelligence. For instance, I think @abramdemski holds this view. At this point, you need to use something like Marr's algorithmic level of analysis. If you're a computational cognitive scientist, this at first seems conceptually fine - you can experiment with human subjects and try to determine what kind of algorithms their brains are using to process information. If you're a narrow AI engineer, you are also free to propose and analyze various algorithms for specific types of intellectual behavior. But if you want to study AGI, the situation is a little more subtle.

To mathematically understand AGI in a glass-box way, you need to write down algorithms (or perhaps algorithm schema) for solving general problems. This is really hard as stated, since the types of algorithms we have been able to find that exhibit some general intelligence are all pretty much inscrutable and would not really be considered glass-box. But from a philosophical or scientific perspective, the problem is even harder: we want to understand the kinds of common principles we expect to find between all implementations of AGI! On the face of it, it's not even clear what kind of form an answer would take. This is part of @Alex_Altair's research agenda at Dovetail, and unfortunately seems likely to be a subproblem of AI alignment.

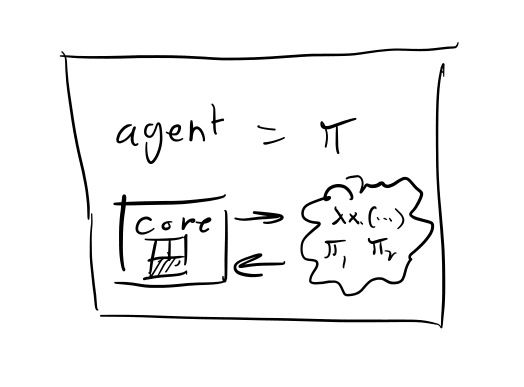

I think that a little more thought exposes a bit of a schism in the algorithmic level of analysis, and it has to do with the flexibility and perhaps recursiveness that seems to be inherent in intelligent minds. There are two senses in which an AGI may be said to use or implement a given algorithm internally: the algorithm may be coded into the AGI, or it may have been discovered and adopted by the AGI's deliberative process.

As an example, let's consider Bayesian inference. Pierre-Simon Laplace said that probability theory is nothing more than common sense reduced to calculation. But where does this "common sense" intuitive probability theory come from? Personally, I suspect that the brain is natively Bayesian, in the sense that it is internally manipulating probabilities in the process of forming and updating beliefs. I think this is one of the core engines of cognition (and I will put claims like this at the core engines level of analysis). This is partly backed up by extensive experimental evidence from computational cognitive science showing that human inferences tend to match Monte Carlo approximations of Bayesian inference with very few samples[2]. Though I am not a cognitive scientist, I believe that some intuitive probability judgements appear even in infants, who have certainly never been taught Bayes' rule (somehow, you can even get through high school without learning it).
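To see why "very few samples" matters, here is a toy sketch in the spirit of the sampling account (a simplification for illustration, not code from the cited paper). An agent facing a binary choice draws k samples from its posterior and picks the majority answer. With k = 1 its choice frequencies match its posterior probabilities (probability matching); as k grows, it approaches the deterministic Bayes-optimal choice of the more probable option. The posterior value 0.7 and the sample counts are arbitrary.

```python
import random
from math import comb

def choose_from_samples(p_a, k, rng):
    """Draw k samples from a posterior with P(A) = p_a and return the majority answer.
    Ties (possible when k is even) are broken uniformly at random."""
    votes_a = sum(rng.random() < p_a for _ in range(k))
    if 2 * votes_a > k:
        return "A"
    if 2 * votes_a < k:
        return "B"
    return rng.choice(["A", "B"])

def prob_choose_a(p_a, k):
    """Exact probability that the k-sample majority rule picks A (binomial sum)."""
    total = 0.0
    for j in range(k + 1):
        pj = comb(k, j) * p_a**j * (1 - p_a) ** (k - j)
        if 2 * j > k:
            total += pj
        elif 2 * j == k:
            total += 0.5 * pj
    return total

rng = random.Random(0)
p_a = 0.7
trials = 20_000
for k in (1, 3, 10, 100):
    simulated = sum(choose_from_samples(p_a, k, rng) == "A" for _ in range(trials)) / trials
    print(f"k={k:3d}  exact={prob_choose_a(p_a, k):.3f}  simulated={simulated:.3f}")
```

The exact and simulated columns should agree up to sampling noise; the interesting pattern is how quickly behavior moves from matching toward maximizing as k increases.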

There is an alternative explanation, though. Perhaps because Bayes' rule is often the gold standard for inference (as I claimed when discussing the computational level of analysis), the brain consistently learns to use it across many situations. If so, the core engine of cognition in the brain may not be Bayesian; perhaps instead it is some mixture of experts over various cognitive algorithms which is better understood as frequentist! Or perhaps there is no meaningful core engine at all (or a few vying for the position).

One thing that seems clear is that the human brain is capable of inventing and running algorithms. In fact, this is a central assumption of the rationalist project! We actually consciously discover Bayes' rule and try to apply it more precisely than our brains do by default (in some cases, such as medical diagnoses, this works better). Naturally we also discover plenty of algorithms that seem unlikely to be central to intelligence, say merge sort. But this sort of thing seems more contingent, and we don't want to focus on it (we can leave it to algorithms and complexity researchers).
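The medical case is the standard illustration of why consciously applying the rule can help. Here is a minimal sketch of Bayes' rule applied explicitly to a diagnostic test; the prevalence, sensitivity, and false-positive rate are hypothetical numbers, not clinical data. With a rare condition, even a fairly accurate test yields a low posterior, which intuitive judgment tends to overestimate (base-rate neglect).

```python
def posterior_positive(prevalence, sensitivity, false_positive_rate):
    """Bayes' rule for P(condition | positive test).

    prevalence          = P(condition), the base rate
    sensitivity         = P(positive | condition)
    false_positive_rate = P(positive | no condition)
    """
    p_positive = sensitivity * prevalence + false_positive_rate * (1 - prevalence)
    return sensitivity * prevalence / p_positive

# Hypothetical numbers: 1% base rate, 90% sensitivity, 9% false-positive rate.
p = posterior_positive(prevalence=0.01, sensitivity=0.9, false_positive_rate=0.09)
print(f"P(condition | positive) = {p:.3f}")
```

Most of the positive results here come from the large healthy population, which is exactly the term that a quick intuitive estimate tends to drop.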

I think that often the right claim to make is that sufficiently powerful and intelligent agents will convergently discover and use a certain cognitive framework, representation, and/or algorithm. Though optimal standards are a guide that helps us suppose what kind of cognitive behavior may serve a useful purpose, we are ultimately interested in understanding the internal structure of agents. From the outside, the algorithms that a mind ends up adopting and using may be all that is visible to us when predicting how it will act. I will call this the convergent algorithms level of analysis. It's concerned with what I have previously called the "cognitive algorithmic content" of agents' minds, but particularly the kind of content that is reliably invented by a diverse class of agents and used across many situations for decision making (as opposed to, say, algorithms that Solomonoff induction approximations occasionally infer and use for knowledge representation).

I think that arguments for exotic acausal decision theories are usually best understood on the convergent algorithms level. These decision theories (such as functional decision theory, or FDT) are often motivated by highly unusual situations such as exact clones of an agent playing the prisoner's dilemma. I don't think evolution needed to invent FDT - and I am not sure it is efficiently approximable - so I don't think it forms the core engine of human intelligence. In part, this is because I think AIXI is usually ~the gold standard for intelligent behavior and does not need FDT. However, whatever the core engine is, I think it should be flexible enough to invent things like FDT when the circumstances demand it, and to be persuaded by the sort of philosophical arguments that (correctly) persuade us to change decision theories.
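A toy sketch may help show why exact clones are the motivating case. It contrasts two deliberately simplified ways of evaluating the twin prisoner's dilemma: one treats the clone's move as a fixed background fact (roughly how causal decision theory reasons), and the other treats the clone as computing the same function as the agent, so only the symmetric outcomes are reachable (the core intuition behind FDT in this particular case). These are caricatures for illustration, not implementations of either decision theory, and the payoffs are the usual textbook choice satisfying temptation > reward > punishment > sucker.

```python
# Payoff to "me" in a one-shot prisoner's dilemma, indexed by (my_move, their_move).
# Illustrative values with T=5 > R=3 > P=1 > S=0.
PAYOFF = {("C", "C"): 3, ("C", "D"): 0, ("D", "C"): 5, ("D", "D"): 1}
MOVES = ("C", "D")

def causal_style_choice(p_twin_cooperates):
    """Caricature of causal reasoning: hold a fixed belief about the twin's move,
    independent of my own choice, and maximize expected payoff. Defection dominates."""
    def expected_payoff(move):
        q = p_twin_cooperates
        return q * PAYOFF[(move, "C")] + (1 - q) * PAYOFF[(move, "D")]
    return max(MOVES, key=expected_payoff)

def functional_style_choice():
    """Caricature of FDT-style reasoning for an exact clone: the twin runs the same
    decision function, so whatever I output it outputs too. Compare only (C, C) vs (D, D)."""
    return max(MOVES, key=lambda move: PAYOFF[(move, move)])

for q in (0.0, 0.5, 1.0):
    print(f"causal-style, P(twin cooperates)={q}: {causal_style_choice(q)}")
print(f"functional-style: {functional_style_choice()}")
```

Outside of setups like this one, where another process is known to compute the same function as the agent, the two evaluations usually agree, which fits the view that such theories need only be invented when circumstances demand them.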

Within the algorithmic level of analysis, we have already found two conceptually distinct sublevels which I do not think are usually distinguished in rationalist discourse: core engines and convergent algorithms.

Now it is important to draw the boundaries carefully here - and indeed to consider to what extent those boundaries exist.

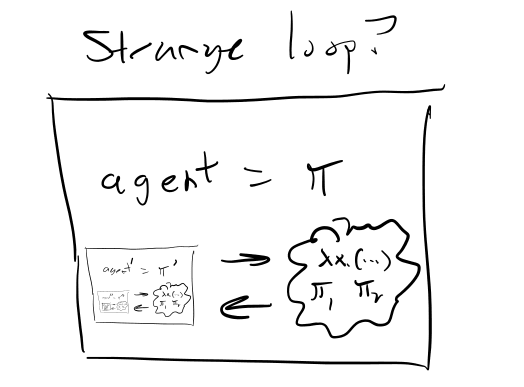

The following discussion is a little bit more technical than the rest of the post and can be skipped on a first reading. First of all, there is at least a framing difference between the algorithmic cognitive content that an agent discovers and uses and the self-modifications that an agent would choose to make. I usually don't think of producing new ideas and strategies as a form of self-modification, even if those strategies are cognitive. Still, it seems natural to guess that the decision theories that tile (in the sense of being fixed under self-modification) are closely connected to the decision theories that an agent would discover and choose to apply. One significant difference is that, if there is a core engine of cognition, it would almost by definition continue to function as new cognitive algorithms are discovered and used. This seems analogous to the default policy in the Vingean reflection paper.

Okay, a little less technically but subtler than it seems: Douglas Hofstadter seems to believe that there is no distinction between the core cognitive engine and the cognitive algorithms it discovers and uses. His (non-technical) idea of consciousness as a strange loop seems to imply that the cognitive algorithms kind of wrap around and form the core engine, discovering and driving themselves. I seem to recall his group actually implementing toy systems that attempt to work in this sort of self-organized way (plucking programs from a "code-rack," attempting to combine them, running them in parallel, etc.). This seems reasonably plausible to me, though I suspect it is not how the brain actually works - I am less enamored with the idea of emergence than I was as a child. It just seems more practical, robust, and evolutionarily plausible to have a fairly stable core engine.
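For intuition about what "plucking programs from a code-rack" might look like, here is a hypothetical toy, loosely inspired by published descriptions of such architectures and not a reconstruction of any actual Hofstadter-group system. Small "codelets" wait on a rack with an urgency; one is drawn at random with probability proportional to urgency, and running it may post further codelets. The sorting task (and the `scout`/`swapper` codelets) are invented for this sketch, chosen only because success is easy to check.

```python
import random

class Coderack:
    """Toy coderack: codelets are (urgency, function) pairs, drawn with
    probability proportional to urgency. Running a codelet may post more."""

    def __init__(self, rng):
        self.rng = rng
        self.codelets = []

    def post(self, urgency, codelet):
        self.codelets.append((urgency, codelet))

    def step(self, workspace):
        weights = [urgency for urgency, _ in self.codelets]
        index = self.rng.choices(range(len(self.codelets)), weights=weights)[0]
        _, codelet = self.codelets.pop(index)
        codelet(self, workspace)

    def run(self, workspace, max_steps):
        steps = 0
        while self.codelets and steps < max_steps:
            self.step(workspace)
            steps += 1
        return steps

def scout(rack, ws):
    """Inspect a random adjacent pair; if it is out of order, post an urgent swapper.
    Keep a scout on the rack for as long as the workspace is unsorted."""
    i = rack.rng.randrange(len(ws) - 1)
    if ws[i] > ws[i + 1]:
        rack.post(5.0, lambda r, w, i=i: swapper(r, w, i))
    if ws != sorted(ws):
        rack.post(1.0, scout)

def swapper(rack, ws, i):
    """Swap the pair only if it is still out of order (earlier codelets may have fixed it)."""
    if ws[i] > ws[i + 1]:
        ws[i], ws[i + 1] = ws[i + 1], ws[i]

workspace = [5, 3, 6, 1, 4, 2]
rack = Coderack(random.Random(1))
rack.post(1.0, scout)
steps = rack.run(workspace, max_steps=100_000)
print(workspace, "after", steps, "codelet runs")
```

No codelet knows the global plan, yet the workspace ends up sorted. Even so, the fixed `run` loop that schedules codelets is arguably a small, stable core engine in the sense used above.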

Finally, in view of the potential complications above, my proposed levels are:

- optimal standards: what does the best performance for an agent look like from the outside?

- core engines: what (if anything) is driving the agent's cognition at a fundamental/root level?

- convergent algorithms: what cognitive algorithms or principles are all sufficiently general agents likely to discover and apply?

[1] Judging from his formulation of the computational level, I believe Marr had in mind solutions that are at least computable.

[2] One of my favorites among many examples: https://cocosci.princeton.edu/tom/papers/OneAndDone.pdf
