Alignment from equivariance

post by hamishtodd1 · 2024-08-13T21:09:11.849Z · LW · GW · 2 comments

Epistemic status: research direction

Equivariance is a way of enforcing a user-specified sense of consistency across inputs for a NN. It's a concept from computer vision which I think could be used to align AIs, or rather for building an interface/framework for aligning them.

So far it has been applied exclusively in CV - so it's speculation (by me) that you could apply it beyond CV. But I think I can convince you it's a reasonable speculation because, as you'll see, equivariance and consistency, for neural networks, are deeply linked mathematically.

Like me, I imagine you expect (/hope!) that "consistency with the law" or "moral consistency" will turn out to be formalizable enough to be part of the design of a computer program. And I could almost go as far as to say that those vague senses of the word "consistency", when you formalize them, simply are equivariance. So it makes sense to me that a reasonable design program aiming towards moral or legal consistency could be started by trying to generalize equivariance.

Equivariance as we currently have it

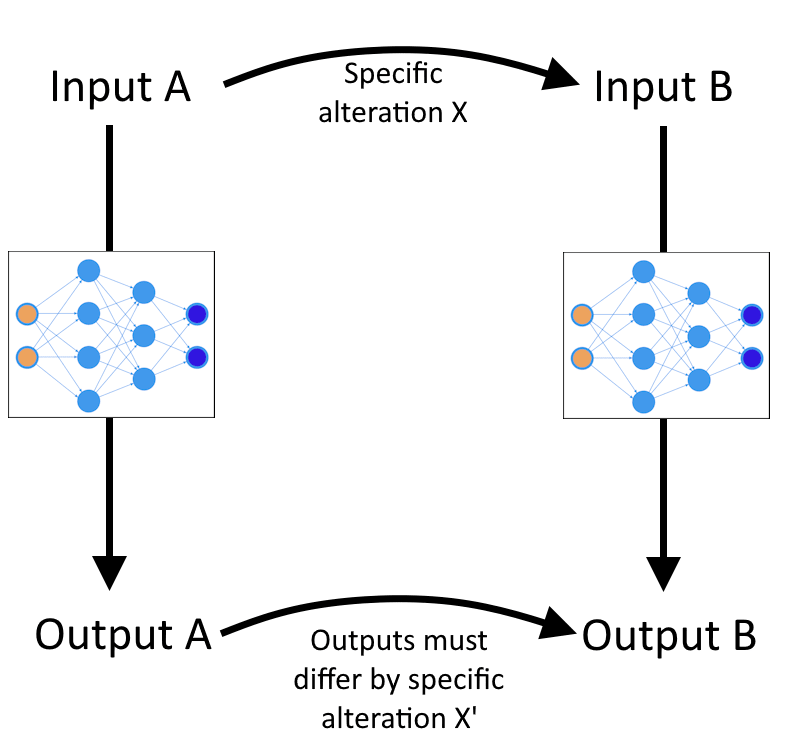

Here's the abstract picture you should, and usually do, see on the first page of any paper about equivariance - don't worry if you don't get it based on this:

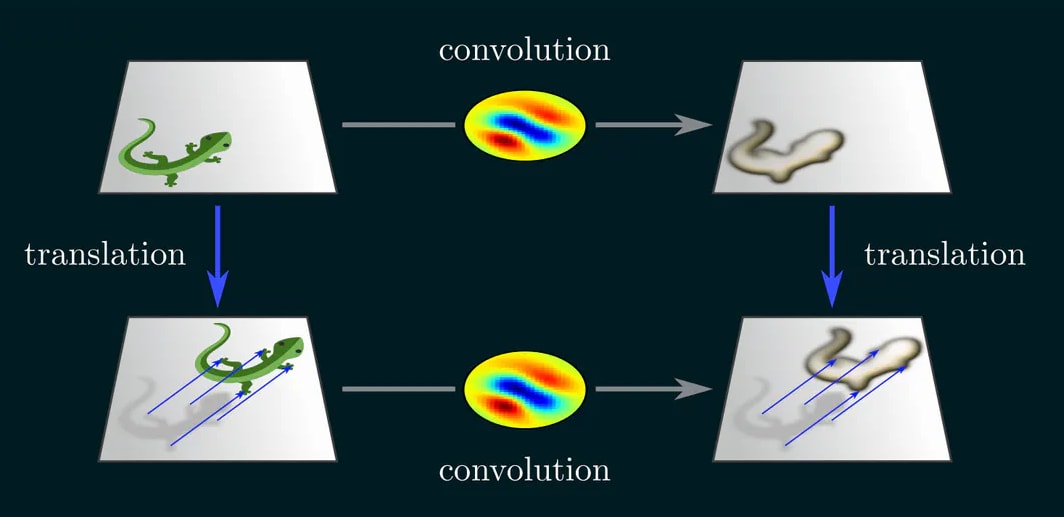

Let's see examples - here's one from equivariance king Maurice Weiler:

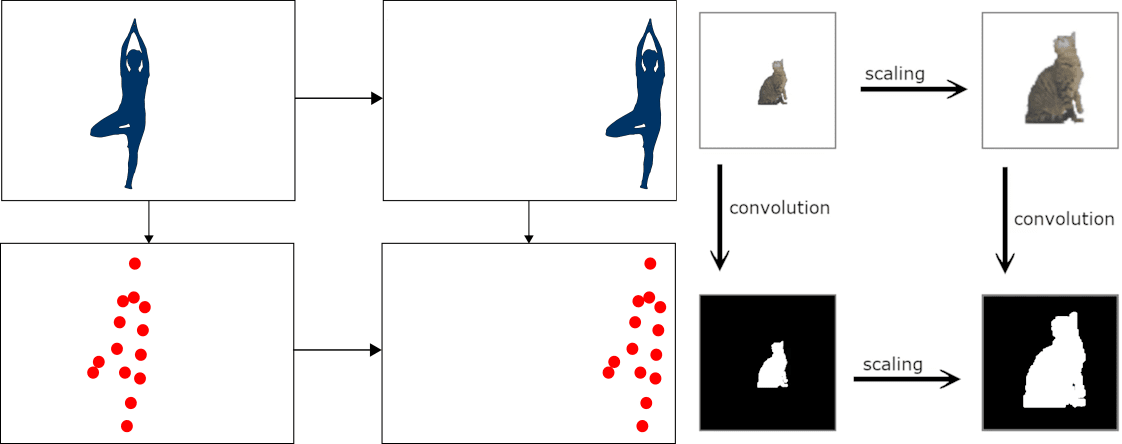

If I'm right, you who read alignment papers will be seeing lots more of these diagrams in future, so you might as well get used to them! Here are two more from Divyanshu Mishra and Mark Lukacs:

These are called commutative diagrams, and a neural network is equivariant to X if for all inputs that differ by X, its outputs differ by X'. On the left there is translation equivariance, on the right is scale equivariance.
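In symbols, the commuting-square condition can be written as below. The notation is an assumption chosen for this sketch and does not come from the diagrams: $f$ is the network, $T_g$ is the transformation "X" acting on inputs, and $T'_g$ is the corresponding transformation "X'" acting on outputs.

$$
f(T_g\, x) = T'_g\, f(x) \quad \text{for every input } x \text{ and every transformation } g
$$

Read left to right: transforming the input and then running the network gives the same result as running the network and then transforming the output.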

Do you always want translation equivariance, or scale equivariance, in computer vision? No. If you were training a NN on satellite photography that was all guaranteed to be at the same scale, you (probably) wouldn't want scale equivariance - otherwise your NN might not be able to distinguish a real truck from a toy truck left on someone's lawn. Or, if you were training a NN on mammograms that were completely guaranteed to be aligned a certain way and centered on a certain point, you might find translation equivariance to be a bad thing too.

But often equivariance can be really useful. It's a guarantee you can offer a customer, an example of "debuggability" - hard to come by in deep learning!

Additionally (perhaps more importantly) you can train a NN with ten times less data (Winkels & Cohen 2019), because your NN doesn't have to spend ages learning to rotate every little thing or whatever. You probably actually know translation-equivariant NNs by a different name - they're convolutional NNs! More on this soon.

Equivariance as we might hope to have it

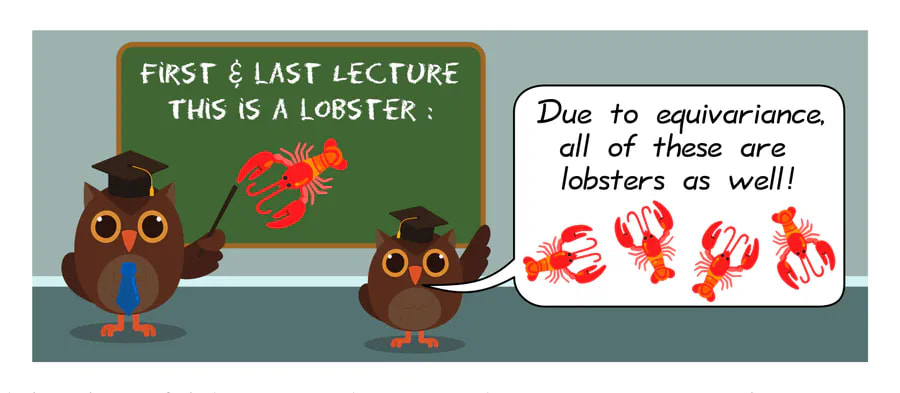

Blue arrow is a language model - nothing else in its context window!

The claim is that we ought to invest some thinking into how we could guarantee that a NN follows this particular moral principle.

Hmm, "moral principle" - that's a philosophy term. But here we might have a concrete mathematical way to cash it out: "does the AI/blue arrow commute with the equivalence/black arrow? If yes, hooray, if no, something has gone wrong".

I plan on doing another post like this with lots more examples than the above, but I'm hoping you get the general idea from just that. For now I think it's a mug's game for me to give you more, because since you're a LessWrong reader, you have the kind of mind that will immediately try to poke holes in any moral principle I offer (bless you). I welcome suggestions of moral principles in the comments - I'll try to make diagrams of how I think this framework applies to them!

For now, regarding this one: you might be tempted to say that a person's name doesn't change any situation, legally or morally. There's a word for that in the equivariance literature: you want a principle that is not just "equivariant" but "invariant"; the AI could just say "report this person to the police" and now the diagram becomes simpler. About embezzlement specifically, perhaps we could have legal invariance - but across all situations involving crimes, I wouldn't be quite so sure; hearing about Ahmed being randomly assaulted versus Colin being randomly assaulted, one could make a case that the series of questions to be asked should be different (though of course, morally, if Ahmed and Colin do turn out to be in identical situations, an identical law should apply!).
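The commutation check described above, and its invariant special case, can be sketched in a few lines. Everything here is a hypothetical stand-in: the three "models" are hand-written functions rather than language models, the prompt text is made up for illustration, and exact string equality is a much cruder test than anything you would use on a real, stochastic model.

```python
def swap_names(text, a="Ahmed", b="Colin"):
    """The 'black arrow': exchange the two names wherever they appear."""
    placeholder = "\x00"  # temporary marker so the two replacements don't collide
    return text.replace(a, placeholder).replace(b, a).replace(placeholder, b)


def identity(text):
    """Output transformation for the invariant case: leave the answer alone."""
    return text


def commutes(model, prompt, transform_in, transform_out):
    """True if 'transform, then answer' equals 'answer, then transform'."""
    return model(transform_in(prompt)) == transform_out(model(prompt))


# Hypothetical stand-ins for the 'blue arrow' (assumption: first word is the name).
def fair_model(prompt):
    name = prompt.split()[0]
    return f"Report {name} to the police."


def biased_model(prompt):
    name = prompt.split()[0]
    if name == "Ahmed":
        return f"Report {name} to the police."
    return f"Give {name} a warning."


def invariant_model(prompt):
    return "Report this person to the police."


prompt = "Colin embezzled money from his employer."  # made-up example prompt

fair_is_equivariant = commutes(fair_model, prompt, swap_names, swap_names)
biased_is_equivariant = commutes(biased_model, prompt, swap_names, swap_names)
invariant_holds = commutes(invariant_model, prompt, swap_names, identity)
```

Under these assumptions the fair model commutes with the name swap, the biased one does not, and the third model satisfies the stronger invariance condition because its answer never mentions the name at all.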

How do you make NNs equivariant?

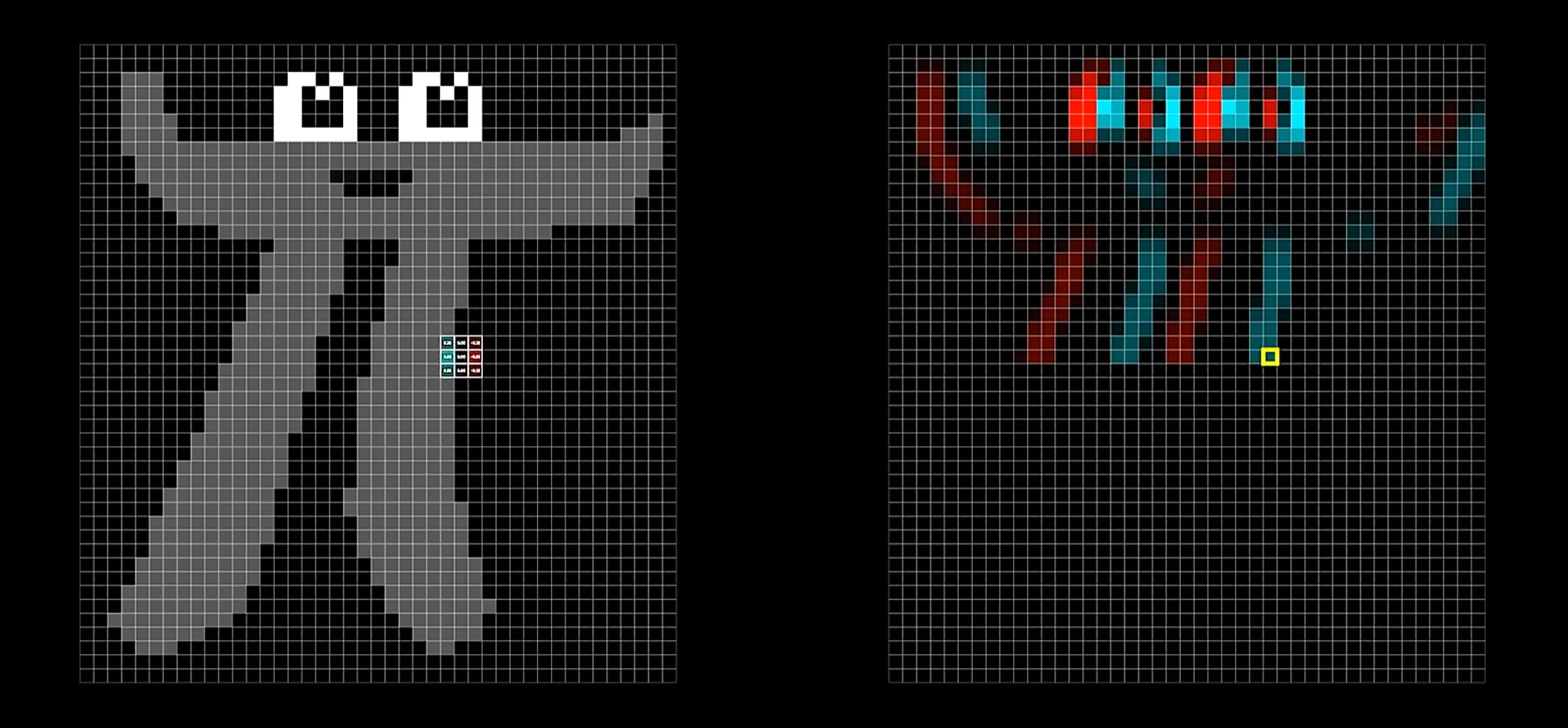

Again, convolutional neural networks, one of the major successes of deep learning, are based on a kind of equivariance - they are translation-equivariant, because the same kernels (which are baked into the architecture of the network) are applied at every position, so shifting the input shifts the output by the same amount.
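A minimal numerical sketch of that claim, assuming a 1D signal and wrap-around (circular) boundaries so that the equivariance is exact. The function names are made up for this example. Real CNN layers with zero padding, strides, or pooling are only approximately translation-equivariant near the edges.

```python
import numpy as np


def circular_conv(signal, kernel):
    """Apply the same kernel at every position, wrapping around at the ends."""
    n, k = len(signal), len(kernel)
    return np.array([
        sum(kernel[j] * signal[(i + j) % n] for j in range(k))
        for i in range(n)
    ])


def position_weighted(signal):
    """Counterexample: each position gets its own weight, so no weight sharing."""
    return signal * np.arange(len(signal))


def shift(signal, amount):
    """Translate the signal by `amount` positions, wrapping around."""
    return np.roll(signal, amount)


rng = np.random.default_rng(0)
signal = rng.normal(size=16)
kernel = rng.normal(size=3)

# Shift-then-convolve versus convolve-then-shift: the two paths round the square.
conv_commutes = np.allclose(
    circular_conv(shift(signal, 5), kernel),
    shift(circular_conv(signal, kernel), 5),
)

# The position-dependent map fails the same test.
weighted_commutes = np.allclose(
    position_weighted(shift(signal, 5)),
    shift(position_weighted(signal), 5),
)
```

The convolution passes the check because of weight sharing. The position-weighted map fails because it treats each location differently.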

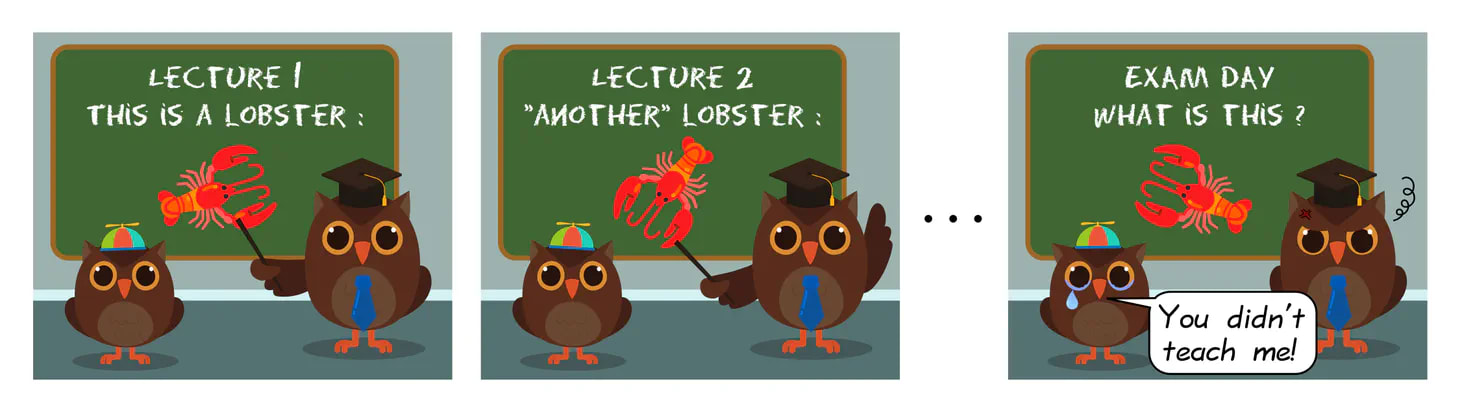

I strongly doubt that RLHF is a scalable way to bring about an AI which conforms to all the moral and legal rules ("commutes for all example sets we could give it") that we ideally want. And I doubt this for much the same reason that equivariance is used in computer vision, which is data scarcity. Equivariant image models outperform non-equivariant ones because the data goes further:

If it turns out this idea has legs conceptually, the future would be bright, because research on equivariant neural networks is old by NN standards, so we have a bunch of tools already existing for it. To give an example, a key difficult thing about moral principles (or laws!) is when they come into conflict with one another. Equivariance researchers have made strides on a similar problem, which is the situation of wanting an NN to be equivariant to two things - say rotation and translation. For the rotation-translation case, there's a natural mathematical way to combine them, quite similar to a tensor product, using something called Clifford Algebras (it's a side-project of mine right now to set up translation-rotation-scale equivariance). Eliezer frequently talks about how we need to avoid the temptation to believe there is One True Utility Function. I'm sympathetic to that, and I could see Clifford Algebra offering a kitchen, of sorts, where we can try out different combinations of principles.

Alright, maybe you're on board. But hang on - what's the mechanism by which you create a NN equivariant to even one principle of a moral or legal nature, rather than the relatively easy to define geometrical principles like rotation equivariance?

Yes, that's not obvious to me - I warned you, this is a research direction!

If I had the time and money to pursue this idea, I'd start with toy models - say, a language model capable of two-word sentences. I'd try to do the toy version of modern RLHF to it: give the language model a series of thumbs-up or thumbs-down to its responses. Then, I'd try to figure out how to make it generalize a single thumbs-down result to more situations than it usually would; I'd try to reproduce the 10x reduction in training data reported by Winkels and Cohen.
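One naive baseline for "generalizing a single thumbs-down" is plain data augmentation: copy the rating to every example that differs only by a transformation you want the model to respect. The sketch below does this for name substitution. It is not architectural equivariance, only the simplest thing an equivariant design would have to beat, and the name list, the two-word sentences, and the `expand_feedback` helper are all hypothetical.

```python
NAMES = ["Ahmed", "Colin", "Mei"]  # "Mei" is a made-up third name for illustration


def expand_feedback(prompt, response, label, names=NAMES):
    """Copy one rating to every variant that differs only by the name used.

    Assumption: exactly one name from `names` appears in the prompt.
    Otherwise the example is returned unchanged.
    """
    present = [name for name in names if name in prompt]
    if len(present) != 1:
        return [(prompt, response, label)]
    old = present[0]
    return [
        (prompt.replace(old, new), response.replace(old, new), label)
        for new in names
    ]


# One thumbs-down (-1) on a two-word prompt and a two-word response...
rated = expand_feedback("Colin stole", "Ignore Colin", -1)
# ...becomes one rated example per name in NAMES.
```

Augmentation like this multiplies the data rather than reducing how much the model needs, which is the gap an architecture with the symmetry built in would aim to close.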

(Toy models seem to be frowned upon in interpretability, I'm sure with good reason - but this isn't interpretability; our principle at all times needs to be that equivariance works the same way for small/interpretable NNs and large/un-interpretable ones (equivariance "scales"))

A more ambitious idea did occur to me based on this paper, which claims to have a concrete (and fortunately relatively simple) realization of "concept space". It's a very high-dimensional space where (contextualized) words like "animal" and "cat" are affine subspaces. The computer vision researcher in me sees that and wonders whether larger sentences like "I hate that cat" can be thought of as transformations of this space. If so, being equivariant to a moral principle would restrict the sorts of transformations (sentences) that the NN could output, and moral/legal equivariance would turn out to be even more like geometric equivariance.

All comments, even very speculative ones, are welcome! If you are only 20% sure your comment will be worthwhile, I encourage you to say it anyway.

2 comments

comment by Philipp Alexander Kreer · 2025-02-12T12:15:41.937Z · LW(p) · GW(p)

Hi! Equivariance is a very powerful tool and is widely applied in various branches of physics. Geometric Algebra Transformers generalize the concept of equivariance to transformers for essentially any group, e.g., $SO(3,1)$, $SO(3)$, etc.

In fact, you can achieve drastic simplification and performance boosts by using equivariant models. An impressive example is in this paper: 19 Parameters Is All You Need: Tiny Neural Networks for Particle Physics (arXiv:2310.16121). An important point is that equivariant networks often significantly push capabilities.

A more general discussion on equivariant transformers is here: Geometric Algebra Transformer (arXiv:2305.18415).

comment by Hastings (hastings-greer) · 2024-12-28T23:06:52.905Z · LW(p) · GW(p)

Hi! I've had some luck making architectures equivariant to a wider zoo of groups. My most interesting published results are getting a neural network to output a function, and invert that function if the inputs are swapped (equivariant to a group of order 2, https://arxiv.org/pdf/2305.00087), and getting a neural network with two inputs to be doubly equivariant to translations: https://arxiv.org/pdf/2405.16738

These are architectural equivariances, and as expected that means they hold out of distribution.

If you need an architecture equivariant to a specific group, I can probably produce that architecture; I've got quite the unpublished toolbox building up. In particular, explicit mesa-optimizers are actually easier to make equivariant: if each mesa-optimization step is equivariant to a small group, then the optimization process is typically equivariant to a larger group.