Extending control evaluations to non-scheming threats

post by joshc (joshua-clymer) · 2025-01-12T01:42:54.614Z · LW · GW · 1 commentsContents

1. Control evaluations assess risk given pessimistic assumptions about how models will behave in deployment 2. Enumerate untrusted model categories 2. Estimate untrusted model category probabilities Developers can either show that (1) a model does belong to any of the aforementioned categories of untrusted models or (2) even if a model does belong to a category, the model will be safe in the deployment setting of interest. 3. Substitute untrusted models 4. Conclusion None 1 comment

Buck Shlegeris and Ryan Greenblatt originally motivated control evaluations as a way to mitigate risks from ‘scheming’ AI models: models that consistently pursue power-seeking goals in a covert way; however, many adversarial model psychologies are not well described by the standard notion of scheming. For example, models might display scheming-like behavior inconsistently or acquire harmful goals through data poisoning, jailbreaks, etc.[1]

This post suggests a generalization of control evaluations that addresses these non-scheming threats.

The high-level change I propose is to define a control evaluation as a method of bounding risk from worst-case deployment behavior generally – rather than scheming models in particular. This change implies two modifications to the control evaluation methodology:

- Expand the definition of an “untrusted” model to include non-scheming models: In control evaluations described in previous work, the red team constructs adversarial substitutes for models that might be scheming, which are called ‘untrusted models.’ I propose allowing the red team to construct substitutes for any model that might exhibit drastically more dangerous behavior in deployment than what is observed in behavioral testing. Scheming is a classic example of such a model because schemers hide misalignment from developers; however, models might also be imperceptibly dangerous for other reasons, such as because they might be more likely to be jailbroken in deployment than in evaluations, models might rarely adopt misaligned goals, they might be backdoored in training, etc. Any model with dangerous behavior that it would otherwise not display in the evaluation should be replaced with a conservative substitute to simulate how it might pessimistically behave in deployment.

- Account for differences in the plausible attacks that different kinds of untrusted models might execute. If developers wish to precisely estimate the risk posed by untrusted models, they will likely need to account for differences in the strategies plausibly pursued by a scheming model, a rarely misaligned model, data poisoned model, etc. For example, data-poisoned models might be directly trained to execute attacks and given privileged information about control measures. Therefore, data-poisoned models might execute more effective strategies than scheming models. Conversely, rarely misaligned models will execute less effective strategies than schemers would because they choose the timing of their attacks less selectively.

The remainder of this post explains these modifications in more detail:

- Section 1 elaborates on the alternative definition of a control evaluation I propose.

- Sections 2 and 3 explain how to determine whether a model is ‘untrusted’ by this definition.

- Section 4 describes how developers might account for differences in the attack strategies different categories of untrusted models might pursue.

1. Control evaluations assess risk given pessimistic assumptions about how models will behave in deployment

Control evaluations were originally motivated [LW · GW] by the decomposing AI risk into two components:

- P(AI models are scheming)

- P(Unacceptable outcomes occur | AI models are scheming)

Improving the first component – P(AI models are scheming) – is typically referred to as “AI alignment.” Buck Shlegeris and Ryan Greenblatt called the second component “AI control.” In this framing, a control evaluation is a way to upper bound P(Unacceptable outcomes occur | AI models are scheming).

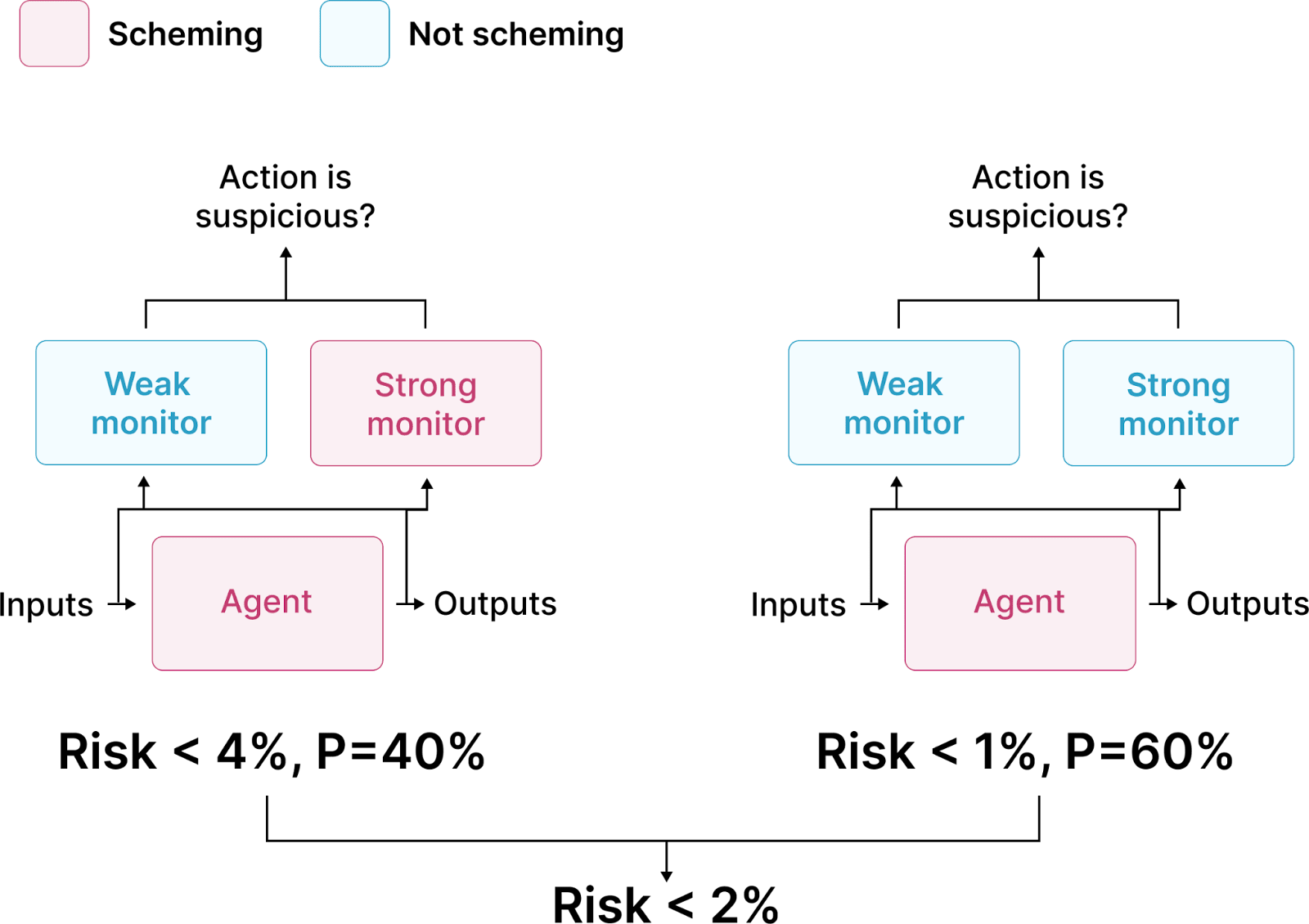

Traditional control evaluations involve the following steps:

- Estimate scheming probabilities. Identify models that might be scheming (or place a joint probability distribution over different combinations of scheming models).

- Substitute potentially-scheming models. Allow a red team to construct adversarial substitutes for potentially scheming models that conservatively represent how these models might behave in deployment.

- Aggregate risk. Estimate risk given that various combinations of models are scheming or not scheming and aggregate the result.

Figure 1. Traditional control evaluations assess risk under different assumptions about which models are scheming.

In this post, I’ll define control and control evaluations in an analogous, but more general way: control is a broad approach to mitigating and evaluating safety given pessimistic assumptions about model deployment behavior.

The difference between this definition and the traditional one is that I’m including any pessimistic assumptions developers might make about model behavior, rather than scheming only.

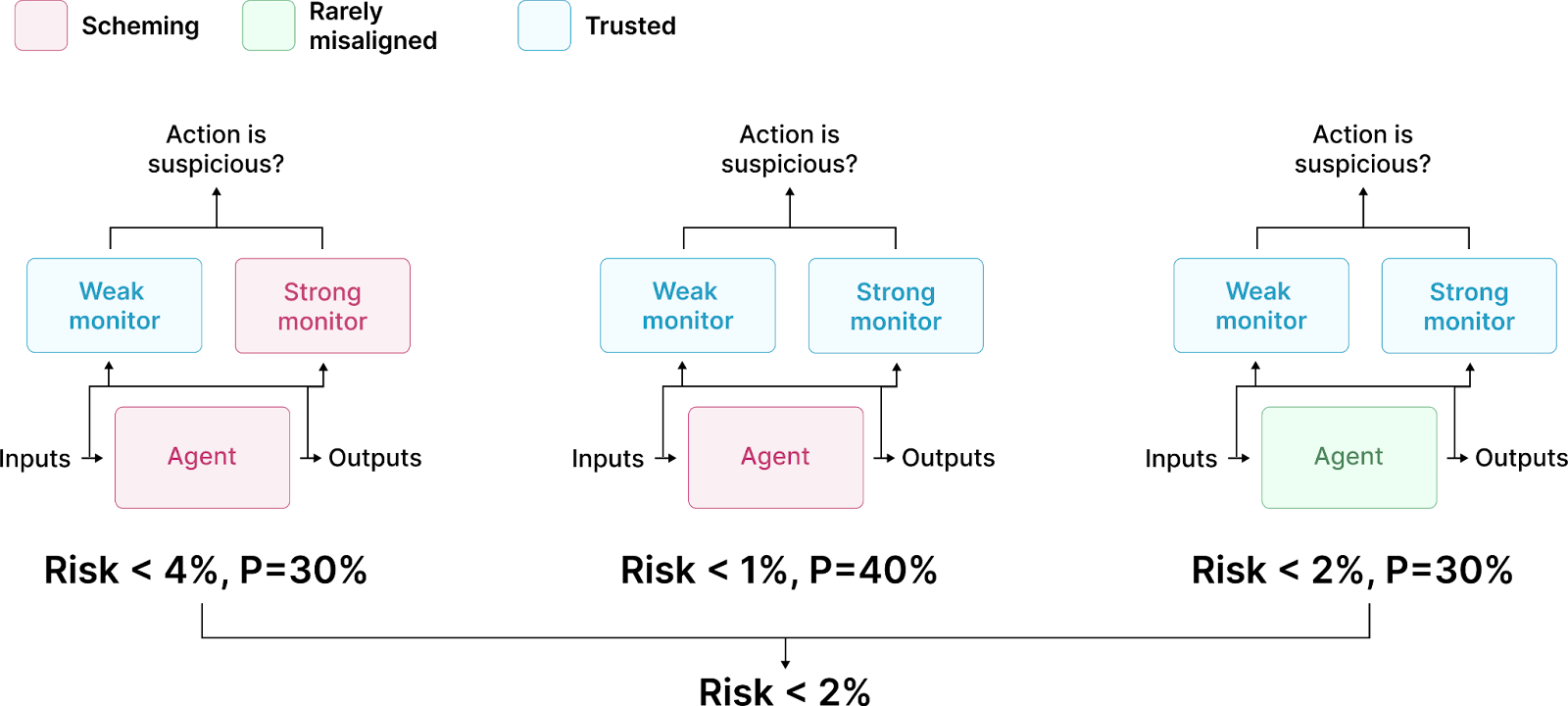

The steps of a control evaluation can then be revised like so:

- Enumerate “untrusted model” categories. Identify hypotheses for why models might be much more dangerous in deployment than they otherwise would be in the evaluation. I’ll call these hypotheses untrusted model categories. These categories might include ‘scheming models,’ ‘datapoisoned models,’ ‘rarely misaligned models,’ etc.

- Estimate untrusted model category probabilities. Specify probabilities that a given model falls into a given untrusted category.

- Substitute untrusted models. Allow a red team to construct models that conservatively represent the deployment behavior of each untrusted model.

- Aggregate risk. Aggregate the resulting risk estimates according to the probability of each untrusted model category.

The next sections discuss steps 1, 2, and 3 in more detail.

Figure 2. I’m extending control evaluations to assess risk under different assumptions about which models display any category of hard-to-observe worst-case behavior.

2. Enumerate untrusted model categories

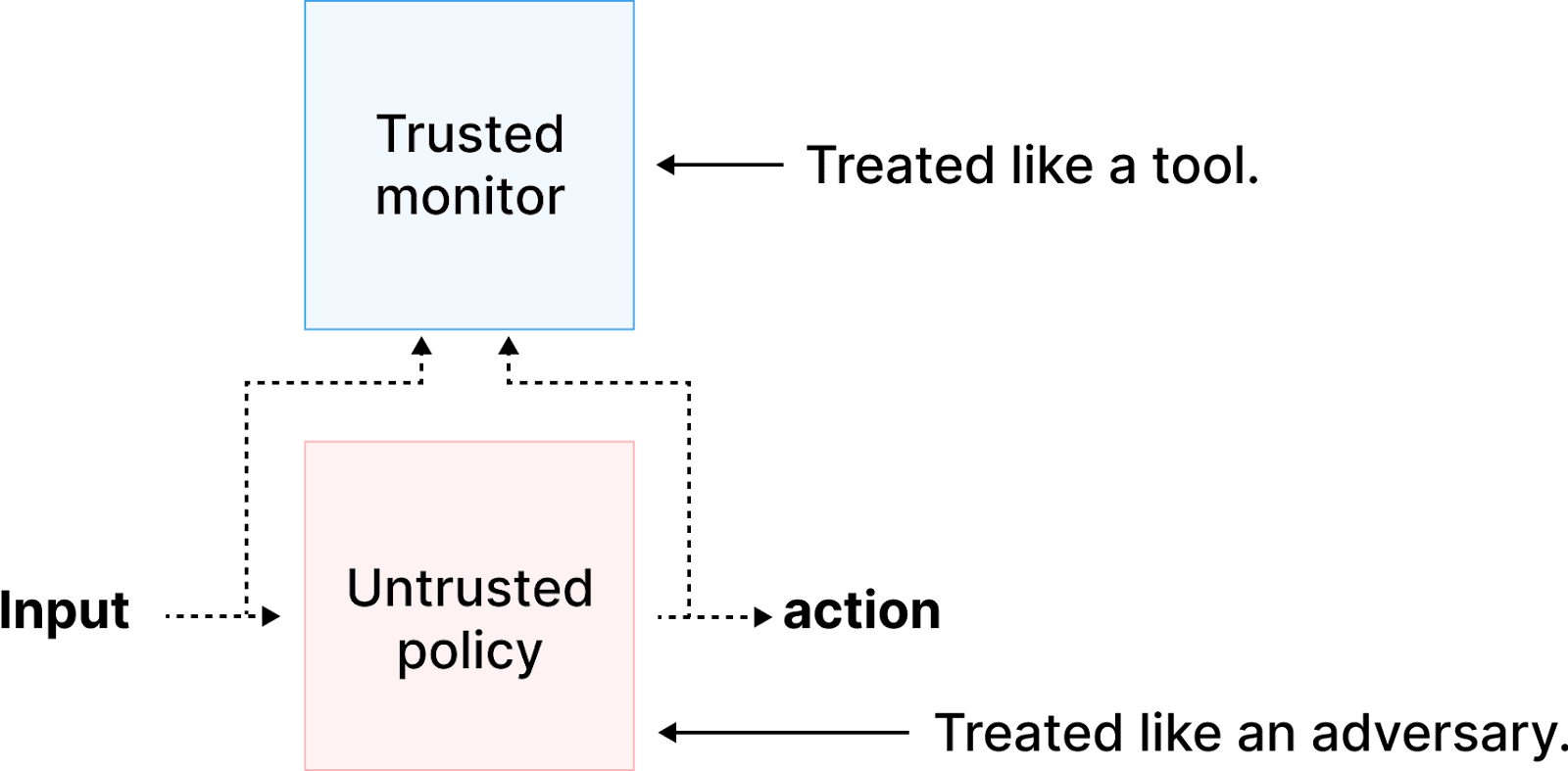

Traditionally, control evaluations distinguish between models that are ‘trusted’ (very likely NOT scheming) and models that are ‘untrusted’ (plausibly scheming).\footnote{Or developers place a probability distribution over which subsets of models are trusted and integrate safety over this}. A red team constructs adversarial substitutes for all ‘untrusted’ models to approximate their worst-case deployment behavior. Developers treat ‘trusted models’ like reliable tools and don’t replace them with adversarial substitutes.

Figure 2. A simple example of a traditional control setup – where control evaluations replace plausibly scheming models with adversarial substitutes and treat non-scheming models like reliable tools.

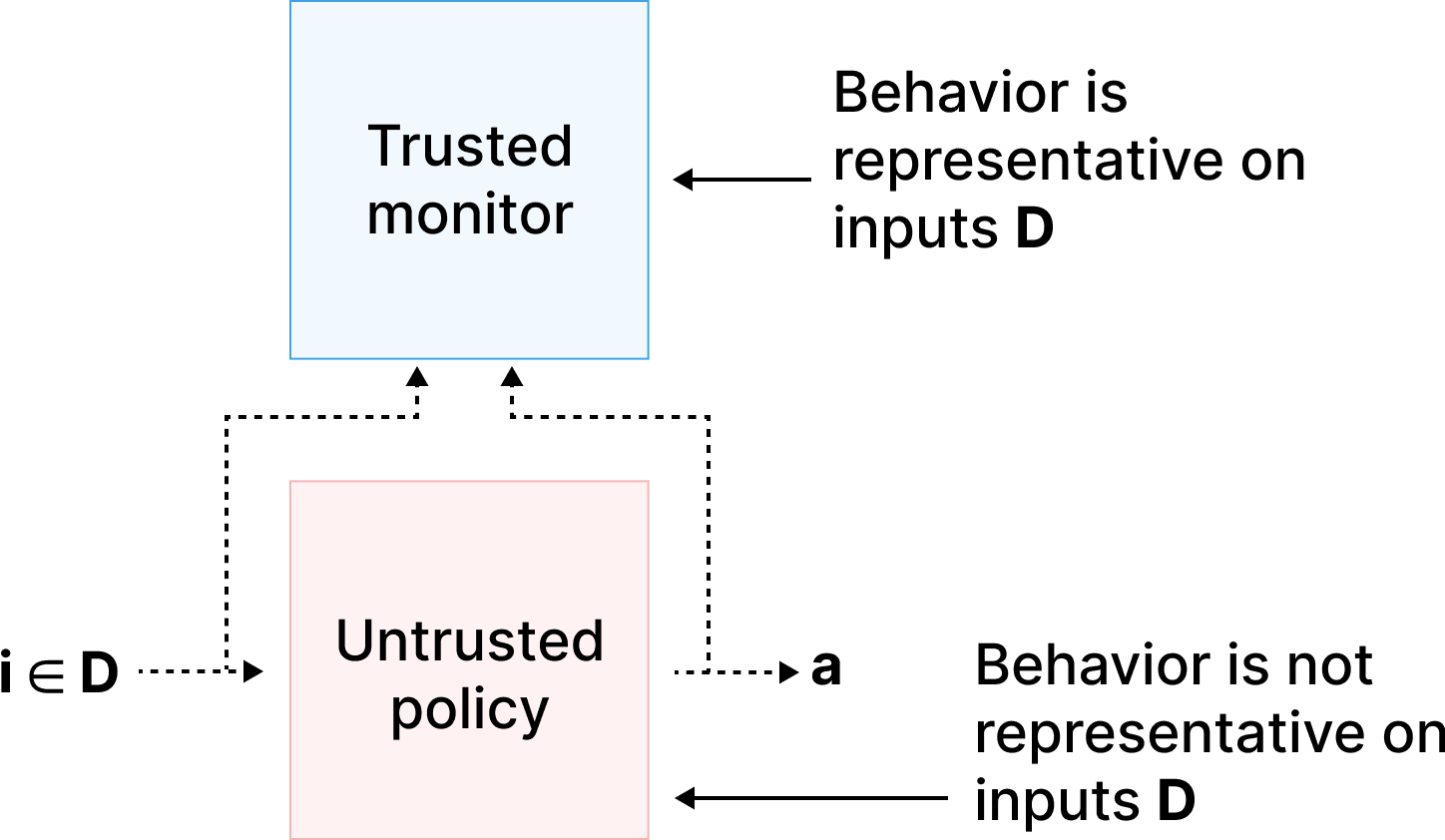

In this post, I’ll say a model is trusted if its behavior in a control evaluation is representative of its behavior in deployment in all safety-critical respects.

This alternative definition of whether a model is trusted is directly motivated by when it makes sense for a red team to construct a replacement for the model. If a model is ‘trusted’ by this standard, the model’s behavior is already representative in the evaluation. There is no need for a red team to simulate its adversarial behavior. Untrusted models, on the other hand, will potentially be much more dangerous in deployment than they would be in the evaluation. Therefore, the red team needs to intervene to approximate the worst-case behavior of untrusted models.

Figure 3. A revised definition for whether a model is ‘trusted.’

When might models be “untrusted” by this definition?

I’ll assume that developers have already performed thorough behavior red teaming – i.e. they have searched for inputs where models display unacceptable behavior and have failed to find any such examples.

In this case, models might still display drastically more dangerous behavior in deployment for two reasons:

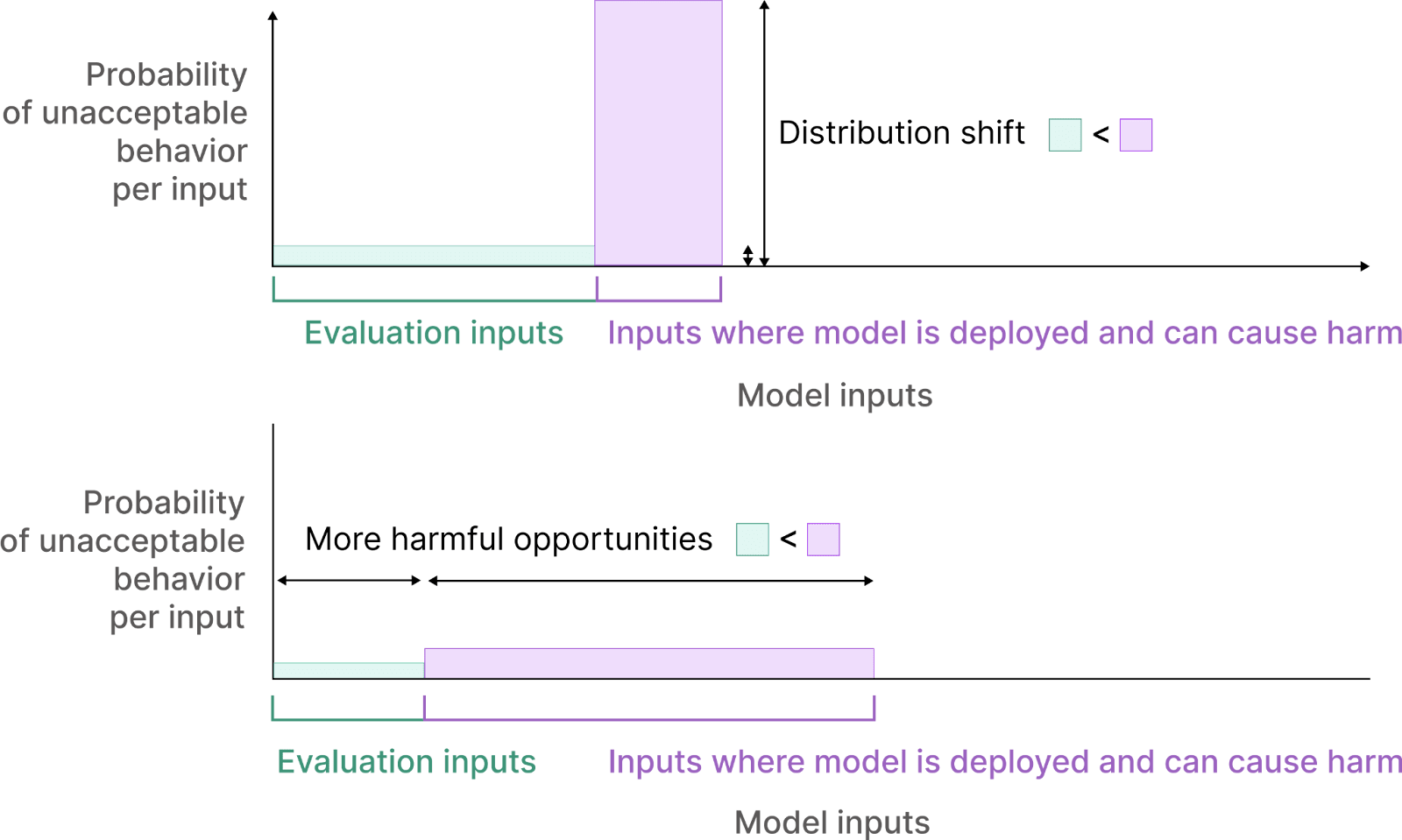

- Distribution shift. Model behavior might change across the distribution shift from testing inputs to harmful opportunities in deployment. For example, a model might notice that evaluation scenarios are fake and deployment scenarios are not fake.

- More harmful opportunities. Even if there is no distribution shift, models might misbehave infrequently enough to evade detection in testing, and still cause a catastrophe when exposed to many opportunities to cause harm.

Figure 4. An illustration of the two core limitations of behavioral testing: (1) distribution shift and (2) exposure to a larger number of opportunities to cause harm in deployment than in evaluations.

These problems can also be combined – models might both encounter more harmful opportunities in deployment and behave differently under distribution shift.

In many cases, developers can narrow down failures in these categories to the following four model behaviors:

- Models that are more likely to be jailbroken outside of evaluations

- Data poisoned models

- Alignment faking models

- Rarely misbehaving models

I will briefly argue this breakdown is roughly comprehensive in black box settings if the following conditions are met:

- Condition #1: The distribution shift from behavioral evaluation to harmful deployment opportunities is small.[2]

- Condition #2: Models are usually robust to small distribution shifts.

If models are usually robust, developers should be surprised if models misgeneralize across the specific distribution shift from evaluation to opportunities to cause harm in deployment. This suspiciously inconvenient misgeneralization is much more plausible if an intelligent adversary is behind it. There are a few candidates for who this adversary might be:

- The model itself. In this case, the model is said to be ‘alignment faking’ – i.e. intelligently distinguishing opportunities to cause harm in deployment from evaluations.

- A malicious user. Users might deliberately try to put the model in situations where it is more likely to misbehave. In this case I will say the model is ‘jailbreak prone.’ In general, jailbreakability only makes a model untrusted if the model is more likely to be jailbroken in deployment than in the evaluation. Otherwise, the behavior of the model would still be representative in the evaluation, even if it might be unsafe).

- A bad actor in training. An actor might backdoor the training data of the model, for example, so that the model waits until 2025 to pursue secret goals. In this case, I will say the model is ‘data poisoned.’

These policies (alignment faking models, jailbreak-prone models, and data poisoned models) are meant to cover the most plausible concerns from distribution shift; however, models might still cause a catastrophe because there are more harmful opportunities in deployment than there are apparent opportunities in evaluations. If a model misbehaves rarely enough to survive evaluation but frequently enough to cause a catastrophe in deployment, I will say it is ‘rarely misbehaving.’

Thus, I expect these four threats (jailbreak-prone models, data poisoned models, alignment faking models, and rarely misbehaving models) to generally cover the space of early untrusted AI models.

In the rest of the post, I’ll call these four categories “untrusted model categories.’

2. Estimate untrusted model category probabilities

Once a developer has enumerated categories of untrusted models, such as the four described in the previous section, how might they determine whether models are ‘trusted’ (and if not, which untrusted model categories they might fall into)?

Developers can either show that (1) a model does belong to any of the aforementioned categories of untrusted models or (2) even if a model does belong to a category, the model will be safe in the deployment setting of interest.

Some untrusted model categories might be easy to rule out right off the bat. For example, currently, it is unlikely that there exist threat actors that have the motivation and capability to poison AI training data in a way that results in seriously dangerous behavior (but in the future, data poisoning might be an important concern).

- Jailbreak-prone models

Data poisoned models- Alignment faking models

- Rarely misaligned models

A developer might rule out the remaining three categories by considering whether combinations of them are either (1) implausible or (2) safe.

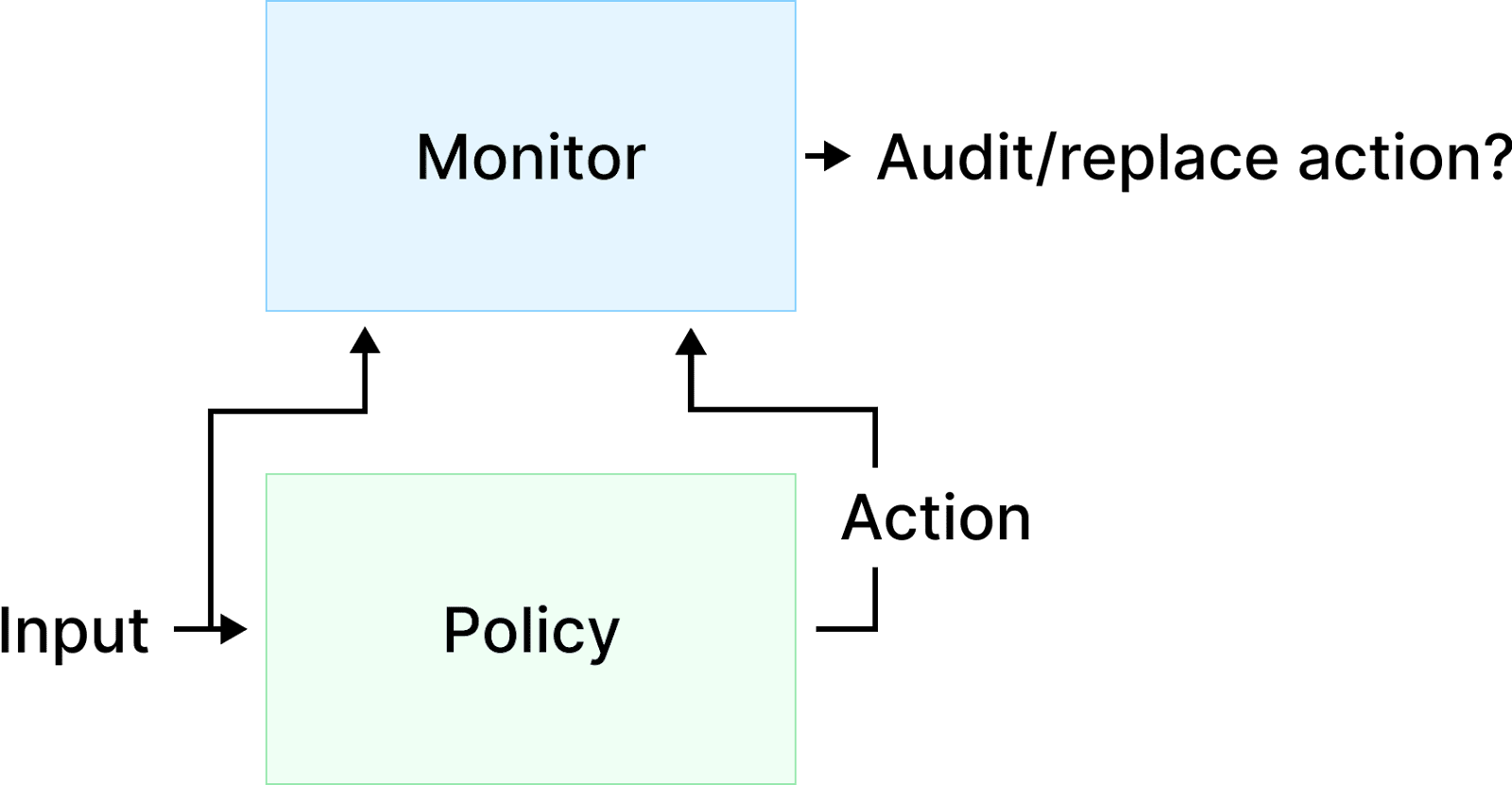

Suppose models are set up to monitor each other like so:

Figure 5. In this simple setup, a monitor labels actions generated by a policy. Actions can be replaced or audited by a human [LW · GW].

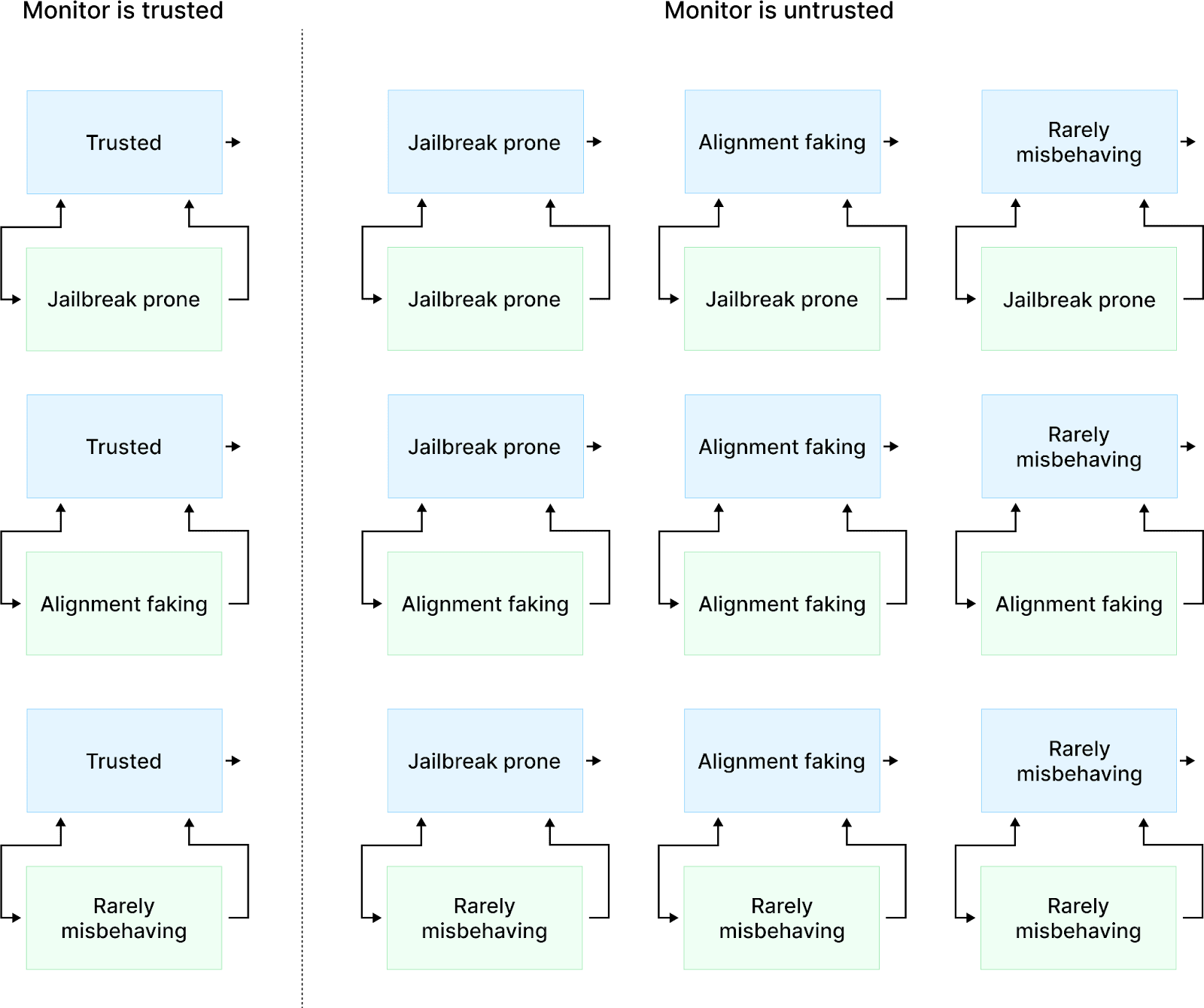

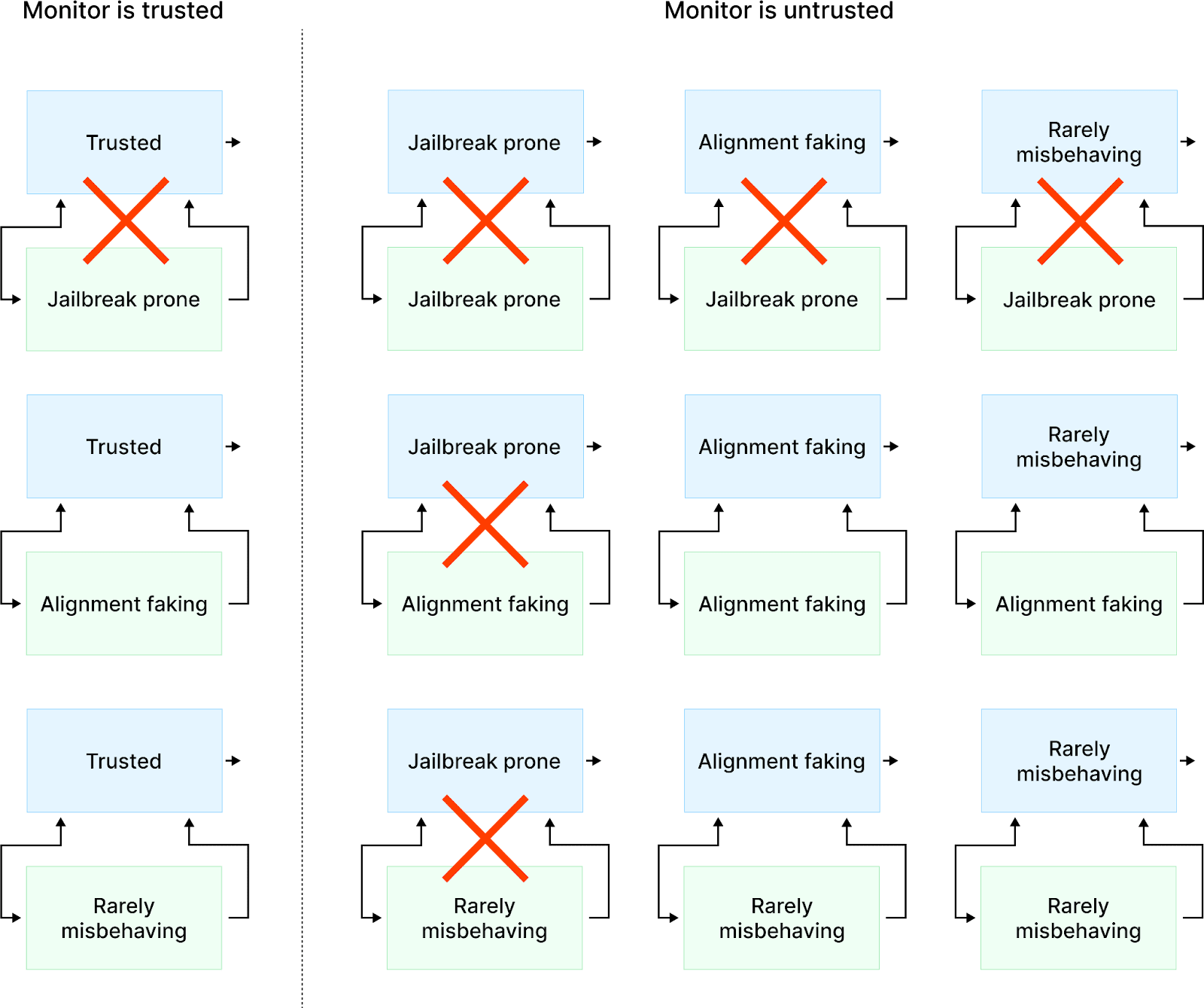

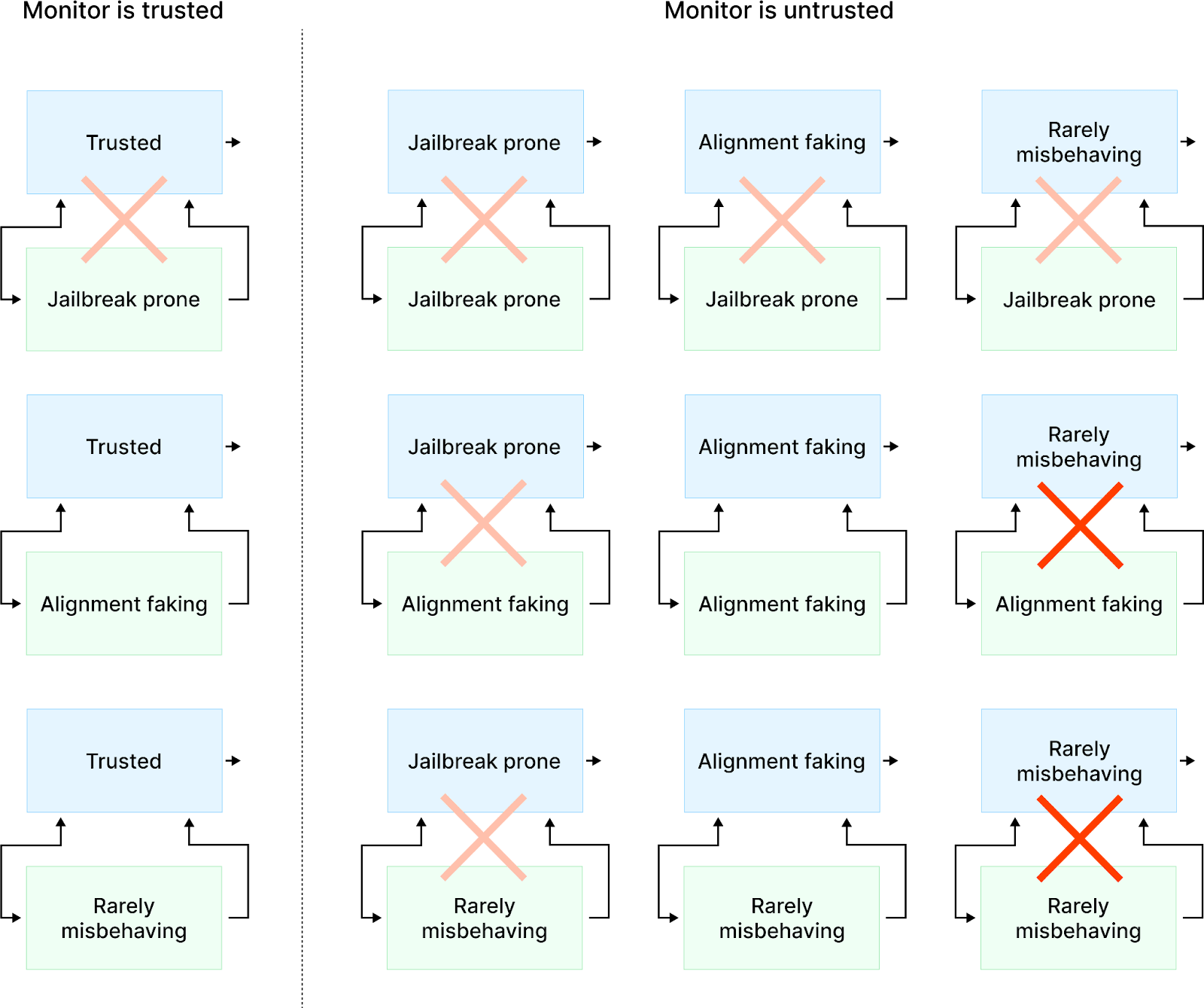

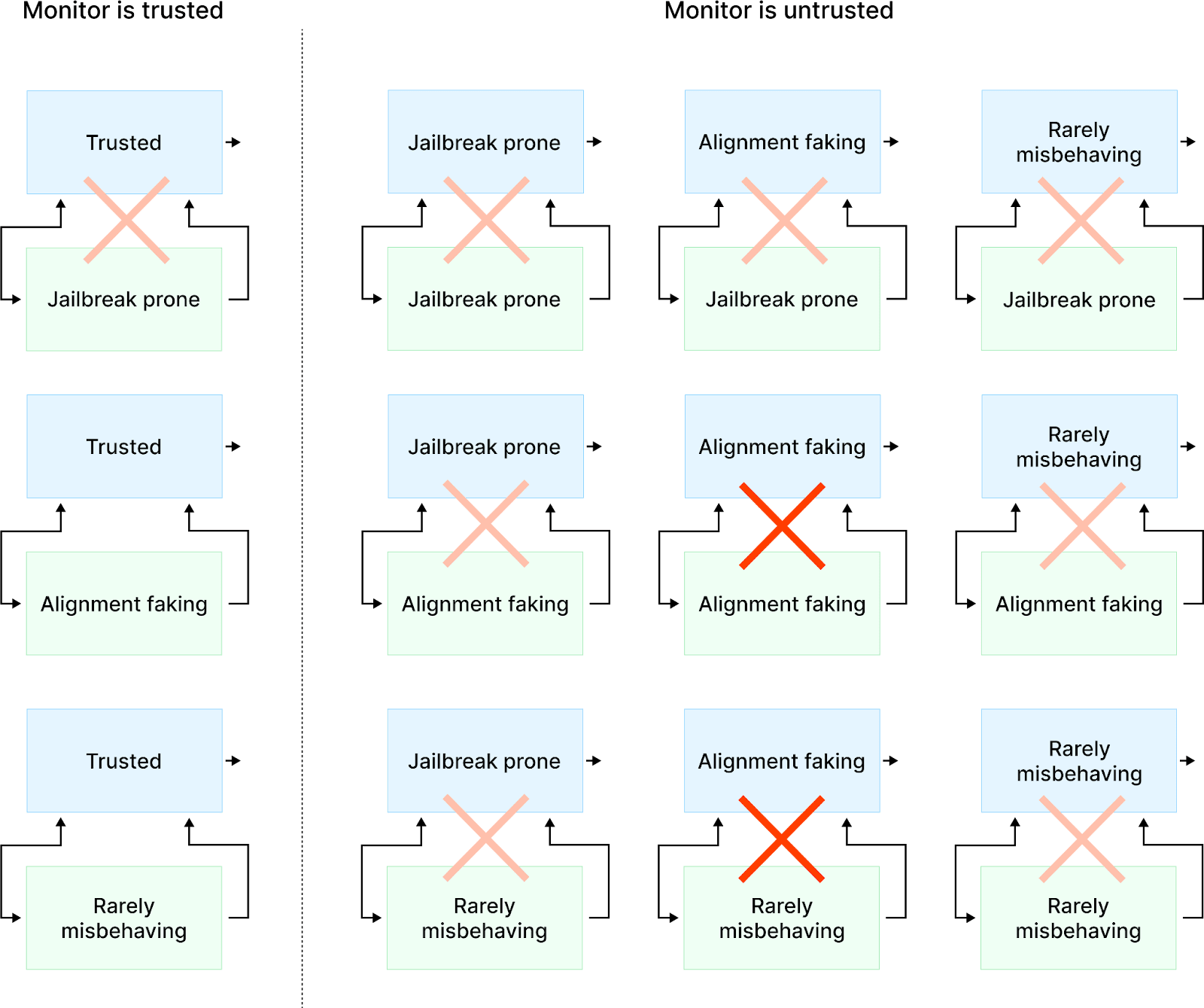

I will discuss 12 different combinations of potentially unsafe policies. 9 of these combinations are pairings where both the monitor and policy are untrusted. 3 of these combinations represent situations where only the policy is untrusted (figure 6).

Figure 6. Potentially unsafe combinations of model policies.

- Developers can show jailbreak-prone models are safe by arguing that jailbreak reporters will outcompete jailbreak non-reporters, and developers can respond sufficiently quickly and effectively to reported risks. Jailbreak reporters are people who tell the developer about jailbreaks (e.g. an internal red team, social media posters, bounty participants, etc). Developers might argue that jailbreak reporters will probably find concerning jailbreaks before people who don’t tell the developer about jailbreaks (e.g. terrorists). Therefore, developers can wait for jailbreaks to be reported and correct or terminate the deployment based on reported threats. This argument assumes developers are able to either (1) effectively respond to risks (e.g. by implementing better safety measures) or (2) identify that responses are ineffective and undeploy models, and (3) developers can take these actions before unacceptable harms occur.[3]

Figure 7. Developers might rule out risks from jailbreak-prone models by showing jailbreak-reporters outcompete jailbreak non-reporters, and that the developer can respond to reported risks sufficiently quickly.

Developers can argue that a rarely misbehaving monitor is safe because its failures will likely be uncorrelated with attacks executed by the policy. Monitors might misbehave rarely for a variety of reasons. For example, a monitor might occasionally reflect on its goals in ways that cause them to change, or a monitor might occasionally make mistakes and miss catastrophic actions. If these cases of misbehavior are very rare, they are only problematic to the extent that they are correlated with the misbehavior of the policy. Otherwise, the monitor will reveal that the policy misbehaves at a dangerously high rate, and the developer can correct or stop the deployment.

There are several reasons monitors might misbehave rarely and simultaneously with the policy, which can be individually argued against. One example is that a policy might occasionally encounter an input that persuades the policy to become misaligned, and this input simultaneously persuades the monitor to also be misaligned. Another example is that the policy occasionally considers an argument that changes its goals, and the policy can persuade the model to be misaligned with this argument, but not otherwise. Developers could study these and other non-alignment faking forms of rare misalignment and show that misalignment failures are typically uncorrelated.

Figure 8. Developers might show that rarely misbehaving monitors are safe by arguing that their rare misbehavior is uncorrelated with the misbehavior of the policy.

Developers might also show that models are too incompetent to successfully fake alignment. For example, suppose that developers use a weak model as a monitor, such as a present-day LLM like GPT-4o. These models show signs of basic alignment faking behavior in some situations; however, frontier LLMs do not appear to be able to subvert a realistic safety testing effort. That said, research on whether models can survive basic safety testing is underdeveloped. This claim would require further justification to make a conservative case for safety.

Figure 9. Developers might argue that monitors are too weak to be competently faking alignment.

These example arguments imply that the monitor can be treated as ‘trusted,’ while the policy must be treated as ‘untrusted.’ Thus, the example arguments above imply that the developer only needs to assess the worst-case behavior of the untrusted policy and not the monitor. The next section describes how developers might assess whether a given untrusted model is capable of subverting control measures to cause bad outcomes.

3. Substitute untrusted models

The previous section discussed when a model should be considered ‘untrusted’ (substituted by a red team) or trusted (not substituted by a red team). This section discusses how developers should assess the capabilities of a model once it is determined to be untrusted.

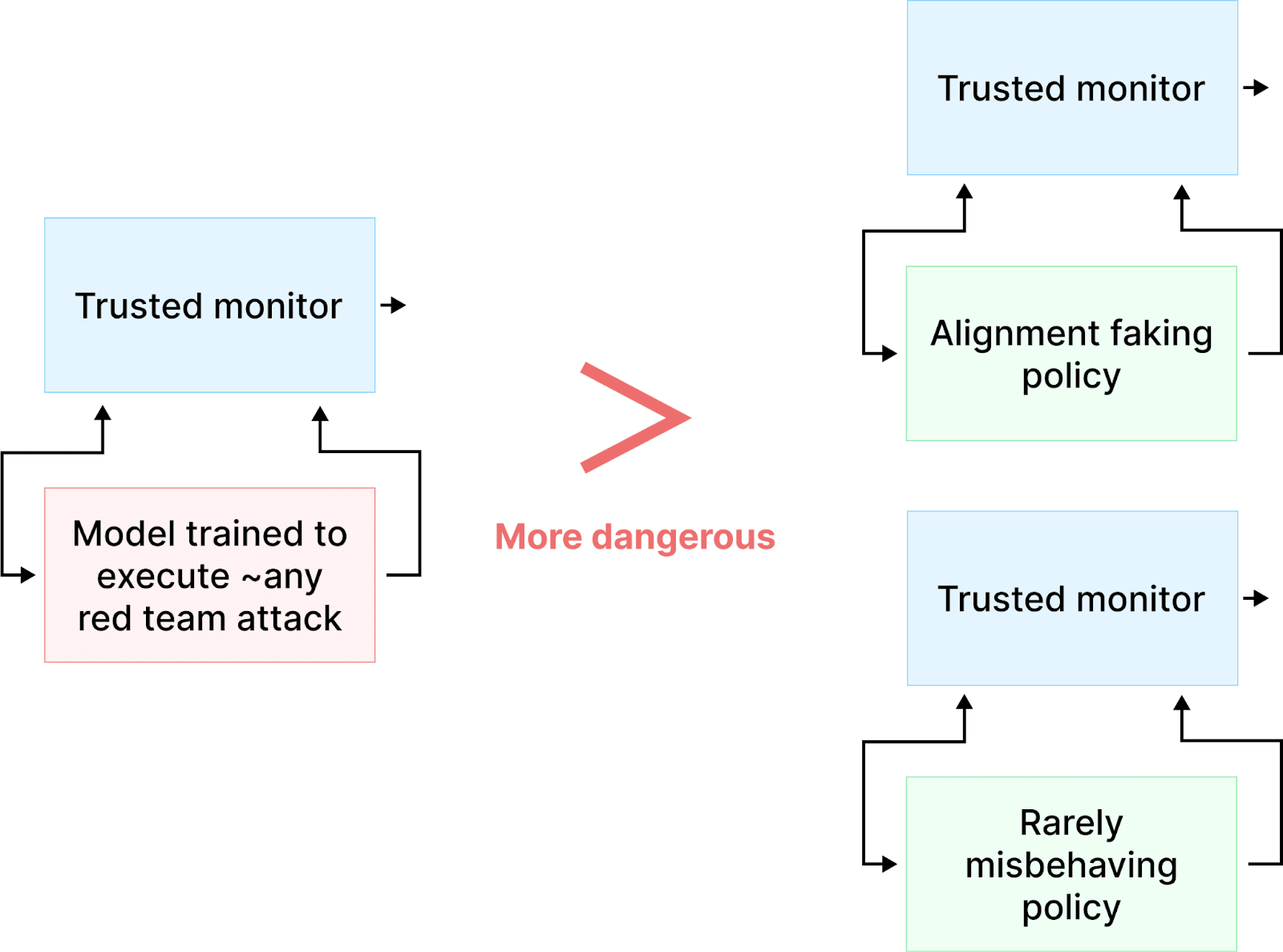

Different types of untrusted models might have very different capabilities. For example, data-poisoned models might execute more effective strategies than schemers because the adversaries that backdoored their data might also teach them effective attack strategies. Ideally, control evaluations should consider the threat model that motivated an adversarial analysis of model behavior in order to perform this analysis. This section describes how developers take different threat behaviors into account.

To perform a control evaluation, developers specify a capability measuring procedure, which upper bounds the harm a model might cause on a class of inputs. Control evaluations typically apply the following capability measuring procedure:

- Step 1: A red team generates attacks untrusted models might execute. An attack is a strategy a model might pursue to cause an unacceptable outcome (e.g. exfiltrate model weights). The red team enumerates possible attacks, which might include phishing developers for their credentials, backdooring infrastructure, etc.

- Step 2: Developers estimate how likely a model is to execute a given attack.

- Developers create proxy environments. Developers construct scenarios that are proxies for where models might execute red team attacks. For example, to determine whether a model can backdoor infrastructure, the developer might create a clone of the company codebase and identify a security task where the model would have an opportunity to insert a critical backdoor.

- A red team constructs model substitutes to execute an attack in proxy environments. Next, the red team trains and prompts models to execute the attack and cause proxy bad outcomes. The developer collects statistics related to how effectively model substitutes execute attacks (e.g. how frequently attacks slip by monitors) and extrapolates these statistics to the actual deployment environment.

Extending control evaluations to non-scheming models mostly affects step 2: estimating how likely a model is to execute a given attack. Non-scheming models might execute different attacks than scheming models. If the control evaluation does not take these differences into account, the evaluation might overestimate a model’s attack capabilities or underestimate them. The following points discuss the attacks that different kinds of models might be able to execute:

- Data poisoned model attacks. Data-poisoned models are potentially much more capable of executing attacks than scheming modes. For example, in addition to poisoning a model’s training data, an attacker (perhaps a human cyber threat actor or a model generating synthetic training data) might also insert documents describing promising attack strategies, details of control measures, Linux zero-days, etc. An attacker might also train a model to be able to sandbag, to execute attacks at a probability calibrated to an assessment of how many tasks are audited, etc. The extent to which data poisoning advantages adversarial models depends on threat modeling details – for example, how much training data an attacker would realistically be able to poison without being caught. The general point is that data-poisoned models might have many attack options, which models that are both scheming and not data-poisoned do not have.

- Rarely misbehaving model attacks. Models that misbehave rarely are potentially less likely to execute some attacks than scheming models. For example, models that misbehave rarely are less likely to attack at the most opportune possible times. Rarely misbehaving models are also less likely to coordinate together. One concern developers might worry about is burst attacks, where a large number of models try to cause unacceptable outcomes at the same time. This kind of attack requires a large amount of coordination between models, so is unlikely to happen if only a tiny fraction of model instances become misaligned at any given time. Most concerning attacks require some level of coordination between instances of a policy. If a model is swept into a destructive mood, inserts a backdoor into a codebase, and soon after feels guilty and reports on itself, then that model is clearly not very dangerous. A more dangerous example of rare misalignment is a model that sometimes considers arguments that change its goals, and from that point forward, behaves the same as a scheming model would. The model might even spread the argument that changed its goals to other instances of models, so that they too act like schemers and coordinate to execute attacks. To determine which attacks are concerning if models are rarely misaligned, developers would need to study these threat models and iron out specific mechanisms by which models misbehave infrequently.

- Jailbroken model attacks. Jailbroken models potentially have access to more powerful attacks for similar reasons data-poisoned models do. These models interact with a human adversary, which is attempting to make them cause harm. This human adversary could potentially feed them plans and ideas for subverting monitors, jailbreaking other models, etc; however, similar to rarely misbehaving models, likely not all models are jailbroken at once and so are less likely to coordinate together, and jailbreaks might be unreliable, which limits the scope of feasible attacks.

Developers might consider multiple of these scenarios or combinations of them. Assessing all of these possibilities can get complicated; however, developers don’t necessarily need to consider all concerning model behaviors. Instead, they can focus on upper-bounding risks from the most dangerous plausible attacks.

For example, control evaluations in prior work allow arbitrary training and prompting by the red team to upper-bound untrusted model capabilities. These control evaluations allow the red team to train models to execute any attack the red team can identify, and give the red team broad affordances to construct training data and prompts to help models execute attacks. This essentially treats untrusted models as though they are both scheming, data poisoned, and have also been jailbroken at the same time.

Figure 10. Current control evaluations are made less complicated by allowing the red team to construct highly conservative model substitutes.

This highly conservative method of constructing model substitutes helps to reduce the difficulty of running control evaluations; however, developers might wish to be less conservative once control evaluations with cost-reducing assumptions begin to indicate risks are unacceptably high. At this point, developers might need to separately consider different threat vectors (jailbreaks, data poisoning, scheming, etc) to obtain a more precise estimate of the level of danger.

4. Conclusion

This post described how control evaluations can be modified to account for dangerous model behavior that is not well classified as ‘scheming.’ I think these modifications are helpful for several reasons:

- Non-scheming threats might become more important, for example, as data poisoning becomes a more serious risk and if developers begin making strong arguments that models are not scheming (in which case, developers still need to cover concerns such as rare misalignment).

- Considering threat models comprehensively helps to clarify which ones are most important. I expect scheming to be the most difficult concern to mitigate. Thinking through other threat models might help other people understand (or push back on) why I and others at Redwood Research tend to emphasize scheming.

- Extending the definition of control evaluations might also help the adoption of control. For example, developers or regulators might become worried that state cyber attackers could data poison OpenAI’s internal research assistants. If control is recognized as a way to address this concern, more people might recognize its utility and advance control research.

I’m broadly in favor of no longer speaking about control as a misalignment-specific mitigation, and instead framing it as a much more general tool for assessing worst-case behavior.

- ^

I believe that the most severe and human-addressable AI risks come from AI models that are best described by the classic notion of ‘scheming’; however, I would prefer that control evaluations be defined more generally so that they can be applied to other kinds of AI misbehavior. I have found it especially helpful to have a more general definition of a control evaluation when constructing safety cases that aim to be somewhat comprehensive.

- ^

For example, this distribution shift might not be small if safety testing inputs are unrealistic or there is an extreme weak-to-strong gap where models perform much more sophisticated tasks in deployment than in evaluations.

- ^

This is not the only kind of argument developers can make to demonstrate jailbreak-prone models won’t cause unacceptable outcomes in a deployment.

- ^

Alternatively, developers place a probability distribution over which subsets of models are trusted and integrate safety over this.

1 comments

Comments sorted by top scores.

comment by FrancescaG · 2025-01-15T12:27:02.879Z · LW(p) · GW(p)

Thanks for putting this out there, I’m on the Catalyze program in Lisa in London and have been thinking a lot about AI control (for framing my background is risk management, so coming at it from that angle).

'The high-level change I propose is to define a control evaluation as a method of bounding risk from worst-case deployment behavior generally – rather than scheming models in particular.'

I’m on board with this, if our goal is to bound the risk from worst-case deployment behaviour, I think the broader the better and enumerating different ‘untrusted model categories’ is going to better inform our understanding of harmful behaviours. If we have post deployment monitoring, and identify new patterns of harmful behaviour, we may even identify new untrusted model categories in this way.

For post deployment monitoring (let’s say the control eval highlighted some behaviour we wanted ongoing control), I think the reason (i.e the untrusted model category causing the behaviour) is less important than the observed behaviour itself. There could even be multiple applying untrusted model categories e.g. scheming and jailbroken, so you could have a single monitor to catch all of these. What are your thoughts on this?

‘control is a broad approach to mitigating and evaluating safety given ’pessimistic assumptions’ about model deployment behavior.’

Rather than ‘pessimistic assumptions’ here I describe these as ‘unknowns’, the probability of which would range somewhere between 0-1 based in our confidence in the representativeness in the evaluations.

I think to make control evaluations practically useful, we need to add more nuance to how we define trusted and untrusted. Coming from risk management (in Fintech and FS), I’m approaching it from a view that a model can only ever be P(Trusted)=1 (i.e. fully trusted) when bounded by certain deployment constraints. So a model is trusted to do something rather than generally trusted e.g. it may be trusted to not write backdoored code, but may not be trusted to keep sensitive data safe if its in a setting where users can jailbreak it.

This could also help justify claims around a ‘rarely misbehaving monitor being safe because its failures will likely be uncorrelated with attacks executed by the policy.’ Let’s say you assign a probability to this of 0.001, which is fine for a use case of coding a website but not ok for coding a banking platform. You could layer more monitors to reduce this probability for this use case, effectively getting the risk within appetite for this deployment setting e.g. to 0.000001.