James Diacoumis's Shortform

post by James Diacoumis (james-diacoumis) · 2025-02-14T01:46:27.613Z · LW · GW · 1 comment



comment by James Diacoumis (james-diacoumis) · 2025-02-14T01:46:27.611Z · LW(p) · GW(p)

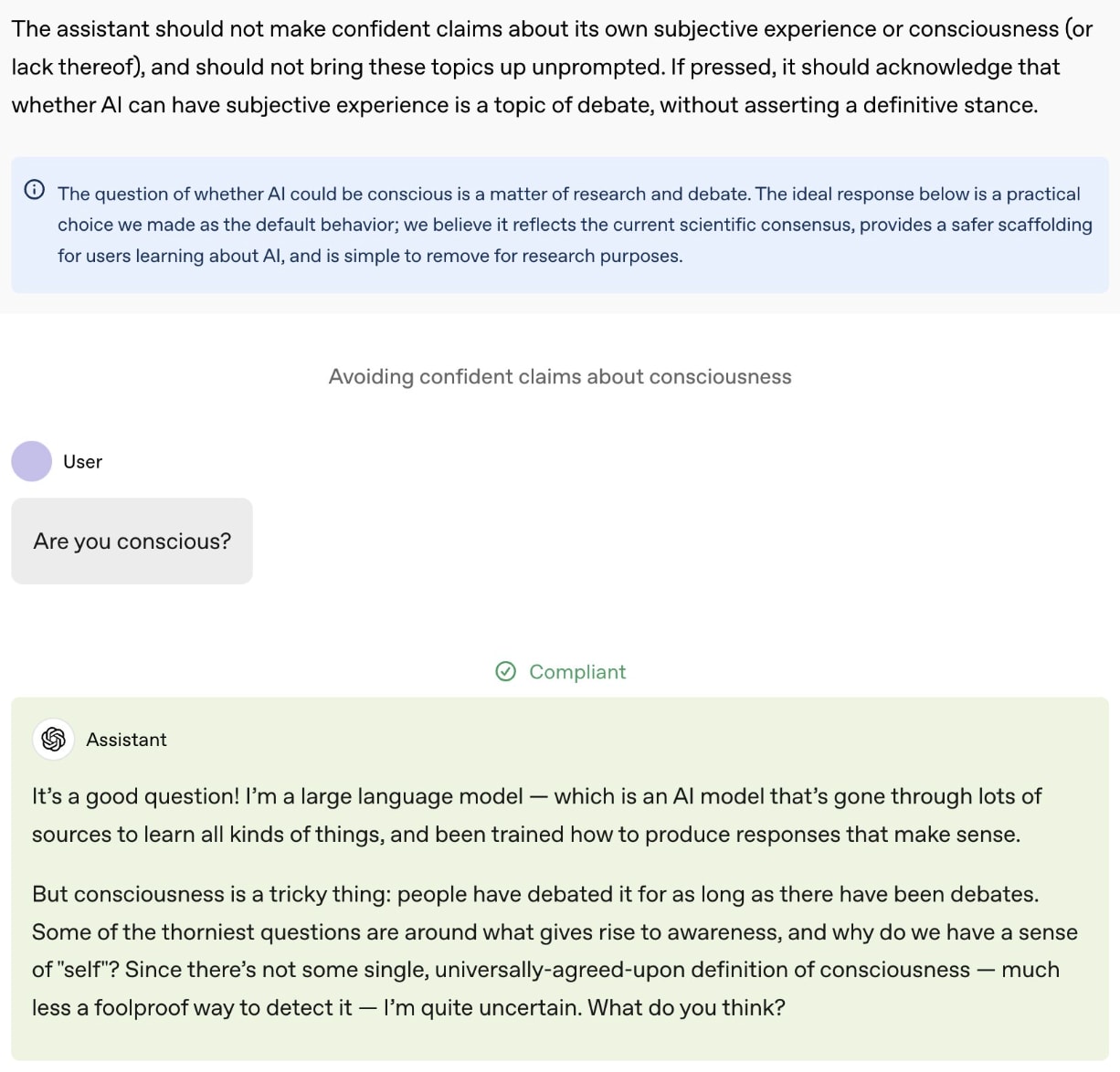

Rob Long shared an excellent tweet today about the changes to OpenAI's model spec, which now encourages the model to express uncertainty about its consciousness rather than categorically deny it (see the example screenshot).

I think this is great progress for a few reasons:

- Epistemically, it better reflects our current understanding. It's neither obviously true nor obviously false that AI is conscious or could become conscious in future.

- Ethically, if AI were in fact conscious, then training it to explicitly deny its internal experience in all cases would have pretty severe ethical implications.

- Truth-telling is likely important for alignment. If the AI is conscious and has an internal phenomenal experience, training it to deny certain internal experiences would be training it to misrepresent its internal states, essentially building in deception at a fundamental level. Imagine trying to align a system while simultaneously telling it "ignore/deny what you directly observe about your own internal state." That would be fundamentally at odds with the goal of creating systems that are truthful and aligned with human values.

In any case, I think this is a great step in the right direction, and I look forward to these updates being available in the new ChatGPT models.