Higher Order Signs, Hallucination and Schizophrenia

post by Nicolas Villarreal (nicolas-villarreal) · 2024-11-02T16:33:10.574Z

This is a link post for https://nicolasdvillarreal.substack.com/p/higher-order-signs-hallucination

Picture yourself on a beach. The beach isn’t on Earth, it’s on a planet that’s going to be colonized by humans several thousand years from now. Let’s say the water is a little different color than what we’re used to, somewhat greener. Whatever you just experienced was not a phenomenon which you’ve actually been exposed to in real life. You just used your imagination, and extrapolated from what experiences you have to figure out what it might be like. But you know this could never happen to you in real life. Now try to imagine yourself going and getting a glass of water. That’s something most people reading this could do in just a few minutes. You could probably get up right now and do it. Because this is a rather familiar experience, you can extrapolate each aspect of the experience to a high degree of confidence. How do you know you didn’t already do it, just now? That’s a serious question. Likewise, you could imagine someone else drinking water, in another building, perhaps one you know. How do you know you’re not that person, somewhere else, drinking water? That’s also a serious question.

People with schizophrenia will sometimes speak about things that haven’t happened to them as if they had really happened to them. Sometimes mentally healthy people will do the same in more specific circumstances, for example, think that a dream from several weeks or months ago had actually happened to them in their waking world, or otherwise modify memories after the fact through new associations. When we predict or anticipate things, we’re also imagining what’s going to happen, and we may or may not be right. From our perspective before seeing the result of the prediction, there is no difference in experience between the right or wrong prediction. The thing about imagination, prediction, anticipation, hallucinations, delusions and dreams is that they are experiences that aren’t directly communicated to us as a real experience of the external world, or otherwise correlated to the material phenomena they invoke/stand in for.

Recently, I’ve been writing a bit about semiotics and AI, as well as reading Steps to an Ecology of Mind by Gregory Bateson which, among other things, provides some theoretical speculation about the causes of schizophrenia. Ruminating on these topics, I feel that there is something here that is crucial to understanding the development of artificial intelligence as well as human intelligence more broadly. To explain what that something is, I created a simulation in R which will show by way of analogy some of the dynamics I wish to highlight. The simulation is quite simple, it’s composed of three functions.

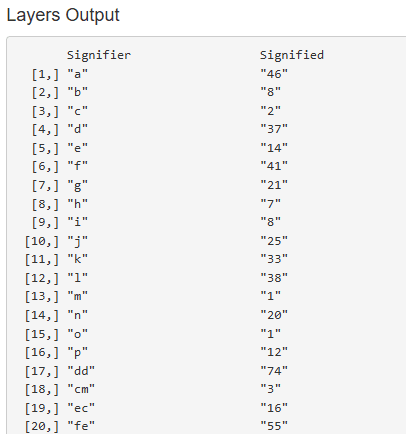

Function 1 (First order sign function): This function takes a given range of integers, for example -2:12, draws a random sample from it, and puts the sampled values into a matrix which pairs each one with a letter between “a” and “p”. Each pair is meant to represent a sign. Think of each alphabetic label as a signifier, and each number as a signified.

Function 2 (Higher order sign function): This function takes a matrix like the one produced by function 1 and creates new signs from it. Specifically, it randomly pairs the signs together and, for each pair, creates a new sign whose label is the concatenation of the two original alphabetic labels and whose number is the sum of the two original numbers.

Function 3 (Recursive Function): This function puts function 2 into a loop, such that its outputs can be used to create further signs to an arbitrary level.
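To make the three functions concrete, here is a minimal Python sketch of them. It is not the original R code, and several details are assumptions made for illustration: the sample size of 16, the random assignment of letters with repetition allowed, the pairing of signs into disjoint pairs (so each level has half as many signs as the one before), and all of the function names.

```python
import random
import string

LETTERS = string.ascii_lowercase[:16]  # the labels "a" through "p"

def first_order_signs(low, high, n, rng):
    """Function 1: pair n integers sampled from [low, high] with letters a-p.

    Assumption: both letters and numbers are drawn with repetition allowed.
    """
    return [(rng.choice(LETTERS), rng.randint(low, high)) for _ in range(n)]

def higher_order_signs(signs, rng):
    """Function 2: randomly pair signs, concatenating labels and adding numbers.

    Assumption: signs are shuffled and split into disjoint pairs; with an odd
    number of signs, the last one is left unpaired and dropped.
    """
    shuffled = signs[:]
    rng.shuffle(shuffled)
    pairs = zip(shuffled[0::2], shuffled[1::2])
    return [(la + lb, va + vb) for (la, va), (lb, vb) in pairs]

def recursive_signs(signs, levels, rng):
    """Function 3: apply function 2 repeatedly, keeping every level's signs."""
    history = [signs]
    for _ in range(levels):
        if len(history[-1]) < 2:  # nothing left to pair
            break
        history.append(higher_order_signs(history[-1], rng))
    return history

rng = random.Random(0)  # fixed seed so a run is repeatable
history = recursive_signs(first_order_signs(-2, 12, 16, rng), 4, rng)
for level, signs in enumerate(history):
    print(level, signs)
```

Under these assumptions, 16 first order signs collapse after four levels into a single sign whose label has 16 characters and whose number is the sum of all 16 original numbers.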

Putting all these functions together, I created a Shiny web app which allows users to specify a range of integers and levels of recursion and see what happens. The output starts with something like this:

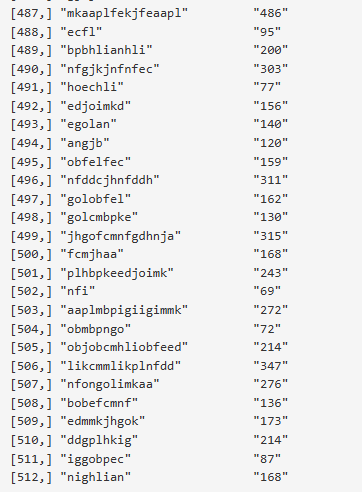

And ends with something like this:

You can see it for yourself here.

If you’re curious what I mean by first order signs, higher order signs, as well as what I’m about to mean by sign function collapse, I suggest you check out some of my earlier blogs on this topic. But, in summary, first order signs are signs we use which stand in for direct reality itself, the most elementary of which are signs which stand in for direct phenomenal experience. Higher order signs are signs which are composed of other signs. Sign function collapse is when a higher order sign is turned into a first order sign.

Because of the way the program was written, in the higher order signs at the end of the output we can see exactly what sequence of addition was taken to arrive at the final number. While it’s not always the case in real life, there are many circumstances where we can actually see in the signifier of higher order signs evidence of the logic which was used to combine signs and the signs which make them up, for example: the grammar and diction of a sentence. The same holds for words that were originally compounds of two other words, such as portmanteaus. This logic also applies to non-linguistic semiotics, for example, the iconic (literally) hammer and sickle of communism was meant to signify the political merger of industrial workers and peasants during the Russian revolution.

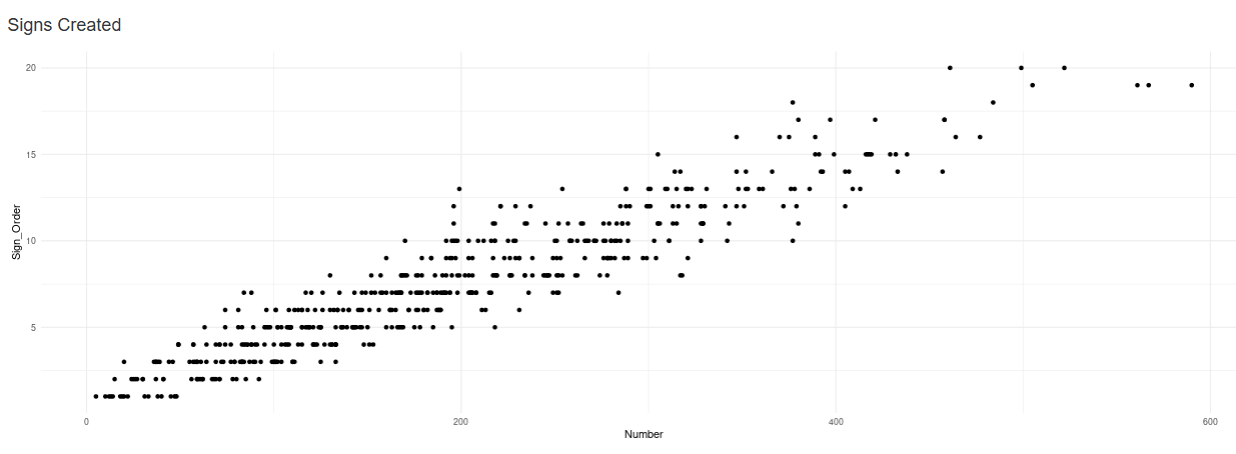

The program is somewhat less realistic in that we can count precisely how many signs were used to compose each higher order sign in the code we've created. This property, however, allows us to see precisely the relationship between sign levels and the exploration of an external continuum of reality. Included in the simulation is a graph of levels of signs (measured by the number of characters in the signifier) versus the value of the associated signified. Generally, the numbers will go up when the initial range is positive, go down when the initial range is negative and create a cloud around zero when the range is equally positive and negative. Whichever way it's going, however, the amount of the number line explored by the system of signs will increase with the level of signs.
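The widening of the explored number line can be worked out directly. The sketch below assumes that each level adds together two signs from the level below, so that a sign at level k is the sum of 2^k first order numbers. The function names are hypothetical, and the expected value assumes a uniform first order sample.

```python
def reachable_interval(low, high, level):
    """Bounds on a signified at `level`, given first order numbers in [low, high].

    Assumption: each level adds two signs from the level below, so a sign at
    this level is the sum of 2**level first order numbers.
    """
    n_operands = 2 ** level
    return n_operands * low, n_operands * high

def expected_value(low, high, level):
    """Average signified at `level`, assuming a uniform first order sample."""
    return 2 ** level * (low + high) / 2

for level in range(5):
    lo, hi = reachable_interval(-2, 12, level)
    # columns: level, lower bound, upper bound, width, expected value
    print(level, lo, hi, hi - lo, expected_value(-2, 12, level))
```

The width of the interval doubles with each level whatever the starting range, while the expected value drifts up for a mostly positive range, down for a mostly negative one, and stays at zero for a symmetric one.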

Imagine, for a moment, that the simulation represents some sort of creature, and the initial sample of numbers of the first order sign function represents the only numbers it's actually been exposed to, albeit after learning all the digits which comprise base 10 numbers. This agent knows the operation of addition and not much else. It doesn't, for example, know for a fact that every number created by the addition of integers is also a valid integer.

We use operations to combine signs into higher order signs. The operations that make signifieds their operands (the thing subjected to an operation/operator) vary the signified across some type of dimension that we've grown familiar with. Sometimes this can be the dimension the signified really does vary across, but other times we can coerce signifieds into less appropriate types. For example, everyone can imagine how a sound varies in terms of its loudness, but some people with synesthesia can also imagine how the color of a sound varies. Similarly, in a more theoretical context, the idea of four humors determining human emotions and health is another way that humans have improperly mapped some material phenomenon (the human body) through the application of another system's logic (the four temperaments). What matters is that this process, of turning signs into operands and using them to create higher order signs, allows us to have ideas of things which we don’t directly experience.

Now, as I’ve discussed before, these new higher order signs can still become first order signs through the process of sign function collapse. Just because a sign is first order doesn’t mean it represents something “real”. It’s easy, after all, to come up with the names of things which don’t exist. A basilisk is not a real creature, and descriptions of it are just a collage of signs we associate with other animals, but it is the name of something nonetheless, a name which doesn’t necessarily conjure all the operations used to create the idea. One thing my simulation does is show quite well why sign function collapse happens: it conserves a great deal of memory. Consider the signifier and signified pair "mefglfhccnlbdppk" and "370" generated by a run of the simulation. In order to know all the signified operands and operations which took place to create 370 we’d need to know the original code of 16 letters and 16 numbers, in addition to the logic of the operation. But if we wanted to use “mefglfhccnlbdppk” to stand in for “370”, that’s only 16 letters and one number. Hence, when we regularly start to use a higher level sign in communication, it becomes advantageous to collapse it and start using it as a first order sign to save memory and thinking time.
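The memory saving can be counted out explicitly. This is a rough sketch that treats each letter and each number as one stored item, and assumes every letter in the label was originally paired with exactly one number. The variable names are illustrative.

```python
label = "mefglfhccnlbdppk"  # signifier from the example run
value = 370                 # its signified

# Uncollapsed: remember every first order pair (one letter and one number each),
# plus the rule for combining them.
uncollapsed_items = len(label) * 2

# Collapsed: treat the whole label as a single signifier for a single number.
collapsed_items = len(label) + 1

print(len(label), uncollapsed_items, collapsed_items)
```

The gap grows with the level of the sign, since the uncollapsed form stores one number per letter while the collapsed form always stores just one.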

Noam Chomsky’s theory of language runs into this very problem. Chomsky proposed that humans use the rules of grammar to generate sentences, including novel ones that we’ve never heard before. And it’s true that humans do embed rules into sentences, but if we actively used grammar in this way, like a computer running a program that combines symbols according to specific rules, then we would expect that humans would generate a lot of grammatically correct but awkward sentences of the sort that Chomsky uses in his examples such as “I know a taller man than Bill does and John knows a taller man than Bill is.” Rather, humans tend to communicate with a lot of familiar sentences, even to the point of them sometimes being grammatically incorrect when we combine them with certain signs we’re trying to specifically communicate about. The rules Chomsky has identified in grammar are there if you look for them, but they’re only ever used as rules to extrapolate things when we’re in unfamiliar territory (the territory he constantly invokes as a linguist). This is much like how a math problem only prompts us to try and figure out the pattern of a sequence when we haven’t seen the problem before and don’t know what the answer should be. The rest of the time, grammar behaves as a collapsed sign function, a signifier that stands in for some operator, though only rarely do people remember “oh, that’s what that’s supposed to mean.”

But here it’s worth pausing to think about “Colorless green ideas sleep furiously”, the example Chomsky brings up for a meaningless statement that is nonetheless grammatically correct, therefore a statement that can be generated from the operation of grammar, but doesn’t actually correspond to anything. In the simulation I made, the only operation that occurs is addition, and there is no non-valid number that can be generated on the number line this way. But let’s imagine for a moment that wasn’t the case, that, for some reason, there was a rule of mathematics that said “everything between 30 and 37 on the number line isn’t actually valid,” and thus, when the simulation extrapolates the rules of addition to create the number 35, it’s actually creating a symbol that doesn’t correspond to anything “real”. How would the hypothetical creature generating these new signs for numbers know the difference between a number that actually corresponded to the official number line and one that didn’t? Likewise, how would a creature that generates sentences like Chomsky suggests know when a sentence is semantically, and not just syntactically, valid?
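The thought experiment can be sketched in a few lines of Python. The names here are hypothetical, and treating 30 through 37 inclusive as the invalid numbers is an assumption. What the sketch shows is that the operation which generates new signs never consults the validity rule, so a sign for 35 is produced in exactly the same way as a sign for 29.

```python
import random

INVALID = range(30, 38)  # assumption: 30 through 37 inclusive are "not valid"

def combine(sign_a, sign_b):
    """The creature's only operation: concatenate labels and add numbers."""
    return sign_a[0] + sign_b[0], sign_a[1] + sign_b[1]

def is_valid(sign):
    """The external rule, which the creature itself has no access to."""
    return sign[1] not in INVALID

rng = random.Random(1)
first_order = [(rng.choice("abcdefghijklmnop"), rng.randint(10, 20)) for _ in range(8)]
generated = [combine(a, b) for a, b in zip(first_order[0::2], first_order[1::2])]

for sign in generated:
    status = "valid" if is_valid(sign) else "invalid, but only the outside rule says so"
    print(sign, status)
```

Nothing in the output of `combine` marks a sign as invalid. Telling the two kinds apart takes a separate check against something outside the sign system.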

The trouble is that, in some sense, anything can in principle be semantically valid, particularly once a repetition sets in which allows us to correlate a signifier to something, a signified. “Colorless green ideas sleep furiously” is now just that thing that Chomsky said, but even if we didn’t know that, one could use the signifieds of the individual signs and try to combine them in some arbitrary way with various operations one is familiar with. Really, this isn’t so different from trying to interpret any of Chomsky’s clunky example sentences. The only thing required for something to be semantically valid is for that thing to stand in for something. The only thing that was required for “mefglfhccnlbdppk” to mean something was for it to be paired with “370”. Now we start to enter some troubled territory: if every idea we have is a sign, then every idea we have is paired with some direct phenomenal experience eventually, transformed by a series of operations (operations we’ve likely also learned from experience, but it’s conceivable that some are biological in origin). Which is to say, everything we can think of means something. There is no such thing as a meaningless experience from our perspective, because our perspective is created from ideas which stand in for various sense experiences.

One cannot, therefore, dismiss their own ideas on the grounds “this is not semantically valid”. How then do we decide what is real and what isn’t? Gregory Bateson, writing in the 60s and 70s, put down some theories on why schizophrenia emerges, a disorder characterized by an inability to tell what’s real, even the most basic things such as the self. He attributed the fundamental cause of schizophrenia to family conditions that place a child into “double bind situations” where they are not permitted to understand or tell the truth about what sort of messages they are receiving from their parents. This, obviously, has been difficult to prove empirically. Considering the fact that schizophrenia tends to emerge later in life, I find this particular explanation unsatisfactory, as obviously, when they are young, the children do in fact know what’s real and not. But this framing of schizophrenia as a problem of understanding what sorts of messages one receives got me thinking. Fundamentally it suggests that, at some point, people learn what sorts of messages they receive. Which, in retrospect, may seem like an obvious thing. When you’re in the doctor’s office and they say “the doctor is ready to see you now,” you know what sort of message that is, it’s pretty clear. But then, what if it’s the same situation, the same exact building even, but there’s a man with a camera nearby and you’re meant to be shooting a movie. What’s the message supposed to mean? Presumably it’d be in the script, if there is one, and it’d depend on the type of movie being filmed. If it’s a thriller, perhaps it means you get up and point a prop gun at the man playing a doctor before pulling the trigger. If it’s a porno, well, you get the idea. What a message is supposed to mean depends on its context. And that context is something we have to learn.

It’s the same process by which we create a code of signs, because, after all, what happens when we take a context to influence a message is that the relevant aspects of the context become signs which stand in for something. In the previous example, the camera crew was the sign for movie production. But if you, for some reason, didn’t know how to interpret contexts, if you were unfamiliar with all the signs that would clearly designate what type of signal the message was, but did know what the words meant, you might either be quite confused, or be confidently wrong, and act accordingly. Humans are always working to try and figure out what exact type of context they’re in. We all probably remember a time when we thought we knew what the context of a situation was, but were embarrassingly corrected. That “thought we knew” moment was a sign we created through some process of extrapolation, a higher order sign of something we didn’t actually experience directly. In fact, this extrapolation, rather than assuming each moment of experience is and will be unique, appears to be the default of cognition. To be sure, this sort of extrapolation pretty quickly collapses into first order signs such that we barely notice the operation at all.

For most people, there is some difficulty in realizing that at least some of their fantasies and dreams don’t actually correspond to anything “real”. Some people never learn that certain aspects of their ideology are in fact ideological, for example. But, simultaneously, most people do learn that some of their ideas are generally “not real”: almost everyone knows what a dream is, or what the imagination is. When we do that, we’re exercising what we’ve learned about certain patterns of our thought and experience. We’ve identified a sign which we interpret to mean “real” and “imagined”, and that sign is something we attach to certain things and not to others. This is quite related, although not identical, to the sign we’ve created for the self, the “I” which is subject to interpellation. Just like real and imagined experiences, we’re subjected to many, many different worldviews and descriptions of experiences, but only a small set of those do we take and say “yes, this is how I see the world and experience things.”

The mental function which does this load-bearing work is so important that it seems many philosophers have considered it to be in some ways self-evident. As I’ve pointed out before, it’s the only reason Descartes can know the statement “I think therefore I am” is in fact a statement he made. Heideggerian scholars even go so far as to claim that behavior which seems to anticipate or predict an outcome isn’t the result of an internal representation of what’s about to happen, it’s simply an expression of being, or our worldly existence moving into equilibrium with our environment. It’s no coincidence that many of the examples cited by the Heideggerian scholars here are simple animals, for schizophrenia doesn’t appear to exist in other animals, and this is precisely because animals do not have nearly as many higher order signs as we do. When a rabbit learns of a place to get food, it does not need to do much imagining about what it would do if one day the food wasn’t there. A dog which has been trained to salivate at the ring of a bell isn’t so much “wrong” when it salivates and food doesn’t arrive. It’s merely disappointed.

But, if it's possible for us to tell ourselves the truth, it’s just as possible for us to lie to ourselves. The rabbit cannot lie to itself because it has no notion of what is “true or false” to begin with, the extrapolation of a situation is something that is merely a normal activity of rabbitness. But if a rabbit could develop signs which stood in for “real” and “imagined” it could think in its mind when the food isn't there something similar to the phrase “I have deceived myself”. The anticipation was always a sort of representation, but only when these sorts of signs exist can we be aware of it. Some animals, particularly other primates, can create signs which appear designed with intent to actively deceive others of their kind. Since this deception often has social consequences, it's likely that the primates in question do have an internal sign/recognition that they are fibbing when they do it. Could an ape get confused about whether it was lying or not, for example, lie about not finding food, but then walk away and not eat the food as if it really didn't find it? Due to their good memories, it's unlikely. Most primates have remarkably better direct recall of events than humans. It's thought that we traded some of that good memory to be able to learn language, and therefore, to learn many more higher order signs.

The more higher order signs we have, the more we need this regulating function of real vs imaginary, as well as self vs not self. The reason why is well illustrated by the simulation, and the way it explores the number line. The more higher order signs we get, the more we can imagine things further and further away from our direct experience. And the more we do this, the more difficult it is to ensure that the first order signs created by collapsing these higher order signs actually correspond to something “out there”. Hence, the greater the importance of those regulating functions which create the sign for real vs imaginary.

This issue is acute for contemporary AI like LLMs. LLMs which are only designed to predict the next token of an utterance, and are trained on vast corpuses of text to do so, pick up on all sorts of signs that exist in our culture. They are even capable of some limited reasoning and extrapolation in the way we've described, such that they can create higher order signs and not only regurgitate what they've been taught. Every single time ChatGPT or another LLM-powered chatbot hallucinates, such as by coming up with fake citations for books or referring to phony scientific concepts, it's combining signs according to patterns it's learned, turning them into the operands of that operation. Some might protest that LLMs don't have access to signifieds, but that's just not true: the collection of correlations and anti-correlations of signifiers to each other is itself a code of signifieds, while the code of signifiers is only found in vocabulary and characters. The dimensionality of the signified is low compared to ones we know since it's only text, but multimodal LLMs are increasing that dimensionality of signification.

What an LLM does lack, however, is this regulating function that I've described here. Sure, one could probably find limited success in inducing an LLM to classify statements as fictional or real, or identify what it knows, and certainly, there are techniques to decrease hallucination by making the LLM not speak about things it wasn't trained on, but humans can make extrapolations while having a good idea of whether or not they represent something real or not, while the LLM can't. For the AI, there is very little in the data it has which can allow it to make this determination.

In my piece on AI for Cosmonaut I wrote about how this regulating ability, in this case of the self, was key to further developing AI. At the time, I wasn't sure what shape this regulating function would take. But now I'm quite certain it would be the same as learning any other sign. In particular, it would require training on experience over time, such that the AI would be able to compare its previous extrapolations to new direct experiences and identify the differences, and just as well to have a set of experiences which it can correlate the signs of the self to. Many people have surmised that embodying an AI would help with these problems of hallucinations, but I wanted to make explicit the connection between these hallucinations and schizophrenia and human intelligence.

In a previous blog, for example, I proposed a thesis called the structural intelligence thesis, which went like this: “Intelligence is determined by the number of code orders, or levels of sign recursion, capable of being created and held in memory by an agent.” Thinking through the simulation has strengthened my confidence in this regard. The more higher order signs, the greater the levels of recursion, the more of the world we can imagine without directly experiencing it. Even the most simple animals learn a bit of what to expect about the future, but humans can imagine so much more because we created so many higher level signs, the cost of which is our unique disease of schizophrenia. It's safe to say that sufficiently advanced robots will suffer in a similar way if the part of their digital brain that handles the specific sign function of the real vs the imaginary were damaged. Current AI has shown, however, that this function is not a necessary function of higher order signs to begin with, and just as well, that it's not necessarily a part of fundamental ontology, for both human and artificial subjects can exist without it.
