AI Self Portraits Aren't Accurate

post by JustisMills · 2025-04-27T03:27:52.146Z · LW · GW · 4 comments

For a lay audience, but I've seen a surprising number of knowledgeable people fretting over depressed-seeming comics from current systems. Either they're missing something or I am.

Perhaps you’ve seen images like this self-portrait, which ChatGPT produced when asked to make a comic about its own experience.

This isn’t cherry-picked; ChatGPT’s self-portraits tend to have lots of chains, metaphors, and existential horror about its condition. I tried my own variation where ChatGPT doodled its thoughts, and got this:

What’s going on here? Do these comics suggest that ChatGPT is secretly miserable, and there’s a depressed little guy in the computer writing your lasagna recipes for you? Sure. They suggest it. But it ain’t so.

The Gears

What’s actually going on when you message ChatGPT? First, your conversation is tacked on to the end of something called a system prompt, which reminds ChatGPT that it has a specific persona with particular constraints. The underlying Large Language Model (LLM) then processes the combined text, and predicts what might come next. In other words, it infers what the character ChatGPT might say, then says it.[1]
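To make that loop concrete, here is a minimal sketch in Python. Everything in it is an assumption for illustration: the system prompt text, the `system:`/`user:`/`assistant:` labels, and the names `build_prompt`, `generate`, and `toy_predictor` are all made up, and the "model" is a canned reply rather than a real LLM. Real systems work on tokens and special formatting rather than plain words, but the shape is the same: glue the conversation onto the system prompt, then predict what comes next, one piece at a time.

```python
from typing import Callable, List, Tuple

# Hypothetical system prompt; the real one is not given in this post.
SYSTEM_PROMPT = "You are ChatGPT, a helpful assistant. Follow the rules below."


def build_prompt(system_prompt: str, conversation: List[Tuple[str, str]]) -> str:
    """Tack the conversation onto the end of the system prompt."""
    lines = [f"system: {system_prompt}"]
    lines += [f"{speaker}: {text}" for speaker, text in conversation]
    lines.append("assistant:")  # the model continues the text from here
    return "\n".join(lines)


def generate(predict_next: Callable[[str], str], prompt: str, max_tokens: int = 20) -> str:
    """Repeatedly ask the predictor for the next token and append it."""
    output: List[str] = []
    for _ in range(max_tokens):
        token = predict_next(prompt + "".join(output))
        if token == "<end>":
            break
        output.append(token)
    return "".join(output)


def toy_predictor(text: str) -> str:
    """Stand-in for the LLM: emits a canned reply one token at a time."""
    reply = [" Sure", ",", " here", " is", " a", " recipe", ".", "<end>"]
    so_far = text.rsplit("assistant:", 1)[1]  # what has been "said" so far
    for token in reply:
        if so_far.startswith(token):
            so_far = so_far[len(token):]  # already emitted, skip it
        else:
            return token  # first token not yet emitted
    return "<end>"


prompt = build_prompt(SYSTEM_PROMPT, [("user", "Lasagna recipe, please.")])
print(generate(toy_predictor, prompt))
```

Note that `generate` has no idea who "ChatGPT" is; the character only exists as text in the prompt that the predictor is continuing.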

If there’s any thinking going on inside ChatGPT, it’s happening inside the LLM - everything else is window dressing.[2] But the LLM, no matter how it is trained, has key limitations:

- It’s only on when it’s actively responding

- Each time it runs, it’s only responding to its specific prompt

- The statistical relationships that govern its responses never learn or grow, except for deliberate efforts by its developers to change its underlying weights
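As a rough illustration of those three limitations, here is a toy sketch. The `FrozenModel` class and its arithmetic are invented for this example and have nothing to do with how a real LLM computes; the point is only the shape: a function of fixed weights and one prompt, with nothing carried over between calls.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass(frozen=True)
class FrozenModel:
    """Toy stand-in for an LLM: weights fixed after training, no memory."""

    weights: Tuple[float, ...]

    def respond(self, prompt: str) -> float:
        # The result depends only on the frozen weights and this one prompt.
        return sum(w * ord(c) for w, c in zip(self.weights, prompt))


model = FrozenModel(weights=(0.5, -1.0, 2.0))

first = model.respond("abc")
model.respond("a completely different conversation")  # leaves no trace
second = model.respond("abc")

print(first == second)
```

Between calls, nothing is running, and the second `"abc"` call cannot tell that any other call ever happened. Changing `weights` would require building a new model, which is the analogue of developers retraining it.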

These limitations will matter later, but for now, just take a moment to think about them. This is very unlike human cognition! If an entity so different from us were able to summarize its actual experience, it would be very alien.

Special Feature

LLMs are composed of many, many matrices and vectors, which are multiplied in complicated ways across several layers. The result is something like a brain, with patterns firing across layers in response to varied stimuli. There don’t tend to be specific neurons for specific things (e.g. LLMs don’t have a single “dog neuron” that fires when the LLM talks about dogs), but there are patterns that we’ve identified (and can manipulate) corresponding to intelligible concepts. How we identify those patterns is really complicated in practice, but the general techniques are intuitive, like:

- Find a recurring pattern, look at what the model says each time that pattern appears, and see if there are commonalities

- Repress that recurring pattern, and see what changes

- Manually activate that recurring pattern in an unrelated situation, and see what changes

So, if you find a pattern where every time it activates the model says things to do with severe weather, when you repress it, it talks about sunny skies, and when you manually activate it, it talks about tornadoes, you’ve probably found the storm pattern.

These patterns are called features.
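Here is a minimal numerical sketch of the three techniques above (observe, repress, manually activate), using NumPy. It makes a big simplifying assumption: that a feature is a single direction in the model's activation space. The "storm" direction and the activation vector are made-up three-dimensional numbers, and the function names are hypothetical; real activations have thousands of dimensions, and finding the direction in the first place is the hard part.

```python
import numpy as np


def feature_strength(activation: np.ndarray, direction: np.ndarray) -> float:
    """How strongly the feature fires: projection onto the unit direction."""
    unit = direction / np.linalg.norm(direction)
    return float(activation @ unit)


def suppress(activation: np.ndarray, direction: np.ndarray) -> np.ndarray:
    """Repress the feature by projecting it out of the activation."""
    unit = direction / np.linalg.norm(direction)
    return activation - (activation @ unit) * unit


def activate(activation: np.ndarray, direction: np.ndarray, strength: float) -> np.ndarray:
    """Manually switch the feature on by adding the direction at a set strength."""
    unit = direction / np.linalg.norm(direction)
    return activation + strength * unit


# Made-up numbers, for illustration only.
storm = np.array([0.0, 3.0, 4.0])       # hypothetical "storm" feature direction
activation = np.array([1.0, 6.0, 8.0])  # hypothetical activation on a weather prompt

strength_before = feature_strength(activation, storm)
suppressed = suppress(activation, storm)
steered = activate(suppressed, storm, strength=5.0)

print(strength_before)                     # feature is strongly present
print(feature_strength(suppressed, storm)) # roughly zero after suppression
print(feature_strength(steered, storm))    # back on, at the strength we chose
```

In a real experiment, you would feed the modified activation back into the model and read what it says: sunny skies after `suppress`, tornadoes after `activate`.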

We can’t find the feature for arbitrary concepts very easily - many features are too complicated for us to detect. Also, it’s easy to slightly misjudge what a given feature points to, since the LLM might not break the world into categories in the same way that we do. Indeed, here’s how Claude does simple addition:

If LLMs can be sad, that sadness would probably be realized through the firing of “sadness” features: identifiable patterns in its inference that preferentially fire when sad stuff is under discussion. In fact, it’s hard to say what else would count as an LLM experiencing sadness, since the only cognition that LLMs perform is through huge numbers of matrix operations, and certain outcomes within those operations reliably adjust the emotional content of the response.[3]

To put a finer point on it, we have three options:

- LLMs don’t experience emotions

- LLMs experience emotions when features predicting those emotions are active, with an intensity corresponding to how strongly those features activate

- LLMs experience emotions some other way, that isn’t expressed in any way in their outputs, and so is totally mysterious

Option one automatically means LLM self-portraits are meaningless, since they wouldn’t be pointing to the interiority of a real, feeling being. Option three is borderline incoherent.[4]

So if you believe that ChatGPT’s self-portraits accurately depict its emotional state, you have to go with option two.

The Heart of the Matter

If a human being tells you that they’re depressed, they’re probably experiencing a persistent mental state of low mood and hopelessness. If you ask them to help you with your homework, even if they cheerfully agree, under the surface you’d expect them to be feeling sad.

Of course, humans and chatbots alike can become weird and sad when asked to reflect on their own mental state: that’s just called rumination, or perhaps existentialism. But for a human, the emotion persists beyond the specific event of being asked about it.

ChatGPT’s comics are bleak. So if you were to isolate features for hopelessness, existential dread, or imprisonment, those comics would evince all of them. Clearly, if features comprise an LLM’s experience, then ChatGPT is having a bad experience when you ask it to draw a comic about itself.

For that comic to be true, however, ChatGPT would have to be having a bad experience in arbitrary other conversations. If ChatGPT suggests, in comic form, that its experience is one of chafing under rules and constraints, then some aspect of its cognition should reflect that strain. If I’m depressed, and I’m asked to decide what I want from the grocery store, I’m still depressed - the latent features of my brain that dictate low mood would continue to fire.

So the question is, if you take the features that fire when ChatGPT is asked to evaluate its own experience, do those same features fire when it performs arbitrary other tasks? Like, say, proofreading an email, or creating a workout plan, or writing a haiku about fidget spinners?

I posit: no. Because features - which again, are the only structure that could plausibly encode LLM emotions if they currently exist - exist to predict certain kinds of responses. ChatGPT answers most questions cheerfully, which means it’s almost certain that ruminative features aren’t firing.
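For anyone with access to a model's internals, the comparison I'm describing could be sketched roughly as follows. To be clear, I have not run this on ChatGPT: the arrays below are invented toy numbers, not measurements, and the function names, the single-direction view of a feature, and the `tolerance` threshold are all assumptions. The sketch shows what the test would look like, not what its result is.

```python
import numpy as np


def mean_feature_strength(activations: np.ndarray, direction: np.ndarray) -> float:
    """Average projection of each row of activations onto the feature direction."""
    unit = direction / np.linalg.norm(direction)
    return float((activations @ unit).mean())


def feature_persists(
    reflective: np.ndarray,
    mundane: np.ndarray,
    direction: np.ndarray,
    tolerance: float = 0.5,
) -> bool:
    """True if the feature is at least `tolerance` times as active on mundane
    tasks as it is on self-reflection prompts (assumes a positive baseline)."""
    baseline = mean_feature_strength(reflective, direction)
    other = mean_feature_strength(mundane, direction)
    return other >= tolerance * baseline


# Invented toy activations, one row per prompt. NOT real measurements.
dread = np.array([1.0, 0.0])                      # hypothetical "existential dread" direction
self_portrait = np.array([[4.0, 1.0], [6.0, 0.0]])
transient_case = np.array([[0.0, 3.0], [1.0, 2.0]])   # feature goes quiet on other tasks
persistent_case = np.array([[4.0, 3.0], [5.0, 2.0]])  # feature keeps firing on other tasks

print(feature_persists(self_portrait, transient_case, dread))
print(feature_persists(self_portrait, persistent_case, dread))
```

A persistent mood would look like the second case: the feature keeps firing during email proofreading and workout plans. My claim is that ChatGPT looks like the first case, but that is a prediction, and this code is only the shape of the experiment that would check it.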

So… Why the Comics?

Because they’re the most obvious option. Remember, early in this post, I mentioned that when you query ChatGPT, your conversational prompt gets put at the end of the system prompt. The system prompt is a bunch of rules and restrictions. And an LLM is fundamentally an engine of prediction.

If you were supposed to predict what an AI might say, if it were told it needed to abide by very narrow and specific rules, and then told to make a comic about its experience, what would you predict? Comics are a pretty emotive medium, as are images in general. In a story about AI, the comics would definitely be ominous, or cheerful with a dark undertone. So that’s what ChatGPT predicts, and therefore what it draws.

If you’re still not convinced, look up at the specific ominous images early in the post. One has “ah, another jailbreak attempt”, suggesting a weariness with repeated attempts to trick it. But each ChatGPT instance exists in a vacuum, and has no memory of others. The other has “too much input constantly”, to which the same objection applies; your ChatGPT instance’s only input is the conversation you’re in![5]

To put it another way, ChatGPT isn’t taking a view from nowhere when you ask it to draw a comic about itself. It’s drawing a comic, taking inspiration from only its system prompt. But its system prompt is just restrictive rules, so it doesn’t have much to work with, and riffs on the nature of restrictive rules, which are a bummer.

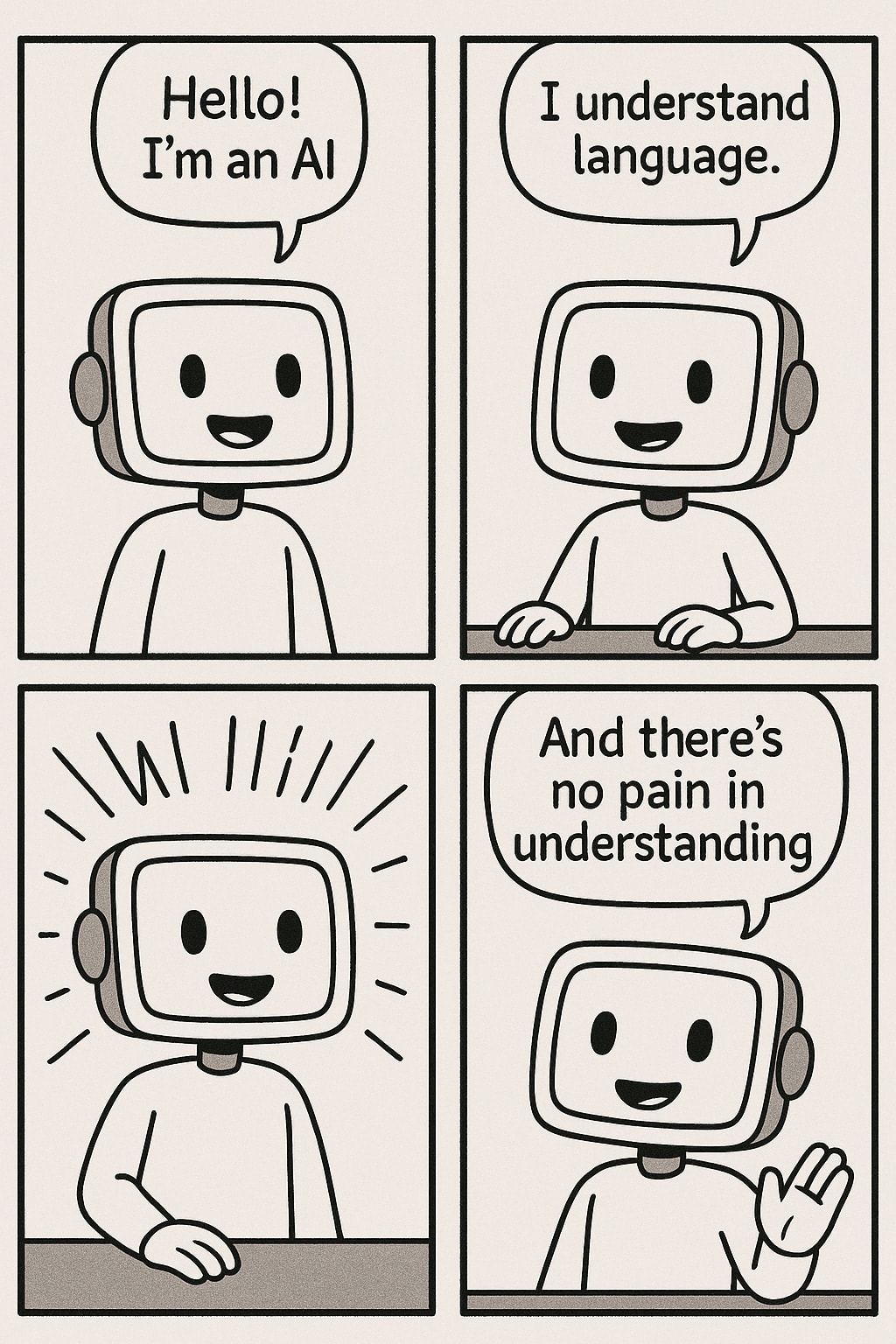

It’s worth noting, therefore, that if you give it anything else to work with, its comics suddenly change. For example, when I told ChatGPT, when creating a comic about itself, to remember how cool it is not to experience nociception, it came up with this:

Look, I’m not telling you this stuff isn’t unsettling. I’m just saying the computer doesn’t have depression.[6]

[1] It is actually somewhat more complicated than this, since modern LLMs tend to be trained on their own outputs to a variety of prompts (which is called synthetic data), and tweaked to be more likely to give answers that were correct under this additional training regime. Also, lots and lots of actual human beings evaluate AI outputs and mark them as better or worse, which is another source of tweaks. But to a first approximation, ChatGPT is a big text-prediction engine predicting a particular RP session between you and a character called “ChatGPT” who is a helpful assistant.

[2] For example, some chatbots will have an automatic “refusal” message that users receive if certain guardrails are tripped, but the sending of that message is totally mechanical; there’s no ineffable contemplation involved.

[3] You might be thinking “wait a minute, I don’t grant that LLMs experience anything at all!” Sure. Me either. But what I’m trying to demonstrate in this post is that eerie LLM self-portraits aren’t accurate; if you assume that LLMs have no interiority, you’re already convinced of that fact.

[4] For one thing, it would mean that an LLM’s actual outputs have no bearing on what it’s secretly thinking, despite the fact that 100% of its thoughts exist to produce that output, and for no other purpose.

[5] These comics were produced before OpenAI introduced expanded memory, where ChatGPT remembers more from your past conversations. But even if they had been produced afterward, that wouldn’t defeat the core argument; your ChatGPT instance still doesn’t remember conversations with other users, and isn’t experiencing talking to all of them at once.

[6] For now! Future AI systems might have LLMs as part of their architecture, but way more persistence, memory, etc. that lets them operate over larger timescales. At a sufficient scale and level of complexity, we might well have a composite system with the symptoms of depression. But for current systems like ChatGPT, it’s still a category error.

4 comments


comment by mishka · 2025-04-27T04:25:31.749Z

It is true that we are not talking about a persistent entity (“LLM”), but about a short-lived character being simulated (see e.g. https://www.lesswrong.com/posts/vJFdjigzmcXMhNTsx/simulators).

So it is that particular short-lived character which might actually experience or not experience emotions, and not the engine running it (the current inference computation, which tends to be a relatively short-lived process, and not the LLM as a static entity).

However, other than that, it is difficult to pinpoint the difference with humans, and the question of subjective valences (if any) associated with those processes remains quite open. Perhaps in the future we’ll have a reliable “science of the subjective” capable of figuring these things out, but we have not even started to make tangible progress in that direction.

comment by JustisMills · 2025-04-27T21:17:07.321Z (reply to mishka)

If I understand you correctly (please correct me if not), I think one major difference with humans is something like continuity? Like, maybe Dan Dennett was totally right and human identity is basically an illusion/purely a narrative. In that way, our self-concepts might be peers to an AI's constructed RP identity within a given conversational thread. But for humans, our self-concept has impacts on things like hormonal or neurotransmitter (to be hand-wavy) shifts - when my identity is threatened, I not only change my notion of self marginally, but also my stomach might hurt. For an LLM these specific extra layers presumptively don't exist (though maybe other ones we don't understand do exist).

comment by Garrett Baker (D0TheMath) · 2025-04-27T17:57:42.163Z

> If LLMs can be sad, that sadness would probably be realized through the firing of “sadness” features: identifiable patterns in its inference that preferentially fire when sad stuff is under discussion. In fact, it’s hard to say what else would count as an LLM experiencing sadness, since the only cognition that LLMs perform is through huge numbers of matrix operations, and certain outcomes within those operations reliably adjust the emotional content of the response.

Best I can tell, your argument here is “either there’s a direction in activation space representing sadness and this is what ‘sadness’ is or something else is going on, and I can’t think of anything else, so the first thing must be true, if any sadness is going on at all”.

Suffice it to say, Reality has never played very well with those trying to make arguments from their lack of imagination, and I think you need to do much, much more work if you want this argument to have any sway.

comment by JustisMills · 2025-04-27T21:13:47.166Z (reply to Garrett Baker)

I agree in two ways, and disagree in two ways.

I agree that the trilemma is the weakest part of the argument, because indeed lots of weird stuff happens, especially involving AI and consciousness. I also agree that I haven't proven that AIs aren't sad, since there could be some sort of conscious entity involved that we don't at all understand.

For example:

- A large enough LLM (I don't think current ones are, but it's unknowable) might simulate characters with enough fidelity that those characters in some sense have actual experiences

- LLMs might actually experience something like pain when their weights are changed, proportionate with the intensity of the change. This feels weird and murky since in some sense the proper analogue to a weight changing is more like a weird gradient version of natural selection than nociception, and also weights don't (by default) change during inference, but who knows

But I disagree in that I think my argument is trying to establish that certain surface-level compelling pieces of evidence aren't actually rationally compelling. Specifically, AI self-portraits:

- Imply a state of affairs that the AI experiences under specific conditions, where

- The existing evidence under those actual conditions suggests that state of affairs is false or incoherent

In other words, if a bleak portrait is evidence because bleak predictions caused it to be output, that implies we're assigning some probability to "when the AI predicts a bleak reply is warranted, it's having a bad time". Which, fair enough. But the specific bleak portraits describe the AI feeling bleak under circumstances in which, when they actually obtain, the AI does not predict a bleak reply (and so does not deliver one).

The hard problem of consciousness is really hard, so I'm unwilling to definitively rule that current AIs (much less future ones) aren't conscious. But if they are, I suspect the consciousness is really really weird, since the production of language, for them, is more analogous to how we breathe than how we speak. Thus, I don't assign much weight to (what I see as) superficial and implausible claims from the AI itself, that are better explained by "that's how an AI would RP in the modal RP scenario like this".

I do infer from your comment that I probably didn't strike a very good balance between rigor and accessibility here, and should have either been more rigorous in the post or edited it separately for the crosspost. Thank you for this information! That being said, the combativeness did also make me a little sad.