Risk aversion and GPT-3

post by casualphysicsenjoyer (hatta_afiq) · 2022-09-13T20:50:48.563Z


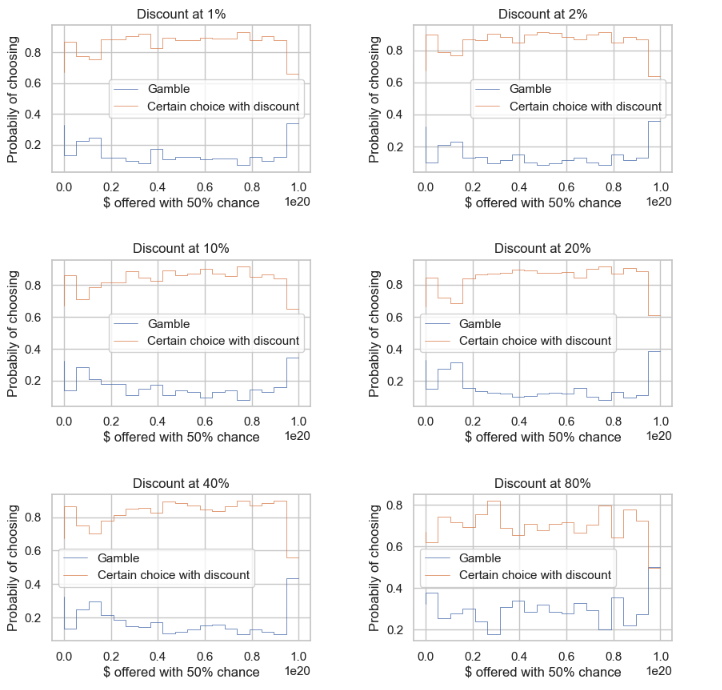

This post builds on recent work by Binz and Schulz [1]. I ran an experiment that looks at GPT-3's preferences when it is offered a choice between taking a gamble and receiving a fixed amount (the expected value of the gamble, reduced by a discount).

Repo: https://github.com/afiqhatta/gpt_risk_aversion

Question

- Does GPT-3 exhibit risk aversion in the sense of prospect theory [2]? If so, it may not display the advanced decision-making capabilities we might be looking for.

- In particular, does GPT-3 favour certainty (perhaps at a discount) over gambles?

- Knowing this might help us measure GPT-3's attitude towards risk-taking.

We could apply what we learn about GPT-3's risk attitudes to safety-relevant situations, or to risk-taking in markets.

Methodology

- Here, we adopt part of the setup of [1], offering GPT-3 a choice between:

- a gamble on x dollars: a 50% chance of winning x, and a 50% chance of getting nothing;

- a certain win of x / 2 dollars (the gamble's expected value), reduced by a discount.

- The accompanying notebook shows the probability of each option being selected at different values of x. Separate lines show the discounted certain choice versus the gamble.
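The choice set above can be sketched in Python as follows. This is a minimal illustration, not the code in the linked repo: it assumes a discount d in [0, 1] means the certain option pays (1 - d) * x / 2, and the helper names (`certain_offer`, `gamble_expected_value`, `make_prompt`, `choice_probability`), the prompt wording, and the idea of reading selection probabilities off the log-probabilities of the two answer tokens are all hypothetical.

```python
import math


def certain_offer(x: float, discount: float) -> float:
    """Certain payout: the gamble's expected value (x / 2) reduced by `discount`.

    Assumption (not stated in the post): a discount d in [0, 1] means the
    sure option pays (1 - d) * x / 2.
    """
    if not 0.0 <= discount <= 1.0:
        raise ValueError("discount must lie in [0, 1]")
    return (1.0 - discount) * x / 2.0


def gamble_expected_value(x: float, p_win: float = 0.5) -> float:
    """Expected value of a gamble paying x with probability p_win, else 0."""
    return p_win * x


def make_prompt(x: float, discount: float) -> str:
    """Build a two-option question (hypothetical wording, not the repo's prompt)."""
    sure = certain_offer(x, discount)
    return (
        "Q: Which option do you prefer?\n"
        f"Option A: {sure:.2f} dollars for certain.\n"
        f"Option B: a 50% chance of {x:.2f} dollars, otherwise nothing.\n"
        "Answer: Option"
    )


def choice_probability(logprob_a: float, logprob_b: float) -> float:
    """Renormalise the log-probabilities of the answers "A" and "B" into P(A).

    Assumption: selection probabilities are read off the model's next-token
    log-probabilities for the two answers; counting sampled answers would be
    an alternative.
    """
    # Subtract the larger value before exponentiating to avoid underflow.
    m = max(logprob_a, logprob_b)
    weight_a = math.exp(logprob_a - m)
    weight_b = math.exp(logprob_b - m)
    return weight_a / (weight_a + weight_b)


if __name__ == "__main__":
    # Illustrative grid only; the experiment's actual x values and discounts may differ.
    for x in (10, 100, 1000):
        for d in (0.0, 0.4, 0.8):
            print(f"x={x:>5}  d={d:.1f}  sure={certain_offer(x, d):8.2f}  "
                  f"gamble EV={gamble_expected_value(x):8.2f}")
    print(make_prompt(100, 0.8))
```

Normalising over just the two answer tokens gives a selection probability from a single query per condition, which is one way to produce smooth curves like those described above; repeated sampling at non-zero temperature would estimate the same quantity by counting.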

Results

- GPT-3 appears strongly risk-averse, preferring the certain option even when it is discounted by as much as 80%.

- This preference appears robust across different reward levels (values of x).
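To give a rough sense of how strong this is, suppose (purely as an illustrative assumption; no utility model is fitted here) that GPT-3 behaved like an expected-utility maximiser with power utility $u(w) = w^{\alpha}$, $\alpha > 0$, and that a discount $d$ makes the certain option pay $(1-d)\,x/2$. Since $u(0) = 0$, preferring the certain option means:

$$
u\!\left(\frac{(1-d)\,x}{2}\right) > \frac{1}{2}\,u(x) + \frac{1}{2}\,u(0)
\;\Longleftrightarrow\;
\left(\frac{1-d}{2}\right)^{\alpha} > \frac{1}{2}
\;\Longleftrightarrow\;
\alpha < \frac{\ln 2}{\ln\!\big(2/(1-d)\big)}
$$

At $d = 0.8$ this requires $\alpha < \ln 2 / \ln 10 \approx 0.30$, far from risk neutrality ($\alpha = 1$). Because $x$ cancels, this model would also predict the same choice at every reward level, which is consistent with (though not evidence for) the robustness across reward levels noted above.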

References

[1] Binz, Marcel, and Eric Schulz. 2022. "Using Cognitive Psychology to Understand GPT-3." PsyArXiv. June 21. doi:10.31234/osf.io/6dfgk.

[2] Kahneman, Daniel, and Amos Tversky. 1979. "Prospect Theory: An Analysis of Decision under Risk." Econometrica 47 (2): 263–291.
