Addressing doubts of AI progress: Why GPT-5 is not late, and why data scarcity isn't a fundamental limiter near term.

post by LDJ (luigi-d) · 2025-01-17T18:53:06.343Z · LW · GW · 0 comments

Addressing misconceptions about the big picture of AI progress. Why GPT-5 isn't late, why synthetic data is viable, and why the next 18 months of progress is likely to be greater than the last.

This LW post mainly summarizes the first blog post I've ever written (published earlier today), with some extra details for the LessWrong audience. It is also my first LessWrong post. The blog post is roughly 2,500 words (~15 min reading time), and I've been working on it for the past few months; I think some of you here may appreciate the points I bring up. I've simplified some points in the blog to keep it digestible for a broader swath of readers, but I plan to go more in-depth on things like multimodal data limits, the limitations and benefits of synthetic data, training techniques, and novel interface paradigms in future blog posts.

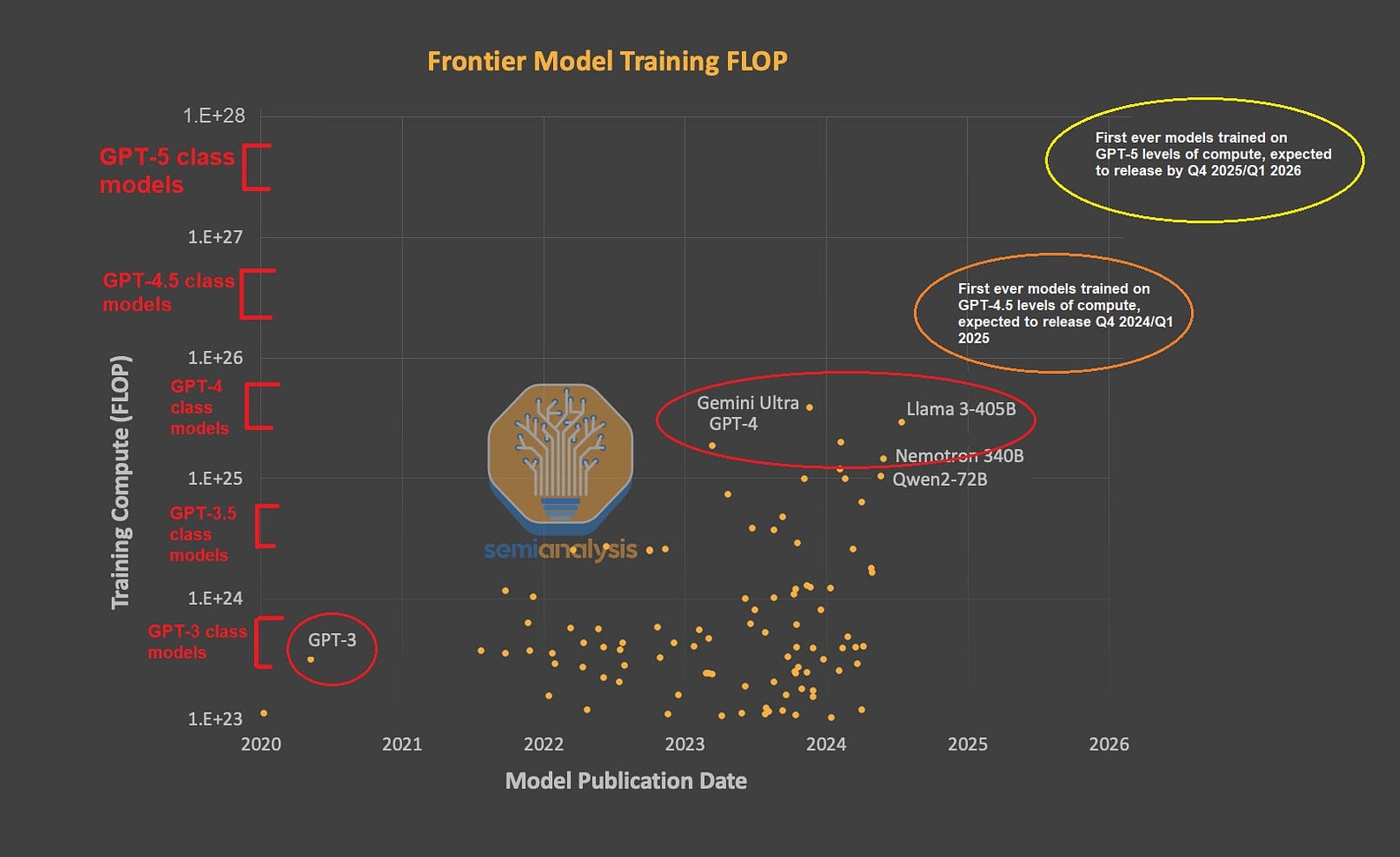

Any feedback or thoughts would be great. Below I post the overall TLDR and my main future predictions from the blog, and the relevant chart I made for some key points will be posted at the bottom:

Blog post TLDR:

- Compute Scaling Expectations: The first GPT-4.5-scale clusters only started training models in the past few months, and the first GPT-5-scale clusters are expected to be built later in 2025.

- "GPT-5 is Late/Overdue": Even naively extrapolating from the gap between the GPT-3 and GPT-4 release dates would suggest a December 2025 release for GPT-5, not a much earlier timeframe as some imply.

- "The Data Wall": While web text is starting to reach practical limits, multimodal data and especially synthetic data are paving the way for continued progress.

- "The Role of Synthetic Data": Data discrimination pipelines and more advanced training techniques for synthetic data help avoid issues like model collapse. Synthetic data is already providing exceptional benefits in coding and math domains, likely even beyond what extra web text data alone would have provided at the same compute scales.

- Interface Paradigms: Perceived leaps in model capability aren't just a result of compute scale, architecture, or data; they are also a result of the actual interface through which you use the model. If GPT-4 were limited to the same interface paradigm as the original GPT-3 or GPT-2, we would end up with just a really good story completion system for creative use cases.

- Conclusion: As we go through 2025, I believe the convergence of larger-scale compute, synthetic data, new training methods, and new interface paradigms will change many people's perspective on AI progress.
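The naive extrapolation in the "GPT-5 is Late/Overdue" point can be checked with a few lines of date arithmetic. This is only a sketch under stated assumptions: the post does not give the release dates it uses, so the code assumes GPT-3's release is dated to its June 11, 2020 API launch and GPT-4's to its March 14, 2023 release, and the helper names are made up for illustration.

```python
from datetime import date

# Assumed release dates (not stated in the post):
GPT3_RELEASE = date(2020, 6, 11)  # GPT-3 API launch
GPT4_RELEASE = date(2023, 3, 14)  # GPT-4 release


def months_between(start: date, end: date) -> int:
    """Whole calendar months from start's month to end's month."""
    return (end.year - start.year) * 12 + (end.month - start.month)


def add_months(d: date, months: int) -> date:
    """Return the first day of the month that is `months` after d's month."""
    total = d.year * 12 + (d.month - 1) + months
    year, month_index = divmod(total, 12)
    return date(year, month_index + 1, 1)


# Gap between GPT-3 and GPT-4, then the same gap applied after GPT-4.
gap_months = months_between(GPT3_RELEASE, GPT4_RELEASE)
naive_gpt5 = add_months(GPT4_RELEASE, gap_months)

print(f"GPT-3 to GPT-4 gap: {gap_months} months")
print(f"Naive GPT-5 estimate: {naive_gpt5:%B %Y}")
```

Under these assumed dates the gap is 33 months, which lands the naive estimate in December 2025. Dating GPT-3 to its May 2020 paper instead shifts the estimate by one month, to January 2026.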

Predictions:

On December 2nd, 2024, after ruminating on this a lot, I told a friend online that I believe the next 18 months of public progress will likely be greater than the last 18 months (I have ~90% confidence in this prediction). The way "progress" is measured here is mainly that a group chat of around 10 of our friends will need to agree, in a poll at a later date, on whether the progress of that later time period was greater than that of the 18 months prior to it. How "progress" itself is defined is left to the interpretation of each individual poll taker.

Later, on December 13th, I made another, more near-term prediction to a group of friends on Discord (with ~75% confidence): "In the next 4.5 months (December 13, 2024, to April 29, 2025), there will be more public frontier AI progress in both intelligence and useful capabilities than the past 18 months combined (June 13, 2023, to December 13, 2024)." I also provided some measurable ways to determine what the "progress" was at a later date: criteria similar to those mentioned above for the first prediction, plus some additional specific benchmark criteria that I predict will be achieved.

A few days after my second prediction, OpenAI announced their o3 model. I've since grown more confident in this second prediction, not because of o3 alone, but because of other models and capabilities I expect will be announced too. My blog post isn't intended to give all the reasons for my near-term optimism; I plan to write more in-depth about various things later in their own dedicated blog posts. This blog post is meant to at least address common sources of AI doubt that I find are often ill-founded but end up heavily influencing people's priors.