Confusing the metric for the meaning: Perhaps correlated attributes are "natural"

post by NickyP (Nicky) · 2024-07-23T12:43:18.681Z · LW · GW · 3 commentsContents

Introduction 1 - Age and Associated Attributes in Children 2 - Price and Quality of Goods 3 - Size and Weight of Objects Implications for Information Encoding Rethinking PCA and Dimensional Reduction None 3 comments

Epistemic status: possibly trivial, but I hadn't heard it before.

TL;DR: What I thought of as a "flaw" in PCA—its inability to isolate pure metrics—might actually be a feature that aligns with our cognitive processes. We often think in terms of composite concepts (e.g., "Age + correlated attributes") rather than pure metrics, and this composite thinking might be more natural and efficient

Introduction

I recently found myself describing Principal Component Analysis (PCA) and pondering its potential drawbacks. However, upon further reflection, I'm reconsidering whether what I initially viewed as a limitation might actually be a feature. This led me to think about how our minds — and, potentially, language models — might naturally encode information using correlated attributes.

An important aspect of this idea is the potential conflation between the metric we use to measure something and the actual concept we're thinking about. For instance, when we think about a child's growth, we might not be consciously separating the concept of "age" from its various correlated attributes like height, cognitive development, or physical capabilities. Instead, we might be thinking in terms of a single, composite dimension that encompasses all these related aspects.

After looking at active inference a while ago, it seems like in general, a lot of human heuristics and biases seem like they are there to encode real-world relationships that exist in the world in a more efficient way, which are then strained in out-of-distribution experimental settings to seem "irrational".

I think the easiest way to explain is with a couple of examples:

1 - Age and Associated Attributes in Children

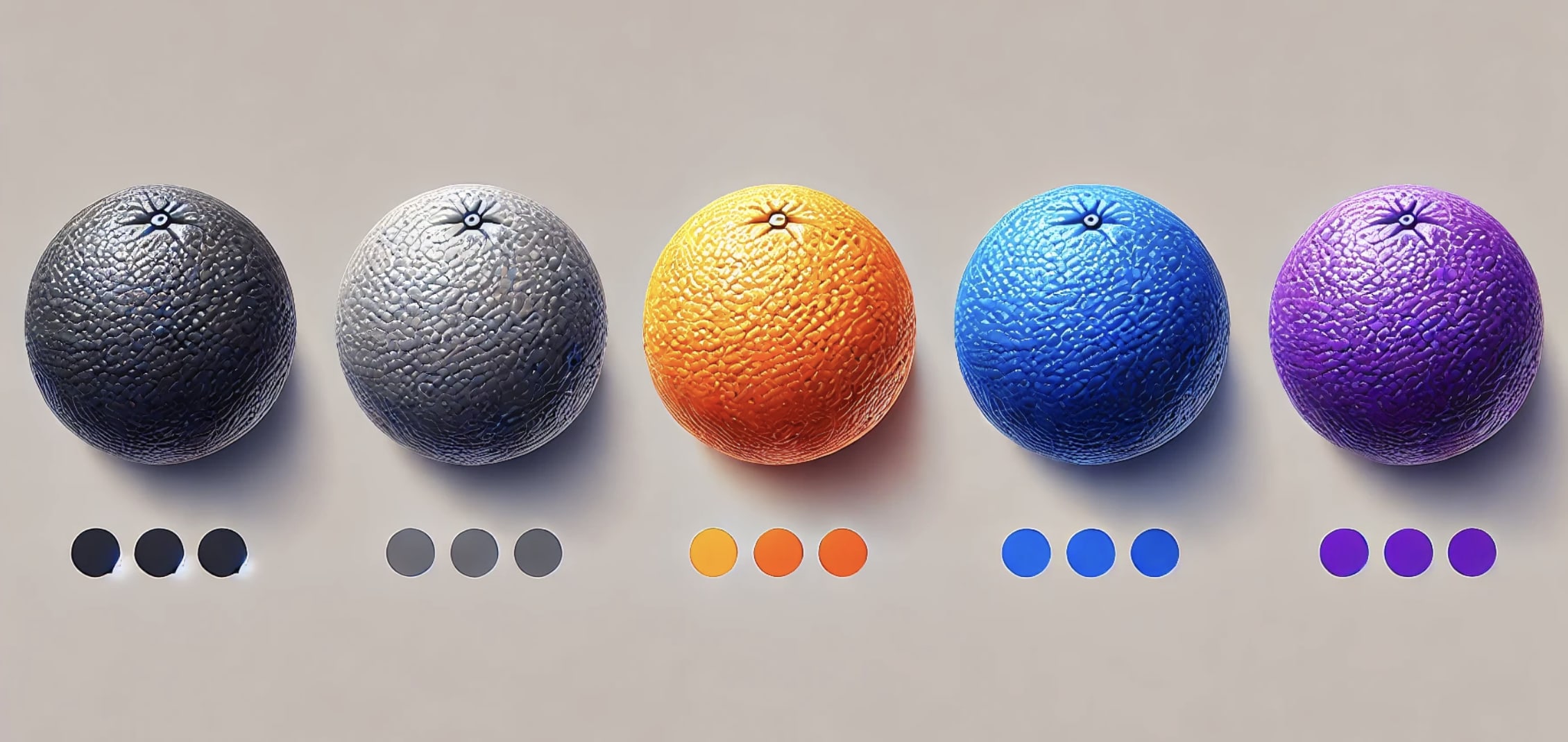

Suppose we plotted two attributes: Age (in years) vs Height (in cm) in children. These are highly correlated, so if we perform Principal Component Analysis, we will find there are two main components. These will not correspond to orthogonal Age and Height components, since they are quite correlated. Instead, we will find an "Age + Height" direction, and a "Height relative to what is standard for that age" direction.

While once can think of this as a "failure" of PCA to find the "true things we are measuring", I think this is perhaps not the correct way to think about it.

For example, if I told you to imagine a 10-year-old, you would probably imagine them to be of height ~140 ± 5cm. And if I told you they were 2.0m tall or 0.5m tall, you would be very surprised. On the other hand, one often hears phrases like "about the height of a 10-year-old".

That is, when we think about a child's development, we don't typically separate each attribute into distinct vectors like "age," "height," "voice pitch," and so on. Instead, we might encode a single "age + correlated attributes" vector, with some adjustments for individual variations.

This approach is likely more efficient than encoding each attribute separately. It captures the strong correlations that exist in typical development, while allowing for deviations when necessary.

When one talks about age, one can define it as:

- "number of years of existence" (independent of anything else)

but when people talk about "age" in everyday life, the definition is more akin to:

- "years of existence, and all the attributes correlated to that".

2 - Price and Quality of Goods

Our tendency to associate price with quality and desirability might not be a bias, but an efficient encoding of real-world patterns. A single "value" dimension that combines price, quality, and desirability could capture the most relevant information for everyday decision-making, with additional dimensions only needed for finer distinctions.

That is, "cheap" can be conceptualised in two separate ways:

- Having a low price (only)

- Having a low price, and this, other attributes that are correlated with price, such as low quality and status.

Then deviations from the expected correlations can be added explicitly, like "cheap but high quality".

3 - Size and Weight of Objects

We often think of size and weight as closely related. Encoding a primary "size + weight" dimension, with density as a secondary factor, might be more efficient than always considering these attributes separately.

For example, if one is handed a tungsten cube, even when being explicitly told "wow this is way heavier than one would expect", one is often still surprised at how heavy it is. That is, people often think of "size" in two separate ways":

If something is "small", it can be:

- of small volume (only)

- of small volume, and correlated qualities (like little weight).

Then deviations from the expected correlation can be added separately, like "small and dense", or "large but not heavy (given the size)"

Implications for Information Encoding

This perspective suggests that encoding information along correlated dimensions might be more natural and efficient than always separating attributes. For most purposes, a single vector capturing correlated attributes, plus some adjustment factors, could suffice.

This approach could be particularly relevant for understanding how language models and other AI systems process information. Rather than maintaining separate representations for each possible attribute, these systems might naturally develop more efficient, correlated representations.

This may mean that if we are thinking about something or doing interpretability on AI models, we occasionally find "directions" that are, perhaps, not exactly related to specific metrics we have in mind, but rather some "correlated" direction, and in some cases could be considered "biased" (especially when there might be spurious correlations).

Rethinking PCA and Dimensional Reduction

Initially, I viewed PCA's tendency to find correlated directions as a potential limitation. However, I'm now considering whether this might actually be a feature that aligns with how our minds naturally process information.

In the past, I might have been "disappointed" that PCA couldn't isolate pure metrics like "Age" and "Height". But I've come to realise that I've been conflating Age (the metric) with Age (plus its correlated attributes) in my own thinking. Upon reflection, I believe I more often think in terms of these composite concepts rather than isolated metrics, and this is likely true in many scenarios.

If correlated attributes are indeed more natural to our cognition, then dimensional reduction techniques like PCA might be doing something fundamentally correct. They're not just compressing data; they're potentially revealing underlying structures that align with efficient cognitive representations. These representations may not correspond directly to our defined metrics, but they might better reflect how we actually conceptualise and process information.

However, it's important to note potential drawbacks to this perspective:

- Spurious correlations or confounding factors might lead to misleading interpretations.

- Optimising for correlated attributes rather than core metrics could result in Goodharting.

- We might overlook opportunities for targeted improvements by focusing too much on correlated clusters of attributes.

Therefore, while thinking in terms of correlated attributes might be more natural and efficient, we should remain aware of when it's necessary to isolate specific variables for more precise analysis or intervention.

3 comments

Comments sorted by top scores.

comment by Noosphere89 (sharmake-farah) · 2024-07-25T16:14:13.865Z · LW(p) · GW(p)

If I were to take anything away from this, it's that you can have cognition/intelligence that is efficient, or rational/unexploitable cognition like full-blown Bayesianism, but not both.

And that given the constraints of today, it is far better to have efficient cognition than rational/unexploitable cognition, because the former can actually be implemented, while the latter can't be implemented at all.

Replies from: chasmani↑ comment by chasmani · 2024-07-26T14:06:16.222Z · LW(p) · GW(p)

I agree with your point in general of efficiency vs rationality, but I don’t see the direct connection to the article. Can you explain? It seems to me that a representation along correlated values is more efficient, but I don’t see how it is any less rational.

Replies from: sharmake-farah↑ comment by Noosphere89 (sharmake-farah) · 2024-07-26T16:01:21.583Z · LW(p) · GW(p)

This wasn't specifically connected to the post, just providing general commentary.