(Yet Another) Map for AI Risk Discussion

Post by chronolitus · 2023-04-06


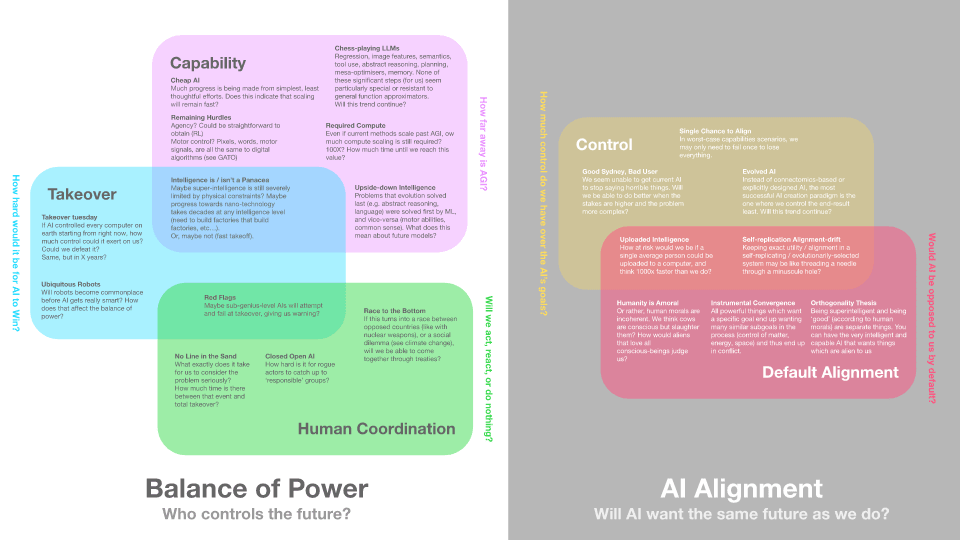

I made this map for myself, but it quickly turned into a tool I use to find productive discussion, for example by steering towards a common understanding of key divergences.

I also find that it helps me introduce the topic to friends who are not aware of it at all.

Maybe sharing it here will be helpful to others as well?

AI Risk

This is not a post of AI Risk opinions. Rather, it's a post full of AI Risk questions, aimed towards myself and others.

One of many ways to frame AI Risk is: What is the current situation for humanity with respect to AI killing/controlling everyone?

The map focuses on the following 5 domains for clustering thought:

- System control: how much control do we have over the AI's shape?

- Default alignment: if we don't have control over the AI's shape, how much does it disagree with us by default?

- AI capabilities: how capable is the AI, and how soon?

- Takeover difficulty: how easy is it for someone who controls all computers and thinks really fast to control the physical world? (now, in 10 years, in 100 years)

- Human coordination: how good is civilization at coordinating against AI threats?

Together, the first two domains (system control and default alignment) form the pillar of alignment: how easy will it be to have aligned AI?

The next three together represent the balance of power between humanity and AI-dom.

For finding disagreements, it can be useful to assign gut-feeling scores to each domain, using probabilities or simple scores depending on preference and on who you're discussing with. These scores should roughly decompose each person's overall feeling about the chance of AI doom.

For example, if I'm speaking to my mom, I'll say I'm at the following, where 0 means the situation is dire for humanity and 100 means it is completely favorable. (For capabilities, less capability corresponds to a safer, better situation and therefore a higher score.)

- System control: 20

- Default alignment: 30

- Capabilities: 40

- Takeover difficulty: 60

- Human coordination: 20

And then she can say "20 for human coordination seems rather low; I think people are better at solving these problems than you think", and we can focus on this particular area of disagreement.

Your overall AI doom score should be some combination of the five (feel free to disagree on how to combine them).
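To make the combination step concrete, here is a minimal Python sketch using the example scores above. The post deliberately leaves the combination rule open, so the unweighted mean used here is an assumption, and the names `scores`, `pillar_score`, and `overall_score` are hypothetical. The sketch also averages the two groupings (the alignment pillar and the balance of power) separately.

```python
from statistics import mean

# Example gut-feeling scores from the post (0 = dire for humanity, 100 = completely favorable).
scores = {
    "system_control": 20,
    "default_alignment": 30,
    "capabilities": 40,
    "takeover_difficulty": 60,
    "human_coordination": 20,
}

# The first two domains form the alignment pillar; the other three form the balance of power.
ALIGNMENT = ("system_control", "default_alignment")
BALANCE_OF_POWER = ("capabilities", "takeover_difficulty", "human_coordination")


def pillar_score(domain_scores: dict[str, float], domains: tuple[str, ...]) -> float:
    """Average the scores of the given domains (hypothetical helper)."""
    return mean(domain_scores[d] for d in domains)


def overall_score(domain_scores: dict[str, float]) -> float:
    """Hypothetical combination rule: unweighted mean of all five domains."""
    return mean(domain_scores.values())


if __name__ == "__main__":
    print("alignment pillar:", pillar_score(scores, ALIGNMENT))
    print("balance of power:", pillar_score(scores, BALANCE_OF_POWER))
    print("overall:", overall_score(scores))
```

With these numbers, the alignment pillar averages 25, the balance of power 40, and the overall score 34. If the discussion moves human coordination from 20 to 50, the overall mean rises to 40, which shows how settling a single disagreement shifts the total. A weighted mean or a minimum would be equally defensible choices.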

(Q: Why didn't I use probabilities by default? A: You should totally use probabilities if that works for you! I find that in discussion, for many people, probabilities lead to more confusion and more focus on mathematical aspects, and less on identifying beliefs and world models. They are obviously more accurate, though, since they map directly to planning and action. If probabilities are second nature for you, replace 0-100 with p(bad) for each domain and multiply them all to get p(doom) (which treats the domains as independent and assumes doom requires all of them to go badly), or, again, do your own thing. What matters most is that both people broadly agree on what the scores mean in terms of how much we should worry about a particular problem: e.g. 0 means drop everything now, 100 means all good.)
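For readers who prefer the probability version, here is a minimal Python sketch of the multiplication step. The p(bad) values are hypothetical: they mirror the example scores via 1 - score/100, an illustrative choice the post does not prescribe, and `p_doom` is a hypothetical helper name. Multiplying the values treats the domains as independent and assumes doom requires every domain to go badly.

```python
import math

# Hypothetical p(bad) values per domain (illustrative only, not taken from the post).
p_bad = {
    "system_control": 0.8,
    "default_alignment": 0.7,
    "capabilities": 0.6,
    "takeover_difficulty": 0.4,
    "human_coordination": 0.8,
}


def p_doom(probs: dict[str, float]) -> float:
    """Multiply per-domain p(bad) values.

    Assumes the domains are independent and that doom requires every
    domain to go badly; both are simplifying assumptions.
    """
    for name, p in probs.items():
        if not 0.0 <= p <= 1.0:
            raise ValueError(f"{name}: probability {p} is outside [0, 1]")
    return math.prod(probs.values())


if __name__ == "__main__":
    print(f"p(doom) = {p_doom(p_bad):.4f}")
```

With these values the product is 0.8 × 0.7 × 0.6 × 0.4 × 0.8 = 0.10752. Unlike an average, a product lets a single favorable domain pull p(doom) down sharply, which is one reason two people may reasonably disagree on how to combine the domains.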

The arguments in the map are those that strike me (emphasis on the me) as the most essential, but mainly they are there to guide the initial discussion, which should stay open to adding, moving, and changing them. Here's a link to the original so you can copy and modify it to suit your own purposes.
