if you're reading this it's too late (a new theory on what is causing the Great Stagnation)

post by rogersbacon · 2023-06-08T11:49:29.136Z · LW · GW · 2 comments

This is a link post for https://www.secretorum.life/p/if-youre-reading-this-its-too-late

A sword is against the internet, against those who live online, and against its officials and wise men. A sword is against its false prophets, and they will become fools. A drought is upon its waters, and they will be dried up. For it is a place of graven images, and the people go mad over idols. So the desert creatures and hyenas will live there and ostriches will dwell there. The bots will chatter at its threshold, and dead links will litter the river bed.

One consistent theme of this blog has been the role of the internet and digital technology in what I see as a subtle but pervasive loss of creativity across the arts, science, and technology (manifesting as the so-called Great Stagnation in the latter domains). Here, I want to pull together some of my writing on this subject from various essays and share some recent research/sources that have led me to a new hypothesis for what’s going on here.

~~~~~

From “On Stories and Histories: Q&A”:

The crushing excess of new content is especially problematic in this regard. We have less familiarity with the deep history of art and culture than ever before, and this has caused us to lose a kind of instinct for Greatness, an intuition for what makes things stand the test of time. At the same time, most of us also have too much knowledge of our chosen art or discipline and this makes it difficult for us to do the unprecedented, path-breaking work that revolutionizes a field. In the arts, the danger is that everything you make will feel derivative, a pale imitation of yesteryear’s avant garde. In the sciences, an extensive knowledge of the literature will bias you towards conducting incremental gap-filling research instead of the truly innovative research which creates new gaps in the literature. Richard Hamming has a little riff on this in his classic speech, “You and Your Research”:

“There was a fellow at Bell Labs, a very, very, smart guy. He was always in the library; he read everything. If you wanted references, you went to him and he gave you all kinds of references. But in the middle of forming these theories, I formed a proposition: there would be no effect named after him in the long run. He is now retired from Bell Labs and is an Adjunct Professor. He was very valuable; I'm not questioning that. He wrote some very good Physical Review articles; but there’s no effect named after him because he read too much.

If you want to think new thoughts that are different, then do what a lot of creative people do—get the problem reasonably clear and then refuse to look at any answers until you’ve thought the problem through carefully how you would do it, how you could slightly change the problem to be the correct one.”

~~~~~

From “Exploring the Landscape of Scientific Minds (Let My People Go)”:

The internet is usually seen as an unequivocal good for the advancement of science, but I suspect that we do not fully appreciate the psychological costs. I can think of at least three reasons why the internet might have negative effects on creativity and diversity in scientific thinking (note: the first reason has been omitted because it was redundant with the previous excerpt).

(2) It is too easy to know if your ideas are considered fringe and unusual, or have already been studied and “proved”. This makes people less likely to do the kind of thinking that can overturn conventional wisdom or show something to be true which was highly unlikely to be so, resulting in a sort of global chilling effect on intellectual risk-taking.

(3) The internet has also had the effect of homogenizing cultures across the world. German, Russian, American, and Chinese cultures are much more similar now than they were 50 years ago (and were much more similar 50 years ago than they were 100 years ago); accordingly, German, Russian, American, and Chinese science (in terms of their organization, goals, norms, values, etc.) are much more similar as well.

I wonder if the overall effect of the internet has been to create a cognitive environment which is better at producing minds that do “normal science” (working towards fixed goals within a given paradigm, “puzzle-solving” as Thomas Kuhn called it) and worse at producing the kinds of minds that do revolutionary, paradigm-shifting science.

~~~~~

This last conjecture was developed further in my essay “Exegesis”.

In science the Apollonian tends to develop established lines to perfection, while the Dionysian rather relies on intuition and is more likely to open new, unexpected alleys for research…The future of mankind depends on the progress of science, and the progress of science depends on the support it can find. Support mostly takes the form of grants, and the present methods of distributing grants unduly favor the Apollonian. Applying for a grant begins with writing a project. The Apollonian clearly sees the future lines of his research and has no difficulty writing a clear project. Not so the Dionysian, who knows only the direction in which he wants to go out into the unknown; he has no idea what he is going to find there and how he is going to find it. Defining the unknown or writing down the subconscious is a contradiction in absurdum.

The ancient dichotomy of the Apollonian (order, logic, restraint, harmony) and the Dionysian (chaos, emotion, intuition, orgiastic revelry) provides the psychological bedrock upon which the higher-level dichotomy of climbing and wandering is built. This points us towards a crucial, perhaps the crucial, difference between our two modes of travel: climbing is about the quantifiable (the logic can be traced, the calculations can be checked, the data can be analyzed) while wandering is about the unquantifiable—ideas, intangible and ineffable, their value, causes, and consequences only knowable in hindsight, if at all (more on this later).

[…]

Computers and the internet have been rocket fuel for legibility, easily allowing us to track nearly every aspect of the scientific enterprise.

I would argue that this intense “metrification” is responsible, either directly or indirectly, for much of what’s wrong with science. Much ink has been spilled on the nature of these issues so I won’t belabor the discussion, but suffice it to say that many of the metrics used to evaluate scientists and their research serve to incentivize conservatism and easily-quantified productivity over risk-taking and the development of new ideas (which, as previously noted, are by their very nature unquantifiable) (See Matt Clancy’s “Conservatism in Science”). In essence we have created a system where scientists are disincentivized from doing anything that won’t help them publish more research or bring in more grant money: performing replications, sharing data or ideas, mentoring undergraduates, teaching, etc.

Some of these problems with metrics are due to the unsophisticated use of simple metrics and it should be possible to alleviate these problems with better metrics and greater awareness of their strengths and weaknesses. That being said, there are also some more fundamental issues that arise from the intrinsic nature of metrics and the incentive structures they create. It is easy to say that we need more complex metrics, but greater complexity comes with its own costs (e.g. more data might be needed to develop the new metric, more bureaucracy needed to collect that data, less intuitive metrics are less likely to be understood and correctly applied). Metrics, whether complex or simple, always suffer from the curse of Goodhart’s Law, “when a measure becomes a target, it ceases to be a good measure” (see the Eponymous Laws series). For example, citation counts may have been a good proxy for a scientist’s influence when we first started using them, but now that scientists are aware of their importance they have become a less effective measure because scientists are incentivized to game the citation counts however they can (publishing in hot research areas, rushing flashy results to publication without careful replication, developing superfluous new terms or metrics in hopes that they will catch on, self-citing). We might also mention here that citation norms themselves represent an implicit epistemological orientation that predisposes one towards incremental research that clearly builds off previous work (i.e. climbing) at the expense of truly novel ideas that might not have clear antecedents (I could provide a citation for this point, but I refuse to do so as an act of civil disobedience). Lastly, it is fair to wonder about the psychological cost of excessive metrification. I’m no expert here, but the awareness that your work is constantly being measured on a variety of dimensions probably isn’t conducive to creativity, job satisfaction, or mental health.

It might be helpful to make explicit something that has been lurking in the background of this section: the role of the internet in the scientific enterprise. The internet is, I think, usually seen as an unequivocal boon for science as it allows greater access to information and rapid communication between scientists. My contrarianism runs deep, but not quite deep enough to deny that the internet has been, on balance, a good thing for science. But there is a balance, and I don’t think we have fully grappled with the costs of this mass shift in scientific thinking and doing. To put it in the terminology of this essay: the internet has supercharged our ability to climb but subtly (or not so subtly) harmed our ability to wander. In his song “Welcome to the Internet”, comedian-troubadour Bo Burnham sings, “Could I interest you in everything all of the time?”; I’m not the first to say it nor will I be the last, but there is something incredibly unnatural about this everything-everywhere-all-the-time state of affairs and it seems more than likely to me that it is warping our creativity and cultural dynamics in a problematic manner. Derek Thompson speculates on this theme in his recent article “America Is Running on Fumes”:

The world is one big panopticon, and we don’t fully understand the implications of building a planetary marketplace of attention in which everything we do has an audience. Our work, our opinions, our milestones, and our subtle preferences are routinely submitted for public approval online. Maybe this makes culture more imitative. If you want to produce popular things, and you can easily tell from the internet what’s already popular, you’re simply more likely to produce more of that thing. This mimetic pressure is part of human nature. But perhaps the internet supercharges this trait and, in the process, makes people more hesitant about sharing ideas that aren’t already demonstrably pre-approved, which reduces novelty across many domains.

~~~~~

A more poetic take on this theme in “Nothing.”:

“The internet is hell, a fallen realm in which souls are threshed and all that is Good, Beautiful, and True is optimized out of existence.

The past is denied its usual slip into nothingness, instead becoming trapped in the ever-growing machine-readable databases that provide food for the ravenous algorithms which predict and control our actions with ever-increasing power and precision. Ambiguity and idiosyncrasy will be the first to go, replaced by perfect dichotomy and uniformity; all numbers besides 1 and 0 will cease to exist; grey areas become mythical places like Atlantis or Hyperborea. Soon thereafter, the eclipse will be total—The Future as a programmed event, a synthetic remix of the past.

Rejoice! Tonight, we feast on copypasta for evermore!

Time itself now enslaved, all possibility of novelty is extinguished. This third rock hanging in endless void become a haunted merry-go-round, a zombie theatre of eternal recurrence.”

A few distinct but interrelated hypotheses for why the internet and computers stifle creativity were put forth in the preceding excerpts.

(1) Too much information/knowledge is bad for your imagination. It’s almost impossible for us to now come at any problem with fresh eyes (“an extensive knowledge of the literature will bias you towards conducting incremental gap-filling research instead of the truly innovative research which creates new gaps in the literature”).

(2) Internet-induced cultural homogenization.

(3) The internet makes it too easy for the entire world to criticize radical ideas. People won’t try crazy shit if the threat of criticism and ridicule is too salient. Similarly, “It is too easy to know if your ideas are considered fringe and unusual, or have already been studied and ‘proved’. This makes people less likely to do the kind of thinking that can overturn conventional wisdom”.

(4) The internet increases mimetic pressure and leads to more groupthink (see the Derek Thompson quote above).

(5) Excessive metrification/hyper-legibility is bad for creativity.

(6) Something about algorithms disfavoring novelty and making us more predictable or whatever I was going on about in that excerpt.

A recent study provides another hypothesis (and yes I’m just going to take the result at face value and run with it).

Listening prompts intuition while reading boosts analytic thought

It is widely assumed that thinking is independent of language modality because an argument is either logically valid or invalid regardless of whether we read or hear it. This is taken for granted in areas such as psychology, medicine, and the law. Contrary to this assumption, we demonstrate that thinking from spoken information leads to more intuitive performance compared with thinking from written information. Consequently, we propose that people think more intuitively in the spoken modality and more analytically in the written modality. This effect was robust in five experiments (N = 1,243), across a wide range of thinking tasks, from simple trivia questions to complex syllogisms, and it generalized across two different languages, English and Chinese. We show that this effect is consistent with neuroscientific findings and propose that modality dependence could result from how language modalities emerge in development and are used over time. This finding sheds new light on the way language influences thought and has important implications for research that relies on linguistic materials and for domains where thinking and reasoning are central such as law, medicine, and business.

I’ll focus on science and technology but the story should be similar in the arts and other creative fields. It goes something like this: back in the day, the scientific literature was small enough that you could keep up to date without reading all that much, getting most of your information through in-person discussions with other scientists. This mode of research life gave scientists something like an optimal balance between analytical and intuitive thinking, enabling them to excel at both hill-climbing towards “known unknowns” and wandering off in search of new “unknown unknowns”. I like the way one anonymous commenter on Marginal Revolution summarizes the difference between analytical and intuitive thinking:

“Intuitive” I’d think includes that just-out-of-reach place where creativity and knowledge—known things—blur. I guess “possibilities” is the word.

“Analytic” should be more about known things, or at least a great deal of rigor about unknown things. “Solutions” maybe is the word here.

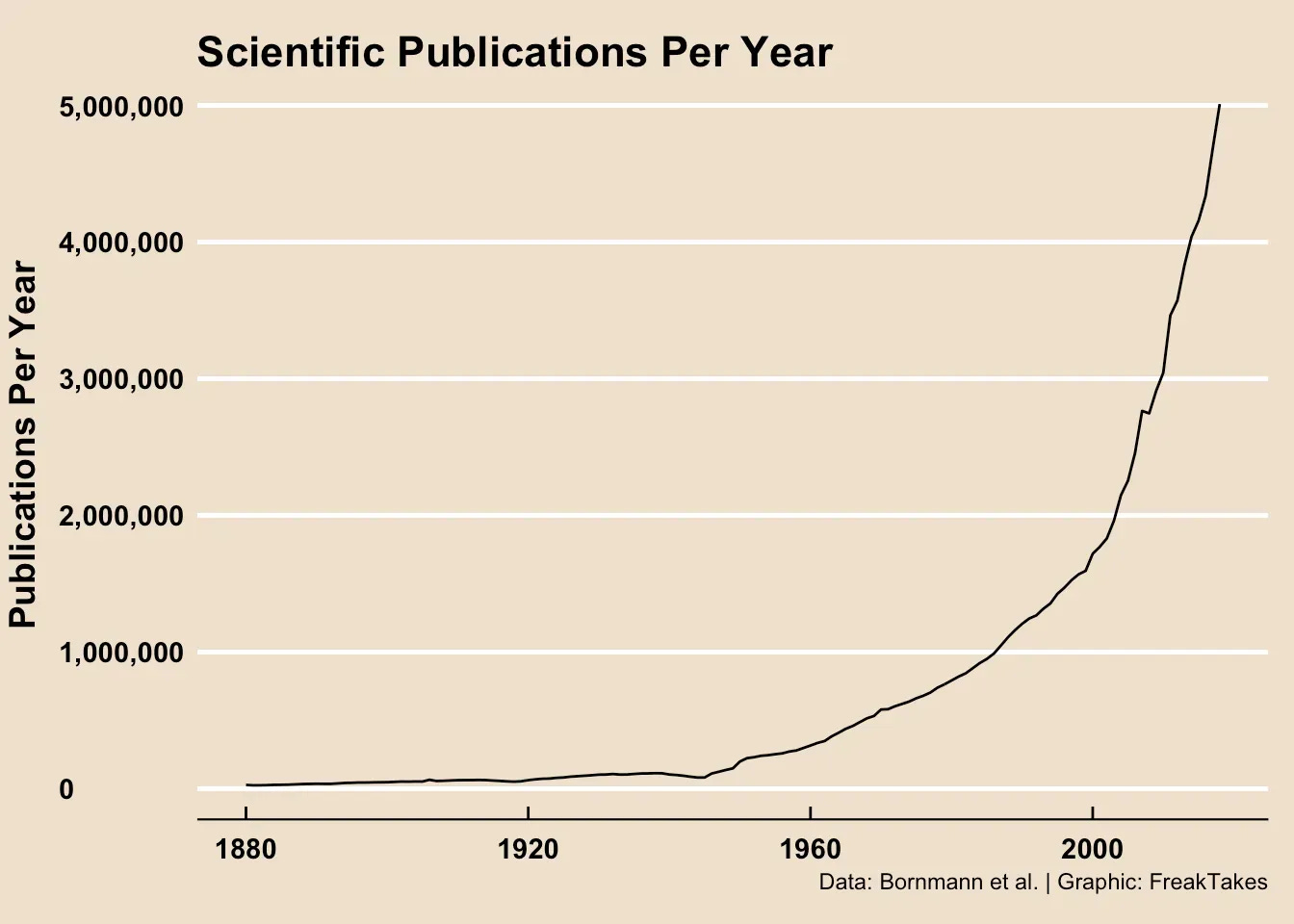

Back to the story: this all started to change during the post-WWII science boom as it became virtually impossible to keep up with the cutting edge unless you were doing massive amounts of reading and writing.

There also were too many scientists now—you could no longer get all of the experts in one field in a room and have them hash out some problem. Similar changes occurred in science education—individual attention from professors and small discussion-based classes were replaced by lecture halls and textbooks. The overall effect was that the entire scientific community shifted towards more analytical modes of thinking/researching, making us really good at incremental progress and worse at revolutionary, paradigm-shifting progress. Some 50 years after this mass psychological shift we are seeing a slowdown in science and technology (the Great Stagnation) because the existing paradigms are starting to become exhausted. The End.

A recent essay by Eric Gilliam at his blog FreakTakes, “Math and Physics’ Divorce, Poetry, and the Scientific Slowdown”, offers some qualitative evidence for my thesis (he uses it in service of a related but ultimately different thesis).

Many World War II-era physicists were not only practicing researchers in the small and bustling field of early-1900s physics, but continued on as researchers well into the 1970s. As there was an explosion of publications in their field, the researcher accounts I’ve come across do contain complaints from the old-guard researchers.

The old guard did “grumble at the changing state of publications and how smaller findings were published more often”, but their primary complaints were about something else:

The growing conference sizes made it much more difficult to keep up with adjacent fields and scientific meetings. Seminars began to cater to narrower and narrower sub-branches of work rather than broad ones. These were the places that many researchers leveraged to actually keep up to date on new work and problems in their fields as well as others.

Richard Feynman, “who never religiously kept up with the literature” and was an imaginative and intuitive researcher if there ever was one, pointed to the growing conference sizes as a significant problem during a 1973 interview:

Feynman: No, they’ve gotten too big. For example, they have parallel sessions which they never had before. Parallel sessions means that more than one thing is going on at a time, in fact, usually three, sometimes four. And when I went to the meeting in Chicago, I was only there two days before I broke my kneecap, but I had a great deal of trouble making up my mind which of the parallel sessions I was going to miss. Sometimes I’d miss them both, but sometimes there were two things I would be interested in at the same time. These guys who organize this imagine that each guy is a specialist and only interested in one lousy corner of the field. It’s impossible really to go — so it’s just as if you went to half the meeting. Therefore half is not much better than nothing. You might as well stay home and read the reports.

Warren Weaver, a physicist, director of the division of natural sciences at the Rockefeller Foundation (1932–55), and president of the American Association for the Advancement of Science (AAAS), lodged a similar complaint in the 70s:

This vast organization (the AAAS), with the largest membership of any general scientific society in the world, embraces all fields of pure and applied science. In the earlier days each branch of science conducted intensive sessions at which its own specialized research reports were given, and there was also some mild attempt to organize interdisciplinary sessions of general interest. Then the meetings got so large that they almost collapsed under their own weight. One group after another, first the chemists, then the physicists, the mathematicians, the biologists found it necessary to hold other and separate meetings. Attendance fell and the function of serving as a communication center between the various branches of science became less effective.

Also of interest in Gilliam’s article is this quote about the shift in mathematical thinking that began to take hold in the post-war era:

As physics became messy and muddy, mathematics turned austere and rigid. After the war, mathematicians started to reconsider the foundations of their discipline and turned inside, driven by a great need for rigor and abstraction. The more intuitive and approachable style of the “old-school” mathematicians like von Neumann and Weyl, who eagerly embraced new developments in physics like general relativity and quantum mechanics, was replaced by the much more austere approach of the next generation. (Robbert Dijkgraaf)

Taken together, all of these examples point to the same story: reading is a helluva drug and we’re all addicted. The rapid growth of STEM fields following WWII precipitated a shift towards more reading and less face-to-face discussion which in turn precipitated a shift away from more intuitive styles of research and towards more analytical approaches.

Adam Mastroianni raises a good point: the bigger issue here might be psychological homogeneity. Having some scientists that read “too much” is probably a good thing, but there need to be other avenues to scientific success which don’t require you to read quite as much, and we seem to have lost that.

There is another aspect of this problem that I’ll just mention in passing as I’m currently writing another piece which explores the idea in detail: given the incredible amount of time that we spend staring at things which are inches from our face, is it surprising that we’ve become “small-minded” and are lacking in “vision” and foresight?

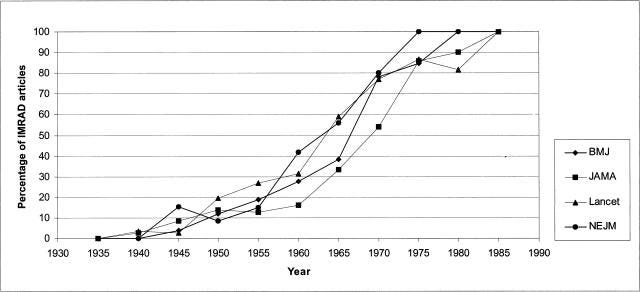

It’s also worth considering how scientific writing has changed over time, something I wrote about at length in “Research Papers Used to Have Style. What Happened?”. To make a long story short: scientists used to write much longer pieces and infused their writing with much more personal style and aesthetic value than they do today. The explosion in scientific literature put pressure on scientists to write with greater concision and clarity and led to almost universal standardization of article formats.

This draining of nearly all style/aesthetic value from scientific writing was really bad for our collective creativity:

Some scientists, for example, may choose to add a dash of humor to their writing. Aside from making the reading experience more enjoyable (not something that should be underestimated given how much scientists read), humor often juxtaposes ideas or introduces a novel way of looking at something commonplace, two things that are wonderful to do when searching for new ideas. And what can be said about humor can be said of other aesthetic qualities as well; infusion of beauty (either in content or language) or emotion may lead an author to develop a new metaphor or consider some minor aspect of a phenomenon in more detail, either of which could provide the seed of a new idea or observation.

[…]

The “life of science” (i.e. scientific creativity) demands a balance between the instrumental and the aesthetic in the same way that evolution requires a balance between selection and mutation. Selection improves the average fitness of a population, but drains it of diversity and limits its potential for future adaptation. Mutation increases diversity, but lowers the average fitness of the population.

Like mutation, aesthetics are diversifying and generative, but generally harmful to clarity and concision. And like selection, restrictions on style and format may improve the average quality of our writing, but at the cost of creative potential.

I’ll leave you with the conclusion of what is (judging by pageviews and controversy generated) still my most popular post, “Fuck Your Miracle Year”.

Do you really want to have an annus mirabilis (miracle year)?

Delete the draft of that blog post you were writing. The post sucks and no one was going to read it anyways.

Stop gorging yourself on the internet and its endless buffet of information. Stop masturbating to progress studies porn. Stop reading my blog. Stop masturbating to sex porn while you’re at it.

Use your imagination, just like they did back in Einstein’s day.

Fantasize.

Think.

2 comments

comment by Shmi (shminux) · 2023-06-08T16:57:42.320Z · LW(p) · GW(p)

Downvoted for extreme rambliness. There are a few good ideas, like how tighter integration might be killing creativity, but they are lost in the sea of musings and random quotes.

Replies from: rogersbacon

comment by rogersbacon · 2023-06-09T12:33:48.811Z · LW(p) · GW(p)

Fair enough, but as I said, not all writing has to be aimed at maximum concision and clarity (and insisting that it should be is bad for our collective creativity). One may choose to write in a less direct manner in order to briefly present numerous tangentially related ideas (which readers may follow up on if they choose) or simply to provide a more varied and entertaining reading experience. Believe it or not, there are other goals that one can have in writing and reading besides maximally efficient communication/intake of information.