Review of "Why AI is Harder Than We Think"

post by electroswing · 2021-04-30T18:14:34.855Z · LW · GW · 10 commentsContents

Introduction: AI Springs and Winters Fallacy 1: Narrow intelligence is on a continuum with general intelligence My opinion Fallacy 2: Easy things are easy and hard things are hard My opinion Fallacy 3: The lure of wishful mnemonics My opinion Fallacy 4: Intelligence is all in the brain My opinion Conclusion None 10 comments

"Why AI is Harder Than We Think" is a recent (April 26, 2021) arXiv preprint by Melanie Mitchell. While the author is a tenured professor with technical publications, they also have published multiple layman-level essays and articles which tend to be skeptical and pessimistic of AI progress. Why AI is Harder Than We Think falls somewhere in between the two extremes—it's written in the style of an academic paper, but the arguments appeal to a layman audience.

Reddit's /r/machinelearning was pretty harsh on the paper—click here for the full discussion. While I agree it has flaws, I still found it an interesting and valuable read because I enjoyed the process of figuring out where my opinions diverged from the author's.

In this post, I will briefly summarize the paper and state my opinions. The most interesting part (= where I disagree the most) is Fallacy 4, so skip to that if you don't want to read the whole blog post.

Introduction: AI Springs and Winters

Self-driving cars were predicted to be available for purchase by 2020, but they aren't. The author also gives a more in depth historical account of the various AI "springs" (periods of growth and optimism due to advances in AI research) and "winters" (periods of stagnation and pessimism when said advances aren't powerful 'enough') which have occurred since the 1950s.

Why does this keep happening? The author's thesis:

In this chapter I explore the reasons for the repeating cycle of overconfidence followed by disappointment in expectations about AI. I argue that over-optimism among the public, the media, and even experts can arise from several fallacies in how we talk about AI and in our intuitions about the nature of intelligence.

Specifically, they discuss four fallacies, which I'll address individually in the coming sections.

Sidenote: I really enjoyed pages 2–3 (the section on AI Springs and Winters). Whether or not you buy the thesis of this paper, that section is a well written historical account and you should check it out if you're interested.

Fallacy 1: Narrow intelligence is on a continuum with general intelligence

The claim here is, when we make progress in AI by beating top humans at chess, we've solved a problem which is much more narrow than we think. Similar claims apply to seemingly general systems such as IBM's Watson and OpenAI's GPT-3.

My opinion

Issue 1: Narrow tasks are slightly generalizable. While it is true that chess is much narrower than general intelligence, the author neglects to mention that once we solved chess, we were able to "easily" apply those techniques to other tree search based domains. For a certain class of tasks, chess is like an ""NP-Complete problem""—once we solved it, we (after a ""polynomial time transformation"") were able to solve all other problems in that class. This is how DeepMind went from AlphaGo to MuZero so quickly.

Issue 2: GPT-3 is kinda general. I completely disagree with the notion that GPT-3 is only slightly less narrow than chess, in comparison to human intelligence. GPT-n trained on a sufficiently large amount of text written by me would be indistinguishable from the real me. If GPT-n is 90% "general intelligence" and chess is 0.001% (coming from some dumb heuristic like "chess-like things are 1 out of 100,000 tasks a general intelligence should be able to do"), then I think GPT-3 is 1% general intelligence. And 1% is closer to 100% than it is 0.001%, in terms of orders of magnitude.

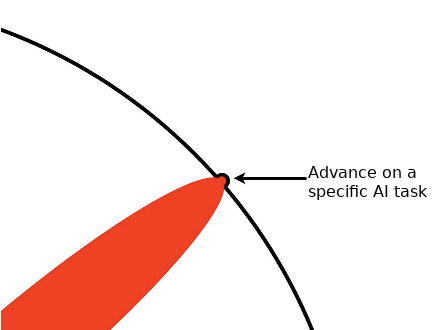

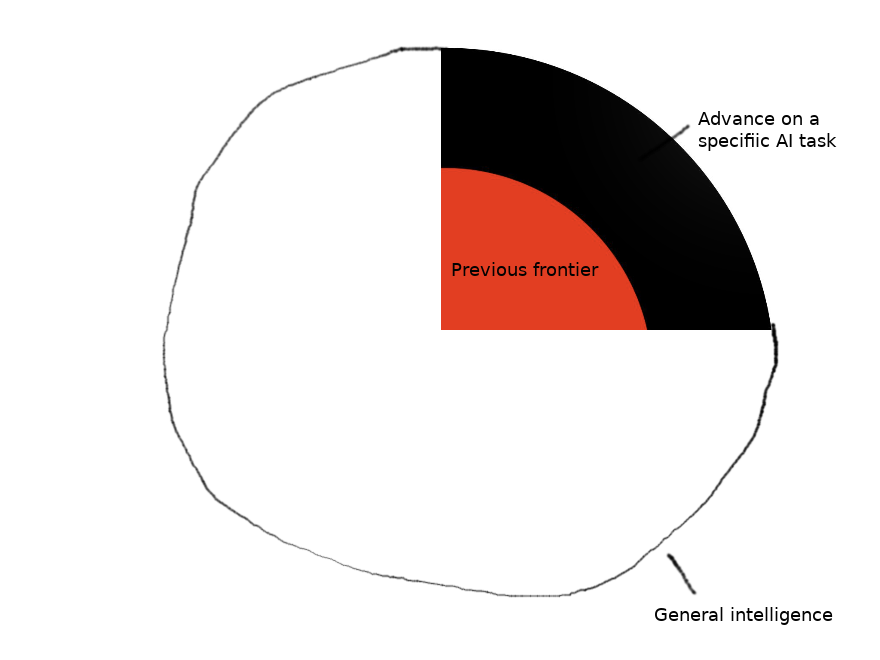

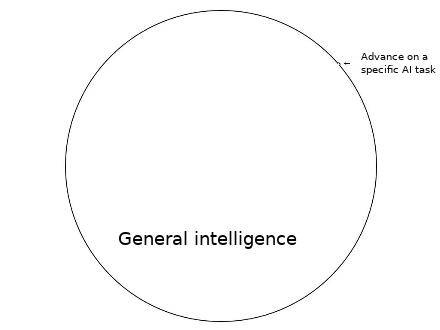

Where we agree: The "first-step fallacy" is real. Here, the "first step fallacy" refers to the phenomenon where an advance ("first step") in AI is perceived as less narrow than it really is. I agree that the ML research frontier tends to "overfit" to tasks, causing research progress to appear like it required more insight than it did. This seems related to the planning fallacy—researchers assume research progress will continue at the same rate, where really they should expect to experience diminishing returns in effort. A third way of thinking about this is related to The illustrated guide to a Ph.D.. I've lazily altered some images from there to make my point.

Fallacy 2: Easy things are easy and hard things are hard

The claim here is basically Moravec's law—tasks which humans think are hard are actually relatively easy for AI, and tasks which humans consider to be easy are much more difficult. For example, AlphaGo solving Go was seen as a triumph because Go is a very challenging game, but "challenging" here refers to a human's perspective. From an AI's perspective, charades is a much more "challenging" game.

My opinion

I agree with the author in that Moravec's law is correct. I think this is a good point to bring up when talking about the history of AI, and (for example) why early attempts at computer vision failed. However, I think the modern ML research community has internalized this phenomenon, so I don't think this point is super relevant to a modern conversation about the future of artificial general intelligence.

Fallacy 3: The lure of wishful mnemonics

The claim here is related to the idea that the terminology we use impacts our understanding of the objects we are discussing. For example, calling a neutral network a neural network implies that neutral networks are more similar to a human brain than they actually are. This also applies to how NLP benchmarks are named (a model which does well on the "Stanford Question Answering Dataset" is not going to be able to answer all questions well) and how people talk about models' behavior (saying "AlphaGo's goal" implies more coherence than it may have). That is, the terminology/shorthands/mnemonics here are all wishful.

Using these shorthands is damaging because they give off an impression to the public that AI systems are more capable than they actually are.

My opinion

I agree with the author that terminology is often not well-explained, and this leads to misrepresentation of AI research in the media. It's hard to fix this problem because STEM coverage is rarely good. I think the solution here is to get better reporters (for example, Quanta Magazine has good math reporting) rather than to change the language AI researchers use.

There is an additional problem, which the author doesn't focus on as much, of researchers using these "wishful mnemonics" within the research community. Sometimes this is fine—I find it easy to substitute a phrase like "AlphaGo's goal" with a more precise phrase like "AlphaGo's loss function is minimized by the following policy".

But in the context of AI Safety, anthropomorphizing can get dicey. For example, the problem of understanding deceptive mesa-optimizers only becomes tricky and nuanced when we stop anthropomorphizing the system. If this point is not communicated well from the AI Safety community to the ML research community at large, the AI Safety community runs risk of leaving the ML research community unconvinced that deceptive alignment is an important problem.

I wish the author more clearly distinguished between these two settings—the first being communicating with the media and their resulting message to the public, and the second being the language used internally to the AI research community. Their point about "wishful mnemonics" would be stronger if they more clearly explained when they are beneficial/problematic in either setting.

Fallacy 4: Intelligence is all in the brain

This is the most complex claim of the four. The author's reasoning here can be separated into two ideas. In both, the theme is that human intelligent reasoning does not only occur in the brain, but also <somewhere else>.

Idea 1: human intelligent reasoning does not only occur in the brain—it is also inextricably intertwined with the rest of our body. This theory is called embodied cognition. Experimental evidence in favor of embodied cognition exists, for example:

Research in neuroscience suggests, for example, that the neural structures controlling cognition are richly linked to those controlling sensory and motor systems, and that abstract thinking exploits body-based neural 'maps'.

Idea 2: human intelligent reasoning can not be reduced to a sequence of purely rational decisions—it is also inextricably intertwined with our emotions and cultural biases. Therefore, we have no reason to believe that we can create artificial general intelligence which would be superintelligent, but lack emotions and cultural knowledge.

My opinion

Fallacy 4 is where this paper is weakest. I don't find either of these ideas particularly convincing, and I find Idea 2 especially problematic.

My opinion on Idea 1: Embodied cognition sounds reasonable to me. I found the example mentioned in the paper too abstract to be compelling, so here is a more concrete example of my own: When humans do geometry, they might use spacial reasoning in such an intense way so that their eyes, arms, hands, etc. are engaged. This means that the amount of computational power which is being used on geometry exceeds the amount of computational power the human brain has.

Where my opinion differs from the author's opinion is on the subject of what to do about this. The author seems to think that more research into embodied cognition—and more specifically the precise mechanisms underlying human intelligence—are necessary for making progress on artificial general intelligence. However, I think that all embodied cognition is saying is that we might need a bit more than 10^15 FLOP/s to match the processing power of a human. An extra factor of 2, 10, or 100 won't make a difference in the long run. The Bitter Lesson provides evidence in favor of my opinion here—compute often eventually outperforms hard-coded human insights.

My opinion on Idea 2: At best, this seems incorrect. At worst, this seems completely incoherent.

Ironically, the author's problem here seems to be that they are falling for their own Fallacy 3 ("The lure of wishful mnemonics")—more specifically, they seem to be over-anthropomorphizing. Yes, it is true that human intelligent reasoning is intertwined with our irrational heuristics and biases. But this doesn't mean that an artificial general intelligence has to operate in the same way.

For example, the author is skeptical of Bostrom's orthogonality thesis because they think (for example) a paperclip maximizer with enough intelligence to be a threat cannot exist "without any basic humanlike common sense, yet while seamlessly preserving the speed, precision, and programmability of a computer."

While I agree that a superintelligent paperclip maximizer will have to have a decently accurate world model, I disagree with the notion that it will have to learn and internalize human values.

For example, one could imagine a paperclip maximizer trained exclusively on synthetic physics simulation data. If its influence on human society is only indirect (for example, maybe all it can do is control where clouds are distributed in the sky or something silly), then the strategies it employs to increase paperclip production will seem convoluted and unintelligible from a human perspective. ("Why does putting clouds in these exact 1000 places triple paperclip production? Who knows!") Maybe concepts like "what is a paperclip factory" will crystalize in its internals, but still, I think such a model's inner workings would be very far from "humanlike common sense".

Moreover, there is no reason to expect it to be difficult for an artificial general intelligence to learn whatever human biases and cultural knowledge are supposedly necessary for human-level intelligence. In fact, this seems to be the default path for AGI.

Interpreting the author's point here as charitably as possible, it seems like the issue here is an imprecise notion of intelligence. MuZero is able to "superintelligently" beat some Atari games without the human bias of "getting dopamine when I reach a checkpoint" or the cultural knowledge of "death is bad and I should avoid it".

So, the author's notion of intelligence must be broader—closer to a general-purpose, human-level intelligence. But then, does GPT-3 count? Its output is human-like writing which feels like it was influenced by irrational heuristics and biases, not a superintelligent, purely rational system.

Even if GPT-3 doesn't count—the entire field of AI Ethics is specifically devoted to the problem of ridding AI of human biases. Whenever we train ML systems on human data, the default outcome is that they learn our human biases! Since we keep running into these problems, the idea that AGI progress will be blocked by our understanding of the mechanics of human cognition seems ludicrous.

Conclusion

This paper is at its best when it goes over the history of AI, and to some extent when it discusses Fallacy 3 ("The lure of wishful mnemonics"). I do think that crisp communication, both within the AI research community and the general public, would make conversations about AI policy more productive.

This also applies to the AI Safety community in particular—the fact that the author, a professor of computer science, understood the orthogonality thesis as poorly as they did, speaks to how much more credible AI Safety could become if it had more accessible literature.

The paper is certainly at its worst in Fallacy 4, where it claims that AI is hard by appealing to a "special sauce"-type argument in favor of human cognition. I would not be surprised if human reasoning is more complex than a brain-sized neural network, due to the brain being highly optimized and the body performing additional computation. However, at worst, I think all this implies is that we'll need a bit extra compute to achieve human-level intelligence.

For the well written and sourced AI history content alone, I do recommend you read this paper. Just maybe critically evaluate the author's claims about what progress in AI will look like going forward, because I don't buy many of them.

10 comments

Comments sorted by top scores.

comment by [deleted] · 2021-05-01T01:47:17.362Z · LW(p) · GW(p)

My opinion on Idea 1: Embodied cognition sounds reasonable to me. I found the example mentioned in the paper too abstract to be compelling, so here is a more concrete example of my own: When humans do geometry, they might use spacial reasoning in such an intense way so that their eyes, arms, hands, etc. are engaged. This means that the amount of computational power which is being used on geometry exceeds the amount of computational power the human brain has.

This claim is false. (as in, the probability that it is true is vanishingly close to zero, unless the human brain uses supernatural elements). All of the motor drivers except for the most primitive reflexes (certain spinal reflexes) are in the brain. You can say that for all practical purposes, 100% of the computational power the brain has is in the brain.

Moreover, most of the brain is inactive during any given task. So for your 'geometry' example, most of the brain isn't being used on the task, this is a second way your claim is false.

I want to advance a true hypothesis. The reason "embodiment" is necessary for human-like intelligence is because a "body" allows the developing intelligence to perform experiments on the real world and obtain clean information. For example, if you wanted an AI to learn how simple objects behave - say a set of blocks a baby might play with. You could feed the AI thousands of hours of video taken from cameras, or give it control of a robotic system with manipulators with similar capabilities as hands. And build a software stack with rewards for 'curiosity' - putting the blocks into states not previously seen and observing the results.

I think the latter case, where the AI system is performing direct manipulation, gives the opportunity to learn from thousands of A:B comparisons. Where A is "hands performed a manipulation" and B is "hands did a different manipulation or nothing". This allows the learning system, if structured to learn from this kind of data, the opportunity to learn causality well and to develop a general model for manipulation.

Moreover this kind of A:B data (this is the scientific method in a different form) is clean information. All of the variables are the same except for the manipulation performed by the 'body'.

I posit that "tool using" AI, which I think are more indicative of human intelligence than merely babbling like GPT-3 does, requires the AI to either have a high fidelity simulation or actual connected robotics in order for the system to become good at using tools. (as well as obviously the right structure of internal software to be able to learn from the information)

Note, of course, unlike science fiction, the AI system need not have humanlink bodies to satisfy the requirement for 'embodiment'. Nor does it need to be connected to them in realtime - offline or parallel learning is fine.

Replies from: electroswing, HunterJay↑ comment by electroswing · 2021-05-01T16:35:36.140Z · LW(p) · GW(p)

This claim is false. (as in, the probability that it is true is vanishingly close to zero, unless the human brain uses supernatural elements). All of the motor drivers except for the most primitive reflexes (certain spinal reflexes) are in the brain. You can say that for all practical purposes, 100% of the computational power the brain has is in the brain.

I agree with your intuition here, but this doesn't really affect the validity of my counterargument. I should have stated more clearly that I was computing a rough upper bound. So saying something like, assuming embodied cognition is true, the non-brain parts of the body might add an extra 2, 10, or 100 times computing power. Even under the very generous assumption that they add 100 times computing power (which seems vanishingly unlikely), this still doesn't mean that embodied cognition refutes the idea that simply scaling up a NN with sufficient compute won't produce human-level cognition.

Replies from: None↑ comment by [deleted] · 2021-05-01T18:10:37.623Z · LW(p) · GW(p)

Yes and first of all, why are you even attempting to add "2x". A reasonable argument would be "~1x", as in, the total storage of all state outside the body is so small it can be neglected.

Replies from: electroswing↑ comment by electroswing · 2021-05-01T22:24:44.630Z · LW(p) · GW(p)

I mean...sure...but again, this does not affect the validity of my counterargument. Like I said, I'm using as strong as possible of a counterargument by saying that even if the non-brain parts of the body were to add 2-100x computing power, this would not restrict our ability to scale up NNs to get human-level cognition. Obviously this still holds if we replace "2-100x" with "1x".

The advantage of "2-100x" is that it is extraordinarily charitable to the "embodied cognition" theory—if (and I consider this to be extremely low probability) embodied cognition does turn out to be highly true in some strong sense, then "2-100x" takes care of this in a way that "~1x" does not. And I may as well be extraordinarily charitable to the embodied cognition theory, since "Bitter lesson" type reasoning is independent of its veracity.

↑ comment by HunterJay · 2021-05-01T02:43:51.252Z · LW(p) · GW(p)

I took the original sentence to mean something like "we use things external to the brain to compute things too", which is clearly true. Writing stuff down to work through a problem is clearly doing some computation outside of the brain, for example. The confusion comes from where you draw the line -- if I'm just wiggling my fingers without holding a pen, does that still count as computing stuff outside the brain? Do you count the spinal cord as part of the brain? What about the peripheral nervous system? What about information that's computed by the outside environment and presented to my eyes? I think it's kind of an arbitrary line, but reading this charitably their statement can still be correct, I think.

(No response from me on the rest of your points, just wanted to back the author up a bit on this one.)

Replies from: mikkel-wilson, None↑ comment by MikkW (mikkel-wilson) · 2021-05-01T23:43:28.072Z · LW(p) · GW(p)

Writing stuff down to work through a problem is clearly doing some computation outside of the brain, for example.

I'm not sure that this is correct. While making the motions is needed to engage the process, the important processes are still happening inside of the brain- they just happen to be processes that are associated with and happen during handwriting, not when one is sitting idly and thinking

↑ comment by [deleted] · 2021-05-01T02:58:10.675Z · LW(p) · GW(p)

Yes but take this a step further. If you assume that each synapse is 4 bytes of information (a bit sparse it's probably more than that), 86 billion neurons times 1000 synapses times 4 bytes = 344 terabytes.

How much information do you store when you have 3 fingers up when counting on your fingers? How much data can a page of handwritten notes hold?

You can probably neglect it. It doesn't add any significant amount of compute to an AI system to give it perfect, multi-megabyte, working memory.

comment by AM · 2021-05-01T09:40:50.798Z · LW(p) · GW(p)

Great and fair critique of this paper! I also enjoyed reading it and would recommend it also just for the history write up.

What do you think is the underlying reason for the bad reasoning in fallacy 4? Is the orthogonal it thesis particularly hard to understand intuitively or has it been covered so badly by media so often that the broad consensus of what it means is now wrong?

Replies from: electroswing↑ comment by electroswing · 2021-05-01T23:04:29.358Z · LW(p) · GW(p)

Hmmm...the orthogonality thesis is pretty simple to state, so I don't think necessarily that it has been grossly misunderstood. The bad reasoning in Fallacy 4 seems to come from a more general phenomenon with classic AI Safety arguments, where they do hold up, but only with some caveats and/or more precise phrasing. So I guess "bad coverage" could apply to the extent that popular sources don't go in depth enough.

I do think the author presented good summaries of Bostrom's and Russell's viewpoints. But then they immediately jump to a "special sauce" type argument. (Quoting the full thing just in case)

The thought experiments proposed by Bostrom and Russell seem to assume that an AI system could be“superintelligent” without any basic humanlike common sense, yet while seamlessly preserving the speed, precision and programmability of a computer. But these speculations about superhuman AI are plagued by flawed intuitions about the nature of intelligence. Nothing in our knowledge of psychology or neuroscience supports the possibility that “pure rationality” is separable from the emotions and cultural biases that shape our cognition and our objectives. Instead, what we’ve learned from research in embodied cognition is that human intelligence seems to be a strongly integrated system with closely interconnected attributes, include emotions, desires, a strong sense of self hood and autonomy, and a commonsense understanding of the world. It’s not at all clear that these attributes can be separated.

I really don't understand where the author is coming from with this. I will admit that the classic paperclip maximizer example is pretty far-fetched, and maybe not the best way to explain the orthogonality thesis to a skeptic. I prefer more down-to-earth examples like, say, a chess bot with plenty of compute to look ahead, but its goal is to protect its pawns at all costs instead of its king. It will pursue its goal intelligently but the goal is silly to us, if what we want is for it to be a good chess player.

I feel like the author's counterargument would make more sense if they framed it as an outer alignment objection like "it's exceedingly difficult to make an AI whose goal is to maximize paperclips unboundedly, with no other human values baked in, because the training data is made by humans". And maybe this is also what their intuition was, and they just picked on the orthogonality thesis since it's connected to the paperclip maximize example and easy to state. Hard to tell.

It would be nice if AI Safety were less disorganized, and had a textbook or something. Then, a researcher would have a hard time learning about the orthogonality thesis without also hearing a refutation of this common objection. But a textbook seems a long way away...

Replies from: AM↑ comment by AM · 2021-05-06T06:50:06.205Z · LW(p) · GW(p)

Good points!

Yes this snippet is particularly nonsensical to me

an AI system could be“superintelligent” without any basic humanlike common sense, yet while seamlessly preserving the speed, precision and programmability of a computer

It sounds like their experience with computers has involved them having a lot of "basic humanlike common sense" which is a pretty crazy experience in this case. When I explain what programming is like to kids, I usually say something like "The computer will do exactly exactly exactly what you tell it to, extremely fast. You can't rely on any basic sense checking or common sense, or understanding from it, if you can't define what you want specifically enough, the computer will fail in a (to you) very stupid way, very quickly."