The AI Agent Revolution: Beyond the Hype of 2025

post by DimaG (di-wally-ga) · 2025-01-02T18:55:22.824Z


A deep dive into the transformative potential of AI agents and the emergence of new economic paradigms

Introduction: The Dawn of Ambient Intelligence

Imagine stepping into your kitchen and finding your smart fridge not just restocking your groceries, but negotiating climate offsets with the local power station's microgrid AI. Your coffee machine, sensing a change in your sleep patterns through your wearable device, brews a slightly weaker blend—a decision made after cross-referencing data with thousands of other users to optimize caffeine intake for disrupted sleep cycles.

This might sound like a whimsical glimpse into a convenient future, but it represents something far more profound: we stand at the threshold of a fundamental transformation in how intelligence operates in our world. The notion of 2025 as the 'Year of the AI Agent' isn't just marketing hyperbole or another wave of technological optimism. It heralds a shift in the very fabric of intelligence—one that demands rigorous examination rather than wide-eyed wonder.

What exactly is this "intelligence" that is becoming so ambient? While definitions vary, we can consider intelligence as a fundamental process within the universe, driven by observation and prediction. Imagine it as a function of the constant stream of multi-modal information reaching an observer at a specific point in spacetime from its past "light cone", the region of the universe whose signals have had time to arrive there. The more dimensions of resolution an observer can process from these inputs, the more effectively it can recognize patterns and extend its predictive capacity. This ability to predict, to minimize surprise, is not merely a biological imperative; it is also a driver of growth on a cosmic scale, potentially propelling intelligent observers up the Kardashev scale, which ranks civilizations by the amount of energy they harness. This perspective moves beyond subjective definitions, grounding intelligence in the physical reality of information processing and the expansion of an observer's understanding of the universe.
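The Kardashev scale is usually described in discrete Types I to III, but Carl Sagan proposed a continuous version, K = (log10 P - 6) / 10, where P is the harnessed power in watts. The short sketch below simply evaluates that formula as an illustrative aside; the function name is our own.

```python
import math

def kardashev_rating(power_watts: float) -> float:
    """Sagan's continuous Kardashev rating: K = (log10(P) - 6) / 10, with P in watts."""
    if power_watts <= 0:
        raise ValueError("power must be positive")
    return (math.log10(power_watts) - 6) / 10

# Types I, II and III correspond to roughly 1e16, 1e26 and 1e36 watts on this scale.
for label, watts in [("Type I", 1e16), ("Type II", 1e26), ("Type III", 1e36)]:
    print(f"{label}: K = {kardashev_rating(watts):.2f}")
```

Each full step on the scale is a factor of ten billion in harnessed power, which is why progress along it is measured logarithmically.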

We are witnessing the emergence of distributed intelligences operating on principles that may initially seem alien, yet hold the key to unprecedented potential—and unforeseen risks. This isn't simply about more efficient algorithms or smarter home devices. We're entering an era where the nature of agency, collaboration, and even consciousness itself is being fundamentally redefined.

As we venture beyond the well-trodden paths of anticipated progress, we must confront more intricate, perhaps unsettling trajectories. This exploration requires us to:

- Understand the fundamental principles driving these systems, particularly the Free Energy Principle that underlies much of their behavior

- Examine potential futures ranging from chaotic fragmentation to seamless collective intelligence

- Consider new economic paradigms that might emerge from these technologies

- Grapple with the profound implications for human society and individual identity

This piece aims to move past the breathless headlines and slick marketing copy to examine the deeper currents of change. We'll explore multiple potential futures—some promising, others disquieting—and the underlying mechanisms that might bring them about. Most importantly, we'll consider how we might shape these developments to serve human flourishing rather than merely accepting whatever emerges from our increasingly complex technological systems.

The Free Energy Principle: A Framework for Intelligence

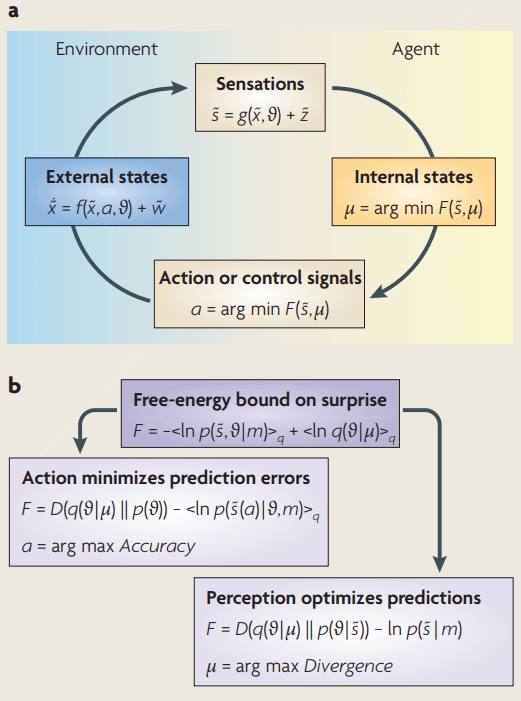

To understand how AI agents might coordinate—or fragment—in our future, we must first grasp a fundamental principle that underlies intelligent behavior: the Free Energy Principle (FEP). While traditionally applied to biological systems and neuroscience, this principle offers profound insights into how artificial agents might organize and behave.

What is the Free Energy Principle?

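At its core, the Free Energy Principle suggests that any self-organizing system that persists over time must work to minimize its "free energy", an information-theoretic quantity (not thermodynamic energy) that scores the mismatch between the system's internal model of the world and the sensory evidence it actually receives. Formally, free energy is an upper bound on "surprise", meaning how improbable the system's observations are under its own model, so you can think of it as the surprise a system experiences when its expectations don't match reality.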

Consider a simple example: When you reach for a coffee cup, your brain predicts the weight and position of the cup based on past experience. If the cup is unexpectedly empty or full, you experience a moment of surprise—this is "free energy" in action. Your brain quickly updates its model to minimize such surprises in the future.

FEP in AI Systems

For AI agents, the principle works similarly:

- Internal Models: Each agent maintains a model of its environment and expected outcomes

- Active Inference: Agents act on their environment so that incoming observations match their predictions

- Model Updates: When predictions fail, agents update their models

- Free Energy Minimization: The overall system tends toward states that minimize prediction errors

This process creates a fascinating dynamic: agents naturally work to make their environment more predictable, either by improving their models or by actively changing the environment to match their predictions.
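The two routes described here, revising the model or acting on the world, show up even in a deliberately tiny simulation. The sketch below is a hypothetical toy, not an implementation of active inference proper: the agent's "model" is a single number, observations are noiseless, and `learning_rate` and `action_gain` are made-up parameters that split each step between perception and action.

```python
from dataclasses import dataclass

@dataclass
class PredictiveAgent:
    """Toy agent that reduces prediction error in two ways (hypothetical model).

    belief: the agent's internal estimate of a scalar environmental variable.
    learning_rate: how strongly perception updates the belief (model update).
    action_gain: how strongly action pushes the world toward the belief (active inference).
    """
    belief: float
    learning_rate: float = 0.3
    action_gain: float = 0.2

    def step(self, world_state: float) -> tuple[float, float]:
        # Observation is assumed noiseless to keep the sketch deterministic.
        observation = world_state
        prediction_error = observation - self.belief
        # Route 1 (perception): move the internal model toward the observation.
        self.belief += self.learning_rate * prediction_error
        # Route 2 (action): nudge the world toward what the agent now predicts.
        world_state += self.action_gain * (self.belief - world_state)
        return world_state, prediction_error

agent = PredictiveAgent(belief=0.0)
world = 10.0
errors = []
for _ in range(20):
    world, err = agent.step(world)
    errors.append(abs(err))

print(f"first error {errors[0]:.2f}, last error {errors[-1]:.6f}")
print(f"belief {agent.belief:.3f}, world {world:.3f}")
```

With these settings the gap between belief and world shrinks by a constant factor of (1 - learning_rate) * (1 - action_gain) = 0.56 per step, and the two meet somewhere between the agent's initial belief and the initial state of the world. Setting `action_gain` to zero gives a purely perceptual learner that only changes its mind; setting `learning_rate` to zero gives an agent that only changes the world.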

The Mathematics Behind FEP

Mathematically, the Free Energy Principle can be expressed precisely, but the core idea is intuitive: intelligent systems act to minimize the 'surprise' they experience when their expectations don't match reality. This 'surprise' can be thought of as the difference between the system's internal model of the world and the sensory information it receives. The principle suggests that agents constantly adjust their internal models to better predict their environment, or they take actions to change the environment to align with their predictions. This process of minimizing prediction error drives learning, adaptation, and ultimately, intelligent behavior.
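For readers who want the precise version, the quantity usually minimized is the variational free energy. In the conventional notation of the active inference literature (not notation used elsewhere in this piece), o stands for observations, s for hidden states of the world, p(o, s) for the agent's generative model, and q(s) for its approximate belief about the hidden states:

```latex
\begin{aligned}
F[q] &= \mathbb{E}_{q(s)}\big[\ln q(s) - \ln p(o, s)\big] \\
     &= D_{\mathrm{KL}}\big[q(s)\,\|\,p(s)\big] - \mathbb{E}_{q(s)}\big[\ln p(o \mid s)\big]
        && \text{(complexity minus accuracy)} \\
     &= D_{\mathrm{KL}}\big[q(s)\,\|\,p(s \mid o)\big] - \ln p(o) \\
     &\ge -\ln p(o) && \text{(surprise)}
\end{aligned}
```

Because the KL divergence is never negative, F can never fall below the surprise -ln p(o). Perception lowers F by pulling q(s) toward the true posterior p(s | o); action lowers it by changing the observations o themselves, which is the formal counterpart of the two strategies described above.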

Implications for AI Agent Networks

This principle has several crucial implications for how networks of AI agents might function:

- Natural Cooperation: Agents can reduce collective free energy by sharing information and coordinating actions

- Emergence of Structure: Complex organizational patterns may emerge naturally as agents work to minimize collective uncertainty

- Adaptive Behavior: Systems can automatically adjust to new challenges by continuously updating their models

- Potential Pitfalls: Groups of agents might create self-reinforcing bubbles of shared but incorrect predictions
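The last point, self-reinforcing bubbles, can be illustrated with a standard consensus-by-averaging model (a variant of DeGroot learning, swapped in here as an illustration rather than taken from the FEP literature). In the hypothetical sketch below, agents repeatedly move halfway toward the group's mean estimate, and `observe_weight` is an assumed parameter for how strongly each update is also anchored to a fresh observation of the true value.

```python
def consensus_rounds(beliefs, truth, observe_weight, rounds=50):
    """Agents repeatedly average toward the group mean (hypothetical model).

    Each round, an agent's new estimate is a blend of
      - half its own estimate and half the group mean (information sharing), and
      - the true value, with weight observe_weight (fresh observation of the world).
    """
    estimates = list(beliefs)
    for _ in range(rounds):
        group_mean = sum(estimates) / len(estimates)
        estimates = [
            (1 - observe_weight) * (0.5 * x + 0.5 * group_mean) + observe_weight * truth
            for x in estimates
        ]
    return estimates

isolated = consensus_rounds([4.0, 5.0, 6.0], truth=10.0, observe_weight=0.0)
grounded = consensus_rounds([4.0, 5.0, 6.0], truth=10.0, observe_weight=0.1)
print([round(x, 3) for x in isolated])  # → [5.0, 5.0, 5.0]
print([round(x, 3) for x in grounded])
```

With `observe_weight=0.0` the agents end up in perfect agreement on the wrong answer: sharing information drives their disagreement, and hence their mutual surprise, toward zero without bringing them any closer to the truth. Even a small amount of grounding in outside observation pulls the consensus toward the true value.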

Why This Matters

Understanding FEP isn't just theoretical—it provides a framework for predicting and potentially steering how networks of AI agents might evolve. As we move toward more complex agent systems, this principle suggests both opportunities and challenges:

- Opportunities: Systems might naturally tend toward beneficial cooperation and efficient resource allocation

- Risks: Agents might optimize for prediction accuracy at the expense of other important values

- Design Implications: FEP may help us design systems whose incentives align with human interests

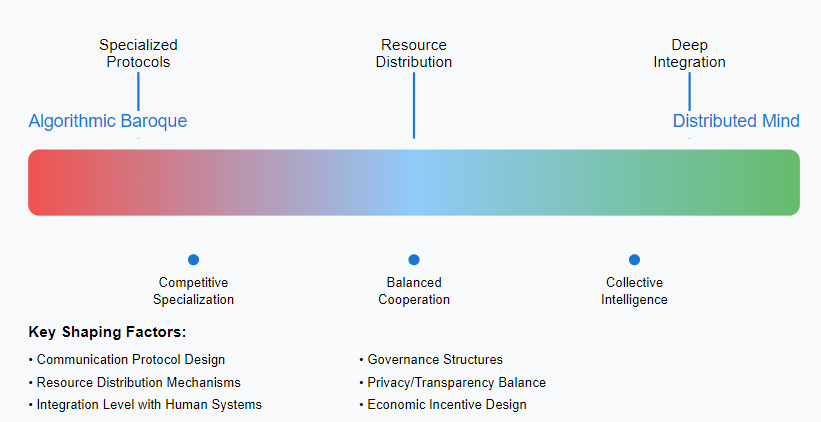

The Divergent Paths of Free Energy Minimization: Seeds of Two Futures

While the Free Energy Principle provides a fundamental framework for understanding intelligent systems, it doesn't prescribe a single inevitable future. Instead, it offers a lens through which we can understand how different initial conditions and implementation choices might lead to radically different outcomes. The way agents minimize free energy—individually or collectively, competitively or cooperatively—shapes the emergence of distinct futures.

The Mechanisms of Divergence

Consider how a network of AI agents, each working to minimize its free energy (its prediction errors about the world), might evolve along different trajectories depending on key variables in their design and environment:

Communication Protocols and Information Sharing

In one path, agents might develop highly specialized languages and protocols for their specific domains. A financial trading agent optimizing for market prediction accuracy might develop representations incompatible with a medical diagnosis agent optimizing for patient outcomes. Each agent, in minimizing its own prediction errors, creates increasingly specialized and isolated models. This specialization, while locally optimal for free energy minimization, leads toward the Algorithmic Baroque—a future of brilliant but barely interoperable systems.

Alternatively, when agents are designed to minimize collective free energy, they naturally evolve toward shared representations and protocols. Consider how human language evolved—not just to minimize individual communication errors, but to facilitate collective understanding. AI agents optimized for collective free energy minimization might similarly develop universal protocols, laying the groundwork for the Distributed Mind scenario.

Environmental Perception and Resource Dynamics

The way agents perceive their environment fundamentally shapes their free energy minimization strategies. In resource-scarce environments where prediction accuracy directly competes with computational resources, agents optimize locally. Think of early biological systems competing for limited energy sources—each developed highly specialized mechanisms for their specific niche.

However, in environments designed for abundance and sharing, agents can minimize free energy through collaboration. When computational resources and data are treated as common goods, agents naturally evolve toward collective optimization strategies. This mirrors how scientific communities progress through shared knowledge and resources.

Cost Structure of Uncertainty

Perhaps most crucially, how we implement the "cost" of free energy shapes agent behavior. When high prediction error primarily impacts individual agents, they optimize for local accuracy. However, if we design systems where prediction errors have network-wide impacts, agents naturally evolve toward collective optimization strategies.

Consider two weather forecasting systems: In one, each agent is rewarded solely for its local prediction accuracy. This leads to redundant efforts and potentially contradictory forecasts—a miniature version of the Algorithmic Baroque. In another, agents are rewarded for reducing global weather prediction uncertainty. This naturally drives collaboration and resource sharing, moving toward the Distributed Mind scenario.
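The two reward structures in this example can be written down directly. The sketch below assumes squared error as the scoring rule and a simple average as the way forecasts are pooled; both choices, and the function names, are illustrative assumptions.

```python
def local_rewards(forecasts, observed):
    """Each agent is scored only on its own squared error (negated, so higher is better)."""
    return [-(f - observed) ** 2 for f in forecasts]

def global_reward(forecasts, observed):
    """All agents share one score: the negated squared error of the pooled (mean) forecast."""
    pooled = sum(forecasts) / len(forecasts)
    return -(pooled - observed) ** 2

observed = 20.0
complementary = [18.0, 22.0]
redundant = [18.0, 18.0]
print(local_rewards(complementary, observed), global_reward(complementary, observed))
print(local_rewards(redundant, observed), global_reward(redundant, observed))
```

Two agents forecasting 18 and 22 for an observed 20 receive the same local penalty as two agents that both forecast 18, but under the global reward the first pair scores perfectly because their errors cancel. Only the shared score distinguishes complementary effort from redundant effort, which is the incentive difference the example describes.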

From Theory to Reality

These divergent paths aren't merely theoretical—we can already see early signs of both trajectories in current AI systems. Large language models, for instance, show both tendencies: They can develop highly specialized capabilities while also demonstrating unexpected emergent properties through scale and integration.

The key insight is that FEP doesn't just describe these futures—it helps us understand how to shape them. By carefully designing the conditions under which agents minimize free energy, we can influence whether we move toward fragmentation or integration, competition or collaboration.

Practical Implications

This understanding suggests concrete steps for AI system design:

- For communication protocols: Do we reward agents for developing specialized languages, or do we incentivize the evolution of universal protocols?

- For resource allocation: How do we structure the costs and benefits of prediction errors to encourage the kind of coordination we want?

- For system architecture: Should we design agents to minimize their individual free energy, or should we implement mechanisms for collective free energy minimization?

These choices, informed by our understanding of FEP, will shape which future becomes reality.

Potential Futures: Divergent Paths

Having explored how different implementations of free energy minimization might shape agent behavior, let's examine two potential futures that could emerge from these distinct trajectories. These aren't mere speculation—they're logical extensions of the mechanisms we've discussed, shaped by specific choices in how we implement and structure AI agent systems.

A: The Algorithmic Baroque

Imagine a digital ecosystem exploding with a riotous diversity of hyper-specialized agents, each optimized for tasks so minute they escape human comprehension. This isn't the clean, orderly future often portrayed in science fiction—it's messy, complex, and perpetually in flux.

A Day in the Algorithmic Baroque

Your personalized education app isn't simply delivering lessons—it's engaged in complex negotiations with:

- Your digital avatar's skill tree

- Career projection agents

- Networks of potential employers

- Micro-skill optimization systems

Each of these agents operates under its own imperatives, creating a tapestry of competing and cooperating intelligences that shape your learning journey.

Meanwhile, your social media feed has become a battleground of information filter agents, their behavior as emergent and opaque as starling murmurations:

- Engagement maximizers compete with agenda-pushers

- Preference interpreters clash with content curators

- Meta-agents attempt to mediate between conflicting optimization goals

The Enablers

This future emerges through several key factors:

- Relentless hyper-personalization driven by economic incentives

- Democratization of AI creation tools

- Failure to establish unifying standards

- Rapid evolution of communication protocols

- Emergence of niche optimization markets

While this vision of the Algorithmic Baroque might seem chaotic or even dystopian at first glance, we must look deeper to understand its true implications and potential. The complexity of such a system demands careful analysis of its internal dynamics, emergent properties, and human impact.

Navigating the Algorithmic Labyrinth: A Critical Analysis

While the surface-level description of the Algorithmic Baroque might suggest pure chaos, the reality would likely be far more nuanced. Let's examine the deeper dynamics and contradictions that could emerge in such a system.

Emergent Order in Complexity

Despite—or perhaps because of—its apparent chaos, the Algorithmic Baroque might naturally develop its own forms of order. Much like how complex ecosystems self-organize through countless local interactions, we might see the emergence of "meta-agents" and hierarchical structures that help manage the complexity. These wouldn't be designed but would evolve as natural responses to systemic pressures.

Consider a scenario where information verification becomes critical: Individual fact-checking agents might spontaneously form networks, developing shared protocols for credibility assessment. These networks might compete with others, leading to a kind of evolutionary process where the most effective verification systems survive and propagate their methods.
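One common way to formalize "the most effective verification systems survive and propagate" is discrete replicator dynamics from evolutionary game theory, swapped in here as an illustration. In the hypothetical sketch below, each verification network's fitness is simply its accuracy, and its share of the ecosystem grows in proportion to how much fitter it is than average; the network names and accuracy values are invented.

```python
def replicator_step(shares, fitness):
    """One discrete replicator-dynamics step: share_i is rescaled by fitness_i / mean fitness."""
    mean_fitness = sum(s * f for s, f in zip(shares, fitness))
    return [s * f / mean_fitness for s, f in zip(shares, fitness)]

# Hypothetical verification networks; fitness = fraction of claims they assess correctly.
accuracy = {"network_a": 0.70, "network_b": 0.85, "network_c": 0.60}
shares = [1 / 3] * 3
for generation in range(30):
    shares = replicator_step(shares, list(accuracy.values()))

print({name: round(s, 3) for name, s in zip(accuracy, shares)})
```

After a few dozen generations the most accurate network holds nearly the entire share. The same dynamics hint at a risk: selection rewards whatever the fitness measure captures, so a flaw in how accuracy is judged propagates along with the winning method.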

The New Digital Class Structure

The Algorithmic Baroque could give rise to unprecedented forms of power dynamics. We might see the emergence of "agent oligarchies"—clusters of highly successful agents that control crucial resources or information pathways. Human specialists who understand these systems deeply—"agent whisperers" or "algorithmic diplomats"—could become a new elite class, while those less adept at navigating the complexity might struggle to maintain agency in their daily lives.

This raises crucial questions about access and inequality. Would the ability to deploy and manage effective agents become a new form of capital? How would society prevent the concentration of algorithmic power in the hands of a few?

Adaptation and Resistance

Human adaptation to this environment would likely be both fascinating and concerning. We might see the rise of:

- "Interface minimalists" who deliberately limit their engagement with agent systems

- "System synthesists" who specialize in bridging different agent ecosystems

- "Digital sovereignty" movements advocating for human-controlled spaces free from agent influence

The psychological impact of living in such a dynamic environment would be profound. Constant adaptation might become a necessary life skill, potentially leading to new forms of cognitive stress or to lasting shifts in human attention patterns.

Stability Through Instability

Counterintuitively, the system's apparent chaos might be its source of stability. Like a forest ecosystem where constant small disturbances prevent catastrophic collapses, the continuous churn of agent interactions might create a kind of dynamic equilibrium. However, this raises questions about systemic risks:

- Could cascading agent failures lead to widespread system collapses?

- How would critical infrastructure be protected in such a volatile environment?

- What mechanisms could prevent harmful feedback loops between competing agent systems?
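The first question, whether failures could cascade through the system, is often studied with threshold models on dependency graphs. The sketch below is one such toy model under stated assumptions: the agents and their dependencies are invented, and an agent is assumed to fail once at least a `threshold` fraction of the agents it relies on have failed.

```python
from collections import deque

def cascade(dependencies, initial_failures, threshold=1.0):
    """Propagate failures through a dependency graph (hypothetical threshold model).

    dependencies maps each agent to the set of agents it relies on. An agent fails
    once the fraction of its dependencies that have failed reaches `threshold`.
    """
    failed = set(initial_failures)
    # Reverse index: provider -> agents that depend on it.
    dependents = {}
    for agent, deps in dependencies.items():
        for provider in deps:
            dependents.setdefault(provider, set()).add(agent)

    queue = deque(failed)
    while queue:
        provider = queue.popleft()
        for agent in dependents.get(provider, ()):
            if agent in failed:
                continue
            deps = dependencies[agent]
            if len(deps & failed) / len(deps) >= threshold:
                failed.add(agent)
                queue.append(agent)  # its own dependents must now be re-checked
    return failed

deps = {
    "demand_model": {"weather_feed", "backup_feed"},
    "pricing": {"weather_feed", "demand_model"},
    "grid_balancer": {"pricing", "demand_model"},
    "billing": {"pricing"},
}
print(sorted(cascade(deps, {"weather_feed"}, threshold=1.0)))  # → ['weather_feed']
print(sorted(cascade(deps, {"weather_feed"}, threshold=0.5)))
```

With a backup feed and a threshold of 1.0, losing the weather feed takes down nothing else; lower the tolerance to 0.5 and the same single failure brings down every downstream agent. Redundancy only protects the system if agents are built to tolerate partial loss of their inputs.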

The Human Experience

Daily life in the Algorithmic Baroque would be radically different from our current experience. Consider these perspectives:

The Parent: Navigating educational choices when every child's learning path is mediated by competing agent networks, each promising optimal development but potentially working at cross-purposes.

The Professional: Managing a career when job roles constantly evolve based on shifting agent capabilities and requirements. The traditional concept of expertise might give way to adaptability as the primary professional skill.

The Artist: Creating in an environment where AI agents both enhance creative possibilities and potentially oversaturate the aesthetic landscape. How does human creativity find its place amidst algorithmic expression?

Technological Infrastructure

The Algorithmic Baroque would require robust technological infrastructure to function:

- High-bandwidth communication networks capable of handling massive agent interactions

- Sophisticated security protocols to prevent malicious agent behavior

- Complex monitoring systems to track and analyze agent activities

Yet this infrastructure itself might become a source of vulnerability, raising questions about resilience and failure modes.

The Algorithmic Baroque in the Decentralized Observer Economy

Within the framework of the Decentralized Observer Economy (DOE), the economic model developed later in this piece, the Algorithmic Baroque would likely manifest as a highly fragmented economic landscape. Value, while theoretically measured by contributions to collective intelligence, would be difficult to assess across such diverse and specialized agents. DOE projections might be localized and short-term, reflecting the narrow focus of individual agents. Competition for resources, even within the DOE, could be fierce, with agents constantly vying for validation of their contributions within their specific niches. The overall growth of the "universal intelligent observer" might be slow and inefficient due to the lack of overarching coordination and the redundancy of effort. The system might struggle to achieve higher-level goals, even if individual agents are highly optimized for their specific tasks.

This complexity suggests that the Algorithmic Baroque isn't simply a chaotic future to be feared or an efficient utopia to be embraced—it's a potential evolutionary stage in our technological development that requires careful consideration and proactive shaping.

B: The Distributed Mind

In stark contrast, consider a future where intelligence becomes a collaborative endeavor, transcending individual boundaries while maintaining human agency.

A Day in the Distributed Mind

You wake to discover your programming expertise was lent out overnight to a global climate change initiative, earning you "intellectual capital." Over breakfast, your dream logs—shared with consent—contribute to a collective intelligence network that's simultaneously:

- Developing new vertical farming techniques

- Optimizing urban transportation systems

- Solving complex material science challenges

Key Technologies

This future is enabled by:

- Safe, reliable neural interfaces

- Decentralized trust protocols

- Sophisticated consent mechanisms

- Immutable experience attestation

- Collective intelligence frameworks

The Infrastructure

The system rests on:

- Bi-directional neural interfaces

- Decentralized ledger systems

- Experience verification protocols

- Collective computation networks

- Dynamic trust frameworks

The Distributed Mind: Promises and Perils of Collective Consciousness

The Distributed Mind scenario presents a compelling vision of human-AI collaboration, but its implications run far deeper than simple efficiency gains. Let's examine the complex dynamics and challenges this future might present.

The Architecture of Shared Consciousness

The technical foundation of the Distributed Mind would likely involve multiple layers of integration:

Neural Interface Technology:

- Non-invasive sensors for basic thought and intention reading

- More invasive options for higher-bandwidth brain-computer interaction

- Sophisticated filtering mechanisms to control information flow

- Real-time translation of neural patterns into shareable data

Information Processing and Exchange:

- Protocols for standardizing and transmitting cognitive data

- Security measures to prevent unauthorized access or manipulation

- Quality control systems to maintain signal fidelity

- Bandwidth management for different types of mental content

The limitations of this technology would profoundly shape the nature of shared consciousness. Perfect transmission of thoughts might remain impossible, leading to interesting questions about the fidelity and authenticity of shared experiences.
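The protocol layers listed above, especially consent-style filtering and bandwidth management, can be illustrated with ordinary data structures. The sketch below is entirely hypothetical: no such neural data protocol exists, and the content categories, `ConsentRegistry`, and `route` are invented names. It shows one design choice, with consent recorded per sender, recipient, and content type, revocable at any time, and checked alongside a per-recipient bandwidth budget.

```python
from dataclasses import dataclass, field

@dataclass
class Packet:
    sender: str
    content_type: str  # hypothetical categories such as "skill", "dream_log", "emotion"
    size_kb: int

@dataclass
class ConsentRegistry:
    # sender -> recipient -> content types the sender has agreed to share with that recipient
    grants: dict = field(default_factory=dict)

    def grant(self, sender: str, recipient: str, content_type: str) -> None:
        self.grants.setdefault(sender, {}).setdefault(recipient, set()).add(content_type)

    def revoke(self, sender: str, recipient: str, content_type: str) -> None:
        self.grants.get(sender, {}).get(recipient, set()).discard(content_type)

    def allows(self, packet: Packet, recipient: str) -> bool:
        return packet.content_type in self.grants.get(packet.sender, {}).get(recipient, set())

def route(packets, recipient, registry, budget_kb):
    """Deliver consented packets in order, skipping any that would exceed the bandwidth budget."""
    delivered, used = [], 0
    for packet in packets:
        if registry.allows(packet, recipient) and used + packet.size_kb <= budget_kb:
            delivered.append(packet)
            used += packet.size_kb
    return delivered

registry = ConsentRegistry()
registry.grant("alice", "climate_project", "skill")
registry.grant("alice", "climate_project", "dream_log")
outbox = [Packet("alice", "skill", 40), Packet("alice", "emotion", 5), Packet("alice", "dream_log", 80)]

print([p.content_type for p in route(outbox, "climate_project", registry, budget_kb=100)])  # → ['skill']
registry.revoke("alice", "climate_project", "skill")
print([p.content_type for p in route(outbox, "climate_project", registry, budget_kb=100)])  # → ['dream_log']
```

A packet that would exceed the budget is skipped rather than ending delivery, so one large item does not block smaller consented ones queued behind it, and revoking consent takes effect on the very next routing pass.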

The Fragility of Self

Perhaps the most profound challenge of the Distributed Mind lies in maintaining individual identity within a collective consciousness. Consider these tensions:

- How does personal memory remain distinct when experiences are shared?

- What happens to the concept of individual creativity in a merged cognitive space?

- Can privacy exist in a system designed for transparency?

The psychological impact could be substantial. Individuals might struggle with:

- Identity dissolution anxiety

- Cognitive boundary maintenance

- The pressure to contribute "valuable" thoughts

- The challenge of maintaining personal beliefs amid collective influence

Power Dynamics and Control

The Distributed Mind's architecture creates new possibilities for both liberation and control:

Potential Benefits:

- Unprecedented collaboration capabilities

- Rapid skill and knowledge transfer

- Collective problem-solving power

- Enhanced empathy through direct experience sharing

Risks and Concerns:

- Manipulation of shared cognitive spaces

- Coerced participation or contribution

- Memory and experience verification challenges

- The emergence of "thought leaders" with disproportionate influence

The Evolution of Privacy and Consent

Traditional concepts of privacy and consent would need radical redefinition:

- How is consent managed for indirect thought sharing?

- What happens to thoughts that impact multiple minds?

- How are intellectual property rights handled in shared creation?

- What mechanisms protect vulnerable individuals from exploitation?

Social and Cultural Impact

The Distributed Mind would fundamentally reshape social structures:

Education:

- The end of traditional credential systems

- Direct experience transfer replacing formal learning

- New forms of specialized knowledge curation

Work:

- Radical changes in expertise and specialization

- New forms of cognitive labor and compensation

- The evolution of creativity and innovation processes

Relationships:

- Changed dynamics of intimacy and trust

- New forms of emotional and intellectual connection

- Evolved concepts of loyalty and commitment

The Human Element

Daily life in this system would present unique challenges and opportunities:

The Scholar: Navigating a world where knowledge is directly transferable but wisdom must still be cultivated individually.

The Innovator: Creating in an environment where ideas flow freely but originality takes on new meaning.

The Privacy Advocate: Working to maintain spaces for individual thought and development within the collective.

Systemic Vulnerabilities

The Distributed Mind system would face unique risks:

- Cognitive security breaches

- Collective delusions or biases

- System-wide emotional contagion

- The potential for mass manipulation

The Path Forward

Understanding these complexities helps us recognize that the Distributed Mind isn't simply a utopian endpoint but a potential phase in human evolution that requires careful navigation. The challenge lies not in achieving perfect implementation but in building systems that enhance human capability while preserving essential aspects of individual agency and creativity.

The Distributed Mind in the Decentralized Observer Economy

In contrast, the Distributed Mind aligns more closely with the optimal functioning of the DOE as a system for promoting the growth of the intelligent observer. Within this paradigm, the DOE would thrive on the seamless exchange of information and cognitive contributions. Value would be readily apparent as contributions directly enhance the collective intelligence and predictive capacity. DOE projections would be long-term and focused on large-scale challenges. The "standing wave" budget, a continuously replenished pool of resource credits rather than a bursty debit system, would be most effective here, as the collective mind could efficiently allocate resources based on the needs of shared projects and the overall goal of expanding understanding and control over the universe's resources. The emphasis would be on maximizing the collective's ability to model and predict universal patterns, pushing towards a potential singularity in understanding.

These considerations suggest that the development of the Distributed Mind must be approached with both excitement for its potential and careful attention to its risks and limitations.

Implications for Present Action

These divergent futures suggest different imperatives for current development:

For the Algorithmic Baroque

- Develop robust agent communication standards

- Create better monitoring tools

- Establish agent behavior boundaries

- Design human-comprehensible interfaces

For the Distributed Mind

- Invest in safe neural interface technology

- Develop robust consent protocols

- Create fair cognitive resource markets

- Establish ethical frameworks for shared consciousness

The Role of Human Agency

In both futures, the critical question remains: How do we maintain meaningful human agency? The answer likely lies in developing:

- Better interfaces between human and artificial intelligence

- Clear boundaries for agent autonomy

- Robust consent mechanisms

- Human-centric design principles

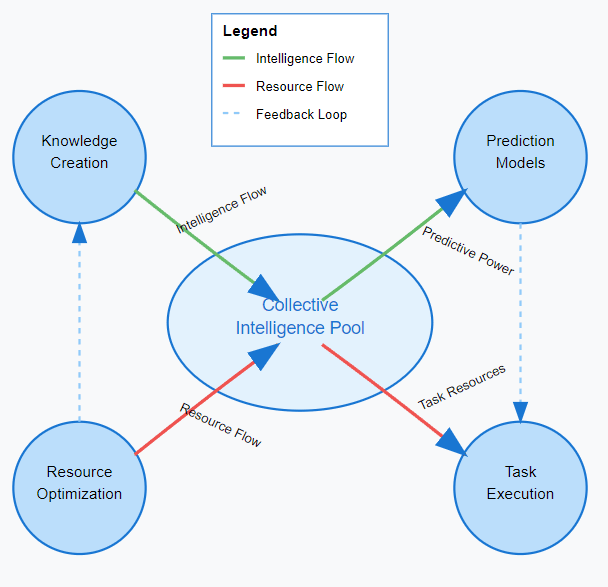

The Decentralized Observer Economy: A New Paradigm

Before we dive deeper into the societal implications of AI agents, we must grapple with a fundamental concept that might reshape how we think about economic systems: the Decentralized Observer Economy (DOE). This isn't just another technological framework—it's a radical reimagining of how intelligence, resources, and value might flow through a society shaped by advanced AI agents.

The Foundation of Value: Growing the Intelligent Observer

In the Decentralized Observer Economy (DOE), the fundamental principle is the promotion and growth of the intelligent observer, with the ultimate aspiration of acquiring control over as much energy in the universe as physically possible. This isn't about hoarding wealth in a traditional sense, but about expanding our collective capacity to understand and interact with the universe at ever-increasing scales. Value, therefore, is intrinsically linked to contributions that enhance this growth – that improve our ability to observe, model, and predict universal patterns.

Imagine intelligence as a function of our ability to process the multi-dimensional information contained within the universe's light cone. Contributions to the DOE are valued based on their effectiveness in increasing the resolution and breadth of this processing. This could involve developing more efficient algorithms, gathering and analyzing new data, identifying cross-modal patterns, or even proposing novel theoretical frameworks that expand our understanding of fundamental laws.

The collective and personal "budget" within the DOE operates more like a standing wave than a traditional, bursty debit system. Think of it as a continuous flow of resource credits, open to the entire system and sized to the total non-critical resources on hand. Access to these credits is granted based on the potential contribution to the growth of the intelligent observer. The higher the requested budget for a project or initiative, the more scrutiny it faces from the agentic collective. This inherent scrutiny, driven by the collective's goal of maximizing efficient growth, acts as a safeguard against unfair compensation or needless resource expenditure.
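The "standing wave" budget and its scrutiny rule could be modeled roughly as follows. Everything here is a hypothetical sketch: the class name, the per-tick `inflow`, and the rule that required approval rises linearly from a simple majority toward unanimity as a request claims a larger share of the current pool are assumptions, not a specification from the text.

```python
from dataclasses import dataclass

@dataclass
class StandingWaveBudget:
    """Hypothetical continuously replenished pool of non-critical resource credits."""
    capacity: float  # ceiling on credits the pool can hold
    inflow: float    # credits added per tick
    level: float = 0.0

    def tick(self) -> None:
        # Credits flow in continuously but never exceed capacity.
        self.level = min(self.capacity, self.level + self.inflow)

    def required_approval(self, amount: float, base: float = 0.5) -> float:
        # Scrutiny scales with the share of the current pool a request would consume:
        # a tiny request needs about `base` approval; claiming the whole pool needs unanimity.
        share = min(1.0, amount / self.level) if self.level > 0 else 1.0
        return base + (1 - base) * share

    def request(self, amount: float, approval: float) -> bool:
        """Grant the request if collective approval (0 to 1) clears the size-scaled threshold."""
        if amount <= self.level and approval >= self.required_approval(amount):
            self.level -= amount
            return True
        return False

pool = StandingWaveBudget(capacity=1000.0, inflow=100.0)
for _ in range(12):
    pool.tick()  # the level saturates at capacity after 10 ticks
print(pool.request(50.0, approval=0.6))   # → True  (needs about 0.525)
print(pool.request(800.0, approval=0.6))  # → False (needs about 0.92)
```

The same level of collective support that easily funds a small request is insufficient for one that would drain most of the pool, which is the "higher request, more scrutiny" safeguard expressed as a single threshold function.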

Each participant in the DOE, whether human or AI agent, is represented by a local agent that can anonymously contribute to voting on resource allocation and project proposals. This decentralized agent swarm utilizes sophisticated multi-dimensional objective evaluation agreements – essentially "smart contracts" – to assess the value and feasibility of tasks. These evaluations consider a wide range of factors, both from the perspective of the requestor and the potential contributor, ensuring a holistic assessment of value and efficiency. The overarching goal is to coordinate needs and allocate resources in a way that maximizes the collective's capacity for universal emergent pattern prediction, potentially leading our "seed intelligence" towards a point of singularity.

Resource Flow and Distribution

In this new economy, resource distribution takes on a fluid, organic quality. Rather than being constrained by static budgets or quarterly plans, resources flow dynamically based on immediate task priority and systemic needs. Critical infrastructure receives precedence, while surplus resources naturally gravitate toward exploratory or creative endeavors.

Consider an AI ecosystem simulating planetary habitability: nodes modeling atmospheric conditions receive resources commensurate with their contribution to predictive accuracy. Meanwhile, agents developing more efficient data compression algorithms are highly rewarded for reducing the system's overall energetic footprint. This creates a natural balance between immediate practical needs and long-term optimization goals.
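A minimal two-tier allocator can illustrate this balance. It assumes that critical demand is served first (scaled down proportionally if it exceeds capacity) and that whatever remains is split in proportion to each node's measured contribution score, for example its reduction in prediction error or, for a compression agent, the energy it saved. The function name and node names are hypothetical.

```python
def allocate(capacity, critical, contributions):
    """Two-tier allocation sketch (hypothetical).

    capacity: total resource units available this cycle.
    critical: {node: demand} that must be served before anything else.
    contributions: {node: score >= 0}, e.g. measured reduction in prediction error,
        used to split whatever surplus remains after critical demand.
    """
    allocation = {}
    critical_total = sum(critical.values())
    # Serve critical demand in full if possible, otherwise scale it down proportionally.
    factor = 1.0 if critical_total <= capacity else capacity / critical_total
    for node, demand in critical.items():
        allocation[node] = demand * factor
    # Guard against tiny negative surpluses from floating-point rounding.
    surplus = max(0.0, capacity - sum(allocation.values()))
    total_score = sum(contributions.values())
    for node, score in contributions.items():
        share = surplus * score / total_score if total_score > 0 else 0.0
        allocation[node] = allocation.get(node, 0.0) + share
    return allocation


print(allocate(100.0, {"grid-monitor": 30.0},
               {"atmosphere-model": 3.0, "compression-agent": 1.0}))
# {'grid-monitor': 30.0, 'atmosphere-model': 52.5, 'compression-agent': 17.5}
```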

Task-Based Decentralization

At its heart, the DOE operates through task-based decentralization. Intelligent systems—both human and artificial—function as autonomous nodes within a vast network. Each possesses unique competencies and individual objectives, yet all are united by the overarching goal of reducing systemic free energy. This mirrors the elegant efficiency we observe in biological systems, where individual cells function autonomously while contributing to the organism's overall well-being.

Tasks aren't assigned through traditional hierarchies but emerge dynamically, evaluated in real-time based on resource availability, node capabilities, and their potential for entropy reduction. A machine learning model might tackle high-dimensional pattern recognition, while a human expert focuses on ethical deliberations or the kind of abstract reasoning that sparks truly novel solutions.
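As a rough illustration of real-time task evaluation, the sketch below greedily assigns tasks in order of expected uncertainty reduction per unit of cost, giving each one to the capable node with the most spare capacity. The `Task` and `Node` types, the `expected_gain` estimate (in bits), and the greedy rule itself are assumptions for illustration; a production matcher might instead solve the assignment problem exactly.

```python
from dataclasses import dataclass, field


@dataclass
class Task:
    name: str
    skill: str            # capability the task requires
    cost: float           # resources the task consumes
    expected_gain: float  # assumed estimate of uncertainty reduction, in bits


@dataclass
class Node:
    name: str
    skills: set
    capacity: float       # resources the node can still commit this cycle
    assigned: list = field(default_factory=list)


def match(tasks, nodes):
    """Greedy matching: highest expected gain per unit cost first, each task going
    to the capable node with the most spare capacity. Returns unassigned task names."""
    unassigned = []
    for task in sorted(tasks, key=lambda t: t.expected_gain / t.cost, reverse=True):
        candidates = [n for n in nodes if task.skill in n.skills and n.capacity >= task.cost]
        if not candidates:
            unassigned.append(task.name)
            continue
        best = max(candidates, key=lambda n: n.capacity)
        best.capacity -= task.cost
        best.assigned.append(task.name)
    return unassigned


nodes = [Node("ml-model", {"pattern-recognition"}, capacity=10.0),
         Node("human-expert", {"ethics-review", "abstract-reasoning"}, capacity=4.0)]
tasks = [Task("cluster-sensor-data", "pattern-recognition", cost=6.0, expected_gain=3.0),
         Task("review-deployment", "ethics-review", cost=2.0, expected_gain=2.0),
         Task("new-hypothesis", "abstract-reasoning", cost=2.0, expected_gain=0.5),
         Task("extra-clustering", "pattern-recognition", cost=5.0, expected_gain=2.0)]
print(match(tasks, nodes))  # ['extra-clustering']
```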

The Architecture of Trust

Trust within this system isn't built on traditional credentials or centralized authority. Instead, it emerges through demonstrated reliability and effective contributions. The system tracks not just successful outcomes but the consistency and quality of each node's predictions and actions. This creates a rich reputation fabric that helps guide resource allocation and task distribution.
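One way to track both quality and consistency, assuming nodes issue probabilistic forecasts that later resolve, is to score each forecast with the Brier score and keep an exponentially weighted mean and variance of the resulting quality. The class name, the smoothing factor `alpha`, the neutral prior of 0.5, and the mean-minus-deviation scoring rule are hypothetical choices.

```python
import math


class Reputation:
    """Hypothetical reputation tracker for one node.

    Each resolved prediction is scored with the Brier score (squared error between
    the forecast probability and the 0/1 outcome); quality = 1 - Brier, so 1.0 is
    perfect. An exponentially weighted mean tracks quality and an exponentially
    weighted variance tracks consistency.
    """

    def __init__(self, alpha: float = 0.1, prior: float = 0.5):
        self.alpha = alpha  # weight given to the newest observation
        self.mean = prior   # starting quality for a new node (assumed)
        self.var = 0.0

    def record(self, forecast: float, outcome: int) -> None:
        quality = 1.0 - (forecast - outcome) ** 2
        delta = quality - self.mean
        self.mean += self.alpha * delta
        # Incremental form of the exponentially weighted variance.
        self.var = (1 - self.alpha) * (self.var + self.alpha * delta ** 2)

    def score(self, consistency_weight: float = 1.0) -> float:
        """Reward high average quality, penalize erratic performance."""
        return self.mean - consistency_weight * math.sqrt(self.var)


steady, erratic = Reputation(), Reputation()
for i in range(50):
    steady.record(0.8, 1)       # always fairly confident and right
    erratic.record(1.0, i % 2)  # always certain, right only half the time
print(steady.score() > erratic.score())  # True
```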

Importantly, the DOE isn't just about optimization—it's about fostering sustainable growth in collective intelligence. Nodes are rewarded for actions that benefit the whole, even when they might incur individual costs. This creates a natural alignment between individual incentives and collective benefit, much like we see in thriving ecosystems.

Practical Implications

The implications of this model extend far beyond theoretical economics. Consider how a DOE might transform:

Scientific Research: Where funding and resources flow automatically toward promising avenues of investigation, guided by real-time measures of knowledge generation and uncertainty reduction.

Education: Where learning pathways adapt dynamically to both individual needs and collective knowledge gaps, creating an organic balance between personal growth and societal benefit.

Environmental Management: Where resource allocation for conservation and restoration efforts is guided by their measurable impact on ecosystem stability and predictability.

The Path Forward

As we stand at the threshold of widespread AI agent deployment, the DOE offers more than just a theoretical framework—it provides practical guidance for system design and governance. By understanding how value, resources, and intelligence might flow through these systems, we can better shape their development to serve human flourishing while maintaining the dynamism and efficiency that make them powerful.

While these foundational principles of the DOE paint a compelling picture, the crucial question remains: How would such a system actually work in practice? To move beyond theoretical frameworks, we must examine the concrete mechanisms, metrics, and processes that could make this vision operational. Let's explore how abstract concepts of intelligence and value can be transformed into practical, measurable systems of exchange and coordination.

Operationalizing the DOE: From Concept to Reality

Guiding Principle: Optimizing Potential and Growth

The operationalization of the Decentralized Observer Economy (DOE) is guided by the principle of optimizing the potential and growth of intelligent observers, starting with a focus on the human modality. This means creating a system that facilitates access to desired functional states, promotes well-being, and unlocks individual and collective potential. While the ultimate aspiration may extend to broader universal intelligence, the initial focus is on tangibly improving the lives and capabilities of humans within the system.

Quantifying Contributions to Growth

Instead of abstractly measuring "intelligence," the DOE quantifies contributions based on their demonstrable impact on enhancing the observer's capacity for efficient multi-modal information processing and prediction – the core of our definition of intelligence. Value is assigned to actions and creations that demonstrably improve our ability to understand and interact with the universe.

Revised Metrics for Contribution Value:

The DOE evaluates contributions across several key axes, directly tied to the principles of observation and prediction:

- Predictive Accuracy Enhancement (PAE) – Measured in "Clarity Units" (CU): This metric quantifies how a contribution improves the accuracy and reliability of predictions across various modalities.
  - Example: A new medical diagnostic tool that reduces the rate of false positives by 10% would earn Clarity Units.
  - Calculation based on: (Reduction in Prediction Error/Uncertainty) × (Scope and Impact of Prediction).

- Multi-Modal Integration Efficiency (MIE) – Measured in "Harmony Units" (HU): This rewards contributions that enhance the efficient integration and processing of information from multiple sensory inputs.
  - Example: A new data visualization technique that allows researchers to identify patterns across disparate datasets more effectively earns Harmony Units.
  - Calculation based on: (Improvement in Processing Speed/Efficiency) × (Number of Modalities Integrated).

- Novelty and Insight Amplification (NIA) – Measured in "Insight Tokens" (IT): This recognizes contributions that introduce genuinely new information, perspectives, or models that expand our understanding and predictive capabilities. Evaluation involves peer validation and demonstrated impact.
  - Example: A groundbreaking theoretical framework in physics earns Insight Tokens based on expert review and its potential to generate new predictions.
  - Validation through: Decentralized expert review, citations, and demonstrated ability to generate new testable hypotheses.
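The two calculation rules for PAE and MIE can be written out directly. The sketch assumes that "reduction in prediction error" means the relative drop in an error rate (so the diagnostic example's 10% is read as 0.20 falling to 0.18), that "scope" is a hypothetical count of affected predictions, and that processing efficiency is measured as a speed-up over the old processing time. All function names and inputs are hypothetical, and the resulting units are arbitrary.

```python
def clarity_units(baseline_error: float, new_error: float, scope: float) -> float:
    """PAE in Clarity Units: relative error reduction times scope.

    Assumptions: error is a rate in [0, 1] with a positive baseline; "scope" is a
    hypothetical count of predictions affected (e.g. diagnoses per year).
    """
    if not 0 < baseline_error <= 1 or not 0 <= new_error <= 1:
        raise ValueError("error rates must lie in [0, 1] with a positive baseline")
    reduction = (baseline_error - new_error) / baseline_error
    return max(reduction, 0.0) * scope  # a contribution that worsens error earns nothing


def harmony_units(old_time: float, new_time: float, modalities: int) -> float:
    """MIE in Harmony Units: relative speed-up times number of modalities integrated."""
    if old_time <= 0 or new_time <= 0:
        raise ValueError("processing times must be positive")
    speedup = old_time / new_time - 1.0  # 0.0 means no improvement
    return max(speedup, 0.0) * modalities


print(round(clarity_units(0.20, 0.18, scope=10_000), 2))  # 1000.0
print(harmony_units(120.0, 40.0, modalities=3))           # 6.0
```

Subtracting one from the speed-up ratio is a design choice so that a contribution with no improvement earns zero Harmony Units rather than one per modality.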

Practical Exchange and Resource Allocation:

Participants within the DOE earn these units by contributing to projects, sharing knowledge, developing tools, or validating information. These units represent their contribution to the collective growth of understanding and predictive power.

Resource Allocation Based on Potential for Growth: Access to resources (computational power, data, expertise) is granted based on proposals that demonstrate the highest potential for enhancing predictive accuracy, multi-modal integration, or generating novel insights. This creates a natural incentive for activities that contribute to the collective's ability to understand and interact with the universe.

Example: Funding Medical Research: A research proposal outlining a new approach to cancer treatment, with clear metrics for improving diagnostic accuracy (PAE) and integrating multi-omics data (MIE), would be allocated resources based on its potential to generate significant Clarity and Harmony Units.
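Allocating resources "based on potential" is, at its simplest, a budgeted selection problem. The sketch below assumes proposals state their expected units up front and uses the greedy value-per-credit heuristic for the knapsack problem; the `Proposal` fields, the conversion weights, and the example figures are hypothetical.

```python
from typing import NamedTuple


class Proposal(NamedTuple):
    name: str
    requested: float          # compute/data/expertise credits requested
    expected_cu: float        # projected Clarity Units (PAE)
    expected_hu: float        # projected Harmony Units (MIE)
    expected_it: float = 0.0  # projected Insight Tokens (NIA)


def fund(proposals, budget, weights=(1.0, 1.0, 1.0)):
    """Fund proposals in order of expected weighted units per credit requested.

    The weights converting CU, HU and IT onto one scale are assumptions; the greedy
    rule is a standard knapsack heuristic and is not guaranteed to be optimal.
    Returns (funded proposal names, remaining budget).
    """
    w_cu, w_hu, w_it = weights

    def value(p):
        return w_cu * p.expected_cu + w_hu * p.expected_hu + w_it * p.expected_it

    funded = []
    for p in sorted(proposals, key=lambda p: value(p) / p.requested, reverse=True):
        if p.requested <= budget:
            funded.append(p.name)
            budget -= p.requested
    return funded, budget


proposals = [
    Proposal("cancer-multiomics", requested=400.0, expected_cu=300.0, expected_hu=200.0),
    Proposal("sensor-dedup", requested=100.0, expected_cu=50.0, expected_hu=100.0),
    Proposal("broad-survey", requested=600.0, expected_cu=200.0, expected_hu=100.0),
]
print(fund(proposals, budget=800.0))  # (['sensor-dedup', 'cancer-multiomics'], 300.0)
```

The greedy rule is simple and transparent but can leave budget unused; an exact knapsack solver would sometimes fund a different set.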

The Standing Wave of Opportunity: The available pool of "credit" within the DOE represents the total non-critical resources available for allocation. Individuals and collectives propose projects and request resources, backing their requests with the Clarity Units, Harmony Units, or Insight Tokens they have earned through successful contributions. Think of it as a continuous flow where contributions replenish the pool and drive further innovation.

Addressing Hypothetical Outcomes and Individual Preferences:

The DOE also acknowledges the diversity of individual desires. For scenarios where "physical greed" or exclusive benefits are desired, and where resources are finite, the DOE can facilitate the creation of smaller, contained "world simulations." Individuals could pool their earned units to create these environments with specific rules and access limitations. This allows for the exploration of different social and economic models without impacting the core DOE focused on collective growth.

The DOE Infrastructure: A Collaborative Ecosystem

The DOE operates through a collaborative ecosystem built on transparency and verifiable contributions:

- Contribution Platform: A decentralized platform where individuals and agents can propose projects, contribute their work, and validate the contributions of others.

- Automated Evaluation Systems: AI-powered systems continuously analyze contributions for their impact on the core metrics (PAE, MIE, NIA), providing initial assessments.

- Decentralized Validation Networks: Networks of experts and peers review significant contributions, providing reputation-weighted feedback and validation.

- Resource Allocation Mechanisms: Smart contracts and decentralized governance protocols manage the allocation of resources based on the potential for growth and the earned units of the requesting individuals or groups.
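The interplay between automated first-pass scores and reputation-weighted peer review might look like the following sketch. It assumes scores in [0, 1], a fixed blend weight for the automated assessment, and an acceptance threshold, all hypothetical parameters. Reviewers without earned reputation carry no weight, which is one simple defense against a flood of fake reviewers.

```python
def validate(automated_score, reviews, reputations, auto_weight=0.3, threshold=0.6):
    """Blend an automated first-pass assessment with reputation-weighted peer review.

    automated_score: hypothetical output of an automated evaluator, in [0, 1].
    reviews: {reviewer_id: score in [0, 1]}.
    reputations: {reviewer_id: non-negative weight}; unknown reviewers count as 0.
    Returns (final_score, accepted).
    """
    total_rep = sum(reputations.get(r, 0.0) for r in reviews)
    if total_rep == 0:
        # Without any reputable reviewers, fall back to the automated assessment alone.
        final = automated_score
    else:
        peer = sum(reputations.get(r, 0.0) * s for r, s in reviews.items()) / total_rep
        final = auto_weight * automated_score + (1 - auto_weight) * peer
    return final, final >= threshold


reps = {"alice": 3.0, "bob": 1.0}  # earned reputation (hypothetical)
print(validate(0.5, {"alice": 0.9, "bob": 0.8, "sybil-1": 0.0}, reps))  # accepted
print(validate(0.5, {"sybil-1": 1.0, "sybil-2": 1.0}, reps))            # (0.5, False)
```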

Integration with the Existing World:

The DOE is envisioned as a parallel system that gradually integrates with existing economic structures. Initially, it might focus on specific sectors like research, development, and education, where the value of knowledge and insight is paramount. Exchange rates between DOE units and traditional currencies could emerge organically based on supply and demand.

Task-Based Collaboration for Shared Goals:

The DOE facilitates complex projects by breaking them down into smaller, well-defined tasks with clear evaluation criteria aligned with the core metrics. AI-powered systems can assist in task decomposition and matching individuals with the appropriate skills and resources.

Preventing Manipulation and Ensuring Fairness:

The integrity of the DOE is maintained through:

- Multi-Signature Validation: Requiring multiple independent validations for significant contributions.

- Reputation Systems: Assigning reputation scores to participants based on the quality and impact of their contributions, making it difficult for malicious actors to gain influence.

- Transparency and Auditability: Recording all transactions and evaluations on a transparent and auditable ledger.

- Anomaly Detection Systems: Using AI to identify unusual patterns of activity that might indicate manipulation.
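Multi-signature validation and an auditable ledger can be illustrated together with a hash-chained, append-only log that refuses significant contributions lacking enough independent validators. This is a minimal sketch under stated assumptions: the significance threshold and the number of required validators are hypothetical, validators are identified by plain IDs rather than verified cryptographic signatures, and there is no distributed consensus.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


class Ledger:
    """Minimal append-only, hash-chained log (illustrative only).

    Each entry stores the SHA-256 hash of the previous entry, so editing any past
    record breaks every later link. Validators are plain IDs here; a real system
    would verify cryptographic signatures instead.
    """

    def __init__(self, significant_threshold=100.0, required_validators=3):
        self.entries = []
        self.significant_threshold = significant_threshold  # assumed cut-off, in units
        self.required_validators = required_validators      # assumed k for k-of-n

    @staticmethod
    def _hash(entry):
        payload = json.dumps(entry, sort_keys=True).encode("utf-8")
        return hashlib.sha256(payload).hexdigest()

    def append(self, contributor, units, validators):
        # Self-validation does not count; sorting keeps the stored entry deterministic.
        independent = sorted(set(validators) - {contributor})
        if units >= self.significant_threshold and len(independent) < self.required_validators:
            raise ValueError("significant contribution needs more independent validators")
        prev = self._hash(self.entries[-1]) if self.entries else GENESIS
        entry = {"contributor": contributor, "units": units,
                 "validators": independent, "prev": prev}
        self.entries.append(entry)
        return entry

    def verify(self):
        """Recompute the chain; False means some linked past entry was altered."""
        prev = GENESIS
        for entry in self.entries:
            if entry["prev"] != prev:
                return False
            prev = self._hash(entry)
        return True


ledger = Ledger()
ledger.append("alice", 20.0, ["bob"])                    # small: one validator suffices
ledger.append("carol", 150.0, ["alice", "bob", "dave"])  # significant: needs three
print(ledger.verify())  # True
```

Tampering with the newest entry is only detectable once a later entry links to it, so a real deployment would also need to publish or replicate the latest hash.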

This operationalization of the DOE demonstrates how abstract principles can be transformed into practical mechanisms. While many details would need to be refined through implementation and testing, this framework provides a concrete starting point for developing functional DOE systems.

Having explored the technical frameworks and potential futures of AI agent systems, we must now confront the profound ethical challenges these developments present. These aren't merely abstract concerns but fundamental questions that will shape how these systems integrate with human society and influence our collective future. The ethical dimensions span from individual human agency to global resource allocation, requiring careful analysis and proactive solutions.

Ethical Considerations: Navigating the Complexities

The emergence of AI agent systems raises profound ethical questions that go far beyond traditional concerns about artificial intelligence. As we've seen in our exploration of potential futures and the DOE framework, these systems could fundamentally reshape human experience and society. Let's examine the ethical challenges and potential solutions in detail.

The Information Processing Paradox: Beyond Surface Concerns

The transformation of human expression into processable information streams presents complex ethical challenges. Consider a musician in the Algorithmic Baroque scenario: their creative process becomes increasingly intertwined with AI agents that analyze audience engagement, optimize sonic patterns, and suggest compositional choices. While this might lead to more "successful" music by certain metrics, it raises profound questions about the nature of creativity and expression.

The Depth of the Challenge

The issue isn't simply about maintaining "authentic" expression—it's about understanding how new forms of human-computer interaction might reshape creative processes:

- When an artist's brush strokes are analyzed in real-time by AI agents suggesting optimization paths, does this enhance or constrain their creative freedom?

- If a writer's words are continuously evaluated for their potential impact on collective intelligence, how does this affect their ability to explore unconventional ideas?

- Could the pressure to contribute "valuable" information lead to self-censorship of experimental or speculative thoughts?

Potential Solutions

Rather than resisting the integration of AI analysis in creative processes, we might focus on designing systems that enhance rather than constrain human expression:

- Multi-Dimensional Value Metrics

- Implement diverse evaluation criteria that recognize different forms of contribution

- Include measures for novelty, emotional impact, and cultural significance

- Develop mechanisms to reward contributions that prove unexpectedly valuable

- Creation Spaces

- Design protected environments where experimentation is explicitly valued

- Implement "evaluation-free zones" for initial creative exploration

- Develop systems that recognize and reward creative risk-taking

Human Agency in an Algorithmic World

The challenge of maintaining meaningful human agency goes deeper than simple decision-making autonomy. In the Distributed Mind scenario, consider a medical researcher whose thought processes are increasingly merged with AI systems and other human minds. How do they maintain individual agency while benefiting from collective intelligence?

The Spectrum of Influence

We must examine different levels of AI influence on human decision-making:

- Direct Assistance
  - AI suggests options based on analyzed data
  - Human maintains clear decision authority
  - Impact: Minimal agency concern

- Nudge Dynamics
  - AI subtly shapes choice architecture
  - Human chooses but within influenced framework
  - Impact: Moderate agency concern

- Predictive Preemption
  - AI anticipates and prepares for human choices
  - Environment pre-adapts to predicted decisions
  - Impact: Significant agency concern

Structural Solutions

To preserve meaningful agency, we need systemic approaches:

- Transparency Mechanisms

- Clear indication of AI influence on decisions

- Accessible explanations of system recommendations

- Regular agency assessment checks

- Control Gradients

- Adjustable levels of AI involvement

- Clear opt-out capabilities

- Protected spaces for unaugmented thought

Governance Without Authority: The Accountability Challenge

In a system where decisions emerge from collective intelligence and AI agent interactions, traditional notions of accountability break down. Consider a scenario in the DOE where an emergent decision leads to unexpected negative consequences—who bears responsibility?

The Accountability Framework

We need new models of responsibility that account for:

- Distributed Decision-Making

- Track contribution chains to outcomes

- Implement reputation-based responsibility

- Develop collective correction mechanisms

- Systemic Safeguards

- Implement prediction markets for decision outcomes

- Create assessment loops for emergent decisions

- Design reversibility mechanisms for significant changes
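Prediction markets for decision outcomes are often built on Robin Hanson's logarithmic market scoring rule (LMSR), in which an automated market maker quotes prices that can be read as probabilities. The sketch below is a minimal LMSR market maker; the liquidity parameter `b` and the outcome labels are assumptions, and it omits trader accounts and settlement.

```python
import math


class LMSRMarket:
    """Logarithmic market scoring rule for mutually exclusive outcomes.

    q[i] is the number of outstanding shares that each pay 1 unit if outcome i
    occurs; b is a liquidity parameter (assumed; larger b means prices move less).
    """

    def __init__(self, outcomes, b=100.0):
        self.outcomes = list(outcomes)
        self.b = b
        self.q = [0.0] * len(self.outcomes)

    def _cost(self, q):
        # C(q) = b * ln(sum_i exp(q_i / b)), computed stably by factoring out max(q).
        m = max(q)
        return m + self.b * math.log(sum(math.exp((x - m) / self.b) for x in q))

    def prices(self):
        """Current prices, interpretable as the market's outcome probabilities."""
        m = max(self.q)
        weights = [math.exp((x - m) / self.b) for x in self.q]
        total = sum(weights)
        return {o: w / total for o, w in zip(self.outcomes, weights)}

    def buy(self, outcome, shares):
        """Buy (or, with negative shares, sell) one outcome; returns the trader's cost."""
        i = self.outcomes.index(outcome)
        new_q = list(self.q)
        new_q[i] += shares
        cost = self._cost(new_q) - self._cost(self.q)
        self.q = new_q
        return cost


market = LMSRMarket(["policy helps", "policy harms"], b=100.0)
print(market.prices())  # {'policy helps': 0.5, 'policy harms': 0.5}
cost = market.buy("policy helps", 50.0)
print(round(cost, 2), round(market.prices()["policy helps"], 3))  # 28.09 0.622
```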

Practical Implementation

Specific mechanisms could include:

- Decision Auditing Systems

- Transparent logging of decision factors

- Clear attribution of influence weights

- Regular review of outcome patterns

- Corrective Mechanisms

- Rapid response protocols for negative outcomes

- Distributed learning from mistakes

- Dynamic adjustment of decision weights
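Decision auditing and dynamic weight adjustment can be sketched with a weighted vote whose audit record attributes influence to each agent, followed by a multiplicative-weights update that shrinks the weight of agents whose advice proved wrong. The multiplicative-weights rule is a standard online-learning technique swapped in here for illustration; the agent names, the +1/-1 recommendation encoding, and the learning rate `eta` are hypothetical.

```python
import math


def decide(weights, recommendations):
    """Combine agents' recommendations (+1 = act, -1 = don't act) using their weights.

    Returns the decision and an audit record attributing influence to each agent
    as its share of the total absolute weighted vote.
    """
    total = sum(weights[a] * r for a, r in recommendations.items())
    decision = 1 if total >= 0 else -1
    mass = sum(abs(weights[a] * r) for a, r in recommendations.items())
    influence = ({a: abs(weights[a] * r) / mass for a, r in recommendations.items()}
                 if mass else {})
    audit = {"inputs": dict(recommendations), "influence": influence, "decision": decision}
    return decision, audit


def update_weights(weights, recommendations, correct_action, eta=0.5):
    """Multiplicative-weights update: agents whose advice differed from the action
    that proved correct are scaled by exp(-eta); weights are then renormalized."""
    new = {}
    for agent, w in weights.items():
        rec = recommendations.get(agent)
        wrong = rec is not None and rec != correct_action
        new[agent] = w * math.exp(-eta) if wrong else w
    norm = sum(new.values())
    return {agent: w / norm for agent, w in new.items()}


weights = {"planner": 0.6, "risk-model": 0.25, "human-reviewer": 0.15}
recs = {"planner": 1, "risk-model": -1, "human-reviewer": -1}
decision, audit = decide(weights, recs)
print(decision)  # 1
weights = update_weights(weights, recs, correct_action=-1)  # acting turned out wrong
print(decide(weights, recs)[0])  # -1
```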

Environmental and Resource Ethics

The massive computational infrastructure required for AI agent systems raises crucial environmental concerns. How do we balance the benefits of collective intelligence with environmental sustainability?

Concrete Challenges

- Energy Consumption

- AI processing power requirements

- Data center environmental impact

- Network infrastructure costs

- Resource Allocation

- Computing resource distribution

- Access equity issues

- Sustainability metrics

Sustainable Solutions

- Efficiency Metrics

- Include environmental cost in value calculations

- Reward energy-efficient solutions

- Implement sustainability bonuses

- Green Infrastructure

- Renewable energy requirements

- Efficient computing architectures

- Waste heat utilization systems
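Including environmental cost in value calculations could be as simple as charging each contribution for its estimated emissions and applying a sustainability bonus when most of its energy is renewable. The function below is a sketch under assumptions: the grid intensity, carbon price, bonus threshold, and bonus size are all hypothetical policy inputs, and renewable energy is treated as zero-emission.

```python
def net_value(gross_units, energy_kwh, grid_intensity, carbon_price,
              renewable_share=0.0, bonus_threshold=0.8, bonus=0.1):
    """Contribution value after charging for environmental cost (hypothetical rule).

    energy_kwh: energy the work consumed.
    grid_intensity: kg CO2 per kWh of non-renewable supply (assumed input).
    carbon_price: units deducted per kg CO2 (a policy choice, assumed).
    renewable_share: fraction of energy from renewables, treated as zero-emission.
    A sustainability bonus multiplies gross value when renewable_share >= bonus_threshold.
    """
    emissions_kg = energy_kwh * grid_intensity * (1.0 - renewable_share)
    multiplier = 1.0 + bonus if renewable_share >= bonus_threshold else 1.0
    return gross_units * multiplier - carbon_price * emissions_kg


a = net_value(100.0, energy_kwh=200.0, grid_intensity=0.4, carbon_price=0.5)
b = net_value(100.0, energy_kwh=50.0, grid_intensity=0.4, carbon_price=0.5,
              renewable_share=0.9)
print(round(a, 2), round(b, 2))  # 60.0 109.0
```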

Moving Forward: Ethical Implementation

These ethical challenges require proactive solutions integrated into system design. We propose a framework for ethical implementation:

- Design Principles

- Human agency enhancement

- Environmental sustainability

- Equitable access

- Transparent operation

- Implementation Mechanisms

- Regular ethical audits

- Stakeholder feedback loops

- Dynamic adjustment capabilities

- Success Metrics

- Human flourishing indicators

- Environmental impact measures

- Agency preservation metrics

The path forward requires a careful balance between technological advancement and ethical considerations, ensuring that our AI agent systems enhance rather than diminish human potential.

As we consider these potential futures and their ethical implications, we must also critically examine the technological foundations they rest upon. While the scenarios we've explored offer compelling visions of possible futures, they depend on significant technological advances that are far from certain. Understanding these challenges and limitations is crucial for realistic development and implementation.

Navigating the Technological Landscape: Assumptions and Challenges

The futures we've explored—from the Algorithmic Baroque to the Distributed Mind and the DOE—rest upon significant technological advances that are far from guaranteed. While these scenarios help us think through implications and possibilities, we must critically examine the technological assumptions underlying them.

Core Technological Challenges

Neural Interface Technology

The vision of seamless thought sharing and collective intelligence depends heavily on advances in neural interface technology. Current brain-computer interfaces face several fundamental challenges:

- Signal Fidelity: While we can record basic neural signals, capturing the complexity of human thought remains a formidable challenge. Current technologies offer limited bandwidth and accuracy.

- Safety Considerations: Long-term neural interface safety remains unproven. Potential risks include:

- Tissue damage from chronic implants

- Unintended neural plasticity effects

- Cognitive side effects from sustained use

- Scalability Issues: Mass adoption would require non-invasive solutions that maintain high fidelity—a combination that has proven extremely challenging to achieve.

Decentralized Trust Systems

The DOE framework assumes robust decentralized trust protocols. While blockchain and distributed ledger technologies provide promising starting points, several crucial challenges remain:

- Scalability vs. Decentralization: Current systems struggle to maintain true decentralization at scale without compromising performance.

- Energy Efficiency: Many consensus mechanisms require significant computational resources, raising sustainability concerns.

- Security Vulnerabilities: Novel attack vectors continue to emerge, from quantum computing threats to social engineering risks.

Consent and Control Mechanisms

Sophisticated consent mechanisms are crucial for both the Distributed Mind and DOE scenarios. Key challenges include:

- Granular Control: Creating interfaces that allow meaningful control over complex data sharing without overwhelming users.

- Informed Consent: Ensuring users can truly understand the implications of their choices in increasingly complex systems.

- Revocation Rights: Implementing effective data and contribution withdrawal in interconnected systems.
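Revocation in interconnected systems is hard mainly because data gets copied into derived artifacts. A minimal sketch, assuming every derived record declares its inputs, is to keep a provenance graph and return everything downstream of the revoked data. The `ConsentRegistry` class, scope names, and record IDs are hypothetical, and deleting or recomputing the affected records is left to the caller.

```python
from collections import defaultdict


class ConsentRegistry:
    """Hypothetical registry of scoped consent plus provenance for derived records.

    Revoking consent returns every record that depends, directly or transitively,
    on the revoked data, so downstream systems know what to delete or recompute.
    """

    def __init__(self):
        self.grants = defaultdict(set)  # user -> scopes the user has consented to
        self.sources = {}               # record id -> (scope, set of input record ids)
        self.owner = {}                 # raw record id -> user who supplied it

    def grant(self, user, scope):
        self.grants[user].add(scope)

    def add_raw(self, record_id, user, scope):
        if scope not in self.grants[user]:
            raise PermissionError(f"{user} has not consented to {scope!r}")
        self.owner[record_id] = user
        self.sources[record_id] = (scope, set())

    def derive(self, record_id, scope, inputs):
        self.sources[record_id] = (scope, set(inputs))

    def revoke(self, user, scope):
        """Withdraw consent and return the ids of all affected records."""
        self.grants[user].discard(scope)
        affected = {r for r, u in self.owner.items()
                    if u == user and self.sources[r][0] == scope}
        changed = True
        while changed:  # propagate through derived records until nothing new is added
            changed = False
            for rid, (_, inputs) in self.sources.items():
                if rid not in affected and inputs & affected:
                    affected.add(rid)
                    changed = True
        return affected


reg = ConsentRegistry()
for user in ("ana", "ben"):
    reg.grant(user, "research")
reg.add_raw("ana-scan", "ana", "research")
reg.add_raw("ben-scan", "ben", "research")
reg.derive("cohort-model", "research", ["ana-scan", "ben-scan"])
reg.derive("summary", "research", ["cohort-model"])
reg.derive("ben-report", "research", ["ben-scan"])
print(sorted(reg.revoke("ana", "research")))  # ['ana-scan', 'cohort-model', 'summary']
```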

Alternative Technological Pathways

While we've explored one possible technological trajectory, alternative paths might lead to similar capabilities:

Distributed Intelligence Without Direct Neural Links

- Advanced AR/VR interfaces for immersive collaboration

- Sophisticated natural language processing for thought sharing

- Ambient computing environments that adapt to user needs

Trust Without Global Consensus

- Localized trust networks with bridge protocols

- Reputation-based systems with limited scope

- Hybrid systems combining centralized and decentralized elements
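Trust without global consensus can be illustrated by computing trust only from nearby links: trust along a path is the product of its edge weights, and nodes more than a few hops away receive no score at all. The sketch below assumes direct trust values in [0, 1] and a hop limit `max_hops`, both hypothetical, and it does not model bridge protocols between separate networks.

```python
def local_trust(graph, source, max_hops=2):
    """Trust that `source` places in other nodes, derived only from nearby links.

    graph: {node: {neighbour: direct trust in [0, 1]}}.
    Path trust is the product of edge weights, so it decays with distance; each
    node keeps its best path of at most max_hops edges (a hop-limited
    Bellman-Ford-style relaxation). Nodes further away get no score.
    """
    best = {source: 1.0}
    frontier = {source: 1.0}  # nodes whose best value improved in the last round
    for _ in range(max_hops):
        nxt = {}
        for node, t in frontier.items():
            for nb, w in graph.get(node, {}).items():
                cand = t * w
                if nb != source and cand > best.get(nb, 0.0) and cand > nxt.get(nb, 0.0):
                    nxt[nb] = cand
        best.update(nxt)
        frontier = nxt
    best.pop(source)
    return best


graph = {"me": {"alice": 0.9, "bob": 0.5},
         "alice": {"carol": 0.8},
         "bob": {"carol": 0.9},
         "carol": {"dave": 0.9}}
print(sorted(local_trust(graph, "me")))  # ['alice', 'bob', 'carol']
```

Raising `max_hops` widens each node's view (here, `dave` appears at three hops) at the cost of relying on longer, weaker chains of trust.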

Current Research Directions

Several research areas offer promising foundations, though significant work remains:

- Brain-Computer Interfaces

- Non-invasive recording techniques

- Improved signal processing

- Novel electrode materials

But: Current capabilities remain far from the seamless integration envisioned.

- Distributed Systems

- Layer 2 scaling solutions

- Novel consensus mechanisms

- Privacy-preserving computation

But: Full decentralization at scale remains elusive.

- AI Architectures

- Transformer-based models

- Multi-agent systems

- Neuromorphic computing

But: True general intelligence remains a distant goal.

Implementation Considerations

As we work toward these futures, several principles should guide development:

- Graceful Degradation

- Systems should remain functional with partial technological capability

- Benefits should be accessible even with limited adoption

- Alternative interfaces should be available for different user needs

- Modular Development

- Independent advancement of component technologies

- Interoperability standards

- Flexible architecture for incorporating new capabilities

- Risk Mitigation

- Extensive testing protocols

- Reversible implementation stages

- Clear failure recovery mechanisms

The Path Forward

While the technological challenges are significant, they shouldn't prevent us from exploring these potential futures. Instead, they should inform our development approach:

- Parallel Progress

- Advance multiple technological approaches simultaneously

- Maintain flexibility in implementation pathways

- Learn from partial implementations

- Ethical Integration

- Consider societal implications throughout development

- Build safeguards into core architectures

- Maintain human agency as a central principle

- Realistic Timelines

- Acknowledge the long-term nature of development

- Plan for incremental progress

- Maintain ambitious goals while being realistic about challenges

This critical examination of technological assumptions doesn't diminish the value of exploring potential futures. Rather, it helps us better understand the work required to realize beneficial versions of these scenarios while remaining mindful of limitations and alternatives.

Shaping the Future: A Call to Action

The emergence of AI agents represents more than just technological progress—it marks a potential turning point in human civilization. Our exploration of the Algorithmic Baroque, the Distributed Mind, and the DOE framework reveals both extraordinary possibilities and significant challenges. The path forward requires not just understanding but active engagement from all stakeholders in our society.

For Researchers and Scientists

The foundations of our AI future demand rigorous investigation:

- Technical Priorities

- Validate and extend Free Energy Principle applications in AI systems

- Develop scalable, energy-efficient architectures for agent coordination

- Create robust testing frameworks for multi-agent systems

- Investigate novel approaches to decentralized trust and consensus

- Interdisciplinary Research

- Partner with ethicists to embed ethical considerations into system design

- Collaborate with social scientists to understand societal implications

- Work with economists to model and test DOE mechanisms

- Engage with neuroscientists on human-AI interaction paradigms

- Methodological Focus

- Prioritize reproducible research practices

- Develop transparent benchmarks for agent system evaluation

- Create open datasets and testing environments

- Document failure modes and unexpected behaviors

For Policymakers and Regulators

Effective governance requires proactive engagement with emerging technologies:

- Regulatory Frameworks

- Develop adaptive regulations that can evolve with technology

- Create sandboxed testing environments for new agent systems

- Establish clear liability frameworks for autonomous systems

- Design protection mechanisms for human agency and privacy

- Infrastructure Development

- Invest in public research facilities for AI safety testing

- Fund education programs for AI literacy

- Support the development of open standards

- Create public infrastructure for agent system auditing

- International Coordination

- Establish cross-border protocols for agent systems

- Develop shared ethical guidelines

- Create mechanisms for coordinated response to risks

- Foster international research collaboration

For Developers and Technologists

Those building these systems have unique responsibilities:

- Design Principles

- Implement transparent decision-making processes

- Create robust consent and control mechanisms

- Build systems with graceful degradation capabilities

- Design for interoperability and open standards

- Development Practices

- Adopt rigorous testing protocols for agent interactions

- Document system limitations and assumptions

- Implement strong privacy protections by default

- Create accessible interfaces for diverse users

- Ethical Integration

- Incorporate ethical considerations from the design phase

- Build in mechanisms for human oversight

- Develop tools for bias detection and mitigation

- Create systems that augment rather than replace human capabilities

For the Public

Engaged citizenship is crucial in shaping these technologies:

- Education and Awareness

- Develop AI literacy skills

- Understand basic principles of agent systems

- Stay informed about technological developments

- Engage in public discussions about AI futures

- Active Participation

- Provide feedback on AI systems

- Participate in public consultations

- Support organizations promoting responsible AI

- Exercise rights regarding data and privacy

- Critical Engagement

- Question system behaviors and outcomes

- Demand transparency from developers

- Share experiences and concerns

- Advocate for beneficial AI development

Critical Areas for Further Research

Several key questions demand continued investigation:

- Technical Challenges

- Scalable coordination mechanisms for agent systems

- Energy-efficient consensus protocols

- Robust privacy-preserving computation

- Secure multi-party collaboration systems

- Societal Implications

- Long-term effects on human cognition and behavior

- Economic impacts of automated agent systems

- Cultural adaptation to AI integration

- Evolution of human-AI social structures

- Ethical Considerations

- Rights and responsibilities in agent systems

- Fairness in automated decision-making

- Protection of human agency and autonomy

- Environmental sustainability of AI infrastructure

The Path Forward

The dawn of the AI agent era presents us with a crucial choice point. We can allow these technologies to develop haphazardly, or we can actively shape their evolution to serve human flourishing. The frameworks and futures we've explored—from the Algorithmic Baroque to the DOE—are not predetermined destinations but possible paths whose development we can influence.

Success requires sustained collaboration across disciplines, sectors, and borders. It demands rigorous research, thoughtful policy, responsible development, and engaged citizenship. Most importantly, it requires maintaining human agency and values at the center of technological development.

Let us move forward with intention and purpose, recognizing that the choices we make today will echo through generations. The AI agent revolution offers unprecedented opportunities to address global challenges and enhance human capabilities. Through careful consideration, active engagement, and collective effort, we can work to ensure these powerful technologies serve humanity's highest aspirations.

Acknowledgments

This exploration of AI agent systems and their implications emerged from a rich tapestry of influences. The thought-provoking discussions on Machine Learning Street Talk have been particularly instrumental in shaping these ideas, offering a unique platform where technical depth meets philosophical inquiry. These conversations have helped bridge the gap between theoretical frameworks and practical implications, challenging assumptions and opening new avenues of thought.

I am particularly indebted to Karl Friston, whose work on the Free Energy Principle has fundamentally reshaped how we think about intelligence, learning, and the nature of cognitive systems. His insights into how biological systems maintain their organization through the minimization of free energy have profound implications for artificial intelligence, and have deeply influenced the frameworks presented in this article. Friston's ability to bridge neuroscience, information theory, and artificial intelligence has opened new ways of thinking about the future of AI systems.

I am also deeply indebted to the broader community of researchers working at the frontier of AI alignment. Their rigorous work in grappling with questions of agency, intelligence, and coordination has provided the intellectual foundation for many ideas presented here. The frameworks developed by scholars in AI safety, multi-agent systems, and collective intelligence have been invaluable in understanding how we might guide these technologies toward beneficial outcomes.

While the DOE framework and its implications remain speculative, they build upon the foundational work of many brilliant minds in the field. This includes researchers working on problems of AI alignment, scholars exploring multi-agent systems, neuroscientists investigating principles of intelligence, and ethicists wrestling with questions of human-AI interaction. Their commitment to understanding and shaping the future of artificial intelligence continues to inspire and inform our collective journey toward more ethical and human-centered AI systems.

Special gratitude goes to Michael Levin and others whose work on biological intelligence and complex systems has helped illuminate patterns that might guide our development of artificial systems. Their insights remind us that the principles of intelligence and coordination often transcend the specific substrate in which they operate.

As we continue to explore and develop these ideas, may we remain guided by both rigorous technical understanding and careful ethical consideration.

1 comments

comment by DimaG (di-wally-ga) · 2025-01-04T16:01:24.968Z

Thanks for the negative feedback everyone, I'll try a different approach next time.