AR Glasses: Much more than you wanted to know

post by frcassarino · 2021-01-16T00:31:29.689Z · LW · GW · 6 comments

This is a link post for https://federicorcassarino.substack.com/p/ar-glasses-much-more-than-you-wanted

Contents

I. II. III. IV. V. VI. VII. VIII. Final Thoughts.

I.

AR Glasses are an unusually foreseeable technological innovation. They’ve been around in pop culture and science fiction for a long time. Everybody seems to agree that at some point, be it in 5, 10 or 20 years, they’ll be as ubiquitous as smartphones and eventually replace them.

Maybe it’s precisely the fact that we know they are coming that makes them seem uninteresting. There’s a lack of excitement about them. Few people seem to be speculating deeply about what a world in which everybody owns a pair of AR Glasses will look like, except with ultra-pessimistic Black Mirror-ish objections.

The current zeitgeist does make it hard to get excited. There’s a general narrative that we are enslaved to our phones, that we are addicted to mindlessly scrolling, that technology is keeping us from the present moment, and so on. I know a lot of people who think of AR glasses with resignation; as if they accept they’ll end up owning a pair which will further doom them to a life permeated by digital distraction.

In a sentence, people’s attitude towards AR is: It’s like a smartphone, but in your face. And everybody is in a toxic relationship with their smartphones, so nobody is excited about having one in their visual field 24/7.

But that line of thinking on AR glasses might be myopic.

II.

Imagine you told somebody in the year 2000 that a decade later, everybody would carry a touchscreen device (with internet access) with them at all times.

They might say something like: “Amazing! It’ll be like having a tiny personal computer with me at all times! It’ll be a much more convenient way to send emails, use Microsoft Office and browse the web!”

And they’d be right in a limited way. You are able to use your smartphone to do almost everything you do on a computer. You can indeed write emails and browse the web.

But they’d also be missing all of the ways in which smartphones radically transformed the world. Because what makes smartphones special is not that they are a more convenient way to do the same stuff you could do with a computer.

What makes smartphones special is that they’re with you all the time.

Because they are with you all the time, you can now get live directions anywhere with Google Maps. It’s also why it makes sense that you can use them to hail an Uber, or order food. Because they are with you all the time, we put cameras on them, which made social media explode as sharing any moment of your life became possible. We now use email much less and instant messaging much more; after all, we can count on the other person having their smartphone on them and seeing the message as soon as we send it. Because they are with you all the time, being extremely bored has become almost impossible, as you can always scroll Reddit in the dentist’s lobby. Because they are with you all the time, you get timely notifications of your incoming messages and reminders. Because they are with you all the time, we use them to pay for everything both online and in real life with Google/Apple Pay. Because they are with you all the time, we use them to track runs, count calories, do two-factor authentication, listen to audiobooks, generate rain sounds to go to sleep, play games, do calculations, unlock Lime scooters, replace alarm clocks, do our banking, FaceTime friends, etc.

So now, in 2021, we guess that ten years down the line everybody will wear a pair of glasses that can project holograms all day. One could think: “Amazing! It’ll be like having a smartphone on your face! It’ll be such a convenient way to use Google Maps, instantly see my notifications, hail an Uber, project Netflix shows on a wall, and choose what to play on Spotify!”

But maybe you’d be missing the key differentiating factor for AR Glasses, and hence missing the most interesting ways in which they will transform the world. What makes AR Glasses special is not that they are a convenient way to use a smartphone. What makes AR Glasses special is that they understand your context.

III.

Phones are mind-bogglingly useful. Most of us don’t wanna go back to a world without all of the use-cases I just outlined. But, as countless documentaries and articles have stated, smartphones also feel like addictive attention-sinks.

You’ve read the following rant already. You’ve got an app store where users download apps. Users generally use one app at a time. The very prevalent advertising model incentivizes apps to compete for users’ attention, and the apps that end up at the top are the ones that most successfully engage their users. This incentive game has resulted in billions of people using a suite of apps that keep them scrolling and consuming content, having way too many daily interactions with random people on instant messaging and social media, and truncating their attention spans to the point where they can only consume tweet-sized content.

That’s the current narrative, and it’s somewhat true. Part of the reason this is happening is the plain facts of what a phone can and can’t do.

A smartphone can’t know what you’re doing. It doesn’t know if you’re having a conversation with someone or if you’re in the dentist’s lobby waiting for your name to be called. It might know if you’re in a car or a restaurant based on GPS signal, but it doesn’t know if you’re currently driving or in a passenger seat, currently dining with friends or queuing for a table. It doesn’t know who you are with, what’s around you, or which room of your house you’re in. It doesn’t know whether you’re talking or silent, excited or sad. It doesn’t know if you’re on a run, meditating, gazing at the ocean, kissing someone, or having a drink.

Because it’s almost entirely unaware of the context you’re in, it feels intrusive. It tries to capture your attention at all times, desperate for you to use it. It’s a tiny screen, in a zero-sum competition against whatever is around you. The incentive for the people making the phones and apps is to get you to look at that tiny screen for as long as possible. And unsurprisingly, almost 15 years after Steve Jobs announced the iPhone, we’re realizing that staring at a tiny screen that sucks you in for hours on end is not conducive to human flourishing and wellbeing.

Maybe, AR Glasses are a perfect remedy for this. They are the quintessential context-aware device. They’ll be sensitive to what you’re doing, who you are with, and where you are.

Let’s think of some optimistic examples of how AR Glasses could use context to your advantage.

If you receive a casual WhatsApp message while you’re enjoying an engaging real-life conversation with a friend, the Glasses won’t show you the notification until a more appropriate moment. If you set a reminder to take your medication, it won’t remind you while you’re driving, but while you’re in your bedroom right next to your pill bottle. It won’t notify you that your favorite youtuber uploaded a new video while you’re writing an important work document; it’ll do so when you’re waiting at the dentist’s office.

Cynics might protest at this point, and claim that whatever nefarious corporation manufactures AR Glasses will either not care about the context or use it to further exploit you. They’ll project holographic ads while you’re relaxing, show a persistent HUD that blocks your view with distracting notifications while you work, prompt you to open social media at every free moment, etc.

Obviously, anything is possible. But it’s also possible that pessimistic takes are more prevalent because of the present “big-tech bad” narrative. Just maybe, AR Glasses — devices that are inherently sensitive to context— will tend to be considerate and tactful with that context.

If a company makes a pair of AR Glasses that’s invasive and makes people anxious, it’ll most likely lose out to a competing brand of AR Glasses. The natural, obvious way to design and sell a pair of AR Glasses is to make the users enjoy wearing them, which doesn’t involve holo-ads and obnoxious popups.

If you’re adamant about being pessimistic, I offer a compromise: AR Glasses will have their problems. But they will most likely be a different set of problems than the ones smartphones have. They are probably not trivial to predict, and they’ll be more nuanced than “I can’t stop mindlessly scrolling through my holographic mentions”.

IV.

I’ve made my case for why AR Glasses won’t feel like smartphones. What will they feel like then? Why do I claim that they’ll be so powerful and transformative?

Well, here’s my grand theory of AR. Ta-da!

Of course, everything I’ll talk about here is wildly speculative. It’s just my attempt to do some nth-order thinking (i.e., to try to figure out how the AR Glasses phenomenon will unfold). Things might not end up happening the way I predict, but it’s at least an attempt to think more deeply about them.

Let’s assume that we are talking about a mature generation of AR Glasses, which are not severely limited by compute, battery or thermal limitations.

One way to think of mature AR glasses is as devices that have three main properties.

1. They can control the user’s input (initially what they see and hear) all day, from the moment they wake up and put them on to the moment they go back to sleep. They can do this by displaying interactive world-locked holograms in the environment, seamlessly blending the digital and physical worlds (i.e., AR). These holograms can be private or shared with other users.

2. As I’ve mentioned, they can understand the user’s context. This includes what the user is doing, where the user is (very granularly, with live 3D mapping), what objects the user is surrounded by, the people around the user and what they’re up to, and (up to a point, aided by wearable sensors) how the user is feeling and behaving. As AI, sensors, and computer vision (CV) improve, glasses will be able to model the external and internal world of the user with increasing richness.

3. The input can vary conditionally based on the context. Properties 1 and 2 interoperate, so depending on the context (e.g., the user sits at a piano) the input can change (e.g., the glasses project a sheet music app next to the piano).

Initially I was struck by how insanely useful these devices would be. Leaving your house? They’ll let you know where your keys are, and remind you to grab your wallet. About to assemble some furniture? Its holographic instruction manual will interactively walk you through every step. Struggling to read some distant street sign? Gesture to zoom in. About to wash the dishes? Sounds like a good time to offer you your favorite podcast. Studying some algebra? Might as well project an interactive calculator hologram on the table. Are you surrounded by a bunch of ads in the tube station? Lucky you, your real-life ad-blocker is blurring them out. Throwing a party? Let’s place some shared AR decoration. Made a mess of your flat after the party? They can help you tidy up by showing you what goes where. Going to sleep? They’ll remind you to take your medication and to take off and charge your glasses.

With devices that are sensitive to context and can adjust what they show you based on it, there’s no end to how useful they can be. It’s a whole new level of granularity in what sort of situations they can assist in. They can be proactive in a way that smartphones can’t. And they’ll only improve as CV and AI build better semantic models of the user, their environment and how they interact.

V.

In order to maximize the usefulness of these devices, they’ll need an application model that can enable the sort of use-cases I’ve just outlined. I think it needs to be a very different application model than smartphones.

Implicit in the smartphone application model is the fact that apps tend to be used actively. For most apps most of the time, the user opens the app through a menu or notification, uses it for a while, and then exits the app. A user opens Reddit, scrolls for a while, and then closes Reddit.

There’s also apps that blur this boundary a bit. In WhatsApp the app runs in the background receiving messages. It shows a notification if the user has a new message, the user opens it, replies, and then exits.

The app lifecycle in smartphones accommodates these use cases well. Apps can run some background processes. You can minimize Uber while you’re waiting for the car and it’ll still show up. You can minimize WhatsApp and still receive messages.

But even in these examples, the primary use-cases rely on users actively opening and using the apps. There are exceptions to this, like f.lux, but they are uncommon.

Contrast this smartphone application model with the one present in web browser extensions.

In Google Chrome, browser extensions are not usually apps that you open, use and exit. They are, for the most part, apps that run passively.

LastPass, a password manager, is passively running all the time. It’s richly aware of the context (i.e., the website the user is browsing and its source code), and so does not need to be explicitly opened by the user. It can passively check whether a password form is present, and if so it triggers more complex functionality (i.e., automatically filling in the website’s password).

Similarly, uBlock (an adblocker) also runs passively all the time. It can continuously check a given site’s scripts, compare them to its list of scripts that display ads, and block the relevant ones.

There’s extensions that are used actively, but are still passively triggered based on context. Google Translate checks whether a website is in a foreign language, and if so, offers to translate it. It runs passively, and if the user is in the requisite context (i.e., there’s text in a foreign language) it becomes active/interactive. So you get prompted to use it based on the context, as opposed to you having to find the app on a menu and open it.

There’s also extensions that add simple widgets, such as the Weather extension that adds a forecast widget to the Chrome homepage. These are not apps that you open from a menu; they are just passively present somewhere within the context (i.e., the browser).

And then there’s extensions that are actively opened, just like smartphone apps, but operate within the context. Read Aloud is an extension that, with a single manual click, does text-to-speech on the current website.

I think the application model for AR Glasses will look less like the one in smartphones, and more like the one in browser extensions. That’s because what browsers and AR Glasses have in common is that they are aware of context. Browsers understand websites in a rich way, and AR Glasses understand the world around the user in a rich way.

In the same way that LastPass gets triggered when there’s a login form, an AR app could be triggered when you’re leaving your flat and remind you to grab your keys and wallet.

In the same way that uBlock is constantly checking for ads on a website and blocks them, an AR adblocker could be constantly checking for real-life ads in the user’s field of view and blur them.

In the same way that Google Translate automatically offers to translate when you’re on a website in a foreign language, an AR Translation app could automatically offer to translate when you’re reading a foreign-language signpost in the real world.

In the same way that the Weather extension is passively present on the Chrome homepage, an AR weather widget could be placed on your kitchen table, and always show up passively present when you look towards the table.

And in the same way that Read Aloud can be opened just like a smartphone app and do some operation on the browser context (i.e., text-to-speech), an AR tape measure could be opened just like a smartphone app and be used to measure a wall or table.

VI.

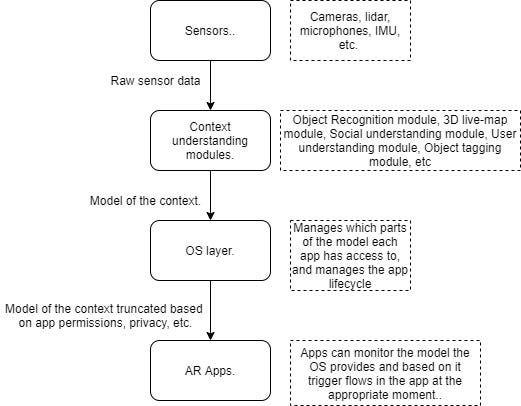

The AR application model would then look something like this:

At the base layer there are hardware sensors that perceive the environment. Initially they might be cameras, lidar, microphones, accelerometers, etc. As the generations of glasses go by, sensors could get progressively more futuristic, with stuff like face/body-facing sensors, heart-beat monitors, other wearables, neural interfaces, etc.

Then there’s a layer of context understanding modules that receive the raw sensor data, and do all of the context understanding. These obviously are the key software innovation required to enable AR glasses with the use-cases I outlined. Whichever tech company comes up with a pair of AR glasses should be focused on improving the state of the art of computer vision and machine learning.

We can expect that initially the context understanding modules will be able to provide a relatively basic model of the context. As AR glasses mature and the state of the art improves, I think we can expect that soon enough there will be modules capable of:

Creating a 3D map of the environment (ex: your flat) and understanding how to navigate it (ex: move rooms).

Recognizing objects in the environment and their position within the 3D map. For example, recognizing a couch, a table, a coffee cup, a glass, the sky, grass, etc., and tracking them if they move.

Detecting people in the environment, potentially recognizing them, and perceiving some social cues (ex: are they laughing, are they serious, are they currently talking, etc). Obviously, there’s ethical and privacy implications here and social modules should be limited based on them.

Providing some extra holistic information: what time is it, is the sun still out, is the user indoors or outside, what scene are we in (restaurant, flat, park, airplane) etc.

Understanding what actions the user is doing. Are they running, talking or quiet, calm or fidgety, cheering, etc.? If there are extra sensors like a Fitbit, health data such as heart rate is also available. The state of the art might even advance enough to understand the user’s current mood or, in the more distant future with neural interfaces, their thoughts.

All of these modules will be continually outputting data, which we can think of as a model of the user’s context.

There are a lot of privacy implications here, and it’ll be the OS’s responsibility to manage them. At a minimum, no sensitive context data should be uploaded to a server without the user’s consent, and apps should only have access to the smallest amount of data they need to run. A permission system like the one that exists in smartphones should exist, and it should be much more thorough, as the data will be flowing all the time. I’ll soon write a separate article about how to best safeguard the user’s privacy.

The OS will then provide a version of this model, truncated to preserve privacy, to the apps. Apps should be thought of as routines or extensions, which run passively but can surface based on the context.
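To make the "truncated model" idea a bit more concrete, here is a minimal sketch in Python. It assumes the context model is just a flat dictionary and that each app declares the keys it wants; `FULL_CONTEXT`, `AppManifest` and `context_slice` are hypothetical names made up for illustration, not part of any real AR operating system.

```python
from dataclasses import dataclass, field

# Hypothetical context model maintained by the OS (all keys are illustrative).
FULL_CONTEXT = {
    "scene": "tube_station",
    "visible_objects": ["billboard", "bench"],
    "nearby_people": ["Alice"],
    "heart_rate_bpm": 72,
}


@dataclass
class AppManifest:
    """What an app declares it needs at install time (assumed structure)."""
    name: str
    requested: set = field(default_factory=set)


def context_slice(full_context, manifest, granted):
    """Return only the keys the app requested AND the user granted."""
    allowed = manifest.requested & granted
    return {key: value for key, value in full_context.items() if key in allowed}


ad_blocker = AppManifest("ad_blocker", {"scene", "visible_objects", "nearby_people"})
user_granted = {"scene", "visible_objects"}  # the user declined "nearby_people"

visible_to_app = context_slice(FULL_CONTEXT, ad_blocker, user_granted)
print(visible_to_app)
```

The point of the design is that the app never sees the full model: anything it did not request, or the user did not grant, is simply absent from what it receives.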

For example, a Driving Assistant app will be dormant/paused by default. But there will be a background routine that frequently polls/receives the context to check whether the user is inside a car. If at any point the model recognizes that the user is sitting in their car, the driving assistant app will awake and (for example) remind the user to put on their seatbelt.
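The dormant-until-triggered behavior could be sketched roughly as follows. This is only an illustration under simple assumptions: the context is a dictionary with a `"scene"` key, the OS calls `poll` on every context update, and `ContextApp` is a hypothetical class rather than a real API.

```python
class ContextApp:
    """A dormant app that wakes when its trigger matches the current context."""

    def __init__(self, name, trigger, on_wake):
        self.name = name
        self.trigger = trigger    # function: context -> bool
        self.on_wake = on_wake    # function: context -> message to show
        self.active = False

    def poll(self, context):
        """Called by the (hypothetical) OS on each context update."""
        should_be_active = self.trigger(context)
        messages = []
        if should_be_active and not self.active:
            # Wake once, when the user enters the triggering context.
            messages.append(self.on_wake(context))
        self.active = should_be_active
        return messages


driving_assistant = ContextApp(
    name="driving_assistant",
    trigger=lambda ctx: ctx.get("scene") == "car",
    on_wake=lambda ctx: "Reminder: put on your seatbelt.",
)

context_updates = [
    {"scene": "flat"},
    {"scene": "car"},
    {"scene": "car"},     # still in the car: no repeated reminder
    {"scene": "street"},  # left the car: app goes dormant again
]

shown = []
for ctx in context_updates:
    shown.extend(driving_assistant.poll(ctx))
print(shown)
```

Tracking the `active` flag is what keeps the app from nagging on every poll; it only surfaces on the transition into the relevant context.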

The tl;dr of this section is:

The OS, based on sensor data and CV/ML modules, should maintain a constantly updating model of the user’s context/environment.

Apps should be able to become active/trigger based on the context.

There should be a system (with permissions) that gives apps access to the relevant slice of context necessary to unlock their use-cases.

When active, apps should be able to interoperate in a rich way with the context they have access to.

VII.

Some of you might have realized that there is a feedback loop brewing here.

If Glasses can understand a user’s context and show input based on it, it follows that Glasses will also be increasingly able to understand how a person reacts to whatever input they show. Before showing you the same input again, they can adjust it based on your behavioral/emotional/physiological response to it.

Glasses will show inputs with a certain purpose. For instance, a health app might remind you to drink a glass of water. After showing that reminder, the glasses will be able to see how you reacted. That is, upon seeing the reminder, did you in fact pour yourself a glass of water?

Based on your reaction, the app will be able to adjust for the next time. If you didn’t pour yourself a glass of water, the app might try switching up the time or place of the reminder; maybe try showing it while you’re in the kitchen. Or it might reward you with a cheering sound and a checkmark on your calendar whenever you do drink your daily glass of water. It might even be worth a shot to encourage people to eat a cracker — so they get thirsty— and then show them a reminder to pour themselves a glass of water.

This freaks me out. It represents an unprecedented power to do behavioral conditioning.

Conditioning, in physiology, a behavioral process whereby a response becomes more frequent or more predictable in a given environment as a result of reinforcement, with reinforcement typically being a stimulus or reward for a desired response.

Conditioning is used everywhere, and there’s plenty of it in smartphones. It can be used in both white mirror-ish and black mirror-ish ways. Addictive games reward you with dings and trophies whenever you score a point or click replay. Habit tracking apps condition you in a positive way, rewarding you by showing streaks of how long you’ve stuck to your habit. Some websites condition you to keep scrolling forever by always showing you one more interesting thing.

In general though, conditioning works much better when there’s a feedback loop. That is, keeping a user scrolling is easy because the app can adjust which interesting content to show based on what the user has clicked on before. It can see which kind of content kept the user scrolling before, and show more of it in the future.

Keeping a user drinking water with a smartphone app, though, is hard. There’s no feedback loop, because the app doesn’t know when the user drinks water, nor does it have any extra context about what got them to drink a glass of water in the first place. The Fitbit app, for instance, tries to create a feedback loop by asking you to open the app and tell it whenever you’ve drunk water. But of course, that means it needs to convince you both to drink water and to meticulously log your water intake, which is a bit too obsessive for most of us. And even if you did log your water intake, the app would have no further context about what got you to drink water, so it would not be able to easily adjust the conditioning stimulus or reward.

That’s part of why smartphones are usually great at keeping you hooked to apps, which understand their own context, but not great at keeping you doing real-world actions, because they lack the necessary context to generate a feedback loop.

With AR Glasses, that real-world context will be orders of magnitude wider and richer. In a good future where all the incentives align properly, they might be able to use this ability to adjust stimuli based on your behavior to help you become a healthier, happier, more productive, more virtuous person. In an evil future, you need only imagine the same “drinking reinforcement” app but created by the Coca-Cola Company.

VIII.

If you think that’s crazy, just consider the next level of this. What happens when you combine this feedback loop at an individual level, along with millions of users to increase the sample size, with metrics of the app’s effects on users, plus an efficient market incentivized to maximize those metrics?

Let’s take a hypothetical but concrete example: getting people to lose weight.

First of all, we have a simple metric: is the user losing or gaining weight.

Then we have an infinity of possible “stimuli” which apps could show to passively condition users into losing weight. A 2-min random brainstorm yielded these ideas:

Proactively suggesting healthy recipes when it’s dinner time.

Showing estimated calorie counts next to any food item in the user’s room.

Frequently showing the user a streak of how many days in a row they’ve eaten under their calorie limit.

Actively blurring out unhealthy, processed food in the user’s visual field.

Reminding the user to drink black coffee after waking up, in order to blunt their appetite.

Reminding the user to go buy groceries only after a meal, so they don’t buy food based on their hunger cravings.

Playing satisfying sounds whenever the user is eating something healthy.

Increasing the saturation of the colors of any healthy-looking food in the user’s visual field, to make it look more appetizing.

For each of these inputs, the same feedback loop applies. The health app could give each of them a try, and see how the user’s behavior changes. It could then use the stimuli that work best more frequently.
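One common way to implement "try each, then favor what works" is an epsilon-greedy bandit; that is my choice for illustration, not something the argument depends on. The sketch below simulates the user with made-up response probabilities (`TRUE_RESPONSE_RATE` is pure invention), whereas a real app would observe the user’s reaction through the glasses.

```python
import random

STIMULI = ["recipe_suggestion", "calorie_labels", "streak_display"]

# Made-up probabilities that each stimulus leads to the desired behavior.
# They exist only to simulate a user; a real app would not know them.
TRUE_RESPONSE_RATE = {
    "recipe_suggestion": 0.2,
    "calorie_labels": 0.5,
    "streak_display": 0.3,
}


def run_feedback_loop(rounds, epsilon=0.1, seed=0):
    """Epsilon-greedy: mostly show the best-looking stimulus, sometimes explore."""
    rng = random.Random(seed)
    shown = {s: 0 for s in STIMULI}
    worked = {s: 0 for s in STIMULI}

    def observed_rate(stimulus):
        return worked[stimulus] / shown[stimulus] if shown[stimulus] else 0.0

    for _ in range(rounds):
        if rng.random() < epsilon:
            choice = rng.choice(STIMULI)               # explore a random stimulus
        else:
            choice = max(STIMULI, key=observed_rate)   # exploit the best so far
        shown[choice] += 1
        # Simulated user reaction to the chosen stimulus.
        if rng.random() < TRUE_RESPONSE_RATE[choice]:
            worked[choice] += 1
    return shown, worked


shown, worked = run_feedback_loop(rounds=2000)
for stimulus in STIMULI:
    print(stimulus, "shown:", shown[stimulus], "worked:", worked[stimulus])
```

The `epsilon` parameter controls how often the app keeps experimenting instead of repeating what has worked so far; a smartphone app cannot run this loop for real-world behavior because it never observes the "worked" signal.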

But the health app could also use its userbase to increase the sample size. It could do A/B testing on its potentially millions of users, and see which of these stimuli work best for the majority of its userbase. It could measure the effect size of each stimulus on weight loss to a statistically significant degree.
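As a rough illustration of what "effect size to a statistically significant degree" could mean here, the sketch below runs a standard two-proportion z-test on two groups of users, one that saw a stimulus and one that did not. The numbers are invented, and treating "lost weight" as a yes/no outcome is a simplifying assumption.

```python
from math import sqrt
from statistics import NormalDist


def two_proportion_z_test(success_a, n_a, success_b, n_b):
    """Return (difference in success rates B - A, two-sided p-value)."""
    rate_a = success_a / n_a
    rate_b = success_b / n_b
    pooled = (success_a + success_b) / (n_a + n_b)
    std_err = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (rate_b - rate_a) / std_err
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return rate_b - rate_a, p_value


# Invented numbers: users who lost weight in the control group (A)
# versus the group shown calorie labels (B).
effect, p_value = two_proportion_z_test(success_a=400, n_a=1000,
                                        success_b=460, n_b=1000)
print(f"effect size: {effect:.3f}, p-value: {p_value:.4f}")
```

With millions of users, even small effects would clear the significance bar, so in practice the effect size matters more than the p-value when deciding which stimuli are worth using.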

It could find correlations between the effect size of each stimulus and users with different characteristics. For example, some stimuli could work better for people of a certain age, or for people of a certain culture. These correlations can get much richer and more granular as well; some stimuli could work better for people that sleep longer, or exercise more, or have a particular habit or personality trait we can detect.

And it can use all of this gathered data to narrow down which stimuli work, which stimuli to try on users with certain characteristics and in which order, which stimuli can cause side-effects to a statistically significant degree, etc.

It’s almost an ultra cheap way to conduct randomized-controlled trials at a massive scale, and then use the results to consistently use proven-to-work interventions on millions of people. Half of you will be excited about this, and the other half will be going haywire about the ethical implications of this.

The next level of this is the possibility that App Stores could compound the transformative power of this even further. What if the Weight-Loss category of the App Store, instead of surfacing apps based on some weakly-useful star rating, surfaced them based on anonymized metrics of how effective an app is at causing weight loss? To be fair, this could have happened with the current App Stores and hasn’t, but given how context-aware AR Glasses are, and how effective AR Apps could be at conditioning users’ behavior, it might be the natural way to design an AR App Store.

If something like this took place, where apps were competing with each other to be the best at getting users to lose weight, there’s no telling how profoundly powerful the resulting innovations could be at affecting the behavior of the users. I think human ingenuity could come up with some crazy stuff (let alone AI ingenuity later on). And it’s a fascinating exercise to broaden our scope and imagine the kind of ways this could improve human productivity, health, well-being, creativity, and generally cultivate virtues. It’s scary to imagine the kind of ways this could go wrong too.

My intuition tells me that this ecosystem would be extremely powerful, for better or for worse. I’d be very interested in hearing arguments why this actually wouldn’t be that big of a deal. The case for skepticism is interesting, and I’d be interested in steel-manning it with another article. Maybe AR Glasses have an inherent limitation I’m not thinking of, maybe conditioning and feedback loops are actually very limited and humans not that malleable, maybe much more advanced AI or CV is necessary to make interesting enough apps (I’m aware that some of the examples I gave are not trivial at all), etc.

Final Thoughts.

Assuming this is as big a deal as I’m making it out to be, it all sounds like it could lead down some crazy transhumanist rabbit-holes. Obviously, my mind goes wild with all of the countless ethical implications, possible negative paths this capability could take, incentive issues that might arise, safeguards that need to be implemented, etc.

I don’t want to get too trippy too soon though. I’ve done enough speculation for a single article here, and it’s possible that a lot of this is wildly off-base. What I really would like to see happen is to start a conversation. Let’s collectively begin to think more deeply about what AR glasses can and should be like, and what a world where they are ubiquitous will look like as a result.

There’s so much to think about and so much to say. I’ll be writing a lot more articles on the subject. Do subscribe to my Substack if you’re interested in reading more (it’s free).

If you were to take home a few key points out of this article, they should be that:

-What makes AR glasses special is they are aware of context.

-AR will feel very different to smartphones, and have a different set of problems.

-The AR application model should be more analogous to browser extensions than smartphone apps.

-There’s a feedback loop that occurs because glasses can adjust stimuli based on how the user reacts.

- sensors+context->[AR Device]->stimuli->[brain]->automatic feedback to context+sensors (credit to shminux)

-That feedback loop makes them very powerful at conditioning human behavior.

[DISCLOSURE: I’m an employee at a big tech company]

6 comments

Comments sorted by top scores.

comment by chaosmage · 2021-01-16T08:09:08.814Z · LW(p) · GW(p)

Awesome article, I would only add another huge AR-enabled transformation that you missed.

AR lets you stream your field of view to someone and hear their comments. I hear this is already being used in airplane inspection: a low level technician at some airfield can look at an engine and stream their camera to a faraway specialist for that particular engine and get their feedback if it is fine, or instructions what to do for diagnostics and repair. The same kind of thing is apparently being explored for remote repairs of things like oil pipelines, where quick repair is very valuable but the place of the damage can be quite remote. I think it also makes a lot of sense for spaceflight, where an astronaut could run an experiment while streaming to, and instructed by, the scientists who designed it. As the tech becomes cheaper and more mature, less extreme use cases begin to make economic sense.

I imagine this leads into a new type of job that I guess could be called an avatar: someone who has AR glasses and a couple of knowledgeable people they can impersonate. This lets the specialist stay at home and lend their knowledge to lots of avatars and complete more tasks than they could have done in person. Throw in a market for avatars and specialists to find each other and you can give a lot of fit but unskilled youngsters and skilled but slow seniors new jobs.

And this makes literal hands-on training much cheaper. You can put on AR glasses and connect to an instructor who will instruct you to try out stuff, explain what is going on and give you the most valuable kind of training. This already exists for desk jobs but now you can do it with gardening or cooking or whatever.

comment by Shmi (shminux) · 2021-01-16T01:24:14.477Z · LW(p) · GW(p)

To distill your point to something that makes sense to me:

sensors+context->[AR Device]->stimuli->[brain]->automatic feedback to context+sensors.

Though I doubt that the AR device will be glasses, those are cumbersome and conspicuous. It could be a Scott A's talking jewel in one's ear (can't find the story online now), it could be some combination of always on sensors, a bluetooth connected brick in your pocket with wireless earbuds, a dashcam-like device attached somewhere, a thin bracelet on your arm... Basically sensors processed into stimuli in whatever form that makes the most sense, plus the AR-aware devices that react to the stimuli, your brain being just one of them.

They’ll let you know where your keys are, and remind you to grab your wallet.

Well, in this particular example, keys and a wallet are redundant, the augmentations and the stimuli-aware environment will perform their function, like locking doors, identifying you to others and others to you, paying for anything you buy, etc. We are part way there already.

Replies from: frcassarino

↑ comment by frcassarino · 2021-01-16T03:39:57.522Z · LW(p) · GW(p)

I loved the notation you used to distill the feedback loop idea. I'll add it to the post, if you don't mind.

Replies from: shminux

↑ comment by Shmi (shminux) · 2021-01-16T04:32:17.781Z · LW(p) · GW(p)

by all means, glad it made sense.

comment by [deleted] · 2021-01-16T06:49:04.511Z · LW(p) · GW(p)

I’d be very interested in hearing arguments why this actually wouldn’t be that big of a deal.

It won't be a big deal because smartphone was not a big deal. People still wake up, go to work, eat, sleep, sit hours in front of a screen - TV, smartphone, AR, who cares. No offense to Steve Jobs, but if the greatest technological achievement of your age is the popularization of the smartphone, exciting is about the last adjective I'd describe it with.

Speaking of Black Mirror, I find the show to be a pretty accurate representation of the current intellectual Zeitgeist (not the future it depicts; I mean the show itself) - pretentious, hollow, lame, desperate to signal profundity through social commentary.