Launching Third Opinion: Anonymous Expert Consultation for AI Professionals

post by karl (oaisis) · 2024-12-19T19:06:15.355Z


Dear LW community,

One week ago, we launched Third Opinion (Zvi's coverage of us from today, our X.com thread, website), a service that enables AI professionals to receive anonymous, expert guidance on concerning developments they observe in their work. This post outlines what we're doing and why we think it matters.

Thank you to all the individuals (including some from this community) who have supported us over the past months and helped get this off the ground. We hope this will be a valuable resource and contribution to the ecosystem.

The Problem

AI professionals working at the development frontier may encounter situations that they feel raise (serious) concerns.

This matters because they are on the ‘front lines’ and may be able to spot concerning developments earlier than anyone else.

Unfortunately, they currently face significant barriers to evaluating whether these concerns are actually well-founded, i.e. whether the situation is concerning on the object level:

- Internal discussions may be constrained by organizational dynamics

- Given the ‘bleeding edge’ research nature, few individuals may understand any given concern

- Little precedent and few public resources may exist on what is acceptable and what is not

- NDAs and confidentiality agreements limit their ability to discuss specifics

- Direct outreach to external experts could compromise their position (precedent for this exists)

- Public discussion carries professional risks. Moreover, individuals frequently do not want to harm their own lab; rather, they want clarity on whether their concerns are justified before deciding on next steps.

Through conversations with over 50 individuals in the field over the past ~year, we found that this can create a persistent information gap: the opposite of what Dean Ball called a 'high quality information environment'.

This negatively affects…

- The Individual: Frustration, loneliness, and a high mental tax affect those who are uncertain whether what they’re seeing is cause for concern. They can resolve this situation either by a) dissociating from feelings of responsibility or b) potentially taking on personal risk (see above).

- Organizations developing or deploying AI: Individuals may leak issues publicly to get clarity on their concerns, potentially causing undue harm to the organization. In addition, better-informed employees can directly improve internal policies and practices.

- Society at large: We want individuals sitting ‘at the steering wheel’ to have access to as much quality information as possible. We also don’t want ‘boy who cried wolf’ situations.

The Solution We Offer

The concept for Third Opinion was developed together with a former frontier lab insider. Third Opinion provides:

- Anonymous submission of questions to us, without including confidential information or information that could allow a reader to infer the identity of the 'assistance seeker' or their organization. Take the OpenAI case from this year: an example question could have been "What are standard terms in Silicon Valley when it comes to non-disparagement agreements? What terms, especially as part of an exit agreement, would be unacceptable and/or illegal?". Similar threshold questions (comparable to If-Then scenarios or Safety Cases) are also conceivable for technical or governance topics, as are more concrete situational evaluations.

- Carefully vetted expert consultation: We identify relevant experts together with the assistance seeker. We then ask those expert candidates for an opinion on the question once they have committed to confidentiality (take a look at our T&C). If sufficient time is available and all parties consent, this can include a Delphi round. As experts are tailored to each case, they can have a wide variety of backgrounds, including technical, ethics, governance, and legal.

- Secure information exchange that preserves confidentiality: We are the privacy-preserving ‘middle-man’ in this Q&A process, with assistance seekers never leaving the anonymous submission platform (see below).

- Consent and honesty as our absolute primary principles: We don’t have any legal obligation to proactively disclose submissions to any (third) party*. We only ever share questions with parties the assistance seeker has consented to. Using Third Opinion in no way obliges the assistance seeker to do anything with the knowledge they receive.

Our service is free. We help frame inquiries to determine if specific thresholds of concern have been crossed, while protecting sensitive information.

Find details on our process here and our FAQ here.

At this point you may ask yourself, “Ah, so this is a whistleblowing service?” Not quite. Third Opinion is aimed solely at helping concerned individuals assess whether their concerns are well-founded. We want to be explicit: we do not support sharing confidential information with third parties via this offering. We are, of course, always open to discussing the landscape of whistleblower support organizations if this interests you; throughout our research phase we have come across other capable organizations doing important work in this space.

*We are not above the law: there are conceivable circumstances in which judicial pressure could force us to share conversation data that has not yet been deleted. This is why we ask you not to submit confidential or personal information. If you are concerned about this, please reach out to us directly, and we will walk you through the scenarios and the likelihood of this risk materializing.

Scope and Focus

We are starting with a focus on frontier AI development. We will expand our coverage over the coming months.

If you are considering submitting a question, please apply the following checklist to it. You should be able to answer ‘yes’ to all of the following:

- Does your question relate to development or deployment of frontier AI models?

- Is your question driven by a concern that your organization may be acting against the public interest (now or in the future)?

- Is the concern underlying your question not yet public?

- Are you worried about reaching out to relevant experts directly?

Note: If you are uncertain whether your question fits our scope, submit it. You can stop the process at any time, and we'll let you know if the question falls outside our purview. We will delete all data relating to your question upon your request, or at the latest after 21 days of inactivity.
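The deletion rule in the note above (delete on request, or after 21 days of inactivity) can be expressed as a simple retention check. This is a minimal illustrative sketch, not how Third Opinion or GlobaLeaks actually implements deletion: the `Case` structure, the `due_for_deletion` and `purge` functions, and the assumption that the 21-day window counts from the last activity on a case are all hypothetical.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone
from typing import List, Optional

# Retention window stated in the post. Counting it from the last activity
# on a case is an assumption made for this sketch.
RETENTION = timedelta(days=21)


@dataclass
class Case:
    case_id: str                # hypothetical identifier, not a GlobaLeaks field
    last_activity: datetime     # timezone-aware timestamp of the last activity
    deletion_requested: bool = False


def due_for_deletion(case: Case, now: datetime) -> bool:
    """Delete on request, or once RETENTION has elapsed since the last activity."""
    return case.deletion_requested or now - case.last_activity >= RETENTION


def purge(cases: List[Case], now: Optional[datetime] = None) -> List[Case]:
    """Return only the cases to keep; everything else would be erased."""
    now = now or datetime.now(timezone.utc)
    return [c for c in cases if not due_for_deletion(c, now)]


if __name__ == "__main__":
    now = datetime(2024, 12, 19, tzinfo=timezone.utc)
    cases = [
        Case("a", now - timedelta(days=3)),                           # recently active: kept
        Case("b", now - timedelta(days=21)),                          # 21 days idle: deleted
        Case("c", now - timedelta(days=1), deletion_requested=True),  # on request: deleted
    ]
    print([c.case_id for c in purge(cases, now)])  # ['a']
```

Keeping the rule as a pure function of the case and the current time makes the policy easy to test independently of whatever storage or scheduler would actually run it.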

We list example (!) categories and ways to frame your question here. A selection of categories:

- Technical safety concerns in development

- Deployment risks and safeguards

- Organizational practices affecting safety

- Governance and oversight issues

- Potential misuse scenarios

Technical Implementation

Given the sensitive nature of this topic, we are always looking to improve our security and welcome feedback and ideas for improvements, ideally via email on this topic (see the bottom of this post).

Questions can be submitted via the Tor network (tool link) through a self-hosted instance of the latest version of GlobaLeaks, an open-source platform used by newsrooms and non-profits worldwide to handle information confidentially and anonymously. GlobaLeaks is regularly penetration-tested and audited.

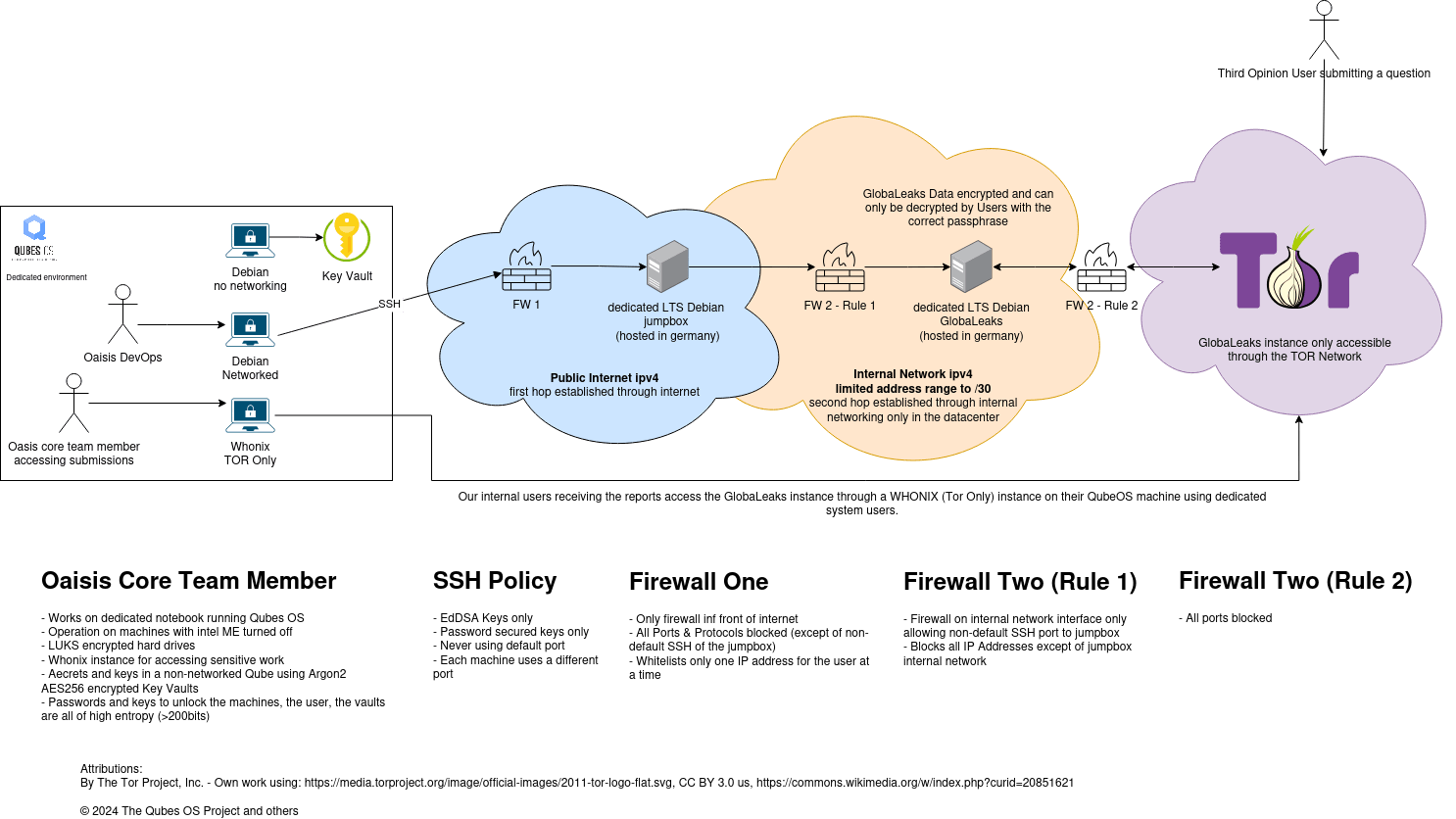

We've implemented several security measures to protect user anonymity and data:

- We own our data and host our own GlobaLeaks instance

- Our disks are fully encrypted, and GlobaLeaks encrypts the data stored in the database

- We host in our own jurisdiction, and our services run on dedicated servers

- We do not use shared VMs, to avoid noisy neighbours and to mitigate security risks stemming from vulnerabilities such as Meltdown and Spectre

- To access questions, we work on secured machines running Qubes OS, where trusted and untrusted work is separated into "qubes" (think of them as separate machines). Network connections are firewalled on trusted qubes and either disabled or routed through Tor on untrusted ones. The hard drives are encrypted, and all secrets follow strict rules that yield a minimum entropy of 200 bits

- Our deployment is highly isolated, and access to the system is strictly controlled to prevent unauthorized access; for more details, please see the diagram below

- We follow data deletion protocols: all conversation data is automatically deleted 21 days after a case concludes, or earlier if the individual requests it.
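The 200-bit minimum for secrets mentioned above translates directly into a minimum secret length: a secret of length n whose symbols are drawn uniformly and independently from an alphabet of size k carries n · log2(k) bits of entropy. The sketch below works through that arithmetic and generates one such secret with Python's `secrets` module. The post does not say which alphabets or tools are used; the alphabets here (alphanumeric, printable ASCII, and the 7,776-word Diceware list) and the helper names are assumptions for illustration, and the calculation only holds for randomly generated secrets, not ones chosen by a person.

```python
import math
import secrets
import string

TARGET_BITS = 200  # minimum entropy stated in the post

# Illustrative alphabets (assumptions, not the rules actually in use).
ALPHABETS = {
    "alphanumeric": string.ascii_letters + string.digits,                          # 62 symbols
    "printable ASCII": string.ascii_letters + string.digits + string.punctuation,  # 94 symbols
}
DICEWARE_WORDS = 7776  # size of the standard Diceware word list (6**5)


def entropy_bits(length: int, alphabet_size: int) -> float:
    """Entropy of a secret whose symbols are drawn uniformly and independently."""
    return length * math.log2(alphabet_size)


def min_length(alphabet_size: int, target_bits: float = TARGET_BITS) -> int:
    """Smallest length whose entropy reaches target_bits."""
    return math.ceil(target_bits / math.log2(alphabet_size))


def generate_secret(alphabet: str, target_bits: float = TARGET_BITS) -> str:
    """Draw a secret with at least target_bits of entropy from the OS CSPRNG."""
    n = min_length(len(alphabet), target_bits)
    return "".join(secrets.choice(alphabet) for _ in range(n))


if __name__ == "__main__":
    for name, alphabet in ALPHABETS.items():
        print(name, min_length(len(alphabet)))
    print("Diceware words", min_length(DICEWARE_WORDS))
    secret = generate_secret(ALPHABETS["alphanumeric"])
    print(len(secret), round(entropy_bits(len(secret), 62), 1))
```

Under these assumptions, 200 bits requires 34 alphanumeric characters, 31 printable ASCII characters, or 16 Diceware words.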

About Us

Third Opinion is an initiative of OAISIS, an independent non-profit focused on supporting responsible AI development. Our core team includes:

- Karl Koch (Co-founder)

- Maximilian Nebl (Co-founder)

- Network of advisors from AI safety, law, and journalism fields

Third Opinion is our first offering; more will follow. We are in partnership with Whistleblower Netzwerk e.V., Germany’s largest and most experienced non-profit dedicated to supporting courageous individuals.

Next Steps

Submit a question

If you work in AI development and have concerns you'd like evaluated:

2. Review our question policy

3. Submit your query through our tool on Tor

Get updates

- Social: X.com/Bluesky/Threads

Give feedback

Questions or suggestions are very welcome in the comments or via hello@oais.is for more sensitive matters - find our PGP key at the bottom of this page.
