Advice for Entering AI Safety Research

post by scasper · 2023-06-02T20:46:13.392Z


I know that other posts already exist giving advice for people who want to get more involved in AI safety research, and they are worth reading. But here I want to (1) add my two cents and (2) put in writing some of the things I find myself talking about often.

The intended audience for this post is people wanting to pursue work in AI safety research, particularly undergraduate students.

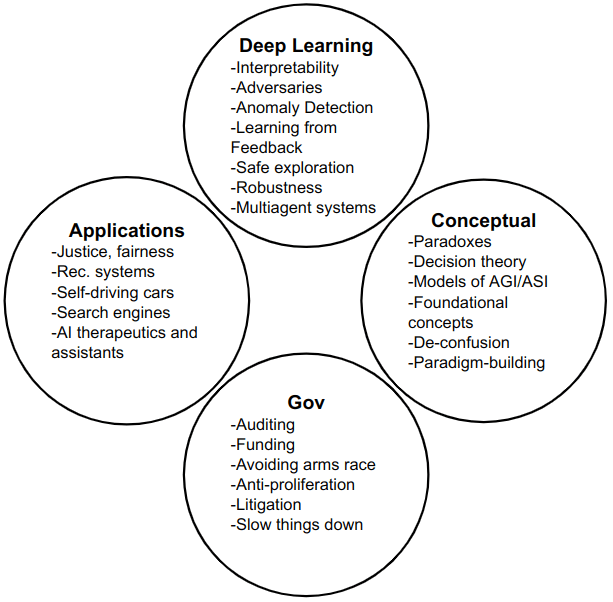

A 2D Cluster Model of the AI Safety Research Landscape

I think of the AI safety research landscape as clustering fairly well by topic and skillset into four types. I arrange them in this square shape because there are a lot of connections along the sides of the square (e.g. between the deep learning and conceptual clusters) but relatively few connections along the diagonals (e.g. between deep learning and governance). Connections along the diagonals still exist though! For each cluster, I list some subtopics in the graphic, but they are not exhaustive.

- Deep Learning: Here, most people code every day, work with neural nets, and try to publish at deep learning conferences like NeurIPS.

- Conceptual: This is the cluster where most of the foundational concepts, vocabulary, and ideas involving AI safety are born. There is a lot of work being done here, and a good amount of it is approached by people with a theoretical, mathematical, or philosophical background; most of this work does not involve coding. Lots of this work ends up on the AI Alignment Forum instead of in academic papers.

- Governance: This work involves trying to pull levers in policy and politics to make AI develop in a way that's healthier and less dangerous. As you might guess, most people working here have policy skills and backgrounds, but this work also benefits greatly from technical insights.

- Applications: This is neartermist work, and lots of people may not emphasize it in an overview of "AI safety" work. But engaging more with immediate issues involving making AI go well can (1) solve important problems even if they aren't ones involving catastrophic risk, (2) help the AI safety community engage more with the outside world, (3) help develop useful technical tools, and (4) help build strategies for governance. Imagine we did a great job of solving any of the issues listed in the left cluster in the diagram above. What are the chances we could do this without developing a bunch of useful technical and governance strategies in the process?

If you find this model clarifying, consider exploring the topics in each cluster that you find interesting. A great way to start is with survey papers (discussed further below).

The Four Pillars

What you know and what skills you have are mainly upheld by four key pillars: your classes, projects, reading, and network.

- Classes are essential but easily overrated. Most of what you learn in them will not be useful for your later career (research or not), but you will learn a lot from good classes about basic ideas and, more importantly, how to think. It’s brain training. The biggest thing to remember is that your classes should serve you – not the other way around.

  - Major in what is convenient: Classes are costly, and if you end up in ones you don't really want to take, it's a big loss of time. I recommend majoring in whatever forces you to take the fewest courses you don't want to take. I also recommend only getting a minor if it's by accident. I am currently a CS PhD student who technically majored in statistics as an undergrad but still worked on CS anyway. It never caused me any trouble or closed any doors for me.

  - Self-study generic prerequisites: lots of things can be learned on Khan Academy or elsewhere online with less time, effort, and money than it would take to learn them in college. I recommend self-studying them to get them out of the way so you can prioritize the kind of classes that teach you things that are harder to learn online.

  - Taking hard classes or a lot of classes is not a point of pride. Don't intentionally make your classes difficult. And similarly, don't strive for straight A's. If you goodhart your performance in classes, your ability to develop skills relevant to research will suffer. And by "you", I mean you! When I used to get this advice, I thought that somehow I was an exception and that if I worked hard enough, I could take hard classes, get great grades, and do great research. I didn't succeed.

  - Take advantage of course projects: Lots of classes require an end-of-semester project, so you should use those to try out research ideas.

- Projects are everything. There is nothing like them for developing research skills and experience.

  - Mentorship when getting into a field is key. There are different tiers of it, though. The best but rarest kind is when you find someone who brings you onto a project that they are also actively working on and which is close to their agenda. But if this kind of arrangement is hard for you to find, then you might be able to find someone who will give you guidance, semi-regular meetings, and advice (formally or informally) even though they might not be working by your side or heavily involved/invested in the project.

  - It's ok to work on other people's ideas. It's probably good to do this at first.

  - A project does not need to involve AI safety for you to gain valuable experience from it.

  - Summer planning starts in November. That's the time to start looking for opportunities, talking to professors, etc.

  - Papers are everything in the research world. For most research positions, you will want experience being on them before applying to grad school. It is papers, not grades, that will get you far.

- Read papers and take notes on them all the time.

  - Do deep dives. I think this is probably by far the most important piece of advice in this entire post.

  - If you are ambitious, consider reading a ton about a particular research topic and eventually writing a survey paper on it in your spare time. If you work really hard on it, it might be worth putting it on arXiv (otherwise, maybe LessWrong or someplace similar). The process of writing a survey paper is probably the best way possible to learn something thoroughly, come up with new ideas, find out what is missing in a field, and form a research agenda. It also makes a nice portfolio item. And if you apply to grad school or jobs, it's nice to be able to point out that you do research and write things for fun in your spare time.

  - If you find that the things you read about AI safety are all either on the Alignment Forum or discussed on the Alignment Forum, you are probably being much too parochial. There's a lot more to read.

  - When reading about a field, it is often best to start with survey papers. Here are some surveys about AI safety-relevant topics. I'll try to update this list periodically. Also, if you have recommendations for surveys to add, please let me know in the comments.

    - Interpretability

    - Anomaly detection

    - Adversaries

    - Diagnostics

    - Backdoors/Trojans

    - Specification learning

    - Recommender systems

    - RLHF

    - Paradoxical dilemmas for AGI/ASI

    - Embedded agency

    - Potential less dangerous ways to build AGI

    - Governance

    - General problems

    - Other general problems

    - Some more general problems

    - Yet another survey of general problems

- Networking is really valuable.

  - Cold emailing people to talk is great, but expect few replies from well-known, important people.

  - Often people will be happy to talk to you about their work and what they think should be done with it next. This is a great way to find collaborators and ideas.

  - In general, good conversations generate great ideas.

  - When you have ideas about a project or agenda, it may be helpful to type them up in a Google doc and try to find people to give you feedback on them.

Considerations for Grad School

In general, the choice to go to grad school is not a high-stakes one. If you go to grad school, you can always drop out or master out after a year or two. And if you don't, you can always still apply in a year or two. Doors usually stay open for years as long as you're working on research-related stuff.

Grad school is just another place to do research. Your decision about whether to go should usually be based mostly on what work you will have the chance to do, and with which people. If you want to do research, your primary consideration should be which places are a good fit for your research interests, not whether they are in academia. However, grad school does have a few inherent advantages and disadvantages. The main pros are that it lets you become a professor later and gives you more academic freedom. The main cons are having to take a few more classes and not making much money.

Also, check out these two guides.

Hope you enjoyed reading this! Feel encouraged to comment on stuff you think should be added or anything you emphatically agree/disagree with.

2 comments


comment by Shmi (shminux) · 2023-06-02T21:00:04.995Z

Isn't the field oversaturated?
