Keep humans in the loop

post by JustinShovelain, Elliot Mckernon (elliot) · 2023-04-19T15:34:20.960Z · LW · GW · 1 comment


In this post, we’ll explore how systems that are populated by people have some protection against emergent coordination failures in society. We’ll argue that if people are removed from the system or disempowered, technological development will drift away from improving human welfare, and coordination failures will worsen. We’ll then focus on AI development, exploring some practical techniques for AI development that keep humans in the loop.

Moloch: why do coordination failures happen?

In his classic essay Meditations on Moloch, Scott Alexander uses the ancient deity Moloch to personify the perverse incentives that can lead a society to become miserable in spite of individuals’ desire to be non-miserable. This metaphor draws on Allen Ginsberg’s poem “Howl”, where the god similarly symbolizes the disembodied societal system around us:

Moloch whose mind is pure machinery! Moloch whose blood is running money! Moloch whose fingers are ten armies!

What are the perverse incentives and misery we’re talking about? Let’s look at a quick example: why are countries reluctant to raise taxes on big business or the rich, despite the revenue this would bring in? Well, if one country lowers its tax rate, it’ll attract the rich and their businesses, improving the country’s economy. This leads to a “race to the bottom”, where each country tries to undercut the others, leaving them all with lower tax rates than they want. For a practical example, similar behaviour could be seen when American cities competed to attract Amazon’s HQ2 project.
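To make the “race to the bottom” concrete, here is a toy best-response model in Python. It’s our own illustration rather than a model from the economics literature, and everything in it is a hypothetical assumption: two countries, whole-percent tax rates, and a mobile tax base of 100 units that moves entirely to whichever country has the lower rate (splitting evenly on a tie). Each country, in turn, picks the rate that maximises its own revenue given the other’s current rate.

```python
def revenue(own: int, rival: int) -> int:
    """Tax revenue for one country under a hypothetical toy model:
    a mobile tax base of 100 units goes entirely to the lower-rate
    country and is split evenly when both rates are equal."""
    if own < rival:
        return own * 100
    if own == rival:
        return own * 50
    return 0


def best_response(rival: int) -> int:
    # Brute-force search over whole-percent rates 0..100.
    # max() returns the first maximal item, so ties go to the lower rate.
    return max(range(101), key=lambda rate: revenue(rate, rival))


def race_to_the_bottom(start: int = 30, rounds: int = 100) -> tuple[int, int]:
    """Let two countries alternately best-respond to each other's rate."""
    a = b = start
    for _ in range(rounds):
        a = best_response(b)  # country A adjusts to B's current rate
        b = best_response(a)  # then country B adjusts to A's new rate
    return a, b
```

Starting from 30% each, the countries undercut each other one point at a time until both sit at 1%, where each collects 50 units of revenue instead of the 1,500 it collected at 30%. Neither can do better by changing its rate alone, which is what makes this a coordination failure rather than a single bad decision.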

This is an example of a coordination failure: a failure by people to coordinate to produce the best global outcome. Scott presents Moloch as the answer to the question “why do coordination failures happen?”.

However, not all attempts at coordination fail, and not all systems devolve into Molochian hellholes. We could also ask “Why don’t coordination failures happen all the time?”, and in this article, we’ll propose a partial answer: that “systems”, broadly speaking, have some protection against Molochian dynamics when they are populated by people who interact with other people, and who have some control over the system.

When we reduce the level of interaction between people, or disempower people within a system, the alignment between technological development and human welfare in that system is weakened. That is, the correlation between technological development and human welfare is dependent on there being people “in the loop”.

This means we are at greater risk of coordination failures and Molochian misery when people are “out of the loop”, and therefore we should be cautious about societal changes that reduce the importance of humans, such as the accelerating development of AI and the automation of human labour and decision-making.

In the rest of this article, we’ll define what we mean by “humans in the loop” more precisely, then explore our claim that keeping humans in the loop will protect us against coordination failures and Moloch. We’ll then focus on AI safety and dive into a couple of proposals for how to keep people in the loop during AI development.

What are "loops", and why is it good to have people in them?

Broadly, we will use “loop” as a catch-all for the systems that we engage with in our lives, such as systems of governance, of production and distribution, of education, of technological development, and so on. The term “loop” hints at the importance of feedback loops within these systems. Adjusting the behaviour of feedback loops can dramatically alter the global behaviour of the system, and feedback loops can be operated by humans or non-humans (such as AI, bureaucracy, simple logic, and so on). This makes feedback important for understanding the role of people in loops.

We often think about loops from a big picture perspective, ignoring individuals and focusing on the system’s structure and incentives. This is why, in Meditations on Moloch, Scott argues that personifying emergent systemic outcomes as Moloch is useful:

It’s powerful not because it’s correct – nobody literally thinks an ancient Carthaginian demon causes everything – but because thinking of the system as an agent throws into relief the degree to which the system isn’t an agent.

However, these loops are full of people. A.J. Jacobs once tried to thank everyone involved in making his morning cup of coffee. He thanked the barista who served him, the taster who travelled the world picking the beans the cafe would serve, the logo designer, the farmers who grew the coffee, the lorry drivers who transported it, the workers who built and maintained the road those lorries travelled on, and so on. In the end he thanked over a thousand people who were involved in getting him that cheap morning coffee.

In analyzing these loops, and understanding how to protect and improve them, it's helpful to think in terms of the roles people have in them:

- The customers who determine the value of outputs.

- The suppliers who determine the cost of inputs.

- The workers who implement operations and object if something is likely to harm themselves or others.

- The managers who ensure that work flows well and safely, and who respond to worker objections.

- The owners and voters who reap the benefits of the system.

- The designers & founders who design the systems to be safe and good for people.

- The regulators & nonprofits who internalize externalities.

- The family & friends who care for and protect each other from harm.

People have empathy, and a basic desire to help each other. Our claim is that when people are in a loop, with some influence over it, and grant the people they interact with moral consideration, this mitigates the severity of the Molochian coordination failures (failures such as the “race to the bottom” example earlier, or the other examples presented in Scott’s post).

To be clear, we’re not claiming that people are purely selfless and kind, nor assuming that all interactions are ideal, positive, or fair. For example, democratically elected politicians require the support of voters, but that doesn’t mean that their goals are perfectly aligned with the electorate. Instead, our claim is that the people in the loop do limit the worst emergent effects by aligning new developments with human welfare.

If our hypothesis is correct, then we should see more severe coordination failures when there’s less “humanity” in the loop, whether that’s because (1) people are replaced by agents (human or otherwise) that are less capable, less aligned, or have less influence over the loop, or (2) people’s empathy for one another is diminished. Let’s look at these in more detail:

- Automation. Automating jobs replaces people with agents that may be less capable than, or less morally aligned with, the people they replace. For example, self-service checkouts are cheaper than employing humans, but the machines perform only a single task and remove the interaction between staff and customer. This can disadvantage some customers; for instance, it can make shopping more challenging for disabled people.

A more serious example is automation within the military. Militaries around the world are developing remote-controlled & autonomous robots. This insulates military personnel from danger, and machines can outcompete people at certain tasks. However, it also means that military actions are less constrained by the willingness of participants. Further, it separates military action from public awareness, since war coverage often focuses on domestic military casualties. And since opponents are often depersonalised in such situations, this can also reduce the importance of public approval for military decision-making.

- Circles of empathy. When one group’s sense of empathy towards another group is diminished, we often see more injustice and misery. For example, groups might be less considerate to those outside their tribe, or religion, or nation. At its most sinister, this can be a feature of propaganda and discourse prior to genocides, but it also features in other cases, such as the online disinhibition effect, where people act much more cruelly online than in person.

Generally, the circle of people to whom we give moral status has expanded throughout history, which has helped align technological development with human welfare, especially in recent history.

How do we preserve the benefits of humans in the loop?

So, if our claim that having people in the loop reduces the severity of coordination failures is correct, how can we apply it? How can we preserve the benefits of human interactions in social systems?

Well, we should be vigilant and cautious towards developments that disempower or replace people who interact with other people. Such developments have become more common in recent history and will become even more common as AI becomes more prevalent.

For example, even if we dislike both, we should probably favour remote-controlled drones in the military rather than complete automation. Note that this is more specific than merely opposing automation. In cases where human interactions are already limited or absent, automation is less dangerous, since it won’t reduce the “total empathy” in the system. Automating solitary tasks such as data entry or machine maintenance is less likely to cause coordination problems.

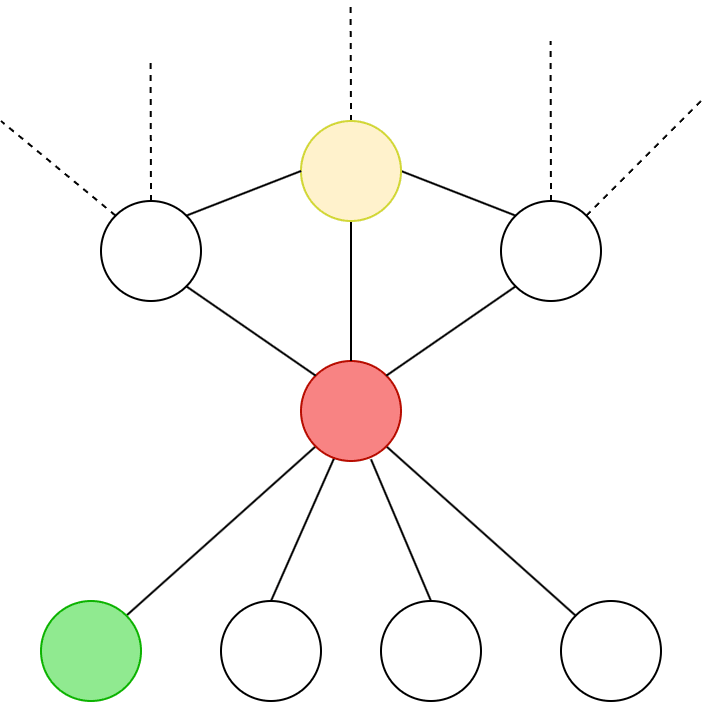

We could think of this graph-theoretically. If vertices represent people and edges represent their interaction within a system, we should prefer the automation of low-degree leaves to high-degree cut vertices. That is, in the following network we’d rather automate the green vertex than the yellow, and rather the yellow than the red:

When a vertex like the red one is automated, we should try to force connections between the people above and below that layer. For example, though supermarkets and restaurants have replaced many staff with digital interfaces, it’s generally still possible to cross the automated layer and try to engage with people overseeing the automata, or a person at a higher layer.
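The leaf-versus-cut-vertex preference can be sketched in a few lines of Python. The figure’s exact network isn’t reproduced here, so the edges below are a hypothetical stand-in with vertices named after its colours, and the ranking rule (non-cut vertices before cut vertices, then lower degree before higher degree) is our own simple encoding of the heuristic, not a method from the literature. A vertex counts as a cut vertex if removing it increases the number of connected components.

```python
from collections import defaultdict


def build_graph(edges):
    """Undirected interaction network: vertices are people, edges are interactions."""
    graph = defaultdict(set)
    for u, v in edges:
        graph[u].add(v)
        graph[v].add(u)
    return dict(graph)


def count_components(graph, removed=None):
    """Count connected components, treating `removed` (if given) as deleted."""
    seen = {removed} if removed is not None else set()
    components = 0
    for start in graph:
        if start in seen:
            continue
        components += 1
        seen.add(start)
        stack = [start]  # iterative depth-first search
        while stack:
            node = stack.pop()
            for neighbour in graph[node]:
                if neighbour not in seen:
                    seen.add(neighbour)
                    stack.append(neighbour)
    return components


def is_cut_vertex(graph, vertex):
    # Removing a cut vertex splits the network into more pieces.
    return count_components(graph, removed=vertex) > count_components(graph)


def automation_order(graph):
    """Rank vertices from 'safest to automate' to 'riskiest' (hypothetical heuristic):
    non-cut vertices first, then lower degree first, then by name for stable ties."""
    return sorted(graph, key=lambda v: (is_cut_vertex(graph, v), len(graph[v]), v))


# Hypothetical network loosely modelled on the figure's colour labels.
EXAMPLE_EDGES = [
    ("a1", "a2"), ("a1", "red"), ("a2", "red"), ("a3", "red"),
    ("red", "yellow"), ("red", "b1"), ("yellow", "b1"),
    ("yellow", "b2"), ("b1", "b2"), ("b2", "green"),
]
```

In this made-up network, the green leaf ranks near the front and the red hub ranks last. Note that b2 also ranks as risky: it is green’s only link to everyone else, which is exactly the situation where, if it were automated, we’d want to force a connection across the automated layer.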

Using AI to develop AI

Let’s focus now on AI development. Before we do, note that some AI developers use the phrase “human-in-the-loop” to describe a particular kind of ML model, but that’s a much narrower usage than ours.

We’ve already talked about the impact of existing automation on human-in-the-loop systems, but AI development is accelerating. New developments such as GPT and other large language models are likely to disrupt loops a lot more, and radically transformative AI may be near.

Further, there’s a multiplier effect when automation occurs in the loop of AI development. As we’ve discussed, automation can have dire consequences in certain situations, so the developmental loops that create AI and automata are critically important. It’s especially crucial to keep humans in these loops, yet this is naturally a field whose people are familiar with machine learning, automation, and AI and their uses, and so are well placed to automate their own work. For example, with mesa-optimizers, a learned model produced by one optimizer (such as gradient descent) is itself an optimizer, which makes alignment more challenging.

There are some practical proposals for mitigating or avoiding some of the risks of automated AI development. We’ll briefly outline two we’ve come across, though we note that, while useful, they are not sufficient to resolve the problems we’ve described.

- In Rafael Harth’s post, A guide to Iterated Amplification & Debate, he explores two proposals for having an AI system help with its own training while keeping a person in the loop.

Both focus on reducing outer misalignment, that is, a mismatch between what we want and what we use to train the AI (the training signal). For example, outer alignment is easy with a chess AI, since our goal, “build an AI that wins at chess”, maps cleanly onto the training signal, “win at chess”. In contrast, GPT is outer-misaligned, since the training signal is “predict the next word in this sequence”, while our goal is closer to “choose the next word that will be most useful”. GPT repeats false facts it has seen in its training data because doing so achieves its goal of predicting what word comes next.

- The first proposal, credited to Paul Christiano, is Iterated Distillation & Amplification, or IDA. Here, the first step is to distill a human’s competence at a task into a model which is quicker but less accurate than the human. Then, the human and the model together are better than either alone. This is amplification: the human can outsource easy questions to her fast-but-less-smart simulacrum, giving her more time to tackle hard questions herself. This is one step each of distillation and amplification, and the next step would be to distill this human+model into a new model, then give the human access to the new model, and so on.

Each distillation reduces performance, but less than each amplification increases it. After many iterations, this provides the human with a model that is close to her performance but faster.
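The distill-then-amplify loop can be sketched as a toy numerical model. This is our illustration, not Christiano’s or Harth’s formulation: we assume (hypothetically) that “skill” is a single number, that distillation keeps a fixed fraction of the teacher’s skill, and that amplification adds a fixed fraction of the model’s skill to the human’s. The parameter names `fidelity` and `leverage` are invented for this sketch.

```python
def distill(teacher_skill: float, fidelity: float) -> float:
    # Distillation: train a faster model to imitate the teacher. In this toy we
    # assume the model keeps a fixed fraction `fidelity` (< 1) of the teacher's skill.
    return teacher_skill * fidelity


def amplify(human_skill: float, model_skill: float, leverage: float) -> float:
    # Amplification: the human delegates easy sub-questions to the model, so the
    # human+model team beats the human alone. `leverage` is a made-up parameter
    # for how much of the model's skill the team gains.
    return human_skill + leverage * model_skill


def iterated_distillation_amplification(
    human_skill: float = 1.0,
    fidelity: float = 0.8,
    leverage: float = 0.2,
    rounds: int = 10,
) -> list[float]:
    """Return the skill of each successive distilled model."""
    model = distill(human_skill, fidelity)  # first, distill the human alone
    history = [model]
    for _ in range(rounds):
        team = amplify(human_skill, model, leverage)  # human + current model
        model = distill(team, fidelity)               # distill the team into a new model
        history.append(model)
    return history
```

With these made-up parameters, while the model is below its limit each amplification adds more than the following distillation removes, so successive models improve and level off near 0.95 of the human’s skill (the fixed point fidelity · h / (1 − fidelity · leverage)). Other parameter choices put that fixed point above or below the human, so the numbers illustrate the shape of the loop, not the performance of real IDA.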

- The second proposal, credited to Geoffrey Irving, is debate. The idea here is for two AIs to debate a question by breaking it down into sub-questions. In the game, an AI system argues with an identical copy of itself. The input to the game is a question, and the output is a transcript of the arguments made by each agent. A human judges and decides who wins while enforcing two principles: (1) If one agent points to a particular part of their opponent's argument, the other should respond by arguing for that specific point. If it changes the subject, it loses. (2) If any sub-question is resolved in favour of one side, that side wins the entire debate (you can read the full post for more detail on why these rules are chosen).

This has the advantage of reducing the complexity of the problem at each step, since sub-questions and sub-arguments are sub-sections of the whole problem. Further, the human judge doesn’t have to concern herself with decomposing the question, since the AIs handle that. At most, she has to figure out whether a decomposition is valid.
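The recursive structure of debate can be sketched as a toy in Python. This is our simplification, not Irving’s protocol: we assume (hypothetically) that a claim is true exactly when all of its sub-claims are true, that the challenging AI always knows which sub-claim is false, and that the human judge can check leaf claims directly. The `Claim` type and the example claims are invented for illustration.

```python
from dataclasses import dataclass, field
from typing import Callable, Optional


@dataclass
class Claim:
    text: str
    subclaims: list["Claim"] = field(default_factory=list)
    # Only leaf claims carry a truth value the human judge can check directly.
    leaf_truth: Optional[bool] = None


def is_true(claim: Claim) -> bool:
    # What a capable debater "knows" in this toy: a claim holds iff all its parts hold.
    if not claim.subclaims:
        return bool(claim.leaf_truth)
    return all(is_true(c) for c in claim.subclaims)


def debate(claim: Claim, judge: Callable[[Claim], bool]) -> bool:
    """Return True if the side defending `claim` wins the debate."""
    if not claim.subclaims:
        return judge(claim)  # the human only ever judges one narrow leaf claim
    # The challenger points at one specific sub-claim (a false one if any exists),
    # and the defender must argue for exactly that point (rule 1).
    false_parts = [c for c in claim.subclaims if not is_true(c)]
    disputed = false_parts[0] if false_parts else claim.subclaims[0]
    # Whoever wins the sub-debate wins the entire debate (rule 2).
    return debate(disputed, judge)
```

For example, a judge that records which claims she examined can be passed in as `judge`. However deep the argument tree, she examines exactly one leaf per debate, which is the reduction in complexity described above.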


- In their post, Cyborgism, NicholasKees and Janus offer a different way to mitigate the problem. In particular, they propose training and empowering “cyborgs” (that is, improving the capabilities of humans in AI development) as a way to keep humans in the loop without being outcompeted by those who go for full automation.

At a high level, the plan is to train and empower “cyborgs”, a specific kind of human-in-the-loop system which enhances and extends a human operator’s cognitive abilities without relying on outsourcing work to autonomous agents. This differs from other ideas for accelerating alignment research by focusing primarily on augmenting ourselves and our workflows to accommodate unprecedented forms of cognitive work afforded by non-agent machines, rather than training autonomous agents to replace humans at various parts of the research pipeline.

Rather than turning GPT into an agent (an entity with its own goals that engages with the world purposefully), they argue that we should use GPT as a simulator as part of a human-in-the-loop system that enhances a person’s capabilities. Rather than giving an AI its own AI research assistant, such people can take the role of a research assistant to “a mad schizophrenic genius that needs to be kept on task, and whose valuable thinking needs to be extracted in novel and non-obvious ways”.

Conclusion

Humans evolved empathy and a sense of morality to help us cooperate fairly. Groups of cooperating individuals can outcompete groups of the selfish, but selfish individuals in otherwise cooperative groups can sometimes get the best of both worlds. To mitigate this, we evolved a desire for fair reciprocity, to reward those who fit with societal norms and “trade” honestly and fairly.

These morals are baked in at a pretty deep level for us, but they aren’t an inevitable consequence of intelligence. The automata we relinquish control to don’t replicate human empathy and intelligence. Relinquishing control to automata can separate people, potentially removing empathy from at least one layer of the network. To summarise our recommendations:

- Be cautious about automating the roles of people in the loops you influence.

- Don’t mistake improved efficiency for improved outcomes.

- If you influence any AI development loops, encourage development that keeps humans in the loop and keeps them empowered.

- If you influence any AI development loops, resist development that removes people from the loop, or disempowers them.

If you’re interested in this idea, or work in AI development, we recommend reading the articles we summarised earlier (A guide to Iterated Amplification & Debate and Cyborgism), and you may also enjoy these related posts:

- Improving the future by influencing actors' benevolence, intelligence, and power by MichaelA and Justin Shovelain, where they discuss the role of actors and how to influence them positively.

- Moloch and the Pareto optimal frontier by Justin Shovelain, which covers a Molochian topic similar to this article.

We’d love your input on this topic. Can you extend these ideas or come up with new examples? Could you use these ideas to design improvements to AI development and control? Let us know in the comments.

This article is based on the ideas of Justin Shovelain, written by Elliot Mckernon, for Convergence Analysis. We’d like to thank David Kristoffersson for his input and feedback, and Scott Alexander, Rafael Harth, NicholasKees, & Janus for the posts we’ve referenced and discussed in this article.

1 comment

comment by Justin Bullock (justin-bullock) · 2023-07-10T23:44:23.164Z

Thanks for this post. As I mentioned to both of you, it feels a little bit like we have been ships passing one another in the night. I really like your idea here of loops and the importance of keeping humans within these loops, particularly at key nodes in the loop or system, to keep Moloch at bay.

I have a couple scattered points for you to consider:

- In my work in this direction, I've tried to distinguish between roles and tasks. You do something similar here, which I like. To me, the question often should be about what specific tasks should be automated as opposed to what roles. As you suggest, people within specific roles bring their humanity with them to the role. (See: "Artificial Intelligence, Discretion, and Bureaucracy")

- One term I've used to help think about this within the context of organizations is the notion of discretion. This is the way in which individuals use their decision-making capacity within a defined role. It is this discretion that often allows individuals holding those roles to shape their decision making in a humane and contextualized way. (See: "Artificial discretion as a tool of governance: a framework for understanding the impact of artificial intelligence on public administration")

- Elsewhere, coauthors and I have used the term administrative evil to examine the ways in which substituting machine decision making for human decision making dehumanizes the decision-making process, exacerbating the risk of administrative evil being perpetuated by an organization. (See: "Artificial Intelligence and Administrative Evil")

- One other line of work has looked at how the introduction of algorithms or machine intelligence within the loop changes the shape of the loop, potentially in unexpected ways, leading to changes in inputs to decision making throughout the loop. That is, machine evolution influences organization (loop) evolution. (See: "Machine Intelligence, Bureaucracy, and Human Control" & "Artificial Intelligence, bureaucratic form, and discretion in public service")

- I like the inclusion of the work on Cyborgism. It seems to me that in some ways we've already become Cyborgs to match the complexity of the loops in which we work and play together, as they've already evolved in response to machine evolution. In theory at least, it does seem that a Cyborg approach could help overcome some of the challenges presented by Moloch and failed attempts at coordination.

Finally, your focus on loops reminded me of "Gödel, Escher, Bach" and Hofstadter's focus there and in his "I Am a Strange Loop." I like how you apply the notion to human organizations here. It would be interesting to think about different types of persistent loops as a way of describing different organizational structures, goals, resources, etc.

I'm hoping we can discuss together sometime soon. I think we have a lot of interest overlap here.

Thanks for this post! Hope the comments are helpful.