A Formula for the Value of Existential Risk Reduction

Post by harsimony, 2021-03-02. This is a link post for https://harsimony.wordpress.com/2021/02/28/a-formula-for-the-value-of-existential-risk-reduction/


(EDIT: fixed the formatting issues on an earlier version of this post)

How important is existential risk reduction (XRR)? Here, I present a model to estimate the value of eliminating existential risks.

Note that this model is exceedingly simple; it is actually contained within other studies of existential risk, but I feel the implications have not been fully considered.

The Model

Let’s imagine that, if we did nothing, there is a certain per-century probability of human extinction $p$. This means that the discount rate (survival probability) is $r = 1 - p$. The total value of the future, discounted by the probability of extinction each century, would then be[1]:

$$\mathcal{V} = \sum_{t=0}^{\infty} r^t V(t)$$

where $V(t)$ is the expected value (however that might be defined) of century $t$.
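To make the definition concrete, here is a minimal Python sketch that approximates $\mathcal{V}$ for an arbitrary per-century value function by truncating the series at a large horizon. The function name `future_value`, the truncation horizon, and the convention that the sum starts at the current century ($t = 0$) are illustrative assumptions, not part of the original post.

```python
from typing import Callable


def future_value(r: float, value_of_century: Callable[[int], float],
                 horizon: int = 10_000) -> float:
    """Approximate sum_{t=0}^{horizon-1} r**t * V(t).

    The text uses an infinite series; truncating at `horizon` centuries is an
    assumption made here so the sum can be computed. For r well below 1 the
    neglected tail is negligible.
    """
    if not 0.0 <= r < 1.0:
        raise ValueError("survival probability r must lie in [0, 1)")
    return sum(r**t * value_of_century(t) for t in range(horizon))


# Constant value of 1 per century with a 1% per-century extinction risk.
print(future_value(0.99, lambda t: 1.0))  # close to 100
```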

For the purposes of this post, our goal is to maximize $\mathcal{V}$ via XRR.

To do this, imagine we eliminate a specific existential risk (e.g., get rid of all nuclear weapons). This has the effect of raising $r$ by a multiplicative factor $k \ge 1$, making the new discount rate $kr$ (note that $k \le 1/r$, since the overall discount rate can’t be larger than one).

Now, the new value of the future is:

$$\mathcal{V}(k) = \sum_{t=0}^{\infty} (kr)^t V(t)$$

This “risk reduction coefficient” $k$ is a natural way to represent XRR because the survival probabilities associated with different risks multiply to produce the overall discount rate.

For example, if there were a per-century probability of extinction from asteroids $p_a$ and a per-century probability of extinction from nuclear war $p_n$, then the overall discount rate would be $r = (1 - p_a)(1 - p_n)$. So, if we eliminated the risk of nuclear extinction, the new discount rate would be $1 - p_a$. In this case, $k = \frac{1}{1 - p_n}$.
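The example can be checked numerically. The sketch below assumes, as the example implicitly does, that the risks are independent, so their survival probabilities multiply. The helper names and the risk values (0.1% per century from asteroids, 0.5% from nuclear war) are hypothetical, chosen only for illustration; `math.prod` requires Python 3.8 or later.

```python
import math
from typing import Sequence


def discount_rate(risks: Sequence[float]) -> float:
    """Overall per-century survival probability r, assuming independent risks."""
    return math.prod(1.0 - p for p in risks)


def risk_reduction_coefficient(risks: Sequence[float], eliminated: int) -> float:
    """Factor k such that k * r is the survival probability once risk number
    `eliminated` is removed, i.e. k = 1 / (1 - p_eliminated)."""
    return 1.0 / (1.0 - risks[eliminated])


# Hypothetical per-century risks: asteroids (index 0), nuclear war (index 1).
risks = [0.001, 0.005]
r = discount_rate(risks)                  # (1 - 0.001) * (1 - 0.005)
k = risk_reduction_coefficient(risks, 1)  # eliminate the nuclear risk
print(r, k, k * r)  # k * r equals 1 - 0.001 up to floating-point rounding
```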

If we assume that $V(t) = V$ is constant each century[2], we obtain a simple formula for $\mathcal{V}$:

$$\mathcal{V}(k) = \sum_{t=0}^{\infty} (kr)^t V = \frac{V}{1 - kr}$$
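As a sanity check on the closed form, a short sketch: for a constant per-century value, the geometric series $\sum_t (kr)^t V$ should match $V/(1 - kr)$ whenever $kr < 1$. The function names and the 10,000-century truncation used for the comparison are illustrative choices.

```python
def closed_form_value(V: float, r: float, k: float = 1.0) -> float:
    """Value of the future V / (1 - k*r) for a constant per-century value V."""
    kr = k * r
    if not 0.0 <= kr < 1.0:
        raise ValueError("k * r must lie in [0, 1) for the series to converge")
    return V / (1.0 - kr)


def truncated_series(V: float, r: float, k: float = 1.0, horizon: int = 10_000) -> float:
    """Partial sum of (k*r)**t * V for t = 0 .. horizon - 1."""
    return sum((k * r) ** t * V for t in range(horizon))


for r in (0.98, 0.99):
    print(r, closed_form_value(1.0, r), truncated_series(1.0, r))
```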

What happens to $\mathcal{V}$ when we reduce existential risk in a world where X-risk is high, versus a world where X-risk is low?

Imagine two scenarios:

- A world with nuclear weapons which also has a low level of existential risk from other sources (e.g., few dangerous asteroids in its solar system, the AI alignment problem has been solved, etc.). $r_1 = 0.99$ in this world (that is, there is a 99% chance of surviving each century).

- A world with nuclear weapons which also has a high level of existential risk from other sources (e.g., many dangerous asteroids, unaligned AI, etc.). $r_2 = 0.98$ in this world (that is, there is a 98% chance of surviving each century).

It is easy to calculate the value of the future in each scenario if nothing is done to reduce existential risk:

$$\mathcal{V}_1 = \frac{V}{1 - 0.99} = 100V, \qquad \mathcal{V}_2 = \frac{V}{1 - 0.98} = 50V$$

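Next, let’s posit that the existential risk from nuclear war is 0.5% each century in both worlds. An action which completely eliminated the risk of nuclear war would increase $r$ to roughly 0.995 and 0.985 in scenarios 1 and 2 respectively. $\mathcal{V}$ in each case is then:

$$\mathcal{V}_1' = \frac{V}{1 - 0.995} = 200V, \qquad \mathcal{V}_2' = \frac{V}{1 - 0.985} \approx 66.7V$$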

So in this example, removing the same existential risk is more valuable to the “safe” world in both an absolute and a relative sense.
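The two-scenario arithmetic can be reproduced with a short sketch. Following the text, eliminating nuclear risk is approximated as adding 0.005 to $r$ in each world (the exact multiplicative factor $k = 1/0.995$ gives nearly the same numbers). $V$ is set to 1, so values are in units of one century’s value; the function name is hypothetical.

```python
def value_of_future(r: float, V: float = 1.0) -> float:
    """V / (1 - r): value of the future with constant per-century value V."""
    return V / (1.0 - r)


NUCLEAR_RISK = 0.005  # per-century risk from nuclear war, as in the text

for name, r in [("safe world", 0.99), ("risky world", 0.98)]:
    before = value_of_future(r)
    after = value_of_future(r + NUCLEAR_RISK)  # text's approximation of removing nuclear risk
    print(f"{name}: {before:.1f} -> {after:.1f} "
          f"(absolute gain {after - before:.1f}, ratio {after / before:.2f})")

# Prints:
# safe world: 100.0 -> 200.0 (absolute gain 100.0, ratio 2.00)
# risky world: 50.0 -> 66.7 (absolute gain 16.7, ratio 1.33)
```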

Let’s examine this in a more general way.

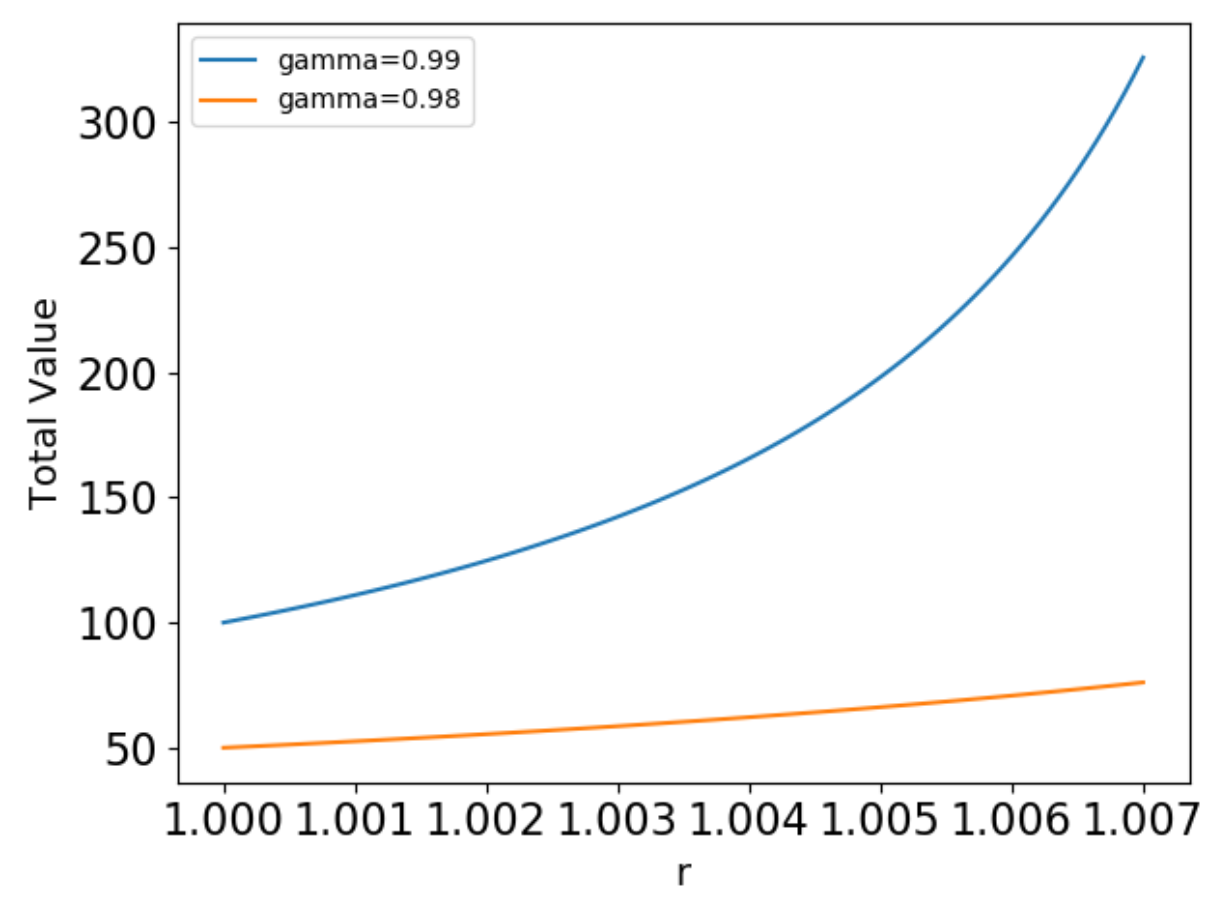

What happens to the value of the future in each world as we vary the risk reduction parameter $k$? Consider $\mathcal{V}$ plotted against $k$ for two different initial discount rates, $r_1$ and $r_2$.

Once again, the trend is very different depending on the baseline level of risk!
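The plot’s exact settings are not given in the text, so the sketch below regenerates the kind of data it shows under two assumptions: the baselines are the same $r_1 = 0.99$ and $r_2 = 0.98$ used in the scenarios above, and $V = 1$. It tabulates $\mathcal{V}(k) = V/(1 - kr)$ over a grid of $k$ values that keeps $kr < 1$ in both worlds; the grid itself is an arbitrary choice.

```python
def value_of_future(k: float, r: float, V: float = 1.0) -> float:
    """V / (1 - k*r), defined only while k * r < 1."""
    return V / (1.0 - k * r)


R1, R2 = 0.99, 0.98  # assumed baselines: low-risk and high-risk worlds

# k must stay below 1 / r for both worlds; 1 / 0.99 is about 1.0101.
ks = [1.0 + 0.001 * i for i in range(10)]  # k = 1.000, 1.001, ..., 1.009

print(f"{'k':>6} {'V (r=0.99)':>12} {'V (r=0.98)':>12}")
for k in ks:
    print(f"{k:6.3f} {value_of_future(k, R1):12.1f} {value_of_future(k, R2):12.1f}")
```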

The Implications

There are several interesting implications of this model.

First, as the previous example illustrates, the higher the level of X-risk, the lower the benefit of the same constant reduction in X-risk.

The second implication follows from the first: the more you have already reduced X-risk, the more valuable further reductions are. You can imagine sliding along the upper blue line; as you move further right, the slope increases, meaning that each marginal X-risk reduction is worth more than the last.
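Both implications can be read off the derivatives of the constant-value formula $\mathcal{V}(k) = V/(1 - kr)$. This short worked derivation is added for illustration and assumes $V > 0$ and $kr < 1$:

$$
\frac{d\mathcal{V}}{dk} = \frac{V r}{(1 - kr)^2} > 0,
\qquad
\frac{d^2\mathcal{V}}{dk^2} = \frac{2 V r^2}{(1 - kr)^3} > 0
$$

The positive second derivative is the increasing slope along the curve. At $k = 1$ the marginal value is $Vr/(1 - r)^2$, which grows with $r$: it is $9900V$ for $r = 0.99$ but only $2450V$ for $r = 0.98$, so the same small reduction is worth more in the safer world.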

What does this model imply for the strategy of existential risk reduction? Given these accelerating returns, it might be a good idea to find quick, early ways to reduce risk. Potentially, initial X-risk reductions would increase the value of subsequent reductions and create a snowball effect, where more and more investment occurs as the value increases.

There is a flip side to this effect. Innovation in certain areas can increase existential risk (e.g., developments in AI) and thus lower $r$, reducing the value of XRR. This means that in order to maintain the value of the future, efforts to prevent human extinction have to keep pace with technological growth. This further increases the urgency of finding ways to reduce existential risk before technology advances too quickly.

Do the increasing returns and high urgency imply that some sort of “hail Mary” with regard to XRR would be a good idea? That is, should we look for unlikely-to-succeed but potentially large reductions in X-risk? Since the value of the future increases so quickly with increases in $k$, even a plan with a small chance of success would have a high expected value. I am hesitant to endorse this strategy since the model overestimates the upside of XRR by assuming that the lifetime of the universe is infinite[3]; but it seems like something which deserves more thought.
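A back-of-the-envelope sketch of why long shots can look attractive under this model. All numbers are hypothetical: a “sure” plan that raises $r$ from 0.98 to 0.985, versus a “hail Mary” that succeeds with probability 0.1, raising $r$ to 0.999, and otherwise leaves $r$ unchanged. Values use the constant-value formula with $V = 1$.

```python
def value_of_future(r: float, V: float = 1.0) -> float:
    """V / (1 - r) for a constant per-century value V; requires r < 1."""
    return V / (1.0 - r)


BASELINE_R = 0.98

# Hypothetical "sure" plan: always raises r to 0.985.
sure_plan = value_of_future(0.985)

# Hypothetical "hail Mary": 10% chance of raising r to 0.999, otherwise no change.
P_SUCCESS = 0.1
hail_mary = (P_SUCCESS * value_of_future(0.999)
             + (1 - P_SUCCESS) * value_of_future(BASELINE_R))

print(f"sure plan: {sure_plan:.1f}, hail Mary (expected): {hail_mary:.1f}")
# sure plan: 66.7, hail Mary (expected): 145.0
```

The success branch dominates because $\mathcal{V}$ blows up as $r$ approaches 1, which is exactly the infinite-horizon assumption the text is cautious about.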

Even this trivial formula has implications for XRR strategy, and I am interested to see whether these considerations hold up in more complete treatments of existential risk. Aschenbrenner 2020 and Trammell 2021 present more sophisticated models of the tradeoff between growth, consumption, and safety. I hope to consider these in more depth in a future post.

This model may also be useful as a pedagogical tool by demonstrating the value of eliminating small risks to our existence while highlighting different strategic concerns.

Notes

1. If you take issue with the fact that I am using an infinite series when the lifetime of the universe is probably finite, you can consider this formula to be an upper bound on the value of XRR.

2. What happens in the more realistic case that $V(t)$ is not constant? Concretely, we can expect the value of each century to increase significantly with time. An increasing $V(t)$ means that XRR is more valuable than in the constant case: since most of the value will occur in the future, it becomes even more important to survive longer. If $V(t)$ peaks early or falls in the future, then XRR is less valuable, since there is less reason to be concerned about surviving long term. Note that the other implications hold as long as $V(t)$ is independent of XRR efforts.

3. To see how this assumption raises the value of a “hail Mary”, note that $\mathcal{V} = \frac{V}{1 - kr}$ goes to infinity as $kr \to 1$.
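The notes can be explored with a generalized sketch. It assumes, purely for illustration, that the value of each century grows geometrically, $V(t) = V g^t$ with a hypothetical growth factor $g$, and that the future is cut off after a finite horizon of $T$ centuries. The sum is then a finite geometric series with ratio $q = krg$, giving $V(1 - q^T)/(1 - q)$, or $VT$ when $q = 1$. Setting $g = 1$ recovers the constant case, and letting $T \to \infty$ with $q < 1$ recovers $V/(1 - kr)$.

```python
import math


def finite_horizon_value(V: float, r: float, k: float = 1.0,
                         g: float = 1.0, T: int = 1_000) -> float:
    """Sum of (k*r*g)**t * V for t = 0 .. T-1, i.e. V(t) = V * g**t cut off at T centuries.

    g (growth factor) and T (horizon) are hypothetical parameters for illustration.
    """
    q = k * r * g
    if math.isclose(q, 1.0):
        return V * T  # every term equals V
    return V * (1.0 - q**T) / (1.0 - q)


V, r = 1.0, 0.99
print(finite_horizon_value(V, r, T=100))             # below the infinite-series value of 100
print(finite_horizon_value(V, r, k=1 / 0.99, T=100))  # k*r = 1: bounded at V*T, not infinite
print(finite_horizon_value(V, r, g=1.005, T=100))    # growing V(t): larger than the constant case
```

With a finite horizon the “hail Mary” upside is capped at $VT$ rather than diverging, consistent with reading the infinite series as an upper bound.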
