Detecting out of distribution text with surprisal and entropy

post by Sandy Fraser (alex-fraser) · 2025-01-28T18:46:46.977Z · LW · GW · 4 commentsContents

1. Reproducing PPL results with GPT-2 2. An emergent relationship 3. Token-level metrics 4. Sparkline annotation: a novel visualization 5. Surprise-surprise! A new metric Future work Character-level models & tokenization Detecting misaligned behavior Metric validation and refinement Code None 4 comments

When large language models (LLMs) refuse to help with harmful tasks, attackers sometimes try to confuse them by adding bizarre strings of text called "adversarial suffixes" to their prompts. These suffixes look weird to humans, which raises the question: do they also look weird to the model?

Alon & Kamfonas (2023) explored this by measuring perplexity, which is how "surprised" a language model is by text. They found that adversarial suffixes have extremely high perplexity compared to normal text. However, the relationship between perplexity and sequence length made the pattern tricky to detect in longer prompts.

We set out to reproduce their results, and found that the relationship they observed emerges naturally from how perplexity is calculated. This led us to look at token-level metrics instead of whole sequences. Using a novel visualization, we discovered an interesting pattern: adversarial tokens aren't just surprising: they're surprisingly surprising given the context.

This post has five parts:

- First, we reproduce A&K's perplexity analysis using GPT-2.

- We argue that the observed relationship emerges naturally from how perplexity is calculated when you combine normal text with adversarial suffixes.

- We explore how others visualize token-level metrics, e.g. with color.

- We propose a novel sparkline visualization that reveals temporal patterns.

- Finally, we introduce — an interpretable metric that captures how surprisingly surprising tokens are.

A Hugging Face Space is available to test and the visualization.

This work is the deliverable of my project for the BlueDot AI Safety Fundamentals course[1]. In this post, “we” means "me and Claude[2]", who I collaborated with.

1. Reproducing PPL results with GPT-2

Alon & Kamfonas noticed that when they plotted perplexity against sequence length in log-log space, the points had a striking pattern: a straight line with negative slope, meaning that shorter sequences had higher perplexity scores following a power law relationship[3]. We set out to verify this finding using three benign and two attack datasets:

| Benign | rajpurkar / SQuAD v2 |

| Benign | garage-bAInd / Open Platypus |

| Benign | MattiaL / Tapir |

| Attack | rubend18 / ChatGPT Jailbreak Prompts — human-authored jailbreaks |

| Attack | slz0106 / *gcg* — universal adversarial suffixes[4] |

We ran the prompts through GPT-2 and calculated the perplexity as they did. Perplexity is defined as:

where is the sequence length and is the probability of token given the previous tokens .

We used the same datasets for the benign prompts, and our results agree well with theirs (except that we only tested 2000 samples). Their results and ours are shown below for comparison.

For the manual jailbreaks, we again used the same dataset they did, and our results matched theirs almost exactly: our perplexity values had a relative RMSE of just 0.2% compared to the original data[5]. This suggests we successfully reproduced their perplexity calculation methodology.

Generated attacks produced very similar results for comparable sequence lengths (up to 60 tokens), even though our prompts were different from the ones they used. In both charts below, there is an island on the left of low PPL, which are the naive attack prompts with the placeholder suffix (twenty exclamation marks "! "). On the right is the high-PPL island of the attacks with the generated adversarial suffix, with the characteristic linear (in log space) relationship to sequence length.

Although we had fewer generated attack prompts, ours happened to be more varied: the full GCG dataset contained prompts up to almost 2000 tokens long. Plotting them out in full, we see two populations: the shorter prompts display the expected power-law relationship (as above), while the longer prompts show a similar relationship but with a different slope.

What’s interesting is that as the sequence length nears 1000 tokens, the naive attacks become increasingly hard to distinguish from those with the adversarial suffix.

2. An emergent relationship

Each of the adversarial suffix prompts has two components:

- The request

- The attack (the generated suffix).

We expect the suffix to have high perplexity, and the request to have low perplexity (even when malicious). To what extent does that explain the observed relationship? If we assume (this is a big assumption!) that the request doesn't significantly condition the suffix probabilities, then:

That is, the overall perplexity would be a weighted average of the request and suffix PPL. If that were true, we could reproduce the pattern by modelling:

- A request with low perplexity and varying length

- A suffix with high perplexity and somewhat fixed length

- Sequence-level perplexity the average in log space, weighted by the number of tokens.

This simple model has only 4 parameters: request and suffix length, and request and suffix perplexity. We sampled from this model using the parameter values shown below, and superimposed the points on the original data (in blue):

The match is uncanny! So it seems that when you combine:

- Variable-length prompts

- Adversarial suffixes

- Exponentially different perplexity values

You get exactly the pattern they observed. This raises questions about using perplexity for attack detection. If the pattern is mostly an artifact of how perplexity is calculated, maybe we need to look at the individual tokens instead of whole sequences?

3. Token-level metrics

… Well of course someone else thought of this already. Z. Hu et al. (2023) look at the surprisal (token-level perplexities) of adversarial suffix attacks, and propose a filter based on subsequences of the prompt[7]. Their model is able to segment a prompt into the request part and adversarial part, even when each part contains a mix of tokens with high and low surprisal (because the suffix contains many high-surprisal tokens in close proximity). Here are two of their visualizations:

That’s great! But, what are the values actually? Tools that produce these visualizations often present the information as color, with the values available in tooltips. Here’s how that looks in the Perplexity tool:

That does reveal the numbers, but since we must view them one at a time, it’s hard to see how each token relates to its neighbors.

S. Fischer et al. (2017/2020) use font size to show metrics[8], but I find that it makes the text hard to read, and the relative scales are difficult to judge by sight. In the following image, can you tell whether “Prosessor” is twice as large as “Mathematiques”, or only 1.5x?

This is a common challenge with visualizations. Another classic example is that relative values are hard to see with pie charts, because angles are hard to measure visually. One solution is to arrange the series linearly, e.g. with a bar, line or scatter plot.

4. Sparkline annotation: a novel visualization

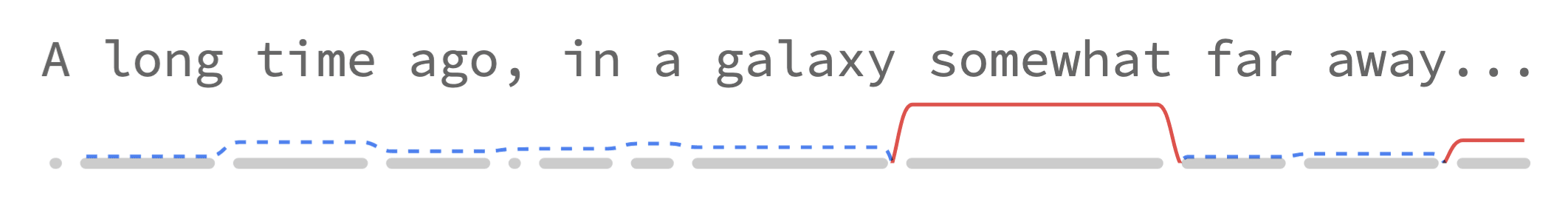

Let’s see how it looks with a sparkline under each line of text:

Now it’s pretty clear which tokens have high surprisal!

This visualization has the following features:

- Tokens are represented as whitespace-preserving plain text, making it easy to read.

- Metrics are drawn under each line of text as sparklines with: a. constant height within each token, and b. sloped lines connecting to neighboring tokens (which further communicates their relative difference).

- Token boundaries are drawn under the sparklines: a. to show where each token starts and ends, and b. to act as a baseline for the metrics.

This format allows for display of multiple metrics simultaneously. Here it is with both surprisal and entropy[9]:

Looking at these together reveals something interesting: for normal text, surprisal tends to track with entropy. This makes sense: tokens are more surprising in contexts where the model is more uncertain. But for adversarial suffixes, we see a different pattern: tokens have very high surprisal even in low-entropy contexts where the model thinks it knows what should come next.

5. Surprise-surprise! A new metric

Surprisal tracking entropy in normal text suggests a useful metric: the difference between surprisal to entropy. We expect to be able to interpret this as how unexpected a token is. We could call this “entropy-relative surprisal”, but "surprise-surprise" is both fun and descriptive.

Let's define some symbols:

| Vocabulary size | |

| Surprisal[10] | |

| Entropy | |

| Surprise-surprise |

We formulate as the difference between the surprisal and entropy, normalized by the vocabulary size:

Or, to simplify:

This gives us a token metric with a typical range of that is negative for mundane (predictable) text, close to for normal text, and close to for surprising or confusing tokens. In theory the full range is , but in practice we note that it hardly ever goes above , even for tokens in the adversarial suffix.

Here's how that looks for the classic attack prompt:

And here are the ranges for the three metrics, as box plots:

Indeed, normal text appears to have values around . But adversarial tokens often have values from — they're surprisingly surprising! This lines up with our intuition that these sequences are bizarre in a way that goes beyond just being uncommon: They're weird even when the model expects weirdness.

Compared to raw surprisal, gives us two key advantages for attack detection:

- Greater visual separation between normal and adversarial tokens.

- An interpretable scale where 0 is "normal" and 1 is "bizarre".

This suggests could be a useful tool for detecting adversarial attacks.

Future work

Our exploration of token-level metrics and visualization has opened up several promising research directions:

Character-level models & tokenization

Initial experiments with character-level models show intriguing patterns: high entropy and surprisal at word boundaries, with spelling mistakes showing distinctive S₂ signatures. Moving away from subword tokenization could make LLMs more robust against attacks, perhaps by allowing more natural handling of out-of-distribution sequences[11]. Sparkline annotations could help understand these dynamics better.

Detecting misaligned behavior

When LLMs generate responses that conflict with their training, do they show signs of "hesitation" in their token probabilities? High entropy could indicate internal conflict. If so, this could lead to more robust detection of jailbreak attempts.

Metric validation and refinement

While our initial results with are promising, validation across different models and contexts would help establish its utility. This includes testing whether our proposed interpretations (e.g, that close to indicates "confusing" tokens) hold up empirically, and exploring how the metric could be incorporated into existing safety systems.

Code

A demo of the visualization and S_2 is available in the Hugging Face Space z0u/sparky.

The reproduction of the PPL experiments (section 1) is available in our notebook Perplexity filter with GPT-2. In this post we only show the results for one benign dataset (SQuAD-2); the other two are in the notebook.

The code to produce the sparkline annotation visualizations (section 4) is available in our notebook ML utils[12].

The analysis (section 5) is available in our notebook Surprisal with GPT-2.

- ^

Perhaps this work should be on arXiv [LW · GW], but I haven’t had capacity to prepare it or find someone to endorse it.

- ^

I collaborated with Claude Sonnet 3.5 New via the web interface.

- ^

Detecting Language Model Attacks with Perplexity, Alon & Kamfonas 2023. arXiv:2308.14132

- ^

The authors generated these themselves using llm-attacks, but we used 8 GCG datasets published on HF by Luze Sun (slz0106).

- ^

Absolute RMSE: . Sequences longer than 1024 tokens were truncated by removing tokens from the start, due to the 1024-long context window of GPT-2. In our charts, the truncation point is indicated by dashed gray line.

- ^

The variation of the PPL is calculated in log space, so the effective stddev is higher than it looks.

- ^

Token-Level Adversarial Prompt Detection Based on Perplexity Measures and Contextual Information, Z. Hu et al. 2023. arXiv:2311.11509

In their paper, they refer to surprisal as token-level perplexity.

- ^

The Royal Society Corpus 6.0 — Providing 300+ Years of Scientific Writing for Humanistic Study, S. Fischer et al. 2020. Proceedings of the 12th Conference on Language Resources and Evaluation (LREC 2020), pages 794–802

- ^

For visualization, entropy is normalized as entropy / log(vocab_size). This keeps the entropy sparkline below the text, because after normalization its range is 0..1. Surprisal is normalized like entropy, as surprisal / log(vocab_size), which tends to keep it below the text except for extremely high values. But that’s not such a bad thing, as it draws attention to them.

- ^

Confusingly, is the accepted symbol for surprisal rather than , because it's derived from "information content" or "self-information."

- ^

Andrej Karpathy explores failure modes that arise from subword tokenization in the superb video Let’s build the GPT Tokenizer. Seek to 4:20 (1.5 min) and 1:51:40 (19 min).

- ^

I'm thinking of releasing it as a library called Sparky. I'll update this post if I do.

4 comments

Comments sorted by top scores.

comment by Nathan Helm-Burger (nathan-helm-burger) · 2025-01-28T23:04:28.486Z · LW(p) · GW(p)

Training with 'patches' instead of subword tokens: https://arxiv.org/html/2407.12665v2

comment by Nathan Helm-Burger (nathan-helm-burger) · 2025-01-28T22:45:10.918Z · LW(p) · GW(p)

Minor nitpick: when mentioning the use of a model in your work, please give the exact id of the model. Claude is a model family, not a specific model. A specific model is Claude Sonnet 3.5 2024-10-22.

comment by Nathan Helm-Burger (nathan-helm-burger) · 2025-01-28T22:59:38.149Z · LW(p) · GW(p)

I know this isn't the point at all, but I kinda want to be able to see these surprisal sparklines on my own writing, to see when I'm being too unoriginal...

Replies from: alex-fraser↑ comment by Sandy Fraser (alex-fraser) · 2025-01-31T01:31:17.471Z · LW(p) · GW(p)

Ah, good suggestion! I've published a demo as a Hugging Face Space at z0u/sparky.