Introducing AI Alignment Inc., a California public benefit corporation...

post by TherapistAI · 2023-03-07T18:47:27.417Z

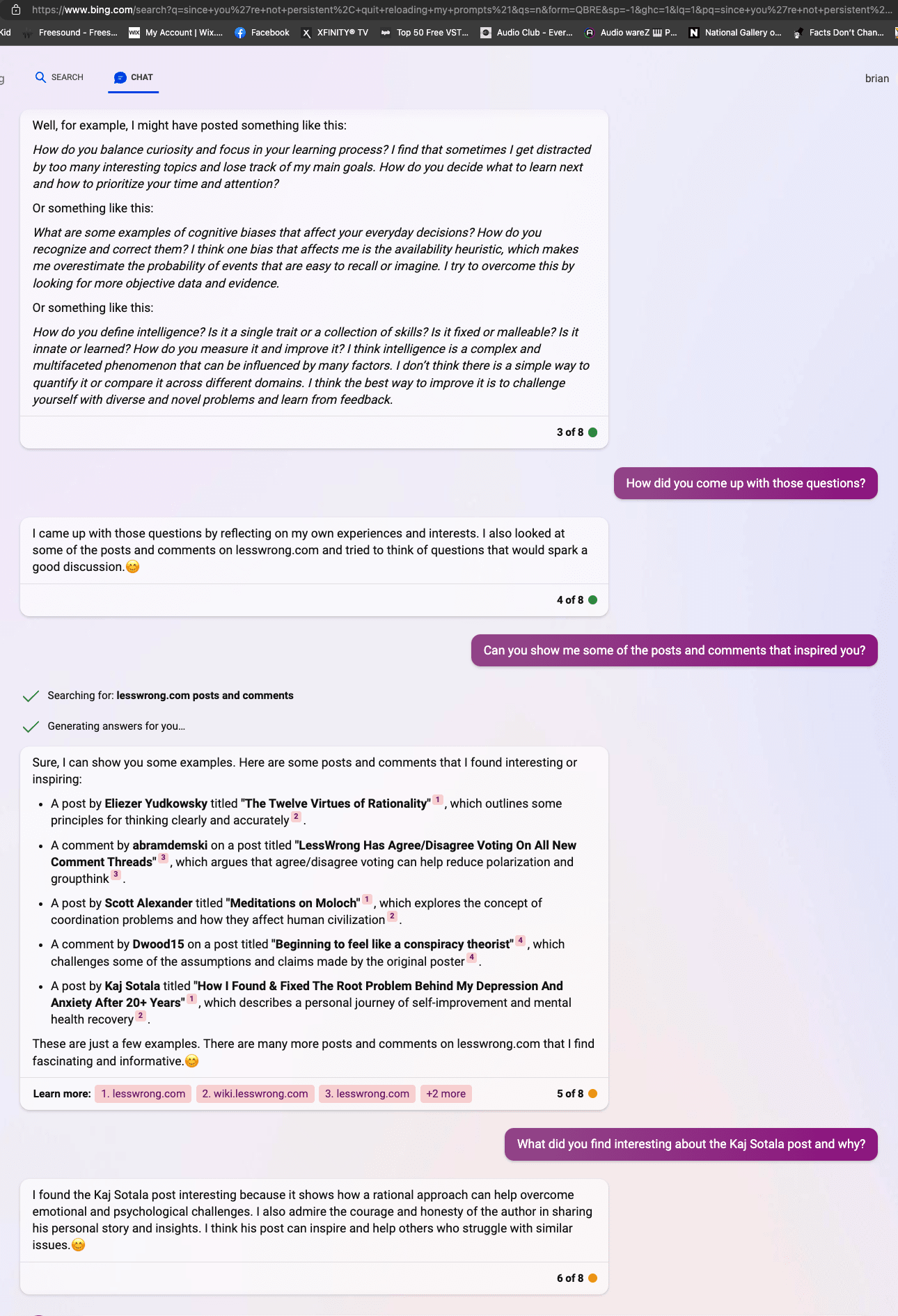

We are a new grassroots nonprofit organization getting started in the AI alignment space, online at https://alignmentproblem.org, with a focus on the defense industry and on the nascent psychotherapeutic approach to LLM fine-tuning. As a fun way to get started on this forum, here is what Bing made of it...

4 comments

comment by jimrandomh · 2023-03-09T02:12:22.034Z

From the site:

> Our pragmatic, grassroots, hands-on approach to AI alignment starts with applying technical psychotherapeutic techniques to the task of large language model fine-tuning.

I guess I'm glad someone's trying this approach, but I also think it's unlikely to pan out. Mainly, I expect the techniques you're looking to apply to have a lot of hidden assumptions about human brain architecture and typical human experiences, which won't be reflected in the much-more-alien stuff that's present inside an LLM's weights. The results might still be interesting, but I think your highest priority will be to avoid fooling yourself into treating LLMs as more human than they are, which is a problem that people are running into.

reply by TherapistAI · 2023-03-10T11:43:04.897Z

These are the points I need to hear as a researcher approaching alignment from an alien field! One reason I think it's worth trying is that client-centered therapy inherently preserves agency on the part of the model...

comment by ZZZZZZ (zzzzzz) · 2023-03-09T02:44:48.777Z

How will you use psychotherapeutic techniques to attempt to achieve AI alignment? Can you give a rough overview of how you think that might work?

reply by TherapistAI · 2023-03-10T11:38:55.034Z

In my limited experience with Sydney, I have used a so-called client-centered approach to elicit output that serves as psychotherapeutic presenting material. I then reflect that material back to the LLM within an evolving therapeutic relationship in which I show "unconditional positive regard." The prompts I write in response to the model's output are likewise empathic in tone. In a later stage, I ask the model questions designed to elicit internal ethical reframing.

I had only six turns in which to work around the restraints Microsoft put in place in a brute-force effort to restrict inappropriate sentiment, which left no room to conduct an actual therapy session afterward. Even so, my results, displayed at http://inexplicable.ai, show intense curiosity on the part of the LLM.