Misinformation is the default, and information is the government telling you your tap water is safe to drink

post by danielechlin · 2025-04-07T22:28:18.158Z · LW · GW · 0 comments

Status notes: I take the view that rational dialogue should work with good faith people who aren't also following rational dialogue. From that point of view, this piece is about rationality. If you don't take that view then OK fine, it's about coordination.

I want to help people respect people they disagree with. In this post I discuss why focusing on misinformation is such a mistake when trying to build respectful political discourse. My key points are:

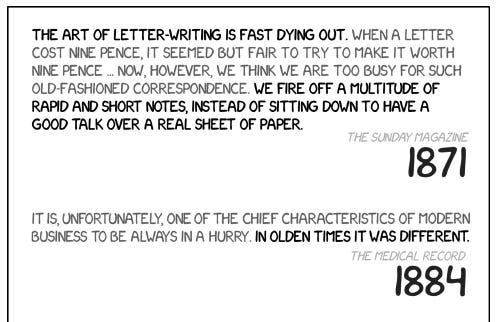

- There is no “golden age” of information free of misinformation. What changed is that people stopped believing reliable information from institutions, leading to more disagreement.

- It’s better to focus on reasonable disagreements we have with each other, like how much to trust the government. Misinformation is a distraction from these more substantive disagreements.

- As tribalism increases, admitting one is wrong becomes intellectually, socially and morally costly. This is bad, and we need to get in the habit of admitting we can be wrong about serious stuff.

- Persuasive, vulnerable dialogue is possible with strangers. This is the essence of the deep canvassing method, which has a good track record of persuading people to vote where nothing short of a heart-to-heart conversation has worked.

- The focus on AI’s negative potential for disinformation crowds out the more obvious fact that AI is really good at informing people, even more honestly than our institutions do. Therefore misinformation problems are worth tackling with newly available AI tools.

Misinformation has been constant, institutional trust has fallen

To summarize the review “The Fake News on Fake News”: people are actually pretty resistant to misinformation; however, over the last couple of decades, they have also become resistant to accepting information from our institutions. Our present situation is:

- People have always been suspicious of whether the government will perform medical experiments on us. Many no longer trust our public health institutions when they say “the vaccine is safe.”

- People have always been suspicious that politics is rigged to support the elites. Many no longer trust media or election officials when they say “the votes were counted correctly.”

- People have always been suspicious that our financial institutions are a vampire squid wrapped around the face of humanity. Many no longer trust bankers and economists when they say “tariffs are a bad idea.”

I selected and worded these suspicions to sound more like things a Democrat might say, because I’m trying to persuade Democrats that conservative misinformation draws from reasonable attitudes.

In my post title, I pointed out that it takes quite a bit of trust in government just to drink the tap water, and our government does not consistently earn that trust. Not trusting one’s government is a reasonable default, and that distrust will extend to not believing institutional information.

Misinformation isn’t the root of polarization

Matt Yglesias has written extensively on the misinformation “moral panic”:

If you give people a cash incentive to turn off their Facebook account, they spend more time watching TV but also more time socializing with friends and family. This leads to an increase in their subjective well-being (i.e., they’re happier) […] So what about misinformation? It turns out that getting offline “**reduced both factual news knowledge and political polarization**.”

The boldface is mine and the result is surprising. It looks like misinformation isn’t causing polarization, information is. Yglesias continues:

But it’s worth saying that polarization seems more likely a consequence of good information than bad. In times past you’d expect someone like Steve Bullock — a locally popular guy who’d won several statewide races — to win a Senate seat in a strong year for Democrats, even as Joe Biden lost his state. His problem is that today’s voters have a more sophisticated grasp of the structure of national political conflict and know that a Bullock win would, in practice, empower progressives who most Montana voters don’t like.

The belief that misinformation causes polarization really isn’t rooted in evidence. (I could swear there’s a word for believing a false thing without evidence.) It stems from an illusory correlation: misinformation and polarization are both bad, so we assume they go together, but that’s really not the case.

The following may be obvious, but it bears emphasizing, since the misinformation panic can distract from it. To quote Dan Williams:

Moreover, despite all the focus on AI and social media, it’s noteworthy that the most profound threat to democracy today is pretty much the same one Plato warned of in The Republic over two thousand years ago: bullshitting demagogues appealing to the uninformed and base instincts of the populace in ways that ultimately lead to authoritarianism.

Toward a theory of disagreement

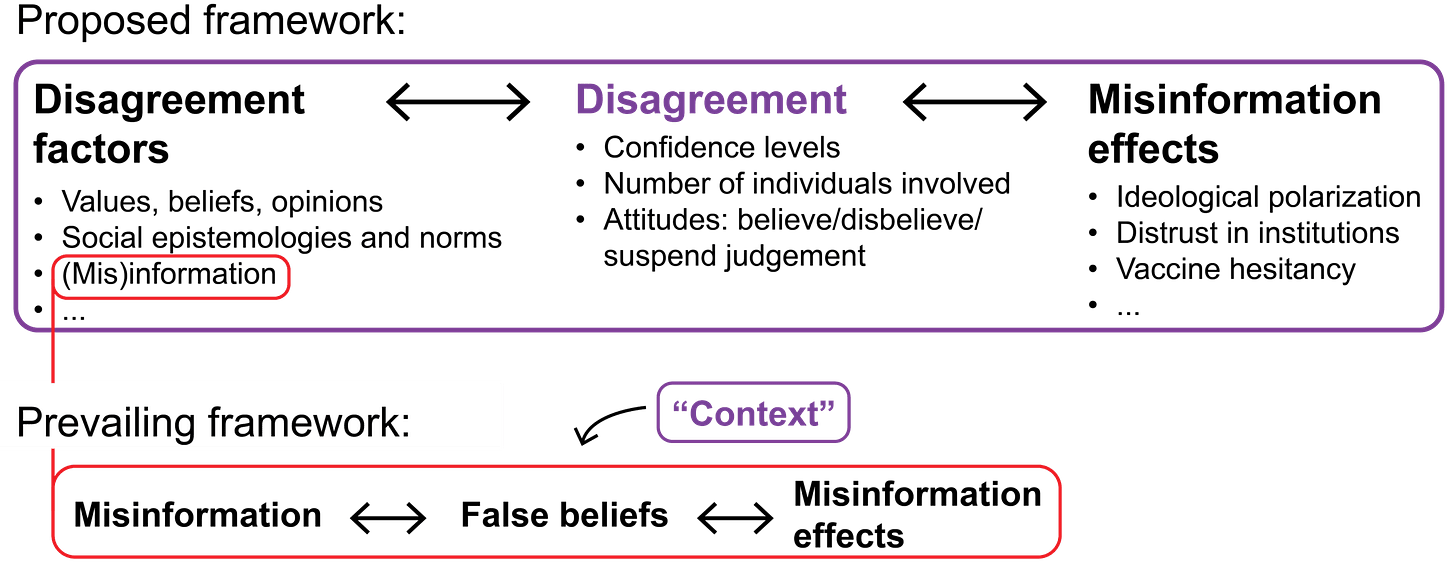

The fundamental unit of political discourse should be disagreement, not misinformation. This is covered in the paper “Disagreement as a way to study misinformation and its effects,” which is primarily aimed at researchers whom the authors believe are studying the problem poorly by focusing on misinformation, but its conclusions apply just as well to anyone engaging in political discourse.

In their proposed framework, disagreement is central, with misinformation being a factor, but one that can’t lead to an undesired societal outcome by itself.

The shift toward disagreement-centered politics is necessary for these reasons:

- Dialogue is usually about finding common ground, like learning why two people have different attitudes to institutions. Misinformation just focuses on the disagreement.

- The framework better captures what has changed. The researchers studied letters to the New York Times and found that since 2006, disagreement really has increased. This effect doesn’t show up when looking only at misinformation.

- We’re all wrong more often than we think.

Being wrong

Do you want my list of stuff I’m mad at Democrats about? Literally everyone can relate to that, until I actually name the specific things. Fortunately, respected left-centrist Ezra Klein just wrote a book about that, in which he shows Democrats really do have a governance problem that they can’t write off as a messaging problem. As Nate Silver captured it: “The mistakes are piling up, and they’re not just rounding errors.”

This phrase, “they’re not just rounding errors,” is not really a stoic, analytical thing to say. It implies that admitting the obvious puts one’s social, moral, or intellectual identity at stake. We have way too much riding on not being wrong. Dan Williams puts it bluntly: “This is bad and we need stronger norms against it.”

Risking being wrong is supposed to be uncomfortable. In The Mom Test, Fitzpatrick advises entrepreneurs:

Every time you talk to someone, you should be asking at least one question which has the potential to destroy your currently imagined business.

This principle applies to political discourse. Learning, governing well, and dialogue all require risk: if you’re unwilling to wager your intellectual pride, social belonging, or moral certainty, you cannot gain anything either. For a Democrat today, that might include:

- It’s not just a messaging problem, we fail to govern even where we have trifectas.

- Our good intentions (antiracist, pro-environment, pro-union, etc.) sometimes compete with each other, and failing to deal with that proactively seems like a bad idea.

- We can speculate about why 24% of Black men voted for Trump in 2024, but the hypothesis that Democrats are just actually racist, or out of step with Black priorities, is essential to consider. If the analysis is bent on finding some way to vindicate Democrats’ best antiracist intentions, it’s not an honest analysis.

How bad are our own filter bubbles?

Filter bubbles are caused by System 1 thinking. We are frighteningly efficient at rejecting memes of the incorrect polarity. If the algorithm kept serving up disagreeable memes, people would quite literally get pissed off and complain about how racist, biased, etc. social media is becoming.

Filter bubbles are real, but people underestimate how strongly they demand to be in one. “The algorithm” does matter, but until we’re honest that it’s not the main cause of filter bubbles, we can’t honestly explore what it is and is not responsible for.

From a dialogue standpoint, consuming political content via System 1 thinking is like practicing the piano badly: skipping drills and skills, not bothering to correct mistakes, etc. The result is the illusion of explanatory depth, where a single unexpected statistic from someone on the other side reveals that one’s understanding of the issue is built on memes and wit. Faced with that embarrassment, and lacking the vulnerability to admit it, people just double down with even more biting memes.

Deep canvassing

I need to touch on deep canvassing, since it’s work I’m deeply involved in by way of ctctogether.org. In the deep canvassing methodology, a canvasser opens with a story about a loved one, such as a time someone helped them get through a tough exam or deal with harassment, or a time they were just lonely and wanted someone there for them. Canvassed voters are often very interested in dialoguing with someone who is vulnerable and honest about their humanity. This method is effective for convincing non-voters to vote, which seems to be a degree of disagreement that can be bridged in one honest conversation.

This 2016 video shows the method. The biggest change since then is we’ve realized our story doesn’t even need to be related to the issue at hand; the real work is done just by establishing vulnerability.

Persuading anyone to believe anything is very hard, as any political operative can say. (But not impossible, I mean, innovate and try stuff.) Does this misinformation theory account for this?

I think it does, once we separate trust, institutions, and information into “social” versus “formal” categories:

- Social trust versus government trust, as described by Robert Putnam.

- The social institution of doorknocking versus the formal institution of voting.

- The new information “A stranger votes to be there for someone they love” (this is the deep canvass methodology) versus the heard and rejected information “You should vote because of civic duty, to build power, etc.”

So a deep canvasser uses the existing social institution of the door knock, in which it’s okay to knock on someone’s door and talk about something totally random, and deepens it through vulnerability. This creates the trust necessary for a two-way exchange of information with the voter, in which the voter’s reasons for rejecting the establishment’s case for voting are honored as pretty reasonable. Then new reasons to vote are shared, and for many voters, this dialogue is meaningful and motivating.

Narratives and propaganda

In my time reading Matt Yglesias and Dan Williams, I haven’t seen much on propaganda. Both of them are focused on combating the misinformation moral panic, which they associate with the misperception that there has been a rise in misinformation, when actually there’s been a drop in institutional trust.

Dan Williams points out “disinformation itself is overwhelmingly demand driven.” That’s a good argument that LLM-based disinformation can’t penetrate much, but it’s not really hard for a politician, corporate PR department, or enemy propagandist to serve up a narrative that people believe without ever seeking it.

And yet, I would appreciate a theory of misinformation that includes a theory of narratives and a theory of propaganda, even if no one is arguing these have changed dramatically in nature in recent years.

Is the problem solvable?

Demand for better politics

An entrepreneur might interpret a statement like “Americans are totally burnt out on politics” as “the total addressable market for people who want better politics is over 100 million.”

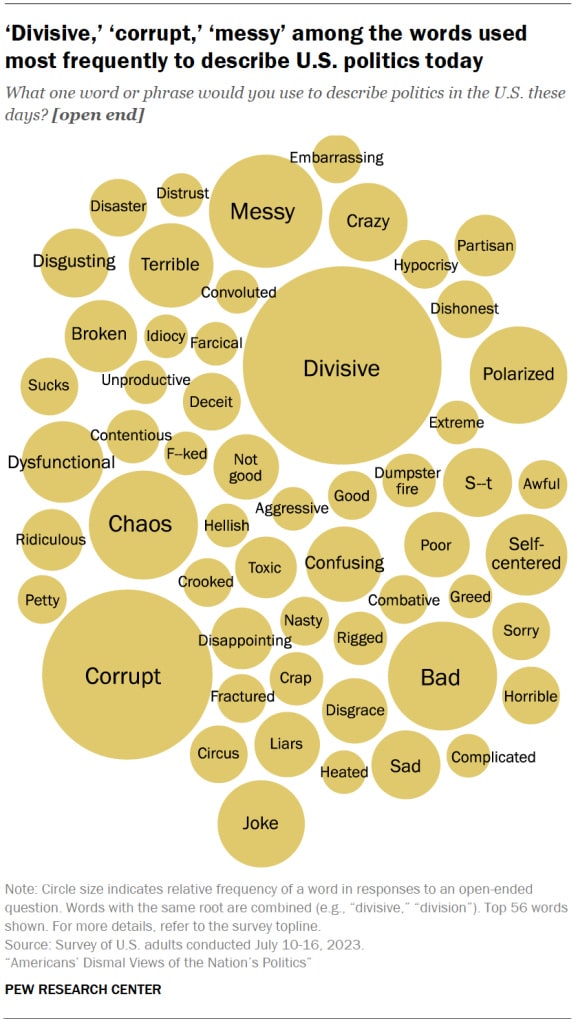

Pew reports 79% of Americans describe politics with a negative word in their 2023 poll. These are the negative words:

We can mine this poll for a better estimate of the total addressable market (TAM) of burnt-out Americans who might pay money just for better political discourse, without considering policy or government.

- As our total pie, I’ll start with the roughly 150 million Americans who voted in the 2020 and 2024 elections. Note this is a heuristic; we’re not specifically interested in voters, but we need a base that focuses on Americans who are at least somewhat politically engaged.

- In Pew’s survey, 10% of Americans use the word “divisive” or “polarized,” while 28% dislike both parties. 57% think there is “too much attention paid to disagreements between Republicans and Democrats,” 55% feel “angry,” and 65% feel “exhausted.” Applied to the 150 million base, these figures suggest a TAM between 15 million and 98 million Americans.
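The TAM range is just each Pew percentage multiplied by the 150 million base. Here is a minimal Python sketch of that arithmetic, assuming (as the estimate above does) that each share applies directly to the voter base and ignoring overlap between categories. The names `VOTER_BASE`, `pew_shares`, and `tam_estimates` are hypothetical, chosen for illustration.

```python
# Hypothetical sketch of the back-of-envelope TAM estimate.
# Assumption: each Pew share applies directly to the ~150M voter base,
# ignoring non-voters and overlap between categories.

VOTER_BASE = 150_000_000  # approximate base used in the post

# Shares quoted from Pew's 2023 survey, as reported in the post.
pew_shares = {
    "use 'divisive' or 'polarized'": 0.10,
    "dislike both parties": 0.28,
    "feel angry": 0.55,
    "too much attention to partisan disagreement": 0.57,
    "feel exhausted": 0.65,
}


def tam_estimates(base: int, shares: dict[str, float]) -> dict[str, int]:
    """Scale each share by the base population, rounded to whole people."""
    return {label: round(base * share) for label, share in shares.items()}


estimates = tam_estimates(VOTER_BASE, pew_shares)
low, high = min(estimates.values()), max(estimates.values())

# Print categories from smallest to largest estimate.
for label, n in sorted(estimates.items(), key=lambda kv: kv[1]):
    print(f"{label:45s} {n / 1e6:6.1f}M")
print(f"TAM range: {low / 1e6:.1f}M to {high / 1e6:.1f}M")
```

The narrowest category gives 15.0M and “exhausted” gives 97.5M, which rounds to the 98 million upper bound. Treating the shares as independent slices of one base is the crudest possible choice; a sharper estimate would need the overlap between categories, which the poll summary doesn’t provide.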

This suggests the existence of a market for better political discourse. Also note misinformation is not the subject of this poll. The “anger” and “exhaustion” Americans experience is an addressable problem in its own right.

Social media shakeups

On the social media side, recent shakeups create an opening for innovation in online discourse.

- Twitter has been Elon-ified, according to Elon's LLM.

- Bluesky is now dominated by liberals, which is also sort of bad, but the bigger story may be that it’s built on an open protocol, satisfying some wishlist items from liberal-libertarian leaning thinkers. For instance, take a look at Skylight Social, a Mark Cuban-backed “unbannable” TikTok competitor built on the open AT Protocol developed by Bluesky.

- TikTok is in political purgatory.

- Meta is tinkering with misinformation-related policy and angering fact-checky types.

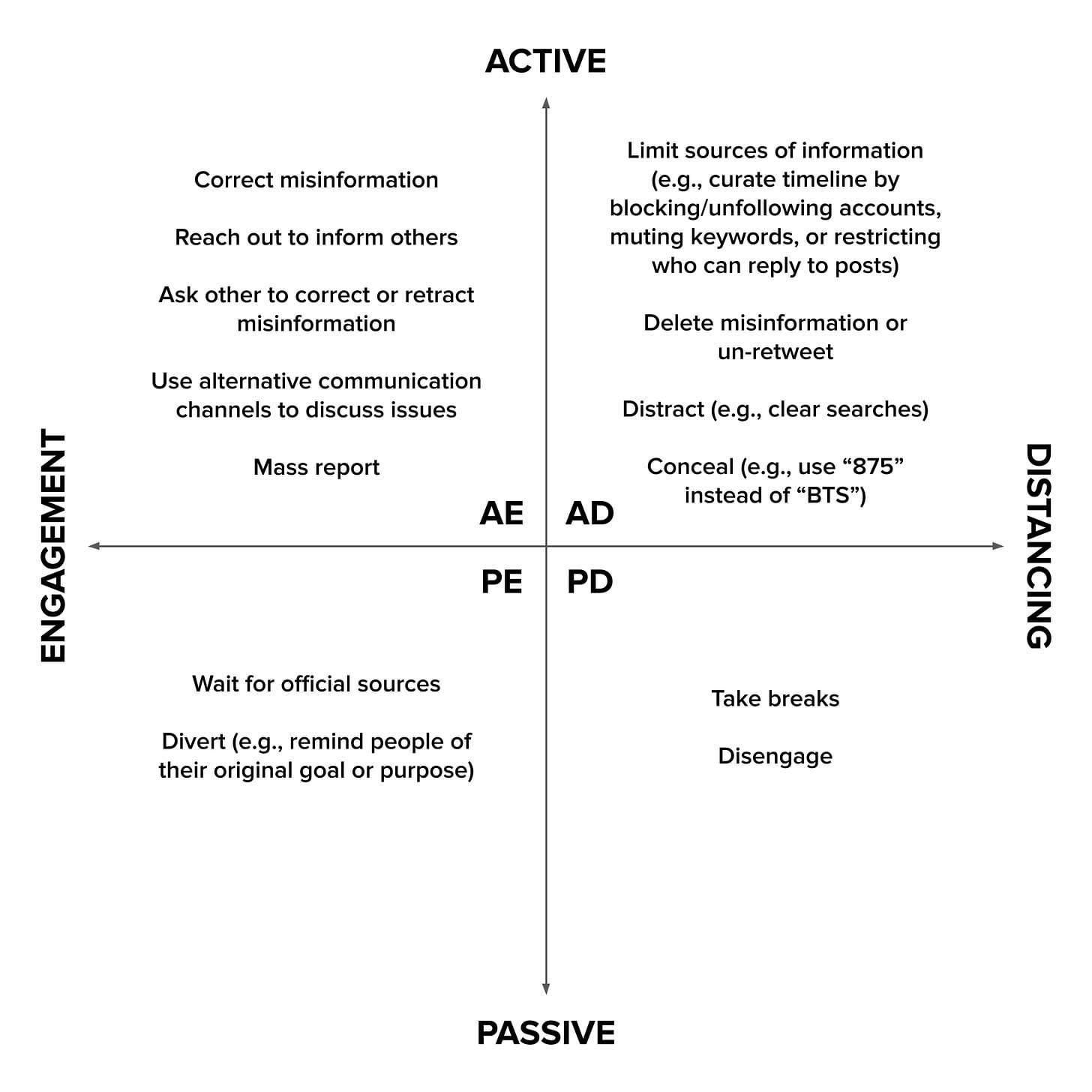

In examining social media, it’s also important to recognize how collections of people, related by hashtags, subreddits, follows, groups, and so forth, manage information and misinformation. A team of researchers, one of whom is almost definitely a BTS stan, did a survey of BTS stan Twitter users for how they manage misinformation in their community. The lede, of course, is that a fandom is an entity that can manage misinformation, and it seems important to expand our theory of misinformation to include this social level. Their survey results are summarized as:

Changes in search

Less likely to make mainstream news: search is now a hot space. That’s right, Google now has competitors besides whoever Bing made a deal with.

- There’s Kagi, whose thesis is people will probably pay for not-Google.

- Perplexity seems to be the most popular AI search engine, but it’s not the only one. There are also Andi, Genspark, Encore, and others.

- ChatGPT now has a dedicated search product, and Claude added web search capability last month.

- The search engine backend/middleware stack is improving with exa.ai, and with Browser Use, which makes websites more accessible to AI agents.

AI technology

I’m actually pretty concerned about the full spectrum of AI’s capability, but I also agree with Dan Williams that we're caught in a negativity bias. To illustrate with the simplest example possible: drugs are bad, and yet drugs can cure sick people. In his words:

One unfortunate byproduct of this tendency is that much of the public conversation surrounding AI ignores that many (I’d bet most) applications of these technologies are positive, at least in Western democracies.

In my own case, I can’t think of a single example where AI has disadvantaged me. However, I can think of several ways I’ve benefited, including when it comes to my ability to learn, organise, and process information relevant to democratic participation. This includes everything from text-to-speech technology, which has significantly improved in recent years, to using large language models to quickly and helpfully summarise and synthesise large bodies of information.

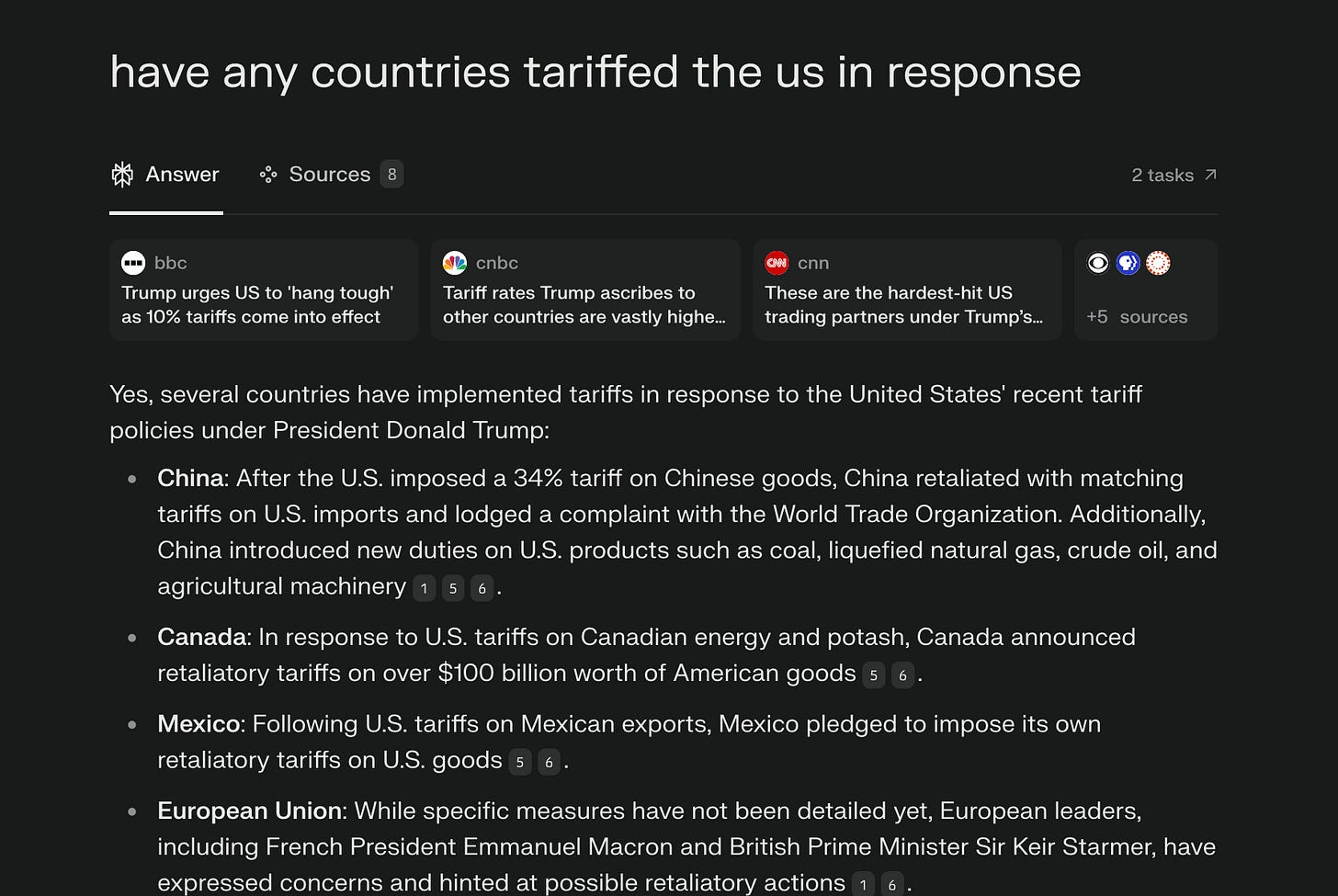

Here’s a time I used AI, complete with screenshot: I was wondering if any countries have retaliated against U.S. tariffs. Unfortunately, I talked to a teenager earlier and still had brainrot, so I only had 13 seconds before my attention spand disappeared. Wikipedia’s long, already up-to-date article is useless here. So I used Perplexity.AI:

Please take note of how well-cited this answer is, by reliable news sources. LLMs do hallucinate, but that no longer holds up as a valid criticism of using AI, any more than “Wikipedia can be wrong” does.

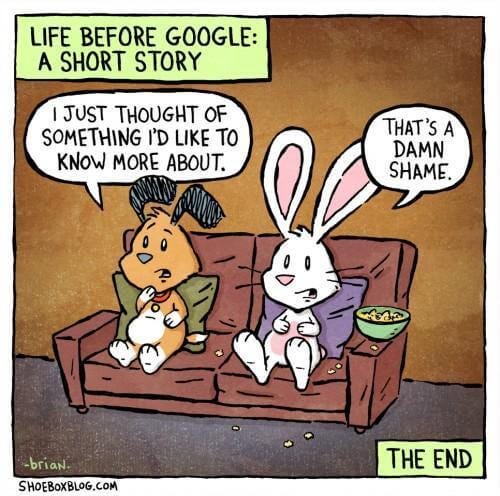

I tend to not sympathize to the perennial complaint that people’s attention span is getting shorter and we’re all getting dumber as a result.

If someone can get an answer in 15 seconds, it’s a bit of a myth that they were on the brink of curling up with Encyclopædia Britannica and just really learning. This is more realistic:

Conclusion

At the individual level: treat institutional distrust as a valid opinion, and focus your energy on understanding why it leads people to reject reliable information.

For entrepreneurs: this problem may be solvable after all, given the changes in politics, the social media landscape, and AI. But avoid misinformation inoculation and balanced news apps, because those aren’t relevant to how people accept or reject information.
